Microservice Programming for Healthcare in the AI Era: Architecture and Practice (Code Part)

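This article uses working code to show how AI and healthcare microservices can be combined. Chapter 1 builds a FastAPI-based patient registration microservice, including an HL7 FHIR-style data model, API endpoints, and a Docker container configuration. Chapter 2 covers service governance: service discovery with Consul, plus routing and JWT authentication configured through the Kong gateway. The article follows a "code as documentation" approach and offers a practical reference microservice architecture for a smart healthcare platform, covering the key steps from basic service development to secure deployment.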

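Abstract

This article focuses on programmable implementations that combine AI with healthcare microservices. Through code, configuration, architecture diagrams, and command-line instructions, it walks through the whole process, from building basic services and integrating AI capabilities to secure, compliant deployment. The content is organized around a hypothetical "Smart Healthcare Platform" project and provides examples that can be referenced and adapted directly. The chapters correspond to those of the original article.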

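Chapter 1: Building the Core Microservice

In place of a traditional introduction, we start with a runnable "Hello, World"-level microservice, which is the foundation of the whole platform.

1.1 Patient Registration Microservice

The most basic business microservice. It handles the entry and validation of patient information.

Tech stack: Python + FastAPI + Pydantic + HL7 FHIR

Project structure: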

/patient-service
├── app/
│   ├── __init__.py
│   ├── main.py        # FastAPI application entry point
│   ├── models.py      # Pydantic data models (FHIR schema)
│   └── database.py    # Database connection and operations
├── requirements.txt
└── Dockerfile

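app/models.py - defining the data models

from datetime import date

from pydantic import BaseModel, EmailStr  # EmailStr requires the email-validator package

# Pydantic models that define and validate a simplified Patient resource based on FHIR R4.
# Note: FHIR itself uses camelCase names (resourceType, birthDate) and keeps contact
# details under `telecom`; the snake_case fields below are a simplification.

class HumanName(BaseModel):
    use: str
    family: str
    given: list[str]

class Patient(BaseModel):
    resource_type: str = "Patient"
    id: str | None = None
    active: bool = True
    name: list[HumanName]
    gender: str  # male, female, other, unknown
    birth_date: date
    email: EmailStr

    class Config:
        # Pydantic v1 key; Pydantic v2 renames it to `json_schema_extra`
        schema_extra = {
            "example": {
                "resource_type": "Patient",
                "name": [{"use": "official", "family": "Zhang", "given": ["San"]}],
                "gender": "male",
                "birth_date": "1990-01-01",
                "email": "zhangsan@example.com"
            }
        }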
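The `gender` field above is a free-form `str`, although its comment lists only four allowed codes. One way to tighten this, sketched here as an assumption rather than as the author's approach, is a `typing.Literal` alias. Pydantic accepts `Literal` annotations, so `gender: Gender` could replace `gender: str` in the model. The names `Gender` and `is_valid_gender` are hypothetical.

```python
from typing import Literal, get_args

# The four codes listed in the model's comment (FHIR administrative gender).
Gender = Literal["male", "female", "other", "unknown"]


def is_valid_gender(value: str) -> bool:
    """Return True when `value` is exactly one of the allowed codes."""
    return value in get_args(Gender)


if __name__ == "__main__":
    print(is_valid_gender("female"))  # True
    print(is_valid_gender("F"))       # False
```

The check is case-sensitive on purpose: FHIR codes are lowercase, so a client sending `"Male"` would be rejected instead of silently stored.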

app/main.py - API endpoint implementation

from fastapi import FastAPI, HTTPException, Depends

from . import models
from .database import get_db_session

app = FastAPI(
    title="Patient Registration Service",
    version="1.0.0",
    description="Manages patient data compliant with HL7 FHIR standard."
)

@app.post("/patients/", response_model=models.Patient, status_code=201)
async def create_patient(patient: models.Patient, db=Depends(get_db_session)):
    """
    Create a new patient record.

    - **Validation**: Pydantic automatically validates input against the FHIR-like model.
    - **Database**: A hypothetical `db` session is used to persist the data.
    """
    # A real application would call the database here:
    # created_patient = db.patients.insert_one(patient.dict())
    # patient.id = str(created_patient.inserted_id)
    print(f"Persisting patient: {patient.name[0].family}")

    # Simulate the ID returned by the database
    patient.id = "patient-12345"
    return patient

@app.get("/patients/{patient_id}", response_model=models.Patient)
async def read_patient(patient_id: str, db=Depends(get_db_session)):
    # Fetch the patient from the database:
    # patient_data = db.patients.find_one({"_id": ObjectId(patient_id)})
    # if not patient_data:
    #     raise HTTPException(status_code=404, detail="Patient not found")
    # return patient_data

    # Simulated response
    if patient_id == "patient-12345":
        return models.Patient(
            id="patient-12345",
            name=[{"use": "official", "family": "Zhang", "given": ["San"]}],
            gender="male",
            birth_date="1990-01-01",
            email="zhangsan@example.com"
        )
    raise HTTPException(status_code=404, detail="Patient not found")
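`main.py` imports `get_db_session` from `app/database.py`, but the article never shows that file. The following is a minimal sketch of what it might contain, assuming an in-memory dictionary in place of a real database. The class `InMemoryPatientStore` and its methods are hypothetical stand-ins, not the author's implementation.

```python
import uuid
from typing import Dict, Iterator, Optional


class InMemoryPatientStore:
    """Hypothetical stand-in for a database session; data is lost on restart."""

    def __init__(self) -> None:
        self._patients: Dict[str, dict] = {}

    def insert(self, patient: dict) -> str:
        """Store a patient record and return its generated ID."""
        patient_id = f"patient-{uuid.uuid4().hex}"
        self._patients[patient_id] = {**patient, "id": patient_id}
        return patient_id

    def get(self, patient_id: str) -> Optional[dict]:
        """Return the stored record, or None when the ID is unknown."""
        return self._patients.get(patient_id)


# One shared store for the whole process.
_store = InMemoryPatientStore()


def get_db_session() -> Iterator[InMemoryPatientStore]:
    """Generator dependency; FastAPI runs any code after `yield` as cleanup."""
    yield _store
```

With a store like this, `create_patient` could call `db.insert(...)` instead of assigning the fixed ID `"patient-12345"`, and `read_patient` could return a 404 whenever `db.get(patient_id)` is `None`.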

Dockerfile - containerization

FROM python:3.10-slim

WORKDIR /app

COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the package to /app/app so that the module path "app.main" resolves
COPY ./app ./app

# Expose the service port
EXPOSE 8000

# Start command
CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "8000"]

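Chapter 2: Service Governance and Communication

This chapter turns the challenges described in the "current state" part of the original article into concrete governance components.

2.1 Service Discovery and Configuration Center

Tech stack: Consul + Spring Cloud (for Java services) or python-consul (for Python)

Consul service registration:

Start a Consul agent in development mode:

consul agent -dev -ui -client=0.0.0.0

Registering the Python service with Consul (added to app/main.py):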

import os
import socket

import consul  # provided by the python-consul package

# ... at FastAPI application startup ...

# CONSUL_HOST falls back to the hostname "consul" when the variable is not set
consul_client = consul.Consul(host=os.getenv("CONSUL_HOST", "consul"), port=8500)

def register_service():
    service_name = "patient-service"
    hostname = socket.gethostname()
    address = socket.gethostbyname(hostname)
    service_id = f"{service_name}-{hostname}"
    consul_client.agent.service.register(
        name=service_name,
        service_id=service_id,
        address=address,
        port=8000,
        # The check assumes the service exposes GET /health, which this article does not show
        check=consul.Check.http(f"http://{address}:8000/health", interval="10s")
    )
    print(f"Service {service_id} registered.")

# Called from the app startup event
@app.on_event("startup")
async def startup_event():
    register_service()
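The client library call above wraps Consul's agent HTTP API. To make the registration data explicit, here is a sketch that builds the same kind of payload by hand and sends it to `PUT /v1/agent/service/register` using only the standard library. The function names are hypothetical, and the `DeregisterCriticalServiceAfter` value is an added assumption that is not part of the original example.

```python
import json
import urllib.request


def build_registration(name: str, host: str, address: str, port: int) -> dict:
    """Build the JSON body for Consul's PUT /v1/agent/service/register."""
    return {
        "Name": name,
        "ID": f"{name}-{host}",
        "Address": address,
        "Port": port,
        "Check": {
            "HTTP": f"http://{address}:{port}/health",
            "Interval": "10s",
            # Assumption: drop instances that stay critical for a minute.
            "DeregisterCriticalServiceAfter": "1m",
        },
    }


def register(consul_url: str, payload: dict) -> int:
    """Send the registration to a Consul agent and return the HTTP status."""
    request = urllib.request.Request(
        f"{consul_url}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status


if __name__ == "__main__":
    body = build_registration("patient-service", "pod-1", "10.0.0.5", 8000)
    print(json.dumps(body, indent=2))
```

Including the hostname in the service ID keeps each replica distinct in the catalog, which matters once several instances of `patient-service` run side by side.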

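2.2 API Gateway and Security Authentication

Tech stack: Kong or Spring Cloud Gateway

Kong configuration example: adding a route and JWT authentication for the patient service:

# 1. Add the service
curl -X POST http://localhost:8001/services \
  --data name=patient-service \
  --data url='http://patient-service:8000'

# 2. Add a route
# Note: Kong routes default to strip_path=true, so the matched prefix is removed
# before proxying. Adjust strip_path or the service URL path if the upstream
# expects requests under /patients/.
curl -X POST http://localhost:8001/services/patient-service/routes \
  --data 'paths[]=/api/v1/patients'

# 3. Enable the JWT plugin
curl -X POST http://localhost:8001/services/patient-service/plugins \
  --data name=jwt

# 4. Create a consumer
curl -X POST http://localhost:8001/consumers \
  --data username=medical-frontend-app

# 5. Generate a JWT credential for the consumer
curl -X POST http://localhost:8001/consumers/medical-frontend-app/jwt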

Frontend request example:

All requests to /api/v1/patients must now carry a valid JWT.

// Assume the token has already been obtained from the authentication service
const token = "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9...";

fetch('http://kong-gateway:8000/api/v1/patients', {
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${token}` // Kong extracts the JWT from this header and validates it
  },
  body: JSON.stringify({ /* patient data */ })
});
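The request above assumes that a token already exists. With Kong's JWT plugin in its default configuration, the token's `iss` claim has to carry the `key` of the consumer's JWT credential, and the signature is checked against that credential's `secret`. The sketch below shows how an authentication service might mint and check such an HS256 token with only the standard library. The function names and the five-minute lifetime are assumptions, and a maintained JWT library is a better choice in production.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    """Base64url without padding, as required by the JWT format."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _signature(signing_input: str, secret: str) -> str:
    digest = hmac.new(secret.encode(), signing_input.encode("ascii"), hashlib.sha256)
    return _b64url(digest.digest())


def sign_hs256(key: str, secret: str, ttl_seconds: int = 300) -> str:
    """Create a token whose `iss` claim is the Kong credential key."""
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {"iss": key, "exp": int(time.time()) + ttl_seconds}
    parts = [
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    ]
    signing_input = ".".join(parts)
    return f"{signing_input}.{_signature(signing_input, secret)}"


def verify_hs256(token: str, secret: str) -> dict:
    """Check the signature and return the payload; raise ValueError if invalid."""
    signing_input, _, signature = token.rpartition(".")
    if not hmac.compare_digest(signature, _signature(signing_input, secret)):
        raise ValueError("invalid signature")
    payload_b64 = signing_input.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


if __name__ == "__main__":
    # "credential-key" and "credential-secret" are placeholders for the values
    # returned by step 5 of the Kong configuration.
    token = sign_hs256("credential-key", "credential-secret")
    print(verify_hs256(token, "credential-secret")["iss"])
```

`verify_hs256` only checks the signature; expiry is left to the gateway in this sketch.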

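Chapter 3: AI Service Integration and Deployment

This chapter turns the AI capabilities from the "improvement path" part of the original article into an independent, deployable AI microservice.

3.1 Medical Imaging AI Inference Service (U-Net for Lung Nodule Segmentation)

Tech stack: Python + FastAPI + PyTorch + NVIDIA Triton Inference Server

Inference service (ai-inference-service/app/main.py):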

from fastapi import FastAPI, File, UploadFile
import numpy as np
import torch

from model.unet_model import UNet  # assumes the model is defined in model/unet_model.py

app = FastAPI(title="AI Inference Service")

# Load the pretrained model
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = UNet(n_channels=1, n_classes=1).to(device)
model.load_state_dict(torch.load("lung_nodule_unet.pth", map_location=device))
model.eval()

@app.post("/predict/lung-nodule")
async def predict_lung_nodule(file: UploadFile = File(...)):
    """
    Accept a DICOM file, segment lung nodules, and return the segmentation mask.
    """
    # 1. Read and preprocess the image (simplified).
    # A real application would parse DICOM with pydicom or SimpleITK.
    image_bytes = await file.read()
    # DICOM parsing and preprocessing are omitted. As a stand-in, the upload is
    # assumed to be a raw float32 buffer holding a single 512x512 slice.
    image = np.frombuffer(image_bytes, dtype=np.float32).copy().reshape(1, 1, 512, 512)
    image_tensor = torch.from_numpy(image).to(device)

    # 2. Model inference
    with torch.no_grad():
        output = model(image_tensor)
        # Assumes the model outputs probabilities; apply torch.sigmoid first if it returns logits
        mask = (output > 0.5).float()

    # 3. Return the result (simplified: the mask as nested lists)
    return {"filename": file.filename, "mask_shape": list(mask.shape), "mask_data": mask.cpu().numpy().tolist()}
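Returning the full mask as nested lists makes for large JSON responses, since a 512x512 slice already holds more than 260,000 values. One common way to shrink a binary mask is run-length encoding. The sketch below is not part of the original service; it works on a single 2D slice given as nested lists, and the function names are hypothetical.

```python
from typing import List, Tuple


def rle_encode(mask_rows: List[List[int]]) -> Tuple[Tuple[int, int], List[int]]:
    """Run-length encode a binary mask in row-major order.

    Returns the (height, width) shape and a list of run lengths. Runs alternate
    between 0s and 1s and always start with the run of 0s, which may be empty.
    """
    height = len(mask_rows)
    width = len(mask_rows[0]) if height else 0
    runs: List[int] = []
    current, length = 0, 0
    for row in mask_rows:
        for value in row:
            bit = 1 if value else 0
            if bit == current:
                length += 1
            else:
                runs.append(length)
                current, length = bit, 1
    runs.append(length)
    return (height, width), runs


def rle_decode(shape: Tuple[int, int], runs: List[int]) -> List[List[int]]:
    """Rebuild the nested-list mask from its shape and run lengths."""
    height, width = shape
    flat: List[int] = []
    bit = 0
    for length in runs:
        flat.extend([bit] * length)
        bit ^= 1
    return [flat[r * width:(r + 1) * width] for r in range(height)]


if __name__ == "__main__":
    shape, runs = rle_encode([[0, 0, 1], [1, 1, 0]])
    print(shape, runs)  # (2, 3) [2, 3, 1]
```

Segmentation masks are mostly background, so the run list is usually far shorter than the pixel list.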

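Deploying the model with NVIDIA Triton:

Triton provides high-performance, scalable inference serving. Its PyTorch backend loads a TorchScript file (by default model_repository/lung_nodule_unet/1/model.pt), so the .pth state dict used above has to be exported to TorchScript first.

model_repository/lung_nodule_unet/config.pbtxt:

name: "lung_nodule_unet"
platform: "pytorch_libtorch"
max_batch_size: 8
input [
  {
    name: "input__0"
    data_type: TYPE_FP32
    dims: [ 1, 512, 512 ]
  }
]
output [
  {
    name: "output__0"
    data_type: TYPE_FP32
    dims: [ 1, 512, 512 ]
  }
]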

Start the Triton server:

docker run --rm --gpus all -p8000:8000 -p8001:8001 -p8002:8002 -v ${PWD}/model_repository:/models nvcr.io/nvidia/tritonserver:23.10-py3 tritonserver --model-repository=/models

Business microservices can now call Triton over gRPC or HTTP instead of hosting the PyTorch model themselves.
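As an illustration of the HTTP path, the sketch below builds a request body in the KServe v2 inference protocol that Triton serves at `/v2/models/<model>/infer`. The tensor names follow the `config.pbtxt` above. The helper names, the `http://triton:8000` address in the usage note, and the row-major flattening of the pixel data are assumptions.

```python
import json
import urllib.request
from typing import List

MODEL_NAME = "lung_nodule_unet"


def build_infer_request(pixels: List[float], height: int = 512, width: int = 512) -> dict:
    """Build a v2 inference request for one single-channel image."""
    if len(pixels) != height * width:
        raise ValueError("pixel count does not match height * width")
    return {
        "inputs": [
            {
                "name": "input__0",
                # Batch of 1, then the [1, H, W] dims from config.pbtxt.
                "shape": [1, 1, height, width],
                "datatype": "FP32",
                "data": pixels,
            }
        ],
        "outputs": [{"name": "output__0"}],
    }


def infer(triton_url: str, body: dict) -> dict:
    """POST the request to Triton's HTTP endpoint and return the parsed JSON reply."""
    request = urllib.request.Request(
        f"{triton_url}/v2/models/{MODEL_NAME}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

A caller would run something like `infer("http://triton:8000", build_infer_request(pixels))`. For large images, Triton's official client libraries with binary tensor data are likely to be more efficient than JSON.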

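3.2 Applying a Federated Learning Framework

With the Flower framework, hospitals can jointly train a global disease prediction model while patient data never leaves the local hospital.

Client code (client.py, run at each hospital's data source):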

import flwr as fl
import torch

from model import LSTMModel
from data import load_local_data  # loads the local, private data

# Define the model
model = LSTMModel(...)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

# Flower client
class MedicalClient(fl.client.NumPyClient):
    def get_parameters(self, config):
        return [val.cpu().numpy() for _, val in model.state_dict().items()]

    def set_parameters(self, parameters):
        params_dict = zip(model.state_dict().keys(), parameters)
        state_dict = {k: torch.tensor(v) for k, v in params_dict}
        model.load_state_dict(state_dict, strict=True)

    def fit(self, parameters, config):
        self.set_parameters(parameters)
        trainloader, _ = load_local_data()
        # Local training loop
        model.train()
        for epoch in range(1):
            for images, labels in trainloader:
                optimizer.zero_grad()
                outputs = model(images)
                loss = torch.nn.functional.cross_entropy(outputs, labels)
                loss.backward()
                optimizer.step()
        # Return the updated weights, the local sample count, and (empty) metrics
        return self.get_parameters(config={}), len(trainloader.dataset), {}

# Start the client. Recent Flower releases expect a Client object here, hence
# .to_client(); older releases used fl.client.start_numpy_client(...) instead.
fl.client.start_client(server_address="federated-server:8080", client=MedicalClient().to_client())
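Each client's `fit` returns its updated parameters together with the number of local examples. On the server side, the federated averaging (FedAvg) strategy, which is Flower's default, combines these updates into a weighted mean in which larger datasets count for more. The sketch below shows that aggregation step on flattened parameter lists in plain Python. It illustrates the idea only and is not Flower's own implementation.

```python
from typing import List, Tuple

# (flattened parameters, number of local training examples)
ClientUpdate = Tuple[List[float], int]


def federated_average(updates: List[ClientUpdate]) -> List[float]:
    """Average client parameters, weighting each client by its example count."""
    total_examples = sum(num_examples for _, num_examples in updates)
    if total_examples == 0:
        raise ValueError("no training examples reported")
    averaged = [0.0] * len(updates[0][0])
    for parameters, num_examples in updates:
        weight = num_examples / total_examples
        for i, value in enumerate(parameters):
            averaged[i] += weight * value
    return averaged


if __name__ == "__main__":
    hospital_a = ([1.0, 2.0], 100)  # small hospital
    hospital_b = ([3.0, 4.0], 300)  # three times as much data
    print(federated_average([hospital_a, hospital_b]))  # [2.5, 3.5]
```

Only these parameter lists and counts travel to the server, which is what lets the raw patient records stay inside each hospital.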

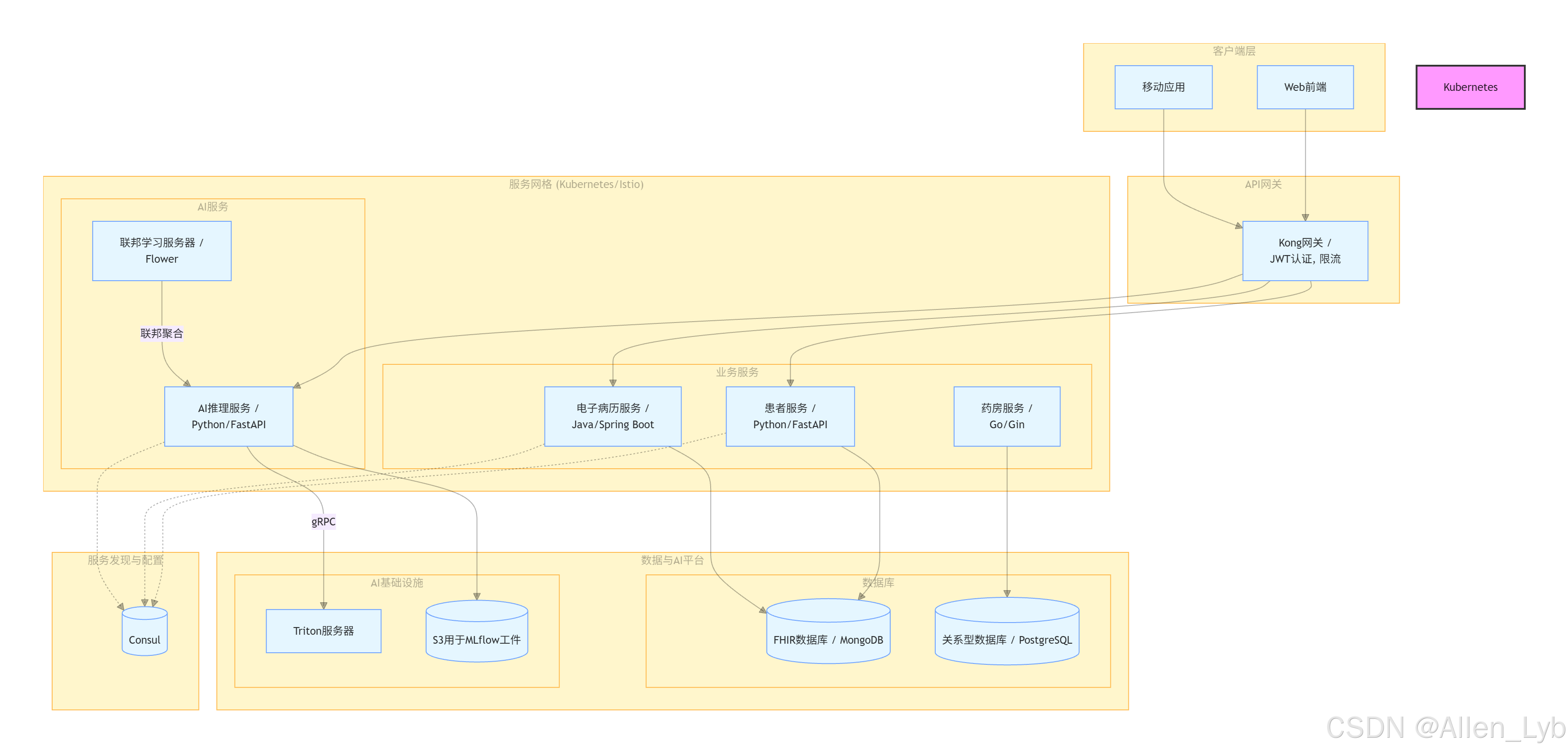

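Chapter 4: System Architecture Visualization and Orchestration

Diagrams and configuration files replace prose descriptions.

4.1 System Architecture Diagram

4.2 Kubernetes Orchestration Example

patient-service-deployment.yaml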

apiVersion: apps/v1
kind: Deployment
metadata:
  name: patient-service-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: patient-service
  template:
    metadata:
      labels:
        app: patient-service
    spec:
      containers:
        - name: patient-service
          image: your-registry/patient-service:latest
          ports:
            - containerPort: 8000
          env:
            - name: CONSUL_HOST
              value: "consul-service.default.svc.cluster.local"
            - name: DB_CONNECTION_STRING
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: connection-string
---
apiVersion: v1
kind: Service
metadata:
  name: patient-service
spec:
  selector:
    app: patient-service
  ports:
    - protocol: TCP
      port: 80          # port exposed by the Service inside the cluster
      targetPort: 8000  # container port
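The Deployment injects `CONSUL_HOST` and `DB_CONNECTION_STRING` as environment variables. On the application side, these could be read once at startup so that a missing secret fails fast. The following is a sketch under that assumption; the `Settings` class and the fallback host `"consul"` are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Configuration injected by the Kubernetes manifest."""

    consul_host: str
    db_connection_string: str

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "Settings":
        """Read settings from `env`; the connection string is mandatory."""
        try:
            db_connection_string = env["DB_CONNECTION_STRING"]
        except KeyError:
            raise RuntimeError("DB_CONNECTION_STRING is not set") from None
        return cls(
            consul_host=env.get("CONSUL_HOST", "consul"),
            db_connection_string=db_connection_string,
        )


if __name__ == "__main__":
    demo_env = {"DB_CONNECTION_STRING": "example-connection-string"}
    print(Settings.from_env(demo_env).consul_host)  # consul
```

Passing the environment in as a mapping keeps the loader easy to test without touching the real process environment.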

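Chapter 5: Code for Key Scenarios

5.1 Intelligent Triage Based on a Knowledge Graph

Tech stack: Neo4j (knowledge graph storage) + Cypher (query language)

Building the knowledge graph: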

// Create symptom nodes
CREATE (s1:Symptom {name: 'Cough', type: 'Respiratory'}),
       (s2:Symptom {name: 'Fever', type: 'Systemic'}),
       (s3:Symptom {name: 'Chest Pain', type: 'Respiratory'});

// Create disease nodes
CREATE (d1:Disease {name: 'Common Cold'}),
       (d2:Disease {name: 'Pneumonia'}),
       (d3:Disease {name: 'COVID-19'});

// Create relationships
MATCH (s:Symptom), (d:Disease)
WHERE s.name IN ['Cough', 'Fever'] AND d.name = 'Common Cold'
CREATE (s)-[:PRESENTS_IN]->(d);

MATCH (s:Symptom), (d:Disease)
WHERE s.name IN ['Cough', 'Fever', 'Chest Pain'] AND d.name = 'Pneumonia'
CREATE (s)-[:PRESENTS_IN]->(d);

MATCH (s:Symptom), (d:Disease)
WHERE s.name IN ['Cough', 'Fever'] AND d.name = 'COVID-19'
CREATE (s)-[:PRESENTS_IN]->(d);

Triage API logic (triage_service/app/main.py):

from fastapi import Body, FastAPI
from neo4j import GraphDatabase

app = FastAPI(title="Triage Service")

# Connect to the Neo4j database
driver = GraphDatabase.driver("neo4j://neo4j:7687", auth=("neo4j", "password"))

def triage(symptoms: list[str]):
    # Cypher query: for each disease, score = matched symptoms / all symptoms linked to it
    query = """
    MATCH (s:Symptom)-[r:PRESENTS_IN]->(d:Disease)
    WHERE s.name IN $symptoms
    WITH d, count(r) as symptom_count
    MATCH (d)<-[:PRESENTS_IN]-(:Symptom)
    WITH d, symptom_count, count(*) as total_symptoms_for_disease
    RETURN d.name as disease, (toFloat(symptom_count) / total_symptoms_for_disease) as score
    ORDER BY score DESC
    """
    with driver.session() as session:
        results = session.run(query, symptoms=symptoms)
        # Consume the result while the session is still open
        return [{"disease": record["disease"], "confidence": record["score"]} for record in results]

# FastAPI endpoint
@app.post("/triage")
async def get_triage(symptoms: list[str] = Body(..., embed=True)):
    # embed=True makes the endpoint expect {"symptoms": [...]} rather than a bare JSON array
    suggestions = triage(symptoms)
    return {"input_symptoms": symptoms, "suggested_departments": suggestions}

# Example call: POST /triage with body {"symptoms": ["Cough", "Fever"]}
# Returns: {"input_symptoms": ["Cough", "Fever"], "suggested_departments": [{"disease": "Common Cold", "confidence": 1.0}, ...]}
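To make the score in the Cypher query easier to check by hand, the sketch below mirrors it in plain Python over the same three diseases. The `GRAPH` dictionary is a hypothetical in-memory copy of the knowledge graph built above, and the alphabetical tie-break is an addition; the Cypher query leaves the order of tied diseases unspecified.

```python
from typing import Dict, List, Set

# Disease -> symptoms linked to it by PRESENTS_IN in the example graph.
GRAPH: Dict[str, Set[str]] = {
    "Common Cold": {"Cough", "Fever"},
    "Pneumonia": {"Cough", "Fever", "Chest Pain"},
    "COVID-19": {"Cough", "Fever"},
}


def score_diseases(symptoms: List[str], graph: Dict[str, Set[str]] = GRAPH) -> List[dict]:
    """Score = matched symptoms / all symptoms linked to the disease."""
    reported = set(symptoms)
    scored = []
    for disease, linked in graph.items():
        matched = len(reported & linked)
        if matched:  # diseases with no matching symptom are left out, as in the query
            scored.append({"disease": disease, "confidence": matched / len(linked)})
    # Highest score first; ties are broken by name for a stable order.
    scored.sort(key=lambda item: (-item["confidence"], item["disease"]))
    return scored


if __name__ == "__main__":
    for row in score_diseases(["Cough", "Fever"]):
        print(row["disease"], round(row["confidence"], 2))
```

For `["Cough", "Fever"]`, Common Cold and COVID-19 both score 1.0 while Pneumonia scores about 0.67. The score measures how much of a disease's symptom list the patient covers, so diseases with few recorded symptoms reach a high score easily.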

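5.2 Data Privacy Protection with Homomorphic Encryption

The TenSEAL library makes it possible to compute on encrypted health data without decrypting it.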

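import tenseal as ts

# 1. Healthcare institution A sets up the encryption context
context = ts.context(
    ts.SCHEME_TYPE.CKKS,
    poly_modulus_degree=8192,
    coeff_mod_bit_sizes=[60, 40, 40, 60]
)
context.global_scale = 2**40
context.generate_galois_keys()

# 2. Healthcare institution B holds the data to encrypt (for example, an age)
age = 35
encrypted_age = ts.ckks_vector(context, [age])

# 3. The cloud server (or a research institution) computes on the ciphertext.
# Example: a simplified risk score, score = age * 0.5 + 10.
# In a real deployment this party would only receive a context without the secret key.
risk_factor = 0.5
offset = 10
encrypted_score = encrypted_age * risk_factor + offset

# 4. The encrypted result is returned to institution A for decryption
decrypted_score = encrypted_score.decrypt()
print(f"Original Age: {age}, Decrypted Risk Score: {decrypted_score[0]:.2f}")

# Output: Original Age: 35, Decrypted Risk Score: 27.50
# (CKKS is an approximate scheme, so the low-order digits can vary slightly.)
# Throughout the process, the original age `35` is never visible to the cloud server.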
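To show what "computing on ciphertexts" means mechanically, here is a toy sketch of a different and much simpler scheme, Paillier, which supports adding a plaintext to a ciphertext and multiplying a ciphertext by a plaintext. This is not CKKS and not how TenSEAL works internally. The primes are tiny and insecure, all function names are hypothetical, and the score is computed in tenths (5 * age + 100) because Paillier works on integers.

```python
import math
import secrets
from typing import Tuple

PrivateKey = Tuple[int, int]  # (lambda, mu)


def generate_keys(p: int, q: int) -> Tuple[int, PrivateKey]:
    """Return (public modulus n, private key) for primes p and q, with g = n + 1."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse of lambda mod n
    return n, (lam, mu)


def encrypt(n: int, message: int) -> int:
    """Encrypt an integer 0 <= message < n under the public modulus n."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n: int, private_key: PrivateKey, ciphertext: int) -> int:
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_plain(n: int, ciphertext: int, k: int) -> int:
    """Ciphertext of (m + k), computed without the private key."""
    return (ciphertext * pow(n + 1, k, n * n)) % (n * n)


def mul_plain(n: int, ciphertext: int, k: int) -> int:
    """Ciphertext of (m * k), computed without the private key."""
    return pow(ciphertext, k, n * n)


if __name__ == "__main__":
    n, private_key = generate_keys(1009, 1013)  # toy primes, NOT secure
    encrypted_age = encrypt(n, 35)
    # The server side needs only n and the ciphertext.
    encrypted_tenths = add_plain(n, mul_plain(n, encrypted_age, 5), 100)
    print(decrypt(n, private_key, encrypted_tenths) / 10)  # 27.5
```

The party performing `mul_plain` and `add_plain` never holds `private_key`, which is the property the TenSEAL example relies on as well.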

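Chapter 6: Turning Challenges into Code

| Challenge | Solution | Code/configuration |
|---|---|---|
| System reliability | Chaos engineering | Chaos Mesh YAML configuration that injects latency or Pod failures. |
| Model explainability (XAI) | SHAP/LIME | Python snippet that analyzes feature importance for a prediction. |
| Data compliance auditing | Logging and tracing | OpenTelemetry automatic instrumentation for Python. |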

Example: injecting network latency with Chaos Mesh

# network-delay.yaml
apiVersion: chaos-mesh.org/v1alpha1
kind: NetworkChaos
metadata:
  name: patient-service-delay
spec:
  action: delay        # fault type: delay
  mode: one            # select a single Pod
  selector:
    labelSelectors:
      app: "patient-service"
  delay:
    latency: "200ms"   # add 200 ms of latency
  duration: "30s"      # run for 30 seconds

Apply the chaos experiment:

kubectl apply -f network-delay.yaml

Example: explaining model predictions with SHAP (xai_service/app/main.py)

import shap
import torch

from model import LSTMModel

# ... load the model and data ...

# Create the SHAP explainer
explainer = shap.DeepExplainer(model, background_data_tensor)

# Explain a single sample
shap_values = explainer.shap_values(sample_to_explain_tensor)

# Visualize the result, or return it as JSON
shap.force_plot(explainer.expected_value[0], shap_values[0], feature_names=feature_names)
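The numbers that `force_plot` draws have a simple reading: the base value plus the sum of the SHAP values equals the model's prediction for that sample. For a linear model with independent features this can be worked out exactly, since each feature's SHAP value is its weight times its deviation from the background mean. The sketch below demonstrates that special case in plain Python. It is not the DeepExplainer algorithm, and the feature values and weights are made up.

```python
from typing import List, Sequence


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Linear model f(x) = bias + sum(w_i * x_i)."""
    return bias + sum(w * v for w, v in zip(weights, x))


def feature_means(background: List[Sequence[float]]) -> List[float]:
    """Per-feature mean over the background dataset."""
    count = len(background)
    return [sum(row[i] for row in background) / count for i in range(len(background[0]))]


def linear_shap_values(weights: Sequence[float], sample: Sequence[float],
                       background: List[Sequence[float]]) -> List[float]:
    """Exact SHAP values for a linear model: phi_i = w_i * (x_i - mean_i)."""
    means = feature_means(background)
    return [w * (x - m) for w, x, m in zip(weights, sample, means)]


if __name__ == "__main__":
    weights, bias = [0.5, 2.0], 10.0          # hypothetical model
    background = [[30.0, 1.0], [40.0, 3.0]]   # hypothetical (age, lab value) rows
    sample = [45.0, 1.0]

    base_value = predict(weights, bias, feature_means(background))
    phi = linear_shap_values(weights, sample, background)
    print(base_value, phi)                                   # 31.5 [5.0, -2.0]
    print(base_value + sum(phi), predict(weights, bias, sample))  # 34.5 34.5
```

Here the age pushes the score up by 5.0 and the second feature pulls it down by 2.0, which is the kind of per-feature attribution a clinician would read off the plot.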

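Conclusion

Through hands-on code, this document sketches a blueprint for a secure, scalable, and intelligent healthcare microservice platform. It shows that every layer of the architecture, from data standards and service governance to AI integration and privacy protection, can be realized with concrete code, configuration, and orchestration tools. Future development should keep iterating on and refining these reusable components and patterns to further the digital transformation of the healthcare industry.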
