Unmasking the Ranking Scam: Skill, MCP, RAG, Agent & OpenClaw


You’ll eventually realize that what people call an “agent” is simply the collection of all the parts that don’t require intelligence.

And “skill”? That’s just old wine in a new bottle — a branding scam dressed up as innovation.

By the end, I’ll give you a unified methodology that cuts through every current buzzword — and even the ones that haven’t been invented yet — so you can instantly see through them.

That sentence might be grammatically questionable. But that doesn’t matter.

Right now, just clear your mind. Forget everything you think you know — and don’t know. Let’s enter the dream together.

Everything started with this ancient thing: the language model.

Early small language models were basically idiots. But as the number of parameters kept increasing, at some critical point, something emerged — intelligence.

To distinguish this new “intelligent” model from the old idiot, you simply added the word “Large” in front of it.

And just like that, you invented today’s first buzzword:

Large Language Model. LLM.

Congratulations.

But fundamentally, a large model can only do one thing: predict the next token.

If you just let it continue text, it still looks like an idiot.

But if you artificially split roles — one side asking, one side answering — suddenly you have something that resembles intelligence: dialogue.
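Here is a minimal sketch of that role-splitting trick. The `user:` / `assistant:` labels and the `render_dialogue` helper are made up for illustration; real chat models use their own special tokens, but the idea is the same: the "answer" is just the continuation of a transcript whose last turn is left open.

```python
def render_dialogue(turns):
    """Flatten (role, text) turns into one string and leave the assistant's turn open."""
    lines = [f"{role}: {text}" for role, text in turns]
    # The model only continues text; ending on an open "assistant:" line
    # makes the most likely continuation look like an answer.
    lines.append("assistant:")
    return "\n".join(lines)


prompt = render_dialogue([("user", "What is the capital of France?")])
print(prompt)
```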

Now imagine you’re a boss.

The LLM is your employee. Let’s call him Little L.

But there’s a catch: he only answers once. One question, one answer. No follow-ups.

That limitation is extremely important.

Your mission from here on is simple: squeeze every ounce of value out of this one-shot employee.

But first, you give each interaction a fancy name: prompt.

Congratulations. Second buzzword unlocked.

Then you realize prompts have structure. Some parts are background information, others are the final instruction.

So you name the background part context.

Third buzzword.

But what if you want follow-up questions?

Remember: Little L only answers once.

So you come up with a trick. Before every new question, you paste the entire conversation history into the context. You fake a multi-turn dialogue.

You call that memory.

Fourth buzzword.
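A rough sketch of the trick, under the assumption that the model is a plain function from one prompt string to one reply. `fake_model` and `Chat` are hypothetical stand-ins, not any vendor's API. Notice that the model itself stores nothing; the history list lives entirely on your side.

```python
def fake_model(prompt: str) -> str:
    """Stand-in for a stateless one-shot model: it reports how many lines it was shown."""
    return f"(reply after seeing {len(prompt.splitlines())} lines)"


class Chat:
    def __init__(self, model):
        self.model = model
        self.history = []  # the only "memory" lives here, outside the model

    def ask(self, question: str) -> str:
        self.history.append(f"user: {question}")
        # Paste the whole conversation so far into a single prompt.
        prompt = "\n".join(self.history + ["assistant:"])
        answer = self.model(prompt)
        self.history.append(f"assistant: {answer}")
        return answer


chat = Chat(fake_model)
first = chat.ask("Who wrote Dune?")
second = chat.ask("When was it published?")
```

The second call sends four lines instead of two, which is also why long conversations get expensive: every turn pays for all the turns before it.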

Then you even use the model itself to summarize and compress memory to save tokens.
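One way this compression could look, as a sketch only. `compress_history`, its thresholds, and `fake_summarize` are all invented for illustration; in practice the summarizer would be another call to the model.

```python
def compress_history(history, summarize, keep_last=2, max_turns=4):
    """Replace older turns with one summary line once the history grows too long."""
    if len(history) <= max_turns:
        return list(history)
    old, recent = history[:-keep_last], history[-keep_last:]
    return [f"summary: {summarize(old)}"] + recent


def fake_summarize(turns):
    """Stand-in for asking the model to summarize the older turns."""
    return f"{len(turns)} earlier turns omitted"


history = [f"user: message {i}" for i in range(6)]
compressed = compress_history(history, fake_summarize)
```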

And just like that, your once-idiotic text predictor has become a seemingly thoughtful conversational employee.

But you’re not satisfied.

Little L can’t browse the web. If he doesn’t know something, he hallucinates or recites outdated information.

Easy fix, right? Just give him a computer.

But wait — he still only predicts tokens.

So you tell him:

“If you need to search the web, tell me.”

You manually perform the search, then feed results back.

Soon you realize… who’s the real employee here?

So you automate the search logic with a program that sits between you and Little L.

To outsiders, it still looks like simple Q&A. But now there’s a mysterious program in between.

This is brilliant.

It feels like intelligence.

Tool-using intelligence.

You name it: Agent.
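The "mysterious program" can be sketched in a few lines. Everything here is an assumption made for illustration: the `SEARCH:` / `ANSWER:` prefixes, the stub `fake_model`, and the stub `fake_search`. The point is the loop: call the model, check whether it asked for a tool, run the tool, paste the result back into the context, and repeat.

```python
def fake_model(context: str) -> str:
    """Hypothetical model: asks for a search unless results are already in its context."""
    if "SEARCH RESULT:" in context:
        return "ANSWER: Based on the search, the launch is scheduled for Friday."
    return "SEARCH: launch date"


def fake_search(query: str) -> str:
    """Stub search tool; a real one would call a search API."""
    return f"(stub result for '{query}') launch is scheduled for Friday"


def run_agent(question, model, search, max_steps=3):
    context = f"user: {question}"
    for _ in range(max_steps):
        reply = model(context)
        if reply.startswith("SEARCH:"):
            query = reply[len("SEARCH:"):].strip()
            # Run the tool on the model's behalf and feed the result back in.
            context += f"\nSEARCH RESULT: {search(query)}"
        else:
            return reply
    return "gave up: too many tool calls"


answer = run_agent("When is the launch?", fake_model, fake_search)
```

From the outside it is still one question and one answer. The loop in the middle contains no intelligence at all.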

Don’t feel embarrassed. Early so-called “agents” were literally just a longer prompt.

Looking back, it was borderline marketing fraud.

Since your agent can search the web, why not search local documents too?

Sure. But now instead of keyword matching, you use vector databases to retrieve semantically similar chunks.

You name this approach: Retrieval-Augmented Generation. RAG.
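A toy sketch of the retrieval step. To stay self-contained it uses bag-of-words counts as the "embedding", which is really keyword overlap in disguise; a real setup would swap `embed` for a learned embedding model and the list for a vector database. The function names and the sample chunks are made up.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=1):
    """Return the k chunks whose vectors are closest to the query vector."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


chunks = [
    "refunds are processed within five business days",
    "the office is closed on public holidays",
    "passwords must be rotated every ninety days",
]
question = "how long do refunds take"
top = retrieve(question, chunks)
# The retrieved chunk is pasted into the context ahead of the question.
prompt = f"context: {top[0]}\nquestion: {question}"
```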

And maybe you give web search its own fancy name too.

In the end, they’re all the same category:

Ways of injecting information beyond the model’s parameters into the context.

At this point, you’ve already invented eight buzzwords.

Now let’s examine the architecture.

Between you and Little L sits the agent. It relays your messages to the model and handles tool usage, so you no longer talk to Little L directly.

But if the agent and the model communicate in free-form natural language, it’s impossible to reliably parse tool calls in code.

So you impose structure.

You require the model to output JSON.

You call this agreement: function calling.

It’s nothing more than a format contract — just like frontend and backend agreeing on an API schema.
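A sketch of what that contract buys you on the agent side. The `{"name": ..., "arguments": ...}` shape and the `TOOLS` registry are assumptions for illustration; each vendor defines its own exact schema. Once the output is JSON, dispatching a tool call is ordinary parsing code.

```python
import json

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS = {"add": lambda a, b: a + b}


def handle_model_output(raw: str):
    """Treat the output as a tool call if it parses as one, otherwise as plain text."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return ("text", raw)
    if not isinstance(call, dict) or call.get("name") not in TOOLS:
        return ("text", raw)
    result = TOOLS[call["name"]](**call.get("arguments", {}))
    return ("tool_result", result)


tool_case = handle_model_output('{"name": "add", "arguments": {"a": 2, "b": 3}}')
text_case = handle_model_output("Hello there")
```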

Then you notice tool implementations are hardcoded inside the agent.

So you separate them into standalone services.

Now the agent needs a protocol to discover and call tools.

You define conventions like tools/list and tools/call.

You name this agreement: MCP — Model Context Protocol.
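To make the two conventions concrete, here is an in-process sketch of a tool provider answering `tools/list` and `tools/call`. MCP messages are JSON-RPC 2.0, and the result shapes below follow that pattern, but this is a simplification: the transport, the initialization handshake, and proper error handling are all left out, and the `word_count` tool is invented.

```python
import json

TOOLS = {
    "word_count": {  # hypothetical example tool
        "description": "Count the words in a piece of text.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": lambda args: str(len(args["text"].split())),
    }
}


def handle_request(raw: str) -> str:
    """Answer a single JSON-RPC request with a JSON-RPC response."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        text = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


listing = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
called = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
     "params": {"name": "word_count", "arguments": {"text": "one two three"}}})))
```

The agent first asks what tools exist, then calls one by name. Neither step involves the model.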

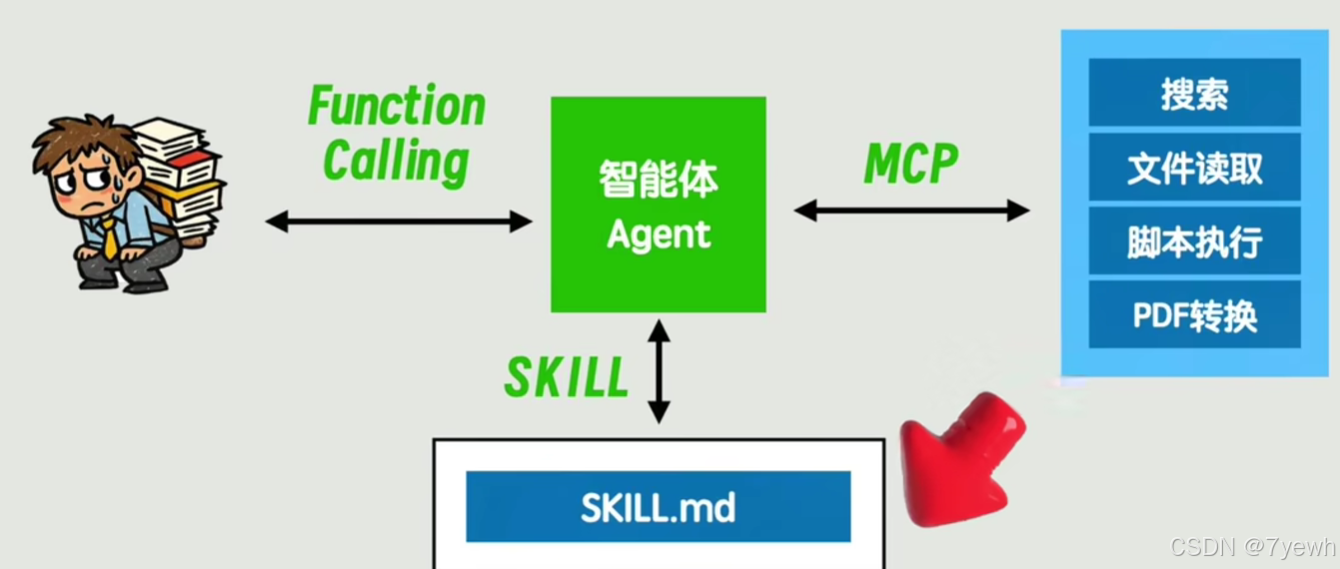

Now the architecture looks like this:

The model is a wise philosopher who can only speak.

MCP services are tool providers.

The agent is the messenger translating speech into API calls and passing results back.

And to you, it says: I don’t produce information. I just transport it.

On the interface side, the agent can appear as:

- A CLI
- An IDE
- A desktop assistant
- Or viral products like Clawdbot / Moltbot / OpenClaw

Different skins. Same core.

Now suppose the task is:

Extract text from an English PDF → translate to Chinese → save as Markdown.

You could let the agent plan freely every time.

But that wastes tokens and reduces stability.

So you hardcode stable parts into scripts.

You build chains.
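A hardcoded chain for the PDF task might look like the sketch below. All three steps are stubs with invented names: the "document" is a plain dict rather than a real PDF, and `translate` stands in for the one step that would actually call the model.

```python
def extract_text(document):
    """Stub for PDF text extraction; here the document is just a dict."""
    return document["body"]


def translate(text):
    """Stub for the model call that translates English to Chinese."""
    return f"[zh] {text}"


def to_markdown(text, title):
    return f"# {title}\n\n{text}\n"


def run_chain(document):
    # The order of steps is fixed in code; the model never has to plan it.
    text = extract_text(document)
    translated = translate(text)
    return to_markdown(translated, document["title"])


markdown = run_chain({"title": "Report", "body": "Hello world"})
```

The plan costs zero tokens and never changes between runs, which is exactly the stability you hardcoded it for.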

You invent LangChain.

Then low-code workflow builders.

But what if the input format varies: PDF, Word, TXT, PPT? And what if the output format varies too?

Do you build workflows for every combination?

You could write if-else logic. But that breaks natural-language flexibility.

So instead, you create a directory of scripts and a master instruction file.

The agent reads instructions and selects scripts dynamically.

Then you optimize again: hardcode a loader.

You name it: skill.

In reality?

You just moved prompts into a different folder.
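A sketch of such a loader, assuming one folder per skill with an instruction file inside. The file name `SKILL.md`, the folder name, and both helper functions are illustrative choices here, not a fixed standard. The loader keeps a one-line index in context and pulls in the full instructions only when a skill is chosen.

```python
import tempfile
from pathlib import Path


def load_skill_index(root: Path) -> str:
    """One line per skill: folder name plus the first line of its instruction file."""
    lines = []
    for skill_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        first_line = (skill_dir / "SKILL.md").read_text(encoding="utf-8").splitlines()[0]
        lines.append(f"{skill_dir.name}: {first_line}")
    return "\n".join(lines)


def load_skill(root: Path, name: str) -> str:
    """Full instructions, pasted into the context only once the skill is selected."""
    return (root / name / "SKILL.md").read_text(encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "pdf-to-markdown").mkdir()
    (root / "pdf-to-markdown" / "SKILL.md").write_text(
        "Convert a PDF to Markdown.\nRun scripts/extract.py, then translate.\n",
        encoding="utf-8",
    )
    index = load_skill_index(root)
    full = load_skill(root, "pdf-to-markdown")
```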

Then comes sub-agent.

For complex tasks, you isolate context into smaller agents.

In essence? Context isolation.

That’s it.
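Context isolation fits in a few lines. In this sketch `fake_model` and `run_subagent` are hypothetical: the sub-agent builds up its own context with noisy tool output, and only its final result is copied back to the parent.

```python
def fake_model(context):
    """Stand-in model: just reports how much context it was given."""
    return f"summary of {len(context)} context lines"


def run_subagent(task, model):
    context = [f"task: {task}"]  # fresh context, nothing inherited from the parent
    context.append("tool output: (imagine pages of logs)")
    context.append("tool output: (more logs)")
    return model(context)


parent_context = ["user: audit the repo"]
result = run_subagent("list failing tests", fake_model)
# Only the final result crosses the boundary; the logs stay behind.
parent_context.append(f"subagent result: {result}")
```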

Let’s zoom out.

Every new term arrived with exaggerated hype and marketing.

But fundamentally, these are transitional artifacts of technological evolution.

Clarifying common confusions:

Function calling vs MCP?

One defines the format between the model and the agent.

The other defines the protocol between the agent and the tools.

Different layers.

Skill vs MCP?

Skill is just a prompt loader.

MCP is a tool protocol.

Different dimensions.

From rigid to flexible:

LangChain → Workflow → Skill → Pure Agent

Hardcoded stability → low-code → semi-guided agent → fully autonomous agent.

More flexibility means more unpredictability.

The tradeoff is obvious.

Now the deeper truth:

All of this still revolves around prompts and context.

Search, RAG, Skill — they’re all mechanisms for stuffing more information into context.

Agents?

They handle everything that doesn’t require intelligence.

Deterministic logic → code

Ambiguous branching → model

That’s the real division of labor.
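As a sketch of that split: the known file extensions are handled by a lookup table, and the model is consulted only when the table has no answer. The handler names and the `ask_model` callback are invented for illustration.

```python
# Deterministic part: a fixed mapping that needs no model call.
HANDLERS = {".pdf": "extract_pdf", ".docx": "extract_word", ".txt": "read_text"}


def choose_handler(filename, ask_model):
    for ext, handler in HANDLERS.items():
        if filename.lower().endswith(ext):
            return handler
    # Ambiguous part: no rule matched, so defer to the model.
    options = sorted(HANDLERS.values())
    return ask_model(f"Which handler fits '{filename}'? Options: {options}")


model_calls = []


def fake_model(prompt):
    """Stand-in model that records each question and always picks read_text."""
    model_calls.append(prompt)
    return "read_text"


known = choose_handler("report.PDF", fake_model)
unknown = choose_handler("notes", fake_model)
```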

The biggest bottleneck today?

Token cost.

Powerful agents consume enormous numbers of tokens.

But token cost will drop.

Eventually, production-level models will run locally.

At that point, tokens become effectively free.

Look at software history.

Java has Spring Boot.

Python has uv.

They prioritize developer convenience over performance micro-optimizations.

Convenience wins.

In the agent world, it’s even more extreme.

End users won’t configure MCP services.

They won’t manage skill directories.

They won’t choose APIs.

The product that hides complexity will win.

Why is Clawdbot so popular?

Not because it’s fundamentally different.

It connects to social apps.

Supports scheduled tasks.

Has a visual skill manager.

For the first time, ordinary users feel like they’re interacting with an intelligent being — not a background service.
