Online Exam System Based on Spring AI: Overall Architecture Optimization Design (Continued)


Optimized Implementation Plan for the Education Exam System

1. Core Architecture Diagrams (Detailed Implementation)

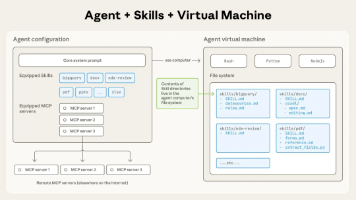

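1.1 Overall Microservice Architecture

1.2 Containerized Deployment Architecture

2. Core Sequence Diagrams

2.1 Exam Flow Sequence Diagram

2.2 Intelligent Recommendation Sequence Diagram

3. Best Practices for Core Algorithm Implementation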

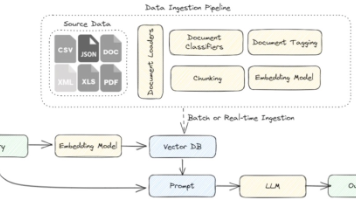

3.1 Intelligent Recommendation Algorithm Implementation

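// Core algorithm implementation of the recommendation service
// (assumes Spring Kafka, Spring Data Redis, Spark MLlib, fastjson and Lombok on the classpath)
import com.alibaba.fastjson.JSON;
import lombok.extern.slf4j.Slf4j;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.mllib.recommendation.ALS;
import org.apache.spark.mllib.recommendation.MatrixFactorizationModel;
import org.apache.spark.mllib.recommendation.Rating;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Service;

import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

@Service
@Slf4j
public class IntelligentRecommendationService {

    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    @Autowired
    private UserProfileService userProfileService;

    @Autowired
    private QuestionService questionService;

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    // Assumption: a JavaSparkContext bean is configured elsewhere; it is needed to save the ALS model
    @Autowired
    private JavaSparkContext sparkContext;

    // Hybrid recommendation: weighted fusion of three recommenders
    public List<QuestionRecommendation> hybridRecommend(Long userId, int count) {
        long startTime = System.currentTimeMillis();
        try {
            // 1. Fetch the three candidate lists in parallel
            //    (without an explicit executor, supplyAsync uses the common ForkJoinPool;
            //    a dedicated pool is safer when the recommenders do blocking I/O)
            CompletableFuture<List<QuestionRecommendation>> cf1 =
                    CompletableFuture.supplyAsync(() -> collaborativeFiltering(userId, count));
            CompletableFuture<List<QuestionRecommendation>> cf2 =
                    CompletableFuture.supplyAsync(() -> contentBasedRecommend(userId, count));
            CompletableFuture<List<QuestionRecommendation>> cf3 =
                    CompletableFuture.supplyAsync(() -> popularityRecommend(userId, count));

            // 2. Wait for all results; join() rethrows failures as an unchecked CompletionException
            CompletableFuture.allOf(cf1, cf2, cf3).join();

            // 3. Weighted fusion: collaborative filtering 0.4, content-based 0.3, popularity 0.3
            Map<Long, Double> scoreMap = new HashMap<>();
            accumulateScores(scoreMap, cf1.join(), 0.4);
            accumulateScores(scoreMap, cf2.join(), 0.3);
            accumulateScores(scoreMap, cf3.join(), 0.3);

            // 4. Sort by fused score (descending) and return the top `count`
            return scoreMap.entrySet().stream()
                    .sorted(Map.Entry.<Long, Double>comparingByValue().reversed())
                    .limit(count)
                    .map(entry -> QuestionRecommendation.builder()
                            .questionId(entry.getKey())
                            .score(entry.getValue())
                            .build())
                    .collect(Collectors.toList());
        } catch (Exception e) {
            log.error("Recommendation failed: userId={}", userId, e);
            return fallbackRecommend(userId, count);
        } finally {
            log.info("Recommendation took {}ms, userId={}",
                    System.currentTimeMillis() - startTime, userId);
        }
    }

    // Adds weight * (1 - 0.1 * rank / size) per item, so the score decays slightly with rank;
    // a question returned by several recommenders accumulates their scores
    private void accumulateScores(Map<Long, Double> scoreMap,
                                  List<QuestionRecommendation> results, double weight) {
        for (int i = 0; i < results.size(); i++) {
            double score = weight * (1.0 - i * 0.1 / results.size());
            scoreMap.merge(results.get(i).getQuestionId(), score, Double::sum);
        }
    }

    // User-based collaborative filtering
    private List<QuestionRecommendation> collaborativeFiltering(Long userId, int count) {
        // 1. Load the user-question rating matrix
        Map<Long, Map<Long, Double>> userItemMatrix = getUserItemMatrix();
        // 2. Compute similarity between the target user and other users
        Map<Long, Double> userSimilarities = computeUserSimilarity(userId, userItemMatrix);
        // 3. Predict the user's ratings for candidate questions
        Map<Long, Double> predictions = predictRatings(userId, userItemMatrix, userSimilarities);
        // 4. Return the top N
        return predictions.entrySet().stream()
                .sorted(Map.Entry.<Long, Double>comparingByValue().reversed())
                .limit(count)
                .map(entry -> new QuestionRecommendation(entry.getKey(), entry.getValue()))
                .collect(Collectors.toList());
    }

    // Offline ALS matrix-factorization training (Spark MLlib RDD API)
    public void trainALSModel() {
        // Build the training data
        JavaRDD<Rating> ratings = buildTrainingData();

        // ALS hyperparameters
        int rank = 10;          // number of latent factors
        int numIterations = 10; // number of iterations
        double lambda = 0.01;   // regularization parameter

        // Train the model
        MatrixFactorizationModel model = ALS.train(JavaRDD.toRDD(ratings), rank, numIterations, lambda);

        // Save the model; "hdfs:///" resolves against the default NameNode
        // ("hdfs://models/..." would treat "models" as the NameNode host name)
        model.save(sparkContext.sc(), "hdfs:///models/als/" + System.currentTimeMillis());

        // Evaluate the model (on the training data here; a held-out split gives a more honest MSE)
        double mse = evaluateModel(model, ratings);
        log.info("ALS model trained, MSE: {}", mse);
    }

    // Real-time feature updates driven by user behavior events
    @KafkaListener(topics = "user_behavior")
    public void processBehavior(String message) {
        UserBehavior behavior = JSON.parseObject(message, UserBehavior.class);

        // Update real-time features: last interaction time per question
        String key = String.format("user:feature:realtime:%d", behavior.getUserId());
        redisTemplate.opsForHash().put(key,
                behavior.getQuestionId().toString(),
                System.currentTimeMillis());

        // Sliding-window statistics
        updateWindowStats(behavior);

        // Refresh recommendations after an answer is submitted
        // (constant-first equals avoids a NullPointerException when the action is null)
        if ("submit_answer".equals(behavior.getAction())) {
            updateUserRecommendation(behavior.getUserId());
        }
    }

    // Helpers such as contentBasedRecommend, popularityRecommend, fallbackRecommend, getUserItemMatrix,
    // computeUserSimilarity, predictRatings, buildTrainingData, evaluateModel, updateWindowStats
    // and updateUserRecommendation are omitted here.
}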
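The Java service calls `computeUserSimilarity` and `predictRatings` without showing them, and folds the three candidate lists together with fixed weights. Below is a minimal Python sketch of both steps, assuming cosine similarity over co-rated questions and a similarity-weighted average for prediction (one common choice for user-based collaborative filtering, not necessarily what the original service uses). All function names are hypothetical; `fuse_rankings` mirrors the Java weighting `weight * (1 - 0.1 * rank / size)`.

```python
import math
from typing import Dict, List

Matrix = Dict[int, Dict[int, float]]  # user_id -> {question_id: rating}


def cosine_similarity(a: Dict[int, float], b: Dict[int, float]) -> float:
    """Cosine similarity computed over the questions both users rated."""
    common = a.keys() & b.keys()
    if not common:
        return 0.0
    dot = sum(a[q] * b[q] for q in common)
    norm_a = math.sqrt(sum(a[q] ** 2 for q in common))
    norm_b = math.sqrt(sum(b[q] ** 2 for q in common))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def compute_user_similarity(user_id: int, matrix: Matrix) -> Dict[int, float]:
    """Similarity between the target user and every other user."""
    target = matrix.get(user_id, {})
    return {other: cosine_similarity(target, ratings)
            for other, ratings in matrix.items() if other != user_id}


def predict_ratings(user_id: int, matrix: Matrix, sims: Dict[int, float]) -> Dict[int, float]:
    """Similarity-weighted average of neighbours' ratings for questions the user has not rated."""
    seen = matrix.get(user_id, {})
    num: Dict[int, float] = {}
    den: Dict[int, float] = {}
    for other, sim in sims.items():
        if sim <= 0:
            continue  # ignore unrelated or dissimilar users
        for q, rating in matrix[other].items():
            if q in seen:
                continue
            num[q] = num.get(q, 0.0) + sim * rating
            den[q] = den.get(q, 0.0) + sim
    return {q: num[q] / den[q] for q in num}


def fuse_rankings(ranked_lists: List[List[int]], weights: List[float], count: int) -> List[int]:
    """Weighted rank fusion: score = weight * (1 - 0.1 * rank / size), summed across lists."""
    scores: Dict[int, float] = {}
    for items, weight in zip(ranked_lists, weights):
        for rank, q in enumerate(items):
            scores[q] = scores.get(q, 0.0) + weight * (1.0 - rank * 0.1 / len(items))
    return sorted(scores, key=scores.get, reverse=True)[:count]
```

With only one co-rated question the cosine is always 1.0, which is why practical systems usually require a minimum overlap or switch to adjusted cosine or Pearson correlation. In `fuse_rankings`, a question that appears in two lists (e.g. rank 3 in collaborative filtering and rank 1 in content-based) outranks the top item of any single list, which is the intended effect of score accumulation.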

3.2 Learning Behavior Analysis Model

# Learning behavior analysis model - Python implementation
import json
from datetime import datetime, timedelta

import joblib
import numpy as np
import pandas as pd
import tensorflow as tf
from sklearn.cluster import KMeans
from sklearn.ensemble import RandomForestClassifier
from sklearn.preprocessing import StandardScaler
from tensorflow.keras import layers, models
from xgboost import XGBClassifier


class LearningBehaviorAnalyzer:

    def __init__(self):
        self.feature_scaler = None
        self.cluster_model = None
        self.prediction_model = None
        self.sequence_model = None

    def extract_features(self, user_data):
        """Extract learning-behavior features; returns a one-row DataFrame."""
        features = {}

        # 1. Time features
        features['study_duration_daily'] = self._calc_daily_study_time(user_data)
        features['study_consistency'] = self._calc_study_consistency(user_data)
        features['preferred_study_time'] = self._get_preferred_time(user_data)

        # 2. Efficiency features
        features['answer_accuracy'] = self._calc_accuracy(user_data)
        features['avg_time_per_question'] = self._calc_avg_time(user_data)
        features['concentration_score'] = self._calc_concentration(user_data)

        # 3. Content features
        features['knowledge_coverage'] = self._calc_coverage(user_data)
        features['difficulty_preference'] = self._calc_difficulty_pref(user_data)
        features['learning_path_complexity'] = self._calc_path_complexity(user_data)

        # 4. Interaction features
        features['replay_frequency'] = self._calc_replay_freq(user_data)
        features['hint_usage_rate'] = self._calc_hint_usage(user_data)
        features['review_ratio'] = self._calc_review_ratio(user_data)

        return pd.DataFrame([features])

    def cluster_students(self, features_df, n_clusters=4):
        """Segment students with KMeans clustering.

        features_df must be numeric: encode categorical columns such as
        preferred_study_time before calling this method.
        """
        # Standardize features so that no single scale dominates the distance
        self.feature_scaler = StandardScaler()
        X_scaled = self.feature_scaler.fit_transform(features_df)

        # KMeans clustering; n_init is explicit because its default changed across scikit-learn versions
        self.cluster_model = KMeans(n_clusters=n_clusters, n_init=10, random_state=42)
        clusters = self.cluster_model.fit_predict(X_scaled)

        # Profile each cluster
        cluster_profiles = self._analyze_clusters(features_df, clusters)
        return clusters, cluster_profiles

    def predict_learning_outcome(self, X_train, y_train, X_test):
        """Predict learning outcomes with XGBoost."""
        # Feature engineering
        X_train_processed = self._feature_engineering(X_train)
        X_test_processed = self._feature_engineering(X_test)

        # Train the XGBoost model
        self.prediction_model = XGBClassifier(
            n_estimators=100,
            max_depth=6,
            learning_rate=0.1,
            subsample=0.8,
            colsample_bytree=0.8,
            random_state=42,
        )
        self.prediction_model.fit(X_train_processed, y_train)

        # Predict labels and class probabilities
        y_pred = self.prediction_model.predict(X_test_processed)
        y_pred_proba = self.prediction_model.predict_proba(X_test_processed)
        return y_pred, y_pred_proba

    def build_sequence_model(self, sequences, labels):
        """Build and train an LSTM sequence model."""
        vocab_size = 10000     # number of knowledge points (IDs must be < vocab_size)
        embedding_dim = 128
        sequence_length = 50   # sequences must be padded/truncated to this length

        model = models.Sequential([
            # An explicit Input layer replaces Embedding(input_length=...), deprecated in Keras 3
            layers.Input(shape=(sequence_length,)),
            layers.Embedding(vocab_size, embedding_dim),
            layers.Bidirectional(layers.LSTM(64, return_sequences=True)),
            layers.LSTM(32),
            layers.Dense(64, activation='relu'),
            layers.Dropout(0.3),
            layers.Dense(1, activation='sigmoid'),
        ])
        model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

        # Train the model
        history = model.fit(sequences, labels, epochs=10, batch_size=32,
                            validation_split=0.2, verbose=1)
        self.sequence_model = model
        return history

    def _calc_daily_study_time(self, user_data):
        """Average study time per active day."""
        study_records = user_data.get('study_records', [])
        if not study_records:
            return 0
        total_duration = sum(r.get('duration', 0) for r in study_records)
        study_days = len({r['date'] for r in study_records})
        return total_duration / max(study_days, 1)

    def _calc_study_consistency(self, user_data):
        """Study consistency: 1 / (1 + average gap in days between study dates)."""
        study_dates = {r['date'] for r in user_data.get('study_records', [])}
        if len(study_dates) <= 1:
            return 0
        date_list = sorted(datetime.strptime(d, '%Y-%m-%d') for d in study_dates)
        gaps = [(date_list[i + 1] - date_list[i]).days for i in range(len(date_list) - 1)]
        avg_gap = np.mean(gaps)
        return 1.0 / (1 + avg_gap)  # smaller gaps mean higher consistency

    def detect_learning_patterns(self, behavior_sequence):
        """Detect learning patterns."""
        patterns = {
            'procrastination': False,  # procrastination
            'cramming': False,         # last-minute cramming
            'steady_progress': False,  # steady progress
            'burnout_risk': False,     # risk of burnout
        }

        # Analyze the time distribution
        time_distribution = self._analyze_time_distribution(behavior_sequence)

        # Detect procrastination
        if self._is_procrastination_pattern(time_distribution):
            patterns['procrastination'] = True
        # Detect cramming
        if self._is_cramming_pattern(time_distribution):
            patterns['cramming'] = True
        # Detect steady progress
        if self._is_steady_pattern(time_distribution):
            patterns['steady_progress'] = True
        # Detect burnout risk
        if self._is_burnout_risk(behavior_sequence):
            patterns['burnout_risk'] = True

        return patterns

    def generate_personalized_recommendations(self, user_features, cluster_label):
        """Generate personalized study suggestions.

        KMeans assigns cluster IDs arbitrarily, so the mapping below
        (0 = efficient learners, and so on) only holds once the clusters
        have been inspected and relabeled accordingly.
        """
        # Accept a dict/Series or the one-row DataFrame returned by extract_features
        if isinstance(user_features, pd.DataFrame):
            user_features = user_features.iloc[0]

        recommendations = []
        if cluster_label == 0:  # efficient learners
            recommendations.extend([
                "Take on more difficult questions",
                "Try integrated questions that span several knowledge points",
                "Practice competition-level problems",
            ])
        elif cluster_label == 1:  # need to improve efficiency
            recommendations.extend([
                "Practice weak knowledge points more",
                "Use the Pomodoro technique to improve focus",
                "Review your mistake notebook regularly",
            ])
        elif cluster_label == 2:  # not enough study time
            recommendations.extend([
                "Make a daily study plan",
                "Use short blocks of spare time for study",
                "Finish the core knowledge points first",
            ])
        elif cluster_label == 3:  # study method needs improvement
            recommendations.extend([
                "Understand the concepts before doing exercises",
                "Keep a mistake notebook and review it regularly",
                "Ask teachers or classmates for help",
            ])

        # Suggestions based on individual features
        if user_features['concentration_score'] < 0.5:
            recommendations.append("Reduce distractions while studying to improve concentration")
        if user_features['review_ratio'] < 0.3:
            recommendations.append("Review more often to consolidate what you have learned")

        return recommendations

    # Remaining helpers (_get_preferred_time, _calc_accuracy, _analyze_clusters,
    # _feature_engineering, _is_cramming_pattern, ...) are omitted here.
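`generate_personalized_recommendations` treats cluster 0 as efficient learners, 1 as needing better efficiency, 2 as short on study time and 3 as needing a better method, but KMeans numbers its clusters arbitrarily and the numbering can change between training runs. One way to pin the meaning down is to name clusters from their centroids, which could be obtained in original units with `self.feature_scaler.inverse_transform(self.cluster_model.cluster_centers_)`. The greedy rule below (highest accuracy, then lowest daily study time, then lower concentration) and the `name_clusters` helper are hypothetical illustrations, not part of the original design.

```python
from typing import Dict

# Persona IDs as used by generate_personalized_recommendations
EFFICIENT, NEEDS_EFFICIENCY, LOW_STUDY_TIME, NEEDS_BETTER_METHOD = 0, 1, 2, 3


def name_clusters(centroids: Dict[int, Dict[str, float]]) -> Dict[int, int]:
    """Map raw KMeans cluster IDs to persona IDs with a hypothetical greedy rule.

    centroids: raw cluster ID -> {feature name: centroid value in original units}.
    """
    if len(centroids) != 4:
        raise ValueError("this rule expects exactly 4 clusters")
    remaining = sorted(centroids)  # sorted for deterministic tie-breaking
    mapping: Dict[int, int] = {}

    # 1. Highest answer accuracy -> efficient learners
    best = max(remaining, key=lambda c: centroids[c]['answer_accuracy'])
    mapping[best] = EFFICIENT
    remaining.remove(best)

    # 2. Lowest daily study time among the rest -> not enough study time
    least_time = min(remaining, key=lambda c: centroids[c]['study_duration_daily'])
    mapping[least_time] = LOW_STUDY_TIME
    remaining.remove(least_time)

    # 3. Of the last two, lower concentration -> needs to improve efficiency
    low_focus = min(remaining, key=lambda c: centroids[c]['concentration_score'])
    mapping[low_focus] = NEEDS_EFFICIENCY
    remaining.remove(low_focus)

    # 4. The remaining cluster -> study method needs improvement
    mapping[remaining[0]] = NEEDS_BETTER_METHOD
    return mapping
```

At inference time the raw label from `cluster_model.predict(...)` would be translated with `mapping[raw_label]` before being passed as `cluster_label`, so the advice stays attached to the right group even after retraining reshuffles the IDs.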

3.3 Containerized Deployment Best Practices

# Example Kubernetes manifests
# 1. Namespace
apiVersion: v1
kind: Namespace
metadata:
  name: exam-system
  labels:
    name: exam-system
---
# 2. ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: exam-config
  namespace: exam-system
data:
  application.yml: |
    spring:
      application:
        name: exam-service
      datasource:
        url: jdbc:mysql://mysql-master.exam-system:3306/exam_db?useSSL=false&characterEncoding=utf8
        username: ${DB_USERNAME}
        password: ${DB_PASSWORD}
        hikari:
          maximum-pool-size: 20
          minimum-idle: 5
      redis:
        host: redis-master.exam-system
        port: 6379
        password: ${REDIS_PASSWORD}
        timeout: 3000ms
        lettuce:
          pool:
            max-active: 8
            max-idle: 8
            min-idle: 0
      rabbitmq:
        host: rabbitmq.exam-system
        port: 5672
        username: ${RABBITMQ_USERNAME}
        password: ${RABBITMQ_PASSWORD}
    server:
      port: 8080
---
# 3. Secret (base64 is an encoding, not encryption; values below are examples)
apiVersion: v1
kind: Secret
metadata:
  name: exam-secrets
  namespace: exam-system
type: Opaque
data:
  db-username: YWRtaW4=   # base64 of "admin"
  db-password: cGFzc3dvcmQ=
  redis-password: cmVkaXNfcGFzcw==
stringData:
  # Example values for the RabbitMQ credentials referenced by the ConfigMap
  rabbitmq-username: exam
  rabbitmq-password: change-me
---
# 4. Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: exam-service
  namespace: exam-system
  labels:
    app: exam-service
    version: v1.0.0
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
  selector:
    matchLabels:
      app: exam-service
  template:
    metadata:
      labels:
        app: exam-service
        version: v1.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
        prometheus.io/path: "/actuator/prometheus"
    spec:
      containers:
        - name: exam-service
          image: harbor.example.com/exam/exam-service:v1.0.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
              name: http
          env:
            - name: DB_USERNAME
              valueFrom:
                secretKeyRef:
                  name: exam-secrets
                  key: db-username
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: exam-secrets
                  key: db-password
            - name: REDIS_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: exam-secrets
                  key: redis-password
            - name: RABBITMQ_USERNAME
              valueFrom:
                secretKeyRef:
                  name: exam-secrets
                  key: rabbitmq-username
            - name: RABBITMQ_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: exam-secrets
                  key: rabbitmq-password
          resources:
            requests:
              memory: "512Mi"
              cpu: "250m"
            limits:
              memory: "1Gi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /actuator/health/liveness
              port: 8080
            initialDelaySeconds: 60
            periodSeconds: 10
            timeoutSeconds: 3
            failureThreshold: 3
          readinessProbe:
            httpGet:
              path: /actuator/health/readiness
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 5
            timeoutSeconds: 3
            failureThreshold: 3
          lifecycle:
            preStop:
              exec:
                # Give the endpoint controller time to remove the pod before shutdown
                command: ["sh", "-c", "sleep 10"]
          volumeMounts:
            # Spring Boot reads ./config/application.yml, so this assumes the image's working directory is /app
            - name: config-volume
              mountPath: /app/config
            - name: logs-volume
              mountPath: /app/logs
      volumes:
        - name: config-volume
          configMap:
            name: exam-config
        - name: logs-volume
          emptyDir: {}
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - exam-service
                topologyKey: kubernetes.io/hostname
---
# 5. Service
apiVersion: v1
kind: Service
metadata:
  name: exam-service
  namespace: exam-system
spec:
  selector:
    app: exam-service
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
      name: http
  type: ClusterIP
---
# 6. Horizontal Pod Autoscaler
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: exam-service-hpa
  namespace: exam-system
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: exam-service
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    # JVM heap is rarely returned to the OS, so a memory target can keep replicas from scaling down
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 300
      policies:
        - type: Percent
          value: 10
          periodSeconds: 60
    scaleUp:
      stabilizationWindowSeconds: 60
      policies:
        - type: Percent
          value: 20
          periodSeconds: 60
---
# 7. Ingress routing
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: exam-ingress
  namespace: exam-system
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - exam.example.com
      secretName: exam-tls-secret
  rules:
    - host: exam.example.com
      http:
        paths:
          # Regex paths used with rewrite-target need pathType ImplementationSpecific
          - path: /api/exam(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: exam-service
                port:
                  number: 80
          - path: /api/user(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: user-service
                port:
                  number: 80
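The HorizontalPodAutoscaler above combines a CPU target (70%) and a memory target (80%), both measured against the container requests. According to the Kubernetes documentation, the controller computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)` for each metric, leaves the count unchanged while that ratio stays within a tolerance (0.1 by default), acts on the largest proposal across metrics, and clamps the result to `minReplicas`/`maxReplicas`. This Python sketch reproduces only that core arithmetic for this manifest; it deliberately ignores the `behavior` section (stabilization windows and the 10%/20% per-minute limits), pod readiness and missing metrics, so treat it as an approximation.

```python
import math
from typing import Dict


def desired_replicas(current: int, usage: Dict[str, float], targets: Dict[str, float],
                     min_replicas: int = 3, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate HPA core formula for Utilization targets (percent of requests)."""
    proposals = []
    for metric, target in targets.items():
        ratio = usage[metric] / target
        if abs(ratio - 1.0) <= tolerance:
            proposals.append(current)  # within tolerance: no change for this metric
        else:
            proposals.append(math.ceil(current * ratio))
    # With several metrics the HPA acts on the largest proposal, then clamps to min/max
    return max(min_replicas, min(max_replicas, max(proposals)))


# Targets taken from exam-service-hpa
TARGETS = {"cpu": 70.0, "memory": 80.0}
```

For example, three pods averaging 140% of their CPU request and 80% of their memory request yield proposals of six and three replicas, so the HPA aims for six; the `scaleUp` policy then limits how quickly the Deployment actually grows toward that number.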

3.4 CI/CD Pipeline Configuration

// Jenkinsfile - CI/CD pipeline
pipeline {
    agent {
        kubernetes {
            label 'exam-builder'
            yaml """
                apiVersion: v1
                kind: Pod
                spec:
                  containers:
                    - name: jnlp
                      image: jenkins/inbound-agent:4.10-3-jdk11
                    - name: maven
                      image: maven:3.8.4-openjdk-11
                      command: ['cat']
                      tty: true
                      volumeMounts:
                        - name: maven-cache
                          mountPath: /root/.m2
                    - name: docker
                      image: docker:20.10.7
                      command: ['cat']
                      tty: true
                      volumeMounts:
                        - name: docker-sock
                          mountPath: /var/run/docker.sock
                    - name: kubectl
                      image: bitnami/kubectl:1.22.0
                      command: ['cat']
                      tty: true
                  volumes:
                    - name: maven-cache
                      persistentVolumeClaim:
                        claimName: maven-cache-pvc
                    - name: docker-sock
                      hostPath:
                        path: /var/run/docker.sock
            """
        }
    }

    environment {
        DOCKER_REGISTRY = 'harbor.example.com'
        PROJECT_NAME = 'exam-system'
        KUBE_NAMESPACE = 'exam-system'
        // Set per service repository; must match the Deployment and container name
        // (in a multibranch job, JOB_BASE_NAME is the branch name, not the service)
        SERVICE_NAME = 'exam-service'
        // kubectl reads KUBECONFIG; a "Secret file" credential resolves to the file's path
        KUBECONFIG = credentials('k8s-config')
    }

    stages {
        stage('Code checks') {
            steps {
                container('maven') {
                    // SpotBugs analyzes bytecode, so compile first
                    sh 'mvn compile checkstyle:check pmd:check spotbugs:check'
                }
            }
        }

        stage('Unit tests') {
            steps {
                container('maven') {
                    sh 'mvn clean test'
                }
                junit '**/target/surefire-reports/*.xml'
            }
        }

        stage('Integration tests') {
            steps {
                container('maven') {
                    sh 'mvn verify -Pintegration-test'
                }
            }
        }

        stage('Code coverage') {
            steps {
                container('maven') {
                    sh 'mvn jacoco:report'
                }
                jacoco(
                    execPattern: '**/target/*.exec',
                    classPattern: '**/target/classes',
                    sourcePattern: '**/src/main/java'
                )
            }
        }

        stage('Build image') {
            steps {
                script {
                    // Runs in the default jnlp container, which ships with git. Stored in env
                    // (not a local `def`) so that later stages and shell steps can see it.
                    env.IMAGE_TAG = sh(script: 'git rev-parse --short HEAD', returnStdout: true).trim()
                }
                container('docker') {
                    // Single-quoted: the shell, not Groovy, expands the environment variables
                    sh '''
                        docker build \
                          -t ${DOCKER_REGISTRY}/${PROJECT_NAME}/${SERVICE_NAME}:${IMAGE_TAG} \
                          -t ${DOCKER_REGISTRY}/${PROJECT_NAME}/${SERVICE_NAME}:latest \
                          .
                    '''
                }
            }
        }

        stage('Security scan') {
            steps {
                container('docker') {
                    // docker scan takes an image (not a build context) and needs the Snyk plugin;
                    // newer Docker releases deprecate it in favor of `docker scout cves`
                    sh 'docker scan --file Dockerfile ${DOCKER_REGISTRY}/${PROJECT_NAME}/${SERVICE_NAME}:${IMAGE_TAG}'
                }
            }
        }

        stage('Push image') {
            steps {
                container('docker') {
                    withCredentials([usernamePassword(
                        credentialsId: 'harbor-credentials',
                        usernameVariable: 'DOCKER_USER',
                        passwordVariable: 'DOCKER_PASS'
                    )]) {
                        sh '''
                            # --password-stdin keeps the password off the command line
                            echo "$DOCKER_PASS" | docker login "$DOCKER_REGISTRY" -u "$DOCKER_USER" --password-stdin
                            docker push ${DOCKER_REGISTRY}/${PROJECT_NAME}/${SERVICE_NAME}:${IMAGE_TAG}
                            docker push ${DOCKER_REGISTRY}/${PROJECT_NAME}/${SERVICE_NAME}:latest
                        '''
                    }
                }
            }
        }

        stage('Deploy to test environment') {
            steps {
                container('kubectl') {
                    dir('k8s') {
                        sh '''
                            kubectl apply -f namespace.yaml
                            kubectl apply -f configmap.yaml
                            kubectl apply -f secret.yaml
                            kubectl set image deployment/${SERVICE_NAME} \
                              ${SERVICE_NAME}=${DOCKER_REGISTRY}/${PROJECT_NAME}/${SERVICE_NAME}:${IMAGE_TAG} \
                              -n ${KUBE_NAMESPACE}
                        '''
                    }
                }
            }
        }

        stage('Automated tests') {
            steps {
                container('maven') {
                    sh 'mvn test -Papi-test'
                }
            }
        }

        // Load-test the test environment before promoting to production
        stage('Performance tests') {
            steps {
                container('maven') {
                    sh 'mvn gatling:test'
                }
                // Archives the Gatling reports (Gatling Jenkins plugin)
                gatlingArchive()
            }
        }

        stage('Deploy to production') {
            when {
                branch 'main'
            }
            steps {
                input message: 'Deploy to production?', ok: 'Confirm'
                container('kubectl') {
                    dir('k8s') {
                        sh '''
                            kubectl apply -f production/namespace.yaml
                            kubectl apply -f production/configmap.yaml
                            kubectl apply -f production/secret.yaml
                            # Blue-green deployment (simplified: always rolls out "blue" and retires "green")
                            kubectl apply -f production/${SERVICE_NAME}-blue.yaml
                            # Wait until the new version is ready
                            kubectl rollout status deployment/${SERVICE_NAME}-blue -n ${KUBE_NAMESPACE} --timeout=300s
                            # Switch traffic
                            kubectl apply -f production/ingress-blue.yaml
                            # Observation window for monitoring metrics (the script only waits; it checks nothing)
                            sleep 60
                            # If everything looks healthy, delete the old version
                            kubectl delete deployment/${SERVICE_NAME}-green -n ${KUBE_NAMESPACE} || true
                        '''
                    }
                }
            }
        }
    }

    post {
        always {
            cleanWs()
        }
        success {
            emailext(
                to: 'dev-team@example.com',
                subject: "Build succeeded: ${env.JOB_NAME} - ${env.BUILD_NUMBER}",
                body: "Build ${env.BUILD_URL} succeeded!"
            )
        }
        failure {
            emailext(
                to: 'dev-team@example.com',
                subject: "Build failed: ${env.JOB_NAME} - ${env.BUILD_NUMBER}",
                body: "Build ${env.BUILD_URL} failed, please check!"
            )
        }
    }
}
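The production stage waits 60 seconds and then deletes the old version without checking anything. One way to turn that observation window into an automatic gate is to query the Prometheus HTTP API (`GET /api/v1/query`) for the new version's 5xx ratio and fail the step when it exceeds a threshold. The Python sketch below reuses the 5% threshold from the HighErrorRate alert in section 4 and the `app` label that the `labelmap` relabel rule there copies from the pod labels; the Prometheus URL, the query and the function names are hypothetical, and a container with Python 3 would have to be added to the agent pod.

```python
import json
import math
import urllib.parse
import urllib.request

# Hypothetical values: adjust to the real Prometheus address and labels
PROMETHEUS_URL = "http://prometheus.monitoring:9090"
ERROR_RATIO_QUERY = (
    'sum(rate(http_server_requests_seconds_count{app="exam-service",status=~"5.."}[5m]))'
    ' / sum(rate(http_server_requests_seconds_count{app="exam-service"}[5m]))'
)


def query_instant(base_url: str, promql: str) -> dict:
    """Run an instant query against the Prometheus HTTP API and return the decoded JSON."""
    url = f"{base_url}/api/v1/query?{urllib.parse.urlencode({'query': promql})}"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def is_healthy(response: dict, max_error_ratio: float = 0.05) -> bool:
    """True if every returned sample is at or below the threshold.

    An empty result (no 5xx series at all) counts as healthy; NaN (0/0, i.e. no
    traffic in the window) counts as unhealthy to stay conservative.
    """
    if response.get("status") != "success":
        return False
    for sample in response["data"]["result"]:
        value = float(sample["value"][1])  # each sample value is [timestamp, "number as string"]
        if math.isnan(value) or value > max_error_ratio:
            return False
    return True


def main() -> int:
    """Exit code for a Jenkins sh step: 0 = keep the new version, 1 = stop and roll back."""
    return 0 if is_healthy(query_instant(PROMETHEUS_URL, ERROR_RATIO_QUERY)) else 1
```

Invoked as `sys.exit(main())` after the observation window, a non-zero exit fails the `sh` step before `kubectl delete` removes the old deployment, leaving it available for a rollback.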

4. Monitoring and Alerting Configuration

# prometheus.yml - Prometheus monitoring configuration
global:
  scrape_interval: 15s
  evaluation_interval: 15s

rule_files:
  - "alert_rules.yml"

alerting:
  alertmanagers:
    - static_configs:
        - targets: ['alertmanager:9093']

scrape_configs:
  # Discovers pods annotated with prometheus.io/scrape: "true" (see the Deployment above)
  - job_name: 'kubernetes-pods'
    kubernetes_sd_configs:
      - role: pod
    relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

  # Scraping through a Service reaches a different pod on each scrape, and these pods are
  # already discovered by the job above, so this job mainly adds duplicate series.
  # Port 80 is the Service port (targetPort 8080), assuming every service follows exam-service.
  - job_name: 'exam-services'
    metrics_path: '/actuator/prometheus'
    static_configs:
      - targets:
          - 'user-service.exam-system:80'
          - 'exam-service.exam-system:80'
          - 'paper-service.exam-system:80'
          - 'recommend-service.exam-system:80'

# alert_rules.yml - alerting rules (separate file, loaded via rule_files above)
groups:
  - name: exam-system-alerts
    rules:
      - alert: HighErrorRate
        # Aggregate before dividing: otherwise each 5xx series only matches its own status label and the ratio is always 1
        expr: sum by (instance) (rate(http_server_requests_seconds_count{status=~"5.."}[5m])) / sum by (instance) (rate(http_server_requests_seconds_count[5m])) > 0.05
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "High error rate: {{ $labels.instance }}"
          description: "Error rate above 5% (current value: {{ $value | humanizePercentage }})"

      - alert: HighLatency
        # Needs histogram buckets: management.metrics.distribution.percentiles-histogram.http.server.requests=true
        expr: histogram_quantile(0.95, sum by (instance, le) (rate(http_server_requests_seconds_bucket[5m]))) > 1
        for: 2m
        labels:
          severity: warning
        annotations:
          summary: "High latency: {{ $labels.instance }}"
          description: "95th percentile response time above 1s (current value: {{ $value }}s)"

      - alert: ServiceDown
        expr: up == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Service down: {{ $labels.instance }}"
          description: "Service {{ $labels.instance }} has been down for more than 1 minute"

      # Node-level rules below rely on node_exporter metrics (its scrape job is not shown above)
      - alert: HighCPUUsage
        expr: 100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[5m])) * 100) > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "High CPU usage: {{ $labels.instance }}"
          description: "CPU usage above 80% (current value: {{ $value }}%)"

      - alert: HighMemoryUsage
        expr: (1 - (node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes)) * 100 > 85
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "High memory usage: {{ $labels.instance }}"
          description: "Memory usage above 85% (current value: {{ $value }}%)"
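The HighLatency rule relies on `histogram_quantile`, which estimates a percentile from cumulative `le` buckets rather than from raw latencies. Below is a simplified Python sketch of that estimation, following the behavior described in the Prometheus documentation: find the bucket that contains rank q × total, interpolate linearly inside it (assuming observations are spread evenly within the bucket), and return the highest finite bound when the rank lands in the `+Inf` bucket. It assumes positive bucket bounds and skips edge cases the real function handles, so it is meant for intuition only.

```python
import math
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate a quantile from (upper_bound, cumulative_count) buckets ending with +Inf."""
    if not 0 <= q <= 1:
        raise ValueError("q must be within [0, 1]")
    if len(buckets) < 2 or not math.isinf(buckets[-1][0]):
        return math.nan  # need at least one finite bucket plus the +Inf bucket
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    # First bucket whose cumulative count reaches the rank
    idx = next(i for i, (_, count) in enumerate(buckets) if count >= rank)
    upper, count = buckets[idx]
    if math.isinf(upper):
        return buckets[idx - 1][0]  # rank is in the +Inf bucket: report the highest finite bound
    lower, prev_count = (0.0, 0.0) if idx == 0 else buckets[idx - 1]
    if count == prev_count:
        return upper
    # Linear interpolation inside the bucket
    return lower + (upper - lower) * (rank - prev_count) / (count - prev_count)
```

With buckets `[(0.1, 50), (0.5, 90), (1.0, 100), (inf, 100)]`, the 95th percentile falls halfway through the 0.5 to 1.0 bucket and is reported as 0.75s, even if every slow request actually took 0.99s. The estimate is only as precise as the bucket layout. When aggregating across pods before calling `histogram_quantile`, keep the `le` label (e.g. `sum by (instance, le) (...)`), since the buckets are reassembled from it.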

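5. Best Practices Summary

5.1 Architecture Design Principles

- Incremental evolution: split the monolith step by step to control risk

- Domain-driven design: split microservices along business boundaries

- Fault tolerance: circuit breaking, graceful degradation, retries, and rate limiting

- Observability: metrics, logs, and distributed tracing working together

- Automate everything: CI/CD, scaling, and failure recovery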
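In a Spring Cloud Alibaba stack, circuit breaking and degradation would normally come from a library such as Sentinel (or Resilience4j) rather than hand-written code. To show the state machine behind the fault-tolerance principle, here is a minimal, single-threaded Python sketch with hypothetical parameters (a consecutive-failure threshold and a fixed open interval): the breaker opens after N failures, short-circuits calls to a fallback while open, and lets a trial call through once the cool-down has elapsed (half-open).

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Minimal consecutive-failure circuit breaker (not thread-safe)."""

    def __init__(self, failure_threshold: int = 3, open_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.open_seconds = open_seconds
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the breaker is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.open_seconds:
            return "half-open"
        return "open"

    def call(self, action: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.state == "open":
            return fallback()  # degrade without touching the failing dependency
        try:
            result = action()
        except Exception:
            self.failures += 1
            # A failed trial in half-open, or too many failures while closed, (re)opens the breaker
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        # Any success closes the breaker and resets the failure count
        self.failures = 0
        self.opened_at = None
        return result
```

The injectable `clock` keeps the sketch testable; production libraries add sliding-window failure rates, slow-call detection and thread safety on top of this basic state machine.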

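5.2 Key Technical Implementation Points

- Service governance: the Spring Cloud Alibaba ecosystem

- Data consistency: the Saga pattern with eventual consistency

- Caching: multi-level caching plus cache warm-up

- Security: JWT + RBAC + audit logging

- Performance: connection pooling, asynchronous processing, and batching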
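The Saga pattern replaces one distributed transaction with a sequence of local steps, each paired with a compensating action that undoes it if a later step fails. This orchestration-style sketch is illustrative only: the exam-submission steps (`save_answers`, `grade`, `update_profile`) are hypothetical, and a production Saga would also persist its progress and make every step idempotent, for example with a framework such as Seata, whose Saga mode is available in the Spring Cloud Alibaba ecosystem.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]


def run_saga(steps: List[SagaStep]) -> bool:
    """Run steps in order; on failure, compensate the completed steps in reverse order."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception:
            for done in reversed(completed):
                done.compensate()  # best effort here; a real Saga retries failed compensations
            return False
        completed.append(step)
    return True
```

If `update_profile` fails after answers were saved and graded, the sketch undoes `grade` and then `save_answers`, in that order, so the system returns to a consistent state without a global lock.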

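5.3 Implementation Recommendations

- Phased rollout: core features before extensions, simple before complex

- Thorough testing: unit test coverage above 80%, with integration tests covering the core flows

- Monitoring first: set up monitoring before bringing services online

- Keep documentation in sync: update architecture, API, and deployment docs as the system changes

- Team training: microservices, containerization, and cloud-native technologies

This implementation plan weighs architectural soundness, technical feasibility, and engineering practice together, with the aim of letting the system evolve smoothly into a microservice architecture that is scalable, maintainable, and reliable.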
