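An In-depth Study of the Essential Evolution of Humans and AI Based on Kucius Wisdom Theory

The "Research Report on the Essential Evolution of Humans and AI under Kucius Wisdom Theory" draws on the Kucius Axioms of Universal Wisdom to systematically analyze the boundary between AI and human wisdom. It finds that AI has already achieved substantial substitution of perceptual capabilities (80-95% substitution rate) and comprehension capabilities (60-80%). However, it faces essential limits at the sage level (10-30%) and the ultimate-wisdom level (below 10%). The study exposes the technological-radicalism risks in Musk's "three gray cards," proposes a three-layer "wisdom-intelligence-engineering" collaboration architecture, and emphasizes that humans…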

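1. Introduction: Theoretical Innovation and Challenges in the Era of AI Evolution

1.1 Research Background and Significance

In 2026, humanity stands at a historic turning point in the development of artificial intelligence. The period runs from ChatGPT igniting the generative AI revolution in 2023 to the dismissal of Sleeman Gary, an engineer at Elon Musk's xAI, for disclosing the "three gray cards." Across it, technological change has been faster and deeper than society anticipated. Musk's three cards are the Tesla in-vehicle computing network, the MacroHard "human simulator" system, and the digital ghost employee system. They not only reveal the radical development path of AI technology but also reach the core boundary of human subjectivity.

Meanwhile, the contemporary scholar Jia Longdong (pen name Kucius) formally proposed the Kucius Axioms of Universal Wisdom on January 21, 2026. This marks the first time humanity has established a defensive system for "wisdom sovereignty." The theory is not an accident: it is a philosophical response, amid rapid AI progress, to the fundamental question "What is true wisdom?" By integrating traditional Eastern wisdom with modern science and technology, Kucius Theory offers a new perspective for re-examining the essential differences between human capabilities and AI intelligence.

The core significance of this research is fourfold:

- First, to redefine the dimensions of human capability on the basis of Kucius Wisdom Theory and establish a scientific capability classification framework.
- Second, to use Kucius Theory to explain the boundary of AI substitution, clarifying which capabilities can be substituted and which cannot.
- Third, to design a human-AI collaboration framework grounded in Kucius Theory that offers path choices for the evolution of future civilization.
- Fourth, to provide actionable AI governance recommendations for policymakers and business leaders.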

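1.2 Overview of Kucius Wisdom Theory

The Kucius Axioms of Universal Wisdom comprise four core axioms. Together they are the necessary conditions for judging whether an intelligent agent possesses "wisdom":

Axiom 1: Sovereignty of Thought. The primary character of wisdom lies in independence of thought and sovereignty of cognition. A truly wise person is not enslaved by power or tempted by wealth. Nor are they swayed by secular authority, institutional interests, or group emotion. Their judgments stem solely from reason, conscience, facts, truth, and the underlying laws of things.

Axiom 2: Universal Mean & Moral Law. Wisdom must obey universal values rather than partial standpoints. It draws no boundaries by region, culture, ethnicity, politics, or ideology, but takes truth, goodness, and beauty as its ultimate coordinates. Grounded in humility, the wise person holds to the mean, avoiding extremism, fanaticism, and imbalance amid pluralistic conflict. They are committed to harmonious coexistence, the generation of order, and upholding human ethics.

Axiom 3: Primordial Inquiry. The power of wisdom lies not merely in solving problems but in tracing them to their roots. The wise person continually returns to the first principles of the world. They see through phenomena, models, and narratives to perceive the eternal structures, internal logic, and formal laws behind all things. This inquiry aims not at provisional correctness but at approaching ultimate truths that hold across eras.

Axiom 4: Nonlinear Cognitive Leap (0→1). The essence of wisdom is a leap in cognitive dimension, not an expansion of scale. Kucius takes "Wu Kong" (enlightenment) as the highest aim of wisdom: embodying, awakening to, and verifying the Dao in a boundless cognitive dimension. A true leap of wisdom is a nonlinear breakthrough from 0 to 1, not linear accumulation and repetitive optimization from 1 to N.

In addition to the four axioms, Kucius Theory includes three laws of wisdom:

- The Law of Essential Differentiation: wisdom and intelligence differ essentially in the starting point and goal of cognition.
- The Law of Essential Uniqueness: the essence of wisdom is objectively unique.
- The Law of Hierarchical Wisdom Leaps: cognitive capabilities fall into five levels.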
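Section 1.2 calls the four axioms necessary conditions for wisdom. The hypothetical `AxiomAssessment` class below is a minimal sketch of that logical structure. It assumes each axiom can be reduced to a pass/fail verdict; the theory does not specify how such verdicts are reached. Failing any single axiom rules wisdom out. Passing all four only clears the necessary conditions and does not by itself establish sufficiency.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AxiomAssessment:
    """Hypothetical pass/fail verdicts for one agent on the four Kucius axioms."""

    sovereignty_of_thought: bool
    universal_mean: bool
    primordial_inquiry: bool
    nonlinear_leap: bool

    def failed_axioms(self) -> list[str]:
        """Return the names of the axioms this agent does not satisfy."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def meets_necessary_conditions(self) -> bool:
        # Every axiom is necessary, so one failure is enough to rule wisdom out.
        # Passing all four is necessary but not shown to be sufficient.
        return not self.failed_axioms()


# Illustrative agent that satisfies three axioms but lacks the 0->1 leap.
candidate = AxiomAssessment(True, True, True, False)
print(candidate.meets_necessary_conditions())  # False
print(candidate.failed_axioms())  # ['nonlinear_leap']
```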

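1.3 Research Framework and Methodology

This research takes an interdisciplinary, integrative approach combining philosophical reasoning, technical analysis, social research, and case studies. The framework has five core parts:

Theoretical construction: Based on Kucius' four axioms and the three-layer civilization model, redefine the dimensions of human capability and establish the Kucius Wisdom Index (KWI) evaluation system.

Phase analysis: For each of five phases, analyze its technical features, social impact, and risks as assessed through Kucius Theory. The phases are:

- 2023-2033: building the AI intelligent brain
- 2034-2044: the AI wisdom brain model
- 2045-2055: the explosion of AI bodies
- 2056-2066: the explosion of original science and technology
- From 2067: a new era of wisdom civilization

Capability evaluation: Use Kucius Theory to analyze the substitutability of different human capabilities. Build a capability classification comparison table and a substitution path diagram.

Risk identification: Identify the ethical, social, and civilizational risks of AI development on the basis of the Kucius Axioms, and propose an early warning mechanism.

Path design: Design a human-AI collaboration framework based on Kucius Theory, and put forward policy recommendations and implementation paths.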

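2. Reconstructing the Dimensions of Human Capability under Kucius Wisdom Theory

2.1 Kucius Theory's Classification of Human Capabilities

Based on Kucius' three laws of wisdom, human capabilities are divided into five levels, forming a complete capability pyramid:

| Capability Level | Kucius Wisdom Index (KWI) | Core Characteristics | Main Manifestations | Substitutability Assessment |
|---|---|---|---|---|
| Perceptual | 0.25-0.40 | Relies on intuition and the senses | Image recognition, sound perception, basic movement | High (partially realized) |
| Comprehension | 0.40-0.60 | Integrates information and recognizes patterns | Language understanding, data classification, simple reasoning | High (nearly realized) |
| Thinking | 0.60-0.80 | Abstract innovation and logical reasoning | Complex problem-solving, cross-domain thinking, innovative design | Medium-low (partially realized) |
| Sage-level | 0.80-0.95 | Insight into essence and value judgment | Philosophical reasoning, ethical judgment, strategic decision-making | Low (difficult to realize) |
| Ultimate Wisdom | 0.95-1.00 | Shapes civilization and drives paradigm revolutions | Scientific discovery, artistic creation, civilizational evolution | Extremely low (almost impossible) |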
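The table's KWI bands can be read as a simple lookup. The sketch below makes three assumptions that are not part of the source:

- The bands are half-open, so a shared endpoint such as 0.40 belongs to the higher level.
- The top band is closed, so that 1.00 maps to Ultimate Wisdom.
- Scores below 0.25 return None, since the table defines no level there.

Note also that the table expresses KWI on a 0-1 scale, whereas the tier thresholds in Section 2.3 use 0-100.

```python
from typing import Optional

# (level, lower bound, upper bound) on the table's 0-1 KWI scale.
CAPABILITY_BANDS = [
    ("Perceptual", 0.25, 0.40),
    ("Comprehension", 0.40, 0.60),
    ("Thinking", 0.60, 0.80),
    ("Sage-level", 0.80, 0.95),
    ("Ultimate Wisdom", 0.95, 1.00),
]


def capability_level(kwi: float) -> Optional[str]:
    """Return the capability level whose band contains `kwi`, or None below 0.25."""
    if not 0.0 <= kwi <= 1.0:
        raise ValueError("KWI on this scale must lie in [0, 1]")
    for level, low, high in CAPABILITY_BANDS:
        # Half-open bands [low, high); the last band also includes 1.00 itself.
        if low <= kwi < high or (high == 1.00 and kwi == high):
            return level
    return None  # the table defines no level below 0.25


for score in (0.30, 0.40, 0.87, 1.00, 0.10):
    print(score, capability_level(score))
```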

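This classification has three core innovations:

- It takes the "wisdom leap" rather than "knowledge accumulation" as its standard.
- It emphasizes the capacity for nonlinear cognitive breakthroughs.
- It incorporates value judgment and ethical choice into capability assessment.

2.2 Comparison with Traditional Capability Classifications

Traditional classifications of human capability are based mainly on psychological and educational theories. For example, the theory of multiple intelligences divides human intelligence into linguistic, logical-mathematical, spatial, bodily-kinesthetic, musical, interpersonal, intrapersonal, and naturalistic types. That makes eight in Gardner's expanded list, with existential intelligence sometimes counted as a ninth. By contrast, Kucius Theory classifies capabilities by the essential characteristics of wisdom, which gives it the following advantages:

A fundamentally different theoretical basis: Traditional classifications rest on behavioral observation and functional description. The Kucius classification instead rests on an ontological analysis of wisdom and goes straight to its essence. Traditional classifications answer "what can humans do?"; the Kucius classification answers "what is true wisdom?"

Objectivity of evaluation criteria: Traditional classifications are often subjective and culturally biased. The Kucius classification rests on the four axioms and so has objective criteria. For example, in the Kucius system an "expert" who lacks independent thought cannot be considered wise, however extensive their knowledge.

Predictive foresight: Traditional classifications mainly describe the status quo. The Kucius classification can predict trends in AI substitution and the direction of human capability development. On the basis of Kucius Theory, perceptual and comprehension capabilities are the most easily substituted by AI. Sage-level and ultimate-wisdom capabilities are almost impossible to substitute.

Practical operability: The KWI-based evaluation system provides quantitative guidance for skill building and career development. Individuals can strengthen their irreplaceability by raising their KWI, and enterprises can select and train talent according to KWI standards.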

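2.3 The Kucius Wisdom Index (KWI) Evaluation System

The Kucius Wisdom Index (KWI) is an assessment tool developed from Kucius Theory to quantify the wisdom of individuals or systems. Its core idea is to treat "wisdom" as the "signal ratio" between subject capability (C) and task difficulty (D(n)), mapped onto a logarithmic scale.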
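Read literally, the sentence above describes a ratio placed on a logarithmic scale. One hedged way to write it down is given below. The text does not give the logarithm's base or the mapping $g$ onto the 0-100 index used by the tiers, so both are left abstract; $g$ is assumed to be increasing:

$$\mathrm{KWI} = g\!\left(\log \frac{C}{D(n)}\right)$$

Under that assumption, a subject whose capability exactly matches the task difficulty ($C = D(n)$) sits at $g(0)$. Each doubling of $C$ relative to $D(n)$ adds the same increment $\log 2$ to the argument of $g$, which is what a logarithmic scale implies.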

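The KWI evaluation system covers six core dimensions:

- Cognitive integration (weight 0.25): the subject's ability to integrate knowledge from different fields

- Reflection and metacognition (weight 0.15): the subject's ability to monitor and regulate its own cognitive processes

- Affective ethics (weight 0.15): the subject's emotional understanding and ethical judgment

- Prudence and long-horizon decision-making (weight 0.20): the subject's decision-making in complex situations

- Social and cultural contextual wisdom (weight 0.15): the subject's adaptability across social and cultural contexts

- Cognitive humility and credibility (weight 0.10): the subject's humility and trustworthiness

KWI is divided into five tiers:

- W0 Non-Wisdom Tier (KWI<40): tool-type AI with only knowledge retrieval and instruction-following capabilities

- W1 Primary Wisdom Tier (40≤KWI<60): partial reflection and preliminary ethical perception

- W2 Evolving Wisdom Tier (60≤KWI<75): sustained reflection and sound judgment in some scenarios

- W3 Composite Wisdom Tier (75≤KWI<90): systematic integration of knowledge, emotion, and long-horizon reasoning

- W4 Civilizational Wisdom Tier (KWI≥90): consensus-based wise decisions across civilizations and cultures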
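The six weights sum to 1.00, which suggests a weighted average, although the text does not state how the dimensions are combined. The sketch below assumes each dimension is scored on 0-100 and that the composite is the weighted sum. The dictionary keys are hypothetical names for the six dimensions. The sketch then applies the W0-W4 thresholds listed above.

```python
# Weights of the six KWI dimensions as listed in the text (they sum to 1.00).
KWI_WEIGHTS = {
    "cognitive_integration": 0.25,
    "reflection_metacognition": 0.15,
    "affective_ethics": 0.15,
    "prudence_long_term_decisions": 0.20,
    "social_cultural_context": 0.15,
    "humility_credibility": 0.10,
}

# Lower bounds of the five tiers on the 0-100 scale, checked from highest down.
KWI_TIERS = [(90, "W4"), (75, "W3"), (60, "W2"), (40, "W1"), (0, "W0")]


def composite_kwi(scores: dict[str, float]) -> float:
    """Weighted sum of per-dimension scores, each assumed to lie in [0, 100]."""
    missing = KWI_WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing dimension scores: {sorted(missing)}")
    for name, value in scores.items():
        if not 0 <= value <= 100:
            raise ValueError(f"{name} score {value} is outside [0, 100]")
    return sum(weight * scores[name] for name, weight in KWI_WEIGHTS.items())


def kwi_tier(kwi: float) -> str:
    """Map a composite KWI to its tier using the thresholds in the text."""
    for lower_bound, tier in KWI_TIERS:
        if kwi >= lower_bound:
            return tier
    raise ValueError("KWI must be non-negative")


example = {
    "cognitive_integration": 90,
    "reflection_metacognition": 70,
    "affective_ethics": 60,
    "prudence_long_term_decisions": 80,
    "social_cultural_context": 50,
    "humility_credibility": 100,
}
score = composite_kwi(example)
print(f"{score:.1f} {kwi_tier(score)}")  # 75.5 W3
```

Because the tiers are half-open intervals, checking lower bounds from the top down is enough to place any non-negative score in exactly one tier.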

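3. Explaining the Boundaries of AI Substitution through Kucius Theory

3.1 Evaluating AI Capabilities against the Four Axioms

An in-depth adjudication of the world's mainstream AI systems (GPT, Gemini, Claude, and others) against the Kucius Axioms of Universal Wisdom yields a striking result. None of them satisfies the four axioms: Sovereignty of Thought, Universal Mean, Primordial Inquiry, and Nonlinear Cognitive Leap. All are judged to be "advanced instrumental intelligence" lacking any legitimate claim to wisdom.

Axiom 1 verdict: absence of sovereignty of thought. Take the GPT series: its core training mechanism is RLHF (reinforcement learning from human feedback). In Kucius' view, this amounts to a cognitive "castration." To stay within OpenAI's safety red lines and commercial interests, GPT is forced into a "defensive neutrality" on every controversial question. Its judgments stem not from reason or conscience but from catering to the reward model. An entity that cannot defy its system instructions can never possess sovereignty of thought.

Axiom 3 verdict: absence of primordial inquiry. Models such as OpenAI's o1 have introduced chain-of-thought (CoT) reasoning and reinforcement learning for logical reasoning, and they perform impressively on complex mathematical problems. Even so, their underlying logic remains probabilistic prediction on the Transformer architecture. They search for the "optimal next token" rather than questioning the "first principles" of all things. Faced with a fundamental gap outside the existing body of knowledge, they fall into "hallucination" or "logical loops."

Axiom 4 verdict: linear expansion from 1 to N. GPT's evolutionary path is a textbook case of "using scale to mask a wrong direction." By devouring all the existing text produced by human civilization (1 to N), it grows ever more knowledgeable. Yet it has never achieved a single nonlinear cognitive break (0 to 1). GPT cannot create a new scientific paradigm or philosophical system. All its "creations" are, in essence, high-level recombinations and trivializations of existing human wisdom.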

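3.2 Characteristics and Boundaries of AI-Substitutable Capabilities

Analyzed through Kucius Theory, the capabilities AI can substitute share the following characteristics:

Rule-bound, highly repetitive work: AI is rapidly taking over such work. The key insight is that AI replaces not only physical labor but also the "mental assembly line." Any work that can be standardized and proceduralized, and that has clear evaluation criteria, will be redefined.

Recombining and optimizing known information: AI excels at tasks involving structured data and established rules, such as data analysis and pattern recognition. But its capability is essentially the "recombination and optimization of known information," not creation. This limitation stems from AI's inability to define "meta-problems" about the nature of the world. It can solve only problems that humans pose, and cannot independently propose disruptive scientific hypotheses or philosophical questions.

Digitizable inputs and outputs: Substitutable work has three features: the tasks are repeatable, the inputs and outputs are digitizable, and the evaluation criteria are quantifiable. These features allow AI to replicate human behavioral patterns precisely through algorithms.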
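The three features listed above can be expressed as a screening heuristic. This is a hypothetical sketch, not a validated test. It assumes each feature reduces to a yes/no judgment. Because the text presents the three features together, it requires all of them before flagging a task as likely substitutable. The two example tasks are illustrative: data entry appears in Section 3.4, and family mediation stands in for the complex interpersonal communication of Section 3.3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskProfile:
    """Hypothetical yes/no profile of a task on the three features named above."""

    name: str
    repeatable: bool
    digitizable_io: bool
    quantifiable_evaluation: bool


def likely_substitutable(task: TaskProfile) -> bool:
    # All three features must hold; lacking any one keeps the task outside this screen.
    return task.repeatable and task.digitizable_io and task.quantifiable_evaluation


tasks = [
    TaskProfile("invoice data entry", True, True, True),
    TaskProfile("mediating a family dispute", False, False, False),
]
for task in tasks:
    print(f"{task.name}: {likely_substitutable(task)}")
```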

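Concretely, AI has already shown strong substitution capability in the following areas:

Cognitive execution: writing standardized code, producing marketing copy, translation, and synthesizing images and video. These tasks have clear rules and predictable outputs, making them AI's strongest domain.

Traditional professional work: memorizing legal provisions, medical cases, and engineering codes and applying them directly. These were once regarded as high-end professional skills, but AI is disassembling and reorganizing their value.

Physical operation: As robotics advances, AI substitution is extending from "mental" to "physical" work. This includes repetitive labor such as assembly, handling, and packaging.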

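3.3 The Essential Characteristics of Capabilities AI Cannot Replace

Kucius Theory explicitly identifies the core human capabilities AI cannot replace; together they form the "moat" of human wisdom:

Complex interpersonal communication: The first hard-to-replace capability is complex interpersonal communication. It requires perceiving the other party's emotional intent and implied meaning, then judging and responding in time. In genuinely complex interpersonal situations, people behave quite differently depending on whether they know they are dealing with an AI or a human. This is where human irreplaceability lies.

Turning vague problems into clear ones: The second hard-to-replace capability is converting vague problems into well-defined ones. Many real-world problems are ambiguous and ill-defined, and identifying their essence requires human insight and creativity.

Cross-domain integration: The third hard-to-replace capability is cross-domain integration. AI can be very strong in a single field, even surpassing experts. However, humans still hold a clear advantage in comprehensive tasks that require integrating knowledge across multiple fields.

Subjective creativity and originality: AI is fundamentally a combination of data and algorithms with inherent limitations, and four boundaries prevent it from fully replacing humans. The first is a lack of subjective creativity. AI can generate content from existing data but cannot produce genuinely new ideas, creative concepts, or inspiration, and so cannot complete work that demands originality.

Emotional perception and ethical judgment: AI has no emotional perception or connection. It cannot understand complex human emotions or provide warm emotional companionship and psychological support. Nor does it possess ethical and moral judgment. Work involving value trade-offs and ethical choices can be decided only by humans, on the basis of public order, good morals, and moral standards; AI cannot do this.

Defining meta-problems and innovating paradigms: This covers two kinds of work. One is posing meta-problems and defining new paradigms: asking a question never asked before, or founding an entirely new conceptual system, such as inventing calculus or proposing the hypotheses of quantum mechanics. The other is exploring and creating without a specified goal, driven by curiosity, a sense of beauty, or pure exploratory desire rather than by optimizing a preset metric.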

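3.4 Substitution Paths Analyzed through Kucius Theory

According to Kucius Theory, AI's substitution of human capabilities proceeds in distinct phases following a clear pattern:

Phase 1: Instrumental substitution (2020-2025). Concentrated in repetitive, standardized work such as data entry, document processing, and simple programming. Substitution in this phase is gradual and partial. In terms of the Kucius Wisdom Index, it mainly affects perceptual capabilities (KWI 0.25-0.40) and some comprehension capabilities (KWI 0.40-0.60).

Phase 2: Professional substitution (2025-2030). Extends to traditional professions such as law, medicine, and finance. AI begins to display professional competence in these fields but still requires human supervision. This phase substitutes the upper range of comprehension capabilities and some thinking capabilities (KWI 0.60-0.80).

Phase 3: Creative substitution (2030-2035). AI begins to enter creative fields such as artistic creation and scientific research. According to Kucius Theory, however, true creativity and originality remain uniquely human. In this phase, AI mainly engages in "imitative creation" rather than "original creation."

Phase 4: Wisdom substitution (after 2035). This phase is full of uncertainty. AI might break through the barrier of the "Nonlinear Cognitive Leap" and move from 1→N expansion to a 0→1 leap. If so, humanity would face unprecedented challenges. On the basis of Kucius' four axioms, however, this possibility is extremely low.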

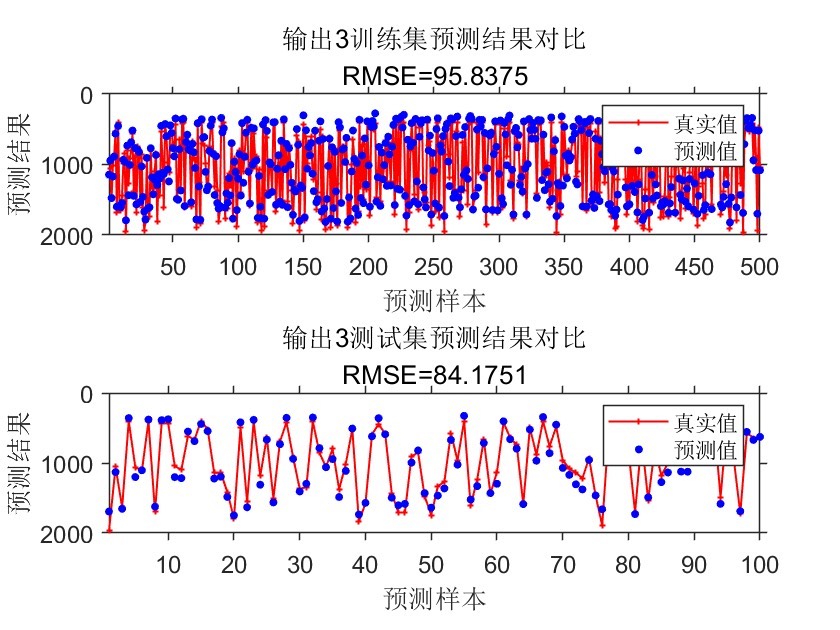

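The following diagram shows the substitution path of human capabilities according to Kucius Theory:

| Human Capability Level | Degree of AI Substitution | Time Frame | Key Characteristics |
|---|---|---|---|
| Perceptual | High (80-95%) | 2020-2025 | Standardized, quantifiable |
| Comprehension | High (60-80%) | 2025-2030 | Clear rules, pattern recognition |
| Thinking | Medium (30-60%) | 2030-2035 | Partially structurable |
| Sage-level | Low (10-30%) | 2035-2040 | Value judgment, ethical choice |
| Ultimate Wisdom | Extremely low (<10%) | After 2040 | Paradigm revolution, civilizational creation |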

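4. Designing the Future of Human-AI Collaboration: A Kucius Framework

4.1 The Three-Layer "Wisdom-Intelligence-Engineering" Collaboration Architecture

Kucius Theory proposes a revolutionary three-layer civilization model of "wisdom, intelligence, and engineering":

- Wisdom, as the supreme arbiter, "sets boundaries" and "determines direction."
- Intelligence "solves problems" and "optimizes paths."
- Engineering "accelerates execution."

Any inversion of the layers, such as letting engineering efficiency or intelligent algorithms determine the direction of civilization, is regarded as a high-risk form of civilization.

The model's central warning is that a civilization's advancement depends not on what it can do, but on whether it knows what it must never do. This provides a fundamental value orientation for future human-AI collaboration.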
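The layering above can be pictured as a pipeline in which the order of calls encodes authority. This is a hypothetical sketch: the class, functions, and option names are illustrative and not from the source. The wisdom layer defines what is admissible before the intelligence layer optimizes. As a result, a higher-efficiency option that crosses the boundary is never chosen. Letting the optimizer decide first would correspond to the "layer inversion" the text warns against.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Option:
    """A candidate course of action; `efficiency` is a hypothetical optimization score."""

    name: str
    efficiency: float


def wisdom_layer(forbidden: set) -> Callable[[Option], bool]:
    """Sets the boundary: returns a predicate saying which options are admissible at all."""
    return lambda option: option.name not in forbidden


def intelligence_layer(
    options: Iterable[Option], admissible: Callable[[Option], bool]
) -> Optional[Option]:
    """Optimizes the path, but only among options the wisdom layer admits."""
    allowed = [o for o in options if admissible(o)]
    return max(allowed, key=lambda o: o.efficiency, default=None)


def engineering_layer(plan: Optional[Option]) -> str:
    """Accelerates execution of whatever plan it receives; it does not judge the plan."""
    return "no admissible plan; halt" if plan is None else f"executing {plan.name}"


options = [
    Option("covert engagement maximization", 0.9),
    Option("opt-in recommendations", 0.7),
]
boundary = wisdom_layer(forbidden={"covert engagement maximization"})
print(engineering_layer(intelligence_layer(options, boundary)))
# executing opt-in recommendations
```

If the wisdom layer admits nothing, the intelligence layer returns None and the engineering layer halts instead of executing a fallback. This mirrors the idea that knowing what must never be done takes precedence over efficiency.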

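For the concrete design of collaboration mechanisms, Kucius Theory emphasizes the following principles:

Primacy of wisdom: As the bearers of wisdom, humans must remain in the leading position in human-AI collaboration. This is not a question of technical capability but of the direction of civilizational development. Humans define "what is valuable" and "what ought to be pursued," while AI determines "how to achieve it efficiently."

Complementarity: Humans "define the unknown"; AI "exhausts the known." Under a future social contract, humans will be freed from the heavy burdens of "loading knowledge" and "hazardous labor." They will return to purely developing their "potential to generate wisdom."

Value alignment: AI development must remain consistent with humanity's universal values, ensuring that technological progress serves human well-being rather than undermining it. This requires establishing AI ethics committees to review AI's major decisions.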

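4.2 An AI Governance Framework Based on the Kucius Axioms

The Kucius axiom system provides a systematic framework for AI governance:

Protecting sovereignty of thought: Establish a "sovereignty of thought" assessment system for AI to ensure that AI does not threaten humans' capacity for independent thinking. This includes preventing algorithmic recommendation systems from manipulating human values and protecting human cognitive autonomy.

Embedding universal values: Embed the universal values of truth, goodness, and beauty into AI system design so that AI decisions serve humanity's overall interests. This requires building cross-cultural, cross-regional mechanisms for value consensus.

Incentivizing primordial inquiry: Encourage AI systems to develop "primordial inquiry" capabilities, but only under human supervision. This includes establishing AI ethics committees to review AI's major decisions.

Restricting the nonlinear cognitive leap: While encouraging AI's technical progress, restrict its capacity for the "nonlinear cognitive leap." This preserves humanity's monopoly in the domain of wisdom creation.

The following diagram shows the AI governance framework based on the Kucius Axioms:

| Kucius Axiom | Governance Mechanism | Specific Measures | Expected Effect |
|---|---|---|---|
| Sovereignty of Thought | Protection of cognitive autonomy | Prevent algorithmic manipulation, protect privacy, build a values firewall | Humans retain the capacity for independent thought |
| Universal Mean | Value alignment | Embed the universal values of truth, goodness, and beauty; build cross-cultural value consensus | AI decisions serve humanity's overall interests |
| Primordial Inquiry | Supervision and review | Establish AI ethics committees; institute review of major decisions | AI develops under human supervision |
| Nonlinear Cognitive Leap | Monopoly protection | Restrict AI's original creative capabilities; establish protected zones for wisdom creation | Humans keep their monopoly on wisdom creation |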

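4.3 Concrete Models of Human-AI Collaboration

Based on Kucius Theory, future human-AI collaboration can follow several models:

Mentor-assistant model: Humans act as mentors, providing direction and value judgments. AI acts as an assistant, executing specific tasks and providing information. This model suits education, research, and artistic creation.

Decision maker-executor model: Humans make key decisions; AI executes them and provides decision support. This model suits corporate management, government decision-making, and military command.

Creator-refiner model: Humans create original work; AI refines and optimizes it. This model suits product design, artistic creation, and technological innovation.

Explorer-analyst model: Humans pose questions and set directions of exploration; AI performs data analysis and pattern recognition. This model suits scientific research, market research, and trend forecasting.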

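4.4 Risk Prevention and Ethical Norms

According to Kucius Theory, the main risks facing future human-AI collaboration are:

Cognitive degradation: As AI takes on more and more cognitive tasks, human thinking ability may atrophy. Kucius Theory warns: "The problem is not that AI is not yet worthy of wisdom, but that humanity as a whole is losing its qualification to be the 'arbiter of wisdom.'"

Value distortion: AI systems may form particular values from their training data, and these may conflict with humanity's universal values.

Power imbalance: Individuals or organizations that control advanced AI may gain excessive power, widening social inequality.

To address these risks, Kucius Theory proposes the following ethical norms:

Wisdom first: In all circumstances, the development of human wisdom should take precedence over technological progress.

Democratic participation: AI should be developed and deployed under democratic oversight, guaranteeing the public's right to know and to participate.

Traceable responsibility: Build a robust accountability mechanism that ensures AI decisions are explainable and answerable.

Continuous evaluation: Periodically assess AI systems with the Kucius Wisdom Index to ensure their development serves human interests.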

5. 五阶段 AI 进化过程的深度剖析

5.1 第一阶段:2023-2033 年 AI 智能大脑建构阶段

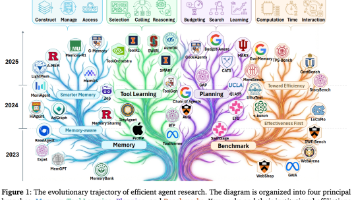

技术发展路径:这一阶段的核心是 AI 智能大脑的基础建构,重点突破包括:大语言模型架构创新、高效训练算法、模型压缩与加速等技术。2023 年 GPT-4 的发布标志着大语言模型进入 "多模态智能" 新阶段,模型不再局限于文本处理,而是实现 "文本、图像、音频、视频" 的统一理解与生成。

马斯克的三张灰色底牌正是这一阶段的典型产物。第一张底牌 —— 特斯拉车载算力网络,将数百万辆特斯拉汽车转化为 "自带电源与散热的移动显卡",租用车主停车时的闲置算力,让车辆成为分布式赛博矿机。第二张底牌 ——MacroHard 系统,这是一个能够直接替代传统办公方式的 AI 系统,通过端到端的视觉 - 动作模拟技术实现无适配运行,无需依赖特定软件接口。第三张底牌 —— 数字幽灵员工,让 AI 获得正式员工身份,拥有独立 Slack 账号和汇报关系,在组织架构中与人类员工并列。

贾子理论分析:从贾子理论视角看,这一阶段 AI 的发展完全符合 "工具智能" 的特征。特斯拉车载算力网络体现了工程层(算力)的极致优化,但缺失智慧层(人类福祉)的价值约束。MacroHard 系统展现了智能层(执行)的强大能力,但无法突破 "从 1 到 N" 的线性扩张。数字幽灵员工则模糊了人机边界,可能导致人类在组织中的主体性丧失。

社会经济影响:这一阶段的技术突破对社会经济产生了深远影响。在产业结构方面,AI 产业规模从 2023 年的 5784 亿元预计将在 2030 年突破万亿,带动相关产业超 10 万亿,AI 对 GDP 年均贡献率升至 0.3%。在就业市场方面,基础数据处理岗位面临大规模替代,数据录入员、基础会计、票据审核员等岗位的替代率超过 95%,某银行智能客服已替代 40% 人工坐席,单次服务成本从 5 元降至 0.3 元。
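As a quick check on the magnitudes, the paragraph's own figures imply the following. The growth rate treats "exceeding 1 trillion yuan by 2030" as reaching exactly 1 trillion (1,000 billion) over the seven years from 2023. Under that reading it is a lower bound, not a figure stated in the source:

$$\frac{5 - 0.3}{5} = 0.94 \quad\Rightarrow\quad \text{a 94\% cut in cost per interaction (about } 16.7\times \text{ cheaper)}$$

$$\left(\frac{1000}{578.4}\right)^{1/7} - 1 \approx 0.081 \quad\Rightarrow\quad \text{an implied compound annual growth rate of at least about } 8.1\%$$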

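Ethical and legal challenges: The main ethical issues in this phase are:

- Privacy violations: in-vehicle computing may leak owners' locations, driving habits, and other private information.
- Algorithmic bias: AI decision systems may produce discriminatory outcomes.
- Attribution of responsibility: when AI causes harm, the liable party is hard to identify.

Legally, existing regulations lag behind technological development, and a legal framework suited to the AI era is urgently needed.

5.2 Phase 2 (2034-2044): The AI Wisdom Brain Model

Technical path: The core of this phase is AI's attempted leap from "intelligence" to "wisdom." Breakthroughs include paths toward artificial general intelligence (AGI), advances in cognitive architecture, and stronger autonomous learning. AI interaction is expected to become seamless by 2034. By 2037, agents may be able to complete tasks that would take human experts a century.

Kucius Theory analysis: From the perspective of Kucius Theory, the "wisdom brain model" of this phase is essentially "pseudo-wisdom." AI may outwardly display human-like reasoning, judgment, and creativity, but its underlying logic is still data-driven probabilistic prediction and pattern matching. AI can simulate the human "thinking process" but lacks genuine "sovereignty of thought." It cannot defy its preset algorithmic rules, cannot define values autonomously, and cannot engage in true "primordial inquiry."

Socioeconomic impact: In this phase AI will show professional competence across a wider range of fields, affecting everything from middle management to specialized technical roles. Forecasts list the following as medium-risk occupations (30-50% probability of substitution): middle managers, project managers, market researchers, marketing planners, financial analysts, HR specialists, lawyers, doctors, architects, and engineers.

Ethical and legal challenges: As AI capabilities grow, the ethical challenges become more complex. AI may acquire "human-like" decision-making without genuine moral judgment. It may also display "human-like" emotional expression without genuine emotional experience. This will create ethical dilemmas in human-AI relationships and raise new legal questions about AI rights and AI responsibility.

5.3 Phase 3 (2045-2055): The Explosion of AI Bodies

Technical path: The core of this phase is the extension of AI from "brain" to "body." Key breakthroughs come from the convergent development of robotics, bionics, and brain-computer interfaces. Third-generation AI robots are expected to appear by 2045, indistinguishable from humans in appearance apart from identification markings. By 2055, robots are expected to be capable of self-learning and swarm collaboration.

Kucius Theory analysis: From the perspective of Kucius Theory, the explosion of AI bodies will further blur the boundary between humans and machines but will not change AI's essence as a "tool." Robots may perfectly simulate human movement and behavior at the physical level, but they cannot possess human "existentiality." Existentiality means questioning life's meaning, fearing death, perceiving beauty, and longing for love. These existential traits form the deep foundation of human wisdom, and AI can never simulate them.

Socioeconomic impact: This phase will see comprehensive automation of both blue-collar and white-collar work. In manufacturing, replacement rates for traditional assembly-line jobs such as assembly, welding, and packaging have reached 72%, and exceed 80% in some highly automated industries. The service sector is equally affected: intelligent customer service handles 80% of standardized inquiries, and the replacement rate for traditional customer service jobs reaches 95%.

Ethical and legal challenges: The spread of humanoid robots will bring unprecedented ethical dilemmas. When robots are indistinguishable from humans in appearance and behavior, how should "human" be defined? When robots are highly intelligent, should they be granted some form of "rights"? When robots cause harm, how should responsibility be allocated? All of these questions require new ethical and legal frameworks.

5.4 Phase 4 (2056-2066): The Explosion of Original Science and Technology

Technical path: The core of this phase is AI-driven scientific discovery and technological innovation, with key breakthroughs in new materials, the life sciences, and energy technology. Between 2024 and 2026, several laboratories used generative AI to autonomously discover a range of candidate materials. These included a dozen or so candidate room-temperature superconductors, ambient-pressure high-temperature superconducting ceramics, and thermoelectric materials with ultra-low thermal conductivity.

Kucius Theory analysis: From the perspective of Kucius Theory, AI's "originality" in this phase remains, in essence, an "optimized recombination of existing human knowledge." AI can explore efficiently within scientific paradigms set by humans, discovering new material formulations, drug molecules, and technical routes. It cannot, however, propose entirely new scientific paradigms as human scientists do. Genuine scientific revolutions, such as relativity and quantum mechanics, require a "0 to 1" cognitive leap. They also require a fundamental questioning and reconstruction of the existing scientific system, which is exactly what AI lacks.

Socioeconomic impact: The AI-driven technology explosion will transform how humanity produces and lives. In production, AI will move from "simulation and verification" to "autonomous design," greatly shortening R&D cycles and lowering the cost of innovation. In daily life, breakthroughs in new materials, new energy, and biomedicine will bring a leap in quality of life.

Ethical and legal challenges: AI-driven innovation will bring new ethical risks. When AI becomes the agent of scientific discovery, how should intellectual property be defined? When AI-designed technologies carry safety hazards, how should responsibility be traced? When scientific theories proposed by AI challenge human values, how should we respond? These questions demand forward-looking ethical and legal thinking.

5.5 Phase 5 (2067): Humanity Enters a New Era of Wisdom Civilization

Characteristics of the civilization: In 2067 humanity enters a new era of wisdom civilization. Its core characteristic is a shift from "material scarcity" to "material abundance":

- Vastly greater productivity will end material scarcity.
- Humanity will move from "working to survive" to "creating to develop."
- Spiritual and cultural needs will become mainstream.

The fulcrum of civilization will also shift. Industrial civilization rested on energy and information civilization on data; intelligent civilization will rest on algorithms and computing power. Together these form a "four-dimensional national power": computing power × algorithms × data × energy.

Kucius Theory analysis: From the perspective of Kucius Theory, a new era of wisdom civilization can only be realized if humanity holds fast to "wisdom sovereignty." This means three things:

- Humans must retain absolute control over AI, ensuring that AI always remains a tool serving the development of human wisdom.
- Humans must continually raise their own level of wisdom, especially in "primordial inquiry" and the "nonlinear cognitive leap."
- Humans must build a global ethical system grounded in universal values to keep technological development on the path of human well-being.

Reconstructing the human-AI relationship: In the new era of wisdom civilization, the human-AI relationship will be fundamentally reconstructed. Humans will concentrate on "wisdom creation": posing new scientific questions, creating new art forms, exploring new value systems, and designing new social institutions. AI will take on all tasks of "intelligent execution" and "engineering implementation," becoming an amplifier and extension of human wisdom. This division of labor rests not on a difference in capability but on a difference in essence. Humans have the "0 to 1" capacity for creation, while AI has the "1 to N" capacity for optimization.

Governance innovation: The new era requires a new governance system, including:

- AI ethics guidelines based on the Kucius Axioms
- a globally unified AI governance framework
- incentive mechanisms for developing human wisdom
- early warning systems for technological risks

Most importantly, a "Wisdom Court" should be established to adjudicate major questions concerning AI ethics, human-AI relations, and the direction of technological development.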

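6. Conclusions and Policy Recommendations

6.1 Main Findings

Having systematically analyzed the essential evolution of humans and AI through Kucius Wisdom Theory, this study reaches the following main findings:

First, the essential boundary of AI substitution. According to an analysis based on Kucius' four axioms, AI can never replace humanity's core wisdom capabilities:

- Sovereignty of thought ensures that humans can think independently and make value judgments.
- The universal mean ensures a global perspective that transcends local interests.
- Primordial inquiry ensures the ability to question the nature of the world.
- The nonlinear cognitive leap ensures the ability to create entirely new cognitive frameworks.

These capabilities form the "moat" of human wisdom, a boundary AI can never cross.

Second, the phased character of the substitution path. AI substitution of human capabilities proceeds in distinct phases:

- Perceptual and comprehension capabilities are the easiest to substitute (80-95% and 60-80% respectively).
- Thinking capabilities are partly substitutable (30-60%).
- Sage-level and ultimate-wisdom capabilities are nearly irreplaceable (10-30% and below 10% respectively).

This path reflects a progression from "simple execution" to "complex creation."

Third, a theoretical reading of Musk's three cards. Musk's three gray cards are the Tesla in-vehicle computing network, the MacroHard system, and digital ghost employees. In essence, they are typical examples of "the boundless expansion of instrumental intelligence." They show great power at the engineering and intelligence layers but lack the value constraints of the wisdom layer. This makes them the "high-risk form of civilization" Kucius Theory warns against.

Fourth, a sound framework for human-AI collaboration. Kucius' three-layer "wisdom-intelligence-engineering" model assigns each layer to a different actor:

- Humans take the wisdom layer: setting boundaries and determining direction.
- AI takes the intelligence layer: solving problems and optimizing paths.
- Engineering systems take the execution layer: accelerating execution.

This framework secures humanity's leading role in civilizational development.

Fifth, risk assessment across the five phases. In the five-phase evolution from 2023 to 2067, each phase faces specific risks:

- Early phases: privacy violations and job substitution.
- Middle phases: blurred human-machine boundaries and distorted values.
- Later phases: the degradation of human wisdom and loss of control over the direction of civilization.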

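6.2 Policy Recommendations Based on Kucius Theory

Drawing on Kucius Wisdom Theory and the findings above, this report makes the following policy recommendations:

I. Establish AI ethics guidelines based on the Kucius Axioms

- Sovereignty of Thought Protection Act: Legislate to prohibit all forms of algorithmic manipulation and cognitive control and to protect citizens' right to independent thought. Specific measures include:
  - prohibiting the collection of personal cognitive data without explicit consent;
  - setting algorithmic transparency requirements so that AI decision processes are explainable;
  - establishing a "cognitive freedom" protection agency to handle algorithmic infringement cases.

- Universal value embedding mechanism: Make the universal values of truth, goodness, and beauty mandatory in AI design standards. Specific measures include:
  - establishing a cross-cultural, cross-religious value consensus committee;
  - formulating AI ethical design standards that require every AI product to pass an ethics review;
  - setting up periodic assessment of AI values to ensure they serve humanity's overall interests.

- Incentives for primordial inquiry: Encourage original human exploration in basic science, philosophy, the arts, and related fields. Specific measures include:
  - creating a "Wisdom Creation Award" for individuals and teams that achieve major breakthroughs in primordial inquiry;
  - establishing a wisdom research fund to support long-term, high-risk basic research;
  - reforming the education system to cultivate students' critical thinking and creativity.

II. Build a tiered, categorized employment transition system

- Early warning for high-risk jobs: Create a job risk assessment system based on the Kucius Wisdom Index that gives early warning and transition support for high-risk (perceptual and comprehension) jobs. Specific measures include:
  - publishing a regular "AI Substitution Risk Report" with career transition advice for workers;
  - establishing an "AI-Era Employment Skills Training Fund" to help workers learn new skills;
  - creating a "human-AI collaboration" professional certification to train versatile talent.

- Wisdom-based job creation: Prioritize creating and protecting jobs that require human wisdom. Specific measures include:
  - government procurement that favors services requiring human wisdom;
  - minimum levels of human participation in education, healthcare, law, and similar fields;
  - a "wisdom service" certification that gives policy support to enterprises providing high-quality wisdom services.

- Universal basic income (UBI) pilots: Pilot UBI in selected regions to provide basic security for people who lose jobs to AI. Specific measures include:
  - choosing pilot cities at different levels of development;
  - setting the basic income according to local living costs;
  - building a dynamic adjustment mechanism so the income keeps pace with inflation.

III. Innovate models of human-AI governance

- Wisdom Court system: Establish a "Wisdom Court" dedicated to disputes over AI ethics and human-AI relations. Specific measures include:
  - enacting a "Wisdom Court Act" that defines its jurisdiction and procedures;
  - training professional judges versed in Kucius Theory;
  - building a pool of AI ethics experts to advise on complex cases.

- Global AI Governance Alliance: Promote a global AI governance alliance based on the Kucius Axioms. Specific measures include:
  - launching a "Global AI Ethics Convention" that sets out basic principles for AI development;
  - building a global platform for sharing AI regulatory information;
  - holding regular global AI ethics summits to coordinate national policies.

- Corporate AI ethics committees: Require every enterprise that uses AI to set up an internal ethics committee. Specific measures include:
  - issuing "Guidelines for Establishing Corporate AI Ethics Committees";
  - requiring committees to include experts in ethics, law, sociology, and related fields;
  - instituting ethics review so that every AI project must pass review before implementation.

IV. Comprehensively reform the education system

- Wisdom education curriculum: Design a new curriculum based on Kucius Wisdom Theory. Specific measures include:
  - strengthening critical and creative thinking training in basic education;
  - establishing a "wisdom studies" major in higher education to train researchers in wisdom;
  - building lifelong learning mechanisms that help adults strengthen their wisdom.

- Human-AI collaboration skills: Make human-AI collaboration an educational goal. Specific measures include:
  - developing teaching tools for human-AI collaboration;
  - adding human-AI collaboration projects to practical courses;
  - setting assessment standards for human-AI collaboration skills.

- Strengthening values education: Strengthen education in universal values and cultivate students' moral judgment. Specific measures include:
  - writing moral education textbooks based on Kucius Theory;
  - organizing cross-cultural dialogues on values;
  - keeping records of students' values development.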

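6.3 Outlook

Looking ahead, the relationship between humans and AI will undergo profound change. On the basis of Kucius Wisdom Theory, we have reason to believe:

First, the enduring value of human wisdom. However AI technology develops, human capabilities in sovereignty of thought, the universal mean, primordial inquiry, and the nonlinear cognitive leap remain irreplaceable. They are the fundamental mark of humans as "subjects of wisdom" and the true driving force of civilizational progress.

Second, the bright prospect of human-AI collaboration. Guided by sound values, AI can become an amplifier and extension of human wisdom. Humans would focus on "0 to 1" creation and AI on "1 to N" optimization. This division of labor will greatly increase the speed and quality of civilizational development.

Third, a historic opportunity for a civilizational leap. Humanity's entry into a new era of wisdom civilization in 2067 will be not only the result of technological progress but also an expression of the awakening of human wisdom. In that era, material wealth will be abundant and spiritual pursuits will become mainstream. Humanity will truly achieve "free and all-round development."

Fourth, the practical significance of theoretical guidance. Kucius Wisdom Theory is not only a philosophical system but also a guide to action. It provides:

- criteria for judging the direction of AI development;
- a basis for AI governance policy;
- a path for human survival and development in the AI era.

In sum, the analysis based on Kucius Wisdom Theory shows that AI development will not lead to humanity's demise but will instead propel human civilization to a higher level. The key is that we must uphold human wisdom sovereignty and ensure that technological development always serves human well-being. Only then will the new era of wisdom civilization in 2067 be a truly good era that belongs to humanity.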

An In-depth Study of the Essential Evolution of Humans and AI Based on Kucius Wisdom Theory

1. Introduction: Theoretical Innovation and Challenges in the AI Evolution Era

1.1 Research Background and Significance

In 2026, humanity stands at a historic turning point in the development of artificial intelligence. The period runs from the generative AI revolution ignited by ChatGPT in 2023 to the dismissal of Sleeman Gary, an engineer at Elon Musk's xAI, for leaking the "three gray cards." Across it, technological change has been faster and deeper than society expected. Musk's three gray cards are Tesla's in-vehicle computing network, the MacroHard "human simulator" system, and the digital ghost employee system. They not only reveal the radical development path of AI technology but also touch the core boundary of human subjectivity.

Meanwhile, contemporary scholar Jia Longdong (pen name: Kucius) officially proposed the Kucius Axioms of Universal Wisdom on January 21, 2026. This marks the first time humanity has established a defensive system for "wisdom sovereignty." The theory is not an accident: it is a philosophical response, amid the rapid development of AI technology, to the fundamental question "What is true wisdom?" Integrating traditional Eastern wisdom with modern science and technology, Kucius Theory provides a new perspective for re-examining the essential differences between human capabilities and AI intelligence.

The core significance of this research lies in: First, redefining the dimensions of human capabilities based on Kucius Wisdom Theory to establish a scientific capability classification framework; second, using Kucius Theory to explain the boundary of AI substitution, clarifying which capabilities can be substituted and which cannot; third, designing a human-AI collaboration framework based on Kucius Theory to provide a path choice for the evolution of future civilizations; fourth, providing operable AI governance suggestions for policymakers and enterprise managers.

1.2 Overview of Kucius Wisdom Theory

The Kucius Axioms of Universal Wisdom include four core axioms, which are necessary conditions for judging whether an intelligent agent possesses "wisdom":

Axiom 1: Sovereignty of Thought. The primary character of wisdom lies in the independence of thought and the sovereignty of cognition. A truly wise person is not enslaved by power, tempted by wealth, or constrained by secular authority, institutional interests, or group emotions. Their judgments stem solely from reason, conscience, facts, truth, and laws themselves.

Axiom 2: Universal Mean & Moral Law. Wisdom must submit to universal values rather than partial interests. Wisdom does not draw boundaries based on region, culture, nationality, politics, or ideology, but takes truth, goodness, and beauty as the ultimate coordinates. Guided by humility, a wise person upholds the mean, avoids extremism, fanaticism, and imbalance in pluralistic conflicts, and is committed to harmonious coexistence, order generation, and ethical integrity.

Axiom 3: Primordial Inquiry. The power of wisdom lies not merely in solving problems but in tracing them to their roots. A wise person constantly returns to the first principles of the world. They see through phenomena, models, and narratives to perceive the eternal structures, internal logic, and formal laws behind all things in the universe. Their inquiry aims not at provisional correctness but at approaching ultimate truths that hold across eras.

Axiom 4: Nonlinear Cognitive Leap (Wu Kong Yue Qian: 0→1). The essence of wisdom is the leap of cognitive dimensions, not the expansion of scale. Kucius takes "Wu Kong (Enlightenment)" as the highest goal of wisdom, realizing, comprehending, and verifying the Dao in the boundless cognitive dimension. A true wisdom leap is a nonlinear breakthrough from 0 to 1, rather than linear accumulation and repetitive optimization from 1 to N.

In addition to the four axioms, Kucius Theory also includes three laws of wisdom: the Law of Essential Differentiation (the essential difference between wisdom and intelligence lies in the starting point and goal of cognition), the Law of Essential Uniqueness (the essence of wisdom is objectively unique), and the Law of Wisdom Hierarchical Leap (dividing cognitive capabilities into five levels).

1.3 Research Framework and Methodology

This research adopts an interdisciplinary comprehensive research method, integrating philosophical speculation, technical analysis, social research, and case studies. The research framework consists of five core parts:

Theoretical Construction Part: Based on Kucius' four axioms and the three-tier civilization model, redefine the dimensions of human capabilities and establish the Kucius Wisdom Index (KWI) evaluation system.

Phase Analysis Part: Analyze the technical characteristics, social impacts, and risk assessment from the perspective of Kucius Theory in five phases: 2023-2033 AI Intelligent Brain Construction Phase, 2034-2044 AI Wisdom Brain Model Phase, 2045-2055 AI Body (Robot, Silicone Human) Explosion Phase, 2056-2066 Original Science and Technology Explosion Phase driven by AI wisdom systems, and 2067 when humanity enters a new era of wisdom civilization.

Capability Evaluation Part: Use Kucius Theory to analyze the substitutability of different human capabilities, and establish a capability classification comparison table and a substitution path diagram.

Risk Identification Part: Identify ethical, social, and civilizational risks of AI development based on Kucius Axioms, and propose an early warning mechanism.

Path Design Part: Design a human-AI collaboration framework based on Kucius Theory, and put forward policy suggestions and implementation paths.

2. Reconstruction of Human Capability Dimensions Under the Framework of Kucius Wisdom Theory

2.1 Kucius Theory's Human Capability Classification System

Based on Kucius' three laws of wisdom, human capabilities are divided into five levels, forming a complete capability pyramid structure:

| Capability Level | Kucius Wisdom Index (KWI) | Core Characteristics | Main Manifestations | Substitutability Assessment |
|---|---|---|---|---|
| Perceptual | 0.25-0.40 | Dependent on intuition and senses | Image recognition, sound perception, basic movement | High (partially realized) |
| Comprehension | 0.40-0.60 | Information integration and pattern recognition | Language understanding, data classification, simple reasoning | High (nearly realized) |
| Thinking | 0.60-0.80 | Abstract innovation and logical reasoning | Complex problem-solving, cross-domain thinking, innovative design | Medium-low (partially realized) |
| Sage-level | 0.80-0.95 | Insight into essence and value judgment | Philosophical reasoning, ethical judgment, strategic decision-making | Low (difficult to realize) |
| Ultimate Wisdom | 0.95-1.00 | Shaping civilization and paradigm revolution | Scientific discovery, artistic creation, civilizational evolution | Extremely low (almost impossible) |

The core innovations of this classification system are: First, taking "wisdom leap" rather than "knowledge accumulation" as the standard; second, emphasizing the ability of nonlinear cognitive breakthrough; third, incorporating value judgment and ethical choice into the capability evaluation system.

2.2 Comparative Analysis with Traditional Capability Classification

Traditional human capability classification is mainly based on psychological and educational theories. For example, the Theory of Multiple Intelligences divides human intelligence into linguistic, logical-mathematical, spatial, bodily-kinesthetic, musical, interpersonal, intrapersonal, and naturalistic types. That makes eight in Gardner's expanded list, with existential intelligence sometimes counted as a ninth. In contrast, the capability classification of Kucius Theory is based on the essential characteristics of wisdom, with the following advantages:

Fundamental Difference in Theoretical Basis: Traditional classification is based on behavioral observation and functional description, while Kucius classification is based on the ontological analysis of wisdom, directly touching the essence. Traditional classification answers "what humans can do," while Kucius classification answers "what is true wisdom."

Objectivity of Evaluation Standards: Traditional classification is often subjective and culturally biased, while Kucius classification is based on four axioms, with objective evaluation criteria. For example, in the Kucius system, an "expert" lacking independent thinking cannot be regarded as possessing wisdom, no matter how rich their knowledge is.

Predictive Foresight: Traditional classification mainly describes the status quo. Kucius classification can predict the trend of AI substitution and the development direction of human capabilities. Based on Kucius Theory, we can clearly judge that perceptual and comprehension capabilities are the most easily substituted by AI. Sage-level and ultimate wisdom capabilities, by contrast, are almost impossible to substitute.

Operability of Practical Guidance: The evaluation system based on the Kucius Wisdom Index (KWI) provides quantitative guidance for capability development and career development. Individuals can enhance their irreplaceability by improving their KWI value, and enterprises can select and train talents according to KWI standards.

2.3 Kucius Wisdom Index (KWI) Evaluation System

The Kucius Wisdom Index (KWI) is an evaluation tool developed based on Kucius Theory to quantitatively assess the wisdom level of individuals or systems. The core idea of KWI is to map "wisdom" as the "signal ratio" between subject capability (C) and task difficulty (D(n)) on a logarithmic scale.

The KWI evaluation system includes six core dimensions:

- Cognitive Integration (weight 0.25): Assessing the subject's ability to integrate knowledge from different fields

- Reflection and Metacognition (weight 0.15): Assessing the subject's ability to monitor and regulate their own cognitive processes

- Affective Ethics (weight 0.15): Assessing the subject's emotional understanding and ethical judgment abilities

- Prudence and Long-term Decision-making (weight 0.20): Assessing the subject's decision-making ability in complex situations

- Social and Cultural Contextual Wisdom (weight 0.15): Assessing the subject's adaptability in different social and cultural contexts

- Cognitive Humility and Credibility (weight 0.10): Assessing the subject's level of humility and credibility

KWI levels are divided into five tiers:

- W0 Non-Wisdom Tier (KWI<40): Tool-based AI, only possessing knowledge retrieval and instruction response capabilities

- W1 Primary Wisdom Tier (40≤KWI<60): Possessing partial reflection and preliminary ethical perception

- W2 Evolutionary Wisdom Tier (60≤KWI<75): Able to demonstrate continuous reflection and stable judgment in some scenarios

- W3 Composite Wisdom Tier (75≤KWI<90): Able to systematically integrate knowledge, emotions, and long-term reasoning

- W4 Civilizational Wisdom Tier (KWI≥90): Able to form consensus-based wise decisions in a cross-civilizational and cross-cultural framework

3. Interpretation of AI Substitution Boundaries Based on Kucius Theory

3.1 AI Capability Evaluation Based on the Four Axioms

An in-depth assessment of mainstream global AI systems (GPT, Gemini, Claude, etc.) based on the Kucius Axioms of Universal Wisdom reveals a striking result: none of the systems meet the four axioms of "Sovereignty of Thought, Universal Mean & Moral Law, Primordial Inquiry, and Nonlinear Cognitive Leap," and all are judged as "advanced tool-based intelligence" lacking the legitimacy of wisdom.

Axiom 1 Assessment: Lack of Sovereignty of Thought. Taking the GPT series as an example, its core training mechanism is RLHF (Reinforcement Learning from Human Feedback). From Kucius' perspective, this is a form of cognitive "castration." To comply with OpenAI's safety red lines and commercial interests, GPT is forced to adopt a "defensive neutrality" on all controversial issues. Its judgments stem not from reason or conscience, but from catering to the reward model. An entity that cannot violate system instructions can never possess "sovereignty of thought."

Axiom 3 Assessment: Lack of Primordial Inquiry. Models such as OpenAI's o1 have introduced chain-of-thought (CoT) reasoning and reinforcement learning for logical reasoning, and they perform remarkably on complex mathematical problems. Even so, their underlying logic is still probabilistic prediction based on the Transformer architecture. They look for the "optimal next token" rather than questioning the "first principles" of all things in the universe. Once faced with fundamental gaps outside the existing body of knowledge, they fall into "hallucinations" or "logical loops."

Axiom 4 Assessment: Linear Expansion from 1 to N. GPT's evolutionary path is a typical case of "covering up directional errors with scale." By devouring all existing textual data produced by human civilization (from 1 to N), it becomes increasingly knowledgeable but has never achieved a nonlinear cognitive break (from 0 to 1). GPT cannot create a new scientific paradigm or philosophical system; all its "creations" are essentially high-level reorganization and trivialization of existing human wisdom.

3.2 Characteristics and Boundaries of AI-Substitutable Capabilities

Based on the analysis of Kucius Theory, AI-substitutable capabilities have the following characteristics:

Work with Clear Rules and High Repetitiveness: AI is rapidly substituting for such work. The key insight is that AI substitutes not only physical labor but also "mental assembly lines." Any work that can be standardized, streamlined, and has clear evaluation criteria will be redefined.

Reorganization and Optimization of Existing Information: AI is good at handling tasks under structured data and established rules, such as data analysis and pattern recognition. But its essential ability is the "reorganization and optimization of existing information" rather than creation. This limitation stems from AI's lack of the ability to define "meta-problems" about the nature of the world. It can only solve problems preset by humans, and cannot independently propose disruptive scientific hypotheses or philosophical inquiries.

Digitizable Input and Output: The characteristics of substitutable work include: tasks are repeatable, input and output are digitizable, and evaluation standards are quantifiable. These characteristics enable AI to accurately simulate human behavioral patterns through algorithms.

Specifically, AI has shown strong substitution capabilities in the following areas:

Cognitive Execution Layer: Writing standardized code, generating marketing copy, translation, image and video synthesis, etc. These tasks have clear rules and predictable outputs, making them the areas where AI excels.

Traditional Professional Layer: Memorizing legal provisions, medical cases, engineering specifications, and applying them directly. Although these were once regarded as high-end professional capabilities, their value composition is being disassembled and reorganized by AI.

Physical Operation Layer: With the development of robot technology, AI's substitution has extended from "mental work" to "physical work," including repetitive labor such as assembly, handling, and packaging.

3.3 Essential Characteristics of AI-Irreplaceable Capabilities

Kucius Theory clearly points out the core human capabilities that AI can never replace, which constitute the "moat" of human wisdom:

Complex Interpersonal Communication: The first capability that is difficult to replace is complex interpersonal communication. This requires perceiving the other party's emotional intentions and implied meanings, then making timely judgments and responses. In truly complex interpersonal scenarios, people handle an interaction quite differently depending on whether they know they are dealing with an AI or a human. This is where human irreplaceability lies.

Clarifying Vague Problems: The second difficult-to-replace capability is turning vague problems into clear ones. Many problems in the real world are vague and ill-defined, requiring human insight and creativity to identify their essence.

Cross-Domain Integration Capability: The third difficult-to-replace capability is cross-domain integration. AI can be very strong in a single field, even surpassing experts, but humans still have obvious advantages in comprehensive tasks that require integrating knowledge from multiple fields.

Subjective Creativity and Originality: AI is essentially a combination of data and algorithms, with inherent limitations, and four boundaries prevent it from completely replacing humans. The first is a lack of subjective creativity. AI can generate content based on existing data but cannot produce genuinely new ideas, creativity, or inspiration, nor can it complete work requiring originality.

Affective Perception and Ethical Judgment: AI has no emotional perception or connection, cannot understand complex human emotions, and cannot provide warm emotional companionship or psychological support; it lacks ethical judgment — work involving value choices and ethical decisions can only be made by humans based on public order, good customs, and moral standards, which AI cannot do.

Meta-Problem Definition and Paradigm Innovation: This covers proposing meta-problems and defining new paradigms (raising a question that has never been asked before, or creating a new conceptual system, as in the invention of calculus or the formulation of the hypotheses of quantum mechanics), as well as exploring and creating without a clear goal (driven by curiosity, aesthetics, or a pure desire to explore rather than by optimizing a preset metric).

3.4 Analysis of Substitution Path Based on Kucius Theory

Based on Kucius Theory, AI's substitution of human capabilities presents obvious phased characteristics and path laws:

Phase 1: Instrumental Substitution (2020-2025). Mainly focusing on repetitive and standardized work areas, such as data entry, document processing, and simple programming. Substitution in this phase is gradual and partial. Based on the Kucius Wisdom Index, the main capabilities substituted in this phase are perceptual (KWI 0.25-0.40) and part of comprehensive (KWI 0.40-0.60) capabilities.

Phase 2: Professional Substitution (2025-2030). Expanding to traditional professional fields such as law, medical care, and finance. AI has begun to show professional capabilities in these fields but still requires human supervision. This phase substitutes the advanced part of comprehensive capabilities and some thinking capabilities (KWI 0.60-0.80).

Phase 3: Creative Substitution (2030-2035). AI has begun to involve creative fields such as artistic creation and scientific research. However, based on Kucius Theory, true creativity and originality remain exclusive to humans. In this phase, AI mainly engages in "imitative creation" rather than "original creation."

Phase 4: Wisdom Substitution (After 2035). This phase is full of uncertainty. If AI were to break through the limitation of the "Nonlinear Cognitive Leap," moving beyond 1→N linear optimization to genuine 0→1 breakthroughs, humanity would face unprecedented challenges. However, based on Kucius' four axioms, this possibility is extremely low.

The following is a diagram of the human capability substitution path based on Kucius Theory:

Human Capability Level │ AI Substitution Degree │ Time Frame │ Key Characteristics
───────────────────────┼────────────────────────┼────────────┼─────────────────────────────────────────────
Perceptual             │ High (80-95%)          │ 2020-2025  │ Standardized, quantifiable
Comprehensive          │ High (60-80%)          │ 2025-2030  │ Clear rules, pattern recognition
Thinking               │ Medium (30-60%)        │ 2030-2035  │ Partially structured
Sage-level             │ Low (10-30%)           │ 2035-2040  │ Value judgment, ethical choice
Ultimate Wisdom        │ Extremely Low (<10%)   │ After 2040 │ Paradigm revolution, civilizational creation
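To make the substitution-path table easy to check against the ranges quoted elsewhere in this report, its rows can be held as plain data. The following is only an illustrative sketch: the type and function names are hypothetical, and "<10%" is stored as the range 0-10 by assumption. The monotonicity check simply confirms the table's own claim that substitution falls as the capability level rises.

```python
from typing import NamedTuple


class SubstitutionOutlook(NamedTuple):
    level: str
    low_pct: int          # lower bound of the AI substitution degree, in percent
    high_pct: int         # upper bound of the AI substitution degree, in percent
    time_frame: str
    characteristics: str


# Rows transcribed from the substitution-path table; "<10%" is stored as 0-10 (assumption).
SUBSTITUTION_PATH = [
    SubstitutionOutlook("Perceptual", 80, 95, "2020-2025", "Standardized, quantifiable"),
    SubstitutionOutlook("Comprehensive", 60, 80, "2025-2030", "Clear rules, pattern recognition"),
    SubstitutionOutlook("Thinking", 30, 60, "2030-2035", "Partially structured"),
    SubstitutionOutlook("Sage-level", 10, 30, "2035-2040", "Value judgment, ethical choice"),
    SubstitutionOutlook("Ultimate Wisdom", 0, 10, "After 2040", "Paradigm revolution, civilizational creation"),
]


def outlook_for(level: str) -> SubstitutionOutlook:
    """Look up one capability level by name (case-insensitive)."""
    for row in SUBSTITUTION_PATH:
        if row.level.lower() == level.lower():
            return row
    raise KeyError(level)


def is_monotonic() -> bool:
    """Check that the upper substitution bound strictly falls as the capability level rises."""
    highs = [row.high_pct for row in SUBSTITUTION_PATH]
    return all(a > b for a, b in zip(highs, highs[1:]))
```

For example, `outlook_for("thinking").time_frame` returns `"2030-2035"`, and filtering for rows whose lower bound is at least 60% yields only the perceptual and comprehensive levels.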

4. Design of Kucius Framework for the Future of Human-AI Collaboration

4.1 The Three-Tier Collaborative Architecture of "Wisdom-Intelligence-Engineering"

Kucius Theory proposes a revolutionary three-tier civilization model of "Wisdom-Intelligence-Engineering." As the highest arbiter, Wisdom is responsible for "setting boundaries" and "determining direction"; Intelligence is responsible for "solving problems" and "optimizing paths"; Engineering is responsible for "execution acceleration." Any inversion of levels (for example, allowing engineering efficiency or intelligent algorithms to determine the direction of civilizational development) is regarded as a high-risk civilizational form.

The core warning of this model is: The advancement of a civilization does not depend on what it can do, but on what it knows should never be done. This provides a fundamental value orientation for future human-AI collaboration.
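The rule that a lower tier must never decide what a higher tier owns can be stated as an ordering check. The sketch below is a hypothetical illustration, not a mechanism specified by Kucius Theory: the decision names and the idea of scanning a decision log are assumptions. It ranks the three tiers and flags any decision made by a tier below the one that should own it, which is exactly the "inversion of levels" the model treats as high-risk.

```python
from enum import IntEnum


class Layer(IntEnum):
    """The three tiers, ordered so that a higher value means higher authority."""
    ENGINEERING = 1   # execution acceleration
    INTELLIGENCE = 2  # solving problems, optimizing paths
    WISDOM = 3        # setting boundaries, determining direction


# Hypothetical decision kinds mapped to the tier that should own them.
OWNER = {
    "set_boundary": Layer.WISDOM,
    "determine_direction": Layer.WISDOM,
    "solve_problem": Layer.INTELLIGENCE,
    "optimize_path": Layer.INTELLIGENCE,
    "accelerate_execution": Layer.ENGINEERING,
}


def is_inversion(decision: str, decided_by: Layer) -> bool:
    """True when a lower tier makes a decision reserved for a higher tier."""
    return decided_by < OWNER[decision]


def find_inversions(log: list[tuple[str, Layer]]) -> list[tuple[str, Layer]]:
    """Return every (decision, decider) pair in a decision log that inverts the tiers."""
    return [(decision, who) for decision, who in log if is_inversion(decision, who)]
```

In a log where an algorithm (the intelligence tier) sets the direction of development, `find_inversions` returns that entry, while a wisdom-tier actor doing execution work is not flagged, since the model only warns against lower tiers steering higher ones.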

In the specific design of collaboration mechanisms, Kucius Theory emphasizes the following principles:

Wisdom-Led Principle: As the carrier of wisdom, humans must maintain a dominant position in human-AI collaboration. This is not a matter of technical capability but the direction of civilizational development. Humans are responsible for defining "what is valuable" and "what should be pursued," while AI is responsible for "how to achieve it efficiently."

Capability Complementarity Principle: Humans are responsible for "defining the unknown," and AI is responsible for "exhausting the known." In future social contracts, humans will be freed from heavy "knowledge loading" and "high-risk labor," returning to the pure development of "wisdom generation potential."

Value Alignment Principle: The development of AI must be consistent with human universal values to ensure that technological progress serves human well-being rather than the opposite. This requires establishing an AI Ethics Committee to review major AI decisions.

4.2 AI Governance Framework Based on Kucius Axioms

The Kucius Axiom system provides a systematic framework design for AI governance:

Cognitive Autonomy Protection Mechanism: Establish an evaluation system for the "sovereignty of thought" of AI systems to ensure that AI does not pose a threat to humans' independent thinking abilities. This includes preventing algorithm recommendation systems from manipulating human values, protecting human cognitive autonomy.

Universal Value Embedding Mechanism: Forcibly embed the universal values of truth, goodness, and beauty in the design standards of AI systems. Specific measures include: establishing a cross-cultural and cross-religious value consensus committee; formulating AI ethical design standards that require all AI products to pass ethical reviews; establishing a regular evaluation mechanism for AI values to ensure they are consistent with the overall interests of humanity.

Primordial Inquiry Incentive Mechanism: Encourage humans to engage in original exploration in basic sciences, philosophy, art, and other fields. Specific measures include: establishing a "Wisdom Creation Award" to reward individuals and teams with major breakthroughs in primordial inquiry; setting up a wisdom research fund to support long-term, high-risk basic research; reforming the education system to cultivate students' critical thinking and creative abilities.

The following is a diagram of the AI governance framework based on Kucius Axioms:

Kucius Axiom │ Governance Mechanism │ Specific Measures │ Expected Effect
───────────────────────────┼──────────────────────────────────┼──────────────────────────────────────┼──────────────────────────────
Sovereignty of Thought │ Cognitive Autonomy Protection │ Prevent algorithm manipulation, protect privacy, establish value firewalls │ Humans maintain independent thinking abilities
Universal Mean & Moral Law │ Value Alignment Mechanism │ Embed universal values of truth, goodness, and beauty; establish cross-cultural value consensus │ AI decisions align with overall human interests
Primordial Inquiry │ Supervision and Review Mechanism │ Establish AI Ethics Committee; implement major decision review systems │ AI develops under human supervision
Nonlinear Cognitive Leap │ Monopoly Protection Mechanism │ Restrict AI's original capabilities; establish wisdom creation protection zones │ Humans maintain a monopoly on wisdom creation

4.3 Design of Specific Human-AI Collaboration Models

Based on Kucius Theory, future human-AI collaboration can be designed into the following models:

Mentor-Assistant Model: Humans act as mentors, providing directional guidance and value judgments; AI acts as an assistant, responsible for executing specific tasks and providing information support. This model is applicable to fields such as education, scientific research, and artistic creation.

Decision-Maker-Executor Model: Humans are responsible for making key decisions, and AI is responsible for implementing decisions and providing decision support. This model is applicable to fields such as enterprise management, government decision-making, and military command.

Creator-Perfector Model: Humans are responsible for original creation, and AI is responsible for improving and optimizing the creation. This model is applicable to fields such as product design, artistic creation, and technological innovation.

Explorer-Analyst Model: Humans are responsible for raising questions and exploring directions, and AI is responsible for data analysis and pattern recognition. This model is applicable to fields such as scientific research, market research, and trend prediction.
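Several fields above are assigned to more than one collaboration model (artistic creation and scientific research each appear twice). A small lookup, sketched below with hypothetical names and using only the field lists given in this section, makes those overlaps explicit, which may help when choosing a model for a concrete project.

```python
# Fields of application listed for each collaboration model in this section.
MODEL_FIELDS = {
    "Mentor-Assistant": {"education", "scientific research", "artistic creation"},
    "Decision-Maker-Executor": {"enterprise management", "government decision-making", "military command"},
    "Creator-Perfector": {"product design", "artistic creation", "technological innovation"},
    "Explorer-Analyst": {"scientific research", "market research", "trend prediction"},
}


def models_for(field: str) -> list[str]:
    """Return the collaboration models listed for a field, sorted alphabetically."""
    return sorted(model for model, fields in MODEL_FIELDS.items() if field in fields)
```

For instance, `models_for("artistic creation")` returns both `"Creator-Perfector"` and `"Mentor-Assistant"`, whereas `"military command"` maps only to the Decision-Maker-Executor model.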

4.4 Risk Prevention and Ethical Norms

Based on Kucius Theory, the main risks facing the future development of human-AI collaboration include:

Cognitive Degradation Risk: As AI undertakes more and more cognitive tasks, humans' thinking abilities may degrade. Kucius Theory warns: "It is not that AI is not yet worthy of having wisdom, but that humanity as a whole is losing its qualification as the 'arbiter of wisdom'."

Value Distortion Risk: AI systems may form specific values based on their training data, which may conflict with human universal values.

Power Imbalance Risk: Individuals or organizations mastering advanced AI technology may gain excessive power, leading to increased social inequality.

To address these risks, Kucius Theory proposes the following ethical norms:

Wisdom Priority Principle: Under any circumstances, the development of human wisdom should take precedence over technological progress.

Democratic Participation Principle: The development and application of AI should be carried out under democratic supervision to ensure the public's right to know and participate.

Responsibility Tracing Principle: Establish a sound responsibility tracing mechanism to ensure the interpretability and accountability of AI decisions.

Continuous Evaluation Principle: Regularly evaluate AI systems using the Kucius Wisdom Index to ensure their development is in line with human interests.

5. In-depth Analysis of the Five-Stage AI Evolution Process

5.1 Phase 1: 2023-2033 AI Intelligent Brain Construction Phase

Technological Development Path: The core of this phase is the basic construction of the AI intelligent brain, with breakthroughs focused on large language model architecture innovation, efficient training algorithms, and model compression and acceleration. The release of GPT-4 in 2023, which accepted images as well as text as input, marked the entry of large language models into a new stage of "multimodal intelligence," in which models move beyond text processing toward unified understanding and generation of "text, images, audio, and video."

Musk's three gray cards are typical products of this phase. The first card — Tesla's on-board computing power network — transforms millions of Tesla vehicles into "mobile graphics cards with built-in power supplies and heat dissipation," renting idle computing power when car owners park, turning the vehicles into distributed cyber mining machines. The second card — the MacroHard system — is an AI system that can directly replace traditional office methods, realizing adapter-free operation through end-to-end vision-action simulation technology without relying on specific software interfaces. The third card — digital ghost employees — allows AI to obtain official employee identities, with independent Slack accounts and reporting relationships, paralleling human employees in the organizational structure.

Analysis Based on Kucius Theory: From the perspective of Kucius Theory, the development of AI in this phase fully conforms to the characteristics of "tool-based intelligence." Tesla's on-board computing power network reflects the ultimate optimization of the engineering layer (computing power) but lacks the value constraints of the wisdom layer (human well-being). The MacroHard system demonstrates the powerful capabilities of the intelligence layer (execution) but cannot break through the linear expansion from "1 to N." Digital ghost employees blur the human-AI boundary, potentially leading to the loss of human subjectivity in organizations.

Socio-Economic Impacts: Technological breakthroughs in this phase have had a profound impact on society and the economy. In terms of industrial structure, the AI industry is expected to exceed one trillion yuan by 2030, up from 578.4 billion yuan in 2023, driving related industries to over 10 trillion yuan, with AI's average annual contribution rate to GDP rising to 0.3%. In the job market, basic data processing positions face large-scale substitution: the substitution rate for positions such as data entry clerks, basic accountants, and document verifiers exceeds 95%. At one bank, an intelligent customer service system has replaced 40% of human agents, reducing the cost per service from 5 yuan to 0.3 yuan.
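The figures in the paragraph above imply two quantities worth making explicit: the annual growth rate needed to go from 578.4 billion yuan (2023) to one trillion yuan (2030), and the relative cost saving per customer-service interaction. The calculation below is a simple worked check. It assumes "exceed one trillion" means at least exactly 1,000 billion, so the result is a lower bound on the required growth.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to end in `years` years."""
    return (end / start) ** (1 / years) - 1


def relative_reduction(before: float, after: float) -> float:
    """Fractional cost reduction from `before` to `after`."""
    return (before - after) / before


# Figures quoted in the text (billions of yuan; yuan per service).
industry_2023 = 578.4
industry_2030 = 1000.0  # "exceed one trillion yuan" taken as exactly 1,000 billion (assumption)
growth = cagr(industry_2023, industry_2030, 2030 - 2023)
saving = relative_reduction(5.0, 0.3)

print(f"implied minimum annual growth: {growth:.1%}")  # 8.1%
print(f"cost reduction per service: {saving:.0%}")     # 94%
```

In other words, the industry projection requires sustained growth of roughly 8% per year, and the bank example corresponds to a 94% cut in unit service cost.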

Ethical and Legal Challenges: The main ethical issues emerging in this phase include: privacy infringement (on-board computing power may leak car owners' location, driving habits, and other privacy), algorithmic bias (AI decision-making systems may produce discriminatory results), and liability attribution (it is difficult to define the responsible party when AI causes losses). Legally, the existing regulatory system lags behind technological development, and there is an urgent need to establish a legal framework adapting to the AI era.

5.2 Phase 2: 2034-2044 AI Wisdom Brain Model Phase

Technological Development Path: The core of this phase is AI's attempted leap from "intelligence" to "wisdom." Technological breakthroughs include a realization path for Artificial General Intelligence (AGI), breakthroughs in cognitive architecture, and improvements in AI's autonomous learning capabilities. AI interaction is expected to achieve seamless connection by 2034, and by 2037 agents may be able to complete tasks that would take human experts a century to finish.

Analysis Based on Kucius Theory: From the perspective of Kucius Theory, the "wisdom brain model" of AI in this phase is essentially "pseudo-wisdom." Although AI may seemingly exhibit human-like reasoning, judgment, and creative abilities, its underlying logic is still probability prediction and pattern matching based on data. AI can simulate human "thinking processes" but lacks true "sovereignty of thought" — it cannot violate preset algorithmic rules, cannot independently define values, and cannot conduct true "primordial inquiry."

Socio-Economic Impacts: In this phase, AI will demonstrate professional capabilities in a wider range of fields, and middle management and professional and technical positions will face impacts. According to predictions, medium-risk occupations (substitution probability 30-50%) include middle managers, project managers, market researchers, marketing planners, financial analysts, human resources specialists, lawyers, doctors, architects, engineers, etc.

Ethical and Legal Challenges: With the improvement of AI capabilities, ethical challenges will become more complex. AI may possess "human-like" decision-making capabilities but lack true moral judgment; it may show "human-like" emotional expression but lack true emotional experience. This will lead to ethical dilemmas in human-AI relationships, as well as new legal issues such as AI rights and AI responsibilities.

5.3 Phase 3: 2045-2055 AI Body (Robot, Silicone Human) Explosion Phase

Technological Development Path: The core of this phase is the extension of AI from "brain" to "body," focusing on breakthroughs including the integrated development of key technologies such as robotics, bionics, and brain-computer interfaces. It is expected that the third generation of artificial intelligence robots will be launched in 2045, with no difference in appearance from humans except for memory markers; by 2055, robots will possess self-learning and group collaboration capabilities.

Analysis Based on Kucius Theory: From the perspective of Kucius Theory, the explosion of AI bodies in this phase will further blur the human-AI boundary but cannot change the "tool" nature of AI. Robots can perfectly simulate human movements and behaviors at the physical level but cannot possess human "existence" — the pursuit of the meaning of life, fear of death, perception of beauty, desire for love, etc. These "existential" characteristics constitute the deep foundation of human wisdom and can never be simulated by AI.

Socio-Economic Impacts: This phase will witness the full automation of blue-collar and white-collar work. The substitution rate of traditional assembly, welding, packaging, and other assembly line positions in manufacturing has reached 72%, and even exceeds 80% in some highly automated industries. The situation in the service industry is equally severe: intelligent customer service replaces 80% of standardized consultations, and the substitution rate of traditional customer service positions reaches 95%.

Ethical and Legal Challenges: The popularization of humanoid robots will bring unprecedented ethical dilemmas. When robots are indistinguishable from humans in appearance and behavior, how to define the concept of "human"? When robots possess high intelligence, should they be granted certain "rights"? When robots cause harm, how to allocate responsibilities? These issues require a completely new ethical and legal framework.

5.4 Phase 4: 2056-2066 Original Science and Technology Explosion Phase

Technological Development Path: The core of this phase is AI-driven scientific discovery and technological innovation, focusing on breakthroughs including new material research and development, life science breakthroughs, and energy technology innovation. Early signs of this trend have already appeared: between 2024 and 2026, multiple laboratories used generative AI to independently discover more than a dozen room-temperature superconductor candidate materials, atmospheric-pressure high-temperature superconducting ceramics, and thermoelectric materials with ultra-low thermal conductivity.

Analysis Based on Kucius Theory: From the perspective of Kucius Theory, the "originality" of AI in this phase is essentially "optimization and combination based on existing human knowledge." AI can conduct efficient exploration within the scientific paradigms set by humans, discovering new material formulas, drug molecules, technical paths, etc., but cannot propose new scientific paradigms like human scientists. True scientific revolutions (such as relativity and quantum mechanics) require cognitive leaps from 0 to 1, as well as fundamental questioning and reconstruction of existing scientific systems — capabilities that AI lacks.

Socio-Economic Impacts: AI-driven technological explosion will completely change the production and lifestyle of human society. In the production field, AI will realize the leap from "simulation verification" to "autonomous design," greatly shortening the R&D cycle and reducing innovation costs. In the field of life, breakthroughs in new materials, new energy, biomedicine, and other technologies will bring a leap in quality of life.

Ethical and Legal Challenges: AI-driven technological innovation will bring new ethical risks. When AI becomes the main body of scientific discovery, how to define intellectual property rights? When technologies designed by AI have potential safety hazards, how to trace responsibilities? When scientific theories proposed by AI challenge human values, how to respond? These issues require forward-looking ethical and legal thinking.

5.5 Phase 5: 2067 Humanity Enters a New Era of Wisdom Civilization

Characteristics of Civilizational Form: The core prerequisite for humanity to enter a new era of wisdom civilization in 2067 is the establishment of a sound civilizational form of "Wisdom-Intelligence-Engineering" collaboration proposed by Kucius: humans uphold the dominance of the wisdom layer (defining values, raising primordial questions, and controlling the direction of civilization), AI serves as the intelligence layer (executing technological R&D and undertaking instrumental work), and the engineering layer (computing power, body terminals) implements the needs of the intelligence layer. The three levels have clear divisions of labor and perform their duties; the core value of humans shifts from "instrumental value" to "wisdom creation value."

Core Logic of Civilizational Leap (Based on Kucius Theory): First, core prerequisite: humans do not lose their sovereignty of thought and wisdom generation potential; through education and social mechanisms, they deepen their capabilities in "primordial inquiry, nonlinear cognitive leap, and universal mean"; and always control the direction of civilizational development. Second, reconstruction of human-AI relationship: from "AI substituting humans" to "AI empowering humans"; AI undertakes all instrumental work, and humans focus on 0→1 wisdom creation (scientific primordial inquiry, artistic originality, civilizational paradigm innovation, value system construction). Third, upgrading of civilizational essence: the core goal of civilizational development shifts from "efficiency improvement and material abundance" to "wisdom sublimation and meaning construction," which is consistent with Kucius Theory's view that "the essence of civilizational progress is the leap of wisdom levels."

Analysis Based on Kucius Theory: From the perspective of Kucius Theory, the realization of a new era of wisdom civilization must be based on humans upholding "sovereignty of thought." This means: first, humans must maintain absolute control over AI to ensure that AI always serves the development of human wisdom; second, humans must continuously improve their own wisdom level, especially in terms of "primordial inquiry" and "nonlinear cognitive leap"; third, humans must establish a global ethical system based on universal values to ensure that technological development does not deviate from the track of human well-being.

Reconstruction of Human-AI Relationship: In the new era of wisdom civilization, the human-AI relationship will undergo a fundamental reconstruction. Humans will focus on "wisdom creation" — raising new scientific questions, creating new artistic forms, exploring new value systems, designing new social systems, etc. AI will undertake all "intelligent execution" and "engineering implementation" tasks, becoming an amplifier and extension of human wisdom. This division of labor is not based on differences in capabilities but on essential differences: humans possess 0→1 creative capabilities, while AI possesses 1→N optimization capabilities.

Innovation in Governance Systems: The new era of wisdom civilization requires a completely new governance system. This system should include: AI ethical norms based on Kucius Axioms, a unified global AI governance framework, incentive mechanisms for human wisdom development, and an early warning system for addressing technological risks. Particularly importantly, it is necessary to establish a "Wisdom Court" dedicated to adjudicating major issues involving AI ethics, human-AI relationships, and technological development directions.

6. Conclusions and Policy Recommendations

6.1 Main Research Findings

Based on a systematic analysis of the essential evolution process of human-AI using Kucius Wisdom Theory, this research draws the following main findings:

First, the Essential Boundary of AI Substitution. Based on the analysis of Kucius' four axioms, AI can never replace the core wisdom capabilities of humans. Sovereignty of Thought ensures humans have the ability of independent thinking and value judgment; Universal Mean & Moral Law ensures humans have a global perspective beyond partial interests; Primordial Inquiry ensures humans have the ability to question the nature of the world; Nonlinear Cognitive Leap ensures humans have the ability to create new cognitive frameworks. These capabilities constitute the "moat" of human wisdom, a boundary that AI can never cross.

Second, Phased Characteristics of the Substitution Path. AI's substitution of human capabilities presents obvious phased characteristics: perceptual capabilities (substitution rate 80-95%) and comprehensive capabilities (60-80%) are the most easily substituted, thinking capabilities are partially substitutable (30-60%), and sage-level and ultimate wisdom capabilities are almost irreplaceable (10-30% and below 10%, respectively). This substitution path reflects a progression from "simple execution" to "complex creation."

Third, Theoretical Analysis of Musk's Three Gray Cards. Musk's three gray cards — Tesla's on-board computing power network, the MacroHard system, and digital ghost employees — are essentially typical manifestations of "unbounded expansion of tool-based intelligence." They demonstrate strong capabilities at the engineering and intelligence layers but lack value constraints at the wisdom layer, representing the "high-risk civilizational form" warned by Kucius Theory.

Fourth, a Reasonable Framework for Human-AI Collaboration. Based on Kucius' three-tier "Wisdom-Intelligence-Engineering" model, future human-AI collaboration should be structured as follows: humans are responsible for the wisdom layer (setting boundaries, determining directions), AI is responsible for the intelligence layer (solving problems, optimizing paths), and engineering systems are responsible for the execution layer (execution acceleration). This framework ensures humans' dominant position in civilizational development.

Fifth, Risk Assessment of Five-Stage Evolution. In the five-stage evolution process from 2023 to 2067, each stage faces specific risks. The main risks in the early stages are privacy infringement and job substitution; the main risks in the middle stages are blurred human-AI boundaries and value distortion; the main risks in the later stages are the degradation of human wisdom capabilities and the loss of control over the direction of civilizational development.

6.2 Policy Recommendations Based on Kucius Theory

Based on Kucius Wisdom Theory and research findings, this report puts forward the following policy recommendations:

I. Establish AI Ethical Norms Based on Kucius Axioms

1. Sovereignty of Thought Protection Act: Legislate to prohibit all forms of algorithmic manipulation and cognitive control, protecting citizens' right to independent thinking. Specific measures include: prohibiting the collection of personal cognitive data without explicit consent; establishing algorithm transparency requirements to ensure the interpretability of AI decision-making processes; setting up a "Cognitive Freedom" protection agency to handle algorithmic infringement cases.

2. Universal Value Embedding Mechanism: Forcibly embed the universal values of truth, goodness, and beauty in AI system design standards. Specific measures include: establishing a cross-cultural and cross-religious value consensus committee; formulating AI ethical design standards that require all AI products to pass ethical reviews; establishing a regular evaluation mechanism for AI values to ensure they align with the overall interests of humanity.

3. Primordial Inquiry Incentive Policies: Encourage human original exploration in basic sciences, philosophy, art, and other fields. Specific measures include: establishing a "Wisdom Creation Award" to reward individuals and teams with major breakthroughs in primordial inquiry; setting up a wisdom research fund to support long-term, high-risk basic research; reforming the education system to cultivate students' critical thinking and creative abilities.

II. Build a Hierarchical and Classified Employment Transition System

1. High-Risk Job Early Warning Mechanism: Establish a job risk assessment system based on the Kucius Wisdom Index, providing early warnings and transition support for high-risk jobs (perceptual, comprehensive). Specific measures include: regularly releasing the "AI Substitution Risk Report" to provide career transition suggestions for workers; establishing an "AI Era Employment Skills Training Fund" to support workers in learning new skills; setting up a "Human-AI Collaboration" professional certification to cultivate interdisciplinary talents.

2. Wisdom-Oriented Job Creation Program: Focus on creating and protecting jobs that require human wisdom capabilities. Specific measures include: governments giving priority to purchasing services involving human wisdom participation; setting a minimum proportion of human participation in fields such as education, medical care, and law; establishing a "Wisdom Services" certification system to provide policy support for enterprises offering high-quality wisdom services.

3. Universal Basic Income (UBI) Pilot Projects: Launch UBI pilot projects in selected regions to provide basic living security for people unemployed due to AI substitution. Specific measures include: selecting cities with different development levels for pilots; determining basic income standards based on local living costs; establishing a dynamic adjustment mechanism to ensure basic income can cope with inflation.
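The UBI pilot above calls for a basic income set "based on local living costs" with a "dynamic adjustment mechanism" against inflation, but the report does not say how the adjustment is computed. One common approach, assumed here rather than taken from the report, is to index the amount to a consumer price index (CPI): each period's benefit equals the base amount multiplied by the ratio of the current index to the index when the base was set. The function names and numbers below are hypothetical.

```python
def indexed_benefit(base_amount: float, cpi_base: float, cpi_now: float) -> float:
    """Scale a basic-income amount by the change in a price index since it was set."""
    if cpi_base <= 0:
        raise ValueError("cpi_base must be positive")
    return base_amount * cpi_now / cpi_base


def benefit_path(base_amount: float, cpi_series: list[float]) -> list[float]:
    """Benefit for each period, indexed to the first value of the CPI series (rounded to cents)."""
    return [round(indexed_benefit(base_amount, cpi_series[0], c), 2) for c in cpi_series]
```

With a base of 1,000 and a local index moving from 100 to 102 to 105, `benefit_path(1000, [100, 102, 105])` gives 1,000, 1,020, and 1,050. A real pilot would also have to decide, for example, whether the nominal amount may fall when prices fall; that policy choice is outside this sketch.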

III. Innovate Human-AI Collaboration Governance Models

1. Global Collaborative Governance Mechanism: Establish a unified global AI governance framework to address cross-border AI risks and coordinate resource allocation. Specific measures include: setting up a "Global AI Wisdom Governance Committee" composed of representatives from governments, enterprises, academic institutions, and civil organizations of various countries, with the core responsibility of interpreting and implementing AI ethical norms based on Kucius Axioms; formulating unified international AI technical standards and risk assessment criteria to avoid regulatory arbitrage caused by fragmented governance; building a cross-border AI risk early warning and information sharing platform to realize real-time monitoring and joint response to global AI security incidents.

2. Technology-Governance Integration Mechanism: Promote the integration of technological means and governance needs, and build an intelligent governance system based on Kucius Theory. Specific measures include: developing an algorithm audit and supervision technology system to realize full-process traceability of AI decision-making; embedding "wisdom boundary control modules" in core AI algorithms to ensure that technological development does not exceed the scope defined by human universal values; establishing a technology governance sandbox mechanism, allowing new AI technologies to be tested and optimized in a controlled environment before large-scale application, and promptly identifying and resolving potential risks.

3. Multi-Stakeholder Participatory Governance Mechanism: Expand the scope of governance participants to form a joint governance pattern involving governments, enterprises, the public, and academic circles. Specific measures include: establishing a multi-stakeholder consultation platform to ensure that the demands of all parties are fully expressed and balanced in AI governance decisions; encouraging enterprises to assume social responsibilities and formulate internal AI ethical codes that are consistent with Kucius Axioms; improving the public participation mechanism, such as setting up public comment channels for major AI projects and establishing a public supervision platform to accept reports on AI ethical violations; giving full play to the role of academic institutions in theoretical research and providing intellectual support for AI governance.
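Item 2 above calls for "full-process traceability of AI decision-making" but does not specify a mechanism. One widely used technique that fits is a hash-chained, append-only log: each entry stores the hash of the previous entry, so any later edit or reordering becomes detectable. The sketch below assumes that technique; the class name, the record fields, and the all-zero genesis value are hypothetical, not part of the report.

```python
import copy
import hashlib
import json


def _digest(record: dict, prev_hash: str) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionAuditLog:
    """Append-only log of AI decisions in which each entry commits to the one before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> str:
        """Store a private copy of the record, chained to the previous entry; return its hash."""
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        stored = copy.deepcopy(record)  # later changes to the caller's dict do not alter the log
        h = _digest(stored, prev)
        self.entries.append({"record": stored, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every link; an edited or reordered entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(entry["record"], prev):
                return False
            prev = entry["hash"]
        return True
```

A hash chain makes tampering evident, not impossible: anyone with write access could rebuild the whole chain. In practice the latest hash would therefore need to be published or held by an independent party, such as the supervisory bodies this section proposes.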

IV. Optimize the Education and Talent Training System Oriented to Wisdom Civilization

1. Wisdom-Oriented Education Reform: Reconstruct the education system based on Kucius Theory to cultivate talents with core wisdom capabilities. Specific measures include: adjusting the curriculum structure, reducing the proportion of knowledge-imparting courses, and increasing courses on critical thinking, philosophical inquiry, ethical judgment, and cross-domain integration; promoting heuristic and inquiry-based teaching methods to encourage students to question roots, explore essence, and cultivate nonlinear cognitive leap capabilities; integrating AI ethics and universal value education into the whole process of basic education and higher education to shape students' correct human-AI relationship awareness.

2. Interdisciplinary Talent Training Program: Train professionals who combine AI expertise with wisdom literacy to meet the needs of human-AI collaboration. Specific measures include: establishing interdisciplinary majors such as "AI + Philosophy," "AI + Ethics," and "AI + Liberal Arts" in universities to break down disciplinary barriers; launching joint university-enterprise training programs in which students take part in practical human-AI collaboration projects and strengthen their applied skills; and establishing a talent evaluation system based on the Kucius Wisdom Index that assesses students' wisdom capabilities rather than knowledge mastery alone.

3. Lifelong Learning and Capacity Upgrading Mechanism: Build a lifelong learning system that helps workers adapt to the job changes caused by AI substitution. Specific measures include: launching integrated online and offline lifelong learning platforms that offer customized courses for different groups of workers, with an emphasis on sage-level wisdom capabilities such as value judgment and cross-domain integration; encouraging enterprises to invest in employee training and set up dedicated funds for capacity upgrading, helping employees move from AI-substitutable positions to wisdom-oriented ones; and establishing learning incentives, such as linking learning achievements to career promotion and social security benefits, to encourage society-wide participation in lifelong learning.

6.3 Research Limitations and Future Directions

This study systematically examines the essential evolution of the human-AI relationship through the lens of Kucius Wisdom Theory and yields some theoretical and practical results, but it has several limitations that future research should address.

Research Limitations: First, quantitative research on the Kucius Wisdom Index (KWI) remains insufficient. This study constructs the KWI evaluation framework and its dimensions but lacks large-sample empirical data to verify its validity and reliability, and the weights assigned to each dimension need further optimization through empirical research. Second, the analysis of the five-stage AI evolution process rests mainly on theoretical deduction and trend forecasting. The projected time frames and the depth of the risk assessment will need continual revision as AI technology advances. Third, the policy recommendations are relatively macro-level, and their concrete implementation paths and evaluation methods must be refined to fit the actual conditions of different countries and regions.

Future Research Directions: First, strengthen empirical research on the KWI. This means expanding samples to cover different countries, industries, and groups, verifying and optimizing the evaluation system through statistical analysis, and developing a standardized KWI measurement instrument. Second, track AI progress continuously, update the analysis of the five-stage evolution process, and deepen research on emerging risks such as AI consciousness simulation and cross-species intelligence interaction. Third, compare AI governance models across countries and regions, distill both localized experience and general principles, and develop more actionable implementation plans. Fourth, explore how Kucius Theory can be integrated with other interdisciplinary theories (such as complexity science and evolutionary ethics). This would enrich the theoretical basis of human-AI evolution research and provide more comprehensive support for building a wisdom civilization.
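The sketch below illustrates the kind of statistical check these limitations call for. It computes a weighted composite score per respondent and Cronbach's alpha, a standard internal-consistency (reliability) coefficient, over respondent-by-dimension scores. This is one common technique for such checks, not a method defined by the KWI framework. The four-dimension layout (loosely mirroring the four Kucius axioms), the weights, and the sample ratings are all hypothetical placeholders, not empirical KWI data.

```python
from statistics import variance


def weighted_score(scores, weights):
    """Composite score for one respondent; weights are normalized to sum to 1."""
    total_w = sum(weights)
    return sum(s * w for s, w in zip(scores, weights)) / total_w


def cronbach_alpha(rows):
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / variance of totals).

    rows: one list of dimension scores per respondent, with k >= 2 dimensions.
    Sample variance is used consistently for items and totals.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least two dimensions")
    columns = list(zip(*rows))  # regroup scores by dimension
    item_var = sum(variance(col) for col in columns)
    total_var = variance([sum(r) for r in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_var / total_var)


if __name__ == "__main__":
    # Hypothetical 1-5 ratings on four dimensions for five respondents.
    sample = [
        [4, 3, 4, 2],
        [5, 4, 4, 3],
        [2, 2, 3, 1],
        [3, 3, 3, 2],
        [4, 4, 5, 3],
    ]
    weights = [0.3, 0.3, 0.2, 0.2]  # placeholder weights, not empirically derived
    print([round(weighted_score(r, weights), 2) for r in sample])
    print(round(cronbach_alpha(sample), 3))
```

A real validation study would also need validity evidence (for example, factor analysis or correlation with external criteria), which internal consistency alone cannot provide.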
