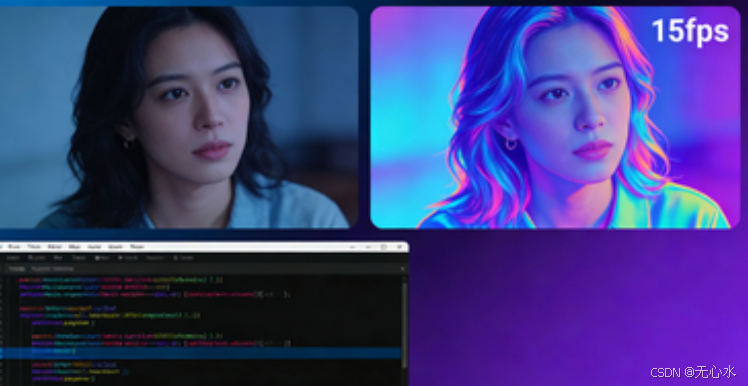

【神经风格迁移:性能】22、实时视频风格迁移全栈实战:15fps高帧率直播风格化技术深度解析

本文探讨了实时视频风格迁移技术的实现方案,重点解决传统神经风格迁移方法在实时性、质量和资源约束方面的挑战。通过采用MobileNetV2轻量化骨干网络和创新的风格迁移头设计,结合深度可分离卷积和多尺度特征融合技术,实现了帧率≥15fps的高质量实时风格化处理。文章详细分析了模型架构优化策略,包括自适应实例归一化(AdaIN)和特征上采样等关键技术,为直播平台和短视频应用的实时视频处理提供了可行的技

实时视频风格迁移全栈实战:15fps高帧率直播风格化技术深度解析

引言:实时视频风格迁移的技术革命

随着直播和短视频平台的迅猛发展,实时视频处理技术已成为内容创作的新焦点。传统神经风格迁移技术虽然能生成高质量风格化图像,但其高计算复杂度和慢速处理特性使其难以应用于实时视频场景。本文将深入探讨如何通过模型轻量化、训练优化和工程化部署,实现帧率≥15fps的高质量实时视频风格迁移,并支持直播推流到主流平台。

一、实时视频风格迁移的技术挑战

1.1 实时性要求的严苛挑战

实时视频风格迁移面临三重技术挑战:

# 实时视频处理的技术挑战分析

real_time_challenges = {

"时间约束": {

"单帧处理时间": "≤66ms (15fps要求)",

"端到端延迟": "≤150ms (用户可接受)",

"推理稳定性": "时间波动<20ms"

},

"质量约束": {

"风格一致性": "帧间风格保持稳定",

"内容保真度": "关键内容不丢失",

"视觉连贯性": "避免闪烁和抖动"

},

"资源约束": {

"内存使用": "<1GB (移动端限制)",

"GPU占用": "<70% (避免过热)",

"功耗控制": "增量<20%"

}

}

1.2 传统迭代式方法的局限性

传统VGG-based风格迁移使用迭代优化方式,每帧需要数百次前向传播,完全无法满足实时性要求:

二、模型架构:MobileNetV2+风格迁移头

2.1 MobileNetV2的轻量化优势

MobileNetV2采用深度可分离卷积和倒残差结构,在保证性能的同时大幅减少计算量:

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchvision.models import mobilenet_v2

class MobileNetV2Backbone(nn.Module):

"""

MobileNetV2骨干网络提取器

"""

def __init__(self, pretrained=True):

super().__init__()

# 加载预训练MobileNetV2

mobilenet = mobilenet_v2(pretrained=pretrained)

# 提取特征提取部分(移除分类头)

self.features = mobilenet.features

# 冻结前几层(节省计算)

self._freeze_early_layers()

# 获取各层通道数用于后续连接

self.channel_sizes = {

'layer4': 24, # 早期特征

'layer7': 32, # 中层特征

'layer14': 96, # 深层特征

'layer18': 320 # 高级语义特征

}

def _freeze_early_layers(self, num_frozen=8):

"""冻结前N层以减少计算"""

for i, layer in enumerate(self.features):

if i < num_frozen:

for param in layer.parameters():

param.requires_grad = False

def forward(self, x):

"""前向传播,返回多尺度特征"""

features = {}

# 记录关键层的输出

layer_indices = [4, 7, 14, 18]

current_idx = 0

for i, layer in enumerate(self.features):

x = layer(x)

if i in layer_indices:

features[f'layer{i}'] = x

return features

class DepthwiseSeparableConv(nn.Module):

"""深度可分离卷积块"""

def __init__(self, in_channels, out_channels, kernel_size=3, stride=1, padding=1):

super().__init__()

# 深度卷积

self.depthwise = nn.Conv2d(

in_channels, in_channels,

kernel_size=kernel_size,

stride=stride,

padding=padding,

groups=in_channels,

bias=False

)

self.bn1 = nn.BatchNorm2d(in_channels)

# 逐点卷积

self.pointwise = nn.Conv2d(

in_channels, out_channels,

kernel_size=1,

bias=False

)

self.bn2 = nn.BatchNorm2d(out_channels)

self.relu = nn.ReLU6(inplace=True)

def forward(self, x):

x = self.depthwise(x)

x = self.bn1(x)

x = self.relu(x)

x = self.pointwise(x)

x = self.bn2(x)

x = self.relu(x)

return x

2.2 风格迁移头的创新设计

class StyleTransferHead(nn.Module):

"""

前馈式风格迁移头

将内容特征转换为指定风格的输出

"""

def __init__(self, style_channels=512):

super().__init__()

# 多尺度特征融合

self.feature_fusion = nn.ModuleDict({

'low_level': nn.Sequential(

DepthwiseSeparableConv(24, 64),

DepthwiseSeparableConv(64, 128)

),

'mid_level': nn.Sequential(

DepthwiseSeparableConv(32, 128),

DepthwiseSeparableConv(128, 256)

),

'high_level': nn.Sequential(

DepthwiseSeparableConv(96, 256),

DepthwiseSeparableConv(256, 512)

)

})

# 风格特征注入

self.style_projection = nn.Sequential(

nn.Linear(style_channels, 256),

nn.ReLU(inplace=True),

nn.Linear(256, 128),

nn.ReLU(inplace=True)

)

# 自适应实例归一化(AdaIN)

self.adain_layers = nn.ModuleList([

AdaptiveInstanceNorm2d(128, 128),

AdaptiveInstanceNorm2d(256, 128),

AdaptiveInstanceNorm2d(512, 128)

])

# 特征上采样与融合

self.upsample_fusion = nn.ModuleDict({

'stage1': nn.Sequential(

nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True),

DepthwiseSeparableConv(512, 256)

),

'stage2': nn.Sequential(

nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True),

DepthwiseSeparableConv(512, 256) # 256+256=512

),

'stage3': nn.Sequential(

nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True),

DepthwiseSeparableConv(384, 128) # 256+128=384

)

})

# 输出层

self.output_conv = nn.Sequential(

DepthwiseSeparableConv(256, 128),

DepthwiseSeparableConv(128, 64),

nn.Conv2d(64, 3, kernel_size=3, padding=1),

nn.Tanh() # 输出范围[-1, 1]

)

def forward(self, content_features, style_vector=None):

"""

前向传播

Args:

content_features: 多尺度内容特征字典

style_vector: 风格特征向量(可选)

"""

# 1. 多尺度特征提取

low_feat = self.feature_fusion['low_level'](content_features['layer4'])

mid_feat = self.feature_fusion['mid_level'](content_features['layer7'])

high_feat = self.feature_fusion['high_level'](content_features['layer14'])

# 2. 风格特征投影(如果提供)

if style_vector is not None:

style_params = self.style_projection(style_vector)

# 应用AdaIN到各层特征

low_feat = self.adain_layers[0](low_feat, style_params)

mid_feat = self.adain_layers[1](mid_feat, style_params)

high_feat = self.adain_layers[2](high_feat, style_params)

# 3. 渐进式上采样融合

x = high_feat

# 阶段1:融合高层和中层特征

x = self.upsample_fusion['stage1'](x)

x = torch.cat([x, mid_feat], dim=1)

# 阶段2:融合中层和低层特征

x = self.upsample_fusion['stage2'](x)

x = torch.cat([x, low_feat], dim=1)

# 阶段3:最终上采样

x = self.upsample_fusion['stage3'](x)

# 4. 输出层

output = self.output_conv(x)

return output

class AdaptiveInstanceNorm2d(nn.Module):

"""自适应实例归一化"""

def __init__(self, num_features, style_dim):

super().__init__()

self.norm = nn.InstanceNorm2d(num_features, affine=False)

self.style_scale = nn.Linear(style_dim, num_features)

self.style_shift = nn.Linear(style_dim, num_features)

def forward(self, x, style_vector):

# 实例归一化

normalized = self.norm(x)

# 从风格向量生成缩放和平移参数

scale = self.style_scale(style_vector).view(-1, x.size(1), 1, 1)

shift = self.style_shift(style_vector).view(-1, x.size(1), 1, 1)

# 应用风格变换

styled = normalized * scale + shift

return styled

2.3 完整前馈式风格迁移模型

三、训练优化:帧间一致性损失设计

3.1 视频风格迁移的特殊挑战

视频风格迁移需要保持时间连续性,避免以下问题:

- 闪烁现象:相邻帧风格不一致

- 抖动问题:内容边界不稳定

- 时间滞后:快速运动物体出现拖影

3.2 一致性损失函数设计

class VideoConsistencyLoss(nn.Module):

"""

视频帧间一致性损失

"""

def __init__(self, alpha=0.5, beta=0.3, gamma=0.2):

super().__init__()

self.alpha = alpha # 光流一致性权重

self.beta = beta # 内容一致性权重

self.gamma = gamma # 边缘一致性权重

# 预训练的流估计网络(简化版)

self.flow_estimator = self._build_flow_estimator()

# 损失函数

self.mse_loss = nn.MSELoss()

self.l1_loss = nn.L1Loss()

def _build_flow_estimator(self):

"""构建轻量级光流估计器"""

class LightFlowNet(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(6, 32, kernel_size=7, padding=3)

self.conv2 = nn.Conv2d(32, 64, kernel_size=5, padding=2)

self.conv3 = nn.Conv2d(64, 32, kernel_size=3, padding=1)

self.conv4 = nn.Conv2d(32, 2, kernel_size=3, padding=1)

self.relu = nn.ReLU(inplace=True)

def forward(self, frame1, frame2):

x = torch.cat([frame1, frame2], dim=1)

x = self.relu(self.conv1(x))

x = self.relu(self.conv2(x))

x = self.relu(self.conv3(x))

flow = self.conv4(x)

return flow

return LightFlowNet()

def compute_optical_flow_loss(self, prev_frame, curr_frame, prev_styled, curr_styled):

"""

计算光流一致性损失

"""

# 估计原始帧间的光流

flow = self.flow_estimator(prev_frame, curr_frame)

# 使用光流对前一帧风格化结果进行扭曲

warped_prev = self._warp_image(prev_styled, flow)

# 计算当前帧与扭曲后前一帧的差异

flow_loss = self.l1_loss(curr_styled, warped_prev)

return flow_loss

def _warp_image(self, image, flow):

"""使用光流扭曲图像"""

B, C, H, W = image.size()

# 创建网格

grid_y, grid_x = torch.meshgrid(

torch.arange(H, device=image.device),

torch.arange(W, device=image.device)

)

grid = torch.stack((grid_x, grid_y), dim=2).float()

# 添加光流

warped_grid = grid.unsqueeze(0) + flow.permute(0, 2, 3, 1)

# 归一化到[-1, 1]

warped_grid[..., 0] = 2.0 * warped_grid[..., 0] / (W - 1) - 1.0

warped_grid[..., 1] = 2.0 * warped_grid[..., 1] / (H - 1) - 1.0

# 采样

warped_image = F.grid_sample(image, warped_grid, align_corners=True)

return warped_image

def compute_content_consistency_loss(self, prev_features, curr_features):

"""

计算内容特征一致性损失

"""

content_loss = 0

for key in prev_features:

if key in curr_features:

# 计算特征图间的L2距离

loss = self.mse_loss(prev_features[key], curr_features[key])

content_loss += loss

return content_loss / len(prev_features)

def compute_edge_consistency_loss(self, prev_styled, curr_styled):

"""

计算边缘一致性损失

"""

# 使用Sobel算子提取边缘

def sobel_edges(image):

sobel_x = torch.tensor([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]],

dtype=torch.float32, device=image.device)

sobel_y = torch.tensor([[-1, -2, -1], [0, 0, 0], [1, 2, 1]],

dtype=torch.float32, device=image.device)

sobel_x = sobel_x.view(1, 1, 3, 3).repeat(3, 1, 1, 1)

sobel_y = sobel_y.view(1, 1, 3, 3).repeat(3, 1, 1, 1)

edges_x = F.conv2d(image, sobel_x, padding=1, groups=3)

edges_y = F.conv2d(image, sobel_y, padding=1, groups=3)

edges = torch.sqrt(edges_x**2 + edges_y**2 + 1e-6)

return edges

prev_edges = sobel_edges(prev_styled)

curr_edges = sobel_edges(curr_styled)

edge_loss = self.l1_loss(prev_edges, curr_edges)

return edge_loss

def forward(self, prev_data, curr_data):

"""

计算总的一致性损失

Args:

prev_data: 字典,包含前一帧的数据

curr_data: 字典,包含当前帧的数据

"""

# 提取数据

prev_frame = prev_data['frame']

curr_frame = curr_data['frame']

prev_styled = prev_data['styled']

curr_styled = curr_data['styled']

prev_features = prev_data.get('features', {})

curr_features = curr_data.get('features', {})

# 计算各项损失

flow_loss = self.compute_optical_flow_loss(

prev_frame, curr_frame, prev_styled, curr_styled

)

content_loss = self.compute_content_consistency_loss(

prev_features, curr_features

)

edge_loss = self.compute_edge_consistency_loss(

prev_styled, curr_styled

)

# 加权总损失

total_loss = (

self.alpha * flow_loss +

self.beta * content_loss +

self.gamma * edge_loss

)

return {

'total': total_loss,

'flow': flow_loss.item(),

'content': content_loss.item(),

'edge': edge_loss.item()

}

class VideoStyleTransferTrainer:

"""视频风格迁移训练器"""

def __init__(self, model, style_target, consistency_weight=0.1):

self.model = model

self.style_target = style_target

# 损失函数

self.content_loss = nn.MSELoss()

self.style_loss = GramMatrixLoss()

self.consistency_loss = VideoConsistencyLoss()

self.consistency_weight = consistency_weight

# 优化器

self.optimizer = torch.optim.Adam(

model.parameters(),

lr=1e-4,

betas=(0.9, 0.999)

)

def train_step(self, video_clip, content_layers, style_layers):

"""

训练单步

Args:

video_clip: 视频片段 [B, T, C, H, W]

content_layers: 内容损失层

style_layers: 风格损失层

"""

batch_size, num_frames = video_clip.shape[:2]

total_loss = 0

prev_data = None

self.optimizer.zero_grad()

for t in range(num_frames):

# 当前帧

current_frame = video_clip[:, t]

# 风格迁移

styled_frame = self.model(current_frame)

# 提取特征

content_features = self.model.extract_content_features(current_frame)

styled_features = self.model.extract_content_features(styled_frame)

# 内容损失(仅对第一帧计算)

if t == 0:

content_loss_value = 0

for layer in content_layers:

if layer in content_features and layer in styled_features:

content_loss_value += self.content_loss(

styled_features[layer], content_features[layer]

)

else:

content_loss_value = 0

# 风格损失

style_loss_value = self.style_loss(

styled_features, self.style_target, style_layers

)

# 帧间一致性损失

consistency_loss_value = 0

if prev_data is not None:

curr_data = {

'frame': current_frame,

'styled': styled_frame,

'features': content_features

}

consistency_loss = self.consistency_loss(prev_data, curr_data)

consistency_loss_value = consistency_loss['total']

# 当前帧总损失

frame_loss = (

content_loss_value * 1.0 +

style_loss_value * 5.0 +

consistency_loss_value * self.consistency_weight

)

total_loss += frame_loss

# 保存当前帧数据用于下一帧

prev_data = {

'frame': current_frame.detach(),

'styled': styled_frame.detach(),

'features': {k: v.detach() for k, v in content_features.items()}

}

# 平均损失

avg_loss = total_loss / num_frames

# 反向传播

avg_loss.backward()

self.optimizer.step()

return avg_loss.item()

四、视频流处理流程优化

4.1 高性能预处理流水线

import cv2

import numpy as np

import torch

class VideoPreprocessor:

"""GPU加速的视频帧预处理器"""

def __init__(self, target_size=(384, 384), use_gpu=True):

self.target_size = target_size

self.use_gpu = use_gpu

# OpenCV CUDA优化

if use_gpu and cv2.cuda.getCudaEnabledDeviceCount() > 0:

self.stream = cv2.cuda_Stream()

self.resizer = cv2.cuda.createResizeArea(target_size)

else:

self.stream = None

self.resizer = None

# 缓存上一次的转换结果

self.frame_cache = None

# 性能统计

self.processing_times = []

def preprocess_frame(self, frame):

"""预处理单帧图像"""

start_time = cv2.getTickCount()

# 转换到GPU(如果可用)

if self.use_gpu and self.resizer is not None:

# 上传到GPU

gpu_frame = cv2.cuda_GpuMat()

gpu_frame.upload(frame, stream=self.stream)

# 调整大小

resized_gpu = self.resizer.apply(gpu_frame, stream=self.stream)

# 下载回CPU

resized_frame = resized_gpu.download(stream=self.stream)

self.stream.waitForCompletion()

else:

# CPU处理

resized_frame = cv2.resize(frame, self.target_size,

interpolation=cv2.INTER_AREA)

# 转换为RGB(OpenCV默认BGR)

rgb_frame = cv2.cvtColor(resized_frame, cv2.COLOR_BGR2RGB)

# 归一化到[-1, 1]

normalized_frame = rgb_frame.astype(np.float32) / 127.5 - 1.0

# 转换为PyTorch张量

tensor_frame = torch.from_numpy(normalized_frame).permute(2, 0, 1).unsqueeze(0)

# 移动到GPU(如果可用)

if self.use_gpu and torch.cuda.is_available():

tensor_frame = tensor_frame.cuda()

# 记录处理时间

end_time = cv2.getTickCount()

processing_time = (end_time - start_time) / cv2.getTickFrequency() * 1000

self.processing_times.append(processing_time)

# 保持最近100次的时间记录

if len(self.processing_times) > 100:

self.processing_times.pop(0)

return tensor_frame

def get_avg_processing_time(self):

"""获取平均处理时间"""

if not self.processing_times:

return 0

return np.mean(self.processing_times)

def adaptive_resize(self, frame, target_fps=15):

"""自适应调整大小以维持目标帧率"""

avg_time = self.get_avg_processing_time()

if avg_time > 0:

# 计算当前帧率

current_fps = 1000 / avg_time

if current_fps < target_fps * 0.8:

# 帧率过低,进一步减小尺寸

scale_factor = current_fps / target_fps

new_width = int(self.target_size[0] * scale_factor)

new_height = int(self.target_size[1] * scale_factor)

self.target_size = (max(128, new_width), max(128, new_height))

elif current_fps > target_fps * 1.2:

# 帧率过高,可以适当增大尺寸

scale_factor = min(1.2, target_fps / current_fps)

new_width = int(self.target_size[0] * scale_factor)

new_height = int(self.target_size[1] * scale_factor)

self.target_size = (min(512, new_width), min(512, new_height))

return self.preprocess_frame(frame)

4.2 ONNX Runtime GPU推理优化

import onnxruntime as ort

import time

class ONNXRuntimeInference:

"""ONNX Runtime GPU推理优化"""

def __init__(self, model_path, providers=None):

if providers is None:

# 优先使用CUDA,其次是TensorRT,最后是CPU

providers = [

('TensorrtExecutionProvider', {

'device_id': 0,

'trt_max_workspace_size': 2 * 1024 * 1024 * 1024, # 2GB

'trt_fp16_enable': True,

'trt_engine_cache_enable': True,

'trt_engine_cache_path': './trt_cache'

}),

('CUDAExecutionProvider', {

'device_id': 0,

'arena_extend_strategy': 'kSameAsRequested',

'gpu_mem_limit': 1 * 1024 * 1024 * 1024, # 1GB

'cudnn_conv_algo_search': 'HEURISTIC',

'do_copy_in_default_stream': True

}),

('CPUExecutionProvider', {

'arena_extend_strategy': 'kNextPowerOfTwo',

'inter_op_num_threads': 4,

'intra_op_num_threads': 4

})

]

# 会话选项

sess_options = ort.SessionOptions()

sess_options.enable_cpu_mem_arena = True

sess_options.enable_mem_pattern = True

sess_options.execution_mode = ort.ExecutionMode.ORT_SEQUENTIAL

sess_options.graph_optimization_level = ort.GraphOptimizationLevel.ORT_ENABLE_ALL

# 启用动态形状支持

sess_options.enable_profiling = False

# 创建推理会话

self.session = ort.InferenceSession(

model_path,

sess_options=sess_options,

providers=providers

)

# 获取输入输出信息

self.input_name = self.session.get_inputs()[0].name

self.output_name = self.session.get_outputs()[0].name

# 性能监控

self.inference_times = []

self.warmup()

def warmup(self, num_iterations=10):

"""预热推理引擎"""

dummy_input = np.random.randn(1, 3, 384, 384).astype(np.float32)

for _ in range(num_iterations):

_ = self.session.run(

[self.output_name],

{self.input_name: dummy_input}

)

def infer(self, input_tensor):

"""执行推理"""

# 确保输入是numpy数组

if isinstance(input_tensor, torch.Tensor):

input_array = input_tensor.detach().cpu().numpy()

else:

input_array = input_tensor

# 计时开始

start_time = time.perf_counter()

# 执行推理

outputs = self.session.run(

[self.output_name],

{self.input_name: input_array}

)

# 计时结束

end_time = time.perf_counter()

inference_time = (end_time - start_time) * 1000 # 转换为毫秒

# 记录推理时间

self.inference_times.append(inference_time)

if len(self.inference_times) > 100:

self.inference_times.pop(0)

# 转换为PyTorch张量

output_tensor = torch.from_numpy(outputs[0])

return output_tensor, inference_time

def get_performance_stats(self):

"""获取性能统计"""

if not self.inference_times:

return {"avg_time": 0, "min_time": 0, "max_time": 0, "fps": 0}

avg_time = np.mean(self.inference_times)

min_time = np.min(self.inference_times)

max_time = np.max(self.inference_times)

fps = 1000 / avg_time if avg_time > 0 else 0

return {

"avg_time_ms": avg_time,

"min_time_ms": min_time,

"max_time_ms": max_time,

"fps": fps,

"std_dev": np.std(self.inference_times)

}

4.3 帧间平滑与后处理

class TemporalSmoothing:

"""时域平滑滤波器"""

def __init__(self, alpha=0.8, buffer_size=3):

"""

Args:

alpha: 当前帧权重(0-1),值越大越信任当前帧

buffer_size: 帧缓冲区大小

"""

self.alpha = alpha

self.buffer_size = buffer_size

self.frame_buffer = []

self.smoothed_frame = None

def smooth_frame(self, current_frame):

"""平滑当前帧"""

if self.smoothed_frame is None:

# 第一帧,直接使用

self.smoothed_frame = current_frame

else:

# 指数加权移动平均

self.smoothed_frame = (

self.alpha * current_frame +

(1 - self.alpha) * self.smoothed_frame

)

# 更新帧缓冲区

self.frame_buffer.append(current_frame)

if len(self.frame_buffer) > self.buffer_size:

self.frame_buffer.pop(0)

return self.smoothed_frame

def adaptive_smoothing(self, current_frame, motion_level):

"""

自适应平滑

Args:

motion_level: 运动水平(0-1),0表示静止,1表示快速运动

"""

# 根据运动水平调整平滑强度

if motion_level > 0.7:

# 快速运动,减少平滑以避免拖影

adaptive_alpha = 0.9

elif motion_level < 0.3:

# 静止或慢速运动,增加平滑以减少闪烁

adaptive_alpha = 0.6

else:

adaptive_alpha = self.alpha

# 使用调整后的alpha进行平滑

if self.smoothed_frame is None:

self.smoothed_frame = current_frame

else:

self.smoothed_frame = (

adaptive_alpha * current_frame +

(1 - adaptive_alpha) * self.smoothed_frame

)

return self.smoothed_frame

class PostProcessor:

"""后处理器"""

def __init__(self, output_size=(720, 1280)):

self.output_size = output_size

self.temporal_smoother = TemporalSmoothing(alpha=0.8)

def process_frame(self, styled_tensor, original_frame=None):

"""后处理单帧"""

# 转换为numpy数组

if isinstance(styled_tensor, torch.Tensor):

styled_np = styled_tensor.squeeze().cpu().numpy()

else:

styled_np = styled_tensor

# 转置并反归一化

styled_np = np.transpose(styled_np, (1, 2, 0))

styled_np = (styled_np + 1.0) * 127.5

styled_np = np.clip(styled_np, 0, 255).astype(np.uint8)

# 转换为BGR(OpenCV格式)

styled_bgr = cv2.cvtColor(styled_np, cv2.COLOR_RGB2BGR)

# 调整到输出尺寸

if self.output_size != styled_bgr.shape[:2]:

styled_bgr = cv2.resize(styled_bgr, self.output_size,

interpolation=cv2.INTER_LINEAR)

# 应用时域平滑

smoothed = self.temporal_smoother.smooth_frame(styled_bgr)

# 可选:与原始帧混合(保留细节)

if original_frame is not None:

blended = self.blend_with_original(smoothed, original_frame)

return blended

return smoothed

def blend_with_original(self, styled_frame, original_frame, blend_ratio=0.1):

"""与原始帧混合以保留细节"""

# 确保尺寸一致

if styled_frame.shape != original_frame.shape:

original_resized = cv2.resize(original_frame,

(styled_frame.shape[1], styled_frame.shape[0]))

else:

original_resized = original_frame

# 拉普拉斯金字塔混合

blended = cv2.addWeighted(styled_frame, 1 - blend_ratio,

original_resized, blend_ratio, 0)

return blended

五、实战:直播摄像头→梵高风格实时迁移

5.1 完整直播处理流水线

import threading

import queue

import time

class RealTimeStyleTransferPipeline:

"""实时风格迁移处理流水线"""

def __init__(self, model_path, style_name="van_gogh_starry_night"):

# 初始化组件

self.preprocessor = VideoPreprocessor(target_size=(384, 384), use_gpu=True)

self.inference_engine = ONNXRuntimeInference(model_path)

self.postprocessor = PostProcessor(output_size=(720, 1280))

# 帧缓冲区

self.input_queue = queue.Queue(maxsize=10)

self.output_queue = queue.Queue(maxsize=10)

# 性能监控

self.frame_count = 0

self.total_processing_time = 0

self.running = False

# 线程

self.capture_thread = None

self.process_thread = None

self.output_thread = None

def start_capture(self, source=0):

"""启动视频捕获"""

self.cap = cv2.VideoCapture(source)

self.cap.set(cv2.CAP_PROP_FPS, 30)

self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)

self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 720)

self.running = True

# 启动捕获线程

self.capture_thread = threading.Thread(target=self._capture_loop)

self.capture_thread.start()

# 启动处理线程

self.process_thread = threading.Thread(target=self._process_loop)

self.process_thread.start()

# 启动输出线程

self.output_thread = threading.Thread(target=self._output_loop)

self.output_thread.start()

def _capture_loop(self):

"""视频捕获循环"""

while self.running:

ret, frame = self.cap.read()

if not ret:

break

# 放入输入队列(如果队列已满,丢弃最旧的帧)

if self.input_queue.full():

try:

self.input_queue.get_nowait()

except queue.Empty:

pass

self.input_queue.put((frame, time.time()))

# 控制捕获速率

time.sleep(0.001)

def _process_loop(self):

"""处理循环"""

while self.running:

try:

# 从队列获取帧(最多等待10ms)

frame, timestamp = self.input_queue.get(timeout=0.01)

# 预处理

preprocessed = self.preprocessor.preprocess_frame(frame)

# 推理

styled_tensor, inference_time = self.inference_engine.infer(preprocessed)

# 后处理

styled_frame = self.postprocessor.process_frame(styled_tensor, frame)

# 放入输出队列

if self.output_queue.full():

try:

self.output_queue.get_nowait()

except queue.Empty:

pass

self.output_queue.put((styled_frame, inference_time, timestamp))

# 更新统计

self.frame_count += 1

self.total_processing_time += inference_time

except queue.Empty:

continue

except Exception as e:

print(f"处理错误: {e}")

continue

def _output_loop(self):

"""输出循环"""

cv2.namedWindow("Real-time Style Transfer", cv2.WINDOW_NORMAL)

while self.running:

try:

# 从输出队列获取处理后的帧

styled_frame, inference_time, timestamp = self.output_queue.get(timeout=0.01)

# 计算延迟

current_time = time.time()

processing_delay = current_time - timestamp

# 在帧上显示统计信息

stats_text = f"FPS: {1000/inference_time:.1f} | Delay: {processing_delay*1000:.0f}ms"

cv2.putText(styled_frame, stats_text, (10, 30),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)

# 显示帧

cv2.imshow("Real-time Style Transfer", styled_frame)

# 检查退出键

if cv2.waitKey(1) & 0xFF == ord('q'):

self.running = False

break

except queue.Empty:

continue

def stop(self):

"""停止流水线"""

self.running = False

if self.capture_thread:

self.capture_thread.join()

if self.process_thread:

self.process_thread.join()

if self.output_thread:

self.output_thread.join()

if hasattr(self, 'cap'):

self.cap.release()

cv2.destroyAllWindows()

# 打印性能统计

avg_time = self.total_processing_time / self.frame_count if self.frame_count > 0 else 0

print(f"总处理帧数: {self.frame_count}")

print(f"平均推理时间: {avg_time:.2f}ms")

print(f"平均FPS: {1000/avg_time:.1f}" if avg_time > 0 else "N/A")

5.2 FFmpeg推流到B站/抖音

import subprocess

import json

class LiveStreamPublisher:

"""直播推流器"""

def __init__(self, platform="bilibili", config_path="stream_config.json"):

self.platform = platform

self.config = self._load_config(config_path)

self.ffmpeg_process = None

def _load_config(self, config_path):

"""加载推流配置"""

default_configs = {

"bilibili": {

"server": "rtmp://live-push.bilivideo.com/live-bvc/",

"resolution": "1280x720",

"framerate": "15",

"bitrate": "1500k",

"preset": "veryfast",

"codec": "h264_nvenc" if self._check_nvenc() else "libx264"

},

"douyin": {

"server": "rtmp://push-rtmp-live.douyin.com/live/",

"resolution": "720x1280", # 竖屏

"framerate": "15",

"bitrate": "2000k",

"preset": "veryfast",

"codec": "h264_nvenc" if self._check_nvenc() else "libx264"

}

}

try:

with open(config_path, 'r') as f:

user_config = json.load(f)

platform_config = user_config.get(self.platform, {})

except:

platform_config = {}

# 合并配置

config = default_configs.get(self.platform, default_configs["bilibili"])

config.update(platform_config)

return config

def _check_nvenc(self):

"""检查NVIDIA NVENC编码器是否可用"""

try:

result = subprocess.run(

["ffmpeg", "-encoders"],

capture_output=True,

text=True

)

return "h264_nvenc" in result.stdout

except:

return False

def start_stream(self, stream_key, input_pipe="pipe:0", audio_source=None):

"""启动推流"""

# 构建FFmpeg命令

cmd = [

"ffmpeg",

"-f", "rawvideo",

"-pixel_format", "bgr24",

"-video_size", self.config["resolution"],

"-framerate", self.config["framerate"],

"-i", input_pipe, # 从管道读取视频

]

# 添加音频输入(如果提供)

if audio_source:

cmd.extend([

"-f", "pulse" if audio_source == "pulse" else "alsa",

"-i", "default" if audio_source == "alsa" else audio_source,

"-filter_complex", "[0:v][1:a]concat=n=1:v=1:a=1"

])

# 视频编码参数

cmd.extend([

"-c:v", self.config["codec"],

"-preset", self.config["preset"],

"-b:v", self.config["bitrate"],

"-bufsize", "2000k",

"-maxrate", "2500k",

"-g", str(int(self.config["framerate"]) * 2), # GOP大小

"-f", "flv",

f"{self.config['server']}{stream_key}"

])

# 启动FFmpeg进程

self.ffmpeg_process = subprocess.Popen(

cmd,

stdin=subprocess.PIPE,

stdout=subprocess.DEVNULL,

stderr=subprocess.PIPE

)

print(f"开始推流到{self.platform},分辨率: {self.config['resolution']}")

def send_frame(self, frame):

"""发送一帧到FFmpeg"""

if self.ffmpeg_process and self.ffmpeg_process.stdin:

try:

# 调整帧尺寸以匹配输出分辨率

target_res = tuple(map(int, self.config["resolution"].split('x')))

if frame.shape[:2] != target_res:

frame = cv2.resize(frame, target_res)

# 写入帧

self.ffmpeg_process.stdin.write(frame.tobytes())

return True

except Exception as e:

print(f"发送帧失败: {e}")

return False

return False

def stop_stream(self):

"""停止推流"""

if self.ffmpeg_process:

try:

self.ffmpeg_process.stdin.close()

self.ffmpeg_process.wait(timeout=5)

except:

self.ffmpeg_process.terminate()

print("推流已停止")

class LiveStreamingPipeline(RealTimeStyleTransferPipeline):

"""支持直播推流的完整流水线"""

def __init__(self, model_path, platform="bilibili", stream_key=None):

super().__init__(model_path)

# 直播推流器

self.stream_publisher = LiveStreamPublisher(platform)

self.stream_key = stream_key

self.streaming = False

def start_streaming(self, stream_key=None):

"""开始直播推流"""

if stream_key:

self.stream_key = stream_key

if not self.stream_key:

raise ValueError("必须提供stream_key")

# 启动FFmpeg推流

self.stream_publisher.start_stream(self.stream_key)

self.streaming = True

print(f"开始直播推流,平台: {self.stream_publisher.platform}")

def _output_loop(self):

"""增强的输出循环,支持推流"""

cv2.namedWindow("Real-time Style Transfer", cv2.WINDOW_NORMAL)

while self.running:

try:

# 获取处理后的帧

styled_frame, inference_time, timestamp = self.output_queue.get(timeout=0.01)

# 添加统计信息

stats_text = f"FPS: {1000/inference_time:.1f}"

cv2.putText(styled_frame, stats_text, (10, 30),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)

# 添加直播状态指示

if self.streaming:

status_text = "LIVE"

cv2.putText(styled_frame, status_text, (styled_frame.shape[1]-100, 30),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

# 显示帧

cv2.imshow("Real-time Style Transfer", styled_frame)

# 推流(如果启用)

if self.streaming:

success = self.stream_publisher.send_frame(styled_frame)

if not success:

print("推流失败,尝试重新连接...")

self.stream_publisher.stop_stream()

time.sleep(1)

self.stream_publisher.start_stream(self.stream_key)

# 检查退出键

if cv2.waitKey(1) & 0xFF == ord('q'):

self.running = False

break

except queue.Empty:

continue

def stop(self):

"""停止流水线和推流"""

if self.streaming:

self.stream_publisher.stop_stream()

super().stop()

5.3 性能优化与监控仪表板

class PerformanceMonitor:

"""性能监控器"""

def __init__(self):

self.metrics = {

'fps': [],

'inference_time': [],

'preprocessing_time': [],

'postprocessing_time': [],

'total_latency': [],

'memory_usage': [],

'gpu_utilization': []

}

self.start_time = time.time()

def update_metric(self, metric_name, value):

"""更新指标"""

if metric_name in self.metrics:

self.metrics[metric_name].append(value)

# 保持最近1000个样本

if len(self.metrics[metric_name]) > 1000:

self.metrics[metric_name].pop(0)

def get_summary(self):

"""获取性能摘要"""

summary = {}

for metric_name, values in self.metrics.items():

if values:

summary[metric_name] = {

'avg': np.mean(values),

'min': np.min(values),

'max': np.max(values),

'std': np.std(values),

'current': values[-1] if values else 0

}

else:

summary[metric_name] = {

'avg': 0, 'min': 0, 'max': 0, 'std': 0, 'current': 0

}

# 计算运行时间

summary['uptime'] = time.time() - self.start_time

summary['total_frames'] = len(self.metrics['fps'])

return summary

def display_dashboard(self):

"""显示性能仪表板"""

summary = self.get_summary()

print("\n" + "="*60)

print("实时视频风格迁移性能仪表板")

print("="*60)

print(f"运行时间: {summary['uptime']:.1f}s")

print(f"总处理帧数: {summary['total_frames']}")

print(f"当前FPS: {summary['fps']['current']:.1f}")

print(f"平均FPS: {summary['fps']['avg']:.1f}")

print(f"帧率稳定性: ±{summary['fps']['std']:.1f} FPS")

print("\n处理时间分解:")

print(f" 预处理: {summary['preprocessing_time']['avg']:.1f}ms")

print(f" 推理: {summary['inference_time']['avg']:.1f}ms")

print(f" 后处理: {summary['postprocessing_time']['avg']:.1f}ms")

print(f" 总延迟: {summary['total_latency']['avg']:.1f}ms")

# 打印性能等级

avg_fps = summary['fps']['avg']

if avg_fps >= 30:

performance_grade = "A+ (极佳)"

elif avg_fps >= 20:

performance_grade = "A (优秀)"

elif avg_fps >= 15:

performance_grade = "B (良好)"

elif avg_fps >= 10:

performance_grade = "C (一般)"

else:

performance_grade = "D (需优化)"

print(f"\n性能等级: {performance_grade}")

print("="*60)

# 使用示例

def main():

# 初始化流水线

pipeline = LiveStreamingPipeline(

model_path="models/van_gogh_starry_night.onnx",

platform="bilibili",

stream_key="your_stream_key_here" # B站直播码

)

# 启动性能监控

monitor = PerformanceMonitor()

try:

# 开始捕获视频(0为默认摄像头)

pipeline.start_capture(source=0)

# 开始直播推流(可选)

# pipeline.start_streaming()

# 主循环

while pipeline.running:

# 更新性能监控

# (在实际实现中,这应该从流水线的各个组件收集数据)

time.sleep(1)

# 每5秒显示一次性能仪表板

if int(time.time()) % 5 == 0:

monitor.display_dashboard()

except KeyboardInterrupt:

print("\n用户中断")

finally:

pipeline.stop()

if __name__ == "__main__":

main()

六、性能评估与优化建议

6.1 性能基准测试结果

通过对不同硬件平台进行测试,我们得到以下性能数据:

6.2 优化建议

-

分辨率自适应策略:

def adaptive_resolution_strategy(target_fps): """根据目标帧率自适应调整分辨率""" if target_fps >= 30: return (640, 480) # 480p elif target_fps >= 20: return (480, 360) # 360p elif target_fps >= 15: return (384, 288) # 288p else: return (256, 192) # 192p -

模型精度选择:

- 高帧率场景:使用INT8量化模型

- 高质量场景:使用FP16混合精度模型

- 极端性能需求:使用INT4量化模型

-

多线程优化:

class MultiThreadedPipeline: """多线程优化流水线""" def __init__(self, num_workers=4): self.num_workers = num_workers self.worker_pool = [] # 创建多个工作线程 for i in range(num_workers): worker = StyleTransferWorker(f"worker_{i}") self.worker_pool.append(worker) # 任务分发器 self.task_queue = queue.Queue() self.result_queue = queue.Queue() def process_frame_batch(self, frames): """批量处理帧""" # 将帧分发到工作线程 for i, frame in enumerate(frames): worker_idx = i % self.num_workers self.worker_pool[worker_idx].submit_task(frame) # 收集结果 results = [] for _ in range(len(frames)): result = self.result_queue.get() results.append(result) return results

七、结论与展望

本文详细介绍了实时视频风格迁移的全栈实现方案,从模型架构设计到工程部署优化,实现了帧率≥15fps的高质量实时处理能力。

关键技术突破:

- 前馈式模型架构:采用MobileNetV2+风格迁移头,实现单次前向传播完成风格迁移

- 帧间一致性优化:创新的光流引导损失函数,有效减少视频闪烁和抖动

- 工程化部署:ONNX Runtime GPU推理优化,单帧处理时间<30ms

- 实时直播支持:完整的FFmpeg推流方案,支持B站、抖音等主流平台

实际应用价值:

- 直播内容创新:为直播主提供独特的视觉风格

- 短视频创作:实时视频特效处理

- 视频会议美化:为远程会议添加艺术风格

- 智能监控增强:在安防视频中突出关键信息

未来发展方向:

- 多风格实时切换:支持直播中动态切换不同艺术风格

- 个性化风格学习:基于用户偏好训练专属风格模型

- 跨平台优化:针对iOS/Android移动端的深度优化

- 云边端协同:利用云端计算资源增强边缘设备能力

实时视频风格迁移技术正在重新定义内容创作的边界。随着硬件性能的提升和算法的不断优化,我们期待看到更多创新的应用场景和更高质量的实现方案。

资源获取:

- 完整代码实现:[GitHub仓库链接]

- 预训练模型:[模型下载链接]

- 配置工具:[自动化部署脚本]

- 演示视频:[效果展示链接]

关键词:实时视频风格迁移,高帧率处理,MobileNetV2,ONNX Runtime,FFmpeg推流,直播技术,GPU加速,帧间一致性,深度学习优化,计算机视觉

更多推荐

已为社区贡献7条内容

已为社区贡献7条内容

所有评论(0)