Deploying Kubernetes on UOS Server 1070e

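This article records the process of deploying a Kubernetes cluster on UOS Server 1070e. The cluster consists of one control-plane node and one worker node, with containerd as the container runtime and flannel as the network plugin. The key steps are: disabling swap, installing the required packages, configuring hostnames and the hosts file, disabling the firewall and SELinux, importing the K8s images, initializing the master node and deploying the flannel network plugin, and finally joining the worker node. The author specifically points out that the containernetworking-plugins package must be installed; without it the CNI network plugin stays NotReady.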

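Today I'm verifying a Kubernetes deployment on UOS Server 1070e. As before, I'm using virtual machines. The cluster consists of one control-plane node and one worker node; the nodes use containerd as the container runtime, and the cluster uses flannel as the Pod network plugin.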

1. Environment preparation

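Do not create a swap partition. If the installed system already has one, run `swapoff -a` to turn it off temporarily (this does not survive a reboot).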
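`swapoff -a` only lasts until the next reboot, because the swap entry in `/etc/fstab` turns swap back on at boot. A minimal sketch for making the change permanent, assuming a standard whitespace-separated fstab in which the third field is the filesystem type. The function name `comment_out_swap` is hypothetical, and the file path is a parameter so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# Sketch: comment out active swap entries in an fstab-style file so swap stays off after reboot.
set -u

# comment_out_swap [FILE]  (hypothetical helper; FILE defaults to /etc/fstab)
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  local tmp
  tmp="$(mktemp)" || return 1
  # Field 3 of an fstab entry is the filesystem type: prefix "#" to uncommented swap lines,
  # and pass comments, blank lines and all other entries through unchanged.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"   # rewrite in place, keeping the original file's owner and mode
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

As root, `swapoff -a && comment_out_swap /etc/fstab` turns swap off now and keeps it off after a reboot; `swapon --show` should then print nothing.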

Enable the everything, Kubernetes and Preview repositories.

2. Deploy Kubernetes

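On all nodes, install the CRI container runtime, the CRI command-line tools, the CNI network plugins and Kubernetes (commands are run as root):

```shell
yum install -y containerd runc cri-tools containernetworking-plugins cni-plugin-flannel kubernetes-node kubernetes-client kubernetes-kubeadm
```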
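Because a missing CNI plugin binary leaves nodes NotReady, it can help to confirm the plugins are actually on disk before going further. A minimal sketch, assuming the binaries live in `/opt/cni/bin` or `/usr/libexec/cni` (check containerd's configured CNI `bin_dir` on your system); the function name `missing_cni_plugins` is hypothetical. The list covers `flannel` and `portmap`, which the flannel conflist in this article references, plus `bridge`, `host-local` and `loopback`, which flannel and containerd normally rely on:

```shell
#!/usr/bin/env bash
set -u

# missing_cni_plugins [DIR...]  (hypothetical helper)
# Prints the name of every required plugin that is not an executable file in any DIR.
missing_cni_plugins() {
  local dirs=("$@")
  if [ "${#dirs[@]}" -eq 0 ]; then
    dirs=(/opt/cni/bin /usr/libexec/cni)   # assumed default locations
  fi
  local plugin dir found
  for plugin in bridge host-local loopback portmap flannel; do
    found=0
    for dir in "${dirs[@]}"; do
      if [ -x "$dir/$plugin" ]; then
        found=1
        break
      fi
    done
    [ "$found" -eq 1 ] || echo "$plugin"
  done
}
```

Empty output means every plugin was found; any names printed are the ones still missing.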

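Set the hostname on the control-plane node:

```shell
hostnamectl set-hostname control
```

Set the hostname on the worker node:

```shell
hostnamectl set-hostname node1
```

Add both nodes to `/etc/hosts` on every node:

```shell
cat >> /etc/hosts << EOF
192.168.15.58 control
192.168.15.59 node1
EOF
```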
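Running the `cat >> /etc/hosts` block twice appends duplicate lines. An idempotent variant, as a sketch: `add_host_entry` is a hypothetical helper, and it treats a hostname as already present if any uncommented line in the file maps it:

```shell
#!/usr/bin/env bash
set -u

# add_host_entry IP NAME [FILE]  (hypothetical helper; FILE defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  # awk exits 0 when an uncommented line already lists NAME as a hostname or alias.
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$hosts"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts"
}
```

On each node, `add_host_entry 192.168.15.58 control` and `add_host_entry 192.168.15.59 node1` can be rerun safely.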

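Disable the firewall and SELinux; this must be done on all nodes:

```shell
systemctl disable --now firewalld
# Switch SELinux to permissive mode immediately
setenforce 0
# Disable SELinux permanently (takes effect after a reboot)
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
```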

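Enable IPv4 packet forwarding. A plain `sed` substitution only takes effect if `/etc/sysctl.conf` already contains a `net.ipv4.ip_forward=0` line, so the second command appends the setting when it is missing:

```shell
sed -i 's/^net.ipv4.ip_forward *= *0/net.ipv4.ip_forward=1/' /etc/sysctl.conf
grep -q '^net.ipv4.ip_forward *= *1' /etc/sysctl.conf || echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf
sysctl -p
```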
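Besides IP forwarding, the upstream Kubernetes container-runtime documentation commonly recommends loading the `overlay` and `br_netfilter` kernel modules and letting bridged traffic pass through iptables. A sketch that writes these settings as drop-in files instead of editing `/etc/sysctl.conf`, assuming the system reads `/etc/sysctl.d` and `/etc/modules-load.d` (systemd-based systems do); the function name and the `k8s.conf` file names are hypothetical choices:

```shell
#!/usr/bin/env bash
set -u

# write_k8s_net_config [SYSCTL_DIR] [MODULES_DIR]  (hypothetical helper)
write_k8s_net_config() {
  local sysctl_dir="${1:-/etc/sysctl.d}" modules_dir="${2:-/etc/modules-load.d}"
  mkdir -p "$sysctl_dir" "$modules_dir" || return 1
  # Modules loaded at boot: overlay for containerd's default overlayfs snapshotter,
  # br_netfilter so that bridged pod traffic is visible to iptables.
  printf '%s\n' overlay br_netfilter > "$modules_dir/k8s.conf"
  # Sysctls applied at every boot and by "sysctl --system".
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
}
```

As root: `write_k8s_net_config && modprobe overlay && modprobe br_netfilter && sysctl --system`. The modules are loaded before `sysctl --system` because the `net.bridge.*` keys only exist once `br_netfilter` is loaded.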

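Enable the containerd and kubelet services:

```shell
systemctl enable --now containerd
systemctl enable kubelet
```

kubelet is only enabled here, not started: kubeadm starts it during `kubeadm init` or `kubeadm join`, and before that it would just restart in a loop for lack of configuration.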

The container images can be pulled from UOS's registry.uniontech.com beforehand. Here the offline image tarballs are imported manually into containerd's `k8s.io` namespace, which is the namespace containerd's CRI plugin (and therefore the kubelet) uses:

```shell
ctr -n k8s.io images import /usr/share/k8s-container-images/kube-apiserver.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/kube-controller-manager.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/kube-scheduler.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/kube-proxy.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/coredns.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/pause.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/etcd.tar
ctr -n k8s.io images import /usr/share/k8s-container-images/flannel.tar
```
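The eight imports differ only in the file name, so they can be collapsed into a loop over the tarballs in the directory. A sketch, assuming every `*.tar` in `/usr/share/k8s-container-images` should be imported; `import_k8s_images` is a hypothetical name, and the `CTR` variable (default `ctr`) exists only so the loop can be dry-run with a stand-in command:

```shell
#!/usr/bin/env bash
set -u

# import_k8s_images [DIR]  (hypothetical helper)
import_k8s_images() {
  local dir="${1:-/usr/share/k8s-container-images}"
  local ctr="${CTR:-ctr}"
  local tars tar
  shopt -s nullglob          # an empty directory yields an empty list, not a literal "*.tar"
  tars=("$dir"/*.tar)
  shopt -u nullglob
  if [ "${#tars[@]}" -eq 0 ]; then
    echo "no image tarballs found in $dir" >&2
    return 1
  fi
  for tar in "${tars[@]}"; do
    echo "importing $tar"
    # Same command as above, into the namespace used by containerd's CRI plugin.
    "$ctr" -n k8s.io images import "$tar" || return 1
  done
}
```

After importing, `ctr -n k8s.io images ls` (or `crictl images`) should list the kube-*, coredns, etcd, pause and flannel images.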

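Once all images are imported, initialize the cluster. Worth noting: if registry.uniontech.com is not reachable, the upstream Kubernetes community images can be used instead; I tested this on x86 and it works.

```shell
kubeadm init --pod-network-cidr=192.168.15.0/24
```

The value of `--pod-network-cidr` must match `Network` in the `net-conf.json` section of kube-flannel.yml. Note that 192.168.15.0/24 is also the subnet of the nodes themselves (192.168.15.58 and 192.168.15.59), and with kube-controller-manager's default per-node mask of /24, a /24 cluster range leaves room for only one node's pod subnet. A separate, larger range such as flannel's default 10.244.0.0/16, set in both places, avoids both problems.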
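Whether the pod CIDR collides with the node network can be checked mechanically. A pure-bash sketch: two IPv4 CIDR blocks overlap exactly when their addresses agree on the bits of the shorter prefix. The helper names `ip_to_int` and `cidrs_overlap` are hypothetical, and inputs must be in `a.b.c.d/len` form (use `/32` for a single host):

```shell
#!/usr/bin/env bash
set -u

# ip_to_int A.B.C.D -> prints the address as a 32-bit integer (hypothetical helper)
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# cidrs_overlap NET1/LEN1 NET2/LEN2 -> exit status 0 if the two blocks overlap (hypothetical helper)
cidrs_overlap() {
  local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
  local n1 n2 len mask
  n1="$(ip_to_int "$ip1")"
  n2="$(ip_to_int "$ip2")"
  # Compare only the bits covered by the shorter prefix (the larger block).
  len=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( (n1 & mask) == (n2 & mask) ))
}
```

`cidrs_overlap 192.168.15.0/24 192.168.15.58/32` succeeds, confirming that the pod CIDR used above contains the control-plane node's own address, whereas `cidrs_overlap 10.244.0.0/16 192.168.15.0/24` fails.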

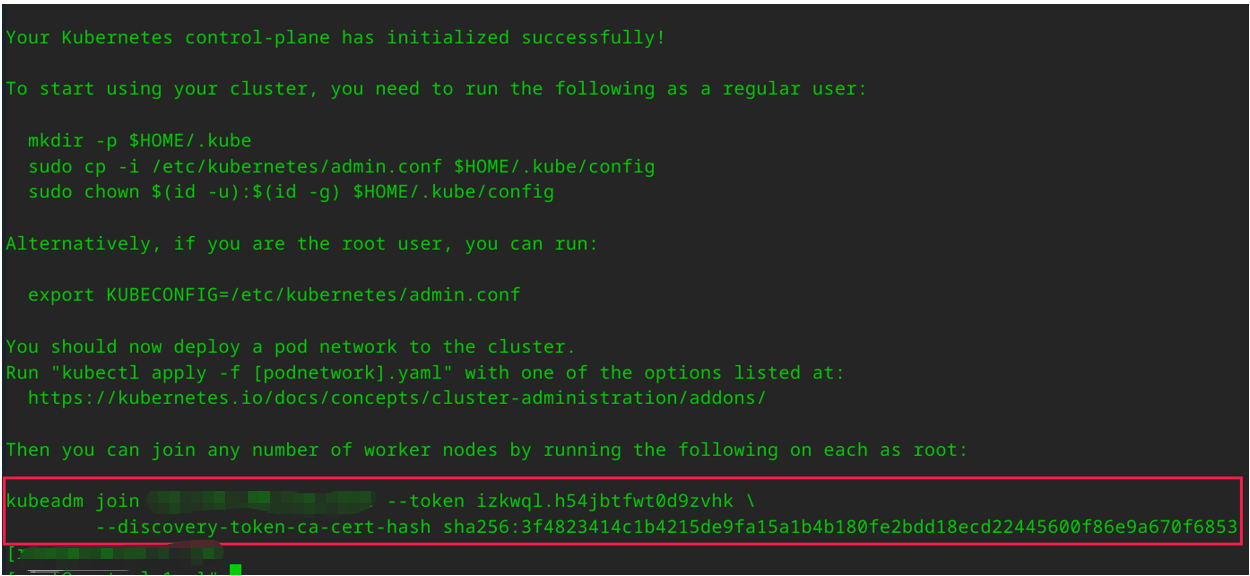

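After a successful initialization, output like that in the figure is printed.

Note: record the `kubeadm join ...` command at the end of the output; it is needed later to add the worker node. If it is lost or its token has expired (bootstrap tokens are valid for 24 hours by default), running `kubeadm token create --print-join-command` on the control-plane node prints a new one.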

Set up the kubeconfig file so that kubectl can be run, with the following commands:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

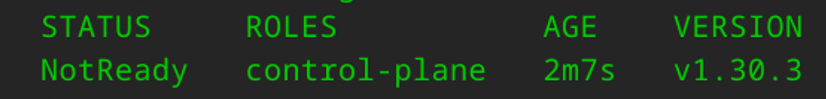

Check the current cluster nodes:

```shell
kubectl get node
```

Because no network plugin has been deployed yet, the master node is in the NotReady state.

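Deploy the flannel network plugin:

```shell
kubectl apply -f kube-flannel.yml
```

The contents of kube-flannel.yml are listed below; when copying and pasting, take care to keep the YAML indentation intact: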

```yaml
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "192.168.15.0/24",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: registry.uniontech.com/uos-app/flannel:v0.19.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: registry.uniontech.com/uos-app/flannel:v0.19.1
        command:
        - /usr/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
```
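Since `Network` in `net-conf.json` has to equal the `--pod-network-cidr` passed to `kubeadm init`, it can be safer to set it from the same value rather than edit it by hand. A sketch using GNU `sed -i`; `set_flannel_network` is a hypothetical helper, and it assumes the key is written as `"Network": "..."` on a single line, as in the manifest above:

```shell
#!/usr/bin/env bash
set -u

# set_flannel_network MANIFEST CIDR  (hypothetical helper; requires GNU sed for "-i")
set_flannel_network() {
  local manifest="$1" cidr="$2"
  # Replace only the quoted value after "Network":, keeping the surrounding quotes and comma.
  # "#" is the sed delimiter because the CIDR itself contains "/".
  sed -i -E 's#("Network":[[:space:]]*")[^"]*(")#\1'"$cidr"'\2#' "$manifest"
}
```

For example, run `set_flannel_network kube-flannel.yml 10.244.0.0/16` before `kubectl apply`, and pass the same range to `kubeadm init --pod-network-cidr`.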

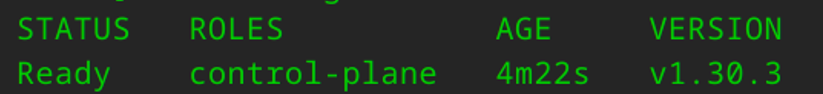

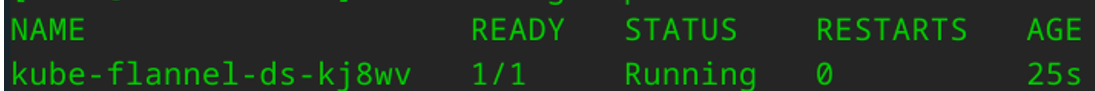

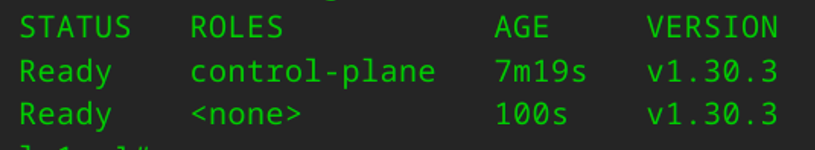

Check that the flannel pods are Running and that the master node has changed to Ready, as shown in the figure:

```shell
kubectl get node
kubectl get pod -n kube-flannel
```

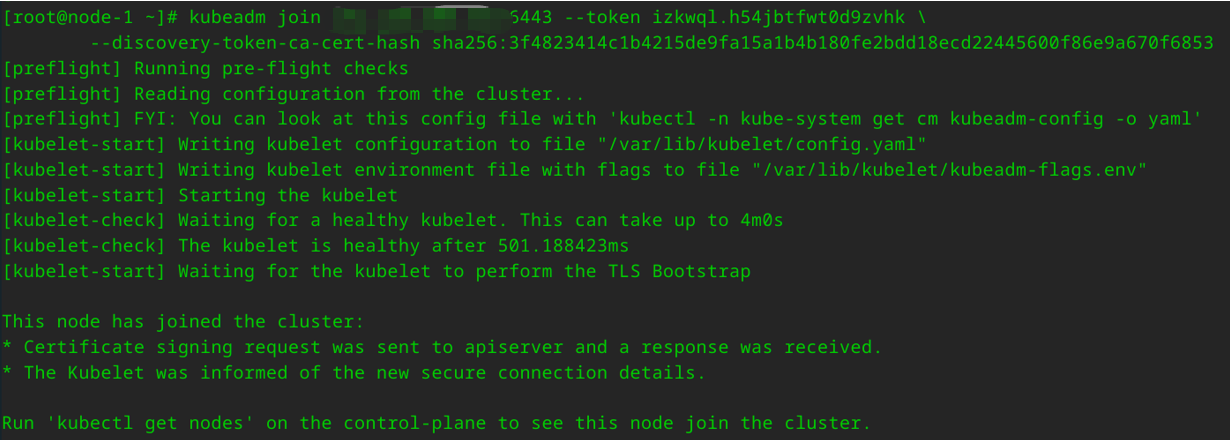

On the worker node, run the `kubeadm join` command printed in the output of the `kubeadm init` command above.
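The `--discovery-token-ca-cert-hash` in the join command is the SHA-256 of the cluster CA's public key, so it can be recomputed on the control-plane node if the init output was lost. A sketch based on the `openssl` pipeline from the kubeadm documentation, assuming an RSA CA key (kubeadm's default) at `/etc/kubernetes/pki/ca.crt`; `ca_cert_hash` is a hypothetical name:

```shell
#!/usr/bin/env bash
set -u
set -o pipefail

# ca_cert_hash [CA_CERT]  (hypothetical helper; defaults to kubeadm's CA certificate path)
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the CA public key, re-encode it as DER, hash it with SHA-256,
  # and keep only the hex digest (the last field of openssl dgst's output).
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

The join command then has the form `kubeadm join 192.168.15.58:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>`, where `<token>` comes from `kubeadm token list` or `kubeadm token create` and `<hash>` is the output of `ca_cert_hash`.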

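On the master node, run `kubectl get node` again to view the cluster nodes; the worker node now shows up as part of the cluster, as in the example output in the figure.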

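At this point the Kubernetes cluster is deployed. Getting it running on UOS took me quite a while today, mainly because my earlier CentOS setups never used the containernetworking-plugins package, so without it my CNI network plugin stayed NotReady the whole time.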
