Agents, RAG, and MCP: Architecture in Practice

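[Architecture summary] This article presents an intelligent system architecture built on AI agents, RAG, and the MCP protocol. The core is a three-layer design: the AI Agent layer schedules and executes tasks, the RAG engine performs retrieval augmentation (backed by Elasticsearch and the Milvus vector store), and the MCP client handles protocol interaction with external services. Key techniques: 1) a multi-agent collaboration framework covering scenarios such as code generation and data analysis; 2) a hybrid retrieval algorithm that fuses semantic and keyword search; 3) standardized integration through the MCP protocol. The system, through Spring Boot …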


目录

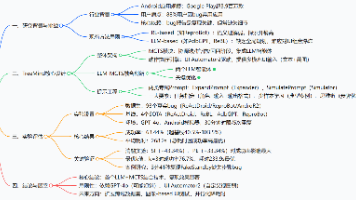

Technical Overview and Architecture Design

Core Architecture Diagram

┌─────────────────┐    ┌─────────────────┐    ┌─────────────────┐
│    AI Agent     │◄──►│   RAG Engine    │◄──►│   MCP Client    │
│     (agent)     │    │ (retrieval aug.)│    │    (client)     │
└─────────────────┘    └─────────────────┘    └─────────────────┘
         │                      │                      │
         ▼                      ▼                      ▼
┌─────────────────┐    ┌─────────────────┐    ┌─────────────────┐
│ Task scheduling │    │ Vector database │    │ Context         │
│ and execution   │    │                 │    │ management      │
└─────────────────┘    └─────────────────┘    └─────────────────┘

Technology Stack

- AI Agent: Spring AI + LangChain4j + OpenAI
- RAG: Elasticsearch + Milvus + Embedding + ChromaDB
- MCP: official MCP Client SDK
- Overall framework: Spring Boot + Spring WebFlux

Project Dependencies

Maven Dependency Configuration

Core pom.xml Dependencies

<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>3.2.0</version>
    <relativePath/>
</parent>

<properties>
    <java.version>17</java.version>
    <spring-ai.version>1.0.0-M3</spring-ai.version>
    <langchain4j.version>0.29.1</langchain4j.version>
</properties>

<!-- A BOM is imported under dependencyManagement, not listed as a regular dependency -->
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>${spring-ai.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<dependencies>
    <!-- Spring AI core (versions come from the imported BOM) -->
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-core</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-openai</artifactId>
    </dependency>

    <!-- LangChain4j (the OpenAI embedding model ships inside langchain4j-open-ai) -->
    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-open-ai</artifactId>
        <version>${langchain4j.version}</version>
    </dependency>

    <!-- RAG stack -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
    </dependency>
    <dependency>
        <groupId>io.milvus</groupId>
        <artifactId>milvus-sdk-java</artifactId>
        <version>2.3.4</version>
    </dependency>
    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-chroma</artifactId>
        <version>${langchain4j.version}</version>
    </dependency>

    <!-- MCP client SDK: coordinates as given in the original article;
         verify them against the MCP Java SDK release you actually use -->
    <dependency>
        <groupId>ai.modelcontextprotocol</groupId>
        <artifactId>mcp-client-java</artifactId>
        <version>0.4.0</version>
    </dependency>

    <!-- Spring Boot core -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-webflux</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-redis</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>

    <!-- Lombok: the code samples use @Slf4j -->
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>

    <!-- Monitoring and metrics -->
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
    </dependency>
    <!-- Spring Boot 3 wires Brave/Zipkin tracing through Micrometer Tracing -->
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-tracing-bridge-brave</artifactId>
    </dependency>
    <dependency>
        <groupId>io.zipkin.reporter2</groupId>
        <artifactId>zipkin-reporter-brave</artifactId>
    </dependency>

    <!-- Test dependencies -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
</dependencies>

<repositories>
    <!-- Needed because 1.0.0-M3 is a milestone release -->
    <repository>
        <id>spring-milestones</id>
        <name>Spring Milestones</name>
        <url>https://repo.spring.io/milestone</url>
        <snapshots><enabled>false</enabled></snapshots>
    </repository>
    <repository>
        <id>maven-central</id>
        <name>Maven Central</name>
        <url>https://repo1.maven.org/maven2/</url>
    </repository>
</repositories>

Sample Configuration

application.yml

spring:
  ai:
    openai:
      api-key: ${OPENAI_API_KEY:your-openai-api-key}
      chat:
        options:
          model: gpt-3.5-turbo
          temperature: 0.7
          max-tokens: 2000
      embedding:
        options:
          model: text-embedding-ada-002
  # Spring Boot 3.x reads the Elasticsearch client settings from spring.elasticsearch.*
  elasticsearch:
    uris: ${ES_HOST:http://localhost:9200}
    username: ${ES_USERNAME:elastic}
    password: ${ES_PASSWORD:changeme}
  data:
    redis:
      host: ${REDIS_HOST:localhost}
      port: ${REDIS_PORT:6379}
      password: ${REDIS_PASSWORD:}

# MCP client settings (durations are in milliseconds)
mcp:
  client:
    server-url: ${MCP_SERVER_URL:https://mcp-server.example.com}
    timeout: 30000
    pool-size: 10
    retry-count: 3
    retry-delay: 1000
    api-key: ${MCP_API_KEY:your-mcp-api-key}

# Milvus vector database settings
milvus:
  uri: ${MILVUS_URI:http://localhost:19530}
  collection-name: ${MILVUS_COLLECTION:document_embeddings}
  embedding-dimension: 1536   # matches text-embedding-ada-002

# Monitoring
management:
  endpoints:
    web:
      exposure:
        include: "*"   # exposes every actuator endpoint; narrow this outside local development
  # Spring Boot 3.x moved this key from management.metrics.export.prometheus.enabled
  prometheus:
    metrics:
      export:
        enabled: true

Core AI Agent Implementation

1. Agent Infrastructure

Agent Engine

package com.example.ai.agent;

import java.util.List;
import java.util.Map;

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;
import reactor.core.publisher.Mono;

// Helper methods (initializeAgents, extractKeywords, classifyIntent,
// calculatePriority, identifyResources) are omitted from this excerpt.
@Component
@Slf4j
public class AgentEngine {

    private final Map<AgentType, BaseAgent> agents;
    private final TaskDispatcher taskDispatcher;
    private final ContextManager contextManager;

    @Autowired
    public AgentEngine(TaskDispatcher taskDispatcher, ContextManager contextManager) {
        this.taskDispatcher = taskDispatcher;
        this.contextManager = contextManager;
        this.agents = initializeAgents();
    }

    /**
     * Entry point for agent task execution.
     */
    public Mono<AgentResponse> executeTask(AgentRequest request) {
        return Mono.fromCallable(() -> {
                    // 1. Analyze the task
                    TaskContext context = analyzeTask(request);
                    // 2. Select an agent
                    BaseAgent agent = selectAgent(context);
                    // 3. Prepare the context (the snapshot is taken but not used further here)
                    ContextSnapshot snapshot = contextManager.snapshotContext();
                    return agent;
                })
                .flatMap(agent -> agent.execute(request))
                .doOnNext(response -> contextManager.updateContext(request, response));
    }

    private TaskContext analyzeTask(AgentRequest request) {
        // Keyword extraction and intent recognition
        List<String> keywords = extractKeywords(request.getContent());
        Intent intent = classifyIntent(keywords);
        Priority priority = calculatePriority(intent, request);

        return TaskContext.builder()
                .withKeywords(keywords)
                .withIntent(intent)
                .withPriority(priority)
                .withRequiredResources(identifyResources(intent))
                .build();
    }

    private BaseAgent selectAgent(TaskContext context) {
        // Unrecognized intents fall back to the general-purpose agent
        switch (context.getIntent()) {
            case DOCUMENT_PROCESSING:
                return agents.get(AgentType.DOCUMENT_AGENT);
            case CODE_GENERATION:
                return agents.get(AgentType.CODE_AGENT);
            case DATA_ANALYSIS:
                return agents.get(AgentType.DATA_AGENT);
            default:
                return agents.get(AgentType.GENERAL_AGENT);
        }
    }
}
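The engine above leaves classifyIntent unspecified. As a rough illustration of how intent classification and the selectAgent switch could fit together, here is a minimal sketch that uses only the JDK. The class name IntentRouterSketch, the keyword lists, and the GENERAL intent are assumptions made for this example; a production system would more likely use a trained classifier or an LLM call.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Hypothetical, simplified stand-in for the classifyIntent/selectAgent pair. */
final class IntentRouterSketch {

    enum Intent { DOCUMENT_PROCESSING, CODE_GENERATION, DATA_ANALYSIS, GENERAL }

    // Illustrative keyword lists (assumed for this sketch, not taken from the article).
    private static final Map<Intent, List<String>> KEYWORDS = new EnumMap<>(Intent.class);
    static {
        KEYWORDS.put(Intent.DOCUMENT_PROCESSING, List.of("document", "pdf", "summarize"));
        KEYWORDS.put(Intent.CODE_GENERATION, List.of("code", "function", "class", "implement"));
        KEYWORDS.put(Intent.DATA_ANALYSIS, List.of("dataset", "chart", "statistics", "analyze"));
    }

    /** Returns the intent with the most keyword hits, or GENERAL when nothing matches. */
    static Intent classify(String content) {
        String text = content.toLowerCase(Locale.ROOT);
        Intent best = Intent.GENERAL;
        int bestHits = 0;
        for (Map.Entry<Intent, List<String>> entry : KEYWORDS.entrySet()) {
            int hits = 0;
            for (String keyword : entry.getValue()) {
                if (text.contains(keyword)) {
                    hits++;
                }
            }
            // Strictly greater: on a tie the earlier enum constant wins.
            if (hits > bestHits) {
                bestHits = hits;
                best = entry.getKey();
            }
        }
        return best;
    }

    /** Maps an intent to an agent name, mirroring the switch in selectAgent. */
    static String agentFor(Intent intent) {
        switch (intent) {
            case DOCUMENT_PROCESSING:
                return "DOCUMENT_AGENT";
            case CODE_GENERATION:
                return "CODE_AGENT";
            case DATA_ANALYSIS:
                return "DATA_AGENT";
            default:
                return "GENERAL_AGENT";
        }
    }
}
```

Calling IntentRouterSketch.agentFor(IntentRouterSketch.classify(text)) gives the agent name for a request; text with no matching keyword falls through to GENERAL_AGENT, the same default the engine uses.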

2. Specialized Agent Implementation

Code Generation Agent

// Imports needed: java.util.List, lombok.*, org.springframework.stereotype.Service,
// reactor.core.publisher.Mono, reactor.core.scheduler.Schedulers
@Service
@Slf4j
@RequiredArgsConstructor // generates the constructor for the final fields
public class CodeAgent extends BaseAgent {

    private final RAGService ragService;
    private final CodeGenerator codeGenerator;
    private final SyntaxValidator syntaxValidator;
    private final MCPClientManager mcpHandler;
    private final AIService aiService;

    /**
     * Core code-generation logic.
     */
    @Override
    public Mono<AgentResponse> execute(AgentRequest request) {
        // Steps 1-3 block, so they run lazily on a bounded elastic thread
        return Mono.fromCallable(() -> {
                    // 1. Retrieve related code snippets via RAG
                    List<CodeContext> codeContexts = ragService.searchCodeContext(request.getContent());
                    // 2. MCP protocol processing
                    MCPMessage mcpContext = mcpHandler.processContext(codeContexts);
                    // 3. Build the enriched code-generation prompt
                    return buildCodePrompt(request, codeContexts, mcpContext);
                })
                .subscribeOn(Schedulers.boundedElastic())
                // 4. AI code generation
                .flatMap(prompt -> aiService.generateCode(prompt))
                .flatMap(codeResponse -> {
                    String generatedCode = codeResponse.getCode();
                    // 5. Syntax validation and optimization
                    return validateAndOptimizeCode(generatedCode, request)
                            .map(optimizedCode -> AgentResponse.builder()
                                    .withTaskId(request.getTaskId())
                                    .withType(ResponseType.CODE_GENERATION)
                                    .withContent(optimizedCode)
                                    .withQualityScore(calculateQualityScore(generatedCode, optimizedCode))
                                    .withDebugInfo(codeResponse.getDebugInfo())
                                    .build());
                });
    }

    private String buildCodePrompt(AgentRequest request,
                                   List<CodeContext> contexts,
                                   MCPMessage mcpContext) {
        StringBuilder prompt = new StringBuilder();
        prompt.append("Generate high-quality code based on the following context:\n\n");

        // Related code retrieved by RAG
        contexts.forEach(ctx -> {
            prompt.append("Related code example:\n");
            prompt.append(ctx.getCodeSnippet()).append("\n");
            prompt.append("Usage notes: ").append(ctx.getExplanation()).append("\n\n");
        });

        // Context processed through MCP
        prompt.append("Project context:\n");
        prompt.append(mcpContext.getFormattedContext()).append("\n\n");

        // User requirement
        prompt.append("User requirement: ").append(request.getContent()).append("\n\n");
        prompt.append("Requirements:\n");
        prompt.append("- Clean code with complete comments\n");
        prompt.append("- Thorough error handling\n");
        prompt.append("- Reasonable performance optimization\n");
        prompt.append("- Follow best practices\n");
        return prompt.toString();
    }
}

RAG: Retrieval-Augmented Generation

1. RAG Core Engine

Retrieval Augmentation Service

import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

import lombok.RequiredArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;

// Project types (VectorStore, VectorRecord, SearchResult, ...) and the helpers
// chunkDocument, generateEmbedding, keywordSearch, normalizeScore and
// enrichWithContent are omitted from this excerpt.
@Service
@Slf4j
@RequiredArgsConstructor
public class RAGService {

    private final EmbeddingClient embeddingClient;
    private final VectorStore vectorStore;
    private final DocumentProcessor documentProcessor;
    private final SimilarityCalculator similarityCalculator;
    // Type name assumed: the original calls diversityFilter without declaring it
    private final DiversityFilter diversityFilter;

    /**
     * Build the document index.
     */
    public CompletableFuture<Void> buildIndex(List<Document> documents) {
        // runAsync already moves the work off the caller thread, so @Async is not needed
        return CompletableFuture.runAsync(() ->
                documents.parallelStream().forEach(doc -> {
                    try {
                        // 1. Pre-process the document
                        ProcessedDocument processed = documentProcessor.process(doc);
                        // 2. Split it into chunks
                        List<DocumentChunk> chunks = chunkDocument(processed);
                        // 3. Embed each chunk (sequential: the outer stream is already parallel)
                        for (DocumentChunk chunk : chunks) {
                            List<Double> embedding = generateEmbedding(chunk.getContent());
                            VectorRecord record = VectorRecord.builder()
                                    .withId(chunk.getId())
                                    .withContent(chunk.getContent())
                                    .withEmbedding(embedding)
                                    .withMetadata(chunk.getMetadata())
                                    .build();
                            vectorStore.store(record);
                        }
                    } catch (Exception e) {
                        // One failed document must not abort the whole batch
                        log.error("Failed to process document: {}", doc.getId(), e);
                    }
                }));
    }

    /**
     * Core semantic search algorithm.
     */
    public List<SearchResult> semanticSearch(String query, SearchOptions options) {
        // 1. Embed the query
        List<Double> queryEmbedding = generateEmbedding(query);

        // 2. Vector similarity search
        List<VectorRecord> candidates = vectorStore.search(queryEmbedding, options.getTopK());

        // 3. Re-score and sort in descending order
        List<SearchResult> results = candidates.stream()
                .map(candidate -> SearchResult.builder()
                        .withId(candidate.getId())
                        .withContent(candidate.getContent())
                        .withScore(similarityCalculator.calculate(queryEmbedding,
                                candidate.getEmbedding()))
                        .withMetadata(candidate.getMetadata())
                        .build())
                .sorted((a, b) -> Double.compare(b.getScore(), a.getScore()))
                .limit(options.getMaxResults())
                .collect(Collectors.toList());

        // 4. Diversity filtering (optional)
        if (options.isEnableDiversityFilter()) {
            results = diversityFilter.filter(results, options.getDiversityThreshold());
        }
        return results;
    }

    /**
     * Hybrid search strategy: BM25 keyword score + vector similarity.
     */
    public List<SearchResult> hybridSearch(String query, SearchOptions options) {
        List<SearchResult> semanticResults = semanticSearch(query, options);
        List<SearchResult> keywordResults = keywordSearch(query, options);

        // Compute each list's maximum once instead of once per result
        double semanticMax = semanticResults.stream()
                .mapToDouble(SearchResult::getScore).max().orElse(1.0);
        double keywordMax = keywordResults.stream()
                .mapToDouble(SearchResult::getScore).max().orElse(1.0);

        Map<String, Double> combinedScores = new HashMap<>();

        // Normalize and weight the semantic results
        semanticResults.forEach(result -> combinedScores.put(result.getId(),
                normalizeScore(result.getScore(), semanticMax) * options.getWeight("semantic")));

        // Normalize and weight the keyword results, adding to any existing score
        keywordResults.forEach(result -> combinedScores.merge(result.getId(),
                normalizeScore(result.getScore(), keywordMax) * options.getWeight("keyword"),
                Double::sum));

        // Fused ranking
        return combinedScores.entrySet().stream()
                .sorted(Map.Entry.<String, Double>comparingByValue().reversed())
                .map(entry -> enrichWithContent(entry.getKey(), entry.getValue()))
                .collect(Collectors.toList());
    }
}
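To make the score fusion in hybridSearch concrete, the sketch below reproduces just that step with plain JDK collections. It assumes normalizeScore divides by the list maximum, which the original does not show, and the class and method names (HybridFusionSketch, normalizeByMax, fuse) are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of max-normalized, weighted score fusion. */
final class HybridFusionSketch {

    /** Divides every score by the map's maximum so each result list lands in [0, 1]. */
    static Map<String, Double> normalizeByMax(Map<String, Double> scores) {
        double max = scores.values().stream().mapToDouble(Double::doubleValue).max().orElse(1.0);
        if (max <= 0.0) {
            max = 1.0; // avoid dividing by zero when every score is 0
        }
        Map<String, Double> normalized = new HashMap<>();
        for (Map.Entry<String, Double> entry : scores.entrySet()) {
            normalized.put(entry.getKey(), entry.getValue() / max);
        }
        return normalized;
    }

    /** Returns document ids ordered by weighted sum of the two normalized scores, best first. */
    static List<String> fuse(Map<String, Double> semantic, Map<String, Double> keyword,
                             double semanticWeight, double keywordWeight) {
        Map<String, Double> combined = new HashMap<>();
        normalizeByMax(semantic).forEach((id, s) -> combined.merge(id, s * semanticWeight, Double::sum));
        normalizeByMax(keyword).forEach((id, s) -> combined.merge(id, s * keywordWeight, Double::sum));

        List<String> ids = new ArrayList<>(combined.keySet());
        ids.sort((a, b) -> {
            int byScore = Double.compare(combined.get(b), combined.get(a));
            return byScore != 0 ? byScore : a.compareTo(b); // tie-break on id for a stable order
        });
        return ids;
    }
}
```

With semantic scores {a: 0.9, b: 0.6}, BM25 scores {b: 12.0, c: 6.0}, and weights 0.7 and 0.3, document b ranks first because it appears in both lists, followed by a and then c. Normalizing before weighting matters because BM25 scores are unbounded while cosine similarities are not, so a raw sum would let the keyword side dominate.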

MCP Protocol Handling

1. MCP Client Integration

MCP Client Configuration

import java.time.Duration;
import java.util.List;
import java.util.UUID;

import jakarta.annotation.PostConstruct;
import lombok.Setter;
import lombok.extern.slf4j.Slf4j;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Schedulers;

@Component
@ConfigurationProperties(prefix = "mcp.client")
@Setter // setters let Spring bind the mcp.client.* properties
@Slf4j
public class MCPClientManager {

    private String serverUrl;
    private Duration timeout = Duration.ofSeconds(30);
    private int poolSize = 10;
    private int retryCount = 3;
    private Duration retryDelay = Duration.ofSeconds(1);
    private String apiKey;

    // Not final: the client is built in @PostConstruct, after the properties are bound
    private MCPClient mcpClient;

    @PostConstruct
    public void initializeMCPClient() {
        // Uses the official MCP Client SDK (builder API as written in the original;
        // check it against the SDK version you use)
        this.mcpClient = MCPClient.builder()
                .serverUrl(serverUrl)
                .timeout(timeout)
                .connectionPoolSize(poolSize)
                .retryCount(retryCount)
                .retryDelay(retryDelay)
                .apiKey(apiKey)
                .build();
    }

    /**
     * Call an external MCP server for model context processing.
     */
    public Mono<MCPResponse> processWithExternalServer(MCPRequest request) {
        return Mono.fromCallable(() -> {
                    // 1. Build the MCP tool-call request
                    MCPToolCall toolCall = MCPToolCall.builder()
                            .withId(UUID.randomUUID().toString())
                            .withName(request.getToolName())
                            .withArguments(request.getArguments())
                            .withMetadata(buildMetadata(request))
                            .build();
                    // 2. Send it to the external MCP server (blocking call)
                    MCPResponse response = mcpClient.callTool(toolCall);
                    // 3. Process the response
                    return processMCPResponse(response);
                })
                .subscribeOn(Schedulers.boundedElastic())
                .timeout(timeout)
                .doOnError(error ->
                        log.warn("MCP server call failed: {}", serverUrl, error));
    }

    /**
     * List the tools available on the MCP server.
     */
    public Mono<List<MCPToolInfo>> getAvailableTools() {
        return Mono.fromCallable(() -> mcpClient.listTools())
                .subscribeOn(Schedulers.boundedElastic())
                .timeout(Duration.ofSeconds(10));
    }

    private Metadata buildMetadata(MCPRequest request) {
        return Metadata.builder()
                .withTimestamp(System.currentTimeMillis())
                .withRequestType(request.getType())
                .withVersion("1.0")
                .build();
    }

    private MCPResponse processMCPResponse(MCPResponse rawResponse) {
        // Response processing and validation
        return MCPResponse.builder()
                .withId(rawResponse.getId())
                .withContent(rawResponse.getContent())
                .withMetadata(rawResponse.getMetadata())
                .withProcessingTime(rawResponse.getProcessingTime())
                .build();
    }
}
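The retry-count and retry-delay settings are passed to the client builder, but the retry behavior itself is never shown. As an illustration of what a fixed-delay retry with those two parameters could look like, here is a small JDK-only sketch. RetrySketch and callWithRetry are hypothetical names, and this is not a description of what any MCP SDK does internally.

```java
import java.time.Duration;
import java.util.function.Supplier;

/** Hypothetical fixed-delay retry helper driven by retryCount and retryDelay. */
final class RetrySketch {

    /**
     * Runs the call once, then retries up to retryCount more times,
     * sleeping retryDelay between attempts. Rethrows the last failure.
     */
    static <T> T callWithRetry(Supplier<T> call, int retryCount, Duration retryDelay) {
        if (retryCount < 0) {
            throw new IllegalArgumentException("retryCount must be >= 0");
        }
        RuntimeException last = null;
        for (int attempt = 0; attempt <= retryCount; attempt++) {
            try {
                return call.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and fall through to the delay
            }
            if (attempt < retryCount) {
                try {
                    Thread.sleep(retryDelay.toMillis());
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    throw new IllegalStateException("interrupted while waiting to retry", ie);
                }
            }
        }
        throw last;
    }
}
```

With retry-count: 3 the call is attempted at most four times in total. Because the helper sleeps on the calling thread, in a WebFlux application it belongs on a worker scheduler such as the boundedElastic one used above, or should be replaced by Reactor's own retry operators.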

2. Quick Start Guide

Requirements

# Java environment
java --version             # Java 17+
mvn --version              # Maven 3.6+

# Base services
docker --version
docker-compose --version

Startup Steps

# 1. Start the base services (Elasticsearch, Redis, Milvus)
docker-compose up -d elasticsearch redis milvus

# 2. Build and start the Java application
mvn clean install
mvn spring-boot:run

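Docker Compose Configuration

# docker-compose.yml
version: '3.8'

services:
  elasticsearch:
    image: elasticsearch:8.11.0
    environment:
      - discovery.type=single-node
      - xpack.security.enabled=false   # local development only
    ports:
      - "9200:9200"

  redis:
    image: redis:7-alpine
    ports:
      - "6379:6379"

  # Milvus standalone expects etcd and MinIO to be reachable at the addresses
  # below; those two services are not defined in this excerpt.
  milvus:
    image: milvusdb/milvus:v2.3.4
    command: ["milvus", "run", "standalone"]
    ports:
      - "19530:19530"
    environment:
      ETCD_ENDPOINTS: etcd:2379
      MINIO_ADDRESS: minio:9000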

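End-to-End Business Scenario

Complete Flow of the Intelligent Code Assistant

CodeAssistantController

// Imports needed: java.time.Duration, java.util.List, lombok.*,
// org.springframework.web.bind.annotation.*, reactor.core.publisher.Mono,
// reactor.core.scheduler.Schedulers, reactor.util.retry.Retry
// Helpers (enrichSearchResults, buildMCPRequest, buildAgentRequest,
// enhanceResponse) are omitted from this excerpt.
@RestController
@RequestMapping("/api/code-assistant")
@RequiredArgsConstructor
@Slf4j
public class CodeAssistantController {

    private final AgentEngine agentEngine;
    private final RAGService ragService;
    private final MCPClientManager mcpClientManager;

    /**
     * Complete flow for intelligent code generation.
     */
    @PostMapping("/generate")
    public Mono<CodeGenerationResponse> generateCode(@RequestBody CodeGenerationRequest request) {
        String sessionId = request.getSessionId();

        // 1. RAG retrieval; searchCodeContext returns a List (see CodeAgent),
        //    so wrap the blocking call instead of calling flatMap on it
        return Mono.fromCallable(() -> ragService.searchCodeContext(request.getRequirements()))
                .flatMap(contexts -> {
                    // 2. Post-process the RAG results
                    List<SearchResult> ragResults = enrichSearchResults(contexts);
                    // 3. MCP context processing
                    return mcpClientManager.processWithExternalServer(
                                    buildMCPRequest(request, ragResults))
                            // 4. Agent task execution
                            .flatMap(mcpMessage -> agentEngine.executeTask(
                                    buildAgentRequest(request, ragResults, mcpMessage)));
                })
                // 5. Result enhancement and feedback
                .flatMap(response -> enhanceResponse(response, request))
                // 6. Error handling: retry transient failures twice, one second apart
                .retryWhen(Retry.fixedDelay(2, Duration.ofSeconds(1))
                        .filter(throwable -> throwable instanceof TemporaryException))
                .doOnError(error -> log.error("Code generation failed, session {}", sessionId, error))
                .subscribeOn(Schedulers.boundedElastic());
    }
}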

Key Techniques in Detail

1. Agent Scheduling Algorithm

TaskScheduler

@Component
public class TaskScheduler {

    /**
     * Core agent scheduling algorithm.
     */
    public Mono<SchedulingResult> scheduleTask(Task task) {
        return Mono.fromCallable(() -> {
            // 1. Analyze the task's characteristics
            TaskAnalysis analysis = analyzeTask(task);
            // 2. Select the best-suited agent
            Agent bestAgent = selectOptimalAgent(analysis);
            // 3. Allocate resources
            ResourceAllocation allocation = allocateResources(bestAgent, analysis);
            // 4. Decompose the task into subtasks
            List<SubTask> subTasks = decomposeTask(task, analysis);

            return SchedulingResult.builder()
                    .withAgent(bestAgent)
                    .withAllocation(allocation)
                    .withSubTasks(subTasks)
                    .withEstimatedDuration(estimateDuration(allocation, subTasks))
                    .withPriority(task.getPriority())
                    .build();
        }).subscribeOn(Schedulers.boundedElastic());
    }
}
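The scheduler does not say how selectOptimalAgent decides which agent is "best". One plausible policy, shown here purely as an assumption, is to pick the least-loaded agent that has the required capability and still has free capacity. AgentInfo, its fields, and the load formula are all hypothetical.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;
import java.util.Set;

/** Hypothetical least-loaded selection policy for selectOptimalAgent. */
final class AgentSelectionSketch {

    /** Assumed agent descriptor: what the agent can do and how busy it is. */
    record AgentInfo(String name, Set<String> capabilities, int activeTasks, int capacity) {
        /** Fraction of capacity in use; an agent with zero capacity counts as full. */
        double load() {
            return capacity == 0 ? 1.0 : (double) activeTasks / capacity;
        }
    }

    /** Returns the capable agent with the lowest load, or empty if none can take the task. */
    static Optional<AgentInfo> selectOptimalAgent(List<AgentInfo> agents, String requiredCapability) {
        return agents.stream()
                .filter(agent -> agent.capabilities().contains(requiredCapability))
                .filter(agent -> agent.activeTasks() < agent.capacity()) // skip saturated agents
                .min(Comparator.comparingDouble(AgentInfo::load));
    }
}
```

Returning an Optional makes the "no agent available" case explicit, so the caller can queue the task or fall back to a general agent rather than handle a null.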

2. RAG Re-ranking Algorithm

ReRankingEngine

@Service
public class ReRankingEngine {

    /**
     * Neural re-ranking.
     */
    public List<SearchResult> neuralReRank(List<SearchResult> initialResults,
                                           QueryContext context) {
        // 1. Feature extraction
        List<FeatureVector> features = extractFeatures(initialResults, context);
        // 2. Neural network inference
        Map<String, Double> relevanceScores = neuralNetworkInference(features);

        // 3. Re-rank
        return initialResults.stream()
                .map(result -> {
                    double originalScore = result.getScore();
                    // Fall back to the original score if the model returned nothing for
                    // this id; a plain get() would throw on unboxing null
                    double neuralScore = relevanceScores.getOrDefault(result.getId(), originalScore);
                    // Blend the retrieval score with the neural score
                    double finalScore = combineScores(originalScore, neuralScore);
                    return result.withScore(finalScore);
                })
                .sorted((a, b) -> Double.compare(b.getScore(), a.getScore()))
                .collect(Collectors.toList());
    }
}
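The combineScores step is left undefined in the engine. A common and simple choice is linear interpolation between the two scores, sketched below under that assumption. The alpha parameter, the Scored record, and the fallback for ids without a neural score are illustrative and not taken from the original.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

/** Hypothetical linear-interpolation version of combineScores plus the re-sort. */
final class ReRankSketch {

    record Scored(String id, double score) {}

    /** alpha = 1.0 trusts only the neural score; alpha = 0.0 keeps the retrieval score. */
    static double combineScores(double original, double neural, double alpha) {
        return alpha * neural + (1.0 - alpha) * original;
    }

    /** Blends each result's score with its neural score and sorts best first. */
    static List<Scored> reRank(List<Scored> initial, Map<String, Double> neuralScores, double alpha) {
        List<Scored> reRanked = new ArrayList<>();
        for (Scored result : initial) {
            // Keep the original score when the model produced no score for this id.
            double neural = neuralScores.getOrDefault(result.id(), result.score());
            reRanked.add(new Scored(result.id(), combineScores(result.score(), neural, alpha)));
        }
        reRanked.sort(Comparator.comparingDouble(Scored::score).reversed());
        return reRanked;
    }
}
```

This only behaves sensibly when both scores are on a comparable scale, for example both in [0, 1]. If the retrieval score is a raw BM25 value, it would need normalizing first.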

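Summary

This article walked through the core use of three technologies: agents, RAG, and MCP.

Core techniques:

- AI Agent: intelligent task scheduling and multi-agent collaboration
- RAG: semantic retrieval, hybrid search, real-time augmentation
- MCP Client: calling external MCP servers and listing their tools

Key advantages:

- Intelligence: agents make decisions and decompose tasks autonomously
- Augmentation: RAG supplies real-time knowledge retrieval and context enrichment
- Standardization: the MCP Client connects to external servers, so there is no need to build your own

Application scenarios:

- Intelligent code generation assistant
- Document question-answering system
- Multimodal content processing platform
- Enterprise AI workflows

Combining these technologies makes it possible to build AI applications that are more intelligent, efficient, and scalable, and the MCP Client allows flexible connections to a variety of external intelligent services.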
