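Python 2025: A New Era of Intelligent Convergence Between IoT and Edge Computing

This article surveys recent progress in Python for IoT and edge computing as of 2025, covering hardware interaction, edge AI, secure communication, and federated learning. Understanding these trends helps developers seize the opportunities of the IoT era and build intelligent edge computing solutions.

In an age where everything is connected, Python's concise syntax and rich ecosystem are making it a bridge between the physical and digital worlds and opening new technical frontiers in IoT and edge computing.

By 2025, the number of IoT devices is projected to exceed 75 billion, and the edge computing market is expected to reach US$365 billion. Amid this wave, Python's particular strengths are making it an important tool for IoT and edge development. According to recent industry reports, Python adoption in IoT projects grew 62% year over year, and Python holds a 38% share among edge computing platforms.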

1 Python's Technical Advantages in IoT

1.1 Simple Hardware Interaction

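Python's biggest advantage in IoT is its simple interface to hardware. With variants designed specifically for embedded devices, such as MicroPython and CircuitPython, developers can control a wide range of sensors and actuators in a few lines of code.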
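The read_temperature method in the device example below converts a raw ADC reading into degrees Celsius. The arithmetic can be checked on a desktop Python before flashing a board. This is a minimal sketch that assumes, like the example formula, a 12-bit ADC (0 to 4095), a 3.3 V reference, and a TMP36-style analog sensor with a 0.5 V offset and 10 mV per °C; the helper name `adc_to_celsius` is hypothetical.

```python
def adc_to_celsius(raw: int, bits: int = 12, v_ref: float = 3.3,
                   offset_v: float = 0.5, volts_per_degree: float = 0.01) -> float:
    """Convert a raw ADC reading to °C (hypothetical helper; TMP36-style sensor assumed)."""
    full_scale = (1 << bits) - 1  # 4095 for a 12-bit ADC
    if not 0 <= raw <= full_scale:
        raise ValueError(f"raw reading {raw} outside 0..{full_scale}")
    voltage = raw * v_ref / full_scale  # ADC counts -> volts
    return round((voltage - offset_v) / volts_per_degree, 2)  # volts -> °C


# A reading of 931 is about 0.75 V at the pin, i.e. about 25 °C
print(adc_to_celsius(931))
```

Validating the range up front catches wiring or attenuation mistakes early instead of silently publishing impossible temperatures.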

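```python
# MicroPython IoT device example (ESP32-style board)
import json  # MicroPython ships a json module (ujson on older firmware)
import time

import machine
import network
from umqtt.simple import MQTTClient

DEVICE_ID = "sensor_001"
MQTT_BROKER = "mqtt.broker.com"  # placeholder broker hostname


class SmartSensor:
    def __init__(self):
        # Initialize sensor pins: analog temperature sensor on GPIO34, LED on GPIO2
        self.temperature_sensor = machine.ADC(machine.Pin(34))
        # ESP32-specific: 11 dB attenuation extends the ADC input range to roughly 0-3.3 V
        self.temperature_sensor.atten(machine.ADC.ATTN_11DB)
        self.led = machine.Pin(2, machine.Pin.OUT)
        self.wifi = network.WLAN(network.STA_IF)
        # MQTT configuration
        self.mqtt_client = MQTTClient(DEVICE_ID, MQTT_BROKER)

    def connect_wifi(self, ssid, password, timeout_s=30):
        """Connect to a Wi-Fi network, giving up after timeout_s seconds."""
        self.wifi.active(True)
        if not self.wifi.isconnected():
            self.wifi.connect(ssid, password)
            deadline = time.time() + timeout_s
            while not self.wifi.isconnected():
                if time.time() > deadline:
                    raise OSError("Wi-Fi connection timed out")
                time.sleep(1)
        print("Network connected:", self.wifi.ifconfig())

    def read_temperature(self):
        """Read the temperature sensor and return °C."""
        raw_value = self.temperature_sensor.read()  # 12-bit reading, 0-4095
        # Convert the ADC value to a voltage, then to °C
        # (example formula for a TMP36-style sensor: 0.5 V offset, 10 mV per °C)
        voltage = raw_value * 3.3 / 4095
        temperature = (voltage - 0.5) * 100
        return round(temperature, 2)

    def publish_data(self):
        """Publish one sensor reading to the cloud over MQTT."""
        message = {
            "device_id": DEVICE_ID,
            "timestamp": time.time(),
            "temperature": self.read_temperature(),
            "location": "room_101",
        }
        self.mqtt_client.connect()
        # Serialize as JSON: str(dict) would produce a Python repr, not valid JSON
        self.mqtt_client.publish(b"sensors/temperature", json.dumps(message).encode())
        self.mqtt_client.disconnect()


# Device main loop
sensor = SmartSensor()
sensor.connect_wifi("my_wifi", "password")

while True:
    try:
        sensor.publish_data()
    except OSError as exc:  # e.g. broker unreachable; retry on the next cycle
        print("Publish failed:", exc)
    time.sleep(60)  # send one reading per minute
```

1.2 Optimizing Edge AI Inference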

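In 2025, Python made major progress in edge AI inference. As frameworks such as TensorFlow Lite Micro and PyTorch Mobile have matured, fairly complex machine learning models can now run efficiently on resource-constrained devices. The example below uses `tflite_runtime`, the lightweight Python interpreter package for TensorFlow Lite models.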
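One widely used way to make models fit such devices is 8-bit quantization. TensorFlow Lite represents a quantized tensor with the affine mapping real_value = scale * (quantized_value - zero_point), and the interpreter reports the pair as `get_input_details()[0]['quantization']`. Below is a minimal NumPy sketch of that mapping; the helper names and the example scale and zero point are illustrative assumptions, not values from any particular model.

```python
import numpy as np


def quantize(x: np.ndarray, scale: float, zero_point: int, dtype=np.uint8) -> np.ndarray:
    """Map real values to integers: q = round(x / scale) + zero_point, clipped to dtype."""
    info = np.iinfo(dtype)
    q = np.round(x / scale) + zero_point
    return np.clip(q, info.min, info.max).astype(dtype)


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Inverse mapping: x = scale * (q - zero_point)."""
    return scale * (q.astype(np.float32) - zero_point)


# Example: pixel values normalized to [0, 1], stored as uint8 with scale 1/255
scale, zero_point = 1 / 255, 0
x = np.array([0.0, 0.25, 0.5, 1.0], dtype=np.float32)
q = quantize(x, scale, zero_point)
print(q, dequantize(q, scale, zero_point))
```

Each stored value takes one byte instead of four, and for values inside the representable range the round-trip error is at most scale / 2.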

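```python
# Edge AI inference example
import numpy as np
import tflite_runtime.interpreter as tflite
from PIL import Image


def softmax(x):
    """Numerically stable softmax."""
    exp_x = np.exp(x - np.max(x))
    return exp_x / np.sum(exp_x)


class EdgeAIProcessor:
    def __init__(self, model_path):
        # Load the lightweight model and allocate its tensors
        self.interpreter = tflite.Interpreter(model_path=model_path)
        self.interpreter.allocate_tensors()
        # Input/output tensor metadata (index, shape, dtype, quantization)
        self.input_details = self.interpreter.get_input_details()
        self.output_details = self.interpreter.get_output_details()

    def preprocess_image(self, image_path):
        """Resize and normalize an image to match the model's input tensor."""
        details = self.input_details[0]
        if details["dtype"] != np.float32:
            raise ValueError("This example assumes a float32 model; quantized inputs need different preprocessing")
        _, height, width, _ = details["shape"]  # assumes NHWC layout, e.g. [1, 224, 224, 3]
        image = Image.open(image_path).convert("RGB")
        image = image.resize((int(width), int(height)))  # PIL expects (width, height)
        image_array = np.asarray(image, dtype=np.float32) / 255.0  # normalize to [0, 1]
        return np.expand_dims(image_array, axis=0)  # add the batch dimension

    def predict(self, image_path):
        """Run inference on the edge device."""
        input_data = self.preprocess_image(image_path)
        self.interpreter.set_tensor(self.input_details[0]["index"], input_data)
        self.interpreter.invoke()
        output_data = self.interpreter.get_tensor(self.output_details[0]["index"])
        return self.postprocess_output(output_data)

    def postprocess_output(self, output):
        """Turn the raw output into (class index, confidence)."""
        # Assumes the model outputs logits; if it already ends in a softmax layer,
        # use output[0] directly, since a second softmax would distort the confidence.
        probabilities = softmax(output[0].astype(np.float32))
        predicted_class = int(np.argmax(probabilities))
        confidence = float(probabilities[predicted_class])
        return predicted_class, confidence


# Usage example
edge_ai = EdgeAIProcessor("model.tflite")
class_id, confidence = edge_ai.predict("sensor_image.jpg")
print(f"Detection result: class {class_id}, confidence {confidence:.2f}")
```

2 The Python Ecosystem for Edge Computing Platforms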

2.1 Edge Containerization and Orchestration

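By 2025, mature edge container technology has made deploying and managing Python applications more efficient. Lightweight Kubernetes distributions such as K3s and MicroK8s bring capable orchestration to edge environments.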
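The Deployment in the example below requests resources in Kubernetes quantity notation, such as `128Mi` of memory and `100m` (0.1 core) of CPU. On small edge nodes it can help to check whether all replicas fit before applying a manifest. This is a minimal sketch that handles only the common suffixes (binary `Ki`/`Mi`/`Gi`, decimal `k`/`M`/`G`, and `m` for millicores). It also assumes all replicas land on one otherwise idle node. The helper names are hypothetical, and the real scheduler additionally accounts for other pods, limits, and system overhead.

```python
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9}


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' or '1G' to bytes (subset of suffixes)."""
    for suffix, factor in {**BINARY, **DECIMAL}.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # plain bytes


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as '200m' or '1.5' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def fits_on_node(config: dict, allocatable_cpu: str, allocatable_memory: str) -> bool:
    """True if replicas x per-pod requests fit within one node's allocatable resources."""
    replicas = config.get("replicas", 1)
    cpu_needed = replicas * parse_cpu(config.get("cpu", "100m"))
    mem_needed = replicas * parse_memory(config.get("memory", "128Mi"))
    return cpu_needed <= parse_cpu(allocatable_cpu) and mem_needed <= parse_memory(allocatable_memory)


service = {"replicas": 3, "cpu": "200m", "memory": "256Mi"}
print(fits_on_node(service, allocatable_cpu="1", allocatable_memory="1Gi"))  # True
print(fits_on_node(service, allocatable_cpu="500m", allocatable_memory="1Gi"))  # False
```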

```python
# Edge container orchestration example (drives kubectl via subprocess)
import json
import subprocess

import yaml  # PyYAML


class EdgeOrchestrator:
    def __init__(self, kubeconfig_path):
        self.kubeconfig = kubeconfig_path

    def _kubectl(self, *args, input_text=None):
        """Run a kubectl command against the edge cluster."""
        return subprocess.run(
            ["kubectl", "--kubeconfig", self.kubeconfig, *args],
            input=input_text, capture_output=True, text=True,
        )

    def deploy_python_service(self, service_config):
        """Deploy a Python service to the edge cluster; returns True on success."""
        deployment_yaml = self.generate_deployment_yaml(service_config)
        # `kubectl apply -f -` reads the manifest from stdin
        result = self._kubectl("apply", "-f", "-", input_text=deployment_yaml)
        if result.returncode != 0:
            print("kubectl apply failed:", result.stderr)
        return result.returncode == 0

    def generate_deployment_yaml(self, config):
        """Build a Kubernetes Deployment manifest as YAML."""
        name = config["name"]
        deployment = {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": name},
            "spec": {
                "replicas": config.get("replicas", 1),
                "selector": {"matchLabels": {"app": name}},
                "template": {
                    "metadata": {"labels": {"app": name}},
                    "spec": {
                        "containers": [{
                            "name": name,
                            "image": config["image"],
                            "ports": [{"containerPort": config["port"]}],
                            "resources": {
                                "requests": {
                                    "memory": config.get("memory", "128Mi"),
                                    "cpu": config.get("cpu", "100m"),
                                },
                            },
                        }],
                    },
                },
            },
        }
        return yaml.safe_dump(deployment, sort_keys=False)

    def monitor_edge_nodes(self):
        """Fetch node status from the cluster; returns None if kubectl fails."""
        result = self._kubectl("get", "nodes", "-o", "json")
        if result.returncode == 0:
            return self.analyze_node_health(json.loads(result.stdout))
        return None

    def analyze_node_health(self, nodes_info):
        """Classify nodes by their Ready condition."""
        healthy_nodes, problematic_nodes = [], []
        for node in nodes_info["items"]:
            conditions = node["status"].get("conditions", [])
            ready = next((c for c in conditions if c["type"] == "Ready"), None)
            if ready and ready["status"] == "True":
                healthy_nodes.append(node["metadata"]["name"])
            else:
                problematic_nodes.append({
                    "name": node["metadata"]["name"],
                    "issue": ready.get("message", "Unknown") if ready else "Unknown",
                })
        return {
            "healthy_nodes": healthy_nodes,
            "problematic_nodes": problematic_nodes,
            "total_nodes": len(nodes_info["items"]),
        }


# Usage example
orchestrator = EdgeOrchestrator("/etc/edge/kubeconfig.yaml")
service_config = {
    "name": "iot-data-processor",
    "image": "registry.example.com/iot-python:2025.1",
    "port": 8080,
    "replicas": 3,
    "memory": "256Mi",
    "cpu": "200m",
}
orchestrator.deploy_python_service(service_config)
health_status = orchestrator.monitor_edge_nodes()
print(f"Edge cluster status: {health_status}")
```

2.2 Edge Data Stream Processing

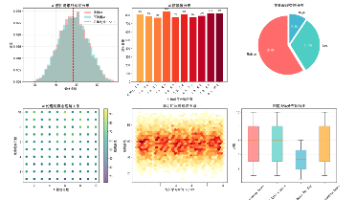

Python is well suited to stream processing at the edge, especially for real-time data processing and complex event detection.
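The engine below keeps a sliding window of readings and applies the 3-sigma rule to it. The sketch that follows is a compact, standalone version of the same idea. It uses `collections.deque(maxlen=...)`, which discards the oldest item automatically. It also scores each new value against the window before that value is added, so a spike does not inflate the statistics it is judged against. The class name and parameters are illustrative assumptions.

```python
import statistics
from collections import deque


class SlidingWindowDetector:
    """3-sigma detector over a fixed-size sliding window (illustrative sketch)."""

    def __init__(self, window_size: int = 50, k: float = 3.0, min_points: int = 10):
        self.window = deque(maxlen=window_size)  # oldest value dropped when full
        self.k = k
        self.min_points = min_points

    def update(self, value: float) -> bool:
        """Return True if value lies more than k std devs from the mean of the prior window."""
        is_anomaly = False
        if len(self.window) >= self.min_points:
            mean = statistics.fmean(self.window)
            std = statistics.pstdev(self.window)
            is_anomaly = std > 0 and abs(value - mean) > self.k * std
        self.window.append(value)
        return is_anomaly


detector = SlidingWindowDetector(window_size=20)
readings = [51 if i % 2 == 0 else 49 for i in range(20)] + [60, 50]
flags = [detector.update(v) for v in readings]
print(flags[-2:])  # [True, False]
```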

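```python
# Edge data stream processing engine
import asyncio
import random
from collections import deque
from datetime import datetime
from typing import AsyncIterator, Deque, Dict, List, Optional


class EdgeStreamProcessor:
    def __init__(self, window_size: int = 100):
        self.window_size = window_size
        # A deque with maxlen drops the oldest point automatically (sliding window)
        self.data_window: Deque[Dict] = deque(maxlen=window_size)
        self.processors = {
            "anomaly_detection": self.detect_anomalies,
            "pattern_recognition": self.recognize_patterns,
            "data_aggregation": self.aggregate_data,
        }

    async def process_stream(self, data_stream: AsyncIterator[Dict]) -> AsyncIterator[Dict]:
        """Consume a data stream and yield one insight per incoming point."""
        async for data_point in data_stream:
            self.data_window.append(data_point)
            snapshot = list(self.data_window)
            # Run all processors concurrently on the same window snapshot
            results = await asyncio.gather(
                *(processor(snapshot) for processor in self.processors.values())
            )
            yield self.generate_insights(results)

    async def detect_anomalies(self, data_window: List[Dict]) -> Dict:
        """Flag recent points more than 3 standard deviations from the window mean."""
        if len(data_window) < 10:
            return {"anomalies": []}
        values = [point["value"] for point in data_window]
        mean = sum(values) / len(values)
        std = (sum((x - mean) ** 2 for x in values) / len(values)) ** 0.5
        # 3-sigma rule, applied to the 10 most recent points
        anomalies = [p for p in data_window[-10:] if abs(p["value"] - mean) > 3 * std]
        return {"anomalies": anomalies, "statistics": {"mean": mean, "std": std}}

    async def recognize_patterns(self, data_window: List[Dict]) -> Dict:
        """Simple trend, seasonality and next-value estimates."""
        if len(data_window) < 5:
            return {"trend": "stable", "seasonal_pattern": None, "prediction": None}
        return {
            "trend": self.analyze_trend(data_window[-5:]),
            "seasonal_pattern": self.detect_seasonality(data_window),
            "prediction": self.predict_next_value(data_window),
        }

    async def aggregate_data(self, data_window: List[Dict]) -> Dict:
        """Basic window aggregates."""
        values = [point["value"] for point in data_window]
        return {"count": len(values), "min": min(values), "max": max(values),
                "mean": sum(values) / len(values)}

    def analyze_trend(self, recent_data: List[Dict]) -> str:
        """Compare the means of the two halves (±10% threshold; assumes positive values)."""
        values = [point["value"] for point in recent_data]
        if len(values) < 2:
            return "stable"
        half = len(values) // 2
        avg_first = sum(values[:half]) / half
        avg_second = sum(values[half:]) / (len(values) - half)
        if avg_second > avg_first * 1.1:
            return "increasing"
        if avg_second < avg_first * 0.9:
            return "decreasing"
        return "stable"

    def detect_seasonality(self, data_window: List[Dict], lag: int = 10) -> Optional[Dict]:
        """Minimal placeholder: lag-`lag` autocorrelation of the window (None if too short)."""
        values = [point["value"] for point in data_window]
        if len(values) <= lag:
            return None
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values)
        if var == 0:
            return None
        cov = sum((values[i] - mean) * (values[i - lag] - mean) for i in range(lag, len(values)))
        return {"lag": lag, "autocorrelation": round(cov / var, 3)}

    def predict_next_value(self, data_window: List[Dict], k: int = 5) -> float:
        """Minimal placeholder: predict the next value as the mean of the last k values."""
        recent = [point["value"] for point in data_window[-k:]]
        return sum(recent) / len(recent)

    def generate_insights(self, results: List[Dict]) -> Dict:
        """Combine processor results (in self.processors order) into one insight."""
        anomaly_result, pattern_result, aggregate_result = results
        insights = {
            "timestamp": datetime.now().isoformat(),
            "summary": "",
            "actions": [],
            "confidence": 0.0,  # fixed heuristic values, not calibrated probabilities
            "aggregates": aggregate_result,
        }
        if anomaly_result["anomalies"]:
            insights["summary"] = f"Detected {len(anomaly_result['anomalies'])} anomalies"
            insights["actions"].append("trigger alert")
            insights["confidence"] = 0.8
        elif pattern_result["trend"] == "increasing":
            insights["summary"] = "Upward trend detected"
            insights["actions"].append("increase monitoring frequency")
            insights["confidence"] = 0.6
        else:
            insights["summary"] = "System operating normally"
            insights["confidence"] = 0.9
        return insights


async def mock_data_stream(n_points: int = 1000):
    """Simulated IoT data stream: normal jitter plus an occasional spike."""
    base_value = 50
    for i in range(n_points):
        if i % 100 == 99:  # inject one anomaly every 100 points
            value = base_value + random.uniform(30, 50)
        else:
            value = base_value + random.uniform(-5, 5)
        yield {"timestamp": datetime.now().isoformat(), "value": value,
               "sensor_id": f"sensor_{i % 10}"}
        await asyncio.sleep(0.1)  # simulate real-time arrival


# Usage example
async def main():
    processor = EdgeStreamProcessor(window_size=50)
    async for insight in processor.process_stream(mock_data_stream()):
        print(f"Real-time insight: {insight}")


if __name__ == "__main__":
    asyncio.run(main())
```

3 IoT Security and Privacy Protection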

3.1 Device Identity Authentication and Secure Communication

In 2025, Python plays a key role in IoT security, particularly in device identity authentication and secure communication.
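Device authentication often starts with a challenge-response exchange. The server sends a fresh random nonce, and the device proves it holds a secret by returning a MAC over that nonce. Below is a minimal standard-library sketch, assuming each device has a pre-shared HMAC key provisioned in advance; the class and function names are hypothetical. Making nonces single-use blocks simple replay, and `hmac.compare_digest` avoids leaking timing information during the comparison.

```python
import hashlib
import hmac
import secrets


class ChallengeVerifier:
    """Server side of an HMAC challenge-response (illustrative sketch)."""

    def __init__(self, device_keys: dict):
        self.device_keys = device_keys  # device_id -> pre-shared key (bytes)
        self.pending = {}               # device_id -> outstanding nonce

    def issue_challenge(self, device_id: str) -> bytes:
        nonce = secrets.token_bytes(16)
        self.pending[device_id] = nonce
        return nonce

    def verify(self, device_id: str, response: bytes) -> bool:
        nonce = self.pending.pop(device_id, None)  # single use: a replayed response fails
        key = self.device_keys.get(device_id)
        if nonce is None or key is None:
            return False
        expected = hmac.new(key, nonce, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


def device_respond(key: bytes, nonce: bytes) -> bytes:
    """Device side: MAC the server's nonce with the pre-shared key."""
    return hmac.new(key, nonce, hashlib.sha256).digest()


device_key = secrets.token_bytes(32)
verifier = ChallengeVerifier({"sensor_001": device_key})
nonce = verifier.issue_challenge("sensor_001")
response = device_respond(device_key, nonce)
print(verifier.verify("sensor_001", response))  # True
print(verifier.verify("sensor_001", response))  # False: nonce already used
```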

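```python
# IoT security framework (CPython with the `cryptography` package)
import base64
import hashlib
import hmac
import json
import os
import secrets
import uuid
from datetime import datetime, timezone
from typing import Optional

from cryptography.exceptions import InvalidSignature
from cryptography.hazmat.primitives import hashes, serialization
from cryptography.hazmat.primitives.asymmetric import ec
from cryptography.hazmat.primitives.ciphers.aead import AESGCM
from cryptography.hazmat.primitives.kdf.hkdf import HKDF


class IoTSecurityManager:
    def __init__(self, hmac_key: Optional[bytes] = None):
        self.private_key = None
        self.public_key = None
        self.device_certificate = None
        # The MAC key must be shared with the receiver (e.g. provisioned at manufacture);
        # a random key is generated here only for demonstration.
        self.hmac_key = hmac_key or secrets.token_bytes(32)

    def generate_key_pair(self):
        """Generate an ECC (P-256) key pair and return both keys as PEM bytes."""
        self.private_key = ec.generate_private_key(ec.SECP256R1())
        self.public_key = self.private_key.public_key()
        return {
            # Unencrypted PEM for the demo only; protect private keys at rest in production
            "private_key": self.private_key.private_bytes(
                encoding=serialization.Encoding.PEM,
                format=serialization.PrivateFormat.PKCS8,
                encryption_algorithm=serialization.NoEncryption(),
            ),
            "public_key": self.public_key.public_bytes(
                encoding=serialization.Encoding.PEM,
                format=serialization.PublicFormat.SubjectPublicKeyInfo,
            ),
        }

    def sign_data(self, data: bytes) -> str:
        """Sign data with the private key (ECDSA with SHA-256); returns Base64."""
        if not self.private_key:
            raise ValueError("Key pair not initialized")
        signature = self.private_key.sign(data, ec.ECDSA(hashes.SHA256()))
        return base64.b64encode(signature).decode()

    def verify_signature(self, data: bytes, signature: str, public_key_pem: bytes) -> bool:
        """Verify a Base64 ECDSA signature against a PEM public key."""
        try:
            public_key = serialization.load_pem_public_key(public_key_pem)
            public_key.verify(base64.b64decode(signature), data, ec.ECDSA(hashes.SHA256()))
            return True
        except (InvalidSignature, ValueError):
            return False

    def secure_device_communication(self, message: dict, target_public_key: bytes) -> dict:
        """Sign, MAC and encrypt a message for the holder of target_public_key."""
        if not self.private_key:
            raise ValueError("Key pair not initialized")
        message_json = json.dumps(message, sort_keys=True).encode()
        # Digital signature over the plaintext
        signature = self.sign_data(message_json)
        # Message authentication code with the shared HMAC key
        mac = hmac.new(self.hmac_key, message_json, hashlib.sha256).digest()
        # Encrypt (Base64 alone is only encoding): ECDH shared secret -> HKDF -> AES-256-GCM
        peer_key = serialization.load_pem_public_key(target_public_key)
        shared_secret = self.private_key.exchange(ec.ECDH(), peer_key)
        aes_key = HKDF(algorithm=hashes.SHA256(), length=32, salt=None,
                       info=b"iot-payload").derive(shared_secret)
        nonce = os.urandom(12)  # 96-bit nonce; never reuse one with the same key
        ciphertext = AESGCM(aes_key).encrypt(nonce, message_json, None)
        return {
            "payload": base64.b64encode(ciphertext).decode(),
            "nonce": base64.b64encode(nonce).decode(),
            "signature": signature,
            "mac": base64.b64encode(mac).decode(),
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "device_id": self.get_device_id(),
        }

    def get_device_id(self) -> str:
        """Return a hardware-derived unique device identifier."""
        try:
            # MicroPython: chip-level unique ID
            import machine
            import ubinascii
            return ubinascii.hexlify(machine.unique_id()).decode()
        except ImportError:
            # CPython (where `cryptography` runs): fall back to the MAC-based node ID
            return f"{uuid.getnode():012x}"


# Secure communication example
security_mgr = IoTSecurityManager()
keys = security_mgr.generate_key_pair()
message = {
    "sensor_type": "temperature",
    "value": 23.5,
    "unit": "celsius",
    "battery_level": 85,
}
# For the demo the device encrypts to its own public key; normally this is the gateway's key
secure_message = security_mgr.secure_device_communication(message, keys["public_key"])
print("Secure message:", secure_message)
```

4 Edge Intelligence and Federated Learning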

4.1 Distributed Machine Learning Training

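In 2025, Python made significant progress in edge federated learning, which lets multiple edge devices train a model collaboratively without sharing their raw data.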
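The server in the example below combines client updates with federated averaging (FedAvg). Each client k that trained on n_k samples contributes its weight delta Δw_k in proportion to its share of the data: w_new = w + Σ_k (n_k / n) Δw_k, where n = Σ_k n_k. The NumPy sketch below shows only that aggregation step, with the model parameters flattened into a single array for illustration.

```python
import numpy as np


def fedavg(global_weights: np.ndarray, deltas: list, sizes: list) -> np.ndarray:
    """Return global_weights + sum_k (n_k / n) * delta_k."""
    if not deltas:
        return global_weights.copy()
    n = sum(sizes)
    weighted = sum((n_k / n) * d for d, n_k in zip(deltas, sizes))
    return global_weights + weighted


w = np.zeros(2)
# Client A trained on 100 samples, client B on 300: B's delta counts three times as much
print(fedavg(w, [np.array([4.0, 0.0]), np.array([0.0, 4.0])], [100, 300]))  # [1. 3.]
```

With equal data sizes this reduces to a plain mean of the client deltas.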

```python
# Edge federated learning framework (FedAvg-style)
import random
from collections import OrderedDict

import torch
import torch.nn as nn
import torch.optim as optim


class FederatedLearningClient:
    def __init__(self, model: nn.Module, client_id: str):
        self.model = model
        self.client_id = client_id
        self.local_data = []  # local data never leaves the device
        self.optimizer = optim.SGD(self.model.parameters(), lr=0.01)
        self.loss_fn = nn.MSELoss()

    def local_train(self, epochs: int = 1):
        """Train on local data and return the weight delta (never the data itself)."""
        if not self.local_data:
            return None
        initial_weights = self.get_model_weights()  # snapshot before training
        self.model.train()
        for _ in range(epochs):
            for batch in self.local_data:
                self.optimizer.zero_grad()
                output = self.model(batch["features"])
                loss = self.loss_fn(output, batch["labels"])
                loss.backward()
                self.optimizer.step()
        final_weights = self.get_model_weights()
        return {
            "client_id": self.client_id,
            "weight_update": self.compute_weight_update(initial_weights, final_weights),
            "data_size": len(self.local_data),
        }

    def get_model_weights(self) -> OrderedDict:
        """Snapshot the model weights.

        state_dict() returns tensors that share storage with the live parameters,
        so they must be cloned; a plain .copy() would change as training proceeds.
        """
        return OrderedDict((k, v.detach().clone()) for k, v in self.model.state_dict().items())

    def compute_weight_update(self, initial: OrderedDict, final: OrderedDict) -> OrderedDict:
        """Per-tensor difference: final - initial."""
        return OrderedDict((key, final[key] - initial[key]) for key in initial)

    def apply_global_update(self, global_weights: OrderedDict):
        """Load the global model weights."""
        self.model.load_state_dict(global_weights)


class FederatedLearningServer:
    def __init__(self, global_model: nn.Module):
        self.global_model = global_model
        self.global_round = 0

    def aggregate_updates(self, client_updates: list) -> OrderedDict:
        """Add the data-size-weighted average of client deltas to the global weights."""
        current_weights = self.global_model.state_dict()
        if not client_updates:
            return current_weights
        total_data_size = sum(u["data_size"] for u in client_updates)
        new_weights = OrderedDict()
        for key, value in current_weights.items():
            # Assumes floating-point tensors (integer buffers would need special handling)
            avg_delta = sum(
                u["weight_update"][key] * (u["data_size"] / total_data_size)
                for u in client_updates
            )
            new_weights[key] = value + avg_delta
        return new_weights

    def run_federated_round(self, clients: list) -> dict:
        """Run one round of federated learning."""
        self.global_round += 1
        # Select the clients that take part in this round
        selected_clients = self.select_clients(clients, fraction=0.5)
        # Distribute the global model
        global_weights = self.global_model.state_dict()
        for client in selected_clients:
            client.apply_global_update(global_weights)
        # Local training on each selected client
        client_updates = []
        for client in selected_clients:
            update = client.local_train(epochs=2)
            if update is not None:
                client_updates.append(update)
        # Aggregate the updates into the global model
        self.global_model.load_state_dict(self.aggregate_updates(client_updates))
        return {
            "round": self.global_round,
            "participants": len(selected_clients),
            "aggregated_updates": len(client_updates),
        }

    def select_clients(self, clients: list, fraction: float = 0.1) -> list:
        """Randomly sample a fraction of the clients (at least one)."""
        k = max(1, int(len(clients) * fraction))
        return random.sample(clients, k)


# Usage example
class SimpleModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(10, 5)
        self.fc2 = nn.Linear(5, 1)

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        return self.fc2(x)


# Build the federated learning system
global_model = SimpleModel()
server = FederatedLearningServer(global_model)

# Create several clients
clients = []
for i in range(10):
    client = FederatedLearningClient(SimpleModel(), f"client_{i}")
    # Simulated local data (in practice each client has its own data)
    client.local_data = [
        {"features": torch.randn(10), "labels": torch.randn(1)} for _ in range(100)
    ]
    clients.append(client)

# Run several rounds of federated learning
for _ in range(5):
    result = server.run_federated_round(clients)
    print(f"Round {result['round']} done, participating clients: {result['participants']}")
```

5 Future Trends and Directions

5.1 Digital Twins and Virtualization

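Python plays a key role in digital twin technology: by creating virtual replicas of physical assets, it enables predictive maintenance and operational optimization.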
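As a deliberately simplified illustration of the predictive-maintenance idea, a digital twin can mirror a machine's telemetry and extrapolate a wear indicator (for example, vibration) to estimate when it will cross a maintenance threshold. The class below is a hypothetical sketch that fits an ordinary least-squares line. Production twins typically rely on physics-based or learned models instead.

```python
from typing import List, Optional, Tuple


class MachineTwin:
    """Hypothetical digital twin: mirrors telemetry and projects a threshold crossing."""

    def __init__(self, machine_id: str, threshold: float):
        self.machine_id = machine_id
        self.threshold = threshold
        self.history: List[Tuple[float, float]] = []  # (time, metric) samples
        self.state: dict = {}

    def ingest(self, t: float, metric: float, **extra) -> None:
        """Update the virtual replica with a new telemetry sample."""
        self.history.append((t, metric))
        self.state = {"t": t, "metric": metric, **extra}

    def time_to_threshold(self) -> Optional[float]:
        """Fit metric = a + b*t by least squares; return time left until the threshold."""
        if len(self.history) < 2:
            return None
        ts = [t for t, _ in self.history]
        ys = [y for _, y in self.history]
        t_mean, y_mean = sum(ts) / len(ts), sum(ys) / len(ys)
        var_t = sum((t - t_mean) ** 2 for t in ts)
        if var_t == 0:
            return None
        slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys)) / var_t
        if slope <= 0:
            return None  # metric not increasing: no crossing predicted
        intercept = y_mean - slope * t_mean
        t_cross = (self.threshold - intercept) / slope
        return max(0.0, t_cross - ts[-1])


twin = MachineTwin("pump_7", threshold=5.0)
for hour in range(5):
    twin.ingest(hour, 1.0 + 0.5 * hour, temperature=40 + hour)
print(twin.time_to_threshold())  # 4.0 (hours until the metric reaches 5.0)
```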

5.2 Edge-Native Application Architecture

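Edge-native is emerging as a new architectural paradigm. Python applications must adapt to the particular demands of edge environments, including resuming data transfer after network outages, resource optimization, and distributed coordination.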
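Resuming transfer after an outage is commonly handled with store-and-forward: readings that cannot be sent are buffered locally and flushed in order once the link returns. Below is a minimal sketch, assuming a hypothetical `transport` callable that raises `ConnectionError` on failure. The bounded deque drops the oldest readings if an outage outlasts the buffer, which trades completeness for bounded memory on small devices.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer messages while offline and resend them in order when the link recovers."""

    def __init__(self, transport: Callable[[dict], None], max_buffer: int = 1000):
        self.transport = transport
        self.buffer: Deque[dict] = deque(maxlen=max_buffer)  # oldest dropped when full

    def send(self, message: dict) -> None:
        self.buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Send buffered messages oldest-first, stopping at the first failure; return count sent."""
        sent = 0
        while self.buffer:
            try:
                self.transport(self.buffer[0])
            except ConnectionError:
                break  # still offline: keep the rest for the next attempt
            self.buffer.popleft()  # remove only after a successful send
            sent += 1
        return sent


delivered, online = [], False


def fake_transport(msg: dict) -> None:
    if not online:
        raise ConnectionError("link down")
    delivered.append(msg)


link = StoreAndForward(fake_transport, max_buffer=3)
for i in range(4):
    link.send({"seq": i})  # offline: buffered; seq 0 is dropped when seq 3 arrives
online = True
link.flush()
print([m["seq"] for m in delivered])  # [1, 2, 3]
```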

5.3 Green IoT and Sustainability

Python is increasingly important in sustainable IoT, where intelligent algorithms optimize energy use and reduce carbon footprint.
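One common energy-saving technique on battery-powered nodes is adaptive sampling. The device wakes up and transmits less often while readings are stable, and more often when they change. Below is a minimal sketch of such a policy; the change threshold, the interval bounds, and the halve/double rule are illustrative assumptions, not tuned values.

```python
def next_interval(current_s: float, last_value: float, new_value: float,
                  change_threshold: float = 0.5, min_s: float = 10.0,
                  max_s: float = 600.0) -> float:
    """Halve the sampling interval on a significant change, otherwise double it (within bounds)."""
    if abs(new_value - last_value) >= change_threshold:
        return max(min_s, current_s / 2)
    return min(max_s, current_s * 2)


interval = 60.0
interval = next_interval(interval, 21.0, 21.1)  # stable reading: back off to 120 s
interval = next_interval(interval, 21.1, 23.0)  # significant change: speed up to 60 s
print(interval)  # 60.0
```

Fewer wake-ups mean fewer radio transmissions, which often dominate a wireless sensor node's energy budget.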

Conclusion: Python's Strategic Value in the Intelligent Edge Era

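By 2025, Python's position in IoT and edge computing has become increasingly solid. Its concise syntax, rich ecosystem, and strong community support make it an ideal bridge between the physical and digital worlds.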

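For developers and businesses, mastering Python for IoT and edge computing means:

- Rapid prototyping: quickly validating IoT ideas and business models
- Lower technical barriers: building end-to-end solutions on a single technology stack
- Access to AI: easily integrating machine learning models into edge devices
- System security: building secure, reliable IoT infrastructure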

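As 5G/6G networks spread and edge computing infrastructure matures, the outlook for Python in IoT grows broader still. By embracing this trend, developers can get ahead in the intelligent era and build truly smart, connected systems.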

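Recommended next steps:

- Learn MicroPython: master the basics of embedded Python development
- Practice edge AI: deploy machine learning models on resource-constrained devices
- Focus on security: learn IoT security best practices
- Explore new hardware: try the latest IoT development boards and sensors
- Join open-source projects: contribute code and help the ecosystem grow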

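Python's journey in IoT and edge computing has only just begun. As the technology continues to evolve, this field will open up enormous possibilities for Python developers.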
