Deploying the Istio Dashboards

This post builds on the Bookinfo demo from the previous post.

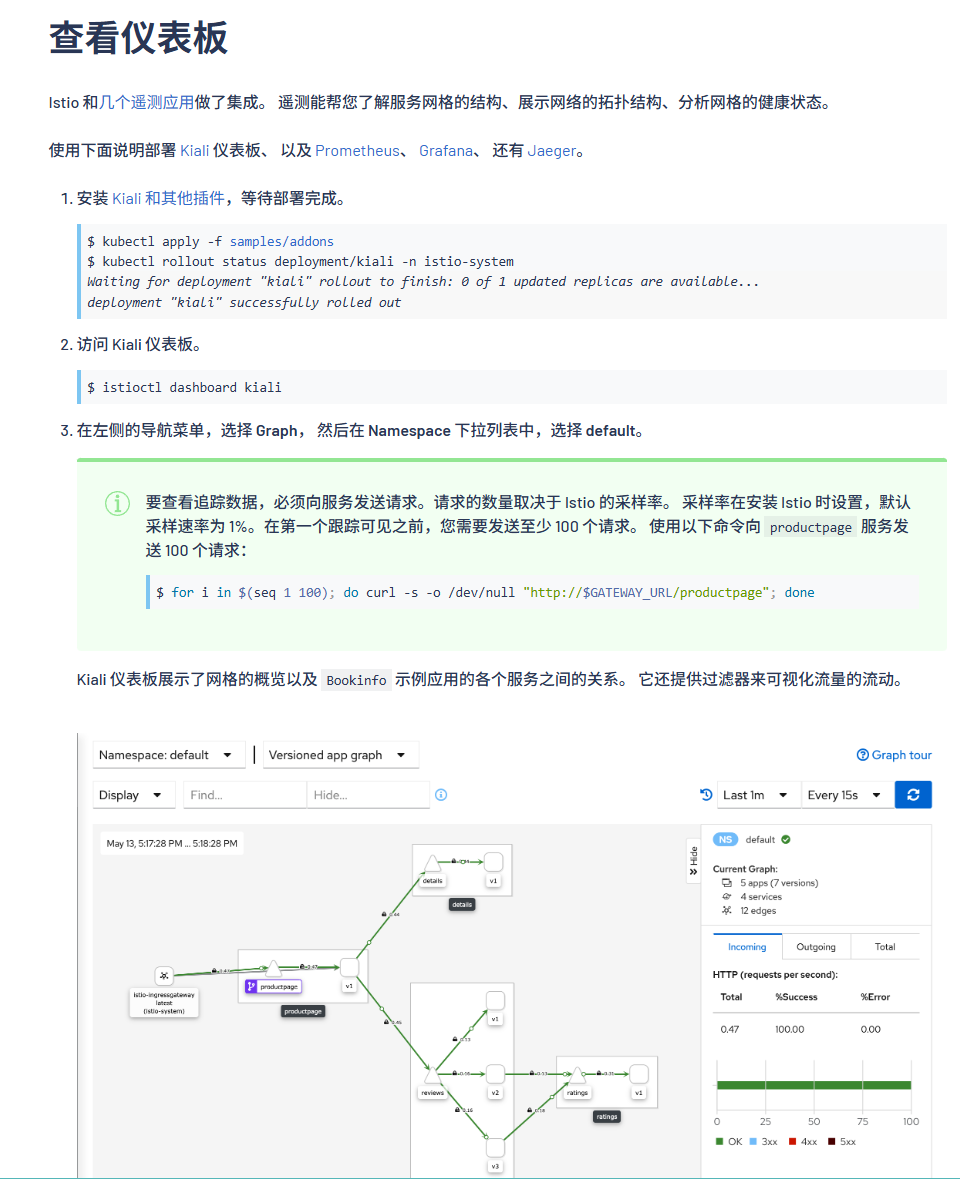

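The screenshot above is from the official Istio documentation. The whole thing comes down to a single command, kubectl apply -f samples/addons, which deploys every manifest in the addons directory in one go. Put simply, the addons directory holds the manifests for all the dashboard components. If the network were not an issue, 99% of the trouble would disappear: you run the command and the deployment succeeds. With the network conditions in mainland China, though, you end up looking up and changing quite a few things.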
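The one-command deployment can be wrapped in a small helper that also waits for each addon to come up. This is only a sketch: it assumes the current directory is the root of the unpacked Istio release, that the addons land in the istio-system namespace, and that Grafana, Jaeger, Kiali and Prometheus are Deployments while Loki is a StatefulSet. KUBECTL and deploy_addons are names made up for this example.

```shell
#!/usr/bin/env bash
# Sketch only. KUBECTL and deploy_addons are illustrative names, not part of Istio.
KUBECTL="${KUBECTL:-kubectl}"

deploy_addons() {
  # Apply every manifest under samples/addons in one go (run from the release root).
  "$KUBECTL" apply -f samples/addons || return 1

  # Assumed to be Deployments: wait for each rollout to finish.
  for name in grafana jaeger kiali prometheus; do
    "$KUBECTL" -n istio-system rollout status "deployment/$name" --timeout=300s || return 1
  done

  # Loki is assumed to run as a StatefulSet (its pod is loki-0).
  "$KUBECTL" -n istio-system rollout status statefulset/loki --timeout=300s
}

# Usage: cd /root/istio-1.24.0 && deploy_addons
```

If a rollout times out, that addon is the one to investigate first; an unreachable image registry is a likely cause on a restricted network.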

[root@master addons]# pwd

/root/istio-1.24.0/samples/addons

[root@master addons]# ll

total 308

drwxr-xr-x 2 root root 80 Nov 6 2024 extras

-rw-r--r-- 1 root root 236121 Sep 17 22:17 grafana.yaml

-rw-r--r-- 1 root root 2644 Sep 17 22:18 jaeger.yaml

-rw-r--r-- 1 root root 9703 Sep 17 22:19 kiali.yaml

-rw-r--r-- 1 root root 516 Sep 17 23:16 loki-pv.yaml

-rw-r--r-- 1 root root 163 Sep 17 23:08 loki-storageclass.yaml

-rw-r--r-- 1 root root 10069 Sep 17 23:09 loki.yaml

-rw-r--r-- 1 root root 10051 Sep 17 22:37 loki.yaml.bak

-rw-r--r-- 1 root root 17174 Sep 17 22:57 prometheus.yaml

-rw-r--r-- 1 root root 5441 Nov 6 2024 README.md

[root@master addons]#

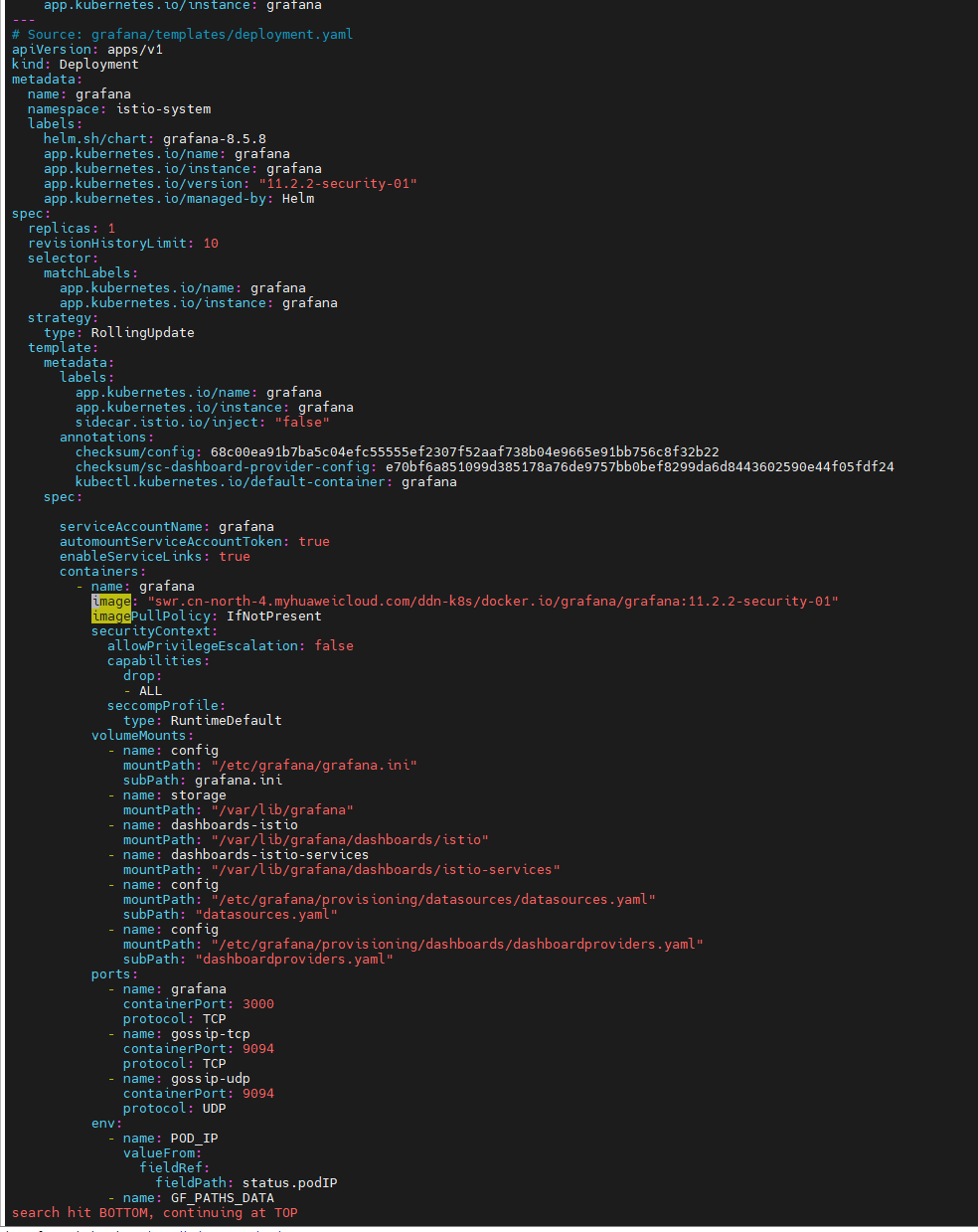

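As you can see, the directory contains the YAML manifests for these components. A few of the files I added or modified myself: loki-pv.yaml and loki-storageclass.yaml are there because my cluster has no cloud storage with dynamic provisioning, so I use a local PV instead. In addition, the manifests of several components need their image addresses changed. Take grafana as an example; the others are handled the same way.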
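Both changes can be sketched in shell. The first function rewrites docker.io/ image references to a mirror, and the second prints a StorageClass plus a local PersistentVolume. Everything specific here is an assumption for illustration: mirror.example.com is a placeholder rather than a real registry, and the names local-storage and loki-pv, the 10Gi size, the /data/loki path and the node1 pin are guesses, not necessarily what the loki-pv.yaml and loki-storageclass.yaml files listed above contain.

```shell
#!/usr/bin/env bash
# MIRROR is a placeholder, not a real registry: substitute one you can actually reach.
MIRROR="${MIRROR:-mirror.example.com}"

# Read a manifest on stdin and rewrite "image: docker.io/..." (quoted or not).
# Images without an explicit docker.io/ prefix are left untouched.
rewrite_images() {
  sed -E "s#(image:[[:space:]]*\"?)docker\.io/#\1${MIRROR}/#"
}

# Print a no-provisioner StorageClass and a local PV pinned to one node.
# $1 = directory on the node (assumed default /data/loki)
# $2 = node hostname       (assumed default node1)
local_storage_manifests() {
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: loki-pv
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteOnce"]
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: ${1:-/data/loki}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values: ["${2:-node1}"]
EOF
}

# Usage:
#   grep -n 'image:' grafana.yaml                     # see what needs changing
#   rewrite_images < grafana.yaml > grafana.mirrored.yaml
#   local_storage_manifests /data/loki node1 | kubectl apply -f -
```

A local PV only binds if the directory already exists on the chosen node and the claim asks for the same storage class and no more than the PV's capacity, so the Loki volume claim has to be adjusted to match.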

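After changing the images, deploy directly and then analyze whatever happens. If it succeeds, great. If it fails, that is fine too, since it is a chance to practice troubleshooting. The routine is always the same: check the logs, read the error, locate the cause, search for a fix, change something, and retry, over and over. There is one big prerequisite, though: you need a rough understanding of how the whole thing fits together, otherwise you will have no idea where to start. Adaptability and good use of tools both matter a lot. I mostly searched with AI, which made searching far more efficient, but it can only serve as a reference; you still need your own judgment and analysis.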
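The "check the logs, read the error" part of that routine usually starts with the same few kubectl commands. A minimal sketch, where diagnose_pod and KUBECTL are hypothetical helper names introduced only for this example:

```shell
#!/usr/bin/env bash
# Sketch only. diagnose_pod is an illustrative helper, not a kubectl feature.
KUBECTL="${KUBECTL:-kubectl}"

# Collect the usual first-look evidence for one pod.
diagnose_pod() {  # usage: diagnose_pod <namespace> <pod>
  ns="$1"; pod="$2"
  # Status, restart count and node placement.
  "$KUBECTL" -n "$ns" get pod "$pod" -o wide
  # The Events section at the end of describe shows image pull and scheduling errors.
  "$KUBECTL" -n "$ns" describe pod "$pod"
  # Last 50 log lines from every container in the pod.
  "$KUBECTL" -n "$ns" logs "$pod" --all-containers --tail=50
  # Recent events in the namespace, oldest first.
  "$KUBECTL" -n "$ns" get events --sort-by=.lastTimestamp
}

# Usage: diagnose_pod istio-system loki-0
```

The output still has to be read with an idea of how the pieces fit together; the helper only saves typing.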

After a successful deployment, the cluster looks roughly like this:

[root@master addons]# kubectl get nodes -A -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane 29d v1.28.15 192.168.124.128 <none> Rocky Linux 9.6 (Blue Onyx) 5.14.0-570.17.1.el9_6.x86_64 containerd://1.7.27

node1 Ready <none> 29d v1.28.15 192.168.124.129 <none> Rocky Linux 9.6 (Blue Onyx) 5.14.0-570.17.1.el9_6.x86_64 containerd://1.7.27

node2 Ready <none> 29d v1.28.15 192.168.124.130 <none> Rocky Linux 9.6 (Blue Onyx) 5.14.0-570.17.1.el9_6.x86_64 containerd://1.7.27

[root@master addons]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

bookinfo details-v1-5fbd8f9745-hqbrq 2/2 Running 2 (71m ago) 46h 10.244.1.3 node1 <none> <none>

bookinfo productpage-v1-77b784c466-w6tww 2/2 Running 2 (71m ago) 46h 10.244.2.4 node2 <none> <none>

bookinfo ratings-v1-548854d8d9-599pm 2/2 Running 2 (71m ago) 46h 10.244.1.5 node1 <none> <none>

bookinfo reviews-v1-7c885c9549-z8brt 2/2 Running 2 (71m ago) 46h 10.244.1.9 node1 <none> <none>

bookinfo reviews-v2-85d44c548c-zhjnr 2/2 Running 2 (71m ago) 46h 10.244.1.4 node1 <none> <none>

bookinfo reviews-v3-64c75fff5c-z5xq7 2/2 Running 2 (71m ago) 46h 10.244.1.7 node1 <none> <none>

ingress-nginx nginx-ingress-controller-64cf48c6f4-km8f6 1/1 Running 3 (71m ago) 21d 10.244.1.6 node1 <none> <none>

istio-system grafana-5b7cf9ffcc-bvjwc 1/1 Running 1 (71m ago) 45h 10.244.1.2 node1 <none> <none>

istio-system istio-ingressgateway-6b85fdb7f5-b868x 1/1 Running 2 (71m ago) 2d22h 10.244.1.10 node1 <none> <none>

istio-system istiod-b5c779cd7-lg9sg 1/1 Running 2 (71m ago) 2d22h 10.244.2.6 node2 <none> <none>

istio-system jaeger-54dbf9f77f-rsfg5 1/1 Running 1 (71m ago) 45h 10.244.2.7 node2 <none> <none>

istio-system kiali-757c9ddc7c-vzjpl 1/1 Running 0 24m 10.244.1.12 node1 <none> <none>

istio-system loki-0 2/2 Running 2 (71m ago) 45h 10.244.1.8 node1 <none> <none>

istio-system prometheus-8b88c684f-sngkd 2/2 Running 2 (71m ago) 45h 10.244.2.2 node2 <none> <none>

kube-flannel kube-flannel-ds-4gpsd 1/1 Running 4 (71m ago) 29d 192.168.124.128 master <none> <none>

kube-flannel kube-flannel-ds-wlk7w 1/1 Running 4 (71m ago) 29d 192.168.124.129 node1 <none> <none>

kube-flannel kube-flannel-ds-zhppg 1/1 Running 4 (71m ago) 29d 192.168.124.130 node2 <none> <none>

kube-system coredns-66f779496c-stjwc 1/1 Running 0 69m 10.244.0.2 master <none> <none>

kube-system coredns-66f779496c-wcmm8 1/1 Running 0 69m 10.244.2.8 node2 <none> <none>

kube-system etcd-master 1/1 Running 4 (71m ago) 29d 192.168.124.128 master <none> <none>

kube-system kube-apiserver-master 1/1 Running 4 (71m ago) 29d 192.168.124.128 master <none> <none>

kube-system kube-controller-manager-master 1/1 Running 4 (71m ago) 29d 192.168.124.128 master <none> <none>

kube-system kube-proxy-29x8n 1/1 Running 4 (71m ago) 29d 192.168.124.128 master <none> <none>

kube-system kube-proxy-nbp9w 1/1 Running 4 (71m ago) 29d 192.168.124.129 node1 <none> <none>

kube-system kube-proxy-vg64b 1/1 Running 4 (71m ago) 29d 192.168.124.130 node2 <none> <none>

kube-system kube-scheduler-master 1/1 Running 4 (71m ago) 29d 192.168.124.128 master <none> <none>

[root@master addons]# kubectl get svc -A -o wide

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

bookinfo details ClusterIP 10.107.201.216 <none> 9080/TCP 46h app=details

bookinfo productpage ClusterIP 10.98.212.205 <none> 9080/TCP 46h app=productpage

bookinfo ratings ClusterIP 10.108.38.178 <none> 9080/TCP 46h app=ratings

bookinfo reviews ClusterIP 10.109.105.29 <none> 9080/TCP 46h app=reviews

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 29d <none>

ingress-nginx nginx-ingress-controller NodePort 10.103.226.59 <none> 80:30080/TCP,443:30443/TCP 22d app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx

istio-system grafana ClusterIP 10.110.90.196 <none> 3000/TCP 45h app.kubernetes.io/instance=grafana,app.kubernetes.io/name=grafana

istio-system istio-ingressgateway NodePort 10.100.70.172 <none> 15021:30646/TCP,80:30588/TCP,443:30738/TCP 2d22h app=istio-ingressgateway,istio=ingressgateway

istio-system istiod ClusterIP 10.101.172.91 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 2d22h app=istiod,istio=pilot

istio-system jaeger-collector ClusterIP 10.102.21.130 <none> 14268/TCP,14250/TCP,9411/TCP,4317/TCP,4318/TCP 45h app=jaeger

istio-system kiali NodePort 10.105.163.32 <none> 20001:30991/TCP,9090:31511/TCP 45h app.kubernetes.io/instance=kiali,app.kubernetes.io/name=kiali

istio-system loki ClusterIP 10.104.75.37 <none> 3100/TCP,9095/TCP 45h app.kubernetes.io/component=single-binary,app.kubernetes.io/instance=loki,app.kubernetes.io/name=loki

istio-system loki-headless ClusterIP None <none> 3100/TCP 45h app.kubernetes.io/instance=loki,app.kubernetes.io/name=loki

istio-system loki-memberlist ClusterIP None <none> 7946/TCP 45h app.kubernetes.io/instance=loki,app.kubernetes.io/name=loki,app.kubernetes.io/part-of=memberlist

istio-system prometheus ClusterIP 10.111.19.131 <none> 9090/TCP 45h app.kubernetes.io/component=server,app.kubernetes.io/instance=prometheus,app.kubernetes.io/name=prometheus

istio-system tracing ClusterIP 10.106.121.205 <none> 80/TCP,16685/TCP 45h app=jaeger

istio-system zipkin ClusterIP 10.103.67.206 <none> 9411/TCP 45h app=jaeger

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 29d k8s-app=kube-dns

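For a beginner, seeing every pod in Running state brings a joy that is hard to put into words, haha. There is actually one problem in all this that I still have not solved: every time I shut the computer down and boot it again, loki-0 comes up as 1/2 Running. The logs say that loki-memberlist cannot be resolved via DNS, yet my DNS and endpoints are fine and resolving the name manually works. I guessed that some data had not been refreshed, so I deleted the CoreDNS pods and let them be recreated, and that fixed it. I will look into it further later.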
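The manual check and the workaround for the Loki DNS problem can be written down as three small helpers. This is a sketch under assumptions: the cluster uses the default cluster.local domain, the CoreDNS pods carry the k8s-app=kube-dns label (the selector shown for the kube-dns Service above), and busybox:1.36 is just an example image for a throwaway pod. All function names are invented for this example.

```shell
#!/usr/bin/env bash
# Sketch only. Helper names are illustrative.
KUBECTL="${KUBECTL:-kubectl}"

# Build the in-cluster DNS name of a Service (assumes the default cluster.local domain).
svc_fqdn() {  # usage: svc_fqdn <service> <namespace>
  printf '%s.%s.svc.cluster.local\n' "$1" "$2"
}

# Resolve a name from inside the cluster using a throwaway pod.
# busybox:1.36 is an assumed image; swap in one your nodes can pull.
check_dns() {  # usage: check_dns <fqdn>
  "$KUBECTL" run dns-check --rm -i --restart=Never --image=busybox:1.36 -- nslookup "$1"
}

# The workaround: delete the CoreDNS pods so that they get recreated.
restart_coredns() {
  "$KUBECTL" -n kube-system delete pod -l k8s-app=kube-dns
}

# Usage:
#   check_dns "$(svc_fqdn loki-memberlist istio-system)"
#   restart_coredns
```

This reproduces the symptom and the fix but does not explain the root cause, which remains open.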

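Below is a summary of the current cluster state that I had an AI put together for me.

Kubernetes Cluster Environment Analysis Report

📊 Cluster Overview

Your Kubernetes cluster contains 3 nodes and is running well:

| Node | Role | Internal IP | Status | Container runtime |
|---|---|---|---|---|
| master | control-plane | 192.168.124.128 | Ready | containerd |
| node1 | worker | 192.168.124.129 | Ready | containerd |
| node2 | worker | 192.168.124.130 | Ready | containerd |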

🔧 Service Mesh Components (Istio)

1. Control plane

- istiod-b5c779cd7-lg9sg (running on node2)
- Service: istiod (ClusterIP: 10.101.172.91), ports 15010, 15012, 443, 15014/TCP

2. Ingress gateway

- istio-ingressgateway-6b85fdb7f5-b868x (running on node1)
- Service: istio-ingressgateway (NodePort), key port mappings:
  - 80:30588/TCP: HTTP traffic entry
  - 443:30738/TCP: HTTPS traffic entry
  - 15021:30646/TCP: health check

📈 Observability Tools

1. Prometheus (metrics monitoring)

- prometheus-8b88c684f-sngkd (running on node2)
- Service: prometheus (ClusterIP: 10.111.19.131), port 9090/TCP

2. Grafana (data visualization)

- grafana-5b7cf9ffcc-bvjwc (running on node1)
- Service: grafana (ClusterIP: 10.110.90.196), port 3000/TCP

3. Kiali (service mesh visualization)

- kiali-757c9ddc7c-vzjpl (running on node1)
- Service: kiali (NodePort), port mappings:
  - 20001:30991/TCP: main UI
  - 9090:31511/TCP: metrics endpoint

4. Jaeger (distributed tracing)

- jaeger-54dbf9f77f-rsfg5 (running on node2)
- Services:
  - jaeger-collector (ClusterIP: 10.102.21.130)
  - tracing (ClusterIP: 10.106.121.205)
  - zipkin (ClusterIP: 10.103.67.206)

5. Loki (log aggregation)

- loki-0 (running on node1)
- Services:
  - loki (ClusterIP: 10.104.75.37): main service
  - loki-headless (Headless): cluster discovery
  - loki-memberlist (Headless): cluster state synchronization
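Grafana and Prometheus are exposed only as ClusterIP Services here, so they are not reachable from a browser outside the cluster the way Kiali's NodePort is. One common option is kubectl port-forward; the sketch below assumes that approach, and forward_dashboard is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch only. forward_dashboard is an illustrative helper name.
KUBECTL="${KUBECTL:-kubectl}"

# Forward a port on this machine to a Service in istio-system.
# The command blocks until interrupted with Ctrl-C.
# --address 0.0.0.0 listens on all interfaces so a browser on another
# machine can connect; drop it to listen on localhost only.
forward_dashboard() {  # usage: forward_dashboard <service> <port>
  "$KUBECTL" -n istio-system port-forward --address 0.0.0.0 "svc/$1" "$2:$2"
}

# Usage:
#   forward_dashboard grafana 3000      # then open http://<this-host>:3000
#   forward_dashboard prometheus 9090   # then open http://<this-host>:9090
```

Listening on all interfaces is convenient on a lab VM but exposes the dashboard to the whole network, so it is a choice to make deliberately.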

📚 Bookinfo Sample Application

Service deployment

All services are deployed in the bookinfo namespace:

| Service | Pod name | Node | Service IP |
|---|---|---|---|
| productpage | productpage-v1-77b784c466-w6tww | node2 | 10.98.212.205 |
| details | details-v1-5fbd8f9745-hqbrq | node1 | 10.107.201.216 |
| ratings | ratings-v1-548854d8d9-599pm | node1 | 10.108.38.178 |
| reviews v1 | reviews-v1-7c885c9549-z8brt | node1 | 10.109.105.29 |
| reviews v2 | reviews-v2-85d44c548c-zhjnr | node1 | 10.109.105.29 |
| reviews v3 | reviews-v3-64c75fff5c-z5xq7 | node1 | 10.109.105.29 |

🌐 Network Access

Application entry points

- Bookinfo application: http://192.168.124.128:30588/productpage
- Kiali console: http://192.168.124.128:30991
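NodePorts are assigned per cluster, so the numbers in these URLs will differ elsewhere. A small sketch of how the URLs could be derived instead of hard-coded; node_port and app_url are hypothetical helper names, and the node IP is passed in by hand.

```shell
#!/usr/bin/env bash
# Sketch only. node_port and app_url are illustrative helper names.
KUBECTL="${KUBECTL:-kubectl}"

# Print the NodePort that a Service maps to a given service port.
node_port() {  # usage: node_port <namespace> <service> <port>
  "$KUBECTL" -n "$1" get svc "$2" -o "jsonpath={.spec.ports[?(@.port==$3)].nodePort}"
}

# Compose an HTTP URL from a node IP, a port and an optional path.
app_url() {  # usage: app_url <node-ip> <port> [path]
  printf 'http://%s:%s%s\n' "$1" "$2" "${3:-}"
}

# Usage:
#   app_url 192.168.124.128 "$(node_port istio-system istio-ingressgateway 80)" /productpage
#   app_url 192.168.124.128 "$(node_port istio-system kiali 20001)"
```

Any node's IP works with a NodePort under the default traffic policy, so the master address used here is just one choice.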

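🔍 Environment Health

Judging from the output, your environment is in good shape:

- All pods are in the Running state
- The service mesh components are fully deployed
- The observability tools are all in place
- The Bookinfo sample application is running normally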

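💡 Suggestions and Next Steps

- Verify the service mesh by sending test traffic:

  for i in {1..100}; do curl -s http://192.168.124.128:30588/productpage > /dev/null; done

- Check the monitoring data by querying the request metric inside the Prometheus pod:

  kubectl exec -n istio-system prometheus-8b88c684f-sngkd -c prometheus-server -- wget -qO- "http://localhost:9090/api/v1/query?query=sum(istio_requests_total{destination_service=~\"productpage.*\"})"

- Open the Kiali console: access Kiali through NodePort 30991 to view the service mesh topology and traffic metrics.

This environment has the Istio service mesh and its full set of observability tools deployed, and can be used directly for learning and testing the various service mesh features.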

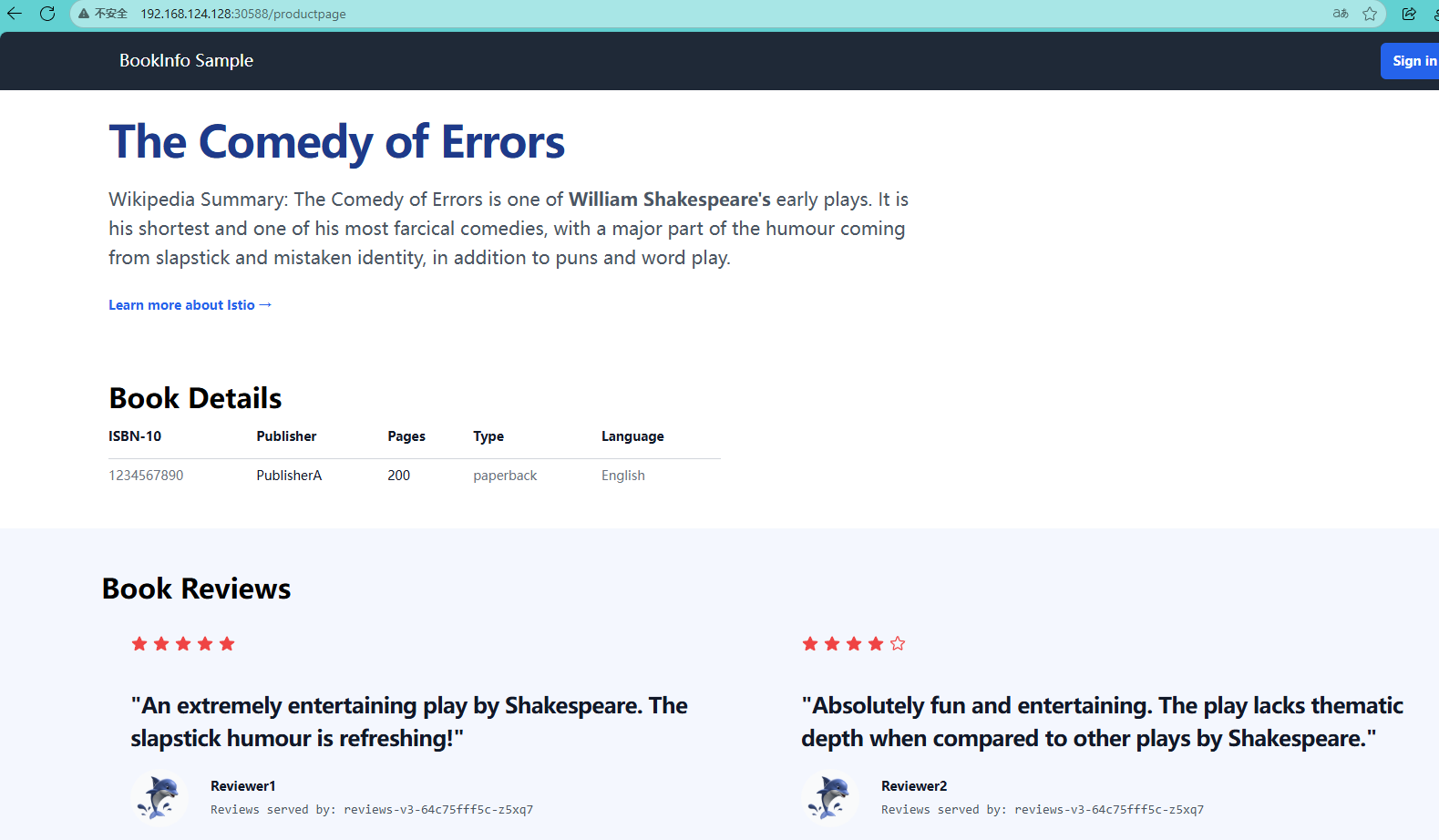

Below are examples of access from the browser.

Accessing the Bookinfo sample application through the NodePort of istio-ingressgateway:

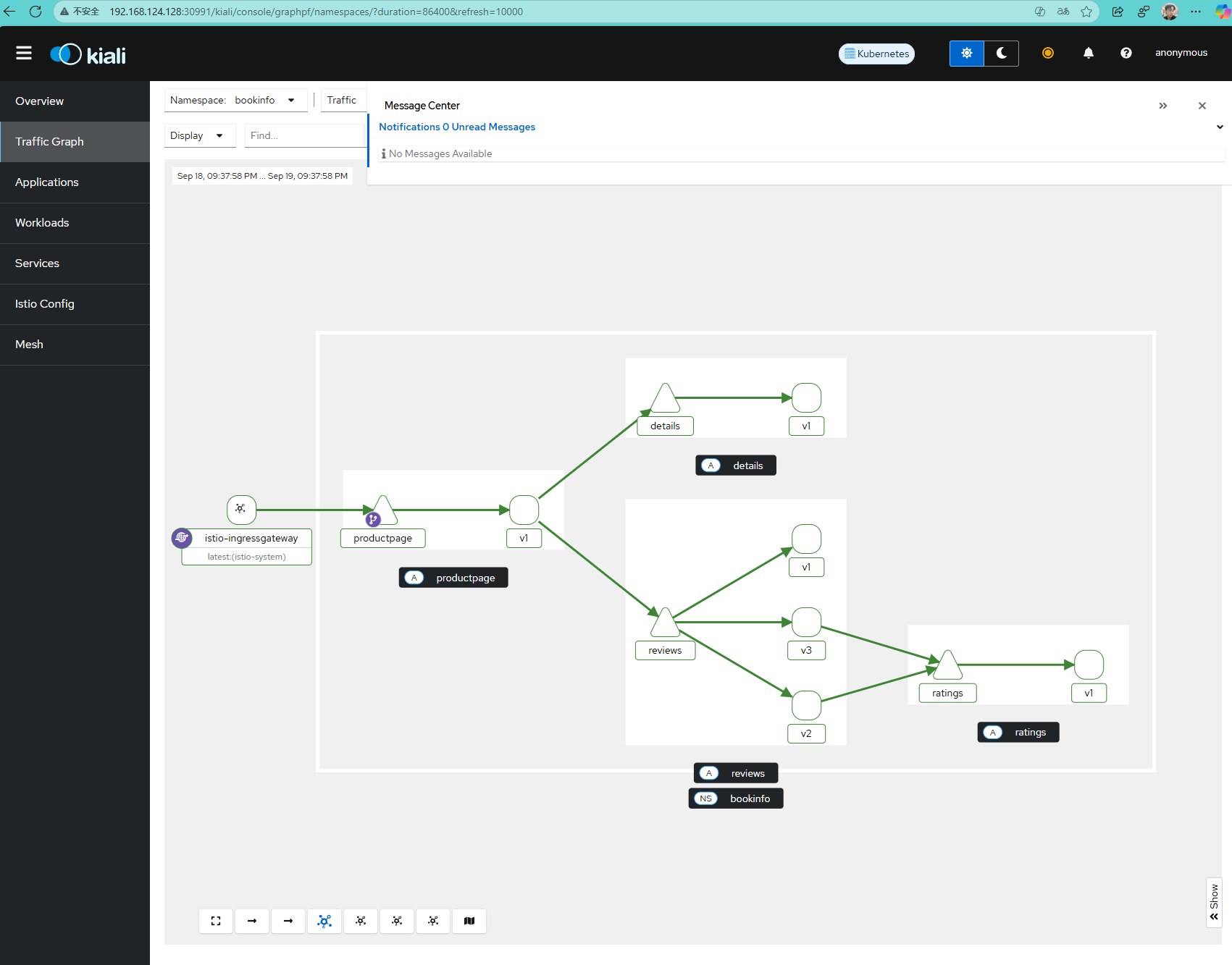

The Kiali dashboard

Below, the request count is verified by querying from inside the Prometheus container:

[root@master addons]# kubectl exec -n istio-system prometheus-8b88c684f-sngkd -c prometheus-server -- wget -qO- "http://localhost:9090/api/v1/query?query=sum(istio_requests_total{destination_service=~\"productpage.*\"})"

{"status":"success","data":{"resultType":"vector","result":[{"metric":{},"value":[1758289329.024,"1842"]}]}}

[root@master addons]# for i in {1..100}; do curl -s http://192.168.124.128:30588/productpage > /dev/null; done

[root@master addons]# kubectl exec -n istio-system prometheus-8b88c684f-sngkd -c prometheus-server -- wget -qO- "http://localhost:9090/api/v1/query?query=sum(istio_requests_total{destination_service=~\"productpage.*\"})"

{"status":"success","data":{"resultType":"vector","result":[{"metric":{},"value":[1758289340.807,"1842"]}]}}

[root@master addons]# kubectl exec -n istio-system prometheus-8b88c684f-sngkd -c prometheus-server -- wget -qO- "http://localhost:9090/api/v1/query?query=sum(istio_requests_total{destination_service=~\"productpage.*\"})"

{"status":"success","data":{"resultType":"vector","result":[{"metric":{},"value":[1758289343.634,"1942"]}]}}

[root@master addons]#

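The cumulative count was 1842 requests before the test. The first query right after the loop still returned 1842, presumably because Prometheus had not yet scraped the new samples; a few seconds later the count was 1942, exactly 100 more.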
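The before-and-after comparison can be scripted so the difference is computed rather than read off by eye. This is a sketch: prom_value, request_delta and query_requests are hypothetical helper names, the sed pattern only handles an instant-query response with a single result like the ones shown above, and addressing the pod as deploy/prometheus assumes the Deployment is named prometheus.

```shell
#!/usr/bin/env bash
# Sketch only. Helper names are illustrative.
KUBECTL="${KUBECTL:-kubectl}"

# Run the same instant query as above from inside the Prometheus container.
query_requests() {
  "$KUBECTL" -n istio-system exec deploy/prometheus -c prometheus-server -- \
    wget -qO- 'http://localhost:9090/api/v1/query?query=sum(istio_requests_total{destination_service=~"productpage.*"})'
}

# Read a query response on stdin and print the sample value.
# Assumes exactly one result of the form "value":[<timestamp>,"<number>"].
prom_value() {
  sed -n 's/.*"value":\[[0-9.]*,"\([0-9.]*\)"].*/\1/p'
}

# Print how much the counter grew between two readings.
request_delta() {  # usage: request_delta <before> <after>
  awk -v a="$1" -v b="$2" 'BEGIN { print b - a }'
}

# Usage:
#   before=$(query_requests | prom_value)
#   for i in {1..100}; do curl -s http://192.168.124.128:30588/productpage > /dev/null; done
#   sleep 30   # give Prometheus time to scrape; the right wait depends on its scrape interval
#   after=$(query_requests | prom_value)
#   request_delta "$before" "$after"
```

Fed the two responses from the transcript, prom_value yields 1842 and 1942, and request_delta prints 100.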

To be continued.
