RAID Disk Arrays


RAID (Redundant Array of Independent Disks)

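RAID level 0: N storage devices of equal size. Data is split into N parts and each device stores 1/N. Read and write speed improves, but there is no redundancy: losing any one device loses the whole array.

RAID level 1: N devices of equal size, each holding a full copy of the same data. Data safety improves at the cost of storage space (usable capacity equals one device).

RAID level 5: at least 3 devices. Data is striped together with parity, and the parity is distributed across all members, so the equivalent of one device is spent on parity.

One device can fail without data loss. Writes are slower because parity must be updated, space utilization is high ((N-1)/N), and data safety improves to a degree.

RAID level 10 (1+0): at least four devices

RAID level 50 (5+0): at least six devices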
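
The capacity rules for these levels can be sketched as a small shell function. This is only an illustration: the function name `usable_kib` is hypothetical, and it ignores the small per-member metadata overhead that real md arrays have.

```shell
#!/bin/sh
# usable_kib LEVEL N SIZE_KIB
# Print the usable capacity in KiB of an array built from N members
# of SIZE_KIB each. Metadata overhead is ignored (simplifying assumption).
usable_kib() {
    level=$1; n=$2; size=$3
    case "$level" in
        0)  echo $(( n * size )) ;;           # striping: all space is usable
        1)  echo "$size" ;;                   # mirroring: one copy's worth
        5)  [ "$n" -ge 3 ] || { echo "RAID 5 needs at least 3 members" >&2; return 1; }
            echo $(( (n - 1) * size )) ;;     # one member's worth goes to parity
        10) [ "$n" -ge 4 ] && [ $(( n % 2 )) -eq 0 ] || { echo "RAID 10 here needs an even count of at least 4" >&2; return 1; }
            echo $(( n / 2 * size )) ;;       # striped two-way mirrors
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

usable_kib 0 2 1046528    # two-member RAID 0
usable_kib 1 2 1046528    # two-member RAID 1
usable_kib 5 3 1046528    # three-member RAID 5
```

With the 1046528 KiB member size that mdadm reports later in this walkthrough, the RAID 0 and RAID 5 cases both give 2093056 KiB and the RAID 1 case gives 1046528 KiB, which matches the array sizes shown by `mdadm -D` and `lsblk`.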

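Implementation:

Add a new 20 GB disk and create partitions on it to simulate separate disks, each 1 GB in size. (This is for practice only: partitions of a single physical disk give no real redundancy or speed-up.)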

I. Create RAID 0

1. Use the mklabel subcommand of parted to give the disk a GPT label

[root@stw2 ~]# parted /dev/sdb

GNU Parted 3.1

Using /dev/sdb

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) p

Error: /dev/sdb: unrecognised disk label

Model: VMware, VMware Virtual S (scsi)

Disk /dev/sdb: 21.5GB

Sector size (logical/physical): 512B/512B

Partition Table: unknown

Disk Flags:

(parted) mklabel

New disk label type?

aix amiga bsd dvh gpt loop mac msdos pc98 sun

New disk label type? gpt

(parted) p

Model: VMware, VMware Virtual S (scsi)

Disk /dev/sdb: 21.5GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

(parted) quit

Information: You may need to update /etc/fstab.

[root@stw2 ~]# udevadm settle
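
The interactive session above can also be done non-interactively with parted's script mode (`-s`). The sketch below is a dry run by default: the helper names `run` and `label_gpt` and the `DRY_RUN` variable are assumptions made for this example, and nothing touches the disk unless `DRY_RUN=0` is set explicitly.

```shell
#!/bin/sh
# run CMD...: print the command unless DRY_RUN=0, in which case execute it.
run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "$*"
    fi
}

# label_gpt DISK: write a new GPT label, then wait for udev to settle.
# WARNING: with DRY_RUN=0 this destroys the existing partition table on DISK.
label_gpt() {
    run parted -s "$1" mklabel gpt
    run udevadm settle
}

label_gpt /dev/sdb
```

Running the script as shown only prints the two commands, which makes it easy to review them before setting `DRY_RUN=0`.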

2. Use fdisk to create two partitions of 1 GB each

[root@stw2 ~]# fdisk /dev/sdb

WARNING: fdisk GPT support is currently new, and therefore in an experimental phase. Use at your own discretion.

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): n

Partition number (1-128, default 1):

First sector (34-41943006, default 2048):

Last sector, +sectors or +size{K,M,G,T,P} (2048-41943006, default 41943006): +1G

Created partition 1

Command (m for help): n

Partition number (2-128, default 2):

First sector (34-41943006, default 2099200):

Last sector, +sectors or +size{K,M,G,T,P} (2099200-41943006, default 41943006): +1G

Created partition 2

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: gpt

Disk identifier: 06E5E1E8-0E35-42D1-895D-CFEC7398C365

# Start End Size Type Name

1 2048 2099199 1G Linux filesyste

2 2099200 4196351 1G Linux filesyste

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@stw2 ~]# partprobe /dev/sdb

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

└─sdb2 8:18 0 1G 0 part

sr0 11:0 1 4.3G 0 rom
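
The Start and End columns in the fdisk table follow directly from the 512-byte sector size: `+1G` means 1 GiB, which is 2097152 sectors. A minimal sketch of that arithmetic (the function name `last_sector` is hypothetical):

```shell
#!/bin/sh
SECTOR_BYTES=512   # logical sector size reported by fdisk above

# last_sector START SIZE_GIB
# Print the last sector of a partition that begins at START and is SIZE_GIB GiB long.
last_sector() {
    start=$1; gib=$2
    sectors=$(( gib * 1024 * 1024 * 1024 / SECTOR_BYTES ))
    echo $(( start + sectors - 1 ))
}

last_sector 2048 1       # partition 1 -> 2099199
last_sector 2099200 1    # partition 2 -> 4196351
```

Each new partition starts one sector after the previous one ends, which is why fdisk offers 2099200 as the default first sector for partition 2.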

3. Create a RAID 0 array named md0 from sdb1 and sdb2

[root@stw2 ~]# mdadm -Cv /dev/md0 -l 0 -n 2 /dev/sdb1 /dev/sdb2

mdadm: chunk size defaults to 512K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

[root@stw2 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Mon Aug 18 10:04:47 2025

Raid Level : raid0

Array Size : 2093056 (2044.00 MiB 2143.29 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:04:47 2025

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : stw2.example.com:0 (local to host stw2.example.com)

UUID : e4d678dd:322ed9fb:406d0c6a:a48ef576

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 18 1 active sync /dev/sdb2

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0

└─sdb2 8:18 0 1G 0 part

└─md0 9:0 0 2G 0 raid0

sr0 11:0 1 4.3G 0 rom

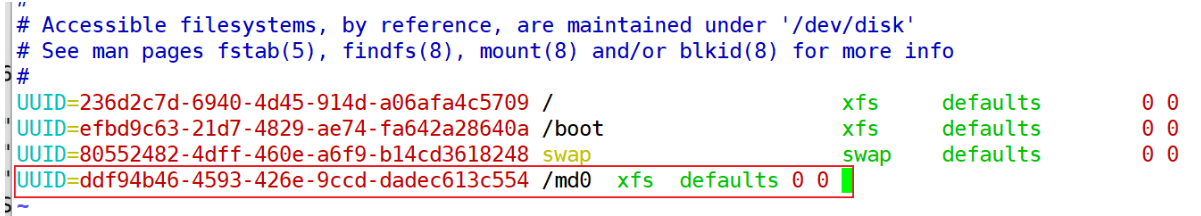
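
The short flags in `mdadm -Cv /dev/md0 -l 0 -n 2 ...` stand for `--create`, `--verbose`, `--level` and `--raid-devices`. The sketch below only prints the long-option form so it can be read or copied. The function name `mdadm_create_cmd` is an assumption for this example.

```shell
#!/bin/sh
# mdadm_create_cmd ARRAY LEVEL DEVICE...
# Print the long-option equivalent of: mdadm -Cv ARRAY -l LEVEL -n COUNT DEVICE...
# The device count is taken from the number of DEVICE arguments.
mdadm_create_cmd() {
    array=$1; level=$2; shift 2
    echo "mdadm --create --verbose $array --level=$level --raid-devices=$# $*"
}

mdadm_create_cmd /dev/md0 0 /dev/sdb1 /dev/sdb2
```

Deriving `--raid-devices` from the argument list avoids a mismatch between the declared count and the devices actually passed.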

4. Format md0 and mount it (add an entry to /etc/fstab, then run mount -a)

[root@stw2 ~]# mkfs.xfs /dev/md0

meta-data=/dev/md0 isize=512 agcount=8, agsize=65408 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=523264, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@stw2 ~]# blkid

/dev/sda1: UUID="efbd9c63-21d7-4829-ae74-fa642a28640a" TYPE="xfs"

/dev/sda2: UUID="236d2c7d-6940-4d45-914d-a06afa4c5709" TYPE="xfs"

/dev/sda3: UUID="80552482-4dff-460e-a6f9-b14cd3618248" TYPE="swap"

/dev/sdb1: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="c91fe913-8324-1615-79a4-0962349d7863" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="f2421c60-785e-41f4-b724-9df1d17c18c1"

/dev/sdb2: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="61d77597-a973-6248-9dc0-b4204526e50e" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="966253fe-b45c-47ad-8bf5-b265a895426b"

/dev/sr0: UUID="2018-11-25-23-54-16-00" LABEL="CentOS 7 x86_64" TYPE="iso9660" PTTYPE="dos"

/dev/md0: UUID="ddf94b46-4593-426e-9ccd-dadec613c554" TYPE="xfs"

[root@stw2 ~]# mkdir /md0

[root@stw2 ~]# vim /etc/fstab

[root@stw2 ~]# mount -a

[root@stw2 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda2 xfs 50G 3.5G 47G 7% /

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 163M 852M 17% /boot

tmpfs tmpfs 394M 12K 394M 1% /run/user/42

tmpfs tmpfs 394M 0 394M 0% /run/user/0

/dev/md0 xfs 2.0G 33M 2.0G 2% /md0

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

└─sdb2 8:18 0 1G 0 part

└─md0 9:0 0 2G 0 raid0 /md0

sr0 11:0 1 4.3G 0 rom
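
The transcript does not show the line that was added to /etc/fstab. A plausible entry, assuming a UUID-based mount with default options, can be generated like this (the function name `fstab_line` is hypothetical, and the UUID is the one `blkid` printed for /dev/md0 above):

```shell
#!/bin/sh
# fstab_line UUID MOUNTPOINT FSTYPE
# Print one /etc/fstab entry: default mount options, no dump, no fsck pass.
fstab_line() {
    printf 'UUID=%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

fstab_line ddf94b46-4593-426e-9ccd-dadec613c554 /md0 xfs
```

On a live system the UUID could be read with `blkid -s UUID -o value /dev/md0` and the output appended to /etc/fstab before running `mount -a`.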

II. Create RAID 1

1. Use fdisk to create two more partitions of 1 GB each

[root@stw2 ~]# fdisk /dev/sdb

WARNING: fdisk GPT support is currently new, and therefore in an experimental phase. Use at your own discretion.

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): n

Partition number (3-128, default 3):

First sector (34-41943006, default 4196352):

Last sector, +sectors or +size{K,M,G,T,P} (4196352-41943006, default 41943006): +1G

Created partition 3

Command (m for help): n

Partition number (4-128, default 4):

First sector (34-41943006, default 6293504):

Last sector, +sectors or +size{K,M,G,T,P} (6293504-41943006, default 41943006): +1G

Created partition 4

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: gpt

Disk identifier: 06E5E1E8-0E35-42D1-895D-CFEC7398C365

# Start End Size Type Name

1 2048 2099199 1G Linux filesyste

2 2099200 4196351 1G Linux filesyste

3 4196352 6293503 1G Linux filesyste

4 6293504 8390655 1G Linux filesyste

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[root@stw2 ~]# partprobe /dev/sdb

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

└─sdb4 8:20 0 1G 0 part

sr0 11:0 1 4.3G 0 rom

2. Create a RAID 1 array named md1 from sdb3 and sdb4

[root@stw2 ~]# mdadm -Cv /dev/md1 -l 1 -n 2 /dev/sdb{3..4}

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 1046528K

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1

└─sdb4 8:20 0 1G 0 part

└─md1 9:1 0 1022M 0 raid1

sr0 11:0 1 4.3G 0 rom

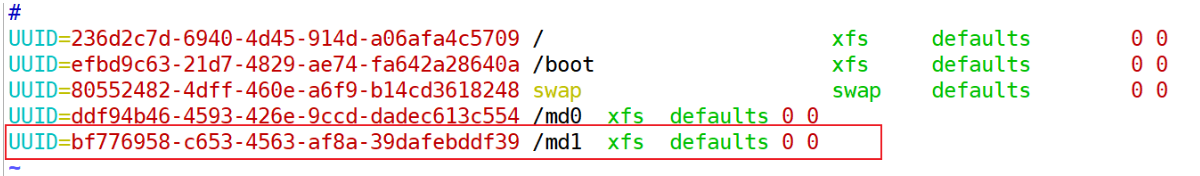

3. Format md1 and mount it (add an entry to /etc/fstab, then run mount -a)

[root@stw2 ~]# mkfs.xfs /dev/md1

meta-data=/dev/md1 isize=512 agcount=4, agsize=65408 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=261632, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=855, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@stw2 ~]# blkid

/dev/sda1: UUID="efbd9c63-21d7-4829-ae74-fa642a28640a" TYPE="xfs"

/dev/sda2: UUID="236d2c7d-6940-4d45-914d-a06afa4c5709" TYPE="xfs"

/dev/sda3: UUID="80552482-4dff-460e-a6f9-b14cd3618248" TYPE="swap"

/dev/sdb1: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="c91fe913-8324-1615-79a4-0962349d7863" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="f2421c60-785e-41f4-b724-9df1d17c18c1"

/dev/sdb2: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="61d77597-a973-6248-9dc0-b4204526e50e" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="966253fe-b45c-47ad-8bf5-b265a895426b"

/dev/sr0: UUID="2018-11-25-23-54-16-00" LABEL="CentOS 7 x86_64" TYPE="iso9660" PTTYPE="dos"

/dev/md0: UUID="ddf94b46-4593-426e-9ccd-dadec613c554" TYPE="xfs"

/dev/sdb3: UUID="02de80c2-590f-b120-0cb0-309fd7eb11e6" UUID_SUB="c95eb1db-4bbf-2c57-9c45-b8545e9ce7d5" LABEL="stw2.example.com:1" TYPE="linux_raid_member" PARTUUID="6b72dbc2-1dce-4922-a299-3b10f094f8fd"

/dev/sdb4: UUID="02de80c2-590f-b120-0cb0-309fd7eb11e6" UUID_SUB="c2724409-5937-4261-2822-5cf4f1ea14b2" LABEL="stw2.example.com:1" TYPE="linux_raid_member" PARTUUID="74d18fa6-5f72-4819-aadb-e0ce7541d02a"

/dev/md1: UUID="bf776958-c653-4563-af8a-39dafebddf39" TYPE="xfs"

[root@stw2 ~]# mkdir /md1

[root@stw2 ~]# vim /etc/fstab

[root@stw2 ~]# mount -a

[root@stw2 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda2 xfs 50G 3.5G 47G 7% /

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 163M 852M 17% /boot

tmpfs tmpfs 394M 12K 394M 1% /run/user/42

tmpfs tmpfs 394M 0 394M 0% /run/user/0

/dev/md0 xfs 2.0G 33M 2.0G 2% /md0

/dev/md1 xfs 1019M 33M 987M 4% /md1

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

└─sdb4 8:20 0 1G 0 part

└─md1 9:1 0 1022M 0 raid1 /md1

sr0 11:0 1 4.3G 0 rom
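
lsblk shows md1 as 1022M rather than 1G because mdadm set the member size to 1046528K, about 2 MiB less than the partition. The conversion between the KiB figure and the MiB/MB values that `mdadm -D` prints in parentheses can be sketched as follows (the function name `kib_summary` is an assumption for this example):

```shell
#!/bin/sh
# kib_summary KIB
# Print a KiB value as binary mebibytes (MiB) and decimal megabytes (MB).
kib_summary() {
    awk -v kib="$1" 'BEGIN {
        printf "%.2f MiB %.2f MB\n", kib / 1024, kib * 1024 / 1000000
    }'
}

kib_summary 1046528    # one member:       1022.00 MiB 1071.64 MB
kib_summary 2093056    # two members' worth: 2044.00 MiB 2143.29 MB
```

The two results correspond to the Used Dev Size and Array Size values that appear in the `mdadm -D` output in this walkthrough.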

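III. Create RAID 5

1. Use fdisk to create four more partitions of 1 GB each (three array members, giving two partitions' worth of data plus one of distributed parity, and one hot spare)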

[root@stw2 ~]# fdisk /dev/sdb

WARNING: fdisk GPT support is currently new, and therefore in an experimental phase. Use at your own discretion.

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): n

Partition number (5-128, default 5):

First sector (34-41943006, default 8390656):

Last sector, +sectors or +size{K,M,G,T,P} (8390656-41943006, default 41943006): +1G

Created partition 5

Command (m for help): n

Partition number (6-128, default 6):

First sector (34-41943006, default 10487808):

Last sector, +sectors or +size{K,M,G,T,P} (10487808-41943006, default 41943006): +1G

Created partition 6

Command (m for help): n

Partition number (7-128, default 7):

First sector (34-41943006, default 12584960):

Last sector, +sectors or +size{K,M,G,T,P} (12584960-41943006, default 41943006): +1G

Created partition 7

Command (m for help): n

Partition number (8-128, default 8):

First sector (34-41943006, default 14682112):

Last sector, +sectors or +size{K,M,G,T,P} (14682112-41943006, default 41943006): +1G

Created partition 8

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: gpt

Disk identifier: 06E5E1E8-0E35-42D1-895D-CFEC7398C365

# Start End Size Type Name

1 2048 2099199 1G Linux filesyste

2 2099200 4196351 1G Linux filesyste

3 4196352 6293503 1G Linux filesyste

4 6293504 8390655 1G Linux filesyste

5 8390656 10487807 1G Linux filesyste

6 10487808 12584959 1G Linux filesyste

7 12584960 14682111 1G Linux filesyste

8 14682112 16779263 1G Linux filesyste

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[root@stw2 ~]# partprobe /dev/sdb

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb4 8:20 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb5 8:21 0 1G 0 part

├─sdb6 8:22 0 1G 0 part

├─sdb7 8:23 0 1G 0 part

└─sdb8 8:24 0 1G 0 part

sr0 11:0 1 4.3G 0 rom

2. Create a RAID 5 array named md5 from sdb5, sdb6 and sdb7, with sdb8 as a hot spare

[root@stw2 ~]# mdadm -Cv /dev/md5 -l 5 -n 3 /dev/sdb{5..7} --spare-devices=1 /dev/sdb8

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 1046528K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb4 8:20 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb5 8:21 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5

├─sdb6 8:22 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5

├─sdb7 8:23 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5

└─sdb8 8:24 0 1G 0 part

└─md5 9:5 0 2G 0 raid5

sr0 11:0 1 4.3G 0 rom

[root@stw2 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Mon Aug 18 10:35:40 2025

Raid Level : raid5

Array Size : 2093056 (2044.00 MiB 2143.29 MB)

Used Dev Size : 1046528 (1022.00 MiB 1071.64 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:35:51 2025

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : stw2.example.com:5 (local to host stw2.example.com)

UUID : 326c3d85:39d2b6c0:10c35aa9:1185c42c

Events : 18

Number Major Minor RaidDevice State

0 8 21 0 active sync /dev/sdb5

1 8 22 1 active sync /dev/sdb6

4 8 23 2 active sync /dev/sdb7

3 8 24 - spare /dev/sdb8

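Now simulate a disk failure by marking sdb5 as faulty, and check whether the hot spare (sdb8) takes over.

The output below shows that the former hot spare /dev/sdb8 is taking part in the RAID 5 rebuild, while /dev/sdb5 has become a faulty disk.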

[root@stw2 ~]# mdadm -f /dev/md5 /dev/sdb5

mdadm: set /dev/sdb5 faulty in /dev/md5

[root@stw2 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Mon Aug 18 10:35:40 2025

Raid Level : raid5

Array Size : 2093056 (2044.00 MiB 2143.29 MB)

Used Dev Size : 1046528 (1022.00 MiB 1071.64 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:37:07 2025

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 52% complete

Name : stw2.example.com:5 (local to host stw2.example.com)

UUID : 326c3d85:39d2b6c0:10c35aa9:1185c42c

Events : 28

Number Major Minor RaidDevice State

3 8 24 0 spare rebuilding /dev/sdb8

1 8 22 1 active sync /dev/sdb6

4 8 23 2 active sync /dev/sdb7

0 8 21 - faulty /dev/sdb5

[root@stw2 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Mon Aug 18 10:35:40 2025

Raid Level : raid5

Array Size : 2093056 (2044.00 MiB 2143.29 MB)

Used Dev Size : 1046528 (1022.00 MiB 1071.64 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:37:11 2025

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : stw2.example.com:5 (local to host stw2.example.com)

UUID : 326c3d85:39d2b6c0:10c35aa9:1185c42c

Events : 37

Number Major Minor RaidDevice State

3 8 24 0 active sync /dev/sdb8

1 8 22 1 active sync /dev/sdb6

4 8 23 2 active sync /dev/sdb7

0 8 21 - faulty /dev/sdb5
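
Rather than reading the full `mdadm -D` output each time, the State and Rebuild Status lines can be pulled out with awk. This is a minimal sketch that assumes the `Key : value` layout shown in the transcripts above. The function name `md_state` is hypothetical.

```shell
#!/bin/sh
# md_state: read `mdadm -D` output on stdin and print the array state,
# plus the rebuild progress when a "Rebuild Status" line is present.
md_state() {
    awk -F' : ' '
        function trim(s) { sub(/[ \t]+$/, "", s); return s }
        $1 ~ /^[ \t]*State$/          { state = trim($2) }
        $1 ~ /^[ \t]*Rebuild Status$/ { rebuild = trim($2) }
        END {
            out = "state=" state
            if (rebuild != "") out = out " rebuild=" rebuild
            print out
        }'
}

# Usage on a live system (needs root):
#   mdadm -D /dev/md5 | md_state
```

The column header line `Number Major Minor RaidDevice State` is not picked up, because it contains no ` : ` separator and so never matches the `State` key exactly.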

Remove the faulty disk

[root@stw2 ~]# mdadm -r /dev/md5 /dev/sdb5

mdadm: hot removed /dev/sdb5 from /dev/md5

[root@stw2 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Mon Aug 18 10:35:40 2025

Raid Level : raid5

Array Size : 2093056 (2044.00 MiB 2143.29 MB)

Used Dev Size : 1046528 (1022.00 MiB 1071.64 MB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:37:41 2025

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : stw2.example.com:5 (local to host stw2.example.com)

UUID : 326c3d85:39d2b6c0:10c35aa9:1185c42c

Events : 38

Number Major Minor RaidDevice State

3 8 24 0 active sync /dev/sdb8

1 8 22 1 active sync /dev/sdb6

4 8 23 2 active sync /dev/sdb7

Add sdb5 back to the array (it rejoins as a spare)

[root@stw2 ~]# mdadm -a /dev/md5 /dev/sdb5

mdadm: added /dev/sdb5

[root@stw2 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Mon Aug 18 10:35:40 2025

Raid Level : raid5

Array Size : 2093056 (2044.00 MiB 2143.29 MB)

Used Dev Size : 1046528 (1022.00 MiB 1071.64 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:38:13 2025

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : stw2.example.com:5 (local to host stw2.example.com)

UUID : 326c3d85:39d2b6c0:10c35aa9:1185c42c

Events : 39

Number Major Minor RaidDevice State

3 8 24 0 active sync /dev/sdb8

1 8 22 1 active sync /dev/sdb6

4 8 23 2 active sync /dev/sdb7

5 8 21 - spare /dev/sdb5

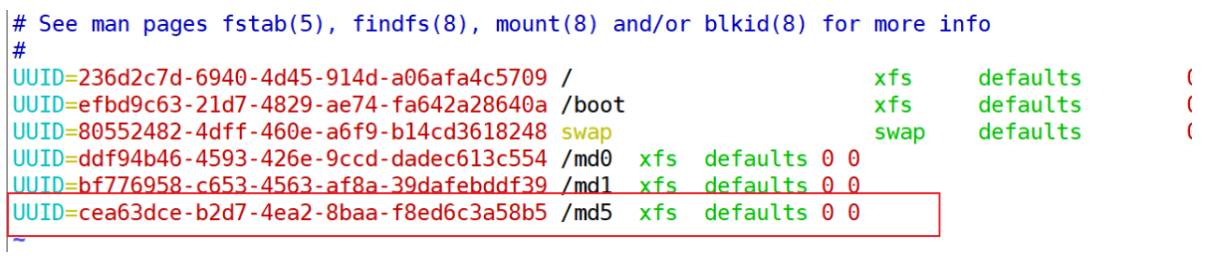
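
The fail, remove and add steps used the short flags `-f`, `-r` and `-a`. Their long forms are `--fail`, `--remove` and `--add`. The sketch below only prints the three commands in order and does not run them. The function name `swap_member_cmds` is an assumption for this example.

```shell
#!/bin/sh
# swap_member_cmds ARRAY DEVICE
# Print the manage-mode commands from this section in long-option form:
# mark DEVICE faulty, hot-remove it, then add it back (it rejoins as a spare).
swap_member_cmds() {
    echo "mdadm $1 --fail $2"
    echo "mdadm $1 --remove $2"
    echo "mdadm $1 --add $2"
}

swap_member_cmds /dev/md5 /dev/sdb5
```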

3. Format md5 and mount it (add an entry to /etc/fstab, then run mount -a)

[root@stw2 ~]# mkfs.xfs /dev/md5

meta-data=/dev/md5 isize=512 agcount=8, agsize=65408 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=523264, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@stw2 ~]# blkid

/dev/sda1: UUID="efbd9c63-21d7-4829-ae74-fa642a28640a" TYPE="xfs"

/dev/sda2: UUID="236d2c7d-6940-4d45-914d-a06afa4c5709" TYPE="xfs"

/dev/sda3: UUID="80552482-4dff-460e-a6f9-b14cd3618248" TYPE="swap"

/dev/sdb1: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="c91fe913-8324-1615-79a4-0962349d7863" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="f2421c60-785e-41f4-b724-9df1d17c18c1"

/dev/sdb2: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="61d77597-a973-6248-9dc0-b4204526e50e" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="966253fe-b45c-47ad-8bf5-b265a895426b"

/dev/sr0: UUID="2018-11-25-23-54-16-00" LABEL="CentOS 7 x86_64" TYPE="iso9660" PTTYPE="dos"

/dev/md0: UUID="ddf94b46-4593-426e-9ccd-dadec613c554" TYPE="xfs"

/dev/sdb3: UUID="02de80c2-590f-b120-0cb0-309fd7eb11e6" UUID_SUB="c95eb1db-4bbf-2c57-9c45-b8545e9ce7d5" LABEL="stw2.example.com:1" TYPE="linux_raid_member" PARTUUID="6b72dbc2-1dce-4922-a299-3b10f094f8fd"

/dev/sdb4: UUID="02de80c2-590f-b120-0cb0-309fd7eb11e6" UUID_SUB="c2724409-5937-4261-2822-5cf4f1ea14b2" LABEL="stw2.example.com:1" TYPE="linux_raid_member" PARTUUID="74d18fa6-5f72-4819-aadb-e0ce7541d02a"

/dev/md1: UUID="bf776958-c653-4563-af8a-39dafebddf39" TYPE="xfs"

/dev/sdb5: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="8ab41f9c-3616-d3e0-d20d-3635f8fe47d4" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="9880f0f1-ec14-44e3-a499-20040f02b81a"

/dev/sdb6: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="e7dcfb0d-8ee8-6ae7-aae1-ac10c4937f88" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="1bcb575b-766f-4719-9464-c3301e6506eb"

/dev/sdb7: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="afcfc5ca-f528-1fbc-b184-4e40444a5cd5" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="cb09c16a-0fcf-4618-a80c-f0093ca5ab02"

/dev/sdb8: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="bab190e3-f331-d810-9ed8-aa85dc3c7796" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="6c34f4dc-5a0b-452a-99ff-c21579f1ffb3"

/dev/md5: UUID="cea63dce-b2d7-4ea2-8baa-f8ed6c3a58b5" TYPE="xfs"

[root@stw2 ~]# mkdir /md5

[root@stw2 ~]# vim /etc/fstab

[root@stw2 ~]# mount -a

[root@stw2 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda2 xfs 50G 3.5G 47G 7% /

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 163M 852M 17% /boot

tmpfs tmpfs 394M 12K 394M 1% /run/user/42

tmpfs tmpfs 394M 0 394M 0% /run/user/0

/dev/md0 xfs 2.0G 33M 2.0G 2% /md0

/dev/md1 xfs 1019M 33M 987M 4% /md1

/dev/md5 xfs 2.0G 33M 2.0G 2% /md5

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb4 8:20 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb5 8:21 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb6 8:22 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb7 8:23 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

└─sdb8 8:24 0 1G 0 part

└─md5 9:5 0 2G 0 raid5 /md5

sr0 11:0 1 4.3G 0 rom

IV. Create RAID 10 (at least four devices)

1. Use fdisk to create four more partitions of 1 GB each (RAID 10 combines RAID 1 and RAID 0)

[root@stw2 ~]# fdisk /dev/sdb

WARNING: fdisk GPT support is currently new, and therefore in an experimental phase. Use at your own discretion.

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): n

Partition number (9-128, default 9):

First sector (34-41943006, default 16779264):

Last sector, +sectors or +size{K,M,G,T,P} (16779264-41943006, default 41943006): +1G

Created partition 9

Command (m for help): n

Partition number (10-128, default 10):

First sector (34-41943006, default 18876416):

Last sector, +sectors or +size{K,M,G,T,P} (18876416-41943006, default 41943006): +1G

Created partition 10

Command (m for help): n

Partition number (11-128, default 11):

First sector (34-41943006, default 20973568):

Last sector, +sectors or +size{K,M,G,T,P} (20973568-41943006, default 41943006): +1G

Created partition 11

Command (m for help): n

Partition number (12-128, default 12):

First sector (34-41943006, default 23070720):

Last sector, +sectors or +size{K,M,G,T,P} (23070720-41943006, default 41943006): +1G

Created partition 12

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: gpt

Disk identifier: 06E5E1E8-0E35-42D1-895D-CFEC7398C365

# Start End Size Type Name

1 2048 2099199 1G Linux filesyste

2 2099200 4196351 1G Linux filesyste

3 4196352 6293503 1G Linux filesyste

4 6293504 8390655 1G Linux filesyste

5 8390656 10487807 1G Linux filesyste

6 10487808 12584959 1G Linux filesyste

7 12584960 14682111 1G Linux filesyste

8 14682112 16779263 1G Linux filesyste

9 16779264 18876415 1G Linux filesyste

10 18876416 20973567 1G Linux filesyste

11 20973568 23070719 1G Linux filesyste

12 23070720 25167871 1G Linux filesyste

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[root@stw2 ~]# partprobe /dev/sdb

2. First create a RAID 1 array named md101 from sdb9 and sdb10 and a RAID 1 array named md102 from sdb11 and sdb12, then create a RAID 0 array named md10 from md101 and md102

[root@stw2 ~]# mdadm -Cv /dev/md101 -l 1 -n 2 /dev/sdb9 /dev/sdb10

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 1046528K

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md101 started.

[root@stw2 ~]# mdadm -Cv /dev/md102 -l 1 -n 2 /dev/sdb11 /dev/sdb12

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 1046528K

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md102 started.

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb4 8:20 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb5 8:21 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb6 8:22 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb7 8:23 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb8 8:24 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb9 8:25 0 1G 0 part

│ └─md101 9:101 0 1022M 0 raid1

├─sdb10 8:26 0 1G 0 part

│ └─md101 9:101 0 1022M 0 raid1

├─sdb11 8:27 0 1G 0 part

│ └─md102 9:102 0 1022M 0 raid1

└─sdb12 8:28 0 1G 0 part

└─md102 9:102 0 1022M 0 raid1

sr0 11:0 1 4.3G 0 rom

[root@stw2 ~]# mdadm -Cv /dev/md10 -l 0 -n 2 /dev/md101 /dev/md102

mdadm: chunk size defaults to 512K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb4 8:20 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb5 8:21 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb6 8:22 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb7 8:23 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb8 8:24 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb9 8:25 0 1G 0 part

│ └─md101 9:101 0 1022M 0 raid1

│ └─md10 9:10 0 2G 0 raid0

├─sdb10 8:26 0 1G 0 part

│ └─md101 9:101 0 1022M 0 raid1

│ └─md10 9:10 0 2G 0 raid0

├─sdb11 8:27 0 1G 0 part

│ └─md102 9:102 0 1022M 0 raid1

│ └─md10 9:10 0 2G 0 raid0

└─sdb12 8:28 0 1G 0 part

└─md102 9:102 0 1022M 0 raid1

└─md10 9:10 0 2G 0 raid0

sr0 11:0 1 4.3G 0 rom

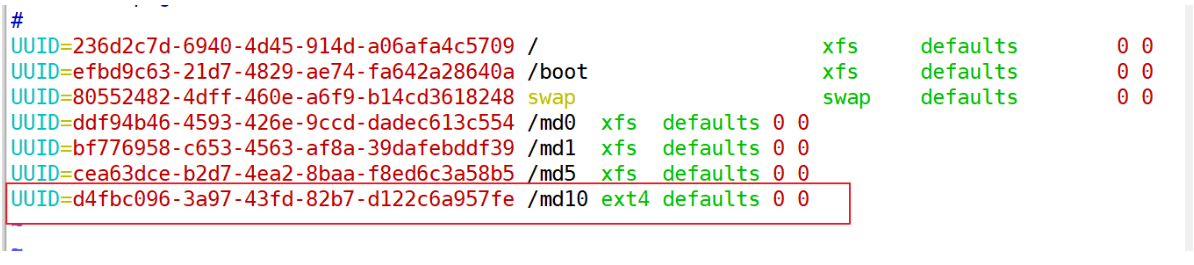

3. Format md10 (this time as ext4) and mount it (add an entry to /etc/fstab, then run mount -a)

[root@stw2 ~]# mkfs.ext4 /dev/md10

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=256 blocks

130560 inodes, 522240 blocks

26112 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=534773760

16 block groups

32768 blocks per group, 32768 fragments per group

8160 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

[root@stw2 ~]# blkid

/dev/sda1: UUID="efbd9c63-21d7-4829-ae74-fa642a28640a" TYPE="xfs"

/dev/sda2: UUID="236d2c7d-6940-4d45-914d-a06afa4c5709" TYPE="xfs"

/dev/sda3: UUID="80552482-4dff-460e-a6f9-b14cd3618248" TYPE="swap"

/dev/sdb1: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="c91fe913-8324-1615-79a4-0962349d7863" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="f2421c60-785e-41f4-b724-9df1d17c18c1"

/dev/sdb2: UUID="e4d678dd-322e-d9fb-406d-0c6aa48ef576" UUID_SUB="61d77597-a973-6248-9dc0-b4204526e50e" LABEL="stw2.example.com:0" TYPE="linux_raid_member" PARTUUID="966253fe-b45c-47ad-8bf5-b265a895426b"

/dev/sr0: UUID="2018-11-25-23-54-16-00" LABEL="CentOS 7 x86_64" TYPE="iso9660" PTTYPE="dos"

/dev/md0: UUID="ddf94b46-4593-426e-9ccd-dadec613c554" TYPE="xfs"

/dev/sdb3: UUID="02de80c2-590f-b120-0cb0-309fd7eb11e6" UUID_SUB="c95eb1db-4bbf-2c57-9c45-b8545e9ce7d5" LABEL="stw2.example.com:1" TYPE="linux_raid_member" PARTUUID="6b72dbc2-1dce-4922-a299-3b10f094f8fd"

/dev/sdb4: UUID="02de80c2-590f-b120-0cb0-309fd7eb11e6" UUID_SUB="c2724409-5937-4261-2822-5cf4f1ea14b2" LABEL="stw2.example.com:1" TYPE="linux_raid_member" PARTUUID="74d18fa6-5f72-4819-aadb-e0ce7541d02a"

/dev/md1: UUID="bf776958-c653-4563-af8a-39dafebddf39" TYPE="xfs"

/dev/sdb5: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="8ab41f9c-3616-d3e0-d20d-3635f8fe47d4" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="9880f0f1-ec14-44e3-a499-20040f02b81a"

/dev/sdb6: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="e7dcfb0d-8ee8-6ae7-aae1-ac10c4937f88" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="1bcb575b-766f-4719-9464-c3301e6506eb"

/dev/sdb7: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="afcfc5ca-f528-1fbc-b184-4e40444a5cd5" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="cb09c16a-0fcf-4618-a80c-f0093ca5ab02"

/dev/sdb8: UUID="326c3d85-39d2-b6c0-10c3-5aa91185c42c" UUID_SUB="bab190e3-f331-d810-9ed8-aa85dc3c7796" LABEL="stw2.example.com:5" TYPE="linux_raid_member" PARTUUID="6c34f4dc-5a0b-452a-99ff-c21579f1ffb3"

/dev/md5: UUID="cea63dce-b2d7-4ea2-8baa-f8ed6c3a58b5" TYPE="xfs"

/dev/sdb9: UUID="7c8f0699-b69d-5462-1393-9634fb59d7a7" UUID_SUB="a1c44e8b-c4af-3cfd-c15b-90fbcad46249" LABEL="stw2.example.com:101" TYPE="linux_raid_member" PARTUUID="dfa8cedd-dfdc-4f81-aeba-90190c778c66"

/dev/sdb10: UUID="7c8f0699-b69d-5462-1393-9634fb59d7a7" UUID_SUB="99439a3d-673a-8649-784b-a654987cd9de" LABEL="stw2.example.com:101" TYPE="linux_raid_member" PARTUUID="7048ab01-7f2b-48a7-980d-cc691d756c84"

/dev/sdb11: UUID="d3e7da76-c07e-9a81-5a2e-4ba6d1e761cd" UUID_SUB="a6604001-a32d-8c99-ad77-e716dc9d4244" LABEL="stw2.example.com:102" TYPE="linux_raid_member" PARTUUID="93b70cef-2392-4752-a2a9-fb6f70f8ef97"

/dev/sdb12: UUID="d3e7da76-c07e-9a81-5a2e-4ba6d1e761cd" UUID_SUB="c56ab35a-3769-c29d-63c7-7a7db863634c" LABEL="stw2.example.com:102" TYPE="linux_raid_member" PARTUUID="54adbf2d-e63e-446a-b578-4e7cd7ec90c2"

/dev/md101: UUID="a688471d-68e7-5062-2104-17cc5c7bfaf7" UUID_SUB="ce2aeade-80aa-1e51-49cc-ddfff7fc748d" LABEL="stw2.example.com:10" TYPE="linux_raid_member"

/dev/md102: UUID="a688471d-68e7-5062-2104-17cc5c7bfaf7" UUID_SUB="7d14f5c3-dcf9-7c4a-20ef-fc5711935698" LABEL="stw2.example.com:10" TYPE="linux_raid_member"

/dev/md10: UUID="d4fbc096-3a97-43fd-82b7-d122c6a957fe" TYPE="ext4"

[root@stw2 ~]# mkdir /md10

[root@stw2 ~]# vim /etc/fstab

[root@stw2 ~]# mount -a

[root@stw2 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda2 xfs 50G 3.5G 47G 7% /

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 163M 852M 17% /boot

tmpfs tmpfs 394M 12K 394M 1% /run/user/42

tmpfs tmpfs 394M 0 394M 0% /run/user/0

/dev/md0 xfs 2.0G 33M 2.0G 2% /md0

/dev/md1 xfs 1019M 33M 987M 4% /md1

/dev/md5 xfs 2.0G 33M 2.0G 2% /md5

/dev/md10 ext4 2.0G 6.0M 1.9G 1% /md10

[root@stw2 ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Mon Aug 18 10:56:53 2025

Raid Level : raid0

Array Size : 2088960 (2040.00 MiB 2139.10 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Aug 18 10:56:53 2025

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : stw2.example.com:10 (local to host stw2.example.com)

UUID : a688471d:68e75062:210417cc:5c7bfaf7

Events : 0

Number Major Minor RaidDevice State

0 9 101 0 active sync /dev/md101

1 9 102 1 active sync /dev/md102

[root@stw2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 50G 0 part /

└─sda3 8:3 0 4G 0 part [SWAP]

sdb 8:16 0 20G 0 disk

├─sdb1 8:17 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb2 8:18 0 1G 0 part

│ └─md0 9:0 0 2G 0 raid0 /md0

├─sdb3 8:19 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb4 8:20 0 1G 0 part

│ └─md1 9:1 0 1022M 0 raid1 /md1

├─sdb5 8:21 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb6 8:22 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb7 8:23 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb8 8:24 0 1G 0 part

│ └─md5 9:5 0 2G 0 raid5 /md5

├─sdb9 8:25 0 1G 0 part

│ └─md101 9:101 0 1022M 0 raid1

│ └─md10 9:10 0 2G 0 raid0 /md10

├─sdb10 8:26 0 1G 0 part

│ └─md101 9:101 0 1022M 0 raid1

│ └─md10 9:10 0 2G 0 raid0 /md10

├─sdb11 8:27 0 1G 0 part

│ └─md102 9:102 0 1022M 0 raid1

│ └─md10 9:10 0 2G 0 raid0 /md10

└─sdb12 8:28 0 1G 0 part

└─md102 9:102 0 1022M 0 raid1

└─md10 9:10 0 2G 0 raid0 /md10

sr0 11:0 1 4.3G 0 rom
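
The nested md10 reports 2088960 KiB, slightly less than the 2093056 KiB of the plain RAID 0 in part I. The numbers in this walkthrough are consistent with each md layer reserving 2048 KiB per member. That figure is inferred from the transcripts above and is not a general constant. A sketch of the arithmetic, with hypothetical function names:

```shell
#!/bin/sh
OVERHEAD_KIB=2048   # per-member reservation, inferred from this walkthrough only

# member_kib RAW_KIB: usable size of one member after the reservation.
member_kib() {
    echo $(( $1 - OVERHEAD_KIB ))
}

# nested_raid10_kib PARTITION_KIB
# Capacity of a RAID 0 built on top of two two-way RAID 1 pairs.
nested_raid10_kib() {
    mirror=$(member_kib "$1")            # each RAID 1 pair exposes one member's size
    stripe_member=$(member_kib "$mirror") # the RAID 0 layer reserves space again
    echo $(( 2 * stripe_member ))
}

nested_raid10_kib 1048576    # 1 GiB partitions -> 2088960
```

The intermediate value 1046528 matches the "size set to 1046528K" message printed when md101 and md102 were created, and the final value matches the Array Size shown by `mdadm -D /dev/md10`.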
