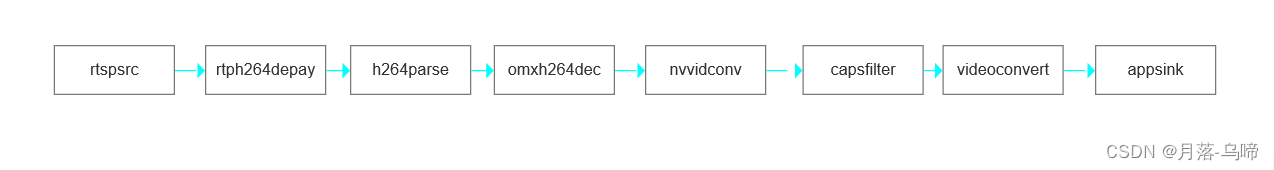

Decoding an RTSP video stream with GStreamer and saving the frames as cv::Mat

Hardware-decode an RTSP video stream and wrap each decoded frame in an OpenCV Mat for later use.


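1. Goal

Hardware-decode an RTSP video stream (H.264 carried over RTP) and wrap each decoded BGRx frame in an OpenCV cv::Mat so that later processing steps can use it.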
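The element chain that the code in the next section assembles by hand can also be written as a single pipeline description, which is convenient for trying the chain out with gst-launch-1.0 or gst_parse_launch before writing any C++. The sketch below only builds that string. The helper name buildPipelineDescription is hypothetical and not part of the original code; the element names, the BGRx caps and the 2000 ms latency are taken from the listing below. It assumes a platform that actually provides omxh264dec and nvvidconv.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper (not part of the original code): returns a textual
// description of the same chain that RTSPDecode::init() links manually.
// The string can be passed to gst-launch-1.0 or gst_parse_launch().
std::string buildPipelineDescription(const std::string &url, int width, int height,
                                     int latency_ms = 2000)
{
    std::ostringstream os;
    // rtspsrc receives the stream; latency is the jitter-buffer size in ms.
    os << "rtspsrc location=\"" << url << "\" latency=" << latency_ms
       // Depayload RTP, parse H.264, decode, convert in hardware.
       << " ! rtph264depay ! h264parse ! omxh264dec ! nvvidconv"
       // The caps between nvvidconv and videoconvert pin format and size.
       << " ! video/x-raw,format=BGRx,width=" << width << ",height=" << height
       // appsink hands the raw frames to the application.
       << " ! videoconvert ! appsink sync=false drop=true emit-signals=true";
    return os.str();
}
```

Calling it with a placeholder URL such as rtsp://example.invalid/stream and a size of 1280x720 yields a description starting with `rtspsrc location="rtsp://example.invalid/stream" latency=2000`. Replacing the final appsink with fakesink is a quick way to check on the command line whether the stream decodes at all.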

2. Implementation

Header file (transmit/rtsp_decode.h):

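#pragma once

#include <memory>
#include <string>

#include <gst/gst.h>
#include <opencv2/core/core.hpp>

#include "websocket_server.h"

namespace Transmit
{

class RTSPDecode : public std::enable_shared_from_this<RTSPDecode>
{
public:
    using Ptr = std::shared_ptr<RTSPDecode>;

    ~RTSPDecode();

    /**
     * @brief Initialize the decoder: build the GStreamer pipeline and start playback.
     * @return 0 on success, -1 on failure.
     */
    int init(int width, int height, std::string url);

    int width() const
    {
        return width_;
    }

    int height() const
    {
        return height_;
    }

    GstElement *rtph264depay()
    {
        return rtph264depay_;
    }

private:
    // All pointers start as nullptr so the destructor is safe even if init() was never called.
    GstElement *pipeline_ = nullptr;
    GstElement *rtspsrc_ = nullptr;      // reads the RTSP stream
    GstElement *rtph264depay_ = nullptr; // receives the RTP packets from rtspsrc and extracts the H.264 payload
    GstElement *h264parse_ = nullptr;    // splits the stream into H.264 frames
    GstElement *omxh264dec_ = nullptr;   // hardware-decodes the H.264 frames
    GstElement *nvvidconv_ = nullptr;    // hardware conversion of the decoder output (drm_prime frames) to BGRx
    GstElement *capsfilter_ = nullptr;   // fixes the output format and size
    GstElement *videoconvert_ = nullptr; // converts the video data format
    GstElement *appsink_ = nullptr;      // hands the BGRx data to the application

    int width_ = 0;
    int height_ = 0;
    std::string url_;
};

} // namespace Transmit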
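The class above exposes the frame size and the depayloader, but no accessor through which other code could receive decoded frames, and the appsink callback in the source file below runs on a GStreamer streaming thread. One possible way to hand frames over "for later use" is a small mutex-protected slot that keeps a deep copy of the most recent frame. The sketch below is an assumption about how this could be done, not something the original code contains; LatestFrame, store and fetch are hypothetical names, and it uses only the standard library so the idea is independent of OpenCV.

```cpp
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical helper (not part of the original code): keeps a deep copy of
// the most recent frame so a consumer thread can read it safely.
class LatestFrame
{
public:
    // Producer side, e.g. called from the appsink callback while the buffer
    // is still mapped. The bytes are copied, so the buffer can be unmapped
    // as soon as this returns.
    void store(const std::uint8_t *data, std::size_t size)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        bytes_.assign(data, data + size);
        ++sequence_;
    }

    // Consumer side. Returns false if nothing newer than last_seen has been
    // stored; otherwise copies the frame into out and updates last_seen.
    bool fetch(std::vector<std::uint8_t> &out, std::uint64_t &last_seen) const
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (sequence_ == last_seen)
        {
            return false;
        }
        out = bytes_;
        last_seen = sequence_;
        return true;
    }

private:
    mutable std::mutex mutex_;
    std::vector<std::uint8_t> bytes_;
    std::uint64_t sequence_ = 0; // incremented once per stored frame
};
```

A consumer would start with last_seen = 0 and poll fetch(); each successful call returns the newest frame and silently skips any frames it missed, which matches the "drop old frames" intent of the appsink settings used later. With OpenCV, the same pattern could hold a cv::Mat produced by clone() instead of a byte vector.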

Implementation file:

#include <transmit/rtsp_decode.h>

#include <stdio.h>
#include <iostream>

#include <gst/gst.h>
#include <gst/app/gstappsink.h>

#include <opencv2/opencv.hpp>

using namespace std;

namespace Transmit

{

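// appsink "new-sample" callback; it runs on a GStreamer streaming thread.
// Pulls the sample, wraps the BGRx data in a cv::Mat, converts it to BGR
// and writes it as a JPEG into the current working directory.
GstFlowReturn CaptureGstBGRBuffer(GstAppSink *sink, gpointer user_data)
{
    RTSPDecode *data = static_cast<RTSPDecode *>(user_data);

    GstSample *sample = gst_app_sink_pull_sample(sink);
    if (sample == NULL)
    {
        return GST_FLOW_ERROR;
    }

    GstBuffer *buffer = gst_sample_get_buffer(sample);
    GstMapInfo map_info;
    if (buffer == NULL || !gst_buffer_map(buffer, &map_info, GST_MAP_READ))
    {
        // Mapping failed, so there is nothing to unmap; only release the sample.
        gst_sample_unref(sample);
        return GST_FLOW_ERROR;
    }

    // Refuse buffers smaller than width * height * 4 bytes (BGRx) to avoid reading out of bounds.
    const gsize expected = static_cast<gsize>(data->width()) * data->height() * 4;
    if (map_info.size < expected)
    {
        gst_buffer_unmap(buffer, &map_info);
        gst_sample_unref(sample);
        return GST_FLOW_ERROR;
    }

    // Wrap the mapped memory without copying. bgra is only valid until
    // gst_buffer_unmap(); bgr owns its own copy of the pixels.
    cv::Mat bgra(data->height(), data->width(), CV_8UC4, map_info.data, cv::Mat::AUTO_STEP);
    cv::Mat bgr;
    cv::cvtColor(bgra, bgr, cv::COLOR_BGRA2BGR);

    static int sampleno = 0;
    char szName[56] = {0};
    snprintf(szName, sizeof(szName), "%d.jpg", sampleno++); // output file name
    cv::imwrite(szName, bgr);

    gst_buffer_unmap(buffer, &map_info);
    gst_sample_unref(sample);
    return GST_FLOW_OK;
}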

// rtspsrc "pad-added" callback. rtspsrc creates its source pads only once the
// stream has been set up, so the link to rtph264depay has to be made here.
static void RtspSrcPadAdded(GstElement *element, GstPad *pad, gpointer data)
{
    gchar *name = gst_pad_get_name(pad);
    g_print("A new pad %s was created\n", name);

    // Print the caps of the pad template for debugging.
    GstCaps *p_caps = gst_pad_get_pad_template_caps(pad);
    gchar *description = gst_caps_to_string(p_caps);
    g_print("%s\n", description);
    g_free(description);
    gst_caps_unref(p_caps); // the caps are returned with a reference that must be released

    // Link the new rtspsrc pad to the sink pad of rtph264depay.
    GstElement *p_rtph264depay = GST_ELEMENT(data);
    if (!gst_element_link_pads(element, name, p_rtph264depay, "sink"))
    {
        g_printerr("Failed to link rtspsrc pad %s to rtph264depay\n", name);
    }
    g_free(name);
}

RTSPDecode::~RTSPDecode()
{
    if (pipeline_)
    {
        // Stop the pipeline before releasing it; the pipeline owns the elements added to it.
        gst_element_set_state(pipeline_, GST_STATE_NULL);
        gst_object_unref(pipeline_);
        pipeline_ = nullptr;
    }
}

int RTSPDecode::init(int width, int height, std::string url)
{
    width_ = width;
    height_ = height;
    url_ = url;

    // GStreamer must be initialized before any element is created.
    if (!gst_is_initialized())
    {
        gst_init(nullptr, nullptr);
    }

    pipeline_ = gst_pipeline_new("pipeline");
    rtspsrc_ = gst_element_factory_make("rtspsrc", "Rtspsrc");
    rtph264depay_ = gst_element_factory_make("rtph264depay", "Rtph264depay");
    h264parse_ = gst_element_factory_make("h264parse", "H264parse");
    omxh264dec_ = gst_element_factory_make("omxh264dec", "Omxh264dec");
    nvvidconv_ = gst_element_factory_make("nvvidconv", "Nvvidconv");
    capsfilter_ = gst_element_factory_make("capsfilter", "Capsfilter");
    videoconvert_ = gst_element_factory_make("videoconvert", "Videoconvert");
    appsink_ = gst_element_factory_make("appsink", "Appsink");

    if (!pipeline_ || !rtspsrc_ || !rtph264depay_ || !h264parse_ || !omxh264dec_ || !nvvidconv_ || !capsfilter_ || !videoconvert_ || !appsink_)
    {
        std::cerr << "Not all elements could be created" << std::endl;
        return -1;
    }

    // Configure rtspsrc: stream URL and jitter-buffer latency in milliseconds.
    g_object_set(G_OBJECT(rtspsrc_), "location", url_.c_str(), "latency", 2000, NULL);

    // Force BGRx output at the requested size. g_object_set() keeps its own
    // reference to the caps, so the local reference is released afterwards.
    GstCaps *caps = gst_caps_new_simple("video/x-raw",
                                        "format", G_TYPE_STRING, "BGRx",
                                        "width", G_TYPE_INT, width_,
                                        "height", G_TYPE_INT, height_,
                                        nullptr);
    g_object_set(G_OBJECT(capsfilter_), "caps", caps, NULL);
    gst_caps_unref(caps);

    // Configure appsink: emit "new-sample", do not sync to the clock, drop old buffers.
    g_object_set(G_OBJECT(appsink_), "emit-signals", TRUE, "sync", FALSE, "drop", TRUE, NULL);
    g_signal_connect(appsink_, "new-sample", G_CALLBACK(CaptureGstBGRBuffer), this);

    // rtspsrc creates its source pads at runtime; link it to rtph264depay in the callback.
    g_signal_connect(rtspsrc_, "pad-added", G_CALLBACK(RtspSrcPadAdded), rtph264depay_);

    // Add all elements to the pipeline, which takes ownership of them.
    gst_bin_add_many(GST_BIN(pipeline_), rtspsrc_, rtph264depay_, h264parse_, omxh264dec_, nvvidconv_, capsfilter_, videoconvert_, appsink_, nullptr);

    // Link the static part of the chain (everything after rtspsrc).
    if (gst_element_link_many(rtph264depay_, h264parse_, omxh264dec_, nvvidconv_, capsfilter_, videoconvert_, appsink_, nullptr) != TRUE)
    {
        std::cerr << "rtph264depay_, h264parse_, omxh264dec_, nvvidconv_, capsfilter_, videoconvert_, appsink_ could not be linked" << std::endl;
        return -1;
    }

    // Start playing.
    auto ret = gst_element_set_state(pipeline_, GST_STATE_PLAYING);
    if (ret == GST_STATE_CHANGE_FAILURE)
    {
        std::cerr << "Unable to set the pipeline to the playing state" << std::endl;
        return -1;
    }

    return 0;
}

} // namespace Transmit
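The appsink callback above wraps the mapped buffer with cv::Mat::AUTO_STEP, which assumes that every row is exactly width * 4 bytes long. If a buffer ever carries padded rows, the pixels have to be read using the real row stride instead. The following sketch shows, in plain C++ and without OpenCV, what a stride-aware BGRx to BGR conversion does. The function name bgrxToBgr is hypothetical, and where the stride value comes from (for example the buffer's video metadata) is left outside this sketch.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of the original code): copies a BGRx image
// into a tightly packed BGR buffer. `stride` is the number of bytes between
// the starts of two consecutive rows and may be larger than width * 4.
std::vector<std::uint8_t> bgrxToBgr(const std::uint8_t *src, int width, int height,
                                    std::size_t stride)
{
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(width) * height * 3);
    std::size_t o = 0;
    for (int y = 0; y < height; ++y)
    {
        // Start of row y in the source, honoring any padding at the row end.
        const std::uint8_t *row = src + static_cast<std::size_t>(y) * stride;
        for (int x = 0; x < width; ++x)
        {
            dst[o++] = row[4 * x + 0]; // B
            dst[o++] = row[4 * x + 1]; // G
            dst[o++] = row[4 * x + 2]; // R; the fourth byte (x) is unused and dropped
        }
    }
    return dst;
}
```

With OpenCV the same effect can be had by passing the stride as the last argument of the cv::Mat constructor instead of cv::Mat::AUTO_STEP and then calling cv::cvtColor as before. Because the result is a separate buffer, it stays valid after the GStreamer buffer has been unmapped.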
