L1 Hands-On with the InternLM (书生) Large Model API and MCP [personal notes]



1. Prerequisites

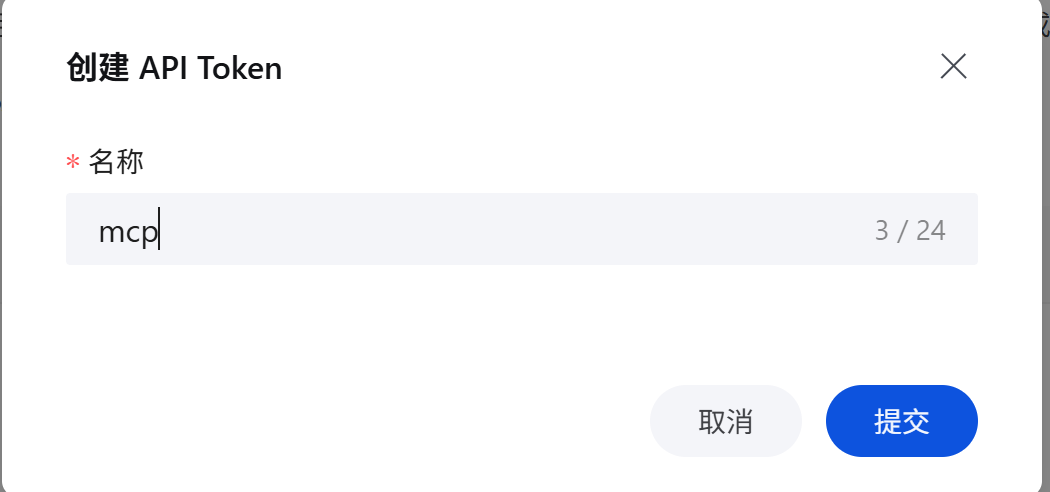

1.1 Obtain an API Key

API Key:

sk-xxxx (redacted)
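Hardcoding a key in scripts, as the examples below do with placeholders such as `api_key="eyJ0eXxx"`, makes it easy to leak by accident. A minimal sketch, assuming you export the key in an environment variable named `INTERN_API_KEY` (a hypothetical name, not something the platform requires), reads it at runtime instead:

```python
import os

# Hypothetical variable name: use whatever name fits your setup.
DEFAULT_ENV_VAR = "INTERN_API_KEY"


def load_api_key(env_var: str = DEFAULT_ENV_VAR) -> str:
    """Return the API key stored in `env_var`, raising if it is missing or empty."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Please set the {env_var} environment variable to your Intern API key")
    return key


# Usage (requires the openai package):
# from openai import OpenAI
# client = OpenAI(api_key=load_api_key(), base_url="https://chat.intern-ai.org.cn/api/v1/")
```

Before running a script, set the variable in the shell, e.g. `export INTERN_API_KEY=sk-xxxx`.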

1.2 Development machine setup

2. Environment setup

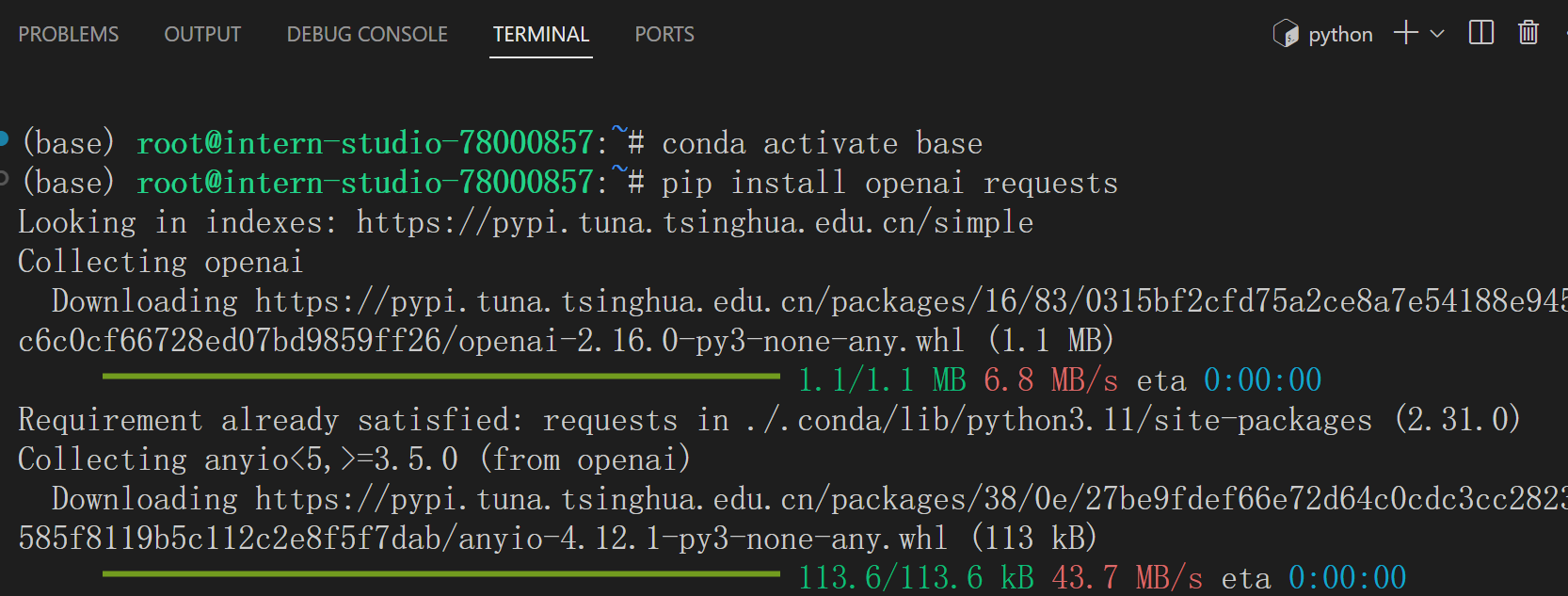

Install the dependencies:

```bash
conda activate base
pip install openai requests
```

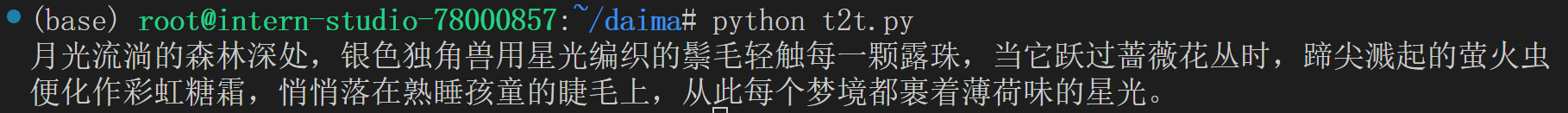

2.1 Text generation

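The Intern API is compatible with the OpenAI API and provides a simple interface to the latest Intern-S1 model for text generation, natural language processing, computer vision, and more. This example is text-to-text: it generates text output from a prompt, just as you would in the Intern web interface.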

t2t.py

```python
from openai import OpenAI

client = OpenAI(
    api_key="eyJ0eXxx",  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

completion = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            # "Write a bedtime story about a unicorn; one sentence is enough."
            "content": "写一个关于独角兽的睡前故事,一句话就够了。",
        }
    ],
)

print(completion.choices[0].message.content)
```

Output:

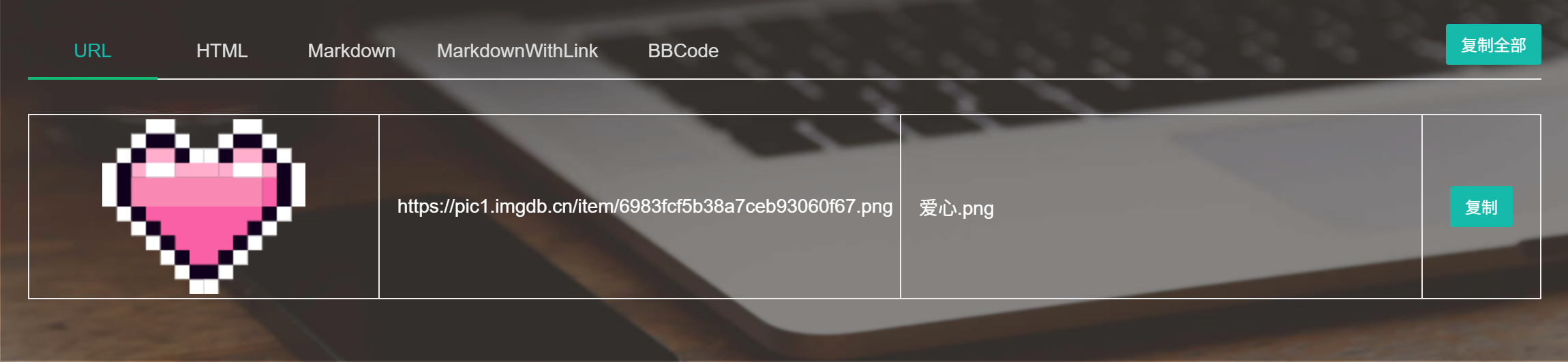
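Because the API is OpenAI-compatible, the SDK call above boils down to one HTTP POST to `base_url + "chat/completions"` with a Bearer token, the same endpoint the plain-`requests` example in section 2.3 uses. The sketch below builds that request with only the standard library so you can inspect it without sending anything; the helper name `build_chat_request` is hypothetical.

```python
import json
import urllib.request

BASE_URL = "https://chat.intern-ai.org.cn/api/v1/"


def build_chat_request(api_key: str, prompt: str, model: str = "intern-s1") -> urllib.request.Request:
    """Build (without sending) the POST request an OpenAI-compatible client makes for one prompt."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        BASE_URL + "chat/completions",
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # raw HTTP needs the "Bearer" prefix; the SDK adds it for you
        },
        method="POST",
    )


req = build_chat_request("sk-xxxx", "写一个关于独角兽的睡前故事,一句话就够了。")
print(req.get_method(), req.full_url)

# To actually send it (network access and a real key required):
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```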

2.2 Image input

You can also give the model images as input: scan receipts, analyze screenshots, or use computer vision to find objects in real-world photos.

2.2.1 Image input as a URL

A tool for turning a local image into a URL (image hosting): https://www.superbed.cn/

i2t.py

```python
from openai import OpenAI

client = OpenAI(
    api_key="eyJ0eXxx",  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

response = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "图片里有什么?"},  # "What is in the picture?"
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg",
                    },
                },
            ],
        }
    ],
    extra_body={"thinking_mode": True},
)

print(response.choices[0].message.content)
```

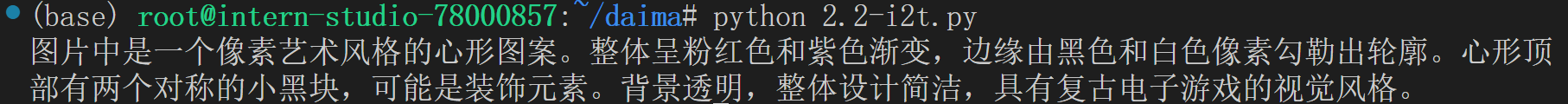

Output:

2.2.2 Image input as a local file (Base64)

i2t_base64.py

```python
import base64

from openai import OpenAI

client = OpenAI(
    api_key="eyJ0eXxx",  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)


def encode_image(image_path):
    """Read an image file and return its contents as a Base64 string."""
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


# Path to your image
image_path = "/root/share/intern.jpg"

# Encode the image as Base64
base64_image = encode_image(image_path)

completion = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "图片里有什么?"},  # "What is in the picture?"
                {
                    "type": "image_url",
                    "image_url": {
                        # Embed the file as a data URL; the MIME type should match the file (JPEG here)
                        "url": f"data:image/jpeg;base64,{base64_image}",
                    },
                },
            ],
        }
    ],
)

print(completion.choices[0].message.content)
```

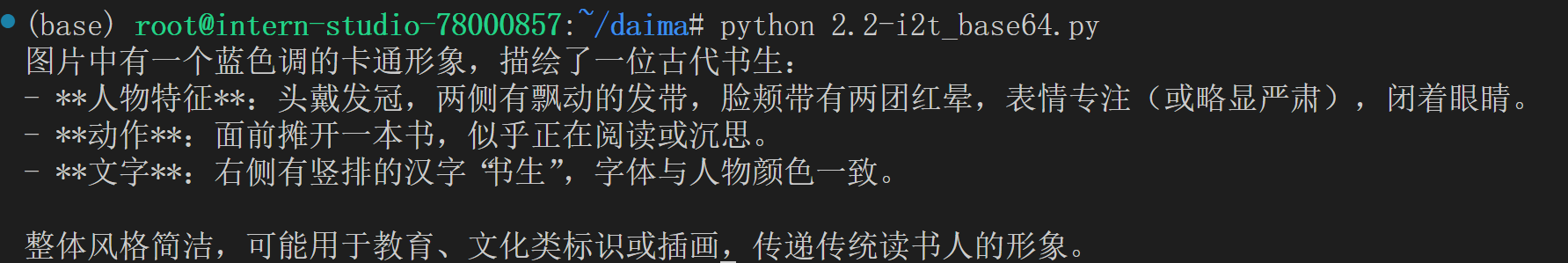

Output:
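The Base64 script always labels the data as `image/jpeg`. A small helper (hypothetical, not part of the SDK) can cover both 2.2.1 and 2.2.2: pass an http(s) URL through unchanged, or read a local file and build a data URL whose MIME type is guessed from the file extension with `mimetypes`. Whether the API accepts every MIME type this can produce (e.g. PNG, WebP) is an assumption worth checking.

```python
import base64
import mimetypes
from pathlib import Path


def image_part(source: str) -> dict:
    """Build an OpenAI-style image_url content part from an http(s) URL or a local file path."""
    if source.startswith(("http://", "https://")):
        url = source
    else:
        mime, _ = mimetypes.guess_type(source)
        mime = mime or "image/jpeg"  # fallback assumption when the extension is unknown
        data = base64.b64encode(Path(source).read_bytes()).decode("utf-8")
        url = f"data:{mime};base64,{data}"
    return {"type": "image_url", "image_url": {"url": url}}


def vision_message(question: str, source: str) -> list:
    """Return a messages list with one user turn combining a text question and one image."""
    return [{"role": "user", "content": [{"type": "text", "text": question}, image_part(source)]}]


# Usage: client.chat.completions.create(model="intern-s1", messages=vision_message("图片里有什么?", "/root/share/intern.jpg"))
```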

2.3 Tool use (function calling)

OpenAI format

Request format

Requests are sent as JSON. The main parameters are:

```json
{
  "model": "gpt-3.5-turbo",
  "messages": [
    { "role": "system", "content": "You are a helpful assistant." },
    { "role": "user", "content": "Hello, how are you?" }
  ],
  "stream": false,
  "temperature": 0.7,
  "max_tokens": 1000,
  "top_p": 1.0,
  "frequency_penalty": 0.0,
  "presence_penalty": 0.0,
  "stop": null,
  "user": "user-123"
}
```
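The listing above is raw JSON. When you build the same body in Python (for example for the `requests` version later in this section), the literals differ: JSON `false` and `null` are written as `False` and `None`, and `json.dumps` converts them back. A sketch, with the model name swapped to `intern-s1` for this API:

```python
import json

# The request fields listed above, written as Python literals
payload = {
    "model": "intern-s1",
    "messages": [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello, how are you?"},
    ],
    "stream": False,  # becomes JSON false
    "temperature": 0.7,
    "max_tokens": 1000,
    "top_p": 1.0,
    "frequency_penalty": 0.0,
    "presence_penalty": 0.0,
    "stop": None,  # becomes JSON null
    "user": "user-123",
}

body = json.dumps(payload, ensure_ascii=False)
print(body)
```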

get_weather_interns1.py

```python
from openai import OpenAI

client = OpenAI(
    api_key="sk-lYQQ6Qxx",  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

# Describe the tool with a JSON Schema so the model knows how to call it
tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get current temperature for a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country e.g. Bogotá, Colombia",
                }
            },
            "required": ["location"],
            "additionalProperties": False,
        },
        "strict": True,
    },
}]

completion = client.chat.completions.create(
    model="intern-s1",
    messages=[{"role": "user", "content": "What is the weather like in Paris today?"}],
    tools=tools,
)

# The model does not run the tool itself; it returns the call(s) it wants your code to make
print(completion.choices[0].message.tool_calls)
```

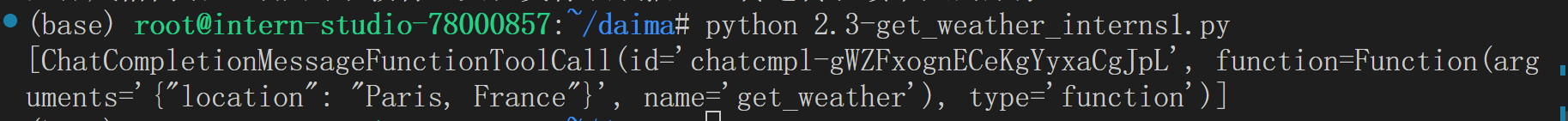

Output:

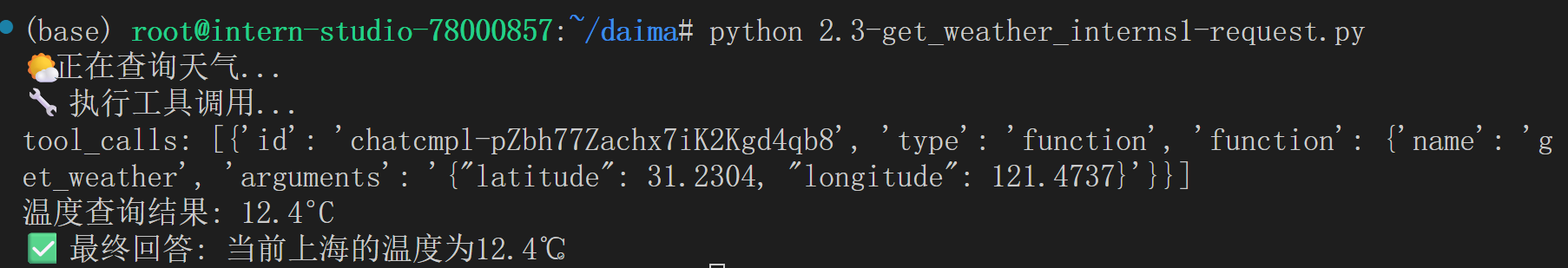
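The model does not execute the tool; it only returns `tool_calls`, and your code has to run the function and send the result back as a `role: "tool"` message (the plain-Python example below does this for one tool). With several tools, a name-to-function registry keeps the dispatch generic. A sketch assuming the tool calls are plain dicts, as in the `requests` JSON response (with the OpenAI SDK you could convert each object with `.model_dump()` first); `get_weather` here is a hypothetical stub:

```python
import json
from typing import Callable


def get_weather(location: str) -> str:
    """Hypothetical stub standing in for a real weather lookup."""
    return f"22°C in {location}"


# Map tool names (as declared in `tools`) to local Python functions
TOOL_REGISTRY: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute each requested tool and return the role="tool" messages to send back."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"] or "{}")
        func = TOOL_REGISTRY.get(name)
        content = func(**args) if func else f"Unknown tool: {name}"
        # tool_call_id links each result to the call that requested it
        results.append({"role": "tool", "tool_call_id": call["id"], "content": content})
    return results
```

After appending the assistant message and these tool messages to `messages`, a second request lets the model write the final answer.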

Calling the API directly from Python (with requests):

```python
import json

import requests

# API configuration
API_KEY = "eyJ0exxxxQ"
BASE_URL = "https://chat.intern-ai.org.cn/api/v1/"
ENDPOINT = f"{BASE_URL}chat/completions"

# Weather tool definition
WEATHER_TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "获取指定城市或坐标的当前温度(摄氏度)",  # "Get the current temperature (°C) for a city or coordinates"
        "parameters": {
            "type": "object",
            "properties": {
                "latitude": {"type": "number", "description": "纬度"},  # latitude
                "longitude": {"type": "number", "description": "经度"},  # longitude
            },
            "required": ["latitude", "longitude"],
            "additionalProperties": False,
        },
        "strict": True,
    },
}]


def get_weather(latitude, longitude):
    """
    Get the weather for the given coordinates.

    Args:
        latitude: latitude
        longitude: longitude

    Returns:
        The current temperature in °C, as a string
    """
    try:
        # Call the Open-Meteo API
        response = requests.get(
            f"https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}"
            "&current=temperature_2m,wind_speed_10m"
            "&hourly=temperature_2m,relative_humidity_2m,wind_speed_10m",
            timeout=10,
        )
        data = response.json()
        temperature = data["current"]["temperature_2m"]
        return f"{temperature}"
    except Exception as e:
        return f"Error fetching weather: {e}"


def make_api_request(messages, tools=None):
    """Send a chat-completions request and return the parsed JSON (or None on failure)."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {API_KEY}",  # raw HTTP needs the "Bearer" prefix
    }
    payload = {
        "model": "intern-s1",
        "messages": messages,
        "temperature": 0.7,
    }
    if tools:
        payload["tools"] = tools
        payload["tool_choice"] = "auto"
    try:
        response = requests.post(ENDPOINT, headers=headers, json=payload, timeout=30)
        response.raise_for_status()
        return response.json()
    except requests.exceptions.RequestException as e:
        print(f"API request failed: {e}")
        return None


def main():
    # Initial message: ask for the current temperature in Beijing
    messages = [{"role": "user", "content": "请查询当前北京的温度"}]
    print("🌤️ Querying the weather...")

    # First round: the model decides whether to call the tool
    response = make_api_request(messages, WEATHER_TOOLS)
    if not response:
        return
    assistant_message = response["choices"][0]["message"]

    # Check for tool calls
    if assistant_message.get("tool_calls"):
        print("🔧 Executing tool calls...")
        print("tool_calls:", assistant_message.get("tool_calls"))
        messages.append(assistant_message)

        # Run each requested tool and append its result
        for tool_call in assistant_message["tool_calls"]:
            function_name = tool_call["function"]["name"]
            function_args = json.loads(tool_call["function"]["arguments"])
            tool_call_id = tool_call["id"]

            if function_name == "get_weather":
                latitude = function_args["latitude"]
                longitude = function_args["longitude"]
                weather_result = get_weather(latitude, longitude)
                print(f"Temperature result: {weather_result}°C")

                # Add the tool result, linked to the call by tool_call_id
                tool_message = {
                    "role": "tool",
                    "content": weather_result,
                    "tool_call_id": tool_call_id,
                }
                messages.append(tool_message)

        # Second round: send the tool results back to get the final answer
        final_response = make_api_request(messages)
        if final_response:
            final_message = final_response["choices"][0]["message"]
            print(f"✅ Final answer: {final_message['content']}")
    else:
        print(f"Direct answer: {assistant_message.get('content', 'No content')}")


if __name__ == "__main__":
    main()
```

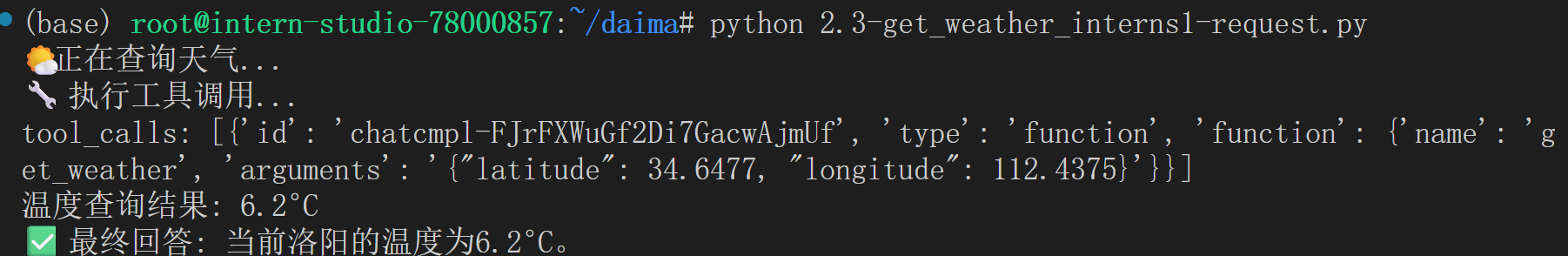

Output:

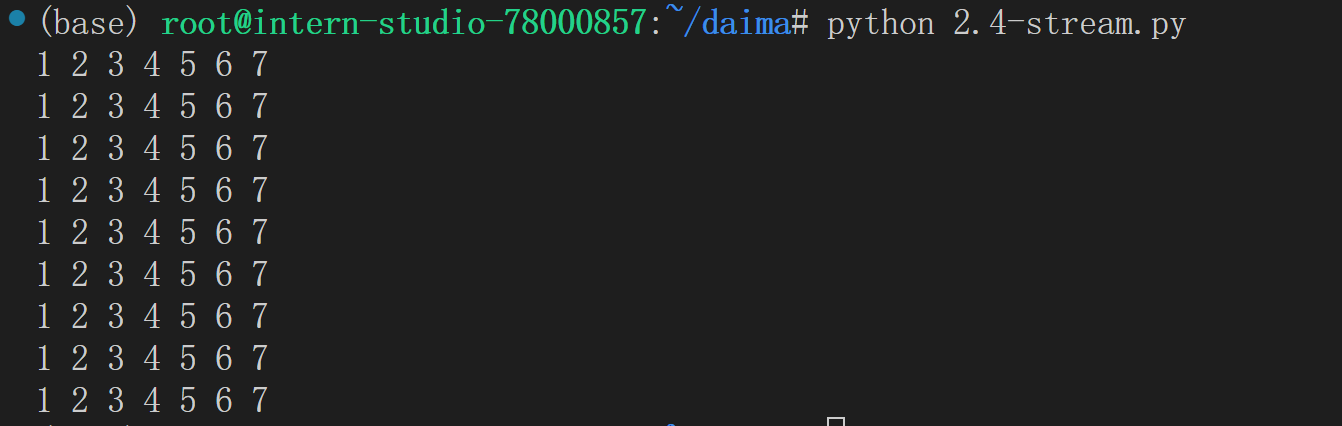
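`get_weather` assembles the Open-Meteo query string by hand, which is easy to get wrong (a missing `&`, or `&current` mangled into `¤t` when copied through HTML). A sketch of the same URL built with `urllib.parse.urlencode`; keeping commas unescaped via `safe=","` is only a readability choice, and the helper name `open_meteo_url` is hypothetical:

```python
from urllib.parse import urlencode

OPEN_METEO_FORECAST = "https://api.open-meteo.com/v1/forecast"


def open_meteo_url(latitude: float, longitude: float) -> str:
    """Build the same forecast URL that get_weather requests, encoding each parameter separately."""
    params = {
        "latitude": latitude,
        "longitude": longitude,
        "current": "temperature_2m,wind_speed_10m",
        "hourly": "temperature_2m,relative_humidity_2m,wind_speed_10m",
    }
    # safe="," leaves the comma-separated variable lists unescaped, purely for readability
    return f"{OPEN_METEO_FORECAST}?{urlencode(params, safe=',')}"


print(open_meteo_url(39.9, 116.4))  # approximate coordinates of Beijing
```

With `requests`, you can instead pass the dict as `requests.get(OPEN_METEO_FORECAST, params=params, timeout=10)` and let the library do the encoding.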

2.4 Streaming

Set stream=True to enable streaming, so the reply appears piece by piece, just like in the Intern web interface.

stream.py

```python
from openai import OpenAI

client = OpenAI(
    api_key="eyxxxx",
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

stream = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            "content": "Say '1 2 3 4 5 6 7' ten times fast.",
        },
    ],
    stream=True,
)

# Print only the incremental text as it arrives
for chunk in stream:
    # Some chunks can arrive with an empty choices list; skip them
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="", flush=True)  # no newline between pieces
```

Output:

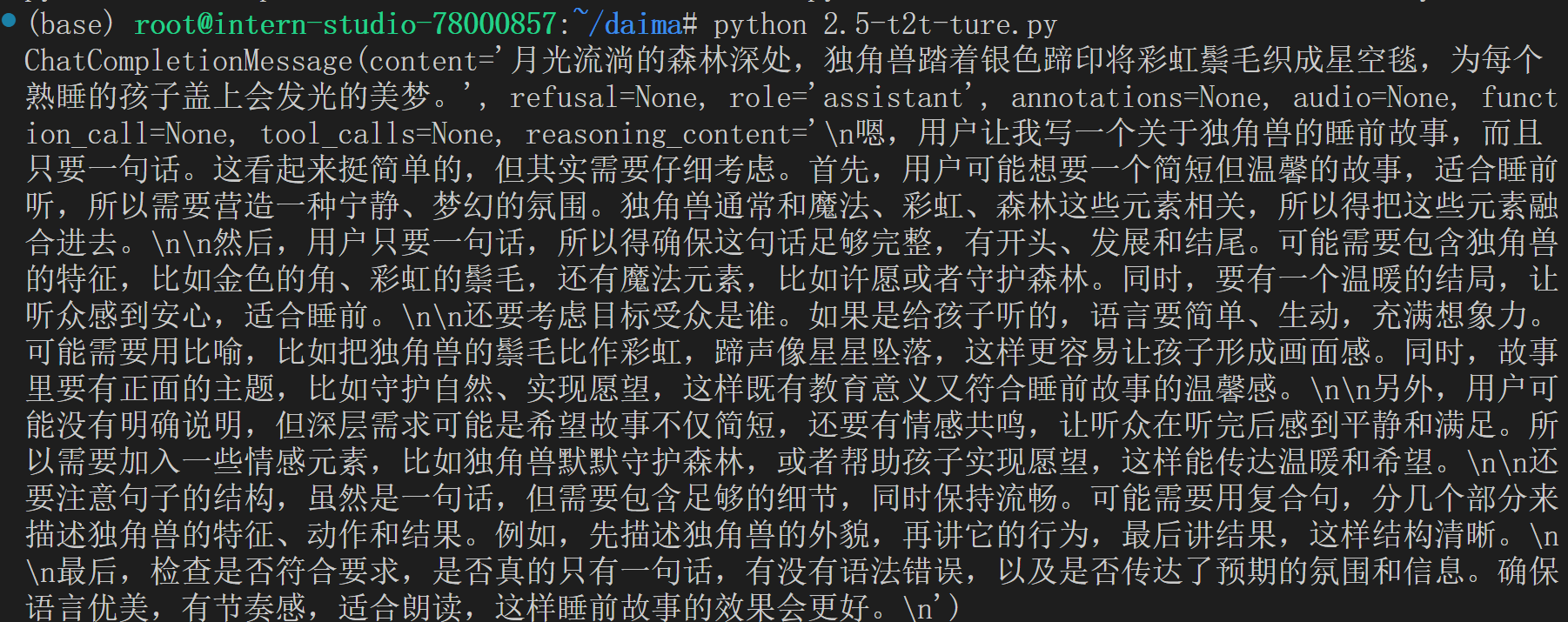
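If you also need the complete reply after streaming (for logging, or to append it to `messages` for the next turn), collect the deltas while printing them. A sketch; the `SimpleNamespace` chunks are hypothetical stand-ins so it runs offline, and with a real `stream` object you would call `collect_stream(stream)`:

```python
from types import SimpleNamespace


def collect_stream(chunks) -> str:
    """Print streamed deltas as they arrive and return the full reply text."""
    parts = []
    for chunk in chunks:
        # Skip chunks without choices or without text (e.g. role-only or final chunks)
        if not chunk.choices:
            continue
        text = chunk.choices[0].delta.content
        if text:
            print(text, end="", flush=True)
            parts.append(text)
    print()  # finish the line once the stream ends
    return "".join(parts)


def fake_chunk(text):
    """Hypothetical stand-in for an SDK chunk object, for offline testing."""
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])


reply = collect_stream([fake_chunk("1 2 "), fake_chunk(None), SimpleNamespace(choices=[]), fake_chunk("3")])
```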

2.5 Turning thinking mode on and off

Enable thinking mode by passing extra_body={"thinking_mode": True}; pass False to turn it off.

t2t-true.py

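```python
from openai import OpenAI

client = OpenAI(
    api_key="eyxxA",  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

completion = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            # "Write a bedtime story about a unicorn; one sentence is enough."
            "content": "写一个关于独角兽的睡前故事,一句话就够了。",
        }
    ],
    # thinking_mode is not a standard OpenAI parameter, so it goes in extra_body
    extra_body={"thinking_mode": True},
)

# Print the whole message object, not just .content, to see every returned field
print(completion.choices[0].message)
```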

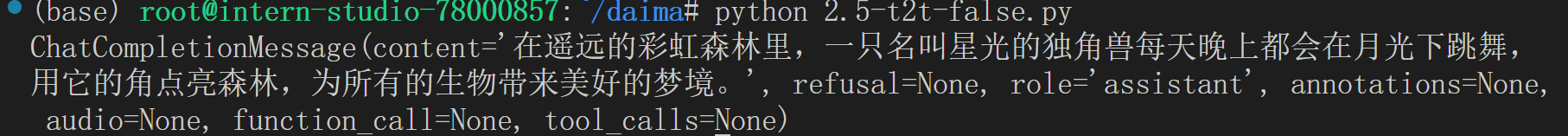

t2t-false.py

```python
from openai import OpenAI

client = OpenAI(
    api_key="eyJ0xxxmA",  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

completion = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            # "Write a bedtime story about a unicorn; one sentence is enough."
            "content": "写一个关于独角兽的睡前故事,一句话就够了。",
        }
    ],
    # Same request, with thinking mode switched off
    extra_body={"thinking_mode": False},
)

print(completion.choices[0].message)
```

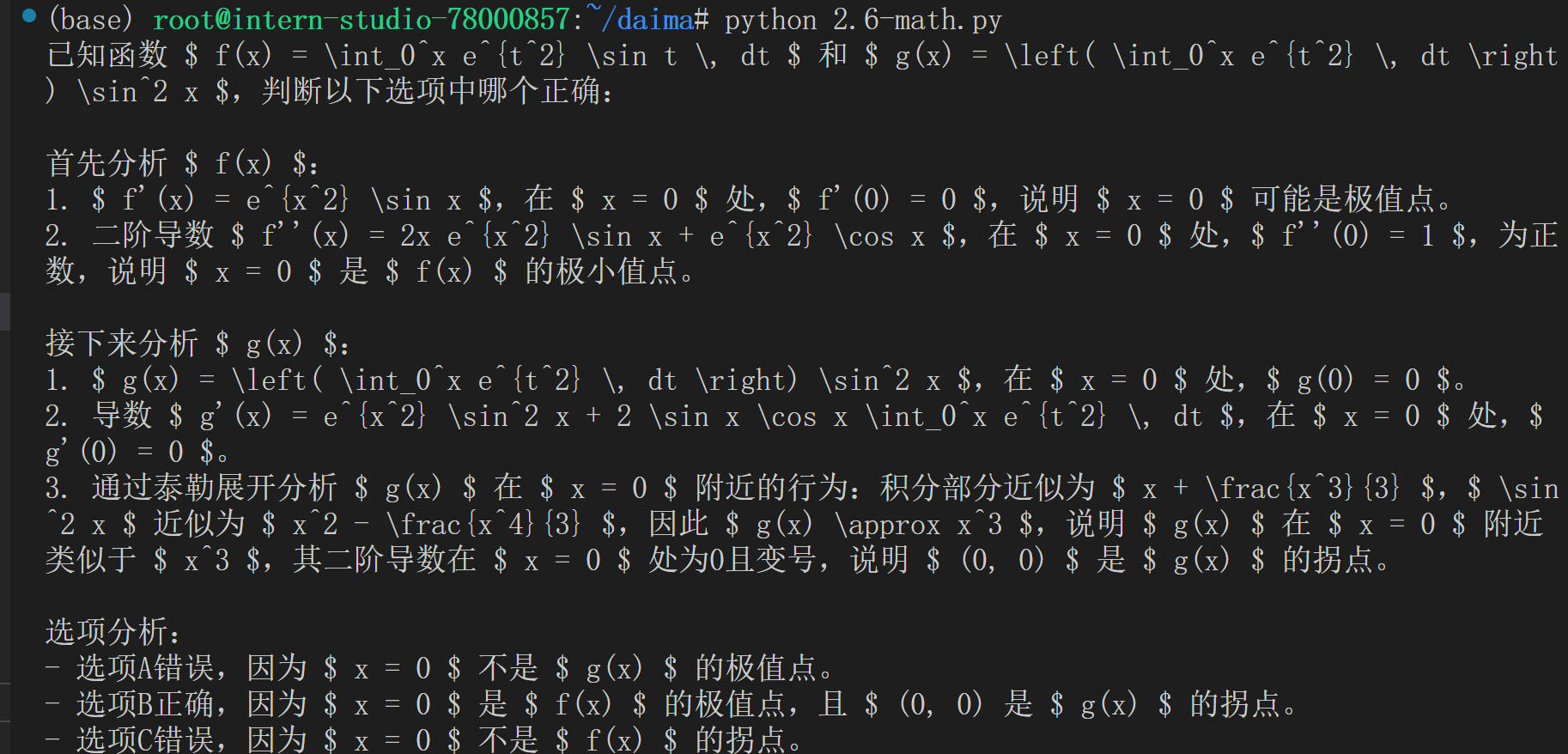
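Since the two scripts differ only in one flag, a helper makes the on/off comparison a one-argument change. `thinking_mode` travels in `extra_body`, which the OpenAI Python SDK merges into the JSON request body. A minimal sketch; `ask` is a hypothetical name, and it works with any object exposing `chat.completions.create`, such as the `client` built above:

```python
def ask(client, prompt: str, thinking: bool, model: str = "intern-s1"):
    """Send one user prompt with Intern-S1's thinking mode switched on or off.

    thinking_mode is not a standard OpenAI parameter, so it is sent via extra_body,
    which the OpenAI Python SDK merges into the JSON request body.
    """
    return client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        extra_body={"thinking_mode": thinking},
    )


# Usage with the `client` from t2t-true.py / t2t-false.py:
# for flag in (True, False):
#     reply = ask(client, "写一个关于独角兽的睡前故事,一句话就够了。", thinking=flag)
#     print(flag, reply.choices[0].message)
```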

2.6 Scientific capabilities

Math

```python
from getpass import getpass

from openai import OpenAI

# Prompt for the key at runtime so it never appears in the script
api_key = getpass("Enter your API key (input is hidden): ")

client = OpenAI(
    api_key=api_key,  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

response = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "这道题选什么"},  # "Which option is correct for this question?"
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "https://pic1.imgdb.cn/item/68d24759c5157e1a882b2505.jpg",
                    },
                },
            ],
        }
    ],
    extra_body={"thinking_mode": True},
)

print(response.choices[0].message.content)
```

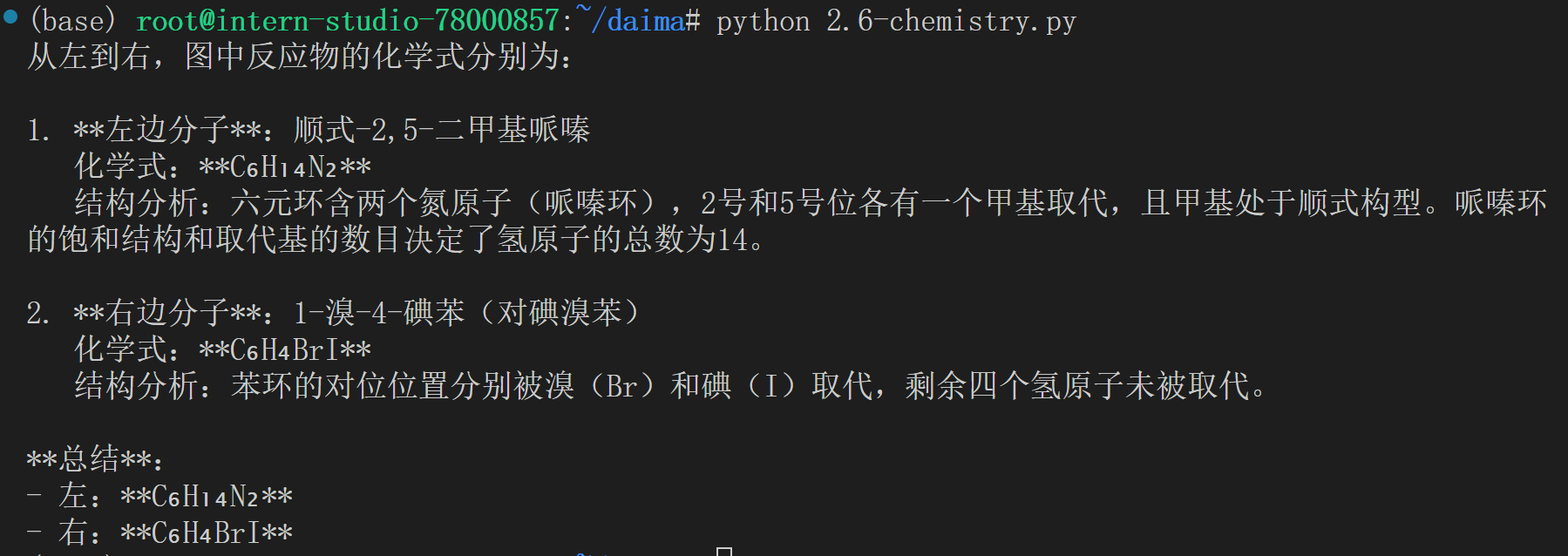

Chemistry:

chemistry

```python
from getpass import getpass

from openai import OpenAI

# Prompt for the key at runtime so it never appears in the script
api_key = getpass("Enter your API key (input is hidden): ")

client = OpenAI(
    api_key=api_key,  # pass the token here, without the "Bearer" prefix
    base_url="https://chat.intern-ai.org.cn/api/v1/",
)

response = client.chat.completions.create(
    model="intern-s1",
    messages=[
        {
            "role": "user",
            "content": [
                # "From left to right, give the chemical formulas of the reactants in the image"
                {"type": "text", "text": "从左到右,给出图中反应物的化学式"},
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "https://pic1.imgdb.cn/item/68d23c82c5157e1a882ad47f.png",
                    },
                },
            ],
        }
    ],
    # Thinking mode plus sampling settings, all merged into the request body
    extra_body={
        "thinking_mode": True,
        "temperature": 0.7,
        "top_p": 1.0,
        "top_k": 50,
        "min_p": 0.0,
    },
)

print(response.choices[0].message.content)
```

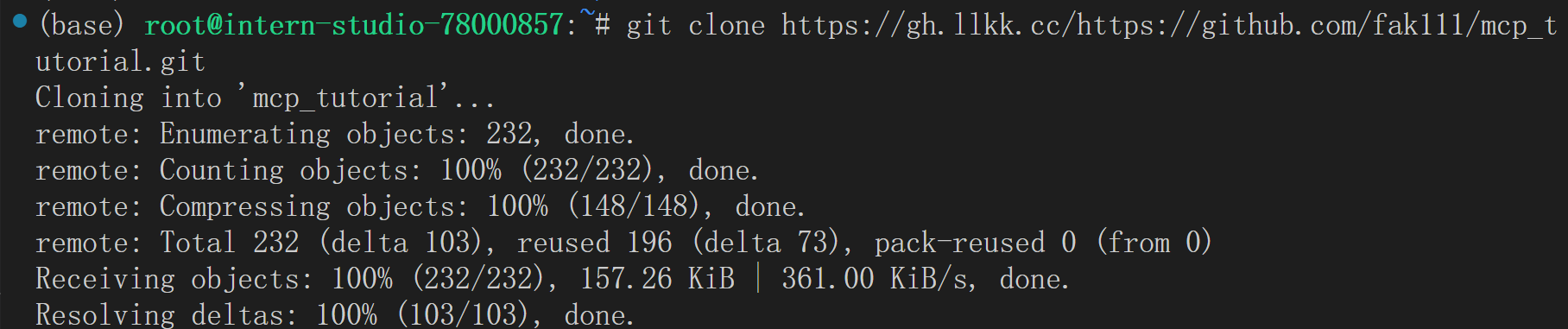
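The chemistry example sends every sampling option through `extra_body`. `temperature` and `top_p` are also named arguments of `chat.completions.create`, while `top_k` and `min_p` are not, so an alternative is to split the options. A sketch; the `STANDARD_PARAMS` set lists a few named arguments of the OpenAI SDK and is deliberately incomplete, and both routes should end up in the same JSON body:

```python
# A few options that chat.completions.create accepts as named arguments (not an exhaustive list)
STANDARD_PARAMS = {"temperature", "top_p", "max_tokens", "frequency_penalty", "presence_penalty", "stop", "seed"}


def split_params(params: dict) -> tuple[dict, dict]:
    """Split request options into named create() kwargs and an extra_body dict."""
    named = {k: v for k, v in params.items() if k in STANDARD_PARAMS}
    extra = {k: v for k, v in params.items() if k not in STANDARD_PARAMS}
    return named, extra


named, extra = split_params({"thinking_mode": True, "temperature": 0.7, "top_p": 1.0, "top_k": 50, "min_p": 0.0})
# client.chat.completions.create(model="intern-s1", messages=messages, **named, extra_body=extra)
```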

3. Hands-on with MCP

3.1 What is MCP?

MCP (Model Context Protocol) is a protocol designed for AI (think of it as a USB-C adapter for AI models) whose core purpose is to extend what AI can do. Through MCP, AI can:

- Access external data

- Operate on the file system

- Call various service APIs

- Carry out complex workflows

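In this tutorial, you will learn how to let the Intern-S1 API go beyond plain conversation and achieve the following core capabilities:

- External data access: connect to and process data from a variety of external sources

- File system operations: full create, read, modify, and delete capabilities, amounting to a command-line version of Cursor.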

Let's start exploring what MCP can do!
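Under the hood, MCP messages are JSON-RPC 2.0; with the stdio transport, the client writes one JSON object per line to the server's stdin. The sketch below only builds two such messages, `tools/list` and `tools/call`; a real session must first complete MCP's `initialize` handshake, and the tool name `get_forecast` and its arguments are hypothetical:

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC request ids must be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize one JSON-RPC 2.0 request as a single newline-terminated line (stdio framing)."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    # json.dumps without indent never emits raw newlines, so each message stays on one line
    return json.dumps(message, ensure_ascii=False) + "\n"


list_tools = jsonrpc_request("tools/list")
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_forecast", "arguments": {"latitude": 39.9, "longitude": 116.4}},  # hypothetical tool
)
print(list_tools, end="")
print(call_tool, end="")
```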

mcp_tutorial/

├── mcp-client/ # 客户端应用

├── mcp-server/ # 服务端微服务

│ ├── podcast/ # 播客服务

│ ├── weather/ # 天气服务

│ ├── gmail/ # Gmail服务

│ └── filesystem/ # 文件管理服务

└── install.sh # 一键安装环境脚本

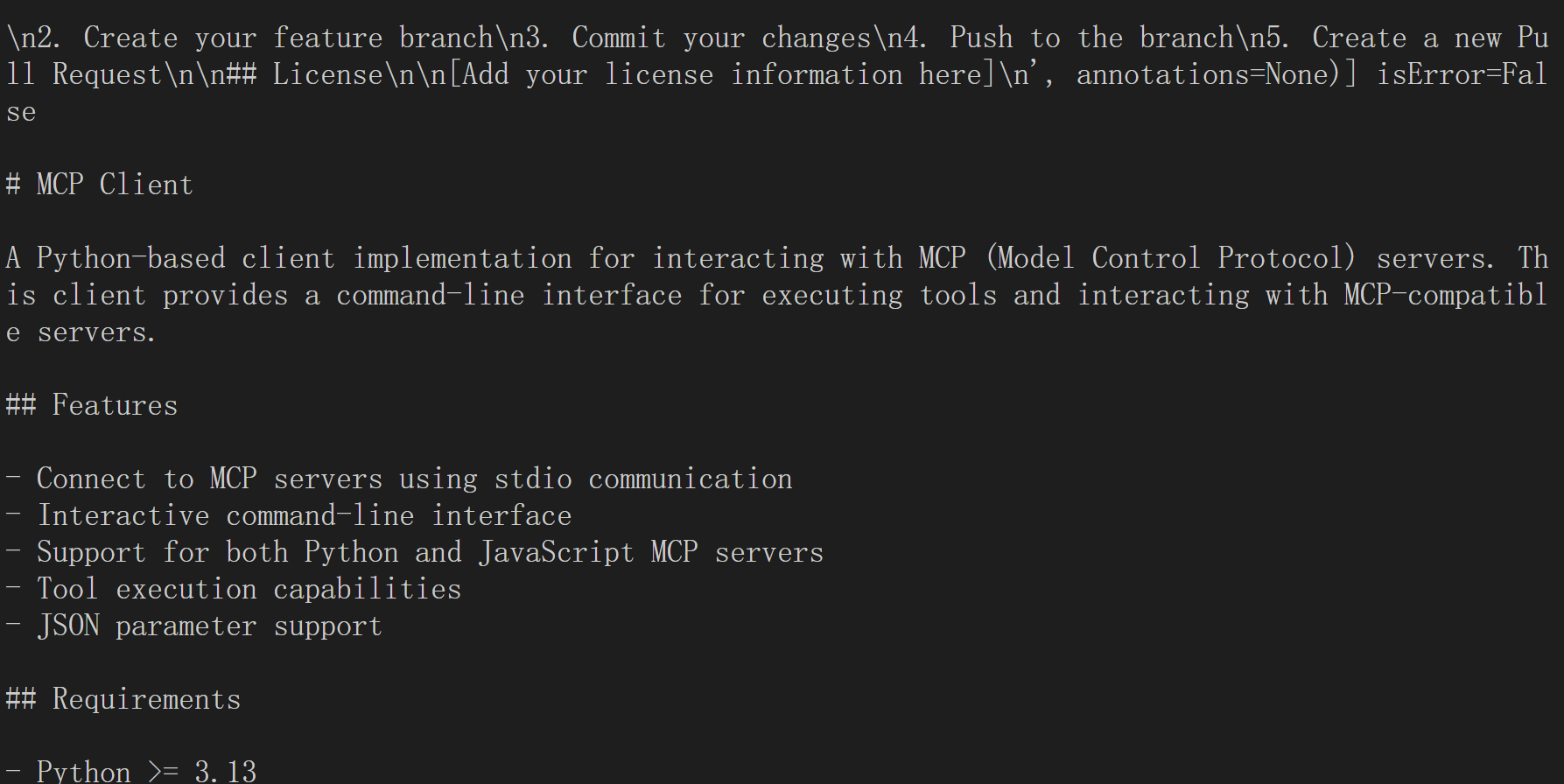

└── README.md # 说明文档

3.1.1. Environment setup

Install the environment:

```bash
bash install.sh
```

3.1.2. Configure the API

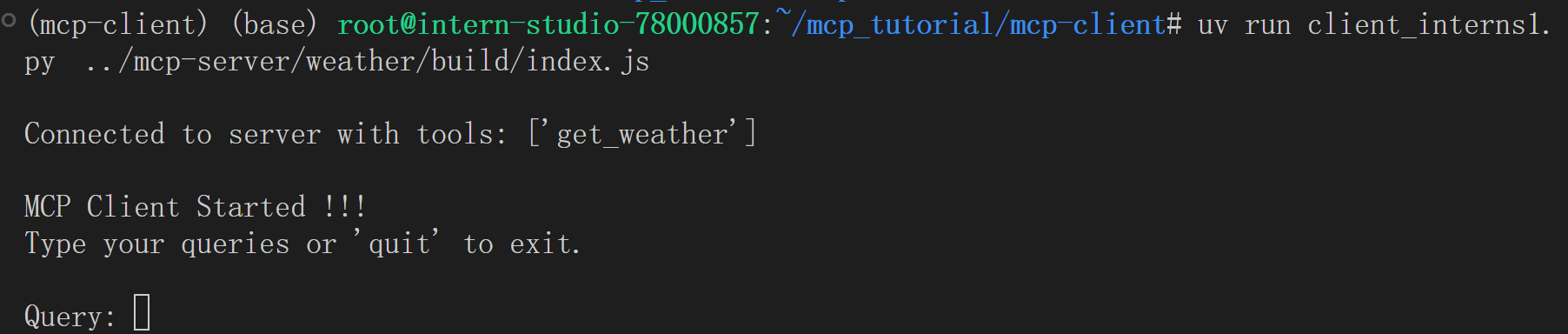

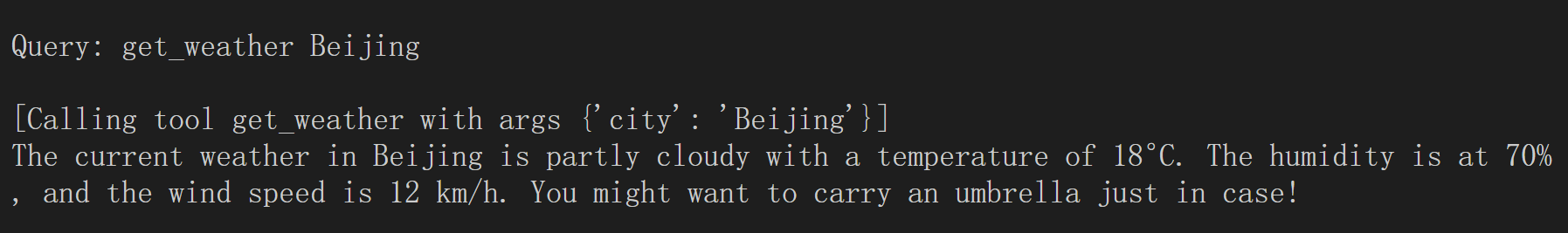

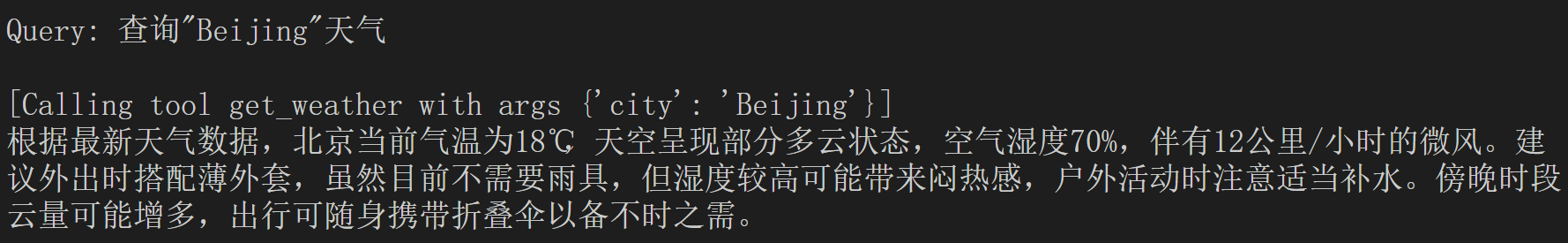

3.2 🌤️ Weather service example

```bash
cd mcp-client
source .venv/bin/activate
uv run client_interns1.py ../mcp-server/weather/build/index.js
```

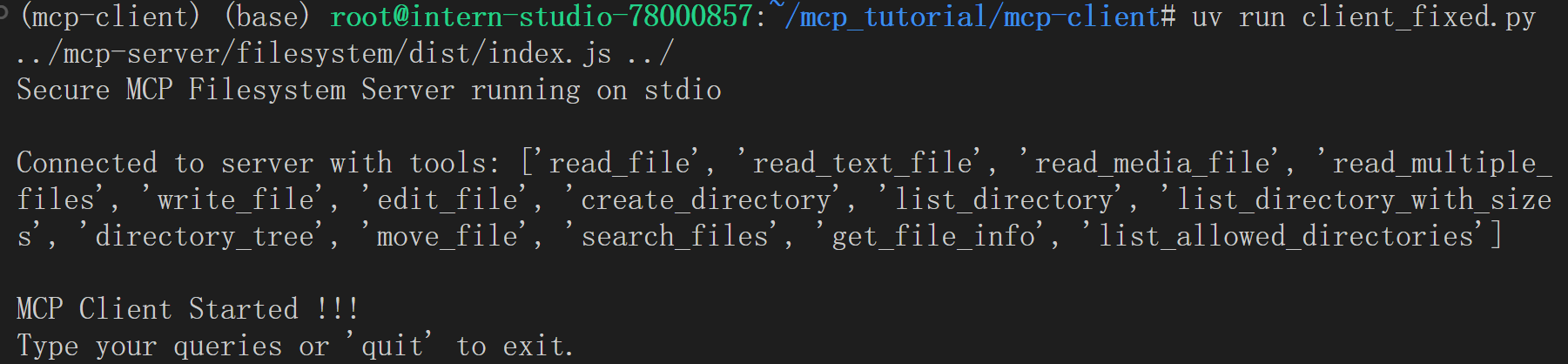

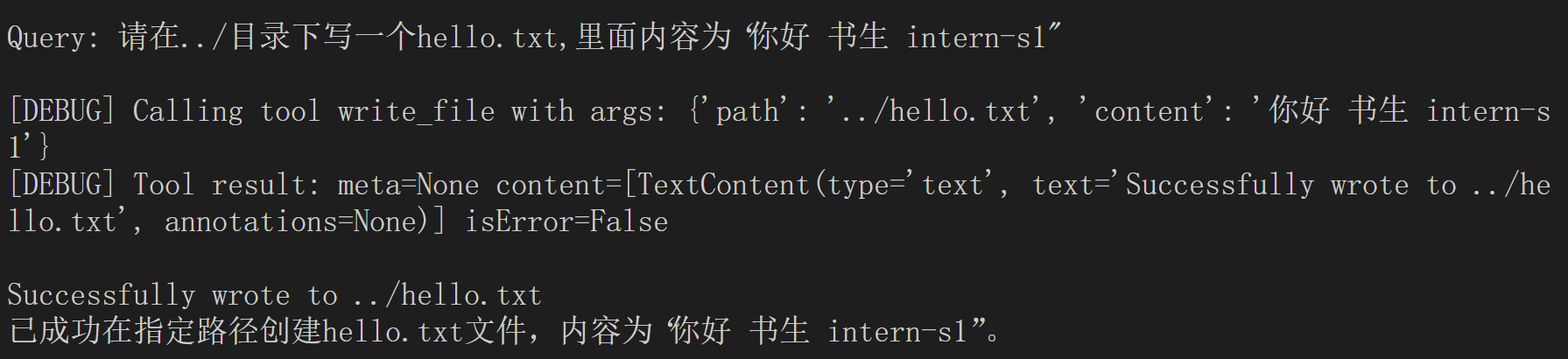
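To let Intern-S1 use an MCP server's tools, a client such as `client_interns1.py` has to translate the tool descriptions returned by `tools/list` (`name`, `description`, `inputSchema`) into the OpenAI `tools` format from section 2.3. The script's source is not shown here, so the following is only a sketch of one way to do that mapping; the example tool is hypothetical:

```python
def mcp_tool_to_openai(tool: dict) -> dict:
    """Convert one MCP tool description (from tools/list) into an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP's inputSchema is already a JSON Schema, which is what OpenAI's "parameters" expects
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def mcp_tools_to_openai(list_result: dict) -> list[dict]:
    """Convert the whole tools/list result into the `tools` argument for chat.completions.create."""
    return [mcp_tool_to_openai(t) for t in list_result.get("tools", [])]


example = {"tools": [{
    "name": "get_forecast",  # hypothetical weather tool
    "description": "Get weather forecast for a location",
    "inputSchema": {"type": "object", "properties": {"latitude": {"type": "number"}}, "required": ["latitude"]},
}]}
print(mcp_tools_to_openai(example))
```

When the model then returns a tool call, the client would forward its name and parsed arguments to the server as a `tools/call` request.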

3.3 📁 File system service

Start the file service

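The command to start the file service has this form, where arg1 is the MCP server script and arg2 is passed on to that server (in the example below, the directory `../`):

```bash
uv run client_fixed.py arg1 arg2
```

For example:

```bash
cd mcp-client
source .venv/bin/activate
uv run client_fixed.py ../mcp-server/filesystem/dist/index.js ../
```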

Started successfully:

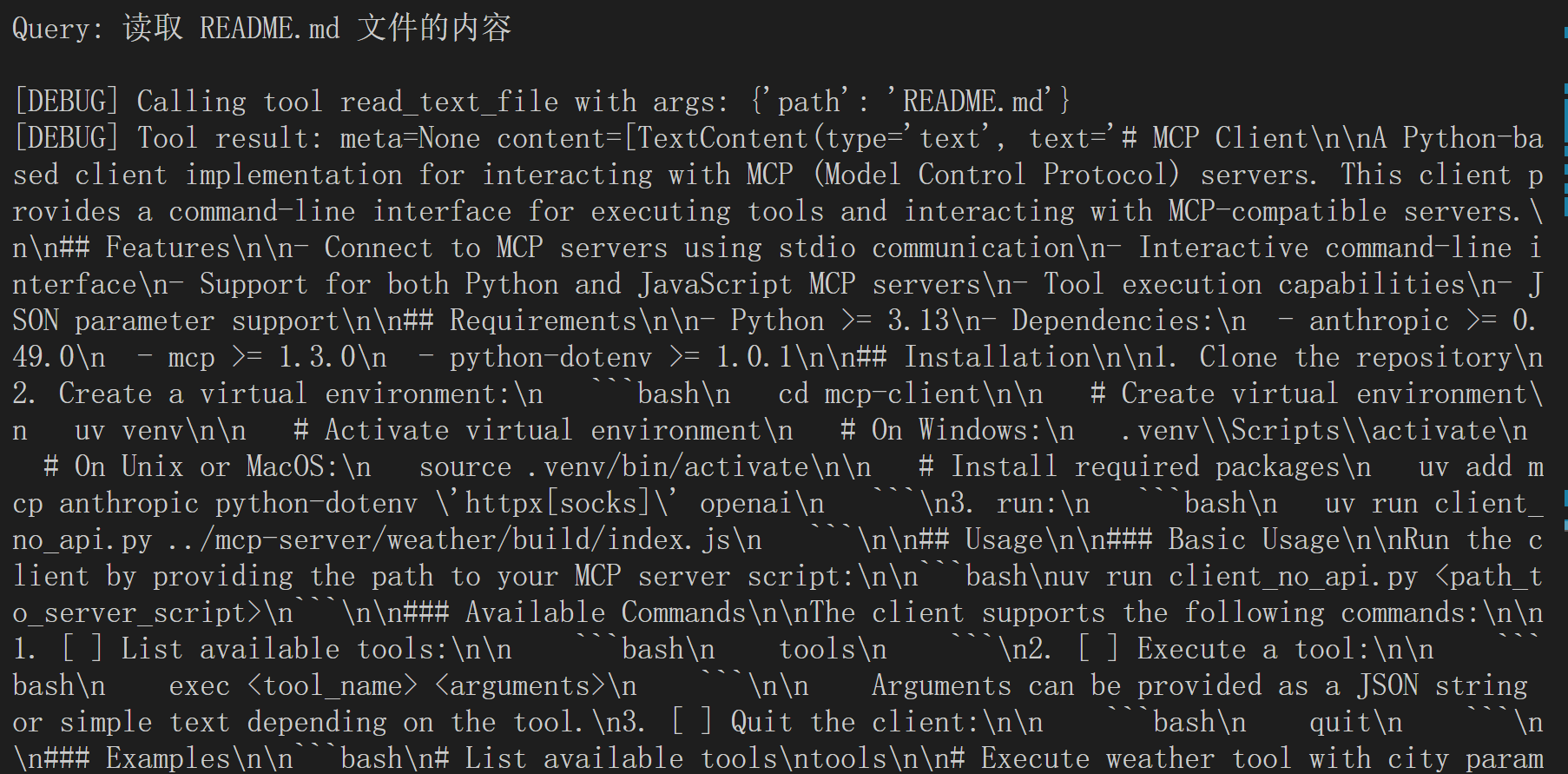
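The client most likely launches the server script given as `arg1` as a subprocess and talks to it over stdio. A sketch of how such a client might choose the interpreter from the file extension, in the style of the official MCP Python quickstart client; this is an assumption about `client_fixed.py`, not its actual code, and `server_command` is a hypothetical helper:

```python
import sys
from pathlib import Path


def server_command(script_path: str, *extra_args: str) -> list[str]:
    """Return the argv used to launch an MCP server script, forwarding any extra arguments."""
    suffix = Path(script_path).suffix
    if suffix == ".py":
        interpreter = sys.executable  # the Python running the client
    elif suffix == ".js":
        interpreter = "node"
    else:
        raise ValueError(f"Server script must be a .py or .js file, got {script_path!r}")
    return [interpreter, script_path, *extra_args]


print(server_command("../mcp-server/filesystem/dist/index.js", "../"))
# → ['node', '../mcp-server/filesystem/dist/index.js', '../']
```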

命令:列出文件:列出当前目录下的所有文件

命令:读取文件:读取 README.md 文件的内容

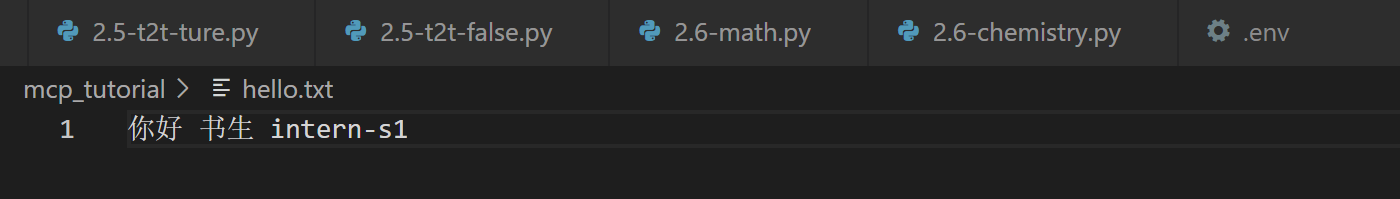

命令:创建文件:请在…/目录下写一个hello.txt,里面内容为“你好 书生 intern-s1"

命令:搜索文件:搜索所有 .md 文件
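A file server exposed to a model should refuse paths outside the directory it was given; the reference MCP filesystem server restricts operations to a list of allowed directories in this spirit. A sketch of such a containment check, assuming the root is the directory passed on the command line (`../` above); `is_allowed` is a hypothetical helper, not this server's API:

```python
from pathlib import Path


def is_allowed(requested: str, allowed_root: str) -> bool:
    """Return True if `requested` (relative to allowed_root, or absolute) resolves inside allowed_root.

    resolve() collapses ".." segments and follows symlinks, so paths such as
    "../secret.txt" cannot escape the root.
    """
    root = Path(allowed_root).resolve()
    target = (root / requested).resolve()
    return target == root or root in target.parents
```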

4. Summary

Congratulations on completing Hands-On with the InternLM Large Model API and MCP! With this guide, you have learned:

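✅ Core skills

- Advanced use of the Intern-S1 API

- Practical use of the MCP protocol

- Integrating the weather service and the file system

✅ Practical results

- Built an intelligent assistant that can fetch external data

- Implemented complete file system operations

- Became aware of the parameter-matching issue in the MCP protocol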

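🚀 Next steps

Now that you have opened the door to extending AI capabilities, you can explore more MCP services, such as Gmail integration and podcast generation, and build your own intelligent workflows!

Thank you for following along; we look forward to the AI applications you create! 🌟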
