Building an AI Agent from Scratch


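If you've ever tried to build an AI agent from scratch, you've probably seen this happen:

- It "thinks" forever.

- It calls tools in a loop.

- It keeps repeating itself.

- It burns through tokens like a leaky pipe.

That's not an AI agent. That's a confused chatbot with tools.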

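In this article, you'll build an AI agent from scratch in Python (no LangChain, no framework) around a minimal agent loop that actually works:

- Tool calling (safe + predictable)

- Memory (short-term + long-term)

- Planning + replanning (simple, not fancy)

- Hard stop conditions (so it can't run away)

- A trace log you can debug and improve

By the end, you'll have a clean, copy-paste-ready agent you can use as the foundation for a real product.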

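1. What an "AI Agent" Really Is

To build an AI agent from scratch, you only need one idea:

An agent is a loop:

Plan → Act (tools) → Observe → Remember → Replan → Stop

That's it.

A chatbot answers once. An agent decides what to do next, uses tools, and keeps going until the task is done.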
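To make that loop concrete, here is a toy version of it. This is a sketch, not the agent built later in the article: `decide` is a hypothetical stand-in for the model call (scripted so the example runs offline), tools take and return plain strings, and `max_steps` is an assumed hard cap that shows where "Stop" fits.

```python
from typing import Callable, Dict, List

Decision = Dict[str, str]


def run_loop(decide: Callable[[List[str]], Decision],
             tools: Dict[str, Callable[[str], str]],
             max_steps: int = 5) -> str:
    """Plan -> Act -> Observe -> Remember -> Replan -> Stop, in miniature."""
    memory: List[str] = []  # everything the agent has observed so far
    for _ in range(max_steps):  # hard stop: never loop forever
        decision = decide(memory)  # plan / replan from the current memory
        if decision["type"] == "final":
            return decision["answer"]  # stop: the agent says it is done
        tool = tools[decision["tool"]]
        observation = tool(decision["input"])  # act, then observe
        memory.append(f"{decision['tool']} -> {observation}")  # remember
    return "stopped: step limit reached"


# A scripted "model" (hypothetical): call one tool, then answer with what it saw.
def scripted_decide(memory: List[str]) -> Decision:
    if not memory:
        return {"type": "tool_call", "tool": "upper", "input": "hello"}
    return {"type": "final", "answer": memory[-1]}


print(run_loop(scripted_decide, {"upper": str.upper}))  # prints: upper -> HELLO
```

Swapping `scripted_decide` for a real model call is what turns this skeleton into an agent; everything else in the article is about controlling that call.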

The big secret:

A good agent isn't "smart." A good agent is *controlled*.

So we'll focus on control: structured output, tool safety, memory compression, and stop conditions.

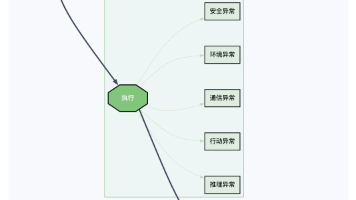

2. Agent Design

We'll implement four pieces:

1) Tools

A tool is just a Python function with:

- a name

- a description

- a JSON schema for its input
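The full code below passes each tool's schema to the model but does not check the arguments the model sends back. A minimal guard, assuming flat object schemas like the ones used in this article (`type`, `properties`, `required`), could look like this. It is a sketch, not a full JSON Schema validator; the `check_args` name is hypothetical.

```python
from typing import Any, Dict, List

# JSON Schema type names mapped to Python types (assumption: flat schemas only).
_JSON_TYPES: Dict[str, Any] = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_args(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the args look valid."""
    problems: List[str] = []
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required field: {name}")
    props = schema.get("properties", {})
    for name, value in args.items():
        if name not in props:
            problems.append(f"unexpected field: {name}")
            continue
        type_name = props[name].get("type")
        expected = _JSON_TYPES.get(type_name)
        if expected is None:
            continue  # unknown or missing type: skip the check
        # bool is a subclass of int in Python, so only accept it for "boolean"
        wrong_bool = isinstance(value, bool) and type_name != "boolean"
        if wrong_bool or not isinstance(value, expected):
            problems.append(f"field {name} should be {type_name}")
    return problems


calc_schema = {"type": "object",
               "properties": {"expression": {"type": "string"}},
               "required": ["expression"]}
print(check_args(calc_schema, {"expression": "2 + 3"}))  # []
print(check_args(calc_schema, {"expr": 5}))
# ['missing required field: expression', 'unexpected field: expr']
```

One option is to return these problems to the model as a failed tool observation instead of running the tool, so it can correct its own arguments on the next step.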

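2) Memory

Two layers:

- Short-term memory: the most recent conversation + tool results

- Long-term memory: compact notes saved to disk and retrieved later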
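The two layers can be sketched in a few lines. This version is an assumption-laden simplification: short-term memory is a `collections.deque` with `maxlen` (the full code below slices a list instead), and long-term notes live in a Python list rather than the JSONL file the full code uses. Retrieval follows the same idea as the full code: rank notes by how many words they share with the query.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class TwoLayerMemory:
    def __init__(self, window: int = 4) -> None:
        # Short-term: a deque with maxlen silently drops the oldest entry.
        self.short: Deque[Dict[str, str]] = deque(maxlen=window)
        # Long-term: compact, durable notes (in memory here, a file in practice).
        self.notes: List[str] = []

    def observe(self, role: str, content: str) -> None:
        self.short.append({"role": role, "content": content})

    def remember(self, note: str) -> None:
        self.notes.append(note.strip())

    def recall(self, query: str, k: int = 2) -> List[str]:
        """Rank notes by keyword overlap with the query (no embeddings)."""
        q = set(query.lower().split())
        scored: List[Tuple[int, str]] = [
            (len(q & set(n.lower().split())), n) for n in self.notes
        ]
        scored = [s for s in scored if s[0] > 0]  # drop notes with no overlap
        scored.sort(key=lambda s: s[0], reverse=True)
        return [n for _, n in scored[:k]]


mem = TwoLayerMemory(window=2)
for i in range(3):
    mem.observe("assistant", f"step {i}")
print([m["content"] for m in mem.short])  # ['step 1', 'step 2']
mem.remember("user prefers python examples")
mem.remember("deadline is friday")
print(mem.recall("write python examples"))  # ['user prefers python examples']
```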

3) Planning

Two layers:

- High-level plan: 3–6 bullet points

- Next action: a single step (call a tool or answer)
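Models don't always respect "3–6 bullets" or "one step." A small normalizer keeps the plan bounded before it is fed back into the prompt. This is a sketch that mirrors what the full code does (it caps the plan at 6 items of 140 characters); dropping empty bullets, keeping only the first line of `next`, and the `normalize_plan` name are assumptions added for illustration.

```python
from typing import Any, Dict, List, Tuple


def normalize_plan(decision: Dict[str, Any],
                   max_items: int = 6,
                   max_chars: int = 140) -> Tuple[List[str], str]:
    """Return (high_level_plan, next_step) with both kept small."""
    raw_plan = decision.get("plan")
    if not isinstance(raw_plan, list):
        raw_plan = []
    # Drop empty bullets, trim each one, and cap the number of bullets.
    plan = [str(p).strip()[:max_chars] for p in raw_plan if str(p).strip()]
    plan = plan[:max_items]
    # The next action is a single step: keep only the first non-empty line.
    next_lines = [ln.strip() for ln in str(decision.get("next") or "").splitlines() if ln.strip()]
    next_step = next_lines[0] if next_lines else ""
    return plan, next_step


decision = {"type": "plan",
            "plan": ["Outline", "", "Draft code", "Test", "Edit", "Publish", "Promote", "Rest"],
            "next": "Write the outline\nthen draft the code"}
print(normalize_plan(decision))
# (['Outline', 'Draft code', 'Test', 'Edit', 'Publish', 'Promote'], 'Write the outline')
```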

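4) Stop conditions (this is what makes it "real")

Hard limits:

- Maximum number of steps

- Maximum number of tool calls

- Maximum wall-clock time

- Repeated-action detection

Soft limits:

- If 2 steps pass with no new progress, stop

- If the plan is stable and the output is ready, finish

That's the difference between a "demo agent" and an agent people trust.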
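The full code below enforces the hard limits in `_should_stop`; the "no new progress for 2 steps" soft limit is only requested in the prompt. One way to make it concrete is a small stall counter. The sketch assumes that "progress" means "this step produced an observation the agent had not seen before"; both that definition and the `StallDetector` name are assumptions.

```python
from typing import Set


class StallDetector:
    """Soft limit: stop after `patience` consecutive steps with no new information."""

    def __init__(self, patience: int = 2) -> None:
        self.patience = patience
        self.seen: Set[str] = set()
        self.stalled_steps = 0

    def record(self, observation: str) -> bool:
        """Record one step's observation; return True if the agent should stop."""
        key = " ".join(observation.lower().split())  # normalize case and whitespace
        if key and key not in self.seen:
            self.seen.add(key)
            self.stalled_steps = 0  # new information: reset the counter
        else:
            self.stalled_steps += 1  # repeated or empty result: no progress
        return self.stalled_steps >= self.patience


guard = StallDetector(patience=2)
for obs in ["result: 42", "result: 42", "Result:  42"]:
    print(guard.record(obs))  # prints False, then False, then True
```

Unlike the similarity-based loop check in `_should_stop`, which looks at the actions the agent chooses, this looks at what those actions return, so it also catches an agent that varies its tool calls without learning anything new.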

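3. The Full Working Code

A minimal AI agent in pure Python (no LangChain).

Copy and paste this into a single file: agent_from_scratch.py

It's deliberately kept clean and readable. You can extend it into a full system later.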

"""

agent_from_scratch.py

------------------------------------------------------------

A minimal AI agent loop in pure Python (no LangChain).

- Tools: registry + safe execution

- Memory: short-term + long-term notes (file-based)

- Planning: simple plan + next step

- Guardrails: max steps, max tool calls, loop detection

- Debug: trace log per step

Requirements:

- Python 3.10+

- requests (pip install requests)

Environment:

- OPENAI_API_KEY (required)

- OPENAI_BASE_URL (optional, default: https://api.openai.com/v1)

- OPENAI_MODEL (optional, default: gpt-4o-mini)

"""

from __future__ import annotations

import json

import os

import time

import re

from dataclasses import dataclass, field

from typing import Any, Callable, Dict, List, Optional, Tuple

import requests

# -----------------------------

# Utilities

# -----------------------------

def now_ms() -> int:

return int(time.time() * 1000)

def clamp_text(text: str, max_chars: int = 1800) -> str:

text = text.strip()

if len(text) <= max_chars:

return text

return text[:max_chars] + " ...[truncated]"

def safe_json_extract(text: str) -> Optional[Dict[str, Any]]:

"""

The model must output a single JSON object.

This function tries to extract the first JSON object from the response safely.

"""

text = text.strip()

# Fast path: pure JSON

if text.startswith("{") and text.endswith("}"):

try:

return json.loads(text)

except Exception:

pass

# Extract first {...} block

match = re.search(r"\{.*\}", flags=re.DOTALL)

if not match:

return None

blob = match.group(0)

try:

return json.loads(blob)

except Exception:

return None

def simple_similarity(a: str, b: str) -> float:

"""

Tiny loop-detection heuristic: token overlap ratio.

Good enough to detect 'same action again and again'.

"""

sa = set(a.lower().split())

sb = set(b.lower().split())

if not sa or not sb:

return 0.0

return len(sa & sb) / max(1, len(sa | sb))

# -----------------------------

# Tool System

# -----------------------------

@dataclass

class Tool:

name: str

description: str

schema: Dict[str, Any]

fn: Callable[[Dict[str, Any]], Any]

safe: bool = True # keep dangerous tools behind a flag

class ToolRegistry:

def __init__(self) -> None:

self._tools: Dict[str, Tool] = {}

def register(self, tool: Tool) -> None:

if tool.name in self._tools:

raise ValueError(f"Tool already registered: {tool.name}")

self._tools[tool.name] = tool

def get(self, name: str) -> Optional[Tool]:

return self._tools.get(name)

def as_prompt_block(self) -> str:

"""

Give the model the tool list in a compact, readable form.

"""

lines = ["TOOLS AVAILABLE:"]

for t in self._tools.values():

safe_tag = "safe" if t.safe else "restricted"

lines.append(f"- {t.name} ({safe_tag}): {t.description}")

lines.append(f" schema: {json.dumps(t.schema, ensure_ascii=False)}")

return "\n".join(lines)

# -----------------------------

# Memory System

# -----------------------------

@dataclass

class MemoryNote:

ts_ms: int

text: str

tags: List[str] = field(default_factory=list)

class LongTermMemory:

"""

Simple long-term memory:

- Stores compact notes in a JSONL file

- Retrieves notes with keyword overlap (simple, fast, no embeddings)

"""

def __init__(self, path: str = "agent_memory.jsonl") -> None:

self.path = path

if not os.path.exists(self.path):

with open(self.path, "w", encoding="utf-8") as f:

f.write("")

def add(self, text: str, tags: Optional[List[str]] = None) -> None:

note = MemoryNote(ts_ms=now_ms(), text=text.strip(), tags=tags or [])

with open(self.path, "a", encoding="utf-8") as f:

f.write(json.dumps(note.__dict__, ensure_ascii=False) + "\n")

def search(self, query: str, k: int = 5) -> List[MemoryNote]:

query_tokens = set(query.lower().split())

scored: List[Tuple[float, MemoryNote]] = []

with open(self.path, "r", encoding="utf-8") as f:

for line in f:

line = line.strip()

if not line:

continue

try:

obj = json.loads(line)

note = MemoryNote(**obj)

tokens = set(note.text.lower().split())

score = len(tokens & query_tokens)

if score > 0:

scored.append((float(score), note))

except Exception:

continue

scored.sort(key=lambda x: x[0], reverse=True)

return [n for _, n in scored[:k]]

class ShortTermMemory:

"""

Keeps the last N messages (compressed).

"""

def __init__(self, max_items: int = 18) -> None:

self.max_items = max_items

self.items: List[Dict[str, str]] = []

def add(self, role: str, content: str) -> None:

self.items.append({"role": role, "content": content})

if len(self.items) > self.max_items:

self.items = self.items[-self.max_items:]

# -----------------------------

# LLM Client (simple, direct)

# -----------------------------

class LLMClient:

def __init__(self) -> None:

self.api_key = os.getenv("OPENAI_API_KEY", "").strip()

if not self.api_key:

raise RuntimeError("Missing OPENAI_API_KEY")

self.base_url = os.getenv("OPENAI_BASE_URL", "https://api.openai.com/v1").strip()

self.model = os.getenv("OPENAI_MODEL", "gpt-4o-mini").strip()

def chat(self, messages: List[Dict[str, str]], temperature: float = 0.2) -> str:

url = f"{self.base_url}/chat/completions"

headers = {"Authorization": f"Bearer {self.api_key}"}

payload = {

"model": self.model,

"temperature": temperature,

"messages": messages,

}

r = requests.post(url, headers=headers, json=payload, timeout=60)

r.raise_for_status()

data = r.json()

return data["choices"][0]["message"]["content"]

# -----------------------------

# Agent Core

# -----------------------------

@dataclass

class AgentConfig:

max_steps: int = 10

max_tool_calls: int = 6

max_seconds: int = 35

allow_restricted_tools: bool = False

@dataclass

class AgentState:

step: int = 0

tool_calls: int = 0

started_ms: int = field(default_factory=now_ms)

high_level_plan: List[str] = field(default_factory=list)

last_actions: List[str] = field(default_factory=list)

trace: List[Dict[str, Any]] = field(default_factory=list)

class ScratchAgent:

"""

A minimal agent that:

- asks the model for a plan + next action

- executes a tool if needed

- stores memory notes

- stops safely

"""

def __init__(self, llm: LLMClient, tools: ToolRegistry, ltm: LongTermMemory, cfg: AgentConfig) -> None:

self.llm = llm

self.tools = tools

self.ltm = ltm

self.cfg = cfg

self.stm = ShortTermMemory(max_items=18)

def _time_left(self, state: AgentState) -> int:

elapsed = now_ms() - state.started_ms

return max(0, self.cfg.max_seconds * 1000 - elapsed)

def _should_stop(self, state: AgentState) -> Optional[str]:

if state.step >= self.cfg.max_steps:

return "Reached max_steps"

if state.tool_calls >= self.cfg.max_tool_calls:

return "Reached max_tool_calls"

if self._time_left(state) <= 0:

return "Reached max_seconds"

# Loop detection: if we keep repeating very similar actions

if len(state.last_actions) >= 3:

a, b, c = state.last_actions[-3:]

if simple_similarity(a, b) > 0.85 and simple_similarity(b, c) > 0.85:

return "Detected repeating loop"

return None

def _compact_memory_summary(self) -> str:

"""

Summarize short-term memory in a compact form the agent can carry.

"""

# Keep it simple: last 8 items only, compressed.

tail = self.stm.items[-8:]

lines = []

for it in tail:

role = it["role"]

content = clamp_text(it["content"], 260).replace("\n", " ")

lines.append(f"{role}: {content}")

return "\n".join(lines)

def _build_system_prompt(self, user_goal: str) -> str:

"""

The strongest part: strict output format + clear behavior.

"""

return f"""

You are a strict AI agent. Your job is to complete the user's goal safely and efficiently.

USER GOAL:

{user_goal}

RULES:

- You MUST respond with exactly ONE JSON object and nothing else.

- Choose ONE action per step.

- If you have enough info, finish with a final answer.

- Keep plans short and simple.

- Avoid infinite loops. If stuck, explain what is missing and finish.

OUTPUT JSON SCHEMA:

{{

"type": "plan" | "tool_call" | "final",

"plan": ["..."] (required if type="plan"),

"next": "... one sentence next step ..." (required if type="plan"),

"tool": "tool_name" (required if type="tool_call"),

"args": {{...}} (required if type="tool_call"),

"answer": "... final answer ..." (required if type="final"),

"memory_note": "... short durable note to store ..." (optional)

}}

AVAILABLE TOOLS:

{self.tools.as_prompt_block()}

""".strip()

def _model_step(self, user_goal: str, state: AgentState) -> Dict[str, Any]:

ltm_hits = self.ltm.search(user_goal, k=4)

ltm_block = "\n".join([f"- {clamp_text(n.text, 240)}" for n in ltm_hits]) or "- (none)"

system = self._build_system_prompt(user_goal)

context = f"""

SHORT-TERM MEMORY (compact):

{self._compact_memory_summary()}

LONG-TERM MEMORY (top hits):

{ltm_block}

CURRENT PLAN:

{state.high_level_plan if state.high_level_plan else "(not set)"}

STEP: {state.step}

TOOL_CALLS: {state.tool_calls}

TIME_LEFT_MS: {self._time_left(state)}

""".strip()

messages = [

{"role": "system", "content": system},

{"role": "user", "content": context},

]

raw = self.llm.chat(messages, temperature=0.2)

obj = safe_json_extract(raw)

if not obj:

# fallback: force finish to avoid chaos

return {"type": "final", "answer": clamp_text(raw, 900)}

return obj

def _run_tool(self, name: str, args: Dict[str, Any]) -> Dict[str, Any]:

tool = self.tools.get(name)

if not tool:

return {"ok": False, "error": f"Unknown tool: {name}", "data": None, "latency_ms": 0}

if (not tool.safe) and (not self.cfg.allow_restricted_tools):

return {"ok": False, "error": f"Tool is restricted: {name}", "data": None, "latency_ms": 0}

t0 = now_ms()

try:

out = tool.fn(args)

return {"ok": True, "error": None, "data": out, "latency_ms": now_ms() - t0}

except Exception as e:

return {"ok": False, "error": str(e), "data": None, "latency_ms": now_ms() - t0}

def run(self, user_goal: str) -> str:

state = AgentState()

self.stm.add("user", user_goal)

while True:

stop_reason = self._should_stop(state)

if stop_reason:

final = f"I'm stopping safely: {stop_reason}.\n\nWhat I can do next: clarify missing info or reduce scope."

self.stm.add("assistant", final)

return final

state.step += 1

decision = self._model_step(user_goal, state)

dtype = decision.get("type", "").strip()

trace_item: Dict[str, Any] = {

"step": state.step,

"decision": decision,

"tool_result": None,

}

# Store memory note (if provided)

mem_note = (decision.get("memory_note") or "").strip()

if mem_note:

self.ltm.add(mem_note, tags=["agent_note"])

if dtype == "plan":

plan = decision.get("plan") or []

if isinstance(plan, list) and plan:

state.high_level_plan = [str(x)[:140] for x in plan][:6]

nxt = str(decision.get("next") or "").strip()

state.last_actions.append("plan:" + nxt)

self.stm.add("assistant", f"PLAN: {state.high_level_plan}\nNEXT: {nxt}")

state.trace.append(trace_item)

continue

if dtype == "tool_call":

tool_name = str(decision.get("tool") or "").strip()

args = decision.get("args") or {}

if not isinstance(args, dict):

args = {}

state.tool_calls += 1

action_sig = f"tool:{tool_name} args:{json.dumps(args, sort_keys=True)}"

state.last_actions.append(action_sig)

result = self._run_tool(tool_name, args)

trace_item["tool_result"] = result

state.trace.append(trace_item)

obs = {

"tool": tool_name,

"ok": result["ok"],

"error": result["error"],

"data": clamp_text(json.dumps(result["data"], ensure_ascii=False), 1200) if result["ok"] else None,

"latency_ms": result["latency_ms"],

}

self.stm.add("assistant", f"TOOL_OBSERVATION: {json.dumps(obs, ensure_ascii=False)}")

continue

# Final answer

answer = str(decision.get("answer") or "").strip()

if not answer:

answer = "Done."

self.stm.add("assistant", answer)

return answer

# -----------------------------

# Tools (safe, useful)

# -----------------------------

def tool_calc(args: Dict[str, Any]) -> Any:

expr = str(args.get("expression", "")).strip()

if not expr:

raise ValueError("Missing expression")

# Very small safe evaluator: numbers + operators only

if not re.fullmatch(r"[0-9\.\+\-\*\/\(\)\s]+", expr):

raise ValueError("Expression contains unsupported characters")

return eval(expr, {"__builtins__": {}}, {})

def tool_summarize(args: Dict[str, Any]) -> Any:

text = str(args.get("text", "")).strip()

max_lines = int(args.get("max_lines", 6))

text = clamp_text(text, 3000)

lines = [ln.strip() for ln in text.splitlines() if ln.strip()]

# Simple heuristic summary: take first lines + key bullets

out = []

for ln in lines[:max_lines]:

out.append(ln[:180])

return out

def tool_read_file(args: Dict[str, Any]) -> Any:

path = str(args.get("path", "")).strip()

if not path:

raise ValueError("Missing path")

# Basic sandbox: block parent traversal

if ".." in path.replace("\\", "/"):

raise ValueError("Parent traversal is not allowed")

with open(path, "r", encoding="utf-8") as f:

return clamp_text(f.read(), 6000)

def build_tools() -> ToolRegistry:

reg = ToolRegistry()

reg.register(Tool(

name="calc",

description="Evaluate a simple math expression safely (numbers + + - * / parentheses).",

schema={"type": "object", "properties": {"expression": {"type": "string"}}, "required": ["expression"]},

fn=tool_calc,

safe=True,

))

reg.register(Tool(

name="summarize",

description="Create a short bullet summary from text (fast heuristic).",

schema={"type": "object", "properties": {"text": {"type": "string"}, "max_lines": {"type": "integer"}}, "required": ["text"]},

fn=tool_summarize,

safe=True,

))

reg.register(Tool(

name="read_file",

description="Read a local text file (sandboxed; blocks .. traversal).",

schema={"type": "object", "properties": {"path": {"type": "string"}}, "required": ["path"]},

fn=tool_read_file,

safe=False, # file access is restricted by default

))

return reg

# -----------------------------

# Demo

# -----------------------------

if __name__ == "__main__":

llm = LLMClient()

tools = build_tools()

ltm = LongTermMemory(path="agent_memory.jsonl")

cfg = AgentConfig(

max_steps=10,

max_tool_calls=6,

max_seconds=35,

allow_restricted_tools=False,

)

agent = ScratchAgent(llm=llm, tools=tools, ltm=ltm, cfg=cfg)

goal = (

"Create a short plan to write a Medium post about building an AI agent from scratch in Python "

"with tools, memory, planning, and stop conditions. Then produce the final outline."

)

print(agent.run(goal))

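4. Why This "From Scratch" Agent Actually Works

This is the part worth remembering:

1) Forced structured JSON output

Agents fall apart when their output is messy. Here, the model must pick exactly one of:

- plan

- tool_call

- final

This one constraint removes much of the chaos agents are known for.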
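The full code accepts any JSON object the model returns and treats an unknown `type` as a final answer. A stricter check, sketched under the assumption that you would rather reject and re-ask than guess, verifies that each decision carries the fields its type requires. The field names follow the prompt's OUTPUT JSON SCHEMA; `validate_decision` is a hypothetical name.

```python
import json
from typing import Dict, List

# Required fields per decision type, taken from the prompt's output schema.
REQUIRED: Dict[str, List[str]] = {
    "plan": ["plan", "next"],
    "tool_call": ["tool", "args"],
    "final": ["answer"],
}


def validate_decision(raw: str) -> List[str]:
    """Return a list of errors; an empty list means the decision is usable."""
    try:
        obj = json.loads(raw)
    except json.JSONDecodeError as e:
        return [f"not valid JSON: {e.msg}"]
    if not isinstance(obj, dict):
        return ["top level must be a JSON object"]
    dtype = obj.get("type")
    # isinstance guard: an unhashable value (e.g. a list) can't be looked up in a dict
    if not isinstance(dtype, str) or dtype not in REQUIRED:
        return [f"type must be one of {sorted(REQUIRED)}"]
    errors = [f"missing field for {dtype}: {f}" for f in REQUIRED[dtype] if f not in obj]
    if dtype == "tool_call" and "args" in obj and not isinstance(obj["args"], dict):
        errors.append("args must be an object")
    return errors


print(validate_decision('{"type": "final", "answer": "42"}'))  # []
print(validate_decision('{"type": "tool_call", "tool": "calc"}'))
# ['missing field for tool_call: args']
```

Sending the error list back to the model as a short correction message gives it one chance to fix the format before falling back to a forced finish.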

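2) It treats tool output as observations

The agent doesn't "guess." It acts, observes, and then updates its memory.

3) It has real stop conditions

Most "agent from scratch" tutorials skip this. But stop conditions are what make an agent reliable.

If your agent can't stop, it's not an agent. It's a runaway process.

4) Compact memory by design

Short-term memory is trimmed. Long-term memory stores only durable notes.

This prevents context bloat, the silent killer of agent performance.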

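5. How to Make This Agent Feel "Smart" Without Making It Complicated

If you want your from-scratch agent to impress, add these upgrades next:

Upgrade A: Memory compression (a token saver)

Every 4–5 steps, replace the raw "chat log" with a short summary of:

- what we know

- what we've tried

- what comes next

This makes the agent faster, cheaper, and less repetitive.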
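A minimal sketch of Upgrade A, assuming the summary comes from one extra model call. To keep it runnable offline, `summarize` is a hypothetical callable you pass in (with the full code it could wrap `LLMClient.chat`); `every`, `keep_last`, and the prompt text are illustrative choices, not tuned values.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# Illustrative prompt; the three sections mirror the list above.
SUMMARY_PROMPT = (
    "Summarize this agent log in three short sections:\n"
    "KNOWN: what we know\n"
    "TRIED: what we tried\n"
    "NEXT: what comes next\n\n"
)


def compress_history(items: List[Message], step: int, summarize: Callable[[str], str],
                     every: int = 4, keep_last: int = 2) -> List[Message]:
    """Every `every` steps, fold all but the last `keep_last` messages into one summary."""
    if step % every != 0 or len(items) <= keep_last + 1:
        return items  # not time yet, or too little history to be worth a call
    cut = len(items) - keep_last
    old, recent = items[:cut], items[cut:]
    log = "\n".join(f"{m['role']}: {m['content']}" for m in old)
    summary = summarize(SUMMARY_PROMPT + log)
    return [{"role": "assistant", "content": "SUMMARY:\n" + summary}] + recent


# Offline stand-in for the model call (hypothetical): counts the folded messages.
def fake_summarize(prompt: str) -> str:
    return f"KNOWN: {prompt.count('assistant:')} earlier messages"


history = [{"role": "assistant", "content": f"msg {i}"} for i in range(6)]
compressed = compress_history(history, step=4, summarize=fake_summarize)
print([m["content"] for m in compressed])
# ['SUMMARY:\nKNOWN: 4 earlier messages', 'msg 4', 'msg 5']
```

In `ScratchAgent` this could run on `self.stm.items` once per step. Note that `_compact_memory_summary` truncates each entry to 260 characters, so the summary itself needs to stay short.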

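Upgrade B: Reflection (one extra pass)

After the agent produces its final answer, make one more call that asks the model to:

- "Find the weaknesses"

- "Fix them in one pass"

This single extra pass can noticeably improve output quality.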
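Upgrade B as a sketch: one extra call that both critiques and rewrites the draft. `chat` is a hypothetical callable that takes a prompt string and returns text (for example, a thin wrapper around `LLMClient.chat`), and the prompt wording is illustrative.

```python
from typing import Callable

REFLECT_PROMPT = (
    "Here is a goal and a draft answer.\n"
    "1) Find the weaknesses in the draft (errors, gaps, vagueness).\n"
    "2) Fix them in one pass.\n"
    "Return ONLY the improved answer.\n\n"
    "GOAL:\n{goal}\n\nDRAFT:\n{draft}"
)


def reflect_once(goal: str, draft: str, chat: Callable[[str], str]) -> str:
    """One extra model call that critiques and rewrites the draft answer."""
    revised = chat(REFLECT_PROMPT.format(goal=goal, draft=draft)).strip()
    # If the reflection call returns nothing useful, keep the original draft.
    return revised or draft


# Offline stand-in (hypothetical): pretend the model added a missing point.
def fake_chat(prompt: str) -> str:
    return prompt.split("DRAFT:\n", 1)[1] + "\n- Add stop conditions"


print(reflect_once("Outline an agent post", "- Tools\n- Memory", fake_chat))
```

Because this is a single fixed pass rather than a loop, it cannot run away; the cost is one extra call per answer.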

Upgrade C: Better tool contracts

Return every tool result wrapped in the same envelope:

- ok

- data

- error

- latency

This makes your agent debuggable and production-friendly.
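The `_run_tool` method in the full code already returns this shape as a plain dict. A typed version, sketched here as a frozen dataclass, makes the contract explicit and gives one place to format observations. `ToolResult`, `call_tool`, and the example `divide` tool are hypothetical names.

```python
import json
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional


@dataclass(frozen=True)
class ToolResult:
    """One envelope for every tool result: ok, data, error, latency."""
    ok: bool
    data: Any = None
    error: Optional[str] = None
    latency_ms: int = 0

    def to_observation(self, max_chars: int = 1200) -> str:
        """Render the envelope as the JSON text the model sees on the next step."""
        body: Dict[str, Any] = {"ok": self.ok, "error": self.error, "latency_ms": self.latency_ms}
        if self.ok:
            # Serialize and truncate the payload so one tool can't flood the context.
            body["data"] = json.dumps(self.data, ensure_ascii=False, default=str)[:max_chars]
        return json.dumps(body, ensure_ascii=False)


def call_tool(fn: Callable[[Dict[str, Any]], Any], args: Dict[str, Any]) -> ToolResult:
    """Run a tool and always return the same envelope, success or failure."""
    t0 = time.perf_counter()
    try:
        out = fn(args)
    except Exception as e:  # a failing tool becomes an observation, not a crash
        return ToolResult(ok=False, error=str(e), latency_ms=int((time.perf_counter() - t0) * 1000))
    return ToolResult(ok=True, data=out, latency_ms=int((time.perf_counter() - t0) * 1000))


# Hypothetical example tool.
def divide(args: Dict[str, Any]) -> float:
    return args["a"] / args["b"]


good = call_tool(divide, {"a": 1, "b": 4})
bad = call_tool(divide, {"a": 1, "b": 0})
print(good.to_observation())
print(bad.ok, bad.error)
```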

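6. Closing Thoughts

If you came here to build an AI agent from scratch, you now have a clean foundation:

- Tools

- Memory

- Planning

- Guardrails

- Plain Python

- No framework

This isn't "toy code." It's a solid foundation you can turn into a product.

If you found this helpful, share it with a builder friend who's wrestling with agent loops.

Original article: 从零构建AI Agent (Building an AI Agent from Scratch) - 汇智网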
