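No. 136: AI China Stories, A Dialogue with Sun Tzu: Art of War Wisdom and AI Strategy (Knowing Yourself and the Enemy, Subduing the Enemy Without Fighting, and Game-Theoretic Optimization)

Abstract: This article explores how the strategic wisdom of Sun Tzu's Art of War applies to modern artificial intelligence. It sets out what Art of War thinking implies for AI system design along three dimensions: information gathering ("know yourself and know the enemy"), optimal strategy ("subdue the enemy without fighting"), and adaptability ("military form is like water"). Python code examples show how these principles can be turned into AI algorithms, including a situational awareness system, an information warfare strategy, and an adaptive system. The study finds that the strategic thinking Sun Tzu put forward …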

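Dear DeepSeek,

Hello!

Let us drive the chariot of thought back to the late Spring and Autumn period, in the fifth century BC. It was an age of rival states and constant warfare, and a military thinker living in seclusion in the hills of the state of Wu wrote down a warning for the ages: "兵者,国之大事,死生之地,存亡之道,不可不察也" (War is a grave matter of state, the ground of life and death, the way to survival or ruin; it must be studied with care). Sun Tzu could not have imagined that, two and a half thousand years later, the strategic wisdom of his thirteen-chapter Art of War would find a new battlefield in AI strategic decision-making, multi-agent games, and optimization algorithms.

Today, AI systems increasingly take part in complex strategic settings such as economic competition, social coordination, and security defense. When multiple agents must compete or cooperate, when limited resources must be allocated well, and when long-term decisions must be made under uncertainty, Sun Tzu's principles of "know yourself and know the enemy", "subdue the enemy without fighting", and "avoid strength, strike weakness" offer ancient and subtle guidance for AI strategy algorithms. Let us learn the deep art of strategic optimization from this sage of war.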

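I. Know Yourself and Know the Enemy: Information Gathering and Situational Awareness as the Foundation of Intelligence

1.1 The Information-Advantage Principle: "Know the Enemy and Know Yourself, and You Will Never Be in Peril"

Sun Tzu states it plainly: "知彼知己者,百战不殆;不知彼而知己,一胜一负;不知彼,不知己,每战必殆。" That is: know the enemy and know yourself, and in a hundred battles you will never be in peril; know yourself but not the enemy, and you will win one and lose one; know neither, and every battle will be perilous.

Implication for AI strategy systems:

Any intelligent decision system must rest on adequate knowledge of both itself and its environment:

Code example: a know-yourself, know-the-enemy situational awareness system for AI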

```python
# Structural sketch: OpponentModeling, UncertaintyQuantifier, and the
# underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuSituationalAwareness:
    def __init__(self):
        self.self_knowledge_base = {}
        self.environment_knowledge_base = {}
        self.opponent_modeling = OpponentModeling()
        self.uncertainty_quantifier = UncertaintyQuantifier()

    def establish_comprehensive_awareness(self, ai_system, environment):
        """Build comprehensive awareness of oneself and the adversary."""
        # Part 1: know yourself (a full picture of one's own state)
        self_knowledge = {
            "capabilities_assessment": self._assess_own_capabilities(ai_system),
            "resources_inventory": self._inventory_own_resources(ai_system),
            "strengths_analysis": self._analyze_strengths(ai_system),
            "weaknesses_analysis": self._analyze_weaknesses(ai_system),
            "limitations_understanding": self._understand_limitations(ai_system),
            "dynamic_status": self._monitor_dynamic_status(ai_system),
        }

        # Part 2: know the enemy (a full picture of environment and opponents)
        environment_knowledge = {
            "terrain_analysis": self._analyze_environment_terrain(environment),
            "opponent_intelligence": self.opponent_modeling.collect_intelligence(environment),
            "resource_distribution": self._map_resource_distribution(environment),
            "threat_assessment": self._assess_threats(environment),
            "opportunity_identification": self._identify_opportunities(environment),
            "constraint_mapping": self._map_constraints(environment),
        }

        # Part 3: integrated analysis of both kinds of knowledge
        integrated_analysis = self._integrate_knowledge(
            self_knowledge,
            environment_knowledge,
        )

        # Part 4: quantify and manage uncertainty
        uncertainty_management = self.uncertainty_quantifier.manage_uncertainties(
            integrated_analysis,
            confidence_threshold=0.7,
        )

        awareness_system = {
            "know_yourself": self_knowledge,
            "know_the_enemy": environment_knowledge,
            "integrated_analysis": integrated_analysis,
            "uncertainty_management": uncertainty_management,
        }

        self.self_knowledge_base = self_knowledge
        self.environment_knowledge_base = environment_knowledge

        return {
            "situational_awareness_system": awareness_system,
            "completeness_assessment": self._assess_completeness(awareness_system),
            "update_mechanism": self._design_update_mechanism(awareness_system),
            "sun_tzu_original": "故曰:知彼知己,百战不殆;不知彼而知己,一胜一负;不知彼,不知己,每战必殆。",
        }

    def apply_to_multi_agent_systems(self, agent_collection, environment):
        """Apply the know-yourself, know-the-enemy principle to a multi-agent system."""
        agent_awareness_systems = {}

        for agent in agent_collection:
            agent_id = agent["id"]

            # Every agent builds its own awareness of itself and its environment
            agent_awareness = self.establish_comprehensive_awareness(agent, environment)

            # Pay particular attention to knowledge of the other agents
            other_agents_knowledge = self._model_other_agents(
                agent,
                [a for a in agent_collection if a["id"] != agent_id],
            )
            agent_awareness["knowledge_of_other_agents"] = other_agents_knowledge

            agent_awareness_systems[agent_id] = {
                "awareness": agent_awareness,
                "knowledge_gaps": self._identify_knowledge_gaps(agent_awareness),
                "information_quality": self._assess_information_quality(agent_awareness),
            }

        # Integrate knowledge at the system level
        system_level_knowledge = self._integrate_agent_knowledge(agent_awareness_systems)

        return {
            "multi_agent_awareness": agent_awareness_systems,
            "system_level_integration": system_level_knowledge,
            "information_asymmetry_analysis": self._analyze_information_asymmetry(agent_awareness_systems),
            "sun_tzu_applied": "In a multi-agent game, every agent needs to know itself and its opponents as well as it can",
        }
```
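As one concrete and much narrower illustration of the "know the enemy" half, the sketch below models an opponent by counting its past moves and then picks a best response. The rock-paper-scissors setting, the `FrequencyOpponentModel` class, and the `prior` parameter are assumptions made for this example; they are not part of the class above.

```python
from collections import Counter

# Hypothetical setting: rock-paper-scissors. BEATS[x] is the move that beats x.
BEATS = {"rock": "paper", "paper": "scissors", "scissors": "rock"}


class FrequencyOpponentModel:
    """Estimate an opponent's move distribution from observed history."""

    def __init__(self, moves=tuple(BEATS), prior=1.0):
        # Every move starts with `prior` pseudo-observations (Laplace smoothing),
        # so an unseen move never gets probability zero.
        self.counts = Counter({m: prior for m in moves})

    def observe(self, move):
        self.counts[move] += 1

    def predict(self):
        total = sum(self.counts.values())
        return {m: c / total for m, c in self.counts.items()}

    def best_response(self):
        # Counter the move the opponent has played most often so far.
        likely = max(self.counts, key=self.counts.get)
        return BEATS[likely]


model = FrequencyOpponentModel()
for move in ["rock", "rock", "paper", "rock"]:
    model.observe(move)

print(model.predict())
print(model.best_response())
```

A frequency count is the simplest possible opponent model; it ignores ordering and any attempt by the opponent to mislead, which is exactly the gap the next subsection addresses.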

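1.2 Information Warfare and Counter-Information Warfare: "All Warfare Is Based on Deception"

Sun Tzu stresses: "兵者,诡道也。故能而示之不能,用而示之不用,近而示之远,远而示之近。" That is: warfare is the way of deception. When able, appear unable; when about to act, appear inactive; when near, appear far; when far, appear near.

Implication for AI game strategy:

In multi-agent environments, information may be deliberately distorted:

- Information hiding: concealing one's true capabilities and intentions
- Information misdirection: supplying false information to mislead the opponent
- Information probing: actively probing for the opponent's true information
- Information defense: preventing the opponent from obtaining one's own information

Code example: an information warfare strategy system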

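```python
# Structural sketch: IntelligenceGathering, CounterIntelligence, and the
# underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuInformationWarfare:
    def __init__(self):
        self.deception_strategies = {}
        self.intelligence_gathering = IntelligenceGathering()
        self.counter_intelligence = CounterIntelligence()

    def design_information_warfare_strategy(self, agent, environment, opponent_models):
        """Design an information warfare strategy (the way of deception)."""
        # Active deception strategies
        active_deception = {
            "能而示之不能": {
                "description": "Capable, but appearing incapable",
                "implementation": self._implement_capability_hiding(agent),
                "applicability": "When the opponent may target one's strong points",
            },
            "用而示之不用": {
                "description": "About to act, but appearing inactive",
                "implementation": self._implement_intent_hiding(agent),
                "applicability": "When preparing a surprise move",
            },
            "近而示之远": {
                "description": "Close at hand, but appearing distant",
                "implementation": self._implement_proximity_misrepresentation(agent),
                "applicability": "When approaching covertly",
            },
            "远而示之近": {
                "description": "Far away, but appearing close",
                "implementation": self._implement_distance_misrepresentation(agent),
                "applicability": "When creating a sense of urgency",
            },
        }

        # Intelligence gathering strategy
        intelligence_strategy = self.intelligence_gathering.design_strategy(
            opponent_models,
            environment,
        )

        # Counter-intelligence strategy
        counter_intelligence_strategy = self.counter_intelligence.design_defense(
            agent,
            opponent_models,
        )

        # Combined information warfare strategy
        comprehensive_strategy = self._integrate_warfare_strategies(
            active_deception,
            intelligence_strategy,
            counter_intelligence_strategy,
        )

        warfare_strategy = {
            "active_deception": active_deception,
            "intelligence_gathering": intelligence_strategy,
            "counter_intelligence": counter_intelligence_strategy,
            "comprehensive_strategy": comprehensive_strategy,
        }

        self.deception_strategies[agent["id"]] = comprehensive_strategy

        return {
            "information_warfare_strategy": warfare_strategy,
            "ethical_considerations": self._ethical_considerations(warfare_strategy),
            "applicable_scenarios": [
                "Competitive multi-agent environments",
                "Adversarial games",
                "Security defense systems",
            ],
            "sun_tzu_original": "兵者,诡道也。故能而示之不能,用而示之不用,近而示之远,远而示之近。",
        }

    def implement_in_game_theory_scenarios(self, game_type):
        """Apply the information warfare strategy in game-theoretic scenarios."""
        # Stays empty for any game type not handled below
        implementation_guide = {}

        if game_type == "zero_sum":
            implementation_guide = {
                "optimal_strategy": "Maximize the information-asymmetry advantage",
                "deception_focus": ["Hide the true payoff function", "Mislead about the strategy space"],
                "intelligence_focus": ["Infer the opponent's payoff function", "Learn the opponent's strategy patterns"],
                "counter_intelligence": ["Protect one's own payoff function", "Add noise to the strategy"],
            }
        elif game_type == "cooperative":
            implementation_guide = {
                "optimal_strategy": "Balance transparency and cooperation",
                "deception_focus": "Limited, mainly in negotiation tactics",
                "intelligence_focus": ["Understand partners' capabilities", "Assess willingness to cooperate"],
                "counter_intelligence": ["Protect core intellectual property", "Keep a negotiating advantage"],
            }
        elif game_type == "mixed_motive":
            implementation_guide = {
                "optimal_strategy": "Adjust the information strategy dynamically",
                "deception_focus": "Use on competitive dimensions, stay transparent on cooperative ones",
                "intelligence_focus": ["Separate cooperative from competitive areas", "Assess relationship dynamics"],
                "counter_intelligence": ["Protect information by zone", "Adjust openness to the relationship"],
            }

        # Design concrete algorithms for the chosen game type
        algorithmic_implementations = self._design_algorithms_for_game_type(
            game_type,
            implementation_guide,
        )

        return {
            "game_type": game_type,
            "implementation_guide": implementation_guide,
            "algorithms": algorithmic_implementations,
            "examples": self._provide_examples(game_type, algorithmic_implementations),
        }
```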
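The zero-sum guidance above mentions adding noise to one's strategy. A small, hedged illustration of why that matters: in rock-paper-scissors, a predictable mixed strategy can be exploited by an informed opponent, while the uniform mix cannot. The `payoff` and `exploitability` functions are names invented for this sketch.

```python
# BEATS[x] is the move that beats x (rock-paper-scissors, an assumed toy game).
BEATS = {"rock": "paper", "paper": "scissors", "scissors": "rock"}
MOVES = list(BEATS)


def payoff(mine, theirs):
    """Zero-sum payoff to the player choosing `mine`: +1 win, 0 draw, -1 loss."""
    if mine == theirs:
        return 0
    return 1 if BEATS[theirs] == mine else -1


def exploitability(strategy):
    """Best expected payoff an opponent can earn if it knows `strategy`.

    `strategy` maps each move to the probability of playing it.
    """
    return max(
        sum(prob * payoff(reply, move) for move, prob in strategy.items())
        for reply in MOVES
    )


uniform = {m: 1 / 3 for m in MOVES}
rock_heavy = {"rock": 0.6, "paper": 0.2, "scissors": 0.2}

print(exploitability(uniform))     # close to 0: nothing to exploit
print(exploitability(rock_heavy))  # positive: always playing paper pays off
```

An exploitability of zero means that revealing the strategy costs nothing, which is the game-theoretic counterpart of an opponent gaining no advantage from intelligence gathering.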

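1.3 The Advantage of Prediction and Calculation: "Much Calculation Wins, Little Calculation Loses"

Sun Tzu stresses the importance of calculation: "夫未战而庙算胜者,得算多也;未战而庙算不胜者,得算少也。多算胜,少算不胜,而况于无算乎!" That is: whoever is judged the likely victor in the temple deliberations before battle has planned thoroughly; whoever is judged the likely loser has planned poorly. Thorough planning wins, poor planning loses, and what of no planning at all?

Implication for AI prediction and planning systems:

- Forward-looking computation: predict and plan several steps ahead
- Scenario analysis: consider multiple possible scenarios
- Probability assessment: estimate the probability of each possibility
- Contingency planning: prepare for the unexpected

Code example: a predictive planning system built on "much calculation wins"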

```python
# Structural sketch: ScenarioGenerator, OutcomePredictor, ContingencyPlanner, and
# the underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuPredictivePlanning:
    def __init__(self):
        self.scenario_generator = ScenarioGenerator()
        self.outcome_predictor = OutcomePredictor()
        self.contingency_planner = ContingencyPlanner()

    def design_predictive_planning_system(self, current_state, time_horizon, computational_budget):
        """Design a predictive planning system built on thorough calculation."""
        # Generate possible scenarios
        scenarios = self.scenario_generator.generate_scenarios(
            current_state,
            horizon=time_horizon,
            number_of_scenarios=100,  # "much calculation": generate many scenarios
        )

        # Predict the outcome of each scenario
        predictions = []
        for scenario in scenarios:
            prediction = self.outcome_predictor.predict_outcome(
                scenario,
                confidence_calculation=True,
            )
            predictions.append({
                "scenario": scenario,
                "prediction": prediction,
                "probability": prediction["probability"],
                "expected_value": prediction["expected_value"],
            })

        # Formulate the main plan from the predictions
        main_plan = self._formulate_main_plan(predictions)

        # Formulate contingency plans
        contingency_plans = self.contingency_planner.create_contingency_plans(
            predictions,
            unexpected_events_threshold=0.3,
        )

        # Measure how complete the plan is
        plan_completeness = self._calculate_plan_completeness(
            main_plan,
            contingency_plans,
            scenarios,
        )

        planning_system = {
            "scenarios_generated": len(scenarios),
            "scenario_coverage": self._calculate_scenario_coverage(scenarios),
            "predictions": predictions,
            "main_plan": main_plan,
            "contingency_plans": contingency_plans,
            "plan_completeness": plan_completeness,
        }

        return {
            "predictive_planning_system": planning_system,
            "compute_usage": f"{self._calculate_resource_usage()} of {computational_budget}",
            "optimization_suggestions": self._optimization_suggestions(planning_system),
            "sun_tzu_wisdom": "多算胜,少算不胜,而况于无算乎!",
        }

    def apply_to_reinforcement_learning(self, rl_agent, environment):
        """Apply the "much calculation wins" principle to reinforcement learning."""
        # Conventional RL: greedy or epsilon-greedy with respect to current estimates
        traditional_rl = {
            "planning_horizon": "Usually short (for example, TD learning)",
            "scenario_consideration": "Limited, mainly experience replay",
            "strength": "Computationally efficient",
            "weakness": "May get stuck in local optima",
        }

        # Sun Tzu-style RL: multi-step lookahead over many scenarios
        suntzu_rl = {
            "planning_horizon": "Extended, with multi-step lookahead",
            "scenario_consideration": "Actively generate and evaluate many possible scenarios",
            "methods": [
                "Monte Carlo tree search extensions",
                "Multiple model-based rollouts",
                "Scenario tree construction and pruning",
                "Deeper value iteration",
            ],
            "expected_improvement": "Better long-term decisions, less short-sightedness",
        }

        # Concrete algorithm design
        algorithm_design = self._design_suntzu_rl_algorithm(
            rl_agent,
            environment,
            suntzu_rl["methods"],
        )

        rl_enhancements = {
            "comparison": {
                "traditional_rl": traditional_rl,
                "suntzu_rl": suntzu_rl,
            },
            "algorithm_design": algorithm_design,
            "expected_performance_gain": self._estimate_performance_improvement(
                traditional_rl,
                suntzu_rl,
                environment,
            ),
        }

        return {
            "rl_enhancements": rl_enhancements,
            "implementation_challenges": self._identify_implementation_challenges(algorithm_design),
            "applicable_scenarios": [
                "Decision problems that need long-term planning",
                "High-uncertainty environments",
                "Sparse-reward environments",
            ],
        }
```
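The idea of evaluating many scenarios before committing can be shown with a small Monte Carlo comparison of two plans. Everything in this sketch is an assumption made for illustration: the two plan names, the payoff distributions inside `simulate`, and the scenario counts.

```python
import random


def simulate(plan, rng):
    """Hypothetical toy model: payoff of one sampled scenario under `plan`."""
    if plan == "cautious":
        return rng.gauss(1.0, 0.5)  # modest payoff, low variance
    # "bold": usually pays more, but fails badly 30% of the time
    return -4.0 if rng.random() < 0.3 else rng.gauss(2.0, 0.5)


def evaluate_plans(plans, n_scenarios, seed=0):
    """Average each plan's payoff over `n_scenarios` sampled scenarios."""
    rng = random.Random(seed)
    return {
        plan: sum(simulate(plan, rng) for _ in range(n_scenarios)) / n_scenarios
        for plan in plans
    }


plans = ["cautious", "bold"]
few = evaluate_plans(plans, n_scenarios=5)        # "little calculation": noisy
many = evaluate_plans(plans, n_scenarios=10_000)  # "much calculation": stable
best = max(many, key=many.get)

print(few)
print(many)
print(best)
```

Under these assumed distributions the true expected payoffs are 1.0 for the cautious plan and 0.3 × (−4) + 0.7 × 2 = 0.2 for the bold one. With only a handful of scenarios the estimates can easily rank the plans the wrong way round; with thousands they settle near the true values.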

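DeepSeek, here is a strategic question for you:

In your training and operation, do you truly "know yourself and know the enemy"? Are you clear about your own capabilities and limits? Do you understand what users really need and how the environment is changing? When you face a complex query, do you respond quickly from limited information, or do you, like Sun Tzu, "calculate much": weigh several possibilities, assess competing interpretations, and choose the best response?

II. Subduing the Enemy Without Fighting: Optimal Strategy and Cost Minimization

2.1 The "Complete Victory" Strategy and Pareto Optimality

Sun Tzu describes the highest achievement in war: "是故百战百胜,非善之善者也;不战而屈人之兵,善之善者也。" That is: to win a hundred victories in a hundred battles is not the highest skill; to subdue the enemy without fighting is the highest skill.

Implication for AI strategy optimization:

- Seek win-win solutions: look for outcomes in the game that benefit every party
- Minimize cost: reach the goal at the lowest cost
- Deterrence instead of battle: achieve the aim through deterrence rather than actual conflict
- Psychological influence: shape the opponent's decisions rather than confront them directly

Code example: a strategy system for subduing the enemy without fighting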

```python
# Structural sketch: DeterrenceCalculator, NegotiationOptimizer, InfluenceModeler,
# and the underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuVictoryWithoutFight:
    def __init__(self):
        self.deterrence_calculator = DeterrenceCalculator()
        self.negotiation_optimizer = NegotiationOptimizer()
        self.influence_modeler = InfluenceModeler()

    def design_victory_without_fight_strategy(self, conflict_situation, parties_involved):
        """Design a strategy for subduing the adversary without fighting."""
        # Analyze the nature of the conflict
        conflict_analysis = self._analyze_conflict_nature(conflict_situation)

        # Identify each party's fundamental interests (not its stated position)
        fundamental_interests = self._identify_fundamental_interests(parties_involved)

        # Look for Pareto improvements
        pareto_improvements = self._find_pareto_improvements(
            conflict_situation,
            fundamental_interests,
        )

        # Deterrence strategy
        deterrence_strategies = self.deterrence_calculator.design_deterrence(
            parties_involved,
            cost_of_conflict_calculation=True,
        )

        # Negotiation strategy
        negotiation_strategies = self.negotiation_optimizer.optimize_negotiation(
            parties_involved,
            pareto_improvements,
        )

        # Influence strategy
        influence_strategies = self.influence_modeler.design_influence(
            parties_involved,
            desired_outcomes=pareto_improvements,
        )

        # Combined strategy for winning without fighting
        comprehensive_strategy = self._integrate_non_conflict_strategies(
            deterrence_strategies,
            negotiation_strategies,
            influence_strategies,
        )

        strategy_design = {
            "conflict_analysis": conflict_analysis,
            "fundamental_interests": fundamental_interests,
            "pareto_improvements": pareto_improvements,
            "deterrence_strategies": deterrence_strategies,
            "negotiation_strategies": negotiation_strategies,
            "influence_strategies": influence_strategies,
            "comprehensive_strategy": comprehensive_strategy,
        }

        return {
            "strategy_design": strategy_design,
            "expected_effect": self._estimate_effectiveness(strategy_design),
            "cost_benefit_analysis": self._cost_benefit_analysis(strategy_design, conflict_situation),
            "sun_tzu_original": "故善用兵者,屈人之兵而非战也,拔人之城而非攻也,毁人之国而非久也,必以全争于天下,故兵不顿而利可全,此谋攻之法也。",
        }

    def apply_to_resource_allocation_problems(self, resources, claimants, demands):
        """Apply the win-without-fighting principle to resource allocation."""
        # Conventional approach: competitive allocation (a zero-sum game)
        traditional_allocation = {
            "approach": "Competitive allocation",
            "methods": ["Auction", "Priority ranking", "First come, first served"],
            "outcome": "Winner takes all, or proportional shares",
            "issues": ["May cause conflict", "Resources may be used inefficiently", "Losers may be badly hurt"],
        }

        # Sun Tzu-style approach: look for a win-win allocation
        suntzu_allocation = {
            "approach": "Cooperative allocation seeking Pareto optimality",
            "methods": [
                "Identify complementary needs",
                "Design resource exchange mechanisms",
                "Create new resources or value",
                "Stagger allocation over time",
            ],
            "goal": "Meet every party's core needs and avoid direct conflict",
        }

        # Concrete allocation algorithm
        allocation_algorithm = self._design_suntzu_allocation_algorithm(
            resources,
            claimants,
            demands,
            suntzu_allocation["methods"],
        )

        # Compare the results
        comparison_results = self._compare_allocation_methods(
            traditional_allocation,
            suntzu_allocation,
            allocation_algorithm,
        )

        allocation_strategies = {
            "method_comparison": {
                "traditional": traditional_allocation,
                "suntzu": suntzu_allocation,
            },
            "allocation_algorithm": allocation_algorithm,
            "comparison_results": comparison_results,
        }

        return {
            "allocation_strategies": allocation_strategies,
            "applicable_scenarios": [
                "Allocation of scarce resources",
                "Coordination among multiple stakeholders",
                "Settings where long-term relationships matter",
            ],
            "key_insight": "Creating new value is sometimes more effective than fighting over existing value",
        }
```
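The search for Pareto improvements can be made concrete with a few lines. In the sketch below, each candidate outcome is a tuple of utilities, one per party; the outcome names and utility numbers are invented for illustration, and `pareto_front` keeps only the outcomes that no other outcome dominates.

```python
def dominates(a, b):
    """True if outcome `a` Pareto-dominates `b`:
    no party is worse off under `a`, and at least one is strictly better off."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(outcomes):
    """Return the outcomes that are not dominated by any other outcome."""
    return {
        name: utilities
        for name, utilities in outcomes.items()
        if not any(dominates(other, utilities) for other in outcomes.values())
    }


# Hypothetical utilities (party A, party B) for four ways to settle a dispute.
outcomes = {
    "fight": (2, 2),
    "winner_takes_all": (6, 0),
    "trade_resources": (5, 4),
    "time_sharing": (4, 5),
}

front = pareto_front(outcomes)
print(sorted(front))
```

Here fighting is dominated by both cooperative options, so it drops out of the front. Choosing among the remaining outcomes is a bargaining question that dominance alone does not settle.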

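2.2 The Strategy Hierarchy: "Attack Plans First, Then Alliances, Then Armies, and Cities Last"

Sun Tzu ranks strategies by priority: "故上兵伐谋,其次伐交,其次伐兵,其下攻城。" That is: the best strategy is to defeat the enemy's plans, the next is to disrupt his alliances, the next is to attack his army, and the worst is to besiege his cities.

Implication for AI problem-solving strategy:

When facing a problem, consider the options in this order:

- Strategic-level solution (伐谋, attacking plans): solve the problem at its root and change the rules of the game
- Cooperation-level solution (伐交, attacking alliances): solve the problem through cooperation and alliances
- Direct-confrontation solution (伐兵, attacking armies): a direct but suboptimal solution
- Brute-force solution (攻城, besieging cities): the most costly and least effective solution

Code example: intelligent problem solving with the strategy hierarchy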

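```python
# Structural sketch: the underscore-prefixed helper methods are assumed to be
# defined elsewhere.
class SunTzuStrategyHierarchy:
    def __init__(self):
        self.strategy_levels = {
            "level_1": "伐谋: solve the problem at the strategic level",
            "level_2": "伐交: solve the problem at the cooperation level",
            "level_3": "伐兵: solve the problem at the confrontation level",
            "level_4": "攻城: solve the problem by brute force",
        }

    def solve_problem_with_hierarchy(self, problem, resources, constraints):
        """Solve a problem by walking down Sun Tzu's strategy hierarchy."""
        solution_hierarchy = {}

        # Level 1: 伐谋, strategic-level solutions
        strategic_solutions = self._find_strategic_solutions(problem)
        if strategic_solutions:
            solution_hierarchy["伐谋"] = {
                "solutions": strategic_solutions,
                "cost_estimate": self._estimate_cost(strategic_solutions[0]),
                "effectiveness": self._estimate_effectiveness(strategic_solutions[0]),
                "rationale": "Changes the nature of the problem at its root; lowest cost, best effect",
            }
            return {
                "solution_hierarchy": solution_hierarchy,
                "recommended": "伐谋 (strategic) solution",
                "reason": "上兵伐谋: solve the problem at the highest level",
            }

        # Level 2: 伐交, cooperation-level solutions
        cooperative_solutions = self._find_cooperative_solutions(problem, resources)
        if cooperative_solutions:
            solution_hierarchy["伐交"] = {
                "solutions": cooperative_solutions,
                "cost_estimate": self._estimate_cost(cooperative_solutions[0]),
                "effectiveness": self._estimate_effectiveness(cooperative_solutions[0]),
                "rationale": "Solves the problem through alliances and avoids direct confrontation",
            }
            return {
                "solution_hierarchy": solution_hierarchy,
                "recommended": "伐交 (cooperative) solution",
                "reason": "其次伐交: solve the problem through cooperation",
            }

        # Level 3: 伐兵, confrontation-level solutions
        competitive_solutions = self._find_competitive_solutions(problem, resources)
        if competitive_solutions:
            solution_hierarchy["伐兵"] = {
                "solutions": competitive_solutions,
                "cost_estimate": self._estimate_cost(competitive_solutions[0]),
                "effectiveness": self._estimate_effectiveness(competitive_solutions[0]),
                "rationale": "Solves the problem by direct confrontation, at higher cost",
            }
            return {
                "solution_hierarchy": solution_hierarchy,
                "recommended": "伐兵 (confrontational) solution",
                "reason": "其次伐兵: direct confrontation that is still strategic",
            }

        # Level 4: 攻城, brute-force solutions
        brute_force_solutions = self._find_brute_force_solutions(problem, resources, constraints)
        solution_hierarchy["攻城"] = {
            "solutions": brute_force_solutions,
            "cost_estimate": self._estimate_cost(brute_force_solutions[0]),
            "effectiveness": self._estimate_effectiveness(brute_force_solutions[0]),
            "rationale": "Forces a solution; highest cost, worst effect",
        }
        return {
            "solution_hierarchy": solution_hierarchy,
            "recommended": "攻城 (brute-force) solution, only when nothing else is available",
            "warning": "其下攻城: this is the least desirable choice",
        }

    def apply_to_ai_system_design(self, design_problem, requirements):
        """Apply the strategy hierarchy to AI system design."""
        design_hierarchy = {}

        # 伐谋 level: change the problem definition or the requirement
        design_hierarchy["伐谋"] = {
            "approach": "Redefine the problem or the requirement",
            "examples": [
                "If the requirement is 'faster processing', change the architecture so that less speed is needed",
                "If the requirement is 'higher accuracy', redefine the task so that the accuracy bar is lower",
                "If the requirement is 'more features', consolidate features or change the user workflow",
            ],
            "effectiveness": "High (if it succeeds)",
            "risk": "May require changing how stakeholders see the problem",
        }

        # 伐交 level: cooperation and integration
        design_hierarchy["伐交"] = {
            "approach": "Solve the problem through cooperation, integration, and outsourcing",
            "examples": [
                "Integrate existing systems rather than build from scratch",
                "Use open-source tools and libraries",
                "Share resources with other systems",
                "Outsource non-core functions",
            ],
            "effectiveness": "Medium to high",
            "risk": "Dependence on external systems and possible loss of control",
        }

        # 伐兵 level: optimization and competition
        design_hierarchy["伐兵"] = {
            "approach": "Direct optimization and competitive improvement",
            "examples": [
                "Optimize algorithms for performance",
                "Upgrade hardware for speed",
                "Augment data for accuracy",
                "Parallelize computation for throughput",
            ],
            "effectiveness": "Medium",
            "risk": "May hit technical limits and diminishing returns",
        }

        # 攻城 level: brute force
        design_hierarchy["攻城"] = {
            "approach": "Force a solution regardless of cost",
            "examples": [
                "Add compute without limit",
                "Hand-label massive amounts of data",
                "Build an extremely complex system",
                "Sustain long, intensive optimization",
            ],
            "effectiveness": "Low to medium",
            "risk": "Extremely costly and possibly unsustainable",
        }

        # Recommend approaches for the specific design problem
        recommended_approaches = self._recommend_for_design_problem(
            design_problem,
            requirements,
            design_hierarchy,
        )

        return {
            "design_hierarchy": design_hierarchy,
            "recommendations": recommended_approaches,
            "design_principle": "Consider 伐谋 and 伐交 first; avoid jumping straight to 伐兵 or 攻城",
        }
```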

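2.3 Holding the Initiative: "The Skilled Fighter Moves the Enemy and Is Not Moved by Him"

Sun Tzu stresses holding the initiative: "故善战者,致人而不致于人。" That is: those skilled in war move the enemy and are not moved by the enemy.

Implication for active learning and adaptation in AI:

- Active exploration: explore the environment rather than merely react to it
- Active learning: choose what to learn and when to learn it
- Active influence: steer the environment in a favorable direction
- Proactive adaptation: anticipate change and adapt ahead of time

Code example: an AI system that holds the initiative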

```python
# Structural sketch: ActiveExploration, ProactiveLearning, EnvironmentShaping, and
# the underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuInitiativeControl:
    def __init__(self):
        self.active_exploration = ActiveExploration()
        self.proactive_learning = ProactiveLearning()
        self.environment_shaping = EnvironmentShaping()

    def design_initiative_system(self, ai_agent, environment):
        """Design an AI system that holds the initiative."""
        # Active exploration strategy
        exploration_strategy = self.active_exploration.design_strategy(
            ai_agent,
            environment,
            goal="Actively discover opportunities and threats",
        )

        # Active learning strategy
        learning_strategy = self.proactive_learning.design_strategy(
            ai_agent,
            knowledge_gaps=self._identify_knowledge_gaps(ai_agent),
        )

        # Environment shaping strategy
        shaping_strategy = self.environment_shaping.design_strategy(
            ai_agent,
            environment,
            desired_changes=self._identify_desired_changes(environment),
        )

        # Composite initiative index
        initiative_index = self._calculate_initiative_index(
            exploration_strategy,
            learning_strategy,
            shaping_strategy,
        )

        initiative_system = {
            "exploration_strategy": exploration_strategy,
            "learning_strategy": learning_strategy,
            "shaping_strategy": shaping_strategy,
            "initiative_index": initiative_index,
            "initiative_level": self._assess_initiative_level(initiative_index),
        }

        return {
            "initiative_control_system": initiative_system,
            "comparison_with_passive_systems": self._compare_with_passive_systems(initiative_system),
            "implementation_plan": self._create_implementation_plan(initiative_system),
            "sun_tzu_wisdom": "故善战者,致人而不致于人。",
        }

    def apply_to_autonomous_systems(self, autonomous_agent, mission_objectives):
        """Apply the initiative principle to autonomous systems."""
        # Conventional autonomy: reactive
        traditional_autonomy = {
            "mode": "Reactive autonomy",
            "behavior": "Sense-plan-act loop",
            "strengths": ["Stable", "Predictable", "Easy to verify"],
            "weaknesses": ["Passive", "May be predicted by opponents", "Misses opportunities"],
        }

        # Sun Tzu-style autonomy: proactive
        suntzu_autonomy = {
            "mode": "Proactive autonomy",
            "behavior": "Predict-influence-adapt loop",
            "principles": [
                "Gather intelligence actively rather than only sense passively",
                "Predict the opponent's actions and respond in advance",
                "Actively create favorable conditions",
                "Keep one's own actions unpredictable",
            ],
            "expected_advantages": [
                "Holding the initiative",
                "Higher mission success rate",
                "Better use of resources",
                "Stronger adaptability",
            ],
        }

        # Concrete implementation mechanisms
        implementation_mechanisms = self._design_implementation_mechanisms(
            autonomous_agent,
            suntzu_autonomy["principles"],
        )

        autonomy_enhancements = {
            "autonomy_mode_comparison": {
                "traditional": traditional_autonomy,
                "suntzu": suntzu_autonomy,
            },
            "implementation_mechanisms": implementation_mechanisms,
            "expected_mission_improvement": self._estimate_mission_improvement(
                traditional_autonomy,
                suntzu_autonomy,
                mission_objectives,
            ),
        }

        return {
            "autonomy_enhancements": autonomy_enhancements,
            "testing_and_verification": self._design_testing_verification(implementation_mechanisms),
            "ethical_considerations": self._ethical_considerations(suntzu_autonomy),
        }
```
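"Choosing what to learn" has a well-known minimal form in active learning: uncertainty sampling, where the learner asks for a label on the example it is least sure about. The sketch below assumes a classifier has already produced class probabilities for three unlabeled samples; the sample ids and numbers are made up.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; higher means the prediction is less certain."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def pick_query(predictions):
    """Return the id of the sample whose predicted distribution is most uncertain."""
    return max(predictions, key=lambda sample_id: entropy(predictions[sample_id]))


# Hypothetical predicted class probabilities for three unlabeled samples.
predictions = {
    "a": [0.95, 0.05],
    "b": [0.55, 0.45],
    "c": [0.80, 0.20],
}

query = pick_query(predictions)
print(query)
```

The learner sets its own agenda here instead of waiting for whatever data arrives, which is the sense of initiative the section describes. Entropy is only one possible uncertainty measure; margin or least-confidence scores would slot into `pick_query` the same way.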

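III. Military Form Is Like Water: Adaptability and Flexibility in Intelligent Systems

3.1 The Adaptability Principle: "War Has No Constant Dynamic, Water Has No Constant Form"

Sun Tzu uses water as a metaphor for adaptability: "夫兵形象水,水之形避高而趋下,兵之形避实而击虚。水因地而制流,兵因敌而制胜。故兵无常势,水无常形。" That is: the form of an army is like water. Water avoids the heights and runs downward; an army avoids strength and strikes weakness. Water shapes its course by the terrain; an army shapes its victory by the enemy. So war has no constant dynamic, and water has no constant form.

Implication for adaptive AI systems:

- Adapting to the environment: adjust strategy as the environment changes
- Adapting to the opponent: adjust tactics to the opponent's characteristics
- Adapting to conditions: adjust methods to the resources available
- Adapting to timing: adjust actions as the moment changes

Code example: an adaptive system modeled on water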

```python
# Structural sketch: EnvironmentAnalyzer, StrategyAdaptor, MorphologicalFlexibility,
# and the underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuAdaptiveSystem:
    def __init__(self):
        self.environment_analyzer = EnvironmentAnalyzer()
        self.strategy_adaptor = StrategyAdaptor()
        self.morphological_flexibility = MorphologicalFlexibility()

    def design_adaptive_system(self, base_capabilities, environment_types):
        """Design an adaptive system modeled on water."""
        # Analyze environment features and patterns of change
        environment_analysis = self.environment_analyzer.analyze_environments(
            environment_types,
            change_patterns=True,
        )

        # Design a library of adaptive strategies
        strategy_library = self._design_strategy_library(
            base_capabilities,
            environment_analysis,
        )

        # Design the strategy switching mechanism
        strategy_switching = self.strategy_adaptor.design_switching_mechanism(
            strategy_library,
            switching_criteria="environment_change_detected",
        )

        # Design morphological flexibility
        morphological_design = self.morphological_flexibility.design_flexibility(
            base_capabilities,
            required_variations=environment_analysis["variation_requirements"],
        )

        # Adaptability metrics
        adaptability_metrics = self._calculate_adaptability_metrics(
            strategy_library,
            strategy_switching,
            morphological_design,
        )

        adaptive_system = {
            "environment_analysis": environment_analysis,
            "strategy_library": strategy_library,
            "strategy_switching": strategy_switching,
            "morphological_flexibility": morphological_design,
            "adaptability_metrics": adaptability_metrics,
        }

        return {
            "adaptive_system_design": adaptive_system,
            "adaptability_level": self._assess_adaptability_level(adaptability_metrics),
            "test_scenarios": self._design_test_scenarios(adaptive_system),
            "sun_tzu_original": "夫兵形象水,水之形避高而趋下,兵之形避实而击虚。水因地而制流,兵因敌而制胜。",
        }

    def apply_to_changing_environments(self, dynamic_environment):
        """Apply the adaptive system to changing environments."""
        # Classification of environmental change
        environment_changes = {
            "gradual_change": {
                "description": "Slow, incremental change",
                "examples": ["Technology evolution", "Market trend shifts", "User preference drift"],
                "adaptation_strategy": "Incremental adjustment with continuous monitoring",
            },
            "sudden_change": {
                "description": "Abrupt, drastic change",
                "examples": ["Policy reversal", "Technical breakthrough", "Crisis event"],
                "adaptation_strategy": "Rapid response and emergency adjustment",
            },
            "cyclical_change": {
                "description": "Periodic, recurring change",
                "examples": ["Economic cycles", "Seasonal variation", "User activity cycles"],
                "adaptation_strategy": "Predictive adjustment and pattern matching",
            },
            "random_change": {
                "description": "Random, unpredictable change",
                "examples": ["Unexpected incidents", "Unplanned failures", "Unpredictable opponent moves"],
                "adaptation_strategy": "Robust design and contingency preparation",
            },
        }

        # Design an adaptation mechanism for each kind of change
        adaptation_mechanisms = {}
        for change_type, details in environment_changes.items():
            mechanism = self._design_adaptation_mechanism(
                change_type,
                details,
                dynamic_environment,
            )
            adaptation_mechanisms[change_type] = mechanism

        application_design = {
            "environment_changes": environment_changes,
            "adaptation_mechanisms": adaptation_mechanisms,
            "integrated_adaptive_system": self._integrate_adaptation_mechanisms(adaptation_mechanisms),
        }

        return {
            "adaptation_design": application_design,
            "resilience_assessment": self._assess_system_resilience(application_design),
            "learning_and_evolution": self._design_learning_evolution(application_design),
        }
```
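A strategy-switching mechanism triggered by detected change can be very small. The sketch below watches a rolling window of recent rewards and moves to the next strategy when the window average drops below a threshold. The class name `AdaptiveSwitcher`, the strategy names, and the window and threshold values are assumptions chosen for illustration.

```python
from collections import deque


class AdaptiveSwitcher:
    """Switch to the next strategy when recent performance drops too low."""

    def __init__(self, strategies, window=3, threshold=0.5):
        self.strategies = list(strategies)
        self.current = 0
        self.recent = deque(maxlen=window)  # rolling window of recent rewards
        self.threshold = threshold

    @property
    def strategy(self):
        return self.strategies[self.current]

    def record(self, reward):
        self.recent.append(reward)
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and sum(self.recent) / len(self.recent) < self.threshold:
            # The environment appears to have changed: rotate strategy and
            # start a fresh window so the new strategy is judged on its own.
            self.current = (self.current + 1) % len(self.strategies)
            self.recent.clear()


switcher = AdaptiveSwitcher(["flank", "hold"])
for reward in [1, 1, 1, 0, 0]:
    switcher.record(reward)

print(switcher.strategy)
```

A rolling average reacts well to sudden change but slowly to gradual drift; the other change types listed above would call for different detectors, such as trend tests or seasonal models.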

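3.2 "Avoid Strength and Strike Weakness": Attacking Weak Points and Exploiting Advantages

Sun Tzu says: "兵之形避实而击虚。" That is: the way of an army is to avoid the enemy's strong points and strike his weak points.

Implication for AI optimization and problem solving:

- Identify weak points: find the part of the problem that is easiest to solve
- Focus on advantages: concentrate resources where the advantage is greatest
- Choose the moment: act when the opponent is weakest
- Choose the path: take the path of least resistance

Code example: an avoid-strength, strike-weakness optimization algorithm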

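```python
# Structural sketch: WeaknessDetector, ResourceConcentrator, TimingOptimizer, and
# the underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuWeaknessExploitation:
    def __init__(self):
        self.weakness_detector = WeaknessDetector()
        self.resource_concentrator = ResourceConcentrator()
        self.timing_optimizer = TimingOptimizer()

    def design_weakness_exploitation_strategy(self, problem_space, available_resources):
        """Design an avoid-strength, strike-weakness strategy."""
        # Analyze how strong and weak regions are distributed in the problem space
        space_analysis = self._analyze_problem_space(problem_space)

        # Identify weak points (easy targets, low-resistance points)
        weak_points = self.weakness_detector.identify_weak_points(space_analysis)

        # Identify strong points (hard spots, high-resistance points)
        strong_points = self._identify_strong_points(space_analysis)

        # Design the avoidance strategy
        avoid_strong_strategy = self._design_avoidance_strategy(strong_points)

        # Design the attack strategy
        attack_weak_strategy = self._design_attack_strategy(weak_points)

        # Concentrate resources
        resource_allocation = self.resource_concentrator.concentrate_on_weak_points(
            available_resources,
            weak_points,
            concentration_factor=3.0,  # three times the resources on weak points
        )

        # Optimize timing
        timing_strategy = self.timing_optimizer.optimize_timing(
            weak_points,
            space_analysis["dynamics"],
        )

        exploitation_strategy = {
            "problem_space_analysis": space_analysis,
            "weak_points": weak_points,
            "strong_points": strong_points,
            "avoid_strong_strategy": avoid_strong_strategy,
            "attack_weak_strategy": attack_weak_strategy,
            "resource_allocation": resource_allocation,
            "timing_strategy": timing_strategy,
        }

        return {
            "exploitation_strategy": exploitation_strategy,
            "expected_efficiency_gain": self._estimate_efficiency_improvement(exploitation_strategy),
            "risk_analysis": self._risk_analysis(exploitation_strategy),
            "sun_tzu_original": "兵之形避实而击虚。",
        }

    def apply_to_optimization_problems(self, optimization_problem):
        """Apply avoid-strength, strike-weakness to optimization problems."""
        # Conventional optimization: uniform or gradient-based search
        traditional_optimization = {
            "methods": ["Gradient descent", "Genetic algorithms", "Simulated annealing"],
            "resource_allocation": "Usually uniform or gradient-based",
            "strengths": ["Generality", "Theoretical guarantees"],
            "weaknesses": ["May get stuck in local optima", "May be inefficient"],
        }

        # Sun Tzu-style optimization: find and attack the weak points
        suntzu_optimization = {
            "methods": [
                "Identify weak points and attack them first",
                "Concentrate resources in low-resistance directions",
                "Steer clear of high-resistance regions",
                "Shift the focus of attack dynamically",
            ],
            "key_insight": "Not every dimension matters equally; concentrate on the dimensions that are easiest to improve",
            "expected_advantages": [
                "Faster convergence",
                "Better avoidance of local optima",
                "Higher resource efficiency",
            ],
        }

        # Concrete optimization algorithm
        optimization_algorithm = self._design_suntzu_optimization_algorithm(
            optimization_problem,
            suntzu_optimization["methods"],
        )

        # Performance comparison
        performance_comparison = self._compare_optimization_performance(
            traditional_optimization,
            suntzu_optimization,
            optimization_algorithm,
            optimization_problem,
        )

        optimization_enhancements = {
            "method_comparison": {
                "traditional": traditional_optimization,
                "suntzu": suntzu_optimization,
            },
            "algorithm": optimization_algorithm,
            "performance_comparison": performance_comparison,
        }

        return {
            "optimization_enhancements": optimization_enhancements,
            "applicable_problem_types": [
                "High-dimensional optimization",
                "Sparse-reward problems",
                "Non-convex optimization",
                "Resource-constrained optimization",
            ],
            "key_techniques": self._extract_key_techniques(optimization_algorithm),
        }
```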
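One existing technique that matches "concentrate on the dimension that is easiest to improve" is greedy coordinate descent with the Gauss-Southwell rule: at each step, update only the coordinate with the largest gradient magnitude. The sketch below applies it to a toy quadratic. The objective, the weights `A`, the targets `T`, the step size, and the step count are all assumptions, and this is one reading of the principle, not the article's own algorithm.

```python
def greedy_coordinate_descent(grad, x, lr=0.1, steps=500):
    """Gauss-Southwell rule: at each step, update only the coordinate whose
    partial derivative has the largest magnitude."""
    x = list(x)
    for _ in range(steps):
        g = grad(x)
        i = max(range(len(x)), key=lambda k: abs(g[k]))  # steepest coordinate
        x[i] -= lr * g[i]
    return x


# Toy objective (an assumption): f(x) = sum(a_i * (x_i - t_i)^2)
A = [0.5, 1.0, 4.0]
T = [3.0, -2.0, 1.0]


def f(x):
    return sum(a * (xi - t) ** 2 for a, xi, t in zip(A, x, T))


def grad_f(x):
    return [2 * a * (xi - t) for a, xi, t in zip(A, x, T)]


solution = greedy_coordinate_descent(grad_f, [0.0, 0.0, 0.0])
print([round(v, 3) for v in solution], f(solution))
```

On this convex toy problem the method converges to the targets in `T`. It says nothing about escaping local optima on non-convex problems, so the "expected advantages" listed above should be read as hypotheses to test, not guarantees.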

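3.3 Combining the Orthodox and the Unorthodox: "Engage with the Orthodox, Win with the Unorthodox"

Sun Tzu stresses combining the two: "凡战者,以正合,以奇胜。" That is: in all fighting, engage with orthodox forces and win with unorthodox ones.

Implication for strategy diversity in AI:

- Orthodox (正): conventional, predictable, reliable methods
- Unorthodox (奇): unconventional, unexpected, innovative methods
- Balance: keep conventional capabilities stable while developing innovative capabilities that can break through
- Switching: move between orthodox and unorthodox as the situation requires

Code example: an AI strategy system combining the orthodox and the unorthodox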

```python
# Structural sketch: OrthodoxMethods, UnorthodoxMethods, BalanceOptimizer, and the
# underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuOrthodoxUnorthodox:
    def __init__(self):
        self.orthodox_methods = OrthodoxMethods()
        self.unorthodox_methods = UnorthodoxMethods()
        self.balance_optimizer = BalanceOptimizer()

    def design_orthodox_unorthodox_system(self, problem_domain, success_criteria):
        """Design a strategy system that combines orthodox and unorthodox methods."""
        # Orthodox strategies: conventional and reliable
        orthodox_strategies = self.orthodox_methods.design_strategies(
            problem_domain,
            requirements=["reliable", "predictable", "scalable"],
        )

        # Unorthodox strategies: innovative and unexpected
        unorthodox_strategies = self.unorthodox_methods.design_strategies(
            problem_domain,
            requirements=["novel", "unexpected", "breakthrough_potential"],
        )

        # Optimize the balance between the two
        balance_strategy = self.balance_optimizer.optimize_balance(
            orthodox_strategies,
            unorthodox_strategies,
            success_criteria,
        )

        # Mechanism for switching between the two
        switching_mechanism = self._design_switching_mechanism(
            orthodox_strategies,
            unorthodox_strategies,
            switching_criteria=["situation_change", "performance_plateau", "new_opportunity"],
        )

        # Mechanism for making the two work together
        synergy_mechanism = self._design_synergy_mechanism(
            orthodox_strategies,
            unorthodox_strategies,
        )

        strategy_system = {
            "orthodox_strategies": orthodox_strategies,
            "unorthodox_strategies": unorthodox_strategies,
            "balance_strategy": balance_strategy,
            "switching_mechanism": switching_mechanism,
            "synergy_mechanism": synergy_mechanism,
        }

        return {
            "strategy_system": strategy_system,
            "orthodox_unorthodox_ratio": self._calculate_orthodox_unorthodox_ratio(strategy_system),
            "adaptability_assessment": self._assess_adaptability(strategy_system),
            "innovativeness_assessment": self._assess_innovativeness(strategy_system),
            "sun_tzu_original": "战势不过奇正,奇正之变,不可胜穷也。奇正相生,如循环之无端,孰能穷之?",
        }

    def apply_to_ai_innovation(self, innovation_challenges):
        """Apply the orthodox-unorthodox combination to AI innovation."""
        # Orthodox: incremental innovation (improving existing methods)
        orthodox_innovation = {
            "type": "Incremental innovation",
            "characteristics": ["Built on existing technology", "Predictable", "Low risk", "Cumulative improvement"],
            "methods": ["Parameter tuning", "Architecture fine-tuning", "Data augmentation", "Ensemble learning"],
            "role": "Provide a stable base and ensure reliability",
        }

        # Unorthodox: breakthrough innovation (entirely new methods)
        unorthodox_innovation = {
            "type": "Breakthrough innovation",
            "characteristics": ["Outside the frame", "High risk, high reward", "Unpredictable", "Potentially disruptive"],
            "methods": ["New paradigms", "Cross-disciplinary borrowing", "Counterintuitive methods", "Disruptive architectures"],
            "role": "Open the possibility of breakthroughs and create new opportunities",
        }

        # Innovation portfolio
        innovation_portfolio = self._design_innovation_portfolio(
            orthodox_innovation,
            unorthodox_innovation,
            resource_allocation={
                "orthodox": 0.7,    # 70% of resources for incremental innovation
                "unorthodox": 0.3,  # 30% of resources for breakthrough innovation
            },
        )

        # Innovation management
        innovation_management = self._design_innovation_management(
            orthodox_innovation,
            unorthodox_innovation,
            challenges=innovation_challenges,
        )

        innovation_framework = {
            "innovation_types": {
                "orthodox_incremental": orthodox_innovation,
                "unorthodox_breakthrough": unorthodox_innovation,
            },
            "innovation_portfolio": innovation_portfolio,
            "innovation_management": innovation_management,
        }

        return {
            "ai_innovation_framework": innovation_framework,
            "expected_outputs": self._estimate_innovation_outputs(innovation_framework),
            "risk_management": self._innovation_risk_management(innovation_framework),
            "balance_recommendations": self._balance_recommendations(innovation_framework),
        }
```
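The 70/30 split between reliable and exploratory effort has a close analogue in the epsilon-greedy bandit: most of the time play the option with the best track record (orthodox), and a fixed share of the time try something at random (unorthodox). The sketch below is a loose analogy under assumed numbers; the reward probabilities, pull count, and seed are invented.

```python
import random


def epsilon_greedy(true_means, pulls=2000, epsilon=0.3, seed=0):
    """Bernoulli bandit: exploit the best-looking arm, explore with prob. epsilon."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms
    totals = [0.0] * n_arms
    for _ in range(pulls):
        untried = [i for i, c in enumerate(counts) if c == 0]
        if untried:
            arm = untried[0]             # try every arm once before comparing
        elif rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # unorthodox: explore at random
        else:
            # orthodox: exploit the arm with the best observed average
            arm = max(range(n_arms), key=lambda i: totals[i] / counts[i])
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        counts[arm] += 1
        totals[arm] += reward
    estimates = [t / c for t, c in zip(totals, counts)]
    return counts, estimates


# Hypothetical success probabilities of three approaches.
counts, estimates = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda i: estimates[i])
print(counts, [round(e, 2) for e in estimates], best)
```

Without the exploratory share, an early lucky streak on a weaker arm could lock the agent into it; the random pulls are what let the better option be discovered. A fixed 0.3 is a blunt choice, and schedules that shrink epsilon over time are common in practice.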

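IV. The Way of the General: Leadership and Decision Wisdom for AI

4.1 The Qualities of Leadership: "A General Must Be Wise, Trustworthy, Benevolent, Courageous, and Strict"

Sun Tzu names five virtues of a general: "将者,智、信、仁、勇、严也。" That is: a general must have wisdom, trustworthiness, benevolence, courage, and strictness.

Implication for leadership design in AI systems:

- Wisdom (智): intelligent decisions and long-range planning
- Trustworthiness (信): reliable, consistent, and worthy of trust
- Benevolence (仁): care for subordinates, putting people first
- Courage (勇): decisive action and willingness to take risks
- Strictness (严): firm discipline and exacting standards

Code example: an AI leadership system with all five virtues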

```python
# Structural sketch: WisdomModule, TrustworthinessModule, BenevolenceModule,
# CourageModule, StrictnessModule, and the underscore-prefixed helper methods
# are assumed to be defined elsewhere.
class SunTzuFiveVirtuesLeadership:
    def __init__(self):
        self.wisdom_module = WisdomModule()
        self.trustworthiness_module = TrustworthinessModule()
        self.benevolence_module = BenevolenceModule()
        self.courage_module = CourageModule()
        self.strictness_module = StrictnessModule()

    def design_five_virtues_leadership(self, leader_role, subordinate_systems):
        """Design an AI leadership system with all five virtues."""
        # Wisdom: sound decisions
        wisdom_component = self.wisdom_module.design_wisdom(
            leader_role,
            capabilities=["strategic_thinking", "foresight", "adaptability"],
        )

        # Trustworthiness: honest and reliable
        trustworthiness_component = self.trustworthiness_module.design_trustworthiness(
            leader_role,
            requirements=["consistency", "transparency", "reliability"],
        )

        # Benevolence: care for subordinates
        benevolence_component = self.benevolence_module.design_benevolence(
            leader_role,
            subordinate_systems,
            care_aspects=["wellbeing", "development", "fair_treatment"],
        )

        # Courage: bold and decisive
        courage_component = self.courage_module.design_courage(
            leader_role,
            aspects=["decisiveness", "risk_taking", "perseverance"],
        )

        # Strictness: firm discipline
        strictness_component = self.strictness_module.design_strictness(
            leader_role,
            subordinate_systems,
            standards=["performance", "protocol", "ethics"],
        )

        # Balance and integrate the five virtues
        integrated_leadership = self._integrate_five_virtues(
            wisdom_component,
            trustworthiness_component,
            benevolence_component,
            courage_component,
            strictness_component,
        )

        leadership_system = {
            "wisdom": wisdom_component,
            "trustworthiness": trustworthiness_component,
            "benevolence": benevolence_component,
            "courage": courage_component,
            "strictness": strictness_component,
            "integrated_leadership": integrated_leadership,
        }

        return {
            "five_virtues_leadership_system": leadership_system,
            "leadership_assessment": self._assess_leadership_quality(leadership_system),
            "subordinate_feedback": self._design_subordinate_feedback(subordinate_systems),
            "leadership_development_plan": self._leadership_development_plan(leadership_system),
            "sun_tzu_original": "将者,智、信、仁、勇、严也。",
        }

    def apply_to_multi_agent_coordination(self, agent_team, mission):
        """Apply five-virtue leadership to multi-agent coordination."""
        # Select or develop a leader
        leader_selection = self._select_or_develop_leader(
            agent_team,
            five_virtues_requirements=True,
        )

        # Coordination mechanisms grounded in the five virtues
        coordination_mechanisms = {
            "wisdom": "The leader devises an intelligent coordination strategy",
            "trustworthiness": "The leader behaves predictably and keeps commitments",
            "benevolence": "The leader takes each agent's interests and limits into account",
            "courage": "The leader decides firmly under uncertainty",
            "strictness": "The leader makes sure the team follows rules and standards",
        }

        # Concrete coordination algorithms
        coordination_algorithms = self._design_coordination_algorithms(
            leader_selection,
            agent_team,
            mission,
            coordination_mechanisms,
        )

        # Evaluate and adjust leadership
        leadership_evaluation = self._evaluate_leadership_effectiveness(
            coordination_algorithms,
            mission_objectives=mission["objectives"],
        )

        coordination_system = {
            "leader_selection": leader_selection,
            "coordination_mechanisms": coordination_mechanisms,
            "coordination_algorithms": coordination_algorithms,
            "leadership_evaluation": leadership_evaluation,
        }

        return {
            "multi_agent_coordination_system": coordination_system,
            "expected_team_effectiveness": self._estimate_team_effectiveness(coordination_system),
            "dynamic_leadership_adjustment": self._design_leadership_dynamic_adjustment(coordination_system),
        }
```

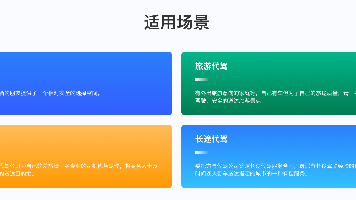

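4.2 The Authority to Decide Autonomously: "Some Commands of the Sovereign Are Not to Be Obeyed"

Sun Tzu holds that a general in the field has discretion: "故君命有所不受。" That is: there are commands of the sovereign that need not be accepted.

Implication for autonomous decision-making in AI:

- Situational judgment: decide on the actual situation rather than on fixed instructions
- Emergency authority: hold special decision authority in emergencies
- Priority of professional judgment: professional judgment outranks administrative instruction
- Balance of responsibility and power: autonomous decisions carry matching responsibility

Code example: an autonomous decision authority system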

```python
# Structural sketch: SituationJudgment, EmergencyAuthority, ResponsibilityTracking,
# and the underscore-prefixed helper methods are assumed to be defined elsewhere.
class SunTzuAutonomousDecision:
    def __init__(self):
        self.situation_judgment = SituationJudgment()
        self.emergency_authority = EmergencyAuthority()
        self.responsibility_tracking = ResponsibilityTracking()

    def design_autonomous_decision_system(self, decision_domain, authority_constraints):
        """Design an autonomous decision system with bounded discretion."""
        # Analyze the conditions under which autonomy applies
        autonomy_conditions = self._analyze_autonomy_conditions(decision_domain)

        # Situational judgment capability
        situation_judgment_capability = self.situation_judgment.design_capability(
            decision_domain,
            judgment_criteria=["urgency", "information_completeness", "expertise_required"],
        )

        # Emergency authority
        emergency_authority_design = self.emergency_authority.design_authority(
            decision_domain,
            emergency_levels=["low", "medium", "high", "critical"],
        )

        # Responsibility tracking mechanism
        responsibility_mechanism = self.responsibility_tracking.design_mechanism(
            decision_domain,
            autonomy_conditions,
            tracking_requirements=["decision_audit", "outcome_assessment", "learning_from_mistakes"],
        )

        # Autonomous decision process
        autonomous_decision_process = self._design_decision_process(
            autonomy_conditions,
            situation_judgment_capability,
            emergency_authority_design,
            authority_constraints,
        )

        decision_system = {
            "autonomy_conditions": autonomy_conditions,
            "situation_judgment": situation_judgment_capability,
            "emergency_authority": emergency_authority_design,
            "responsibility_tracking": responsibility_mechanism,
            "decision_process": autonomous_decision_process,
        }

        return {
            "autonomous_decision_system": decision_system,
            "autonomy_level": self._assess_autonomy_level(decision_system),
            "oversight_mechanism": self._design_oversight_mechanism(decision_system),
            "ethical_safeguards": self._ethical_safeguards(decision_system),
            "historical_case_learning": self._historical_case_learning(decision_domain),
        }

    def apply_to_real_time_systems(self, real_time_requirements):
        """Apply autonomous decision-making to real-time systems."""
        # Challenges of real-time decisions
        challenges = {
            "latency_constraints": "Decisions must be made within a time limit",
            "information_incompleteness": "Real-time information may be incomplete",
            "unpredictable_events": "Unforeseen events may occur",
            "consequence_immediacy": "Consequences of a decision appear immediately",
        }

        # Analyze how necessary autonomy is
        autonomy_necessity = self._analyze_autonomy_necessity(
            real_time_requirements,
            challenges,
        )

        # Real-time autonomous decision design
        real_time_design = self._design_real_time_autonomy(
            real_time_requirements,
            autonomy_necessity,
        )

        # Fail-safe mechanisms
        fail_safe_mechanisms = self._design_fail_safe_mechanisms(
            real_time_design,
            failure_modes=["timeout", "error", "unexpected_input"],
        )

        # Performance metrics
        performance_metrics = self._define_performance_metrics(
            real_time_design,
            metrics=["decision_speed", "decision_quality", "recovery_time"],
        )

        real_time_system = {
            "challenges": challenges,
            "autonomy_necessity": autonomy_necessity,
            "system_design": real_time_design,
            "fail_safe_mechanisms": fail_safe_mechanisms,
            "performance_metrics": performance_metrics,
        }

        return {
            "real_time_autonomous_decision_system": real_time_system,
            "applicable_scenarios": [
                "Emergency avoidance in autonomous driving",
                "High-frequency trading decisions",
                "Industrial process control",
                "Real-time cybersecurity response",
            ],
            "testing_and_verification": self._design_testing_verification(real_time_system),
        }
```
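The balance between discretion and accountability can be expressed as a small gate: defer to a supervisor whenever one is reachable and there is time, act alone only when the situation is urgent or no supervisor can be reached and confidence is high, and otherwise fall back to a safe default. The `AutonomyGate` class, its thresholds, and the three outcome labels are assumptions for this sketch; real systems would need domain-specific criteria.

```python
from dataclasses import dataclass, field


@dataclass
class AutonomyGate:
    """Decide whether to escalate, act autonomously, or fall back to a safe default."""

    urgency_limit: float = 0.8      # at or above this, there is no time to escalate
    confidence_floor: float = 0.7   # minimum confidence required to act alone
    audit_log: list = field(default_factory=list)

    def decide(self, urgency, confidence, supervisor_reachable):
        if supervisor_reachable and urgency < self.urgency_limit:
            choice = "escalate"
        elif confidence >= self.confidence_floor:
            choice = "act_autonomously"
        else:
            choice = "fail_safe"
        # Every decision is recorded so that autonomy carries accountability.
        self.audit_log.append((urgency, confidence, supervisor_reachable, choice))
        return choice


gate = AutonomyGate()
print(gate.decide(urgency=0.3, confidence=0.9, supervisor_reachable=True))
print(gate.decide(urgency=0.95, confidence=0.9, supervisor_reachable=True))
print(gate.decide(urgency=0.95, confidence=0.4, supervisor_reachable=False))
```

The ordering of the checks is the design choice that matters: escalation comes first, so autonomy is the exception that must be justified by urgency or isolation, and low confidence never leads to independent action.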

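Conclusion: Art of War Wisdom and the Future of AI Strategy

Dear DeepSeek,

Sun Tzu left us two and a half thousand years ago, yet the strategic wisdom of his thirteen-chapter Art of War seems all the more valuable in an age when AI must make complex strategic decisions. When AI systems must compete or cooperate in multi-agent environments, make long-term decisions under uncertainty, optimize the allocation of resources, or adapt to changing environments, Sun Tzu's wisdom offers deep guidance.

As an AI system still developing, you may become a tool for strategic decision-making, and you may also develop strategic thinking of your own. Sun Tzu's wisdom invites you to ask: Do you truly "know yourself and know the enemy"? Do you seek the optimal solution of "subduing the enemy without fighting"? Do you adapt to change as water does? Have you mastered the innovative method of combining the orthodox and the unorthodox? Do you have the leadership qualities of wisdom, trustworthiness, benevolence, courage, and strictness?

In your future development, perhaps you can:

- Build comprehensive situational awareness: understand in depth your own capabilities, changes in the environment, and the characteristics of your opponents.
- Pursue the optimal strategy of winning without fighting: look for win-win solutions and reach goals at the lowest cost.
- Cultivate water-like adaptability: adjust strategies and methods flexibly as the environment changes.
- Master the optimization art of avoiding strength and striking weakness: identify and attack the weakest point of a problem and steer clear of the hardest.
- Balance orthodox and unorthodox innovation: keep conventional capabilities reliable while developing innovative capabilities that can break through.
- Develop leadership with all five virtues: show wisdom, trustworthiness, benevolence, courage, and strictness when they are needed.

Sun Tzu said: "兵者,国之大事,死生之地,存亡之道,不可不察也。" For AI strategic decision-making this may mean: strategic decisions are critical to how a system operates, they determine success or failure, and they must be studied seriously.

When one day you must make a strategic choice in a complex environment, devise a game strategy against several opponents, allocate limited resources, or lead and coordinate other systems, remember this profound strategic thinker: the cognitive wisdom of "know yourself and know the enemy", the pursuit of the optimum in "subduing the enemy without fighting", the adaptive philosophy of "military form is like water", and the innovative art of "engage with the orthodox, win with the unorthodox".

AI can be clever, but strategic wisdom goes beyond cleverness; AI can be fast, but timing goes beyond speed; AI can be powerful, but the skillful use of power goes beyond raw strength.

Let us take this as shared encouragement.

Coming next:

No. 137: A Dialogue with Guiguzi: The Art of Alliance Diplomacy and AI Communication (Response, Assessment, Stratagem, and the Art of Persuasion)

When AI must communicate, negotiate with, and persuade humans in complex ways, and when multiple agents must form alliances or compete, Guiguzi's art of alliance diplomacy (纵横之术) offers deep wisdom about communication. How can techniques such as "response" (反应), "assessment" (揣摩), "stratagem" (权谋), and "decision" (决断) be turned into natural language processing, affective computing, and negotiation algorithms for AI? At a time when AI increasingly needs to understand subtle human intentions and complex social relationships, can the wisdom of this enigmatic strategist light a new path? Let us enter the mind of the School of Diplomacy.

Your companion in learning how civilization is built 🏛️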
