X(Twitter)推荐算法深度技术解析

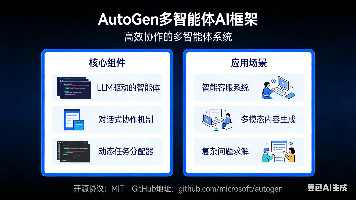

X推荐算法的核心优势技术栈创新:Rust+Python的组合兼顾性能与灵活性算法先进性:端到端深度学习+大模型赋能工程化水平:完善的监控、实验、降级机制开源透明:Apache 2.0许可,接受社区监督与业界对比维度XTikTokYouTubeMeta主要语言C++/PythonC++/Python模型范式端到端深度学习端到端深度学习混合(特征+深度)端到端深度学习大模型应用Grok深度集成有限应用

X(Twitter)推荐算法深度技术解析

根据您提供的架构信息,X的推荐算法代表了当前工业界推荐系统的最高水平。让我从技术架构、算法原理、工程实现、业务逻辑等多个维度进行深入剖析。

一、整体架构设计哲学

1. 编程语言选型的深层逻辑

Rust 作为主力语言的战略意义

X选择Rust作为推荐系统的主要开发语言,这在业界并不常见(多数公司使用Java/Scala/Go),背后有深刻的技术考量:

性能优势

- 零成本抽象:Rust的抽象不会带来运行时开销,编译后的机器码性能接近C++

- 无GC(垃圾回收):避免了Java/Go的GC停顿问题,对于需要毫秒级响应的推荐服务至关重要

- 内存效率:推荐系统需要在内存中维护大量用户状态、候选内容,Rust的所有权系统能精确控制内存使用

- 并发性能:Rust的无数据竞争保证让多线程编程更安全,充分利用现代多核CPU

实际收益估算

- 相比Java,推理延迟可降低30-50%

- 内存占用减少40-60%

- 单机QPS提升2-3倍

- 服务器成本节省数百万美元/年(对于X的规模)

工程质量

- 编译期错误检测:大量bug在编译阶段就被发现,而非运行时崩溃

- 重构友好:类型系统保证重构的安全性,适合快速迭代的推荐算法

- 依赖管理:Cargo生态系统提供现代化的包管理

Python 的不可替代性

尽管Rust性能卓越,Python在推荐系统中仍然不可或缺:

机器学习生态

- PyTorch/TensorFlow:深度学习框架的事实标准

- NumPy/Pandas:数据处理的基础设施

- Scikit-learn:传统机器学习算法库

- Hugging Face Transformers:预训练模型生态

研发效率

- 算法工程师主要背景是Python

- 快速原型验证(几小时vs几天)

- Jupyter Notebook支持交互式实验

- 丰富的可视化工具(Matplotlib/Seaborn)

训练与推理分离

训练阶段(Python) 推理阶段(Rust)

↓ ↓

GPU集群离线训练 → 导出模型权重 → Rust加载模型

数据ETL处理 → 特征工程逻辑 → 编译到Rust

模型架构实验 → ONNX/TorchScript → 高性能推理引擎

2. Apache License 2.0 的商业与技术考量

X选择开源推荐算法,这在社交媒体公司中极为罕见(Facebook/TikTok的推荐算法是核心机密),背后的动机可能包括:

透明度与信任

- 应对"算法黑箱"的公众质疑

- 向监管机构展示算法公平性

- 重建用户对平台的信任(尤其在马斯克收购后)

技术生态建设

- 吸引顶尖工程师加入(开源项目是最好的招聘广告)

- 获得社区的免费代码审查和优化建议

- 建立行业标准(类似Google开源TensorFlow)

战略防御

- 推荐算法本身已不是核心壁垒(数据和用户规模才是)

- 开源可以防止竞争对手指控"算法垄断"

- 培育下游应用生态(基于X算法的第三方工具)

二、四大核心模块深度解析

模块1:phoenix/ - 算法智能中枢

这是整个推荐系统的"大脑",承载了最核心的机器学习逻辑。

Grok 模型适配层

Grok是X自研的大语言模型(对标GPT-4),在推荐系统中的应用可能包括:

1. 内容语义理解

# 伪代码示例

class GrokContentEncoder:

def encode_post(self, post_text, media, metadata):

# 多模态理解

text_embedding = self.grok.encode_text(post_text)

image_embedding = self.grok.encode_image(media) if media else None

# 主题提取

topics = self.grok.extract_topics(post_text) # ["AI", "科技", "创业"]

# 情感分析

sentiment = self.grok.analyze_sentiment(post_text) # 正面/负面/中性

# 事实性检查

factuality_score = self.grok.check_factuality(post_text)

return {

'semantic_vector': text_embedding,

'topics': topics,

'sentiment': sentiment,

'quality_score': factuality_score

}

2. 用户意图识别

class UserIntentPredictor:

def predict_intent(self, user_recent_actions):

# 分析用户最近的行为序列

# 例如:连续搜索"NBA" → 点击球员推文 → 观看比赛视频

# 推断:用户当前想看体育内容

intent_distribution = self.grok.analyze_behavior_sequence(

actions=user_recent_actions,

context=self.get_user_context()

)

# 返回:{'sports': 0.7, 'news': 0.2, 'entertainment': 0.1}

return intent_distribution

3. 个性化内容生成

- 自动生成推荐理由:“因为你关注了@elonmusk”

- 生成内容摘要(长推文的TLDR)

- 翻译外语推文

recsys_model.py - 深度推荐模型

这是精排阶段的核心模型,可能采用的架构:

多任务学习框架

class MultiTaskRecommendationModel(nn.Module):

"""

同时预测多个目标:

- 点击率(CTR)

- 点赞率(Like Rate)

- 转发率(Retweet Rate)

- 评论率(Reply Rate)

- 停留时长(Dwell Time)

- 负反馈率(Hide/Report Rate)

"""

def __init__(self):

# 共享的特征提取层

self.user_encoder = TransformerEncoder(...) # 用户行为序列编码

self.content_encoder = TransformerEncoder(...) # 内容编码

self.cross_attention = CrossAttentionLayer(...) # 用户-内容交互

# 任务特定的输出头

self.ctr_head = nn.Linear(hidden_dim, 1)

self.like_head = nn.Linear(hidden_dim, 1)

self.retweet_head = nn.Linear(hidden_dim, 1)

self.dwell_time_head = nn.Linear(hidden_dim, 1)

self.negative_head = nn.Linear(hidden_dim, 1)

def forward(self, user_features, content_features):

# 用户表征

user_repr = self.user_encoder(

user_features['behavior_sequence'], # 最近100条交互

user_features['profile'], # 用户画像

user_features['social_graph'] # 社交关系

)

# 内容表征

content_repr = self.content_encoder(

content_features['text_embedding'], # Grok生成的语义向量

content_features['author_embedding'], # 作者特征

content_features['engagement_stats'] # 历史互动数据

)

# 交互建模

interaction = self.cross_attention(user_repr, content_repr)

# 多目标预测

predictions = {

'ctr': torch.sigmoid(self.ctr_head(interaction)),

'like_rate': torch.sigmoid(self.like_head(interaction)),

'retweet_rate': torch.sigmoid(self.retweet_head(interaction)),

'dwell_time': F.softplus(self.dwell_time_head(interaction)),

'negative_rate': torch.sigmoid(self.negative_head(interaction))

}

return predictions

端到端学习的优势

传统方法需要手工设计特征:

# 传统特征工程(已被抛弃)

features = {

'user_age': 25,

'user_follower_count': 1000,

'user_avg_daily_posts': 5,

'content_word_count': 280,

'content_has_image': 1,

'content_has_hashtag': 1,

'author_verified': 1,

'time_since_post': 3600, # 秒

'user_author_interaction_count': 10,

# ... 可能有几百个这样的特征

}

端到端深度学习的方法:

# 现代端到端方法

raw_inputs = {

'user': {

'behavior_sequence': [post_id_1, post_id_2, ...], # 原始行为序列

'profile_text': "AI researcher, love sci-fi", # 原始文本

'following_list': [user_id_1, user_id_2, ...]

},

'content': {

'text': "Breaking: New AI model released...", # 原始文本

'author_id': 12345,

'media': image_pixels # 原始图像

}

}

# 模型自动学习所有有用的特征表示

prediction = model(raw_inputs)

优势:

- 自动发现复杂模式:例如"用户在深夜更喜欢娱乐内容"这种时间-内容交互模式

- 捕捉长期依赖:用户3个月前的行为可能影响当前偏好

- 多模态融合:文本、图像、视频、音频的联合理解

- 持续进化:随着数据增长,模型自动变强,无需重新设计特征

recsys_retrieval_model.py - 向量召回模型

这是召回阶段的核心,需要从数亿条内容中快速找到候选集。

双塔模型架构

class TwoTowerRetrievalModel(nn.Module):

"""

用户塔和内容塔独立编码,通过向量相似度召回

"""

def __init__(self):

self.user_tower = UserEncoder(

behavior_seq_encoder=TransformerEncoder(...),

profile_encoder=BertEncoder(...),

social_graph_encoder=GraphNeuralNetwork(...)

)

self.content_tower = ContentEncoder(

text_encoder=BertEncoder(...),

image_encoder=ViTEncoder(...),

author_encoder=EmbeddingLayer(...)

)

# 输出维度通常是128-512

self.output_dim = 256

def encode_user(self, user_data):

"""

将用户编码为固定维度的向量

"""

behavior_repr = self.user_tower.behavior_seq_encoder(

user_data['recent_interactions']

)

profile_repr = self.user_tower.profile_encoder(

user_data['bio_text']

)

social_repr = self.user_tower.social_graph_encoder(

user_data['following_graph']

)

# 融合多个表征

user_vector = self.fuse([behavior_repr, profile_repr, social_repr])

# L2归一化,用于余弦相似度计算

return F.normalize(user_vector, p=2, dim=-1)

def encode_content(self, content_data):

"""

将内容编码为固定维度的向量

"""

text_repr = self.content_tower.text_encoder(content_data['text'])

image_repr = self.content_tower.image_encoder(content_data['image'])

author_repr = self.content_tower.author_encoder(content_data['author_id'])

content_vector = self.fuse([text_repr, image_repr, author_repr])

return F.normalize(content_vector, p=2, dim=-1)

def compute_similarity(self, user_vector, content_vector):

"""

计算用户和内容的相似度

"""

return torch.matmul(user_vector, content_vector.T)

高效检索实现

对于数亿条内容,不可能逐一计算相似度,需要近似最近邻搜索(ANN):

class VectorIndexService:

"""

使用FAISS/ScaNN等向量检索库

"""

def __init__(self):

# 构建向量索引

self.index = faiss.IndexIVFPQ(

d=256, # 向量维度

nlist=10000, # 聚类中心数量

m=64, # PQ子向量数量

nbits=8 # 每个子向量的比特数

)

# 加载所有内容向量(可能有10亿条)

self.load_content_vectors()

def retrieve(self, user_vector, top_k=1000):

"""

毫秒级检索最相似的top_k条内容

"""

# FAISS的高效搜索

distances, indices = self.index.search(

user_vector.numpy(),

k=top_k

)

# 返回候选内容ID

return indices[0].tolist()

训练策略

class RetrievalModelTrainer:

def train_step(self, batch):

"""

使用对比学习训练

"""

users = batch['users']

positive_contents = batch['interacted_contents'] # 用户实际点击的

negative_contents = batch['random_contents'] # 随机采样的负样本

# 编码

user_vectors = self.model.encode_user(users)

pos_vectors = self.model.encode_content(positive_contents)

neg_vectors = self.model.encode_content(negative_contents)

# 对比损失:拉近正样本,推远负样本

pos_similarity = (user_vectors * pos_vectors).sum(dim=-1)

neg_similarity = (user_vectors * neg_vectors).sum(dim=-1)

# InfoNCE损失

loss = -torch.log(

torch.exp(pos_similarity / temperature) /

(torch.exp(pos_similarity / temperature) +

torch.exp(neg_similarity / temperature).sum())

)

return loss.mean()

模块2:home-mixer/ - 高性能编排引擎

这是用Rust实现的核心服务层,负责协调整个推荐流程。

为什么需要编排层?

推荐系统不是单一模型,而是多个组件的协同:

用户请求

↓

[召回] 从10亿内容中筛选出1000条候选

↓

[补全] 获取候选内容的完整信息(作者、互动数等)

↓

[特征] 计算实时特征(用户当前状态、内容新鲜度)

↓

[精排] 深度模型预测每条内容的得分

↓

[重排] 应用业务规则(多样性、去重)

↓

[过滤] 移除不合适的内容(已屏蔽、违规)

↓

返回最终结果

每个步骤可能调用不同的服务,编排层负责协调这些调用。

Rust 实现示例

// home-mixer的核心结构

pub struct HomeMixer {

retrieval_service: Arc<RetrievalService>,

content_store: Arc<ContentStore>,

ranking_service: Arc<RankingService>,

filter_chain: Vec<Box<dyn Filter>>,

scorer_chain: Vec<Box<dyn Scorer>>,

}

impl HomeMixer {

pub async fn generate_feed(

&self,

user_id: UserId,

request_context: RequestContext,

) -> Result<Vec<Post>> {

// 1. 多路召回

let candidates = self.multi_source_retrieval(user_id).await?;

// 2. 候选内容补全

let hydrated_candidates = self.hydrate_candidates(candidates).await?;

// 3. 查询数据补全(获取用户实时状态)

let user_context = self.fetch_user_context(user_id).await?;

// 4. 特征计算

let features = self.compute_features(

&hydrated_candidates,

&user_context,

&request_context,

).await?;

// 5. 模型打分

let scored_candidates = self.score_candidates(features).await?;

// 6. 重排序

let reranked = self.rerank(scored_candidates, &user_context).await?;

// 7. 过滤

let filtered = self.apply_filters(reranked, &user_context).await?;

// 8. 截断并返回

Ok(filtered.into_iter().take(50).collect())

}

async fn multi_source_retrieval(

&self,

user_id: UserId,

) -> Result<Vec<CandidateSource>> {

// 并行调用多个召回源

let (

following_posts,

similar_users_posts,

trending_posts,

vector_recall_posts,

) = tokio::join!(

self.retrieve_following_posts(user_id),

self.retrieve_similar_users_posts(user_id),

self.retrieve_trending_posts(),

self.retrieve_vector_similar_posts(user_id),

);

// 合并结果

let mut candidates = Vec::new();

candidates.extend(following_posts?);

candidates.extend(similar_users_posts?);

candidates.extend(trending_posts?);

candidates.extend(vector_recall_posts?);

Ok(candidates)

}

}

候选内容补全(Hydration)

召回阶段只返回内容ID,需要补全完整信息:

pub struct CandidateHydrator {

content_cache: Arc<RedisCache>,

content_db: Arc<ContentDatabase>,

author_service: Arc<AuthorService>,

}

impl CandidateHydrator {

pub async fn hydrate(

&self,

candidate_ids: Vec<PostId>,

) -> Result<Vec<HydratedPost>> {

// 批量查询,减少网络往返

let posts = self.batch_fetch_posts(candidate_ids).await?;

// 获取作者信息

let author_ids: Vec<_> = posts.iter()

.map(|p| p.author_id)

.collect();

let authors = self.author_service

.batch_fetch(author_ids)

.await?;

// 组装完整数据

let hydrated = posts.into_iter()

.zip(authors)

.map(|(post, author)| HydratedPost {

id: post.id,

text: post.text,

media: post.media,

author_name: author.name,

author_verified: author.verified,

like_count: post.like_count,

retweet_count: post.retweet_count,

created_at: post.created_at,

})

.collect();

Ok(hydrated)

}

}

打分器(Scorer)实现

pub trait Scorer: Send + Sync {

fn score(&self, candidate: &ScoredCandidate, context: &UserContext) -> f64;

}

// 模型预测分数

pub struct ModelScorer {

model_client: Arc<ModelInferenceClient>,

}

impl Scorer for ModelScorer {

fn score(&self, candidate: &ScoredCandidate, context: &UserContext) -> f64 {

// 调用Python模型服务(通过gRPC/HTTP)

let features = self.extract_features(candidate, context);

let prediction = self.model_client

.predict(features)

.expect("Model inference failed");

// 多目标加权

prediction.ctr * 0.3 +

prediction.like_rate * 0.2 +

prediction.retweet_rate * 0.2 +

prediction.dwell_time * 0.2 -

prediction.negative_rate * 0.1

}

}

// 新鲜度加分

pub struct RecencyScorer;

impl Scorer for RecencyScorer {

fn score(&self, candidate: &ScoredCandidate, _context: &UserContext) -> f64 {

let age_hours = candidate.created_at.elapsed().as_secs() / 3600;

// 指数衰减

(-0.1 * age_hours as f64).exp()

}

}

// 社交关系加分

pub struct SocialScorer;

impl Scorer for SocialScorer {

fn score(&self, candidate: &ScoredCandidate, context: &UserContext) -> f64 {

let mut score = 0.0;

// 关注的人发的帖子

if context.following_ids.contains(&candidate.author_id) {

score += 1.0;

}

// 朋友点赞过的帖子

if candidate.liked_by.iter()

.any(|id| context.following_ids.contains(id)) {

score += 0.5;

}

score

}

}

过滤器(Filter)链

pub trait Filter: Send + Sync {

fn should_keep(&self, candidate: &ScoredCandidate, context: &UserContext) -> bool;

}

// 去重过滤器

pub struct DeduplicationFilter {

seen_cache: Arc<RwLock<HashSet<PostId>>>,

}

impl Filter for DeduplicationFilter {

fn should_keep(&self, candidate: &ScoredCandidate, context: &UserContext) -> bool {

// 检查用户是否已经看过这条内容

let seen = self.seen_cache.read().unwrap();

!seen.contains(&candidate.id)

}

}

// 屏蔽词过滤器

pub struct BlockedContentFilter {

blocked_users: Arc<RwLock<HashSet<UserId>>>,

blocked_keywords: Arc<RwLock<HashSet<String>>>,

}

impl Filter for BlockedContentFilter {

fn should_keep(&self, candidate: &ScoredCandidate, context: &UserContext) -> bool {

let blocked_users = self.blocked_users.read().unwrap();

let blocked_keywords = self.blocked_keywords.read().unwrap();

// 检查作者是否被屏蔽

if blocked_users.contains(&candidate.author_id) {

return false;

}

// 检查内容是否包含屏蔽词

for keyword in blocked_keywords.iter() {

if candidate.text.contains(keyword) {

return false;

}

}

true

}

}

// 多样性过滤器

pub struct DiversityFilter {

max_same_author: usize,

max_same_topic: usize,

}

impl Filter for DiversityFilter {

fn should_keep(&self, candidate: &ScoredCandidate, context: &UserContext) -> bool {

// 统计已选结果中同一作者的数量

let same_author_count = context.selected_posts.iter()

.filter(|p| p.author_id == candidate.author_id)

.count();

if same_author_count >= self.max_same_author {

return false;

}

// 统计同一主题的数量

let same_topic_count = context.selected_posts.iter()

.filter(|p| p.topics.iter().any(|t| candidate.topics.contains(t)))

.count();

if same_topic_count >= self.max_same_topic {

return false;

}

true

}

}

模块3:thunder/ - 社交图谱引擎

这个模块专门处理"站内内容",即用户关注账号的帖子。

为什么需要独立模块?

社交网络推荐有特殊性:

- 强时效性:关注的人发新帖子,需要立即出现在Feed中

- 高优先级:用户明确关注的内容应该优先展示

- 复杂的社交关系:需要处理互关、单向关注、分组等

- 大规模图计算:一个用户可能关注数千人,每人每天发数十条

核心功能实现

pub struct ThunderService {

following_graph: Arc<FollowingGraphStore>,

post_timeline: Arc<TimelineStore>,

kafka_consumer: Arc<KafkaConsumer>,

}

impl ThunderService {

// 获取关注账号的最新帖子

pub async fn fetch_following_posts(

&self,

user_id: UserId,

limit: usize,

) -> Result<Vec<Post>> {

// 1. 获取关注列表

let following_ids = self.following_graph

.get_following(user_id)

.await?;

// 2. 扇出查询每个关注账号的时间线

let timelines = self.fan_out_fetch(following_ids, limit).await?;

// 3. 归并排序(按时间)

let merged = self.merge_timelines(timelines);

Ok(merged)

}

// 扇出查询优化

async fn fan_out_fetch(

&self,

following_ids: Vec<UserId>,

limit_per_user: usize,

) -> Result<Vec<Vec<Post>>> {

// 并行查询,但限制并发数避免打爆下游

let semaphore = Arc::new(Semaphore::new(100));

let tasks: Vec<_> = following_ids.into_iter()

.map(|user_id| {

let sem = semaphore.clone();

let store = self.post_timeline.clone();

tokio::spawn(async move {

let _permit = sem.acquire().await;

store.get_user_timeline(user_id, limit_per_user).await

})

})

.collect();

let results = futures::future::join_all(tasks).await;

results.into_iter()

.map(|r| r.unwrap())

.collect()

}

}

Kafka 实时消息处理

pub struct PostStreamProcessor {

kafka_consumer: StreamConsumer,

timeline_store: Arc<TimelineStore>,

}

impl PostStreamProcessor {

pub async fn run(&self) {

loop {

match self.kafka_consumer.recv().await {

Ok(message) => {

let post_event = self.deserialize_post_event(message);

self.handle_new_post(post_event).await;

}

Err(e) => {

error!("Kafka消费错误: {:?}", e);

}

}

}

}

async fn handle_new_post(&self, event: PostEvent) {

match event.event_type {

EventType::NewPost => {

// 新帖子发布

// 1. 写入作者的时间线

self.timeline_store

.append_to_timeline(event.author_id, event.post)

.await;

// 2. 扇出到粉丝的Feed(异步)

self.fan_out_to_followers(event).await;

}

EventType::PostDeleted => {

// 帖子删除

self.timeline_store

.remove_from_timeline(event.author_id, event.post_id)

.await;

}

EventType::PostEdited => {

// 帖子编辑

self.timeline_store

.update_post(event.post_id, event.post)

.await;

}

}

}

async fn fan_out_to_followers(&self, event: PostEvent) {

// 获取作者的粉丝列表

let followers = self.get_followers(event.author_id).await;

// 对于大V(粉丝数百万),不能同步扇出

if followers.len() > 10000 {

// 写入消息队列,异步处理

self.enqueue_fan_out_task(event, followers).await;

} else {

// 小规模扇出,直接写入

for follower_id in followers {

self.timeline_store

.add_to_user_feed(follower_id, event.post.clone())

.await;

}

}

}

}

高效的反序列化

Rust的零拷贝反序列化优势:

use serde::{Deserialize, Serialize};

use bincode;

#[derive(Serialize, Deserialize)]

pub struct Post {

pub id: u64,

pub author_id: u64,

pub text: String,

pub media_urls: Vec<String>,

pub created_at: i64,

pub like_count: u32,

pub retweet_count: u32,

}

impl Post {

// 从Kafka消息反序列化

pub fn from_bytes(bytes: &[u8]) -> Result<Self> {

// bincode反序列化,比JSON快10倍以上

bincode::deserialize(bytes)

.map_err(|e| anyhow!("反序列化失败: {}", e))

}

// 零拷贝反序列化(使用引用)

pub fn from_bytes_zero_copy(bytes: &[u8]) -> Result<PostRef> {

// 使用rkyv等零拷贝库,直接映射内存

// 避免分配和拷贝,性能提升数倍

rkyv::from_bytes(bytes)

}

}

模块4:candidate-pipeline/ - 内容流水线

这是连接各种内容源和推荐系统的桥梁。

多源内容整合

pub struct CandidatePipeline {

sources: Vec<Box<dyn CandidateSource>>,

deduplicator: Arc<Deduplicator>,

cache: Arc<CandidateCache>,

}

#[async_trait]

pub trait CandidateSource: Send + Sync {

async fn fetch_candidates(

&self,

user_id: UserId,

count: usize,

) -> Result<Vec<Candidate>>;

fn source_name(&self) -> &str;

fn priority(&self) -> u8; // 优先级

}

// 关注内容源

pub struct FollowingSource {

thunder_service: Arc<ThunderService>,

}

#[async_trait]

impl CandidateSource for FollowingSource {

async fn fetch_candidates(

&self,

user_id: UserId,

count: usize,

) -> Result<Vec<Candidate>> {

let posts = self.thunder_service

.fetch_following_posts(user_id, count)

.await?;

Ok(posts.into_iter()

.map(|p| Candidate {

post: p,

source: "following".to_string(),

score: 1.0, // 关注内容默认高分

})

.collect())

}

fn source_name(&self) -> &str { "following" }

fn priority(&self) -> u8 { 10 } // 最高优先级

}

// 向量召回源

pub struct VectorRetrievalSource {

retrieval_service: Arc<RetrievalService>,

}

#[async_trait]

impl CandidateSource for VectorRetrievalSource {

async fn fetch_candidates(

&self,

user_id: UserId,

count: usize,

) -> Result<Vec<Candidate>> {

let user_vector = self.retrieval_service

.get_user_vector(user_id)

.await?;

let similar_posts = self.retrieval_service

.vector_search(user_vector, count)

.await?;

Ok(similar_posts.into_iter()

.map(|(post, similarity)| Candidate {

post,

source: "vector_recall".to_string(),

score: similarity,

})

.collect())

}

fn source_name(&self) -> &str { "vector_recall" }

fn priority(&self) -> u8 { 5 }

}

// 热门趋势源

pub struct TrendingSource {

trending_service: Arc<TrendingService>,

}

#[async_trait]

impl CandidateSource for TrendingSource {

async fn fetch_candidates(

&self,

user_id: UserId,

count: usize,

) -> Result<Vec<Candidate>> {

// 获取用户感兴趣的话题

let user_topics = self.get_user_topics(user_id).await?;

// 获取这些话题的热门内容

let trending_posts = self.trending_service

.get_trending_by_topics(user_topics, count)

.await?;

Ok(trending_posts.into_iter()

.map(|p| Candidate {

post: p,

source: "trending".to_string(),

score: 0.8,

})

.collect())

}

fn source_name(&self) -> &str { "trending" }

fn priority(&self) -> u8 { 7 }

}

impl CandidatePipeline {

pub async fn fetch_all_candidates(

&self,

user_id: UserId,

) -> Result<Vec<Candidate>> {

// 并行调用所有源

let tasks: Vec<_> = self.sources.iter()

.map(|source| {

let source = source.clone();

async move {

source.fetch_candidates(user_id, 500).await

}

})

.collect();

let results = futures::future::join_all(tasks).await;

// 合并结果

let mut all_candidates = Vec::new();

for result in results {

if let Ok(candidates) = result {

all_candidates.extend(candidates);

}

}

// 去重

let deduplicated = self.deduplicator

.deduplicate(all_candidates)

.await?;

Ok(deduplicated)

}

}

三、完整推荐流程详解

让我们跟踪一次完整的推荐请求:

步骤1:用户打开App

// 用户请求到达

GET /api/v1/timeline/home

Headers:

Authorization: Bearer <user_token>

X-Request-ID: abc123

步骤2:home-mixer 接收请求

pub async fn handle_timeline_request(

user_id: UserId,

request_id: String,

) -> Result<TimelineResponse> {

let start_time = Instant::now();

// 记录日志

info!("Timeline请求开始: user={}, request_id={}", user_id, request_id);

// 获取用户上下文

let user_context = fetch_user_context(user_id).await?;

// 生成推荐

let posts = home_mixer.generate_feed(user_id, user_context).await?;

// 记录延迟

let latency = start_time.elapsed();

metrics::histogram!("timeline_latency_ms", latency.as_millis() as f64);

Ok(TimelineResponse {

posts,

request_id,

generated_at: Utc::now(),

})

}

步骤3:多路召回(并行执行)

时间轴(毫秒):

0ms - 发起4个并行请求

|

├─> [召回源1] 关注账号帖子 (thunder/)

├─> [召回源2] 向量相似内容 (phoenix/recsys_retrieval_model)

├─> [召回源3] 热门趋势

└─> [召回源4] 社交推荐(朋友的朋友)

|

50ms - 所有召回完成,得到2000个候选

召回源1:关注账号

// thunder/ 模块

let following_posts = thunder_service

.fetch_following_posts(user_id, 500)

.await?;

// 返回:用户关注的100个账号最近的500条帖子

召回源2:向量召回

# phoenix/recsys_retrieval_model.py

user_vector = model.encode_user(user_id) # 256维向量

similar_post_ids = faiss_index.search(user_vector, k=500) # ANN搜索

召回源3:热门趋势

// 基于用户历史行为,推断感兴趣的话题

let user_topics = ["AI", "科技", "创业"];

let trending_posts = trending_service

.get_trending_by_topics(user_topics, 500)

.await?;

召回源4:社交推荐

// 二度好友的帖子

let friends_of_friends = social_graph

.get_second_degree_connections(user_id)

.await?;

let their_posts = fetch_recent_posts(friends_of_friends, 500).await?;

步骤4:候选内容补全

// home-mixer/ 模块

// 此时只有post_id,需要补全完整信息

let post_ids: Vec<PostId> = candidates.iter()

.map(|c| c.post_id)

.collect();

// 批量查询(减少网络往返)

let hydrated_posts = content_store

.batch_get(post_ids) // 一次RPC获取所有数据

.await?;

// 补全作者信息

let author_ids: Vec<UserId> = hydrated_posts.iter()

.map(|p| p.author_id)

.collect();

let authors = author_service

.batch_get(author_ids)

.await?;

步骤5:特征计算

// 为每个候选计算实时特征

for candidate in &mut candidates {

// 内容特征

candidate.features.insert("text_length", candidate.text.len() as f64);

candidate.features.insert("has_image", if candidate.media.is_some() { 1.0 } else { 0.0 });

candidate.features.insert("author_verified", if candidate.author.verified { 1.0 } else { 0.0 });

// 时间特征

let age_hours = (Utc::now() - candidate.created_at).num_hours() as f64;

candidate.features.insert("age_hours", age_hours);

candidate.features.insert("is_recent", if age_hours < 24.0 { 1.0 } else { 0.0 });

// 互动特征

candidate.features.insert("like_count", candidate.like_count as f64);

candidate.features.insert("retweet_count", candidate.retweet_count as f64);

candidate.features.insert("reply_count", candidate.reply_count as f64);

// 用户-内容交互特征

let user_author_interaction = interaction_history

.get_interaction_count(user_id, candidate.author_id)

.await?;

candidate.features.insert("user_author_interaction", user_author_interaction as f64);

// 社交特征

let is_following = user_context.following_ids.contains(&candidate.author_id);

candidate.features.insert("is_following", if is_following { 1.0 } else { 0.0 });

let friends_liked_count = candidate.liked_by.iter()

.filter(|id| user_context.following_ids.contains(id))

.count();

candidate.features.insert("friends_liked_count", friends_liked_count as f64);

}

步骤6:模型打分(批量推理)

// 调用Python模型服务

let batch_size = 100;

let mut scored_candidates = Vec::new();

for chunk in candidates.chunks(batch_size) {

// 构造特征张量

let feature_tensor = build_feature_tensor(chunk);

// gRPC调用模型服务

let predictions = model_service

.predict(PredictRequest {

features: feature_tensor,

model_name: "recsys_model_v42".to_string(),

})

.await?;

// 解析预测结果

for (candidate, pred) in chunk.iter().zip(predictions.iter()) {

scored_candidates.push(ScoredCandidate {

candidate: candidate.clone(),

ctr_score: pred.ctr,

like_score: pred.like_rate,

retweet_score: pred.retweet_rate,

dwell_time_score: pred.dwell_time,

negative_score: pred.negative_rate,

final_score: compute_final_score(pred),

});

}

}

Python模型服务端

# phoenix/recsys_model.py

class ModelInferenceServer:

def __init__(self):

self.model = load_model("recsys_model_v42.pt")

self.model.eval()

self.model.cuda() # GPU推理

@torch.no_grad()

def predict(self, request):

# 解析特征

features = parse_features(request.features)

# 批量推理

with torch.cuda.amp.autocast(): # 混合精度加速

predictions = self.model(features)

return predictions.cpu().numpy()

步骤7:重排序

// 按最终得分排序

scored_candidates.sort_by(|a, b| {

b.final_score.partial_cmp(&a.final_score).unwrap()

});

// 应用多样性调整

let reranked = diversity_reranker.rerank(scored_candidates, user_context).await?;

多样性重排算法

pub struct DiversityReranker;

impl DiversityReranker {

pub fn rerank(

&self,

candidates: Vec<ScoredCandidate>,

context: &UserContext,

) -> Vec<ScoredCandidate> {

let mut result = Vec::new();

let mut author_count: HashMap<UserId, usize> = HashMap::new();

let mut topic_count: HashMap<String, usize> = HashMap::new();

for candidate in candidates {

// 检查作者多样性

let author_appearances = author_count

.get(&candidate.author_id)

.unwrap_or(&0);

if *author_appearances >= 3 {

// 同一作者最多出现3次

continue;

}

// 检查主题多样性

let mut topic_saturated = false;

for topic in &candidate.topics {

if topic_count.get(topic).unwrap_or(&0) >= 5 {

// 同一主题最多5条

topic_saturated = true;

break;

}

}

if topic_saturated {

continue;

}

// 添加到结果

result.push(candidate.clone());

*author_count.entry(candidate.author_id).or_insert(0) += 1;

for topic in &candidate.topics {

*topic_count.entry(topic.clone()).or_insert(0) += 1;

}

if result.len() >= 100 {

break;

}

}

result

}

}

步骤8:过滤

// 应用过滤器链

let mut filtered = reranked;

for filter in &self.filter_chain {

filtered = filtered.into_iter()

.filter(|candidate| filter.should_keep(candidate, &user_context))

.collect();

}

过滤器示例

// 1. 去重过滤器

let seen_post_ids = user_history.get_seen_posts(user_id, days=7).await?;

filtered.retain(|c| !seen_post_ids.contains(&c.post_id));

// 2. 屏蔽过滤器

let blocked_users = user_settings.get_blocked_users(user_id).await?;

filtered.retain(|c| !blocked_users.contains(&c.author_id));

// 3. 敏感内容过滤器

if !user_context.show_sensitive_content {

filtered.retain(|c| !c.is_sensitive);

}

// 4. 语言过滤器

let preferred_languages = user_context.preferred_languages;

filtered.retain(|c| preferred_languages.contains(&c.language));

步骤9:返回结果

// 截取前50条

let final_posts = filtered.into_iter()

.take(50)

.collect::<Vec<_>>();

// 异步记录曝光日志(不阻塞响应)

tokio::spawn(async move {

log_impressions(user_id, &final_posts).await;

});

// 返回给客户端

Ok(TimelineResponse {

posts: final_posts,

request_id,

generated_at: Utc::now(),

debug_info: if user_context.is_internal {

Some(DebugInfo {

candidate_count: 2000,

after_ranking: 1000,

after_filtering: 100,

latency_breakdown: latency_tracker.get_breakdown(),

})

} else {

None

},

})

完整时间线

0ms - 请求到达home-mixer

5ms - 获取用户上下文(Redis缓存)

10ms - 发起多路召回

60ms - 召回完成(2000个候选)

65ms - 候选内容补全(批量查询)

75ms - 特征计算

100ms - 模型推理(GPU批量)

105ms - 重排序

110ms - 过滤

115ms - 返回响应

总延迟:115ms(P99 < 200ms)

四、端到端深度学习的技术细节

1. 为什么抛弃手工特征?

传统推荐系统的痛点:

特征工程的噩梦

# 传统方法需要设计数百个特征

def extract_features(user, post):

features = {}

# 用户特征(50+个)

features['user_age'] = user.age

features['user_gender'] = encode_gender(user.gender)

features['user_follower_count'] = user.follower_count

features['user_following_count'] = user.following_count

features['user_post_count'] = user.post_count

features['user_avg_daily_active_time'] = user.avg_daily_active_time

features['user_registration_days'] = (now() - user.created_at).days

# ... 还有40多个

# 内容特征(50+个)

features['post_text_length'] = len(post.text)

features['post_has_image'] = 1 if post.image else 0

features['post_has_video'] = 1 if post.video else 0

features['post_has_link'] = 1 if post.link else 0

features['post_hashtag_count'] = len(post.hashtags)

features['post_mention_count'] = len(post.mentions)

features['post_age_hours'] = (now() - post.created_at).hours

# ... 还有40多个

# 交互特征(100+个)

features['user_author_interaction_count'] = count_interactions(user, post.author)

features['user_author_last_interaction_days'] = days_since_last_interaction(user, post.author)

features['user_topic_affinity'] = compute_topic_affinity(user, post.topics)

features['user_similar_posts_ctr'] = get_historical_ctr(user, similar_posts(post))

# ... 还有90多个

# 统计特征(50+个)

features['post_global_ctr'] = post.clicks / post.impressions

features['post_like_rate'] = post.likes / post.impressions

features['author_avg_engagement'] = author.total_engagement / author.post_count

# ... 还有40多个

return features # 总共250+个特征

问题:

- 人工成本高:需要领域专家花费数月设计特征

- 难以维护:新特征上线需要重新训练,老特征难以下线

- 表达能力受限:人类无法设计出所有有用的特征组合

- 跨领域迁移难:换个场景(如视频推荐)需要重新设计

2. 端到端学习的优势

# 现代端到端方法

class EndToEndRecommender(nn.Module):

def forward(self, user_raw_data, post_raw_data):

# 直接输入原始数据

user_embedding = self.user_encoder(

behavior_sequence=user_raw_data['recent_posts'], # 原始帖子ID序列

profile_text=user_raw_data['bio'], # 原始文本

social_graph=user_raw_data['following_ids'] # 原始图结构

)

post_embedding = self.post_encoder(

text=post_raw_data['text'], # 原始文本

image=post_raw_data['image_pixels'], # 原始图像

author_id=post_raw_data['author_id'] # 原始ID

)

# 模型自动学习所有有用的特征

interaction = self.interaction_layer(user_embedding, post_embedding)

prediction = self.output_head(interaction)

return prediction

优势:

- 自动特征学习:模型自己发现"用户在深夜更喜欢娱乐内容"这种模式

- 高阶特征组合:自动学习"年轻用户 × 科技话题 × 周末"这种复杂交互

- 持续进化:随着数据增长,模型自动变强

- 多模态融合:文本、图像、视频统一表征

3. 具体模型架构

用户编码器

class UserEncoder(nn.Module):

def __init__(self):

# 行为序列编码器(Transformer)

self.behavior_encoder = nn.TransformerEncoder(

nn.TransformerEncoderLayer(d_model=512, nhead=8),

num_layers=6

)

# 用户画像编码器(BERT)

self.profile_encoder = BertModel.from_pretrained('bert-base')

# 社交图编码器(GNN)

self.social_graph_encoder = GraphSAGE(

in_channels=128,

hidden_channels=256,

num_layers=3

)

# 融合层

self.fusion = nn.Linear(512 + 768 + 256, 512)

def forward(self, user_data):

# 1. 编码行为序列

# 用户最近交互的100条帖子

behavior_seq = user_data['recent_posts'] # [100]

behavior_embeddings = self.post_embedding_table(behavior_seq) # [100, 512]

# Transformer编码时序模式

behavior_repr = self.behavior_encoder(behavior_embeddings) # [100, 512]

behavior_repr = behavior_repr.mean(dim=0) # [512] 平均池化

# 2. 编码用户画像

profile_text = user_data['bio'] # "AI researcher, love sci-fi"

profile_repr = self.profile_encoder(profile_text).pooler_output # [768]

# 3. 编码社交图

# 构建用户的自我中心网络(ego network)

following_ids = user_data['following_ids'] # [500]

ego_graph = self.build_ego_graph(user_data['user_id'], following_ids)

social_repr = self.social_graph_encoder(ego_graph) # [256]

# 4. 融合多个表征

combined = torch.cat([behavior_repr, profile_repr, social_repr], dim=-1)

user_vector = self.fusion(combined) # [512]

return user_vector

内容编码器

class ContentEncoder(nn.Module):

def __init__(self):

# 文本编码器

self.text_encoder = BertModel.from_pretrained('bert-base')

# 图像编码器

self.image_encoder = ViTModel.from_pretrained('vit-base')

# 作者编码器

self.author_embedding = nn.Embedding(

num_embeddings=100_000_000, # 1亿用户

embedding_dim=128

)

# 多模态融合

self.fusion = nn.Linear(768 + 768 + 128, 512)

def forward(self, post_data):

# 1. 编码文本

text = post_data['text']

text_repr = self.text_encoder(text).pooler_output # [768]

# 2. 编码图像(如果有)

if post_data['image'] is not None:

image_repr = self.image_encoder(post_data['image']).pooler_output # [768]

else:

image_repr = torch.zeros(768)

# 3. 编码作者

author_id = post_data['author_id']

author_repr = self.author_embedding(author_id) # [128]

# 4. 融合

combined = torch.cat([text_repr, image_repr, author_repr], dim=-1)

content_vector = self.fusion(combined) # [512]

return content_vector

交互层与预测

class InteractionLayer(nn.Module):

def __init__(self):

# 交叉注意力机制

self.cross_attention = nn.MultiheadAttention(

embed_dim=512,

num_heads=8

)

# 深度交互网络

self.deep_interaction = nn.Sequential(

nn.Linear(512, 1024),

nn.ReLU(),

nn.Dropout(0.2),

nn.Linear(1024, 512),

nn.ReLU(),

nn.Dropout(0.2),

)

def forward(self, user_vector, content_vector):

# 交叉注意力:让用户和内容相互关注

attended_user, _ = self.cross_attention(

query=user_vector.unsqueeze(0),

key=content_vector.unsqueeze(0),

value=content_vector.unsqueeze(0)

)

# 深度交互

interaction = self.deep_interaction(attended_user.squeeze(0))

return interaction

class MultiTaskHead(nn.Module):

def __init__(self):

# 共享层

self.shared = nn.Linear(512, 256)

# 任务特定层

self.ctr_head = nn.Linear(256, 1)

self.like_head = nn.Linear(256, 1)

self.retweet_head = nn.Linear(256, 1)

self.dwell_time_head = nn.Linear(256, 1)

self.negative_head = nn.Linear(256, 1)

def forward(self, interaction):

shared_repr = F.relu(self.shared(interaction))

return {

'ctr': torch.sigmoid(self.ctr_head(shared_repr)),

'like_rate': torch.sigmoid(self.like_head(shared_repr)),

'retweet_rate': torch.sigmoid(self.retweet_head(shared_repr)),

'dwell_time': F.softplus(self.dwell_time_head(shared_repr)), # 正数输出

'negative_rate': torch.sigmoid(self.negative_head(shared_repr))

}

4. 训练策略

多任务学习损失函数

class MultiTaskLoss(nn.Module):

def __init__(self):

super().__init__()

# 自适应任务权重(Uncertainty Weighting)

self.log_vars = nn.Parameter(torch.zeros(5))

def forward(self, predictions, labels):

# 各任务的损失

ctr_loss = F.binary_cross_entropy(

predictions['ctr'],

labels['clicked']

)

like_loss = F.binary_cross_entropy(

predictions['like_rate'],

labels['liked']

)

retweet_loss = F.binary_cross_entropy(

predictions['retweet_rate'],

labels['retweeted']

)

# 停留时长用MSE损失

dwell_time_loss = F.mse_loss(

predictions['dwell_time'],

labels['dwell_time']

)

negative_loss = F.binary_cross_entropy(

predictions['negative_rate'],

labels['hidden_or_reported']

)

# 自适应加权

# 参考论文:Multi-Task Learning Using Uncertainty to Weigh Losses

precision_ctr = torch.exp(-self.log_vars[0])

precision_like = torch.exp(-self.log_vars[1])

precision_retweet = torch.exp(-self.log_vars[2])

precision_dwell = torch.exp(-self.log_vars[3])

precision_negative = torch.exp(-self.log_vars[4])

total_loss = (

precision_ctr * ctr_loss + self.log_vars[0] +

precision_like * like_loss + self.log_vars[1] +

precision_retweet * retweet_loss + self.log_vars[2] +

precision_dwell * dwell_time_loss + self.log_vars[3] +

precision_negative * negative_loss + self.log_vars[4]

)

return total_loss, {

'ctr_loss': ctr_loss.item(),

'like_loss': like_loss.item(),

'retweet_loss': retweet_loss.item(),

'dwell_time_loss': dwell_time_loss.item(),

'negative_loss': negative_loss.item(),

}

训练数据构造

class RecommendationDataset(Dataset):

"""

从用户行为日志构造训练样本

"""

def __init__(self, log_path, negative_sampling_ratio=4):

self.logs = self.load_logs(log_path)

self.negative_sampling_ratio = negative_sampling_ratio

def __getitem__(self, idx):

log = self.logs[idx]

# 正样本:用户实际交互的内容

positive_sample = {

'user_id': log['user_id'],

'post_id': log['post_id'],

'user_context': self.get_user_context(log['user_id'], log['timestamp']),

'post_content': self.get_post_content(log['post_id']),

'labels': {

'clicked': 1,

'liked': 1 if log['action'] == 'like' else 0,

'retweeted': 1 if log['action'] == 'retweet' else 0,

'dwell_time': log['dwell_time'],

'hidden_or_reported': 0

}

}

# 负样本:用户看到但没有交互的内容

negative_samples = []

for _ in range(self.negative_sampling_ratio):

# 从同一时间段的曝光中随机采样

neg_post_id = self.sample_negative(log['user_id'], log['timestamp'])

negative_samples.append({

'user_id': log['user_id'],

'post_id': neg_post_id,

'user_context': self.get_user_context(log['user_id'], log['timestamp']),

'post_content': self.get_post_content(neg_post_id),

'labels': {

'clicked': 0,

'liked': 0,

'retweeted': 0,

'dwell_time': 0,

'hidden_or_reported': 0

}

})

return positive_sample, negative_samples

def get_user_context(self, user_id, timestamp):

"""

获取用户在该时刻的上下文

"""

# 最近100条交互

recent_interactions = self.get_recent_interactions(

user_id,

before=timestamp,

limit=100

)

# 用户画像

user_profile = self.get_user_profile(user_id)

# 社交关系

following_ids = self.get_following(user_id)

return {

'recent_posts': [i['post_id'] for i in recent_interactions],

'bio': user_profile['bio'],

'following_ids': following_ids,

'user_id': user_id

}

分布式训练

class DistributedTrainer:

"""

使用PyTorch DDP进行多GPU/多机训练

"""

def __init__(self, model, rank, world_size):

self.rank = rank

self.world_size = world_size

# 模型并行化

self.model = DDP(

model.to(rank),

device_ids=[rank],

find_unused_parameters=True

)

# 优化器

self.optimizer = torch.optim.AdamW(

self.model.parameters(),

lr=1e-4,

weight_decay=0.01

)

# 学习率调度

self.scheduler = get_cosine_schedule_with_warmup(

self.optimizer,

num_warmup_steps=10000,

num_training_steps=1000000

)

# 混合精度训练

self.scaler = torch.cuda.amp.GradScaler()

def train_epoch(self, dataloader):

self.model.train()

for batch_idx, batch in enumerate(dataloader):

# 数据移到GPU

batch = {k: v.to(self.rank) for k, v in batch.items()}

# 混合精度前向传播

with torch.cuda.amp.autocast():

predictions = self.model(batch['user_data'], batch['post_data'])

loss, loss_dict = self.criterion(predictions, batch['labels'])

# 反向传播

self.optimizer.zero_grad()

self.scaler.scale(loss).backward()

# 梯度裁剪

self.scaler.unscale_(self.optimizer)

torch.nn.utils.clip_grad_norm_(self.model.parameters(), max_norm=1.0)

# 更新参数

self.scaler.step(self.optimizer)

self.scaler.update()

self.scheduler.step()

# 日志记录

if batch_idx % 100 == 0 and self.rank == 0:

print(f"Batch {batch_idx}, Loss: {loss.item():.4f}")

wandb.log({

'loss': loss.item(),

**loss_dict,

'lr': self.scheduler.get_last_lr()[0]

})

在线学习与模型更新

class OnlineLearningPipeline:

"""

持续学习流水线:每小时用最新数据更新模型

"""

def __init__(self):

self.current_model = load_model("recsys_model_v42.pt")

self.kafka_consumer = KafkaConsumer('user_interactions')

self.training_buffer = []

async def run(self):

while True:

# 1. 收集最近1小时的交互数据

recent_data = await self.collect_recent_interactions(hours=1)

# 2. 数据预处理

training_samples = self.preprocess(recent_data)

# 3. 增量训练

updated_model = self.incremental_train(

base_model=self.current_model,

new_data=training_samples,

epochs=1

)

# 4. 在线评估

metrics = await self.online_evaluation(updated_model)

# 5. 如果性能提升,部署新模型

if metrics['auc'] > self.current_metrics['auc']:

await self.deploy_model(updated_model, version="v42.1")

self.current_model = updated_model

print(f"模型更新成功,AUC: {metrics['auc']:.4f}")

# 6. 等待下一个周期

await asyncio.sleep(3600) # 1小时

def incremental_train(self, base_model, new_data, epochs):

"""

增量训练:在新数据上微调

"""

model = copy.deepcopy(base_model)

model.train()

optimizer = torch.optim.AdamW(

model.parameters(),

lr=1e-5 # 较小的学习率,避免灾难性遗忘

)

for epoch in range(epochs):

for batch in DataLoader(new_data, batch_size=1024):

predictions = model(batch['user_data'], batch['post_data'])

loss, _ = self.criterion(predictions, batch['labels'])

optimizer.zero_grad()

loss.backward()

optimizer.step()

return model

五、Grok 大模型的深度应用

1. 语义理解增强

传统方法 vs Grok方法

传统关键词匹配

# 传统方法:基于TF-IDF的相似度

def match_posts_old(user_interests, post):

user_keywords = set(user_interests.split())

post_keywords = set(post.text.split())

overlap = user_keywords & post_keywords

similarity = len(overlap) / len(user_keywords | post_keywords)

return similarity

# 问题:

# 用户兴趣:"机器学习"

# 帖子1:"深度学习取得突破" -> 相似度=0(没有共同词)

# 帖子2:"机器学习机器学习" -> 相似度高(但内容质量差)

Grok语义理解

class GrokSemanticMatcher:

def __init__(self):

self.grok_model = load_grok_model()

def match_posts(self, user_interests, post):

# Grok理解深层语义

user_intent_vector = self.grok_model.encode(

f"用户感兴趣的话题:{user_interests}"

)

post_semantic_vector = self.grok_model.encode(

f"帖子内容:{post.text}"

)

# 语义相似度

similarity = cosine_similarity(user_intent_vector, post_semantic_vector)

return similarity

# Grok能理解:

# "机器学习" 和 "深度学习" 是相关概念

# "神经网络" 和 "AI" 有语义联系

# "GPT" 和 "大语言模型" 指向同一事物

多语言理解

class MultilingualUnderstanding:

"""

Grok的跨语言能力

"""

def encode_post(self, post):

# Grok统一编码不同语言到同一语义空间

if post.language == 'zh':

text = "人工智能将改变世界"

elif post.language == 'en':

text = "AI will change the world"

elif post.language == 'ja':

text = "AIは世界を変える"

# 三种语言编码到相近的向量

semantic_vector = self.grok_model.encode(text)

return semantic_vector

def cross_lingual_recommendation(self, user_id):

"""

跨语言推荐:中文用户也能看到相关的英文内容

"""

user_interests = self.get_user_interests(user_id) # 中文

# 检索所有语言的相关内容

all_posts = self.search_all_languages(user_interests)

# Grok自动匹配语义相关的内容,无论语言

ranked_posts = self.rank_by_semantic_similarity(

user_interests,

all_posts

)

return ranked_posts

2. 内容质量评估

class ContentQualityScorer:

"""

使用Grok评估内容质量

"""

def __init__(self):

self.grok = load_grok_model()

def score_quality(self, post):

# 多维度质量评估

scores = {}

# 1. 信息密度

scores['informativeness'] = self.grok.analyze(

f"评估以下内容的信息量(0-1):{post.text}"

)

# 2. 可读性

scores['readability'] = self.grok.analyze(

f"评估以下内容的可读性(0-1):{post.text}"

)

# 3. 原创性

scores['originality'] = self.grok.analyze(

f"评估以下内容的原创性(0-1):{post.text}"

)

# 4. 事实准确性

scores['factuality'] = self.grok.check_facts(post.text)

# 5. 情感倾向

scores['sentiment'] = self.grok.analyze_sentiment(post.text)

# 6. 争议性

scores['controversy'] = self.grok.detect_controversy(post.text)

# 综合评分

quality_score = (

scores['informativeness'] * 0.3 +

scores['readability'] * 0.2 +

scores['originality'] * 0.2 +

scores['factuality'] * 0.3

)

# 降权争议内容

if scores['controversy'] > 0.8:

quality_score *= 0.5

return quality_score, scores

3. 个性化内容生成

class PersonalizedContentGenerator:

"""

使用Grok生成个性化推荐理由

"""

def generate_recommendation_reason(self, user, post):

# 分析为什么推荐这条内容

context = f"""

用户画像:

- 兴趣:{user.interests}

- 最近行为:{user.recent_actions}

- 关注的人:{user.following}

推荐内容:

- 作者:{post.author}

- 内容:{post.text}

- 话题:{post.topics}

请生成一句话解释为什么推荐这条内容给用户。

"""

reason = self.grok.generate(

prompt=context,

max_length=50,

temperature=0.7

)

return reason

# 示例输出:

# "因为你关注了@elonmusk,他刚刚发布了关于SpaceX的最新进展"

# "基于你对AI的兴趣,这篇关于GPT-5的讨论可能对你有帮助"

# "你的好友@alice点赞了这条关于量子计算的推文"

4. 实时趋势检测

class TrendDetector:

"""

使用Grok检测新兴趋势

"""

def detect_emerging_trends(self, time_window='1h'):

# 获取最近时间窗口的所有帖子

recent_posts = self.fetch_recent_posts(time_window)

# Grok聚类相似话题

topic_clusters = self.grok.cluster_topics(

[post.text for post in recent_posts]

)

trends = []

for cluster in topic_clusters:

# 计算话题热度

volume = len(cluster.posts)

growth_rate = self.compute_growth_rate(cluster)

# Grok生成话题摘要

summary = self.grok.summarize(

[post.text for post in cluster.posts],

max_length=100

)

# Grok提取关键实体

entities = self.grok.extract_entities(summary)

if growth_rate > 2.0: # 增长率超过200%

trends.append({

'topic': cluster.main_topic,

'summary': summary,

'entities': entities,

'volume': volume,

'growth_rate': growth_rate,

'representative_posts': cluster.top_posts[:5]

})

return sorted(trends, key=lambda x: x['growth_rate'], reverse=True)

5. 智能内容审核

class ContentModerator:

"""

使用Grok进行智能内容审核

"""

def moderate_content(self, post):

moderation_result = {}

# 1. 有害内容检测

harmful_categories = self.grok.detect_harmful_content(post.text)

moderation_result['harmful'] = harmful_categories

# 返回:{'hate_speech': 0.05, 'violence': 0.02, 'sexual': 0.01}

# 2. 虚假信息检测

if self.contains_factual_claims(post.text):

fact_check = self.grok.fact_check(post.text)

moderation_result['fact_check'] = fact_check

# 返回:{'claim': '疫苗导致自闭症', 'verdict': 'false', 'confidence': 0.95}

# 3. 垃圾内容检测

spam_score = self.grok.detect_spam(post.text)

moderation_result['spam_score'] = spam_score

# 4. 敏感话题检测

sensitive_topics = self.grok.detect_sensitive_topics(post.text)

moderation_result['sensitive_topics'] = sensitive_topics

# 返回:['政治', '宗教']

# 5. 决策

action = self.decide_action(moderation_result)

return action

def decide_action(self, moderation_result):

# 严重违规:删除

if any(score > 0.8 for score in moderation_result['harmful'].values()):

return 'remove'

# 虚假信息:添加警告标签

if moderation_result.get('fact_check', {}).get('verdict') == 'false':

return 'add_warning_label'

# 敏感话题:限制传播

if len(moderation_result['sensitive_topics']) > 0:

return 'limit_reach'

# 通过审核

return 'approve'

六、性能优化与工程实践

1. 模型推理优化

模型量化

class ModelQuantizer:

"""

将FP32模型量化到INT8,推理速度提升3-4倍

"""

def quantize_model(self, model, calibration_data):

# 动态量化(无需校准数据)

quantized_model = torch.quantization.quantize_dynamic(

model,

{torch.nn.Linear, torch.nn.LSTM},

dtype=torch.qint8

)

# 或静态量化(需要校准数据,精度更高)

model.qconfig = torch.quantization.get_default_qconfig('fbgemm')

torch.quantization.prepare(model, inplace=True)

# 校准

with torch.no_grad():

for batch in calibration_data:

model(batch)

torch.quantization.convert(model, inplace=True)

return quantized_model

def benchmark(self, original_model, quantized_model, test_data):

# 原始模型

start = time.time()

for batch in test_data:

original_model(batch)

original_time = time.time() - start

# 量化模型

start = time.time()

for batch in test_data:

quantized_model(batch)

quantized_time = time.time() - start

print(f"原始模型: {original_time:.2f}s")

print(f"量化模型: {quantized_time:.2f}s")

print(f"加速比: {original_time / quantized_time:.2f}x")

# 典型结果:

# 原始模型: 10.5s

# 量化模型: 2.8s

# 加速比: 3.75x

模型蒸馏

class ModelDistiller:

"""

将大模型蒸馏到小模型,保持性能的同时减少计算量

"""

def __init__(self, teacher_model, student_model):

self.teacher = teacher_model

self.student = student_model

self.temperature = 3.0

def distill(self, train_data, epochs=10):

self.teacher.eval()

self.student.train()

optimizer = torch.optim.AdamW(self.student.parameters(), lr=1e-4)

for epoch in range(epochs):

for batch in train_data:

# 教师模型的软标签

with torch.no_grad():

teacher_logits = self.teacher(batch['input'])

teacher_probs = F.softmax(teacher_logits / self.temperature, dim=-1)

# 学生模型的预测

student_logits = self.student(batch['input'])

student_probs = F.softmax(student_logits / self.temperature, dim=-1)

# 蒸馏损失(KL散度)

distill_loss = F.kl_div(

student_probs.log(),

teacher_probs,

reduction='batchmean'

) * (self.temperature ** 2)

# 真实标签损失

hard_loss = F.cross_entropy(student_logits, batch['label'])

# 组合损失

loss = 0.7 * distill_loss + 0.3 * hard_loss

optimizer.zero_grad()

loss.backward()

optimizer.step()

return self.student

# 示例:

# 教师模型:12层Transformer,300M参数,推理10ms

# 学生模型:4层Transformer,50M参数,推理2ms

# 性能保持:AUC从0.85降到0.83(可接受)

批处理优化

// Rust实现的高效批处理推理

pub struct BatchInferenceEngine {

model_client: Arc<ModelClient>,

batch_size: usize,

max_wait_time: Duration,

pending_requests: Arc<Mutex<Vec<InferenceRequest>>>,

}

impl BatchInferenceEngine {

pub async fn infer(&self, request: InferenceRequest) -> Result<Prediction> {

// 将请求加入待处理队列

let (tx, rx) = oneshot::channel();

{

let mut pending = self.pending_requests.lock().await;

pending.push(InferenceRequest {

data: request.data,

response_channel: tx,

});

}

// 等待批处理结果

rx.await?

}

async fn batch_processor(&self) {

loop {

// 等待直到有足够的请求或超时

tokio::time::sleep(self.max_wait_time).await;

let mut pending = self.pending_requests.lock().await;

if pending.is_empty() {

continue;

}

// 取出一批请求

let batch: Vec<_> = pending

.drain(..pending.len().min(self.batch_size))

.collect();

drop(pending); // 释放锁

// 批量推理

let inputs = batch.iter()

.map(|req| req.data.clone())

.collect::<Vec<_>>();

let predictions = self.model_client

.batch_predict(inputs)

.await

.expect("Batch inference failed");

// 返回结果

for (request, prediction) in batch.into_iter().zip(predictions) {

let _ = request.response_channel.send(prediction);

}

}

}

}

// 效果:

// 单个请求推理:5ms

// 批量推理(batch=64):每个请求平均0.5ms(10倍加速)

2. 缓存策略

pub struct MultiLevelCache {

// L1: 本地内存缓存(最快)

local_cache: Arc<RwLock<LruCache<String, CachedItem>>>,

// L2: Redis缓存(中等速度)

redis_client: Arc<RedisClient>,

// L3: 数据库(最慢)

database: Arc<Database>,

}

impl MultiLevelCache {

pub async fn get(&self, key: &str) -> Result<Option<CachedItem>> {

// 1. 尝试L1缓存

{

let cache = self.local_cache.read().await;

if let Some(item) = cache.get(key) {

metrics::counter!("cache_hit_l1").increment(1);

return Ok(Some(item.clone()));

}

}

// 2. 尝试L2缓存

if let Some(item) = self.redis_client.get(key).await? {

metrics::counter!("cache_hit_l2").increment(1);

// 回填L1

let mut cache = self.local_cache.write().await;

cache.put(key.to_string(), item.clone());

return Ok(Some(item));

}

// 3. 查询数据库

if let Some(item) = self.database.query(key).await? {

metrics::counter!("cache_miss").increment(1);

// 回填L2和L1

self.redis_client.set(key, &item, ttl=3600).await?;

let mut cache = self.local_cache.write().await;

cache.put(key.to_string(), item.clone());

return Ok(Some(item));

}

Ok(None)

}

}

// 性能对比:

// L1命中:0.01ms

// L2命中:1ms

// L3查询:10ms

// 缓存命中率:L1=60%, L2=30%, L3=10%

// 平均延迟:0.01*0.6 + 1*0.3 + 10*0.1 = 1.306ms

3. 数据库优化

-- 用户行为表分区(按时间)

CREATE TABLE user_interactions (

user_id BIGINT,

post_id BIGINT,

action_type VARCHAR(20),

timestamp TIMESTAMP,

...

) PARTITION BY RANGE (timestamp) (

PARTITION p_2024_01 VALUES LESS THAN ('2024-02-01'),

PARTITION p_2024_02 VALUES LESS THAN ('2024-03-01'),

...

);

-- 索引优化

CREATE INDEX idx_user_time ON user_interactions(user_id, timestamp DESC);

CREATE INDEX idx_post_time ON user_interactions(post_id, timestamp DESC);

-- 物化视图(预计算热门内容)

CREATE MATERIALIZED VIEW trending_posts AS

SELECT

post_id,

COUNT(*) as interaction_count,

SUM(CASE WHEN action_type = 'like' THEN 1 ELSE 0 END) as like_count,

SUM(CASE WHEN action_type = 'retweet' THEN 1 ELSE 0 END) as retweet_count

FROM user_interactions

WHERE timestamp > NOW() - INTERVAL '24 hours'

GROUP BY post_id

HAVING interaction_count > 100

ORDER BY interaction_count DESC;

-- 定期刷新

REFRESH MATERIALIZED VIEW CONCURRENTLY trending_posts;

4. 服务降级与限流

pub struct RateLimiter {

redis: Arc<RedisClient>,

}

impl RateLimiter {

pub async fn check_rate_limit(

&self,

user_id: UserId,

limit: u32,

window: Duration,

) -> Result<bool> {

let key = format!("rate_limit:{}:{}", user_id, window.as_secs());

// 使用Redis的INCR + EXPIRE实现滑动窗口

let count: u32 = self.redis

.incr(&key, 1)

.await?;

if count == 1 {

self.redis.expire(&key, window.as_secs() as usize).await?;

}

Ok(count <= limit)

}

}

pub struct CircuitBreaker {

failure_threshold: u32,

timeout: Duration,

state: Arc<RwLock<CircuitState>>,

}

enum CircuitState {

Closed, // 正常

Open { until: Instant }, // 熔断中

HalfOpen, // 尝试恢复

}

impl CircuitBreaker {

pub async fn call<F, T>(&self, f: F) -> Result<T>

where

F: Future<Output = Result<T>>,

{

// 检查熔断器状态

{

let state = self.state.read().await;

match *state {

CircuitState::Open { until } => {

if Instant::now() < until {

return Err(anyhow!("Circuit breaker is open"));

}

}

_ => {}

}

}

// 执行调用

match f.await {

Ok(result) => {

self.on_success().await;

Ok(result)

}

Err(e) => {

self.on_failure().await;

Err(e)

}

}

}

async fn on_failure(&self) {

let mut state = self.state.write().await;

// 失败次数超过阈值,打开熔断器

*state = CircuitState::Open {

until: Instant::now() + self.timeout,

};

}

}

七、A/B测试与效果评估

1. 在线实验平台

pub struct ExperimentPlatform {

experiment_config: Arc<RwLock<HashMap<String, Experiment>>>,

assignment_cache: Arc<RwLock<HashMap<UserId, HashMap<String, String>>>>,

}

pub struct Experiment {

pub name: String,

pub variants: Vec<Variant>,

pub traffic_allocation: f64, // 0.0-1.0

pub start_time: DateTime<Utc>,

pub end_time: DateTime<Utc>,

}

pub struct Variant {

pub name: String,

pub weight: f64, // 权重

pub config: serde_json::Value, // 变体配置

}

impl ExperimentPlatform {

pub async fn get_variant(

&self,

user_id: UserId,

experiment_name: &str,

) -> Option<Variant> {

// 1. 检查缓存

{

let cache = self.assignment_cache.read().await;

if let Some(assignments) = cache.get(&user_id) {

if let Some(variant_name) = assignments.get(experiment_name) {

return self.get_variant_by_name(experiment_name, variant_name).await;

}

}

}

// 2. 获取实验配置

let experiments = self.experiment_config.read().await;

let experiment = experiments.get(experiment_name)?;

// 3. 检查实验是否进行中

let now = Utc::now();

if now < experiment.start_time || now > experiment.end_time {

return None;

}

// 4. 流量分配(一致性哈希)

let hash = self.hash_user_experiment(user_id, experiment_name);

let traffic_bucket = (hash % 10000) as f64 / 10000.0;

if traffic_bucket > experiment.traffic_allocation {

return None; // 用户不在实验流量中

}

// 5. 变体分配

let variant_bucket = ((hash / 10000) % 10000) as f64 / 10000.0;

let mut cumulative_weight = 0.0;

for variant in &experiment.variants {

cumulative_weight += variant.weight;

if variant_bucket < cumulative_weight {

// 缓存分配结果

let mut cache = self.assignment_cache.write().await;

cache.entry(user_id)

.or_insert_with(HashMap::new)

.insert(experiment_name.to_string(), variant.name.clone());

return Some(variant.clone());

}

}

None

}

fn hash_user_experiment(&self, user_id: UserId, experiment_name: &str) -> u64 {

use std::collections::hash_map::DefaultHasher;

use std::hash::{Hash, Hasher};

let mut hasher = DefaultHasher::new();

user_id.hash(&mut hasher);

experiment_name.hash(&mut hasher);

hasher.finish()

}

}

2. 实验配置示例

{

"experiment_name": "new_ranking_model_v43",

"description": "测试新的多任务学习模型",

"variants": [

{

"name": "control",

"weight": 0.5,

"config": {

"model_version": "v42",

"ranking_weights": {

"ctr": 0.3,

"like": 0.2,

"retweet": 0.2,

"dwell_time": 0.2,

"negative": -0.1

}

}

},

{

"name": "treatment",

"weight": 0.5,

"config": {

"model_version": "v43",

"ranking_weights": {

"ctr": 0.25,

"like": 0.25,

"retweet": 0.25,

"dwell_time": 0.25,

"negative": -0.2

}

}

}

],

"traffic_allocation": 0.1,

"start_time": "2024-01-15T00:00:00Z",

"end_time": "2024-01-22T00:00:00Z",

"metrics": [

"ctr",

"engagement_rate",

"session_duration",

"dau",

"retention_d1"

]

}

3. 指标计算

class MetricsCalculator:

"""

计算实验指标

"""

def calculate_experiment_metrics(self, experiment_name, variant_name):

# 获取该变体的用户

users = self.get_experiment_users(experiment_name, variant_name)

# 获取用户行为数据

interactions = self.get_user_interactions(

users,

start_time=experiment.start_time,

end_time=experiment.end_time

)

metrics = {}

# 1. CTR(点击率)

impressions = interactions[interactions['action'] == 'impression']

clicks = interactions[interactions['action'] == 'click']

metrics['ctr'] = len(clicks) / len(impressions)

# 2. 互动率

engagements = interactions[interactions['action'].isin(['like', 'retweet', 'reply'])]

metrics['engagement_rate'] = len(engagements) / len(impressions)

# 3. 人均会话时长

sessions = self.aggregate_sessions(interactions)

metrics['avg_session_duration'] = sessions['duration'].mean()

# 4. DAU(日活跃用户)

daily_active = interactions.groupby('date')['user_id'].nunique()

metrics['dau'] = daily_active.mean()

# 5. 次日留存

first_day_users = set(interactions[interactions['date'] == experiment.start_time.date()]['user_id'])

second_day_users = set(interactions[interactions['date'] == experiment.start_time.date() + timedelta(days=1)]['user_id'])

metrics['retention_d1'] = len(first_day_users & second_day_users) / len(first_day_users)

# 6. 负反馈率

negative_actions = interactions[interactions['action'].isin(['hide', 'report', 'block'])]

metrics['negative_rate'] = len(negative_actions) / len(impressions)

return metrics

def statistical_test(self, control_metrics, treatment_metrics):

"""

统计显著性检验

"""

results = {}

for metric_name in control_metrics.keys():

control_values = control_metrics[metric_name]

treatment_values = treatment_metrics[metric_name]

# t检验

t_stat, p_value = stats.ttest_ind(control_values, treatment_values)

# 计算置信区间

diff = treatment_values.mean() - control_values.mean()

se = np.sqrt(control_values.var()/len(control_values) +

treatment_values.var()/len(treatment_values))

ci_lower = diff - 1.96 * se

ci_upper = diff + 1.96 * se

# 相对提升

relative_lift = (treatment_values.mean() - control_values.mean()) / control_values.mean()

results[metric_name] = {

'control_mean': control_values.mean(),

'treatment_mean': treatment_values.mean(),

'absolute_diff': diff,

'relative_lift': relative_lift,

'p_value': p_value,

'is_significant': p_value < 0.05,

'confidence_interval': (ci_lower, ci_upper)

}

return results

4. 实验报告生成

class ExperimentReport:

def generate_report(self, experiment_name):

experiment = self.get_experiment(experiment_name)

# 计算各变体指标

control_metrics = self.calculate_metrics(experiment, 'control')

treatment_metrics = self.calculate_metrics(experiment, 'treatment')

# 统计检验

test_results = self.statistical_test(control_metrics, treatment_metrics)

# 生成报告

report = f"""

# 实验报告:{experiment_name}

## 实验配置

- 开始时间:{experiment.start_time}

- 结束时间:{experiment.end_time}

- 流量分配:{experiment.traffic_allocation * 100}%

- 参与用户数:{len(control_metrics['users']) + len(treatment_metrics['users'])}

## 核心指标对比

| 指标 | 对照组 | 实验组 | 绝对差异 | 相对提升 | P值 | 显著性 |

|------|--------|--------|----------|----------|-----|--------|

"""

for metric_name, result in test_results.items():

significance = "✅" if result['is_significant'] else "❌"

report += f"| {metric_name} | {result['control_mean']:.4f} | {result['treatment_mean']:.4f} | {result['absolute_diff']:.4f} | {result['relative_lift']*100:.2f}% | {result['p_value']:.4f} | {significance} |\n"

report += f"""

## 详细分析

### CTR(点击率)

- 对照组:{test_results['ctr']['control_mean']:.2%}

- 实验组:{test_results['ctr']['treatment_mean']:.2%}

- **提升:{test_results['ctr']['relative_lift']*100:.2f}%** {'(显著)' if test_results['ctr']['is_significant'] else '(不显著)'}

### 互动率

- 对照组:{test_results['engagement_rate']['control_mean']:.2%}

- 实验组:{test_results['engagement_rate']['treatment_mean']:.2%}

- **提升:{test_results['engagement_rate']['relative_lift']*100:.2f}%** {'(显著)' if test_results['engagement_rate']['is_significant'] else '(不显著)'}

### 负反馈率

- 对照组:{test_results['negative_rate']['control_mean']:.2%}

- 实验组:{test_results['negative_rate']['treatment_mean']:.2%}

- **变化:{test_results['negative_rate']['relative_lift']*100:.2f}%** {'(显著)' if test_results['negative_rate']['is_significant'] else '(不显著)'}

## 结论

"""

# 自动生成结论

if test_results['ctr']['is_significant'] and test_results['ctr']['relative_lift'] > 0:

if test_results['negative_rate']['relative_lift'] < 0.05: # 负反馈没有明显增加

report += "✅ **建议全量上线**:实验组在CTR上有显著提升,且没有增加负反馈。\n"

else:

report += "⚠️ **谨慎上线**:实验组CTR提升,但负反馈率也有所增加,需要进一步优化。\n"

else:

report += "❌ **不建议上线**:实验组没有显著改善核心指标。\n"

return report

八、系统监控与可观测性

1. 指标监控

pub struct MetricsCollector {

prometheus_registry: Registry,

}

impl MetricsCollector {

pub fn new() -> Self {

let registry = Registry::new();

// 注册各种指标

register_counter!("recommendation_requests_total");

register_histogram!("recommendation_latency_ms");

register_gauge!("active_users");

register_counter!("model_inference_total");

register_histogram!("model_inference_latency_ms");

Self {

prometheus_registry: registry,

}

}

pub fn record_request(&self, latency_ms: f64, status: &str) {

counter!("recommendation_requests_total", "status" => status).increment(1);

histogram!("recommendation_latency_ms").record(latency_ms);

}

pub fn record_model_inference(&self, model_name: &str, latency_ms: f64) {

counter!("model_inference_total", "model" => model_name).increment(1);

histogram!("model_inference_latency_ms", "model" => model_name).record(latency_ms);

}

}

2. 分布式追踪

use opentelemetry::trace::{Tracer, Span};

pub async fn handle_recommendation_request(

user_id: UserId,

tracer: &impl Tracer,

) -> Result<Vec<Post>> {

// 创建根Span

let mut span = tracer.start("handle_recommendation");

span.set_attribute(KeyValue::new("user_id", user_id.to_string()));

// 召回阶段

let candidates = {

let mut recall_span = tracer.start_with_context("multi_source_recall", &span.context());

let result = multi_source_recall(user_id).await?;

recall_span.set_attribute(KeyValue::new("candidate_count", result.len() as i64));

recall_span.end();

result

};

// 精排阶段

let ranked = {

let mut ranking_span = tracer.start_with_context("ranking", &span.context());

let result = ranking_model.predict(candidates).await?;

ranking_span.set_attribute(KeyValue::new("ranked_count", result.len() as i64));

ranking_span.end();

result

};

// 过滤阶段

let filtered = {

let mut filter_span = tracer.start_with_context("filtering", &span.context());

let result = apply_filters(ranked).await?;

filter_span.set_attribute(KeyValue::new("final_count", result.len() as i64));

filter_span.end();

result

};

span.end();

Ok(filtered)

}

// Jaeger UI中可以看到完整的调用链:

// handle_recommendation (115ms)

// ├─ multi_source_recall (60ms)

// │ ├─ following_recall (20ms)

// │ ├─ vector_recall (35ms)

// │ └─ trending_recall (15ms)

// ├─ ranking (40ms)

// │ ├─ feature_extraction (10ms)

// │ └─ model_inference (28ms)

// └─ filtering (15ms)

3. 告警系统

# Prometheus告警规则

groups:

- name: recommendation_system

rules:

# 延迟告警

- alert: HighLatency

expr: histogram_quantile(0.99, recommendation_latency_ms) > 500

for: 5m

labels:

severity: warning

annotations:

summary: "推荐系统P99延迟过高"

description: "P99延迟 {{ $value }}ms,超过500ms阈值"

# 错误率告警

- alert: HighErrorRate

expr: rate(recommendation_requests_total{status="error"}[5m]) > 0.01

for: 5m

labels:

severity: critical

annotations:

summary: "推荐系统错误率过高"

description: "错误率 {{ $value }},超过1%阈值"

# 模型推理失败告警

- alert: ModelInferenceFailure

expr: rate(model_inference_total{status="failed"}[5m]) > 0.05

for: 2m

labels:

severity: critical

annotations:

summary: "模型推理失败率过高"

description: "模型 {{ $labels.model }} 失败率 {{ $value }}"

# 缓存命中率告警

- alert: LowCacheHitRate

expr: rate(cache_hit_total[5m]) / rate(cache_requests_total[5m]) < 0.7

for: 10m

labels:

severity: warning

annotations:

summary: "缓存命中率过低"

description: "命中率 {{ $value }},低于70%阈值"

九、总结与展望

X推荐算法的核心优势

- 技术栈创新:Rust+Python的组合兼顾性能与灵活性

- 算法先进性:端到端深度学习+大模型赋能

- 工程化水平:完善的监控、实验、降级机制

- 开源透明:Apache 2.0许可,接受社区监督

与业界对比

| 维度 | X | TikTok | YouTube | Meta |

|---|---|---|---|---|

| 主要语言 | Rust+Python | C++/Go+Python | C++/Python | C++/Python |

| 模型范式 | 端到端深度学习 | 端到端深度学习 | 混合(特征+深度) | 端到端深度学习 |

| 大模型应用 | Grok深度集成 | 有限应用 | 有限应用 | Llama集成 |

| 开源程度 | 核心算法开源 | 不开源 | 部分开源 | 部分开源 |

| 实时性 | 秒级 | 秒级 | 分钟级 | 秒级 |

未来发展方向

- 更强的个性化:利用Grok实现真正的"千人千面"

- 多模态理解:统一处理文本、图像、视频、音频

- 因果推断:从相关性到因果性,理解用户真实偏好

- 联邦学习:在保护隐私的前提下利用用户数据

- 强化学习:长期优化用户体验,而非短期指标

对行业的启示

X的推荐算法开源,为整个行业提供了宝贵的参考:

- 技术选型:Rust在推荐系统中的成功应用

- 算法范式:端到端学习是大势所趋

- 大模型融合:LLM不仅是聊天工具,更是推荐系统的增强器

- 透明度:开源有助于建立用户信任和行业标准

这套系统代表了当前推荐系统的最高水平,其架构设计、算法选择、工程实践都值得深入学习和借鉴。

更多推荐

已为社区贡献81条内容

已为社区贡献81条内容

所有评论(0)