51. Kubernetes Deployment

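Kubernetes container orchestration platform: features and deployment guide. Summary: this article introduces the core features of Kubernetes (k8s) and how to deploy it. k8s offers seven major features, including self-healing, elastic scaling, rolling updates, and service discovery, and uses a master/worker architecture to manage the container lifecycle. The current version, 1.28, uses containerd as its default runtime. The detailed deployment steps cover upgrading the kernel, host configuration, installing base packages, disabling security mechanisms, disabling the swap partition, tuning kernel parameters, time synchronization, and configuring IPVS. It focuses on co…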


kubernetes

Kubernetes features

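- Self-healing: restarts failed containers, and replaces and recreates containers when a node fails
- Elastic scaling: scales applications out and in quickly, with a command, through the UI, or automatically based on CPU usage
- Automated rollouts and rollbacks: k8s updates applications with a rolling-update strategy
- Service discovery and load balancing: k8s provides a single, unified access entry point for multiple containers
- Secret and configuration management: manages sensitive data and application configuration, and delivers one unified configuration to workloads on all nodes
- Storage orchestration: mounts external storage systems
- Batch execution: provides one-off jobs and scheduled jobs for batch data processing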

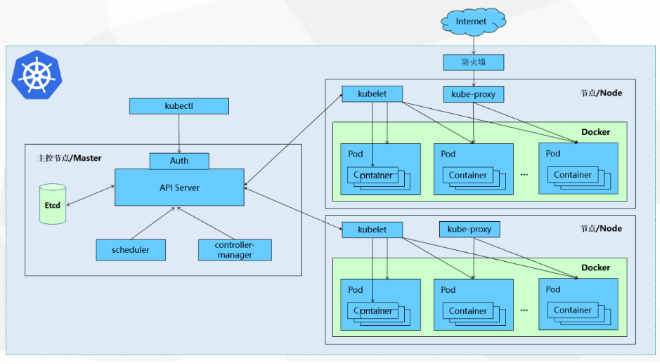

Kubernetes basic architecture

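The Kubernetes environment deployed here consists of one master and two worker nodes. Users operate the cluster from the master node with the kubectl command line, after authenticating against the API server. On the master, the scheduler evaluates node resources, selects a suitable node for each new Pod, and records that decision through the API server. On each worker node, the kubelet agent (which manages the Pod lifecycle) picks up the assignment from the API server and creates the containers; a Pod is a group of one or more containers. External traffic reaching the containers passes through the host's firewall rules and kube-proxy, which provides layer-4 load balancing. The master also runs the controller-manager, whose controllers manage the lifecycle of nodes and other resources. etcd, a distributed key-value store that usually runs on the master node, stores all of the cluster's data.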

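Versions

1.14 - 1.19: Docker is the default container runtime (through the built-in dockershim)

1.20 - 1.23: both Docker and containerd are supported (containerd is a CNCF project); dockershim is deprecated starting with 1.20

1.24 and later: dockershim is removed and containerd is the default runtime

This tutorial uses version 1.28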

Deploying Kubernetes with containerd

| Node name | IP address |
|---|---|
| k8s-master | 192.168.18.132 |
| k8s-node01 | 192.168.18.133 |
| k8s-node02 | 192.168.18.138 |

Initial configuration (all nodes)

Upgrade the kernel

# Unless stated otherwise, every step below is performed on all nodes

yum update -y kernel && reboot

Set the hostname and the hosts file

hostnamectl set-hostname k8s-master   # run on the master

hostnamectl set-hostname k8s-node01   # run on node 1

hostnamectl set-hostname k8s-node02   # run on node 2

cat >>/etc/hosts<<EOF

192.168.18.132 k8s-master

192.168.18.133 k8s-node01

192.168.18.138 k8s-node02

EOF

# Ping the other hosts by hostname to confirm the entries work
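Re-running the `cat >>` step above appends the same lines to /etc/hosts again. A minimal idempotent alternative, sketched under the assumption that bash and grep are available; `add_hosts_entries` is a hypothetical helper, not part of any tool:

```shell
# Hypothetical helper: append "IP hostname" lines to a hosts file,
# skipping any line that is already present (safe to re-run).
add_hosts_entries() {
  local hosts_file="$1"
  shift
  local entry
  for entry in "$@"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || printf '%s\n' "$entry" >> "$hosts_file"
  done
}

# Example, using the node table above:
# add_hosts_entries /etc/hosts "192.168.18.132 k8s-master" \
#   "192.168.18.133 k8s-node01" "192.168.18.138 k8s-node02"
```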

Install base packages

yum -y install vim lrzsz unzip wget net-tools tree bash-completion \
conntrack ntpdate ntp ipvsadm ipset iptables \
curl sysstat libseccomp git psmisc telnet gcc gcc-c++ make

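Disable the firewall and the kernel security mechanism (SELinux)

systemctl disable firewalld --now

vim /etc/selinux/config

# change SELINUX=enforcing to SELINUX=disabled (takes effect after a reboot)

setenforce 0   # switch to permissive mode right away for the current boot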
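The manual vim edit can also be done non-interactively. A sketch assuming the stock /etc/selinux/config layout with a `SELINUX=` line; `disable_selinux_config` is a hypothetical name. Anchoring on `^SELINUX=` leaves `SELINUXTYPE=` and the comment lines untouched:

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file.
# Only the line that starts with "SELINUX=" is changed; SELINUXTYPE= is kept.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# disable_selinux_config   # edits /etc/selinux/config (effective after reboot)
# setenforce 0             # permissive for the current boot
# getenforce               # Permissive now, Disabled after a reboot
```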

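Disable the swap partition

Swap has a severely negative effect on performance, and by default the kubelet refuses to start while swap is enabled, so Kubernetes requires swap to be disabled on every node.

Keeping everything in RAM speeds up data access and prevents memory from being paged out to swap, which would degrade performance.

# Disable the persistent swap mount

vim /etc/fstab

Comment out the swap mount entry

# Turn swap off immediately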

swapoff -a
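Commenting out the swap line can likewise be scripted. A sketch, assuming GNU sed and an fstab whose swap entries have `swap` as a whitespace-separated field; `comment_out_swap` is a hypothetical helper. Lines that already start with `#` are skipped, so running it twice is harmless:

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Match lines not starting with "#" that contain a " swap " field, prefix "#"
  sed -E -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# comment_out_swap    # persistent: edit /etc/fstab
# swapoff -a          # immediate: turn off all swap now
# cat /proc/swaps     # only the header line remains once swap is off
```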

Stop NetworkManager (optional)

systemctl stop NetworkManager

systemctl disable NetworkManager

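Tune kernel parameters

cat >/etc/sysctl.d/kubernetes.conf<<EOF

# Let bridged traffic pass through iptables and ip6tables, so packets crossing a Linux bridge are filtered

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

# Enable routing: forward IPv4 packets

net.ipv4.ip_forward=1

# Avoid using swap as far as possible, to improve k8s performance

vm.swappiness=0

# Do not check whether enough physical memory is available (always allow overcommit)

vm.overcommit_memory=1

EOF

Apply immediately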

sysctl --system
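One caveat: the two net.bridge keys only exist after the br_netfilter module is loaded (done later with modprobe br_netfilter in the containerd section), so `sysctl --system` may report them as missing at this point. A sketch for checking the live values afterwards; `check_k8s_sysctl` is a hypothetical helper, and its optional argument exists only so it can be pointed at a test directory instead of /proc/sys:

```shell
# Hypothetical check: compare live kernel values with kubernetes.conf.
# Arg 1 (optional): sysctl root, /proc/sys by default.
check_k8s_sysctl() {
  local root="${1:-/proc/sys}" rc=0 key want path have
  while read -r key want; do
    path="$root/${key//.//}"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ ! -r "$path" ]; then
      echo "MISSING $key (is br_netfilter loaded?)"
      rc=1
      continue
    fi
    have=$(cat "$path")
    if [ "$have" = "$want" ]; then
      echo "OK $key=$have"
    else
      echo "BAD $key=$have (want $want)"
      rc=1
    fi
  done <<'EOF'
net.bridge.bridge-nf-call-iptables 1
net.bridge.bridge-nf-call-ip6tables 1
net.ipv4.ip_forward 1
vm.swappiness 0
vm.overcommit_memory 1
EOF
  return "$rc"
}

# check_k8s_sysctl   # prints OK/BAD/MISSING per key; non-zero exit on any problem
```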

Configure time synchronization

yum -y install chrony

systemctl restart chronyd

chronyc sources -v

hwclock -s   # set the system clock from the hardware clock

Configure IPVS

yum -y install ipvsadm ipset sysstat conntrack libseccomp   # already installed with the base packages

# Load the IPVS modules and the other required kernel modules at boot

cat >>/etc/modules-load.d/ipvs.conf<<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

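# Kernel < 4.19: nf_conntrack_ipv4; kernel 4.19 and later: nf_conntrack (this VM needs nf_conntrack)

# Keep comments on their own line: modules-load.d files do not support trailing comments

nf_conntrack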

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

overlay

br_netfilter

EOF

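In Linux kernel 4.19 and later, nf_conntrack_ipv4 has been merged into nf_conntrack.

nf_conntrack is the kernel's connection-tracking module. It tracks the state of network connections (setup, maintenance, and teardown) and handles the packets that belong to them; firewalling, network address translation (NAT), and load balancing all depend on it.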
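Which of the two names applies depends on the kernel. A heuristic sketch that picks the module name from the kernel version string; `conntrack_module` is a hypothetical helper, it assumes GNU `sort -V`, and because vendor kernels backport changes, confirm the result with `modinfo` before relying on it:

```shell
# Hypothetical helper: print the conntrack module name for a kernel version.
# Heuristic only: compares the upstream version number against 4.19.
conntrack_module() {
  local kver="${1:-$(uname -r)}"
  local base="${kver%%-*}"   # 3.10.0-1160.el7.x86_64 -> 3.10.0
  # sort -V lists the lower version first; if that is 4.19, base >= 4.19
  if [ "$(printf '%s\n' 4.19 "$base" | sort -V | head -n 1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# conntrack_module                   # uses the running kernel
# modinfo "$(conntrack_module)"      # confirm the module really exists
```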

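Restart the module-loading service

systemctl restart systemd-modules-load

Check the loaded kernel modules

lsmod | grep -e ip_vs -e nf_conntrack

# Expect about 8 matching lines; 6 is also normal on a clean environment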
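Counting grep matches is a rough check. A sketch that instead reports which of the expected modules are absent; `missing_modules` is a hypothetical helper reading `lsmod` output on standard input. Modules compiled into the kernel never appear in lsmod, so treat a reported name as something to investigate rather than proof of failure:

```shell
# Hypothetical helper: read `lsmod` output on stdin and print every module
# named as an argument that is not in the first column. Returns 1 if any are missing.
missing_modules() {
  local loaded m rc=0
  # Skip lsmod's "Module  Size  Used by" header; keep only module names
  loaded=$(awk '$1 != "Module" { print $1 }')
  for m in "$@"; do
    if ! printf '%s\n' "$loaded" | grep -qxF "$m"; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}

# lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack br_netfilter overlay
```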

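Install containerd

containerd is a high-level container runtime, also called a container manager. Put simply, it is a daemon that manages the entire container lifecycle on a single host: creating, starting, and stopping containers, pulling and storing images, configuring mounts, networking, and so on.

containerd is designed to be easily embedded into larger systems. Docker Engine uses containerd to run containers, and Kubernetes can use containerd through the CRI to manage the containers on a single node.

Specify the kernel modules containerd needs loaded at boot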

cat >>/etc/modules-load.d/containerd.conf <<EOF

overlay

br_netfilter

EOF

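Load the modules now

modprobe overlay

modprobe br_netfilter

Apply the sysctl settings again (the net.bridge.* keys exist only once br_netfilter is loaded)

sysctl --system

Install dependency packages

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Docker package repository (it also provides the containerd.io package)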

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum clean all

yum list

List the available containerd versions

yum list containerd.io --showduplicates | sort -r

Install a specific containerd version

yum -y install containerd.io-1.6.16

Generate the containerd configuration file

mkdir -p /etc/containerd

containerd config default >/etc/containerd/config.toml

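Edit the containerd configuration file

# Switch containerd's cgroup driver to systemd

sed -i '/SystemdCgroup/s/false/true/g' /etc/containerd/config.toml

# (this replaces SystemdCgroup = false with true)

# Change the sandbox (pause) image address, around line 61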

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
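Both config.toml edits (SystemdCgroup and the sandbox image) can be applied with sed instead of vim. A sketch assuming the layout produced by `containerd config default` in containerd 1.6 (one `SystemdCgroup = false` line and one `sandbox_image = ...` line); `patch_containerd_config` is a hypothetical name:

```shell
# Hypothetical helper: apply both config.toml edits described above.
# Arg 1: config file; arg 2 (optional): pause image to use as the sandbox image.
patch_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  sed -i \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e "s#^\([[:space:]]*sandbox_image = \).*#\1\"${pause}\"#" \
    "$cfg"
}

# patch_containerd_config && systemctl restart containerd
# containerd config dump | grep -e SystemdCgroup -e sandbox_image   # effective values
```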

Start containerd

systemctl enable containerd

systemctl start containerd

systemctl status containerd

Check the containerd version

ctr version

#1.6.16

Configure registry mirrors (image pull acceleration)

vim /etc/containerd/config.toml

// around line 145

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d"   # add this line

mkdir /etc/containerd/certs.d

mkdir /etc/containerd/certs.d/docker.io

vim /etc/containerd/certs.d/docker.io/hosts.toml

server = "https://docker.io"

[host."https://3aa741e7c6bf42ae924a3c0143f0e841.mirror.swr.myhuaweicloud.com"]

capabilities = ["pull","resolve","push"]

[host."https://hub-mirror.c.163.com"]

capabilities = ["pull","resolve","push"]

[host."https://do.nark.eu.org"]

capabilities = ["pull","resolve","push"]

[host."https://dc.j8.work"]

capabilities = ["pull","resolve","push"]

[host."https://docker.m.daocloud.io"]

capabilities = ["pull","resolve","push"]

[host."https://dockerproxy.com"]

capabilities = ["pull","resolve","push"]

[host."https://docker.mirrors.ustc.edu.cn"]

capabilities = ["pull","resolve","push"]

[host."https://docker.nju.edu.cn"]

capabilities = ["pull","resolve","push"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull","resolve","push"]

[host."https://hub.uuuadc.top"]

capabilities = ["pull","resolve","push"]

[host."https://docker.anyhub.us.kg"]

capabilities = ["pull","resolve","push"]

[host."https://dockerhub.jobcher.com"]

capabilities = ["pull","resolve","push"]

[host."https://dockerhub.icu"]

capabilities = ["pull","resolve","push"]

[host."https://docker.ckyl.me"]

capabilities = ["pull","resolve","push"]

[host."https://docker.awsl9527.cn"]

capabilities = ["pull","resolve","push"]

[host."https://mirror.baidubce.com"]

capabilities = ["pull","resolve","push"]

[host."https://docker.1panel.live"]

capabilities = ["pull","resolve","push"]
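Rather than editing hosts.toml by hand, the file can be generated from a list of mirror URLs. A sketch; `write_hosts_toml` is a hypothetical helper, and it deliberately grants only `pull` and `resolve`, on the assumption that public mirrors are read-only and pushes should go to the real registry:

```shell
# Hypothetical generator for /etc/containerd/certs.d/<registry>/hosts.toml.
# Args: target directory, upstream server URL, then one or more mirror URLs.
write_hosts_toml() {
  local dir="$1" server="$2"
  shift 2
  local m
  mkdir -p "$dir"
  {
    printf 'server = "%s"\n' "$server"
    for m in "$@"; do
      # Mirrors are tried in the order listed; only pull/resolve are granted
      printf '\n[host."%s"]\n  capabilities = ["pull", "resolve"]\n' "$m"
    done
  } > "$dir/hosts.toml"
}

# write_hosts_toml /etc/containerd/certs.d/docker.io https://docker.io \
#   https://docker.m.daocloud.io https://mirror.baidubce.com
```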

Restart containerd

systemctl restart containerd

systemctl status containerd

Check the containerd version

ctr version

#1.6.16

Test pulling an image (testing on one node is enough)

ctr images pull --hosts-dir=/etc/containerd/certs.d docker.io/library/httpd:latest

List images

ctr i ls

REF TYPE DIGEST SIZE PLATFORMS LABELS

docker.io/library/httpd:latest application/vnd.oci.image.index.v1+json sha256:4564ca7604957765bd2598e14134a1c6812067f0daddd7dc5a484431dd03832b 55.8 MiB linux/386,linux/amd64,linux/arm/v5,linux/arm/v7,linux/arm64/v8,linux/mips64le,linux/ppc64le,linux/s390x -

Install Kubernetes

Install kubeadm

# Add the k8s package repository

cat <<EOF>/etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

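# Build the yum cache quickly

yum makecache fast

# List the available Kubernetes versions

yum list kubectl --showduplicates | sort -r

# Install a specific Kubernetes version

yum -y install kubectl-1.28.0 kubelet-1.28.0 kubeadm-1.28.0

# Make kubelet's cgroup driver match containerd's (systemd)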

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF

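# Enable kubelet at boot (until kubeadm init or join writes its configuration, kubelet restarts every few seconds; this is expected)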

systemctl daemon-reload

systemctl enable kubelet

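# Check the Kubernetes version

kubelet --version   # or: kubeadm version

kubeadm: a tool for quickly bootstrapping a Kubernetes cluster

kubelet: runs on every node in the cluster and starts Pods and their containers

kubectl: the command-line tool for managing a Kubernetes cluster

Configure the crictl tool

crictl is a command-line interface for CRI-compatible container runtimes; use it to inspect and debug the container runtime and images on a kubelet node

# Point crictl at containerd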

cat <<EOF | tee /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

Run on the master node

Deploy Kubernetes on the master node

# List the images the cluster needs

[root@k8s-master ~]# kubeadm config images list --kubernetes-version=v1.28.0 \
--image-repository=registry.aliyuncs.com/google_containers

# Pull the images the cluster needs

[root@k8s-master ~]# kubeadm config images pull --kubernetes-version=v1.28.0 \
--image-repository=registry.aliyuncs.com/google_containers

# List all images on the node

[root@k8s-master ~]# crictl images ls

IMAGE TAG IMAGE ID SIZE

registry.aliyuncs.com/google_containers/coredns v1.10.1 ead0a4a53df89 16.2MB

registry.aliyuncs.com/google_containers/etcd 3.5.9-0 73deb9a3f7025 103MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.28.0 bb5e0dde9054c 34.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.28.0 4be79c38a4bab 33.4MB

registry.aliyuncs.com/google_containers/kube-proxy v1.28.0 ea1030da44aa1 24.6MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.28.0 f6f496300a2ae 18.8MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f1816883972 322kB

Initialize the cluster with IPVS enabled

# Generate the cluster initialization config file

[root@k8s-master ~]# kubeadm config print init-defaults > kubeadm-init.yaml

# Edit the initialization config file

[root@k8s-master ~]# vim kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.18.132   # line 12: set the master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock   # line 15: container runtime socket
  imagePullPolicy: IfNotPresent
  name: k8s-master   # line 17: master node hostname
  taints:   # line 18: note! remove the "null"
  - effect: NoSchedule   # line 19: add the taint
    key: node-role.kubernetes.io/control-plane   # line 20: add
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers   # line 32: image registry address
kind: ClusterConfiguration
kubernetesVersion: 1.28.0   # line 34: Kubernetes version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16   # line 38: add the Pod network CIDR
scheduler: {}
# Append the following at the end
--- # change kube-proxy's proxy mode (the default is iptables)
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
--- # change the kubelet cgroup driver to systemd
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
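The hand edits above can also be scripted against the unmodified output of `kubeadm config print init-defaults`. A sketch under these assumptions: the 1.28 default layout (two-space indentation, `advertiseAddress: 1.2.3.4`, `name: node`, `taints: null`, no `podSubnet` line) and GNU sed (for `\n` in replacements); `patch_kubeadm_init` is a hypothetical helper. `kubeadm init --config kubeadm-init.yaml --dry-run` can then be used to check the result without changing the host.

```shell
# Hypothetical helper: apply the kubeadm-init.yaml edits described above.
# Args: file, master IP, master hostname, [pod CIDR], [CRI socket]
patch_kubeadm_init() {
  local f="$1" ip="$2" node="$3"
  local podcidr="${4:-10.244.0.0/16}"
  local sock="${5:-unix:///var/run/containerd/containerd.sock}"
  sed -i \
    -e "s|^  advertiseAddress: .*|  advertiseAddress: ${ip}|" \
    -e "s|^  criSocket: .*|  criSocket: ${sock}|" \
    -e "s|^  name: .*|  name: ${node}|" \
    -e 's|^  taints: null$|  taints:\n  - effect: NoSchedule\n    key: node-role.kubernetes.io/control-plane|' \
    -e 's|^imageRepository: .*|imageRepository: registry.aliyuncs.com/google_containers|' \
    "$f"
  # Add podSubnet right after serviceSubnet, but only once
  grep -q '^  podSubnet:' "$f" ||
    sed -i "s|^  serviceSubnet: .*|&\n  podSubnet: ${podcidr}|" "$f"
  # Append the kube-proxy (ipvs) and kubelet (systemd cgroup) documents once
  grep -q '^kind: KubeProxyConfiguration' "$f" || cat >> "$f" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}

# patch_kubeadm_init kubeadm-init.yaml 192.168.18.132 k8s-master
# Docker variant: patch_kubeadm_init kubeadm-init.yaml 192.168.18.128 master 10.244.0.0/16 unix:///var/run/cri-dockerd.sock
```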

Initialize the cluster

[root@k8s-master ~]# kubeadm init --config=kubeadm-init.yaml --upload-certs | tee kubeadm-init.log

# Output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.18.132:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:2e659dbd9475682888a8bc05b01feb3c7cf93f41f2f1f5f8d4edc42ecd315bdd

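If cluster initialization fails

The simplest fix is to reset and start over.

Reset procedure:

1: Delete everything in the kubernetes directory

rm -rf /etc/kubernetes/*

2: Kill the processes still holding the ports

pkill -9 kubelet

pkill -9 kube-controll

pkill -9 kube-schedule

3: Reset the node with kubeadm, pointing it at the containerd CRI socket

kubeadm reset -f --cri-socket=unix:///var/run/containerd/containerd.sock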

----------------------------------------------------------------------------------

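# Check kube-proxy's proxy mode (prints ipvs when IPVS mode is active)

curl localhost:10249/proxyMode

# Note: if cluster initialization fails, check the logs to troubleshoot

journalctl -xeu kubelet   # or: tail kubeadm-init.log

Configure kubectl (steps after a successful initialization)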

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

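The kubelet obtains its certificate through the API server. Certificates expire (certificates issued by kubeadm are valid for one year by default) and must be renewed when they do; kubeadm certs check-expiration shows the expiry dates.

To manage Kubernetes, a user must first authenticate, and the credentials are stored in admin.conf. To keep access across logins, set an environment variable:

add export KUBECONFIG=/etc/kubernetes/admin.conf to ~/.bash_profile.

admin.conf contains the credentials and points at the master's address, so kubectl can communicate with the API server.

# Make it permanent (recommended)

[root@k8s-master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@k8s-master ~]# source ~/.bash_profile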
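Since the kubeadm-issued certificates mentioned above expire, it can help to check a kubeconfig's embedded client certificate directly. A sketch, assuming openssl and GNU base64 are installed and that the kubeconfig embeds the certificate as client-certificate-data (as admin.conf does); `kubeconfig_cert_expiry` is a hypothetical helper:

```shell
# Hypothetical helper: print the expiry date of the client certificate
# embedded in a kubeconfig file (first "client-certificate-data" entry).
kubeconfig_cert_expiry() {
  local kubeconfig="${1:-/etc/kubernetes/admin.conf}"
  awk '$1 == "client-certificate-data:" { print $2; exit }' "$kubeconfig" \
    | base64 -d \
    | openssl x509 -noout -enddate
}

# kubeconfig_cert_expiry             # prints a notAfter=... line
# kubeadm certs check-expiration     # kubeadm's report for all cluster certificates
```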

Check component status

[root@k8s-master ~]# kubectl get cs

Output

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy ok

Run on the worker nodes

Join the worker nodes to the cluster

[root@k8s-node01 ~]# kubeadm join 192.168.18.132:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:2e659dbd9475682888a8bc05b01feb3c7cf93f41f2f1f5f8d4edc42ecd315bdd

[root@k8s-node02 ~]# kubeadm join 192.168.18.132:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:2e659dbd9475682888a8bc05b01feb3c7cf93f41f2f1f5f8d4edc42ecd315bdd
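The token in this join command has a 24h TTL (see ttl in kubeadm-init.yaml), and the hash is easy to mistype. A sketch for re-deriving the hash on the master, assuming openssl is installed; `ca_cert_hash` is a hypothetical helper wrapping the openssl pipeline documented for kubeadm join, while `kubeadm token create --print-join-command` is the standard way to get a complete, fresh join command:

```shell
# Sketch: recompute the --discovery-token-ca-cert-hash value from the cluster
# CA certificate. Assumes an RSA CA key, which kubeadm generates by default.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex=$(openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  printf 'sha256:%s\n' "$hex"
}

# On the master:
# ca_cert_hash                                # sha256:<64 hex digits>
# kubeadm token create --print-join-command   # new token plus the full join command
```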

Check the node status in the cluster

[root@k8s-master .kube]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 18m v1.28.0

k8s-node01 NotReady <none> 82s v1.28.0

k8s-node02 NotReady <none> 5s v1.28.0

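Deploy the CNI network add-on

Kubernetes networking is fairly complex and is not implemented by the cluster itself, so a third-party CNI (Container Network Interface) plugin is installed to make the cluster usable. The CNI plugin provides cross-host network communication between containers. The Pod IP address range is also called the Pod CIDR. Until a CNI plugin is running, the nodes report NotReady, as in the output above.

Kubernetes supports many network plugins, such as flannel, calico, and canal; any one of them will do. This guide uses Calico.

Calico is a pure layer-3 networking solution that provides communication between containers across nodes. Calico treats each node as a router and each Pod as an endpoint behind that virtual router. The nodes learn routes from each other via BGP (Border Gateway Protocol) and install routing rules locally, which connects Pods on different nodes. It is currently one of the mainstream Kubernetes networking solutions.

Official site: https://docs.tigera.io/calico

GitHub: https://github.com/projectcalico/calico

calico.yaml differs between Calico versions, so pick a release that supports your Kubernetes version.

Reference: https://archive-os-3-25.netlify.app/calico/3.25/getting-started/kubernetes/requirements

Calico 3.25 supports Kubernetes v1.23 to v1.28

Download the Calico manifest

[root@k8s-master ~]# wget --no-check-certificate https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

Edit the Calico manifest

[root@k8s-master ~]# vim calico.yaml

# Around line 4601: uncomment these lines and set the value to the podSubnet used in kubeadm-init.yaml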

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"
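The same edit as a sed command, for scripted setups. A sketch assuming GNU sed and the stock v3.25 manifest, in which the two lines are commented out as `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"`; `set_calico_cidr` is a hypothetical helper. The CIDR passed in should be the podSubnet from kubeadm-init.yaml:

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set
# its value; indentation is preserved by removing only the "# " prefix.
set_calico_cidr() {
  local manifest="$1" cidr="${2:-10.244.0.0/16}"
  sed -i -E '/^[[:space:]]*# - name: CALICO_IPV4POOL_CIDR$/{
s|# ||
n
s|#   value: "[^"]*"|  value: "'"$cidr"'"|
}' "$manifest"
}

# set_calico_cidr calico.yaml 10.244.0.0/16
# grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```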

Deploy Calico

[root@k8s-master ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@k8s-master ~]# kubectl get pods -A

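# Watch in real time

[root@k8s-master ~]# watch kubectl get pods -A

# Wait until all Pods are Running. If every earlier step was correct but image pulls fail here, switch to another registry mirror and retry.

# Check node status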

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 116m v1.28.0

k8s-node01 Ready <none> 115m v1.28.0

k8s-node02 Ready <none> 115m v1.28.0
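To script the wait for Ready instead of watching by eye, a small parser over `kubectl get nodes` output is enough. A sketch; `not_ready_nodes` is a hypothetical helper that prints every node whose STATUS is not exactly Ready (so a cordoned node showing Ready,SchedulingDisabled is also listed). `kubectl wait --for=condition=Ready node --all` is the built-in alternative:

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS is not "Ready". Returns 1 if any are listed.
not_ready_nodes() {
  awk 'NR == 1 && $1 == "NAME" { next }
       $2 != "Ready" { print $1; bad = 1 }
       END { exit bad }'
}

# kubectl get nodes | not_ready_nodes && echo "all nodes Ready"
# kubectl wait --for=condition=Ready node --all --timeout=300s
```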

Set up command completion (all nodes)

yum install bash-completion -y

source /usr/share/bash-completion/bash_completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

Test

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-658d97c59c-mnchq 1/1 Running 0 116m

calico-node-2fqjf 1/1 Running 0 116m

calico-node-6p5td 1/1 Running 0 116m

calico-node-smppm 1/1 Running 0 116m

coredns-66f779496c-8f4g2 1/1 Running 0 120m

coredns-66f779496c-gqzk9 1/1 Running 0 120m

etcd-k8s-master 1/1 Running 0 120m

kube-apiserver-k8s-master 1/1 Running 0 121m

kube-controller-manager-k8s-master 1/1 Running 0 120m

kube-proxy-4cdj9 1/1 Running 0 119m

kube-proxy-9gtbd 1/1 Running 0 119m

kube-proxy-wldnf 1/1 Running 0 120m

kube-scheduler-k8s-master 1/1 Running 0 121m

# Create Pods

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx --replicas=3

deployment.apps/nginx created

# On a worker node, check that the nginx image was pulled

[root@k8s-node01 ~]# crictl image ls

IMAGE TAG IMAGE ID SIZE

docker.io/calico/cni v3.25.0 d70a5947d57e5 88MB

docker.io/calico/node v3.25.0 08616d26b8e74 87.2MB

docker.io/library/nginx latest 4af177a024eb8 62.9MB

registry.aliyuncs.com/google_containers/kube-proxy v1.28.0 ea1030da44aa1 24.6MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f1816883972 322kB

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-7854ff8877-bbzft 1/1 Running 0 16m 10.244.85.194 k8s-node01 <none> <none>

nginx-7854ff8877-fxn84 1/1 Running 0 16m 10.244.58.196 k8s-node02 <none> <none>

nginx-7854ff8877-s29m9 1/1 Running 0 16m 10.244.85.193 k8s-node01 <none> <none>

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --target-port=80

service/nginx exposed

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 144m

nginx ClusterIP 10.100.56.143 <none> 80/TCP 5s

[root@k8s-master ~]# kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 192.168.18.132:6443 144m

nginx 10.244.58.196:80,10.244.85.193:80,10.244.85.194:80 9s

The cluster is managed over HTTPS through the API server at https://192.168.18.132:6443

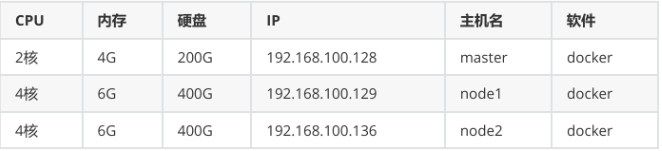

Deploying Kubernetes with Docker

Preparing the environment

Unless stated otherwise, run each step on all nodes.

Host configuration

Install base packages

[root@master ~ 15:17:54]# yum -y install vim lrzsz unzip wget net-tools tree bash-completion conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp git psmisc telnet unzip gcc gcc-c++ make

Configure hostname resolution

[root@master ~ 15:21:34]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.18.128 master

192.168.18.129 node1

192.168.18.136 node2

Disable the firewall and the kernel security mechanism (SELinux)

[root@master ~ 15:21:51]# systemctl disable firewalld --now

[root@master ~ 15:23:02]# sed -i 's/enforcing/disabled/g' /etc/selinux/config

[root@master ~ 15:23:11]# setenforce 0

Disable the swap partition

# Comment out the persistent swap mount

[root@master ~ 15:23:18]# vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@master ~ 15:23:52]# swapoff -a

Stop NetworkManager (optional)

systemctl stop NetworkManager

systemctl disable NetworkManager

Tune kernel parameters (upgrade the kernel first)

# Upgrade the kernel

[root@master ~ 15:24:24]# yum update -y kernel && reboot

[root@master ~ 15:26:58]# cat >/etc/sysctl.d/kubernetes.conf<<EOF

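> # Let bridged traffic pass through iptables and ip6tables, so packets crossing a Linux bridge are filtered

> net.bridge.bridge-nf-call-iptables=1

> net.bridge.bridge-nf-call-ip6tables=1

> # Enable routing: forward IPv4 packets

> net.ipv4.ip_forward=1

> # Avoid using swap as far as possible, to improve k8s performance

> vm.swappiness=0

> # Do not check whether enough physical memory is available (always allow overcommit)

> vm.overcommit_memory=1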

> EOF

Apply immediately

[root@master ~ 15:28:01]# sysctl --system

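Adjust Linux resource limits (optional)

// Raise the maximum number of file handles a process can open (current shell only)

[root@master ~]# ulimit -SHn 65535

// By default on Linux a process can open at most 1024 file handles; make higher limits persistent: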

[root@master ~]# cat >> /etc/security/limits.conf <<EOF

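# Soft limit on open file descriptors for all users

* soft nofile 655360

# Hard limit on open file descriptors for all users (must not be lower than the soft limit)

* hard nofile 655360

# Soft limit on the number of processes for all users

* soft nproc 655350

# Hard limit on the number of processes for all users

* hard nproc 655350

# Soft limit on locked memory for all users: unlimited

* soft memlock unlimited

# Hard limit on locked memory for all users: unlimited

* hard memlock unlimited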

EOF
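setrlimit() rejects a soft limit above the matching hard limit, so it is worth checking limits.conf after editing it. A sketch; `check_limits` is a hypothetical helper that compares soft and hard values per domain and item, treating `unlimited` and `infinity` as the largest value:

```shell
# Hypothetical check: report every limits.conf domain/item whose soft value
# is larger than its hard value. Returns 1 if any such pair is found.
check_limits() {
  awk '
    function val(x) { return (x == "unlimited" || x == "infinity") ? 1e18 : x + 0 }
    $1 !~ /^#/ && NF >= 4 {
      key = $1 " " $3
      if ($2 == "soft") soft[key] = $4
      if ($2 == "hard") hard[key] = $4
    }
    END {
      bad = 0
      for (k in soft)
        if ((k in hard) && val(soft[k]) > val(hard[k])) {
          print "soft > hard for " k ": " soft[k] " > " hard[k]
          bad = 1
        }
      exit bad
    }' "${1:-/etc/security/limits.conf}"
}

# check_limits && echo "limits.conf: soft <= hard everywhere"
```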

Time synchronization

[root@master ~ 15:28:43]# systemctl restart chronyd

[root@master ~ 15:28:49]# chronyc sources -v

[root@master ~ 15:28:55]# hwclock -s

IPVS

[root@master ~ 15:29:02]# cat >>/etc/modules-load.d/ipvs.conf<<EOF

> ip_vs

> ip_vs_rr

> ip_vs_wrr

> ip_vs_sh

> nf_conntrack

> ip_tables

> ip_set

> xt_set

> ipt_set

> ipt_rpfilter

> ipt_REJECT

> ipip

> overlay

> br_netfilter

> EOF

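What the IPVS-related kernel modules do

- IPVS core modules
  - ip_vs: the IPVS load-balancing core module
  - ip_vs_rr: round-robin scheduling
  - ip_vs_wrr: weighted round-robin scheduling
  - ip_vs_sh: source-hashing scheduling
- Connection tracking and filtering
  - nf_conntrack_ipv4: IPv4 connection tracking, required by NAT and the firewall (merged into nf_conntrack on kernel 4.19 and later)
  - ip_tables: the iptables base framework
  - ipt_REJECT: implements the REJECT target (rejecting packets)
- IP set management
  - ip_set: management of IP address sets
  - xt_set and ipt_set: iptables match extensions for IP sets
- Tunneling and bridging
  - ipip: IP-over-IP tunneling
  - overlay: the OverlayFS filesystem, used by the overlay snapshotter/storage driver of containerd and Docker for image layers
  - br_netfilter: filtering of bridged traffic (works together with net.bridge.bridge-nf-call-iptables=1)
- Reverse path filtering
  - ipt_rpfilter: reverse-path validation (protects against IP spoofing)

Typical use cases

- Kubernetes node initialization: kube-proxy in IPVS mode depends on these modules
- Load-balancing servers: enable the IPVS scheduling algorithms
- Container hosts: overlay (container filesystems) and bridge filtering support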

Restart the service

[root@master ~ 15:30:03]# systemctl restart systemd-modules-load

Check the loaded kernel modules

[root@master ~ 15:30:17]# lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 143411 1 ip_vs

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

docker-ce environment

Install prerequisites

[root@master ~ 15:30:22]# yum install -y yum-utils device-mapper-persistent-data lvm2

Notes:

yum-utils provides yum-config-manager.

The devicemapper storage driver requires device-mapper-persistent-data and lvm2.

Device Mapper is a generic device-mapping framework in the Linux 2.6 kernel that supports logical volume management; it gives block-device drivers used for storage management a highly modular kernel architecture.

Use the Alibaba Cloud mirror

[root@master ~ 15:35:16]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install docker-ce

[root@master ~ 15:37:52]# yum install -y docker-ce

Firewall settings (already done above)

Start the Docker service

[root@master ~ 15:37:52]# systemctl enable --now docker

Registry mirrors

[root@master ~ 15:38:16]# tee /etc/docker/daemon.json <<-'EOF'

> {

> "registry-mirrors": [

> "https://3aa741e7c6bf42ae924a3c0143f0e841.mirror.swr.myhuaweicloud.com",

> "https://do.nark.eu.org",

> "https://dc.j8.work",

> "https://docker.m.daocloud.io",

> "https://dockerproxy.com",

> "https://docker.mirrors.ustc.edu.cn",

> "https://docker.nju.edu.cn",

> "https://registry.docker-cn.com",

> "https://hub-mirror.c.163.com",

> "https://hub.uuuadc.top",

> "https://docker.anyhub.us.kg",

> "https://dockerhub.jobcher.com",

> "https://dockerhub.icu",

> "https://docker.ckyl.me",

> "https://docker.awsl9527.cn",

> "https://mirror.baidubce.com",

> "https://docker.1panel.live"

> ]

> }

> EOF

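Change the cgroup driver (add the exec-opts entry; daemon.json is plain JSON, so it must not contain comments)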

[root@master ~ 15:41:59]# vim /etc/docker/daemon.json

[root@master ~ 15:44:15]# cat /etc/docker/daemon.json

{

"registry-mirrors": [

"https://3aa741e7c6bf42ae924a3c0143f0e841.mirror.swr.myhuaweicloud.com",

"https://do.nark.eu.org",

"https://dc.j8.work",

"https://docker.m.daocloud.io",

"https://dockerproxy.com",

"https://docker.mirrors.ustc.edu.cn",

"https://docker.nju.edu.cn",

"https://registry.docker-cn.com",

"https://hub-mirror.c.163.com",

"https://hub.uuuadc.top",

"https://docker.anyhub.us.kg",

"https://dockerhub.jobcher.com",

"https://dockerhub.icu",

"https://docker.ckyl.me",

"https://docker.awsl9527.cn",

"https://mirror.baidubce.com",

"https://docker.1panel.live"

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

# Restart the service

[root@master ~ 15:44:24]# systemctl daemon-reload

[root@master ~ 15:45:14]# systemctl restart docker
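Because daemon.json is strict JSON (no comments, no trailing commas), generating it can be less error-prone than editing it by hand. A sketch; `write_daemon_json` is a hypothetical helper that writes the cgroup driver option plus any mirror URLs passed to it. After restarting Docker, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.

```shell
# Hypothetical generator for /etc/docker/daemon.json.
# Args: output file, then zero or more registry mirror URLs.
write_daemon_json() {
  local out="$1"
  shift
  local sep="" m
  {
    printf '{\n  "exec-opts": ["native.cgroupdriver=systemd"],\n  "registry-mirrors": ['
    for m in "$@"; do
      # The comma is written before every entry except the first
      printf '%s\n    "%s"' "$sep" "$m"
      sep=","
    done
    printf '\n  ]\n}\n'
  } > "$out"
}

# write_daemon_json /etc/docker/daemon.json https://docker.m.daocloud.io https://mirror.baidubce.com
# systemctl daemon-reload && systemctl restart docker
```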

Install cri-dockerd

Purpose: cri-dockerd gives Docker Engine an interface that conforms to the Kubernetes CRI (Container Runtime Interface) standard, so Docker can still serve as the container runtime for Kubernetes, in particular since Kubernetes 1.24 removed the built-in Docker support (dockershim).

[root@master ~ 15:45:20]# wget https://github.com/mirantis/cri-dockerd/releases/download/v0.3.4/cri-dockerd-0.3.4-3.el7.x86_64.rpm

Install it once the download finishes

[root@master ~ 15:47:37]# rpm -ivh cri-dockerd-0.3.4-3.el7.x86_64.rpm

Edit the systemd unit file

[root@master ~ 15:48:16]# vim /usr/lib/systemd/system/cri-docker.service

// Line 10: add the --pod-infra-container-image option in the middle of the ExecStart line

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --container-runtime-endpoint fd://
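The same ExecStart change as a one-shot sed, assuming the stock unit from the 0.3.4 RPM whose ExecStart begins with `ExecStart=/usr/bin/cri-dockerd `; `patch_cri_docker_unit` is a hypothetical helper. A file under /usr/lib can be overwritten by a package upgrade; a systemd drop-in under /etc/systemd/system/cri-docker.service.d/ (with an empty `ExecStart=` line before the new one) is the upgrade-safe alternative.

```shell
# Hypothetical helper: rewrite the ExecStart line of the cri-docker unit so the
# pause (sandbox) image comes from the Aliyun mirror. Safe to run repeatedly.
patch_cri_docker_unit() {
  local unit="${1:-/usr/lib/systemd/system/cri-docker.service}"
  local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  sed -i "s#^ExecStart=/usr/bin/cri-dockerd .*#ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=${pause} --container-runtime-endpoint fd://#" "$unit"
}

# patch_cri_docker_unit && systemctl daemon-reload && systemctl restart cri-docker.service
```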

Start the cri-dockerd service

[root@master ~ 16:06:08]# systemctl daemon-reload

[root@master ~ 16:06:17]# systemctl start cri-docker.service

[root@master ~ 16:06:24]# systemctl enable cri-docker.service

Check that the socket file was created

[root@master ~ 16:06:29]# ls /run/cri-*

/run/cri-dockerd.sock

Kubernetes cluster deployment

Yum repository

[root@master ~ 16:07:19]# cat <<EOF>/etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Install the packages

List the installable versions

[root@master ~ 16:08:20]# yum list kubeadm.x86_64 --showduplicates | sort -r

Install version 1.28.0-0

[root@master ~ 16:09:03]# yum install -y kubeadm-1.28.0-0 kubelet-1.28.0-0 kubectl-1.28.0-0

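kubelet configuration

Force kubelet to use systemd as its cgroup driver, so that it matches Docker (or whichever container runtime is used). The KUBE_PROXY_MODE line is meant to switch kube-proxy from the default iptables mode to ipvs for better network performance in large clusters; note, however, that in a kubeadm cluster kube-proxy runs as a Pod and takes its mode from the KubeProxyConfiguration (mode: ipvs) in kubeadm-init.yaml, which is what actually enables ipvs here.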

[root@master ~ 16:11:48]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

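// The cluster has not been initialized yet, so kubelet's configuration file does not exist; for now only enable the service at boot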

[root@master ~ 16:11:54]# systemctl daemon-reload

[root@master ~ 16:12:21]# systemctl enable kubelet.service

Cluster initialization

Master node only

List the required images

[root@master ~ 16:12:27]# kubeadm config images list --kubernetes-version=v1.28.0 --image-repository=registry.aliyuncs.com/google_containers

registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.28.0

registry.aliyuncs.com/google_containers/pause:3.9

registry.aliyuncs.com/google_containers/etcd:3.5.9-0

registry.aliyuncs.com/google_containers/coredns:v1.10.1

Pull the images

[root@master ~ 16:13:54]# kubeadm config images pull --cri-socket=unix:///var/run/cri-dockerd.sock --kubernetes-version=v1.28.0 --image-repository=registry.aliyuncs.com/google_containers

# List the downloaded images

[root@master ~ 16:17:28]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.28.0 bb5e0dde9054 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.28.0 4be79c38a4ba 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.28.0 f6f496300a2a 2 years ago 60.1MB

registry.aliyuncs.com/google_containers/kube-proxy v1.28.0 ea1030da44aa 2 years ago 73.1MB

registry.aliyuncs.com/google_containers/etcd 3.5.9-0 73deb9a3f702 2 years ago 294MB

registry.aliyuncs.com/google_containers/coredns v1.10.1 ead0a4a53df8 2 years ago 53.6MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f181688397 3 years ago 744kB

Create the cluster initialization config file

[root@master ~ 16:22:54]# kubeadm config print init-defaults > kubeadm-init.yaml

Edit the initialization config file

[root@master ~ 16:22:54]# vim kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.18.128   # line 12: master IP address
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock   # line 15: switch to the Docker runtime (cri-dockerd socket)
  imagePullPolicy: IfNotPresent
  name: master   # line 17: master node hostname
  taints:   # line 18: remove the "null"
  - effect: NoSchedule   # line 19: add the taint
    key: node-role.kubernetes.io/control-plane   # line 20: add
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers   # line 32: image registry address
kind: ClusterConfiguration
kubernetesVersion: 1.28.0   # line 34: Kubernetes version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16   # line 38: add the Pod network CIDR
scheduler: {}
--- # append at the end: change kube-proxy's proxy mode (the default is iptables)
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
--- # change the kubelet cgroup driver to systemd
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

Initialize the cluster

[root@master ~ 16:23:49]# kubeadm init --config=kubeadm-init.yaml --upload-certs | tee kubeadm-init.log

# Output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

`Note:`

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

`Note:`

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

`Note:`

kubeadm join 192.168.18.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:094e58183b48510b522f94eb4827049786a29b09f3fcb4e5a6de2f07936f7e54

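If initialization fails, reset the node the same way as in the containerd section.

Configure kubectl

Follow the instructions printed after a successful initialization.

kubectl is the command-line tool for operating a Kubernetes cluster. kubectl looks for a file named config in the $HOME/.kube directory; a different kubeconfig file can be specified by setting the KUBECONFIG environment variable or passing the --kubeconfig flag.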

[root@master ~ 16:26:01]# mkdir -p $HOME/.kube

[root@master ~ 16:26:31]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~ 16:26:31]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

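In a Kubernetes cluster, admin.conf is the client configuration file that kubectl uses to access the cluster. It contains the credentials, cluster information, and context information needed to connect to the cluster.

# Make it persistent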

[root@master ~ 16:26:31]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master ~ 16:27:19]# source ~/.bash_profile

# Check the health of the core control-plane components

[root@master ~ 16:27:27]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy ok

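The core control-plane components are all healthy.

⚠ Run the following on the worker nodes only

⚠ Note: add --cri-socket unix:///var/run/cri-dockerd.sock, otherwise the join fails: with Docker installed, both the containerd and cri-dockerd sockets exist on the node, and kubeadm will not guess which CRI endpoint to use.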

[root@node1 ~ 16:29:16]#kubeadm join 192.168.18.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:094e58183b48510b522f94eb4827049786a29b09f3fcb4e5a6de2f07936f7e54 --cri-socket unix:///var/run/cri-dockerd.sock

[root@node2 ~ 16:29:16]#kubeadm join 192.168.18.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:094e58183b48510b522f94eb4827049786a29b09f3fcb4e5a6de2f07936f7e54 --cri-socket unix:///var/run/cri-dockerd.sock

# Check the cluster status

[root@master ~ 16:27:40]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 4m12s v1.28.0

node1 NotReady <none> 66s v1.28.0

node2 NotReady <none> 54s v1.28.0

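Deploy the CNI network add-on

Download the Calico manifest

[root@master ~ 16:30:10]# wget --no-check-certificate https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

Edit the Calico manifest

[root@master ~ 16:35:04]# vim calico.yaml

# Around line 4601: uncomment these lines and set the value to the podSubnet used in kubeadm-init.yaml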

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

Deploy Calico

[root@master ~ 16:35:04]# kubectl apply -f calico.yaml

Verify the cluster

[root@master ~ 16:45:36]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-658d97c59c-hv65v 1/1 Running 0 9m42s

kube-system calico-node-bgtph 1/1 Running 0 9m42s

kube-system calico-node-fk8tb 1/1 Running 0 9m42s

kube-system calico-node-x5w4t 1/1 Running 0 9m42s

kube-system coredns-66f779496c-cklwm 1/1 Running 0 19m

kube-system coredns-66f779496c-wgdfz 1/1 Running 0 19m

kube-system etcd-master 1/1 Running 0 19m

kube-system kube-apiserver-master 1/1 Running 0 19m

kube-system kube-controller-manager-master 1/1 Running 0 19m

kube-system kube-proxy-4zl57 1/1 Running 0 16m

kube-system kube-proxy-h5sfp 1/1 Running 0 16m

kube-system kube-proxy-rb52m 1/1 Running 0 19m

kube-system kube-scheduler-master 1/1 Running 0 19m

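# Watch the changes live: watch kubectl get pods -A

If Pods stay in a waiting state for a long time, upgrading the kernel on all nodes resolved it in this setup.

Check the node status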

[root@master ~ 16:46:44]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 20m v1.28.0

node1 Ready <none> 17m v1.28.0

node2 Ready <none> 17m v1.28.0

Main Kubernetes files

[root@master ~ 16:46:51]# ls /etc/kubernetes/

admin.conf   # admin credentials

kubelet.conf

pki/   # cluster certificates

manifests/   # static Pod manifests that run the core components

controller-manager.conf

scheduler.conf

[root@master ~ 16:46:51]# ls /etc/kubernetes/manifests/

etcd.yaml   # etcd (cluster database)

kube-controller-manager.yaml   # controller manager

kube-apiserver.yaml   # API server

kube-scheduler.yaml   # scheduler

View the cluster service addresses

[root@master ~ 16:46:51]# kubectl get service -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 22m

Optimizations

kubectl command completion

[root@master ~ 16:48:42]# yum install bash-completion -y

[root@master ~ 16:49:04]# source /usr/share/bash-completion/bash_completion

[root@master ~ 16:49:10]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@master ~ 16:49:16]# source ~/.bashrc
