自动驾驶世界模型-范式02-BEV&规划-04:HERMES: A Unified Self-Driving World Model for Simultaneous 3D Scene Underst

Xin Zhou1∗, Dingkang Liang1∗†, Sifan Tu1\mathrm { T u ^ { 1 } }Tu1 , Xiwu Chen3, Yikang Ding2†\mathrm { D i n g ^ { 2 \dagger } }Ding2† , Dingyuan Zhang1, Feiyang Tan3\mathrm { T a n ^ { 3 } }Tan3 , H

HERMES: A Unified Self-Driving World Model for Simultaneous 3D Scene Understanding and Generation

Xin Zhou1∗, Dingkang Liang1∗†, Sifan Tu1\mathrm { T u ^ { 1 } }Tu1 , Xiwu Chen3, Yikang Ding2†\mathrm { D i n g ^ { 2 \dagger } }Ding2† , Dingyuan Zhang1, Feiyang Tan3\mathrm { T a n ^ { 3 } }Tan3 , Hengshuang Zhao4, Xiang Bai1B

1 Huazhong University of Science and Technology, 2 MEGVII Technology, 3 Mach Drive, 4 The University of Hong Kong {xzhou03, dkliang}@hust.edu.cn

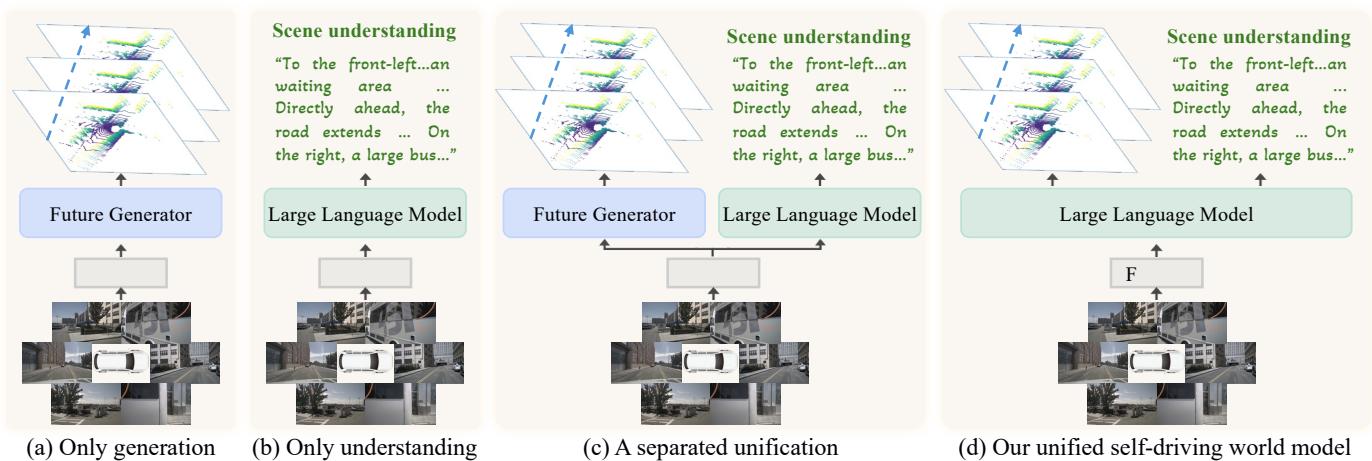

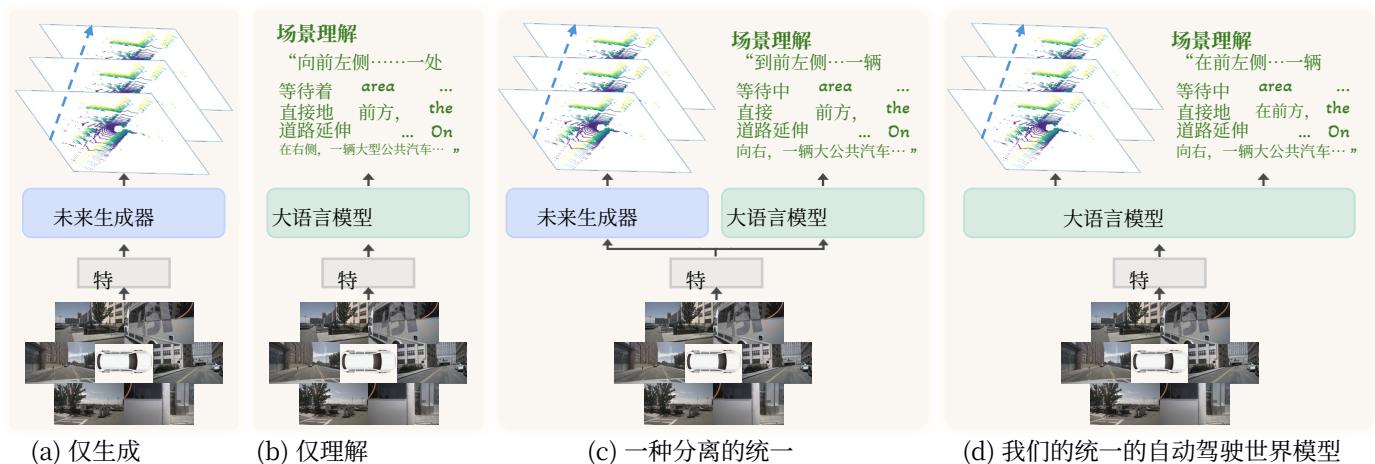

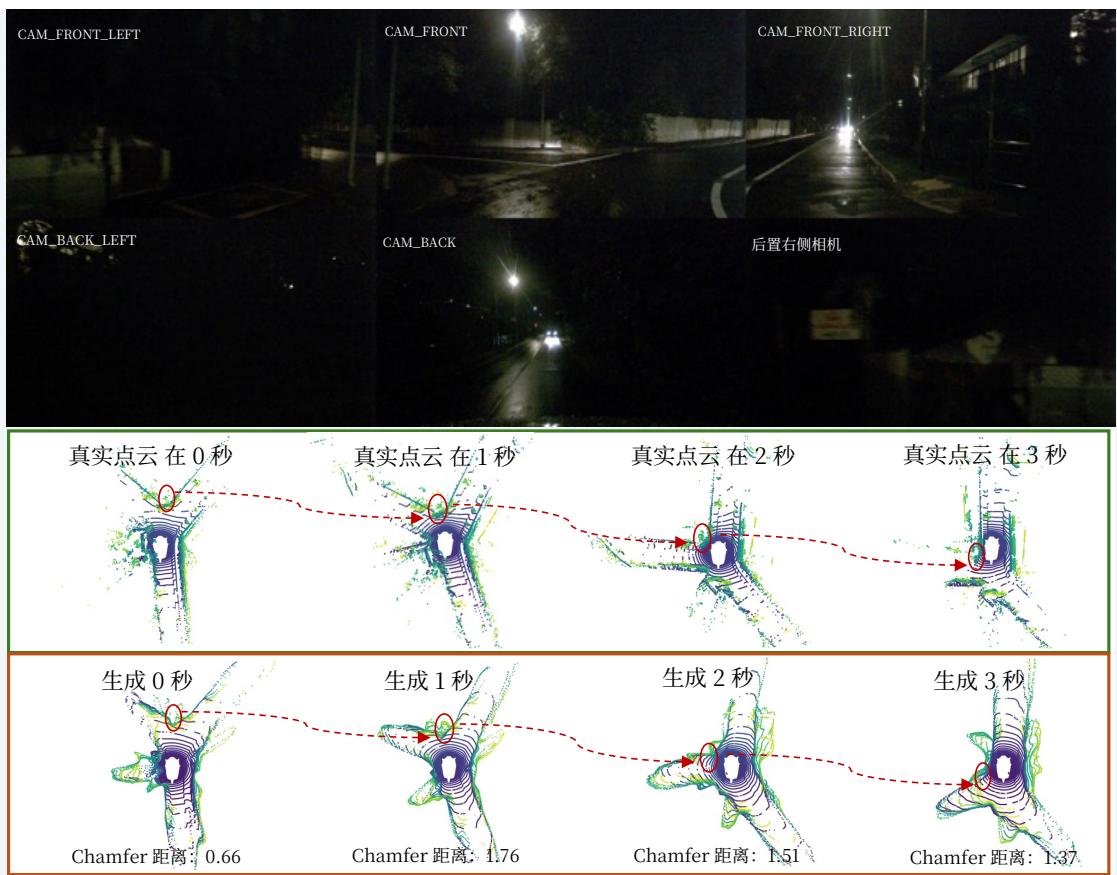

Figure 1. (a) Previous driving world models focus on generative scene evolution prediction. (b) Large language models for driving are limited to scene understanding. © A straightforward unification manner using the generator and large language models separately with a shared feature. (d) The proposed simple framework unifies 3D scene understanding and generates scene evolution based on given actions.

Abstract

Driving World Models (DWMs) have become essential for autonomous driving by enabling future scene prediction. However, existing DWMs are limited to scene generation and fail to incorporate scene understanding, which involves interpreting and reasoning about the driving environment. In this paper, we present a unified Driving World Model named HERMES. We seamlessly integrate 3D scene understanding and future scene evolution (generation) through a unified framework in driving scenarios. Specifically, HERMES leverages a Bird’s-Eye View (BEV) representation to consolidate multi-view spatial information while preserving geometric relationships and interactions. We also introduce world queries, which incorporate world knowledge into BEV features via causal attention in the Large Language Model, enabling contextual enrichment for understanding and generation tasks. We conduct comprehensive studies on nuScenes and OmniDrive-nuScenes datasets to validate the effectiveness of our method. HERMES achieves state-of-the-art performance, reducing generation error by 32.4%3 2 . 4 \%32.4% and improving understanding metrics such as CIDEr by 8.0%8 . 0 \%8.0% . The model and code will be publicly released at https://github.com/LMD0311/HERMES.

1. Introduction

Driving World Models (DWMs) [12, 16, 54, 70] have become increasingly important in autonomous driving for their ability to predict future scene evolutions. These models simulate potential changes in the surrounding environment, enabling the vehicles to forecast risk, optimize routes, and make timely decisions in dynamic situations. Among the various modalities, point clouds [15, 24, 28, 65] naturally preserve the geometric relationships between different objects and their surroundings, making them well-suited for accurately describing scene evolutions [22, 57, 67, 76].

However, despite the progress in scene generation, a crucial limitation of current DWMs is their inability to incorporate scene understanding fully. Specifically, while these DWMs excel at predicting how the environment will evolve

HERMES:用于同时进行三维场景理解与生成的统一自动驾驶世界模型

Xin Zhou1∗,Dingkang Liang1∗†,Sifan Tu1,Xiwu Chen3,Yikang Ding2†\mathrm { D i n g ^ { 2 \dag } }Ding2† ,Dingyuan Zhang1, Feiyang Tan3,HengshuangZhao4,Xiang Bai1B1 华中科技大学, 2 MEGVII Technology, 3Mach Drive, 4 香港大学{xzhou03, dkliang}@hust.edu.cn

图 1。(a) 以往的驾驶世界模型侧重于生成式场景演化预测。(b) 用于驾驶的大型语言模型仅限于场景理解。© 一种直接的统一方式:生成器和大型语言模型分别使用共享特征。(d) 所提出的简单框架在给定动作的基础上统一了三维场景理解并生成场景演化。

摘要

驾驶世界模型(DWMs)通过实现未来场景预测,已成为自动驾驶的关键。然而,现有的 DWMs 局限于场景生成,未能纳入场景理解,即对驾驶环境的解释和推理。本文提出了一个统一的驾驶世界模型,命名为HERMES。我们在驾驶场景中通过统一框架无缝整合三维场景理解与未来场景演化(生成)。具体而言,HER-MES 利用鸟瞰图(BEV)表示来整合多视角的空间信息,同时保留几何关系与交互。我们还引入了world queries,通过在大型语言模型中使用因果注意力将世界知识融合到 BEV 特征中,从而为理解和生成任务提供上下文增强。我们在 nuScenes 和OmniDrive-nuScenes 数据集上进行了全面研究,以验证本方法的有效性。HERMES 达到了

达到最先进的性能,将生成误差降低了 32.4%3 2 . 4 \%32.4% ,并提升了诸如CIDEr在内的理解指标 8.0%8 . 0 \%8.0% 。模型和代码将在以下地址公开发布https://GitHub.com/LMD0311/HERMES.

1. 引言

驾驶世界模型 (DWMs) [12, 16, 54, 70] 在自动驾驶中变得日益重要,因为它们能够预测未来的场景演化。这些模型模拟周围环境可能发生的变化,使车辆能够预测风险、优化路线并在动态情况下做出及时决策。在各种模态中,点云 [15, 24, 28, 65] 自然保留了不同物体与其周围环境之间的几何关系,使其非常适合准确描述场景演化 [22, 57, 67, 76]。

然而,尽管在场景生成方面取得了进展,当前驾驶世界模型(DWMs)存在一个关键限制:它们无法完全融合场景理解。具体来说,尽管这些DWMs在预测环境将如何演变方面表现出色

(Fig. 1(a)), they are hard to interpret and describe the environment, answer questions about it, or provide relevant contextual information (i.e., VQA, scene description).

Recently, vision-language models (VLMs) [6, 27, 31] have achieved remarkable advancements in general vision tasks by leveraging world knowledge and causal reasoning capabilities and have been successfully applied in autonomous driving scenes [44, 50]. As shown in Fig. 1(b), these driving VLMs are capable of performing tasks such as answering complex queries about the driving environment, generating descriptions of scenes, and reasoning about the relationships between various entities. However, while they improve understanding of the current driving environment, they still lack predictive capabilities for how the scene will evolve. This gap limits their effectiveness in autonomous driving, where both 3D scene understanding and future scene prediction are necessary for informed decisionmaking. This naturally gives rise to the question: how can world knowledge and future scene evolutions be seamlessly integrated into a unified world model?

Driven by the above motivation, in this paper, we propose a unified world model that connects both understanding and generation tasks. Our method is referred to as HERMES, as illustrated in Fig. 1(d), distinguishes itself from conventional methods, which typically specialize in either generation or scene understanding (e.g., VQA and caption). HERMES extends the capabilities of large language models (LLMs) to simultaneously predict future scenes and understand large-scale spatial environments, particularly those encountered in autonomous driving. However, constructing such a unified model is a highly non-trivial problem, as it requires overcoming several key challenges:

Large spatiality in multi-view. LLMs typically face max token length limits, especially in autonomous driving, where multiple surrounding views must be processed (e.g., six-view images in the nuScenes dataset [4]). Directly converting these multi-view images into tokens would exceed the token limit and fail to capture the interactions among different views. To address this, we propose to tokenize the input via a Bird’s-Eye View (BEV) representation. It offers two key benefits: 1) BEV effectively compresses the surrounding views into a unified latent space, thus overcoming the token length limitation while retaining key spatial information. 2) BEV preserves geometric spatial relationships between views, allowing the model to capture interactions between objects and agents across multiple perspectives.

The integration between understanding and generation. A straightforward way to unify scene understanding and generation would be to share the BEV features and apply separate models for understanding (via LLMs) and generation (via a future generator), as presented in Fig. 1©. However, this approach fails to leverage the potential interactions between understanding and generation. Moreover, the separate processing of these tasks hinders the optimization process, resulting in suboptimal performance. To address this, we propose to initialize a set of world queries using the raw BEV features (before LLM processing). These queries are then enhanced with world knowledge from text tokens through causal attention in the LLM. As a result, by using the world-knowledge-enhanced queries to interact with the LLM-processed BEV features via a current to future link, we ensure that the generated scene evolutions are enriched with world knowledge, effectively bridging the gap between generation and understanding.

By consolidating 3D scene understanding and future scene generation within a single framework, HERMES establishes a unified representation that seamlessly accommodates both tasks, offering a holistic perspective on driving environments. This marks a significant step toward a unified DWM, demonstrating the feasibility of integrated driving understanding and generation. Extensive experiments validate the effectiveness of our HERMES in terms of both tasks. Notably, our method significantly reduces the error by 32.4%3 2 . 4 \%32.4% compared to the current state-of-theart (SOTA) method [65] for generation. Additionally, for the understanding task, our approach outperforms the SOTA [50] by 8.0%8 . 0 \%8.0% under the CIDEr metric on the challenging OmniDrive-nuScenes dataset [50].

Our major contributions can be summarized as follows: 1) In this paper, we propose HERMES, which tames the LLM to understand the autonomous driving scene and predict its evolutions simultaneously. To the best of our knowledge, this is the first world model that can unify the 3D understanding and generation task; 2) We introduce world queries to capture and integrate world knowledge from text tokens, ensuring that the generated scene evolutions are not only contextually aware but also enriched with world knowledge. This scheme effectively bridges the gap between the understanding and generation tasks, enabling a more coherent and accurate prediction of future scenes.

2. Related Work

World Models for Driving. Driving World Models (DWMs) [14] have gained considerable attention in autonomous driving for obtaining comprehensive environmental representation and predicting future states based on action sequences. Current research mainly focuses on the generation, whether in 2D [35, 53, 73] or 3D [36, 37].

Specifically, most pioneering 2D world models perform a video generation for driving scenarios. GAIA-1 [16] first introduced a learned simulator based on an autoregressive model. Recent work further leverages the large scale of data [21, 63, 68] and more powerful pre-training models, significantly enhancing generation quality regarding consistency [11, 54], resolution [12, 21], and controllability [25, 34, 56, 70]. Concurrently, some studies aim (图。1(a)),它们难以解释和描述环境、回答有关环境的问题, 或提供相关的上下文信息(即,视觉问答(VQA)、场景描述)。

最近,视觉‑语言模型(VLMs) [6, 27, 31]通过利用世界知识和因果推理能力,在通用视觉任务上取得了显著进展,并已成功应用于自动驾驶场景 [44, 50lo5 0 \mathrm { l _ { o } }50lo 如图1(b)所示,这些驾驶用VLM能够执行诸如回答关于驾驶环境的复杂问题、生成场景描述以及推理各实体之间关系等任务。然而,尽管它们提升了对当前驾驶环境的理解,但仍缺乏对场景如何演变的预测能力。该缺口限制了它们在自动驾驶中的有效性,而在自动驾驶中,三维场景理解和未来场景预测对于做出明智决策都是必要的。这自然引出了一个问题:如何将世界知识与未来场景演化无缝地整合到一个统一的世界模型中?

基于上述动机,本文提出了一个将理解与生成任务相连接的统一世界模型。我们的方法称为HER-MES,如图1(d)所示,与通常专注于生成或场景理解(例如VQA和描述文本)的传统方法不同。HERMES 将大语言模型(LLMs)的能力扩展到同时预测未来场景并理解大规模空间环境,尤其是自动驾驶中遇到的环境。然而,构建这样一个统一模型并非易事,因为需要克服若干关键挑战:

多视角下的大范围空间性。LLMs 通常面临最大令牌长度限制,特别是在自动驾驶中,需要处理来自周围的多视角(例如,nuScenes 数据集 [4]中的六视图影像)。将这些多视角影像直接转换为令牌会超出令牌限制,且无法捕捉不同视角之间的交互。为此,我们提出通过鸟瞰图(BEV)表示对输入进行分词。它带来了两个关键优势:1)BEV 能有效将周围视图压缩到统一的潜在空间,从而克服令牌长度限制,同时保留关键的空间信息。2)BEV 保留了视图之间的几何空间关系,使模型能够捕捉跨多视角的物体与主体之间的交互。

理解与生成之间的整合。将场景理解与生成统一的一个直接方法是共享 BEV 特征,并对理解(通过LLMs)和生成(通过未来生成器)分别应用独立模型,如图1© 所示。然而,该方法未能利用理解与生成之间潜在的相互作用。此外,

将这些任务分开处理会阻碍优化过程,导致次优性能。为了解决这一问题,我们提出使用原始 BEV 特征(在 LLMs 处理之前)初始化一组世界查询。这些查询随后通过 LLMs 中的因果注意力被文本标记提供的世界知识增强。因此,通过使用带有世界知识增强的查询通过当前到未来链接与经过 LLMs 处理的BEV 特征交互,我们确保生成的场景演化被世界知识丰富,有效地弥合了生成与理解之间的差距。

通过在单一框架内整合三维场景理解与未来场景生成,HERMES 建立起一个统一表示,能够无缝兼容这两项任务,提供对驾驶环境的整体视角。这标志着朝着统一 DWM 的重要一步,展示了集成驾驶理解与生成的可行性。大量实验验证了我们 HERMES 在两项任务上的有效性。值得注意的是,我们的方法在生成任务上将误差相比当前最先进方法(SOTA) [65]显著降低了 32.4%3 2 . 4 \%32.4% 。此外,在理解任务上,我们的方法在具有挑战性的 OmniDrive‑nuScenes 数据集 [50]上,按 CIDEr 指标比 SOTA [50] 提升了 8.0%8 . 0 \%8.0% 。

我们的主要贡献可归纳如下:1) 在本文中,我们提出了HERMES,它使大语言模型能够同时理解自动驾驶场景并预测其演变。据我们所知,这是第一个能够统一 3D 理解与生成任务的世界模型;2) 我们引入了世界查询以从文本标记中捕获并集成世界知识,确保生成的场景演化不仅具有上下文意识,而且富含世界知识。该方案有效弥合了理解与生成任务之间的差距,使对未来场景的预测更加连贯且准确。

2. 相关工作

驾驶世界模型。驾驶世界模型(DWMs) [14] 在自动驾驶中受到广泛关注,用于获取全面的环境表示并基于动作序列预测未来状态。目前的研究主要集中在生成方面,无论是 2D [35, 53, 73] 还是 3D [36, 37]。

具体来说,大多数开创性的二维世界模型会为驾驶场景执行视频生成。GAIA‑1 [16]首次引入了基于自回归模型的学习到的模拟器。近期工作进一步利用了大规模数据 [21, 63, 68] 和更强大的预训练模型,大幅提升了在一致性 [11, 54], 、分辨率 [12, 21], 和可控性[25, 34, 56, 70]方面的生成质量。与此同时,一些研究旨在

to generate 3D spatial information for future scenes to provide geometric representations that can benefit autonomous driving systems. Occworld [72] focuses on future occupancy generation and ego planning using spatial-temporal transformers, which has been adapted to other paradigms including diffusion [13, 47], rendering [2, 19, 60], and autoregressive transformer [55]. Additionally, some approaches [22, 57, 67, 76] propose future point cloud forecasting as a world model, among which, ViDAR [65] using images to predict future point clouds through a selfsupervised manner.

However, existing DWMs overlook the explicit understanding capacity of the driving environment. This paper aims to propose a unified world model that can both comprehend the scenario and generate scene evolution.

Large Language Models for Driving. Large Language Models (LLMs) exhibit impressive generalization and extensive world knowledge derived from vast data, showcasing remarkable capabilities across various tasks [9, 58, 69]. This has led researchers to explore their applications in autonomous driving, and current studies [8, 38, 43, 52] primarily focus on using LLMs to understand driving scenarios and make perceptual or decision-making outputs. For instance, DriveGPT4 [59] processes front-view video input to predict vehicle actions and provide justifications via an LLM. DriveLM [44] leverages LLMs for graph-based visual question-answering (VQA) and end-to-end driving. ELM [74] enhances the spatial perception and temporal modeling through space-aware pre-training and time-aware token selection. OmniDrive [50] introduces a benchmark with extensive VQA data labeled by GPT-4 [1] and utilizes Q-Former to integrate 2D pre-trained knowledge with 3D spatial. Despite significant advancements and the emergence of various language-based methods, the application of LLMs in driving remains mainly limited to understanding and text modeling. In this paper, we aim to tame the LLM to understand the autonomous driving scene and predict its future evolutions simultaneously.

3. Preliminaries

This section revisits the driving world models and the Bird’s Eye View representation as preliminary.

The Driving World Models (DWMs) seek to learn a general representation of the world from large-scale unlabeled driving data by forecasting future scenarios, enabling the model to grasp the data distribution of real situations. Specifically, given an observation Ot{ \mathcal { O } } _ { t }Ot at time ttt , the model forecasts information about the next observation Ot+1\mathcal { O } _ { t + 1 }Ot+1 . The framework of DWMs can be summarized as follows:

Lt=E(Ot),Lt+1=M(Lt),Ot+1=D(Lt+1), \mathcal { L } _ { t } = \pmb { \mathcal { E } } \left( \boldsymbol { \mathcal { O } } _ { t } \right) , \mathcal { L } _ { t + 1 } = \pmb { \mathcal { M } } \left( \mathcal { L } _ { t } \right) , \mathcal { O } _ { t + 1 } = \pmb { \mathcal { D } } \left( \mathcal { L } _ { t + 1 } \right) , Lt=E(Ot),Lt+1=M(Lt),Ot+1=D(Lt+1),

where ε\varepsilonε and KaTeX parse error: Undefined control sequence: \mathbfcal at position 1: \̲m̲a̲t̲h̲b̲f̲c̲a̲l̲ ̲{ D } represent the encoder and decoder for the scene, while the world predictor M\mathbf { \mathcal { M } }M maps the latent state Lt\scriptstyle { \mathcal { L } } _ { t }Lt to the next time step Lt+1\mathcal { L } _ { t + 1 }Lt+1 . Together, these components follow the workflow of OtLtLt+1Ot+1\mathcal { O } _ { t } \mathcal { L } _ { t } \mathcal { L } _ { t + 1 } \mathcal { O } _ { t + 1 }OtLtLt+1Ot+1 .

Bird’s-Eye View (BEV) has emerged recently as a unified representation offering a natural candidate view. The BEVFormer series [26, 61] exemplifies this approach by leveraging cross-attention to improve 3D-2D view transformation modeling, resulting in robust BEV representations. This BEV feature maintains geometric spatial relationships between views, enabling the model to capture interactions among objects and agents from various perspectives. Additionally, BEV representations are ideal for integrating visual semantics and surrounding geometry, making them wellsuited for understanding generation unification.

This paper focuses on unifying the 3D scene understanding and generation by using current multi-view as the observation to generate future point clouds, which inherently maintain accurate geometric relationships among objects and their environments within a BEV-based representation.

4. HERMES

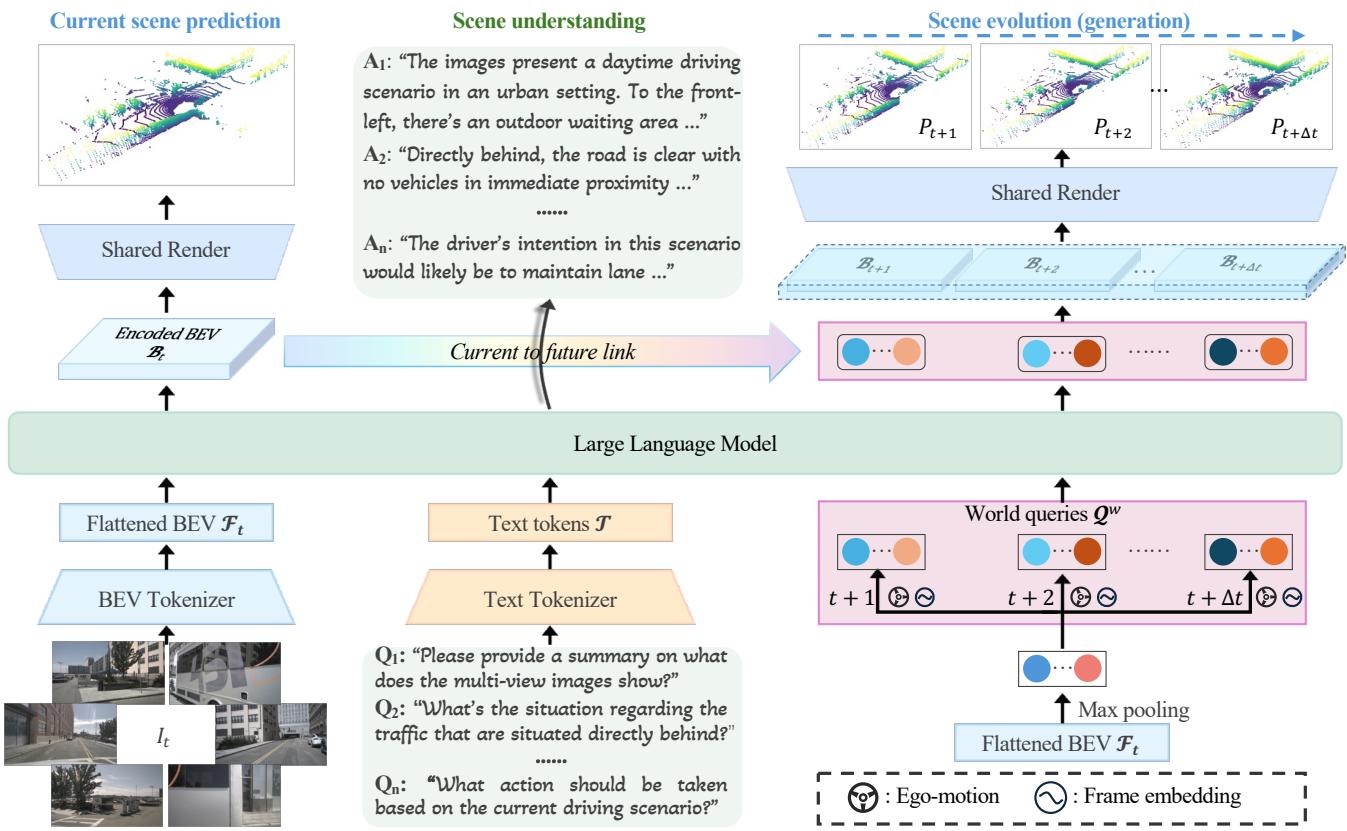

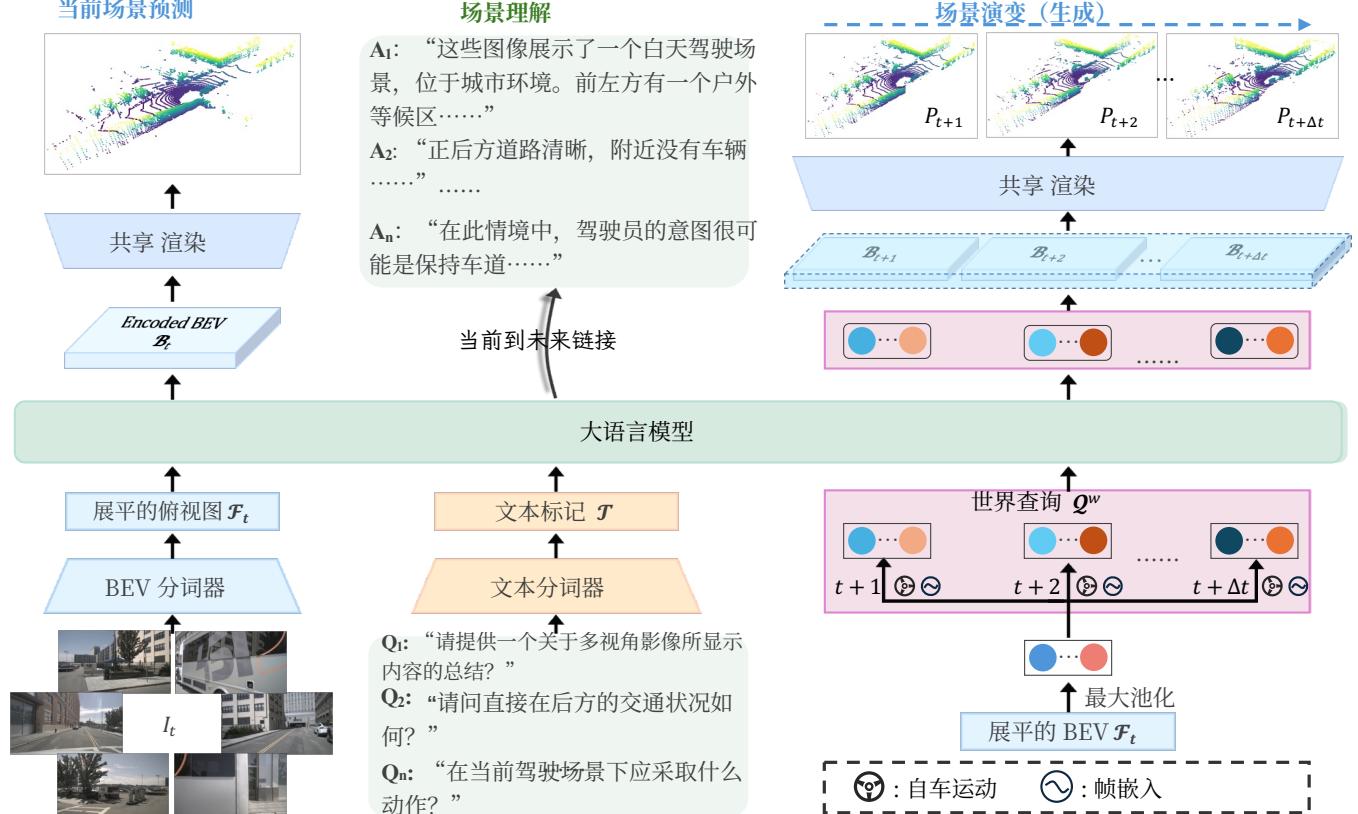

This paper presents HERMES, a unified framework for driving scenarios understanding and generating. Our HERMES serving as a world model that predicts scenes’ point cloud evolution based on image observations and facilitates detailed scene comprehension. The pipeline of our method is illustrated in Fig. 2. We begin with multi-view input images ItI _ { t }It , which are encoded for semantic information using a BEV-based tokenizer and then processed by a Large Language Model (LLM). The LLM predicts the next token based on user instructions to interpret the current autonomous driving scenario. We integrate world queries into the sequence to transfer world knowledge from the conversations to the generation task. The current to future link generates future BEV features, and the shared render predicts the current scene point cloud PtP _ { t }Pt (as an auxiliary task) and generates Δt\Delta tΔt future scenes from Pt+1P _ { t + 1 }Pt+1 to Pt+ΔtP _ { t + \Delta t }Pt+Δt .

4.1. World Tokenizer and Render

The world tokenizer encodes the world observation, i.e., the current multi-view images, into a compressed continuous BEV representation, which is then further processed by the LLM. Conversely, the Render [48, 62, 75] converts BEV features into point clouds to generate geometric information about the scenario. Both modules are detailed as follows.

BEV-based World Tokenizer. To preserve geometric spa-tial relationships between views and rich semantic infor-mation for LLM inputs, we adopt a BEV-based world tok-enizer E\mathcal { E }E . Specifically, the multi-view images ItI _ { t }It at time ttt arepassed through a CLIP image encoder [7, 33] and a singleframe BEVFormer v2 [61] without modification. The ob-tained BEV feature F bevt Ftbev∈Rw×h×c\mathcal { F } _ { t } ^ { b e v } \in \mathbb { R } ^ { w \times h \times c }Ftbev∈Rw×h×c captures the semanticand geometric information, where www and hhh denote the scaleof encoded scene, with larger values indicating greater de-生成用于未来场景的三维空间信息,以提供可惠及自动驾驶系统的几何表示。 [72] Occworld 专注于使用时空变换器进行未来占据生成和自车规划,该方法已被适配到包括扩散 [13, 47], 渲染 [2, 19, 60], 和自回归变换器 [55]等其他范式。此外,一些方法[22, 57, 67, 76] 将未来点云预测作为世界模型来提出,其中,ViDAR [65] 以自监督方式利用图像预测未来点云。

然而,现有的驾驶世界模型(DWMs)忽视了对驾驶环境明确的理解能力。本文旨在提出一个统一的世界模型,既能理解场景,又能生成场景演变。

Large LanguageModels forDriving. 大型语言模型(LLMs)凭借来自海量数据的广泛世界知识和出色的泛化能力,在多种任务上展现了卓越能力 [9, 58, 69]。这推动研究者探索其在自动驾驶中的应用,当前研究[8, 38, 43, 52] 主要集中于利用LLMs理解驾驶场景并产生感知或决策类输出。例如,DriveGPT4 [59] 处理前视视频输入以预测车辆动作并通过LLM提供解释。DriveLM [44]利用LLMs进行基于图的视觉问答(VQA)和端到端驾驶。ELM [74] 通过具有空间感知的预训练和时间感知的代币选择,增强了空间感知和时序建模。OmniDrive [50] 引入了由GPT‑4 [1] 标注的大规模VQA基准,并利用Q‑Former将二维预训练知识与三维空间融合。尽管取得了显著进展并涌现出多种基于语言的方法,LLMs在驾驶领域的应用仍主要局限于理解和文本建模。本文旨在调教LLM,使其既能理解自动驾驶场景,又能同时预测其未来演变。

3. 预备知识

本节作为预备,回顾驾驶世界模型和鸟瞰图表示。

The DrivingWorld Models (DWMs) 旨在通过预测未来场景,从大规模未标注的驾驶数据中学习对世界的通用表征,使模型能够掌握真实情境的数据分布。具体地,给定时刻 ttt 的观测 Ot{ \mathcal { O } } _ { t }Ot ,模型预测下一次观测 Ot+1\mathcal { O } _ { t + 1 }Ot+1 的相关信息。DWMs 的框架可概括如下:

Lt=E(Ot),Lt+1=M(Lt),Ot+1=D(Lt+1),\mathcal { L } _ { t } = \pmb { \mathcal { E } } \left( \boldsymbol { \mathcal { O } } _ { t } \right) , \mathcal { L } _ { t + 1 } = \pmb { \mathcal { M } } \left( \mathcal { L } _ { t } \right) , \mathcal { O } _ { t + 1 } = \pmb { \mathcal { D } } \left( \mathcal { L } _ { t + 1 } \right) ,Lt=E(Ot),Lt+1=M(Lt),Ot+1=D(Lt+1), (1)where ε\varepsilonε and KaTeX parse error: Undefined control sequence: \mathbfcal at position 1: \̲m̲a̲t̲h̲b̲f̲c̲a̲l̲ ̲{ D } represent the 编码器 and 解码器 for thescene, while the world predictor M\boldsymbol { \mathscr { M } }M maps the 潜在状态

Lt\mathcal { L } _ { t }Lt 到下一个时间步 Lt+1∘\mathcal { L } _ { t + 1 \circ }Lt+1∘ 这些组件共同遵循OtLtLt+1Ot+1\mathcal { O } _ { t } \mathcal { L } _ { t } \mathcal { L } _ { t + 1 } \mathcal { O } _ { t + 1 }OtLtLt+1Ot+1 的工作流程。

Bird’s-Eye View (BEV)作为一种统一表征近来逐渐兴起,提供了一个自然的候选视角。BEVFormer系列 [26, 61] 通过利用交叉注意力来改进 3D‑2D 视图变换建模,从而得到稳健的 BEV 表示。该鸟瞰图特征保持了视图之间的几何空间关系,使模型能够从不同视角捕捉对象和主体之间的相互作用。此外,BEV 表示非常适合将视觉语义和周边几何信息整合在一起,使其在理解与生成的统一方面表现良好。

本文旨在通过使用当前多视角作为观测来生成未来点云,从而统一 3D 场景理解与生成;在基于BEV 的表示中,这些点云固有地保持了对象与其环境之间的精确几何关系。

4. HERMES

本文提出了 HERMES,一个用于驾驶场景理解与生成的统一框架。我们的 HER-MES 作为一个世界模型,基于图像观测预测场景的点云演化并促进对场景的详细理解。我们方法的流程如图 2所示。我们从多视图输入图像 ItI _ { t }It 开始,这些图像使用基于BEV的分词器进行语义信息编码,然后由大语言模型处理。大语言模型根据用户指令预测下一个标记以解释当前的自动驾驶场景。我们将世界查询整合到序列中,以便将世界知识从对话转移到生成任务中。当前到未来链接生成未来的 BEV 特征,且共享 渲染 预测当前场景的点云 PtP _ { t }Pt (作为一个辅助任务)并从 Δt\Delta tΔt 生成未来场景,输入为Pt+1P _ { t + 1 }Pt+1 至 Pt+Δt∘P _ { t + \Delta t \circ }Pt+Δt∘ 。

4.1. World Tokenizer 和 Render

world tokenizer 将世界观测(即当前的多视角影像)编码为压缩的连续 BEV 表示,然后由大语言模型进一步处理。相反,Render [48, 62, 75] 将 BEV 特征转换为点云,以生成关于场景的几何信息。两个模块详述如下。

基于 BEV 的世界分词器。 为在 LLM 输入中保留视图之间的几何空间关系和丰富的语义信息,我们采用了基于 BEV 的世界分词器 E∘\scriptstyle { \mathcal { E } } _ { \circ }E∘ 具体地,时间 ttt 的多视角影像 ItI _ { t }It 被传入 CLIP 图像编码器 [7, 33] 和未经修改的单帧 BEVFormer v2 [61] 。所获得的 BEV 特征Ftbev∈Rw×h×c\mathcal { F } _ { t } ^ { b e v } \in \mathbb { R } ^ { w \times h \times c }Ftbev∈Rw×h×c 捕捉了语义和几何信息,其中 www 和hhh 表示被编码场景的尺度,值越大表示尺度越大。

Figure 2. The pipeline of our HERMES. The BEV tokenizer converts multi-view ItI _ { t }It into flattened BEV Ft\mathcal { F } _ { t }Ft , which are fed into the large language model (LLM). The LLM interprets user instructions τ\tauτ and generates textual responses by leveraging its understanding of driving scenes as world knowledge. A group of world queries Qw\pmb { \mathcal { Q } } ^ { w }Qw are appended to the LLM input sequence. Encoded BEV Bt\pmb { \mathscr { B } } _ { t }Bt and world queries generate future BEV (Bt+1,⋅⋅⋅,Bt+Δt)( \pmb { \mathcal { B } } _ { t + 1 } , \cdot \cdot \cdot , \pmb { \mathcal { B } } _ { t + \Delta t } )(Bt+1,⋅⋅⋅,Bt+Δt) via a current to future link, and the shared Render generates point clouds evolution.

tail, and ccc is the channel dimension of BEV. However, such a feature, often containing tens of thousands of tokens, is too large for the LLM [66]. To address this, we implement a down-sampling block that reduces Ftbev\mathcal { F } _ { t } ^ { b e v }Ftbev by two times, resulting in a compressed shape of Rw4×h4×(c×4)\textstyle \mathbb { R } ^ { \frac { w } { 4 } \times \frac { h } { 4 } \times ( c \times 4 ) }R4w×4h×(c×4) . When the LLM is required, the down-sampled feature will be flattened and projected to Ft∈RLbev×Cˉ\mathcal { F } _ { t } \in \mathbb { R } ^ { L _ { b e v } \times \bar { C } }Ft∈RLbev×Cˉ , where Lbev=w4×h4\begin{array} { r } { L _ { b e v } = \frac { w } { 4 } \times \frac { h } { 4 } } \end{array}Lbev=4w×4h .

BEV-to-Point Render. We introduce a simple BEV-toPoint Render R\mathcal { R }R , aiming to map the aforementioned downsampled feature to the scene point cloud PtP _ { t }Pt . Specifically, we first up-sample the compressed BEV feature (or encoded BEV Bt∈RLbev×(c×4)\pmb { \mathcal { B } } _ { t } \in \mathbb { R } ^ { L _ { b e v } \times ( c \times 4 ) }Bt∈RLbev×(c×4) after processing of the LLM and an out-projection) to the shape of Rw×h×c\mathbb { R } ^ { w \times h \times c }Rw×h×c using nearest neighbor interpolation and convolutions. To address the absence of height information in the BEV feature, we reshape the input to Rw×h×z×cz\mathbb { R } ^ { w \times h \times z \times \frac { c } { z } }Rw×h×z×zc by adding an extra height dimension. We then apply a series of 3D convolutions to reconstruct the volumetric feature Ftvol∈Rw×h×z×c′\mathcal { F } _ { t } ^ { v o l } \in \mathbb { R } ^ { w \times h \times z \times c ^ { \prime } }Ftvol∈Rw×h×z×c′ , where zzz is the height and c′c ^ { \prime }c′ is the output channel dimension. Finally, we construct rays {rk}k=1K\{ \mathbf { r } _ { k } \} _ { k = 1 } ^ { K }{rk}k=1K according to the LiDAR setup of the dataset and use differentiable volume rendering to compute the depth for each ray.

The rendering process models the environment as an implicit signed distance function (SDF) field to capture intricate geometric details accurately [48, 62, 75]. Given a ray rk\mathbf { r } _ { k }rk originating from o\mathbf { o }o and directed along tk\mathbf { t } _ { k }tk , we discretize it into nnn sampled points {pi=o+ditk∣i=\{ \mathbf { p } _ { i } = \mathbf { o } + d _ { i } \mathbf { t } _ { k } | i ={pi=o+ditk∣i= 1,⋯ ,n1 , \cdots , n1,⋯,n and 0≤di<di+1}0 \leq d _ { i } < d _ { i + 1 } \}0≤di<di+1} , where pi\mathbf { p } _ { i }pi corresponds to a location in 3D space, determined by its depth did _ { i }di along the ray. For each sampled point, we retrieve a local feature embedding fi\mathbf { f } _ { i }fi from the volumetric representation Ftvol\mathcal { F } _ { t } ^ { v o l }Ftvol via trilinear interpolation. Subsequently, a shallow MLP ϕSDF\phi _ { \mathrm { S D F } }ϕSDF is used to predict the SDF value si=ϕSDF(pi,fi)s _ { i } = \phi _ { \mathrm { S D F } } ( \mathbf { p } _ { i } , \mathbf { f } _ { i } )si=ϕSDF(pi,fi) . With the predicted SDF values, the rendered depth d(rk)d ( \mathbf { r } _ { k } )d(rk) is computed through a weighted integration of all sampled depths by d~(rk)=∑i=1nwidi\begin{array} { r } { \tilde { d } ( \mathbf { r } _ { k } ) = \sum _ { i = 1 } ^ { n } w _ { i } d _ { i } } \end{array}d~(rk)=∑i=1nwidi , where wi=Tiαiw _ { i } = T _ { i } \alpha _ { i }wi=Tiαi [48] represents an unbiased, occlusion-aware weight. The transmittance Ti=T _ { i } =Ti= ∏j=1i−1(1−αj)\textstyle \prod _ { j = 1 } ^ { i - 1 } ( 1 - \alpha _ { j } )∏j=1i−1(1−αj) accumulates the survival probability of photons up to the jjj -th sample, αi = max(σt(si)−σt(si+1)σt(si),0)\begin{array} { r } { \alpha _ { i } \ = \ \operatorname* { m a x } ( \frac { \sigma _ { t } ( s _ { i } ) - \sigma _ { t } ( s _ { i + 1 } ) } { \sigma _ { t } ( s _ { i } ) } , 0 ) } \end{array}αi = max(σt(si)σt(si)−σt(si+1),0) indicates the opacity, and σt(x)=(1+e−tx)−1\sigma _ { t } ( x ) = ( 1 + e ^ { - t x } ) ^ { - 1 }σt(x)=(1+e−tx)−1 is a sigmoid modulated by a learnable parameter ttt .

4.2. Unification

This section introduces the unification of world understand-ing and future scene generation within our HERMES. TheLarge Language Model (LLM) interprets driving scenarios尾部,且 ccc 是BEV的通道维度。然而,这样的特征通常包含数以万计的代币,对于大语言模型来说过大 [66]∘[ 6 6 ] _ { \circ }[66]∘ 为了解决这一问题,我们实现了一个下采样模块,将Ftbev\mathcal { F } _ { t } ^ { b e v }Ftbev 降低两倍,得到压缩后的形状为 R w×Ω4h×(c×4)c\mathbb { R } ^ { \ w } \times { \mathsf { \Omega } } _ { 4 } ^ { h } \times ( c \times 4 ) _ { \mathsf { c } }R w×Ω4h×(c×4)c 。当需要大语言模型时,下采样后的特征将被展平并投影到 Ft∈RLbev×C\mathcal { F } _ { t } \in \mathbb { R } ^ { L _ { b e v } \times C }Ft∈RLbev×C ,其中 Lbev=Π4w×Π4hL _ { b e v } = \mathbf { \Pi } _ { 4 } ^ { w } \times \mathbf { \Pi } _ { 4 } ^ { h }Lbev=Π4w×Π4h 。BEV 到点渲染器。我们提出了一个简单的 BEV‑to‑Point Render R\mathcal { R }R ,旨在将上述下采样的特征映射到场景点云 Pt∘P _ { t \circ }Pt∘ 。具体来说,我们首先使用最近邻插值和卷积将压缩的 BEV 特征(或经过 LLM 处理和一次外投影后的编码的俯视图 Bt∈RLbev×(c×4)\pmb { \mathcal { B } } _ { t } \in \mathbb { R } ^ { L _ { b e v } \times ( c \times 4 ) }Bt∈RLbev×(c×4) )上采样到Rw×h×c\mathbb { R } ^ { w \times h \times c }Rw×h×c 的形状。为了解决 BEV 特征中缺乏高度信息的问题,我们通过添加额外的高度维度将输入重塑为Rw×h×z×Φzc\mathbb { R } ^ { w \times h \times z \times \mathbf { \Phi } _ { z } ^ { c } }Rw×h×z×Φzc 。然后我们应用一系列三维卷积来重建体积特征 Ftvol∈Rw×h×z×c′\mathcal { F } _ { t } ^ { v o l } \in \mathbb { R } ^ { w \times h \times z \times c ^ { \prime } }Ftvol∈Rw×h×z×c′ ,其中 zzz 是高度, c′c ^ { \prime }c′ 是输出通道维度。最后,我们根据数据集的 LiDAR 设置构建射线 {rk}k=1K\{ \mathbf { r } _ { k } \} _ { k = 1 } ^ { K }{rk}k=1K ,并使用可微体积渲染来计算每条射线的深度。

图 2. 我们的 HERMES 流程。BEV 分词器将多视角 ItI _ { t }It 转换为展平的 BEV Ft\mathcal { F } _ { t }Ft ,并输入到大语言模型 (LLM)。LLM 解读用户指令 τ\tauτ 并利用其对驾驶场景的世界知识生成文本响应。一组世界查询 Qw\pmb { \mathcal { Q } } ^ { w }Qw 被附加到 LLM 的输入序列中。编码的俯视图 Bt\pmb { \mathscr { B } } _ { t }Bt 和世界查询通过一个 current to future link 生成未来 BEV (Bt+1,⋅⋅⋅,Bt+Δt)( \pmb { \mathscr { B } } _ { t + 1 } , \cdot \cdot \cdot , \pmb { \mathscr { B } } _ { t + \Delta t } )(Bt+1,⋅⋅⋅,Bt+Δt) ,共享渲染器生成点云的演化。

渲染过程将环境建模为一个⋯给定一条从 xkx _ { k }xk 出发、起点为o 并沿 tk.\mathbf { t } _ { k } .tk. 方向的射线,我们将其离散为 nnn 个采样点 {pi=o+ditk\{ { \bf p } _ { i } = { \bf o } + d _ { i } { \bf t } _ { k }{pi=o+ditk | i=1,⋯ ,ni = 1 , \cdots , ni=1,⋯,n 和0≤di<di+1}0 \leq d _ { i } < d _ { i + 1 } \}0≤di<di+1} ,其中 pi\mathbf { p } _ { i }pi 对应于三维空间中的一个位置,由沿射线的深度 did _ { i }di 决定。对于每个采样点,我们通过体素表示 Ftvol\mathcal { F } _ { t } ^ { v o l }Ftvol 的三线性插值检索局部特征嵌入 fi\mathbf { f } _ { i }fi 。随后,使用一个浅层多层感知器(MLP) ϕSDF\phi _ { \mathrm { S D F } }ϕSDF 来预测SDF值si=ϕSDF(pi,fi)∘s _ { i } = \phi _ { \mathrm { S D F } } ( \mathbf { p } _ { i } , \mathbf { f } _ { i } ) _ { \circ }si=ϕSDF(pi,fi)∘ 。利用预测的SDF值,通过对所有采样深度按 d~(rk)=∑i=1nwidi\begin{array} { r } { \tilde { d } ( \mathbf { r } _ { k } ) = \sum _ { i = 1 } ^ { n } w _ { i } d _ { i } } \end{array}d~(rk)=∑i=1nwidi 加权积分来计算渲染深度 d(rk)d ( \mathbf { r } _ { k } )d(rk) ,其中 wi=Tiαiw _ { i } = T _ { i } \alpha _ { i }wi=Tiαi [48] 表示无偏且考虑遮挡的权重。透射率∏j=1i−1(1−αj)\textstyle \prod _ { j = 1 } ^ { i - 1 } ( 1 - \alpha _ { j } )∏j=1i−1(1−αj) 累

积光子到第 jjj 个样本的存活概率,

αi=max(σt(si)−σt(si+1),0).\alpha _ { i } = \operatorname* { m a x } ( { { \sigma } _ { t } ( s _ { i } ) - { \sigma } _ { t } ( s _ { i + 1 } ) } , 0 ) .αi=max(σt(si)−σt(si+1),0). 表示不透明度,

σt(x)=(1+e−tx)−1\sigma _ { t } ( x ) = ( 1 + e ^ { - t x } ) ^ { - 1 }σt(x)=(1+e−tx)−1 是由可学习参数 ttt 调制的sigmoid。

4.2. 统一

本节介绍了在我们 HERMES 中对世界理解与未来场 景生成的统一。大语言模型(LLM)解释驾驶场景 from world tokenizer outputs (Ft)( \mathcal { F } _ { t } )(Ft) based on user instructions. Δt\Delta tΔt groups of world queries gather knowledge from conversations, aiding in generating scene evolution.

Large Language Model. As shown in Fig. 2, the LLM is pivotal to our HERMES, modeling BEV inputs Ft\mathcal { F } _ { t }Ft , parsing user instructions, acquiring world knowledge from real driving scenario inquiries, and generating predictions. We utilize the LLM within the widely used InternVL2 [5].

Understanding. Following prior work [31, 32], we project the flattened BEV to a shape of RLbev×C\mathbb { R } ^ { L _ { b e v } \times C }RLbev×C and into the feature space of the LLM using a two-layer MLP, where LbevL _ { b e v }Lbev is the input BEV length, and CCC is the channel dimension of LLM. For prompts on the current scene, we tokenize them into distinct vocabulary indices and text tokens τ\tauτ for processing by the LLM. Like existing multi-modal language models [5, 27], HERMES responds to user queries about the driving environment, providing scene descriptions and answers to visual questions. The LLM understands the scene through a next-token prediction approach.

Generation. Predicting and generating future changes based on observations of the current moment requires the model to have an exhaustive understanding of the world. To endow the LLM with future-generation capability, we propose a world query technique, which links world knowledge to future scenarios and improves information transfer between the LLM and the Render. As in Fig. 2, we outline the generation process in terms of LLM input and output.

For the input to the LLM, we utilize Δt\Delta tΔt groups of world queries Qw−∈R(Δt×n)×C\begin{array} { r } { \mathcal { Q } ^ { w ^ { - } } \in \mathbb { R } ^ { ( \Delta t \times n ) \times C } } \end{array}Qw−∈R(Δt×n)×C , where nnn is the number of queries per group and CCC represents the channel dimension of LLM. We emphasize the importance of proper feature initialization for effective learning. Thus, we employ a max pooling to derive the world queries from the peak of the BEV feature Ft\mathcal { F } _ { t }Ft , yielding Q∈Rn×(c×4)\mathcal { Q } \in \mathbb { R } ^ { n \times ( c \times 4 ) }Q∈Rn×(c×4) . The Q\mathfrak { Q }Q is then copied Δt\Delta tΔt times as query groups {Qi∣i=1,⋯ ,Δt}\{ \pmb { \mathscr { Q } } _ { i } | i = 1 , \cdots , \Delta t \}{Qi∣i=1,⋯,Δt} . To further enable controllable future generation, we encode the ego-motion condition to et+ie _ { t + i }et+i , which describes the planned future positions and heading of the ego-vehicle from the current to the iii -th frame into high-dimensional embeddings. The ego-motion information et+ie _ { t + i }et+i is then added to the corresponding queries Qi\pmb { \mathcal { Q } } _ { i }Qi . Additionally, a frame embedding FE∈RΔtˉ×(ˉc×4)\mathrm { F E } \in \mathbb { R } ^ { \bar { \Delta t } \times \bar { ( } c \times 4 ) }FE∈RΔtˉ×(ˉc×4) is incorporated by the broadcast mechanism to denote the prediction frames for which group of world queries is responsible. The world queries Qw\pmb { \mathcal { Q } } ^ { w }Qw and flattened BEV Ft\mathcal { F } ^ { t }Ft share the language-space projection layer (i.e., MLP) to project from c×4c \times 4c×4 to CCC for the language model channel. The Qw∈R(Δt×n)×C\mathcal { Q } ^ { w } \in \mathbb { R } ^ { ( \Delta t \times n ) \times C }Qw∈R(Δt×n)×C can be computed as:

Qw=MLP(Concat[Qi+et+i ∣i={1,⋯ ,Δt}]+FE). \pmb { \mathscr { Q } } ^ { w } = \mathrm { M L P } ( \mathrm { C o n c a t } [ \pmb { \mathscr { Q } } _ { i } + e _ { t + i } \ | i = \{ 1 , \cdots , \Delta t \} ] + \mathrm { F E } ) . Qw=MLP(Concat[Qi+et+i ∣i={1,⋯,Δt}]+FE).

The LLM’s causal attention mechanism (i.e., the later token can access the earlier information) allows world queries to access world knowledge derived from the understanding process. After being processed by the LLM feed-forwardly, the encoded BEV feature and world quires are projected by a shared two-layer MLP from the channel dimension of LLM CCC back to the channel of c×4c \times 4c×4 . Note that each group of world queries contains only nnn queries, which provide a sparse view of the future world, complicating the reconstruction of the future scene by the world Render. To address this, we propose the current to future link module, which employs cross-attention layers to inject world knowledge for future BEV features. Specifically, the current to future link module contains 3 cross-attention blocks for generating future BEV features. Each cross-attention block includes a cross-attention layer that uses the encoded BEV Bt\pmb { { \cal B } } _ { t }Bt from the LLM output as the query, with world queries for each scene serving as the value and key. A self-attention layer and a feed-forward network further process spatial information. The encoded BEV (Bt)( { \pmb { { \cal B } } } _ { t } )(Bt) and generated future BEV features (Bt+1,⋅⋅⋅,Bt+Δt)( \pmb { \mathscr { B } } _ { t + 1 } , \cdot \cdot \cdot , \pmb { \mathscr { B } } _ { t + \Delta t } )(Bt+1,⋅⋅⋅,Bt+Δt) are sent to a shared world Render and obtain point cloud from PtP _ { t }Pt to Pt+ΔtP _ { t + \Delta t }Pt+Δt .

4.3. Training Objectives

To perform auto-regressive language modeling, we employ Next Token Prediction (NTP) to maximize the likelihood of text tokens, following the standard language objective:

LN=−∑i=1logP(Ti∣Ft,T1,⋅⋅⋅,Ti−1;Θ), \mathcal { L } _ { N } = - \sum _ { i = 1 } \log P \left( \mathcal { T } _ { i } | \mathcal { F } _ { t } , \mathcal { T } _ { 1 } , \cdot \cdot \cdot , \mathcal { T } _ { i - 1 } ; \boldsymbol { \Theta } \right) , LN=−i=1∑logP(Ti∣Ft,T1,⋅⋅⋅,Ti−1;Θ),

where P(⋅∣⋅)P \left( \cdot | \cdot \right)P(⋅∣⋅) represents the conditional probability modeled by the weights Θ\ThetaΘ , Ft\mathcal { F } _ { t }Ft is the flattened BEV feature for the input frame, and τi\tau _ { i }τi denotes the iii -th text token.

For point cloud generation, we supervise the depths of various rays d(rk)d ( \mathbf { r } _ { k } )d(rk) using only L1 loss:

LD=∑i=0Δtλi1Ni∑k=0Ni∣d(rk)−d~(rk)∣, \mathcal { L } _ { D } = \sum _ { i = 0 } ^ { \Delta t } \lambda _ { i } \frac { 1 } { N _ { i } } \sum _ { k = 0 } ^ { N _ { i } } \left| d ( \mathbf { r } _ { k } ) - \tilde { d } ( \mathbf { r } _ { k } ) \right| , LD=i=0∑ΔtλiNi1k=0∑Ni d(rk)−d~(rk) ,

where λi\lambda _ { i }λi is the loss weight for frame t+it + it+i , and NiN _ { i }Ni is the number of rays in the point clouds for frame t+it + it+i . The total loss for HERMES is given by L=LN+10LD\mathcal { L } = \mathcal { L } _ { N } + 1 0 \mathcal { L } _ { D }L=LN+10LD .

5. Experiments

5.1. Dataset and Evaluation Metric

Datasets. 1) NuScenes [4] is a widely used autonomous driving dataset, which includes 700 training scenes, 150 validation scenes, and 150 test scenes. We use six images and the point clouds captured by surrounding cameras and the LiDAR, respectively. 2) NuInteract [71] is a newly proposed language-based driving dataset with dense captions for each image and scene. With ∼1.5M{ \sim } 1 . 5 \mathbf { M }∼1.5M annotations, it supports various tasks, such as 2D perception and 3D visual grounding. 3) OmniDrive-nuScenes [50] supplements nuScenes with high-quality caption and visual questionanswering (QA) text pairs generated by GPT4. Considering

来自世界分词器输出 (Ft)( \mathcal { F } _ { t } )(Ft) ),并根据用户指令操作。 Δt\Delta tΔt

组世界查询从对话中汇集知识,辅助生成场景演变。

大语言模型。 如图 2所示,LLM 是我们HERMES 的核心,负责对 BEV 输入 Ft\mathcal { F } _ { t }Ft 建模、解析用户指令、从真实驾驶场景查询中获取世界知识并生成预测。我们在广泛使用的 InternVL2 [5]中使用该 LLM。

理解。继先前工作 [31, 32], 之后,我们将展平的俯视图投影为形状 RLbev×C\mathbb { R } ^ { L _ { b e v } \times C }RLbev×C ,并通过两层 MLP 映射到LLM 的特征空间,其中 LbevL _ { b e v }Lbev 是输入 BEV 的长度, CCC 是 LLM 的通道维度。对于当前场景的提示词,我们将其标记化为不同的词表索引和用于 LLM 处理的文本标记τ\tauτ 。像现有的多模态语言模型 [5, 27], 一样,HERMES 对关于驾驶环境的用户查询作出回应,提供场景描述并回答视觉问题。LLM 通过下一个标记预测的方法来理解场景。

生成。基于对当前时刻观测的预测和生成未来变化,需要模型对世界有详尽的理解。为了赋予大语言模型未来生成能力,我们提出了一种世界查询技术,该技术将世界知识与未来场景连接起来,并改善大语言模型与Render之间的信息传递。如图 2所示,我们从大语言模型的输入和输出角度概述了生成过程。

作为大语言模型的输入,我们利用 Δt\Delta tΔt 组世界查询Qw∈R(Δt×n)×C{ \mathcal { Q } } ^ { w } \in \mathbb { R } ^ { ( \Delta t \times n ) \times C }Qw∈R(Δt×n)×C ,其中 nnn 是每组查询的数量, CCC 表示大语言模型的通道维度。我们强调适当特征初始化对于有效学习的重要性。因此,我们采用最大池化从鸟瞰图特征 Ft\mathcal { F } _ { t }Ft 的峰值处提取世界查询,得到Q∈Rn×(c×4)c\pmb { \mathcal { Q } } \in \mathbb { R } ^ { n \times ( c \times 4 ) } \mathrm { \sf c }Q∈Rn×(c×4)c 。然后将 Q\mathfrak { Q }Q 复制 Δt\Delta tΔt 次作为查询组{Qi∣i=1,⋯ ,Δt}\{ \mathcal { Q } _ { i } | i = 1 , \cdots , \Delta t \}{Qi∣i=1,⋯,Δt} 。为了进一步实现可控的未来生成,我们将自车运动条件编码为 et+ie _ { t + i }et+i ,它把从当前帧到第 iii 帧的自车计划的未来位置和航向描述为高维嵌入。自车运动信息 et+ie _ { t + i }et+i 随后被加到对应的查询 Qi\pmb { \mathcal { Q } } _ { i }Qi 上。此外,通过广播机制加入帧嵌 λFE∈RΔt×(c×4)\lambda _ { \mathrm { F E } } \in \mathbb { R } ^ { \Delta t \times ( c \times 4 ) }λFE∈RΔt×(c×4) 以标示每组世界查询负责预测的帧。世界查询 Qw\pmb { \mathcal { Q } } ^ { w }Qw 与展平的俯视图 Ft\mathcal { F } ^ { t }Ft 共享语言空间投影层(即多层感知器)以将 c×4c \times 4c×4 投影到 CCC 为语言模型通道。 Qw∈R(Δt×n)×C\mathcal { Q } ^ { w } \in \mathbb { R } ^ { ( \Delta t \times n ) \times C }Qw∈R(Δt×n)×C 可按如下方式计算:

Qw=MLP(Concat[Qi+et+i ∣i={1,⋯ ,Δt}]+FE). \pmb { \mathscr { Q } } ^ { w } = \mathrm { M L P } ( \mathrm { C o n c a t } [ \pmb { \mathscr { Q } } _ { i } + e _ { t + i } \ | i = \{ 1 , \cdots , \Delta t \} ] + \mathrm { F E } ) . Qw=MLP(Concat[Qi+et+i ∣i={1,⋯,Δt}]+FE).

LLM 的因果注意力机制(即后来的标记可以访问信息较早的标记)允许世界查询访问源自理解过程的世界知识。在被 LLM 前馈处理之后,

编码的 BEV 特征和世界查询通过一个共享的两层MLP 从 LLM 的通道维度 CCC 投影回 c×4c \times 4c×4 的通道维度。请注意,每组世界查询仅包含 nnn 个查询,它们提供了对未来世界的稀疏视图,使得世界渲染器重建未来场景更加复杂。为了解决这一点,我们提出了 current to futurelink 模块,该模块使用交叉注意力层将世界知识注入到未来 BEV 特征中。具体而言,currentto fu-ture link 模块包含 3 个用于生成未来 BEV 特征的交叉注意力块。每个交叉注意力块都包括一个交叉注意力层,该层使用来自LLM 输出的编码的 BEV Bt\pmb { { \cal B } } _ { t }Bt 作为查询,将每个场景的世界查询作为值和键。随后自注意力层和前馈网络进一步处理空间信息。编码的 BEV( (Bt)\left( \pmb { { B } } _ { t } \right)(Bt) )和生成的未来 BEV 特征 (Bt+1,⋅⋅⋅,Bt+Δt)( \pmb { \mathscr { B } } _ { t + 1 } , \cdot \cdot \cdot , \pmb { \mathscr { B } } _ { t + \Delta t } )(Bt+1,⋅⋅⋅,Bt+Δt) 被送入共享的世界渲染器,并从PtP _ { t }Pt 到 Pt+ΔtP _ { t + \Delta t }Pt+Δt 获取点云。

4.3. 训练目标

为了执行自回归语言建模,我们采用下一个标记预测(NTP)来最大化文本标记的似然,遵循标准的语言目标:

LN=−∑i=1logP(Ti∣Ft,T1,⋅⋅⋅,Ti−1;Θ), \mathcal { L } _ { N } = - \sum _ { i = 1 } \log P \left( \mathcal { T } _ { i } | \mathcal { F } _ { t } , \mathcal { T } _ { 1 } , \cdot \cdot \cdot , \mathcal { T } _ { i - 1 } ; \boldsymbol { \Theta } \right) , LN=−i=1∑logP(Ti∣Ft,T1,⋅⋅⋅,Ti−1;Θ),

其中 P(⋅∣⋅)P ( \cdot | \cdot )P(⋅∣⋅) 表示由权重 Θ\ThetaΘ 建模的条件概率, Ft\mathcal { F } _ { t }Ft 是输入帧的展平的 BEV 特征,且 τi\boldsymbol { \tau } _ { i }τi 表示第 iii 个文本标记。

对于点云生成,我们仅使用 L1 损失监督各条射线 d(rk)d ( \mathbf { r } _ { k } )d(rk) 的深度:

LD=∑i=0Δtλi1Ni∑k=0Ni∣d(rk)−d~(rk)∣, \mathcal { L } _ { D } = \sum _ { i = 0 } ^ { \Delta t } \lambda _ { i } \frac { 1 } { N _ { i } } \sum _ { k = 0 } ^ { N _ { i } } \left| d ( \mathbf { r } _ { k } ) - \tilde { d } ( \mathbf { r } _ { k } ) \right| , LD=i=0∑ΔtλiNi1k=0∑Ni d(rk)−d~(rk) ,

其中 λi\lambda _ { i }λi 是帧 t+it + it+i 的损失权重, NiN _ { i }Ni 是帧 t+it + it+i 的点云中光线的数量。HERMES 的总损失表示为 L=LN+10LD∘\mathcal { L } = \mathcal { L } _ { N } + 1 0 \mathcal { L } _ { D \circ }L=LN+10LD∘

5. 实验

5.1. 数据集与评估指标

数据集。 1) NuScenes [4] 是一个被广泛使用的自动驾驶数据集,包含700个训练场景、150个验证场景和150个测试场景。我们分别使用由环绕摄像头和激光雷达采集的六张图像和点云。2) NuInteract [71]是一个新提出的基于语言的驾驶数据集,为每张图像和场景提供密集的描述文本。拥有 ∼1.5M{ \sim } 1 . 5 \mathrm { M }∼1.5M 条注释,它支持多种任务,例如二维感知和三维视觉定位。3) OmniDrive-nuScenes [50]以高质量的描述文本和由GPT4生成的视觉问答(QA)文本对补充了nuScenes。考虑到

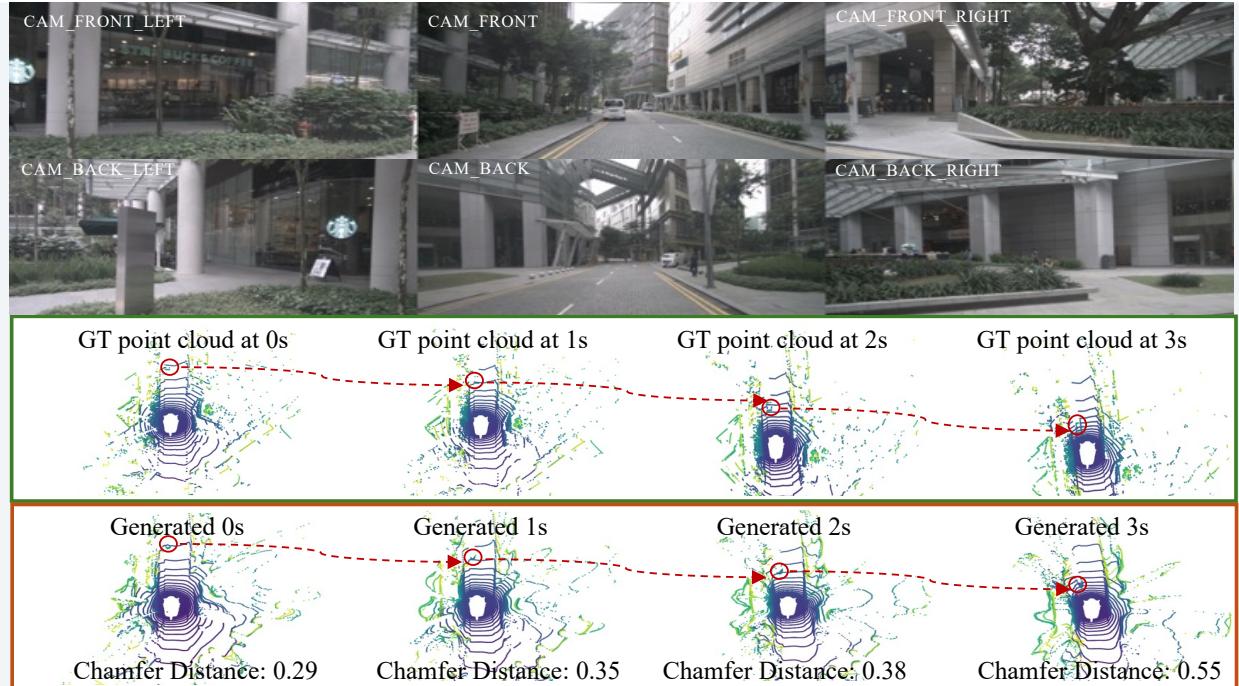

Table 1. The comparison of our HERMES and understanding/generation specialist models. L/C/T refers to LiDAR/camera/text, respectively. We report MTETOR, CIDEr, and ROUGE for understanding tasks, and Chamfer distance for 0-3s on the (OmniDrive-)nuScenes validation set, following ViDAR [65]. † denotes results from ViDAR, while scores for GPT-4o and LLaVA-OV are sourced from DriveMM [18].

| Method | Reference | # LLMParams | Modality | Generation | Understanding | ||||||

| 0s↓ | 1s↓ | 2s↓ 3s↓ | MTETOR ↑ | ROUGE↑ | CIDEr个 | ||||||

| Only Generation | |||||||||||

| 4D-Occ+ [22] | CVPR 23 | L→L | - | 1.13 | 1.53 | 2.11 | Unsupported | ||||

| ViDAR [65] | CVPR 24 | C→L | |||||||||

| Only Understanding | |||||||||||

| GPT-4o [20] | - | C→T | 0.223 | 0.244 | |||||||

| LLaVA-OV [23] | arXiv 24 | 7B | C→T | - ■ | 0.221 | 0.284 | |||||

| OmniDrive [50] | CVPR 25 | 7B | C→T | 0.380 | 0.326 | 0.686 | |||||

| OmniDrive-2D [50] | CVPR 25 | 7B | C→T | 0.383 | 0.325 | 0.671 | |||||

| OmniDrive-BEV [50] | CVPR 25 | 7B C→T | 0.356 | 0.278 | 0.595 | ||||||

| Unified Understanding and Generation | |||||||||||

| Separated unification | C→T&L | 0.60 | 0.84 | 1.08 | 1.37 | 0.384 | 0.327 | 0.745 | |||

| HERMES (ours) | 1.8B 1.8B | C→T&L | 0.59 | 0.78 | 0.95 | 1.17 | 0.384 | 0.327 | 0.741 | ||

the high quality and rich VQA annotations, we perform the understanding training and evaluation on the OmniDrivenuScenes description and conversation data.

Evaluation Metric. For understanding tasks, we utilize widely used METEOR [3], CIDEr [46], and ROUGE [29] metrics to compute similarities between generated and ground-truth answers at the word level. For generation evaluation, we follow previous work [65] and use Chamfer Distance to measure precision in generated point clouds, considering only points within the range of [−51.2m[ - 5 1 . 2 \mathrm { m }[−51.2m , 51.2ml5 1 . 2 \mathrm { m l }51.2ml o n the X−X \mathrm { - }X− and Y-axes, and [-3m, 5m] on the Z-axis.

5.2. Main Results

We compare HERMES with understanding [20, 23, 50] and generation [22, 65] specialist models in Tab. 1, which demonstrates competitive performance on both tasks and promote strong unification.

For future point cloud generation, both 4D-Occ and ViDAR utilize a 3s history horizon, while our HERMES only relies on the current frame, achieving significant improvements. Remarkably, with only multi-view inputs, HERMES is capable of generating a more accurate representation of the scene geometry for predicting future evolution, resulting in ∼32%{ \sim } 3 2 \%∼32% Chamfer Distance reduction in 3s point clouds compared to ViDAR. It should be noted that ViDAR utilizes a carefully designed latent render and an FCOS3D [51] pre-trained backbone, while HERMES uses simple volumetric representation. Furthermore, HERMES can simultaneously understand the current scenario, which is a crucial capability for driving systems but is challenging for existing driving world models.

For 3D scene understanding, we continuously achieve highly competitive results in caption quality compared to understanding specialists. For example, we notably outperform OmniDrive by 8%8 \%8% on the CIDEr metric and excel in

MTETOR and ROUGE. Note that OmniDrive leverages extensive 2D pre-training data [10], supervision from 3D objects [49], and lane detection. The OmniDrive-BEV uses LSS [40] to transform perspective features into a BEV feature and SOLOFusion [39] for temporal modeling. While it utilizes a BEV representation, its scene understanding is limited, likely due to insufficient data for BEV-based imagetext alignment. Further compared to the Separated unification model, our unified approach yields significantly better generation results while maintaining strong understanding performance. This demonstrates successful cross-task knowledge transfer and consistent scene modeling within our compact, unified HERMES.

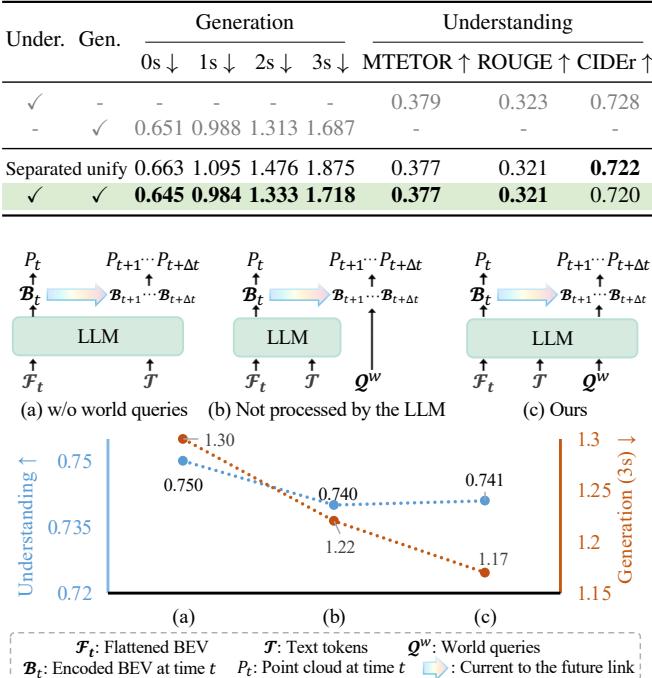

5.3. Ablation Study

Unless otherwise specified, we perform ablation studies trained on a quarter of the nuScenes training scenes. Default settings are marked in green .

Analysis on understanding and generation interaction. Our HERMES achieves a strong and seamless unification of understanding and generation in driving scenes. We first analyze the relationship between these two processes. As shown in Tab. 2, we conduct experiments with four approaches: solely understanding, solely generation, a separated unification, and our method. The separated unification involves sharing the flattened BEV while using separate models for understanding and generation, as in Fig. 1©. We find that HERMES achieves highly competitive results compared to training on one task, with a minor performance gap (e.g., 0.002 difference on MTETOR/ROUGE and a 0.03 chamfer distance gap on the 3s generation). Nevertheless, our approach shows better results at 0-1s, indicating ongoing optimization challenges in the unified understanding and generation stage. Ultimately, our HERMES outperforms the separated unification in generation results, as the lat其高质量且丰富的VQA注释,我们在OmniDrive‑nuScenes的 描述和对话数据上进行理解任务的训练与评估。

表 1。我们的 HERMES 和理解/生成 专家模型的比较。L/C/T 分别指 LiDAR/相机/文本。我们在理解任务中报告 MTETOR、CIDEr 和ROUGE,在 0‑3 秒 的 Chamfer 距离则遵循 ViDAR 在 (OmniDrive‑)nuScenes 验证集上的做法 [65]∘[ 6 5 ] _ { \circ }[65]∘ 。 † 表示来自 ViDAR 的结果,而GPT‑4o 和 LLaVA‑OV 的分数来自 DriveMM [18].

| 方法 | 参考文献 | # LLM参数 | 模态 | 生成 | 理解 | ||||||

| Os← | 1s↓ | 2s↓ | 3s↓ | MTETOR ↑ | ROUGE↑ | CIDEr↑ | |||||

| 仅生成 | |||||||||||

| 4D-Occ+ [22] | CVPR 23 | L→L | 1.13 | 1.53 | 2.11 | ||||||

| ViDAR [65] | CVPR 24 | C→L | 1.12 | 1.38 | 1.73 | 不支持 | |||||

| Only Understanding | |||||||||||

| GPT-40 [20] | = | - | C→T | 0.223 | 0.244 | ||||||

| LLaVA-OV [23] | arXiv 24 | 7B | C→T | ||||||||

| OmniDrive [50] | CVPR 25 | 7B | C→T | 不支持 | |||||||

| OmniDrive-2D [50] | CVPR25 | 7B | C→T | ||||||||

| OmniDrive-BEV [50] | CVPR 25 | 7B | C→T | 0.383 0.356 | 0.325 0.278 | 0.671 0.595 | |||||

| Unified Understanding and Generation | |||||||||||

| Separated unification | 1.37 | 0.384 | 0.327 | 0.745 | |||||||

| HERMES (我们的方 计 | = | 1.8B 1.8B | C→T&L C→T&L | 0.60 0.59 | 0.84 0.78 | 1.08 0.95 | 1.17 | 0.384 | 0.327 | 0.741 | |

评估指标。对于理解任务,我们使用广泛采用的METEOR [3], CIDEr [46], 和 ROUGE [29]指标来在词级别上计算生成答案与真实答案之间的相似度。对于生成评估,我们遵循先前工作 [65]并使用 Chamfer 距离来衡量生成点云的精度,仅考虑 X 轴和 Y 轴范围为 [-51.2m,51.2m]5 1 . 2 \mathrm { m } , 5 1 . 2 \mathrm { m } ]51.2m,51.2m] ,以及 [−3m,5m][ - 3 \mathrm { m } , 5 \mathrm { m } ][−3m,5m] 的 Z 轴范围内的点。

5.2. 主要结果

我们将 HERMES 与理解 [20, 23, 50]和生成 [22, 65]专用模型在表 1 中进行了比较,结果显示在两项任务上均具有竞争力表现并促进了强有力的统一。

对于未来点云生成,4D‑Occ 和 ViDAR 都使用了3 秒 的历史时域,而我们的 HERMES 仅依赖当前帧,却实现了显著的提升。值得注意的是,仅凭多视图输入,HERMES 就能够生成更准确的场景几何表示以预测未来演变,导致在 3 秒 点云中相比 ViDAR 减少了∼32%{ \sim } 3 2 \%∼32% 的 Chamfer 距离。需要指出的是,ViDAR使用了精心设计的潜在渲染器和一个在 FCOS3D 上预训练的 [51]骨干网络,而 HERMES 使用了简单的体素表示。此外,HERMES 能够同时理解当前场景,这是驾驶系统至关重要的能力,但对现有的驾驶世界模型来说具有挑战性。

用于三维场景理解,与场景理解专家相比,我们在描述质量上持续取得高度竞争性的结果。例如,我们在 CIDEr 指标上显著优于 OmniDrive 8%8 \%8% ,并在

MTETOR 和 ROUGE 上表现优异。注意 OmniDrive 利用大量二维预训练数据 [10], 、来自三维物体的监督[49], 和车道检测。OmniDrive‑BEV 使用 LSS [40] 将透视特征转换为 BEV 特征,并使用 SOLOFusion [39]进行时序建模。尽管它采用了 BEV 表示,但其场景理解仍然有限,可能是由于用于 BEV 基于图像‑文本对齐的数据不足。与分离的统一模型相比,我们的统一方法在保持强理解性能的同时,生成结果显著更好。这证明了在我们紧凑统一的 HERMES 中,跨任务知识迁移和一致的场景建模取得了成功。

5.3. 消融研究

除非另有说明,我们在四分之一的 nuScenes 训练场景上进行消融研究。默认设置用标记为green 。

关于理解与生成交互的分析。我们的 HERMES 在驾驶场景中实现了理解与生成的强大且无缝的统一。我们首先分析这两个过程之间的关系。如表 2 所示,我们进行了四种方法的实验:仅理解、仅生成、分离的统一以及我们的方法。分离的统一涉及共享展平的BEV,同时为理解和生成使用独立的模型,如图 1©所示。我们发现,与单任务训练相比,HERMES 达到了高度有竞争力的结果,仅有细微的性能差距(例如,在 MTETOR/ROUGE 上相差 0.002,在 3s 生成的Chamfer 距离上相差 0.03)。尽管如此,我们的方法在 0‑1秒 上表现更好,这表明在统一理解与生成阶段仍存在持续的优化挑战。最终,我们的 HERMES在生成结果上优于分离的统一,因为后者

Figure 3. The effect of world queries for understanding (CIDEr) and generation (3s chamfer distance) is trained on full data.

ter fails to exploit the potential interactions between understanding and generation, and the separation hinders optimization and leads to suboptimal performance.

Analysis on the effect of the world queries. We then validate the efficacy of world queries (Qw)( \pmb { \mathcal { Q } } ^ { w } )(Qw) , as shown in Fig. 3. Without world queries (Fig. 3(a)), the sequences Bt+1,⋅⋅⋅,Bt+Δt\pmb { \mathscr { B } } _ { t + 1 } , \cdot \cdot \cdot , \pmb { \mathscr { B } } _ { t + \Delta t }Bt+1,⋅⋅⋅,Bt+Δt are generated by directly integrating future ego motion into Bt\pmb { { \cal B } } _ { t }Bt . It can be found that introducing world queries significantly improves future generation capabilities, reducing the Chamfer Distance for 3s point cloud prediction by 10%10 \%10% . Although there is a slight 1%1 \%1% decrease in understanding performance (CIDEr), we attribute this to the increased optimization complexity from adding new informational parameters to the LLM. A comparison of Fig. 3(b)-© further reveals that Qw\pmb { \mathcal { Q } } ^ { w }Qw processed through the LLM enhances generative performance, supporting their role in effective world knowledge transfer.

Analysis on generation length. In Tab. 3, we discuss the number of generated frames for future scenes. As the number increases, the generation results exhibit a slight drop, which we attribute to an optimization dilemma within the LLM. This suggests that a more efficient interaction method could be explored in the future. Additionally, we evaluate the auxiliary role of current point cloud prediction for future generation, as shown in the last two rows of Tab. 3. Predicting the current frame regularizes the encoded BEV (Bt)( \pmb { { \cal B } } _ { t } )(Bt) from the LLM outputs, enhancing future generation results. More importantly, training for current point cloud prediction does not add extra inference burden for future generations, serving as a practical auxiliary task.

Table 2. Ablation on interaction of tasks.

Table 3. Ablation on generation length.

| Second | Generation | Understanding | |||||||||

| 01230s↓ | 1s↓2s↓3s↓MTETOR↑ROUGE↑( | CIDEr个 | |||||||||

| - | - 0.607 | 0.944 | - | ■ | 0.379 | 0.323 | 0.725 | ||||

| <<√- | 0.632 0.951 1.313 | ■ | 0.378 | 0.321 | 0.714 | ||||||

| -<√ | - | 1.078 1.397 1.779 | 0.378 | 0.321 | 0.717 | ||||||

| √√√√ 0.645 0.984 1.333 1.718 | 0.377 | 0.321 | 0.720 | ||||||||

Table 4. Ablation on the source of world queries.

| Pooling | Generation | Understanding | |||||

| Os↓ | 1s↓ | 2s↓ | 3s↓ | MTETOR ↑ROUGE ↑ | CIDEr 个 | ||

| Attn. | 0.656 | 1.001 | 1.344 | 1.748 | 0.377 | 0.321 | 0.712 |

| Avg. | 0.660 | 0.996 | 1.348 | 1.741 | 0.376 | 0.321 | 0.715 |

| Max | 0.645 0.984 1.333 1.718 | 0.377 | 0.321 | 0.720 | |||

Table 5. Ablation on size for the flattened BEV.

| BEV size | Generation | Understanding | |||||

| 0s← | 1s↓2s↓3s↓MTETOR↑ ROUGE↑CIDEr↑ | ||||||

| 25 × 25 0.720 1.040 1.347 1.698 | 0.367 | 0.311 | 0.671 | ||||

| 50 × 50 0.645 0.984 1.333 1.718 | 0.377 | 0.321 | 0.720 | ||||

Analysis on the source of world queries. We also assess the initialization of world queries in Tab. 4, including attention pooling [42, 45], adaptive average pooling and max pooling. It shows that world queries derived from the adaptive max pool of the flattened BEV Ft\mathcal { F } _ { t }Ft perform better in chamfer distance on 3s. We argue that the max pool effectively captures peak responses from Ft\mathcal { F } _ { t }Ft , whereas average or attention pooling may overly emphasize global information, potentially affected by background noise.

Analysis on the size of flattened BEV. We finally analyze the impact of flattened BEV (Ft)( \mathcal { F } _ { t } )(Ft) size by varying the BEV tokenizer’s downsampling multiplier, resulting in different feature lengths. Experiments with 8×8 \times8× and 4×4 \times4× downsampling yield Ft\mathcal { F } _ { t }Ft with spatial resolutions of 25 and 50, respectively. Tab. 5 demonstrates that a spatial size of 50×505 0 \times 5 050×50 significantly improves CIDEr and 0s generation by 7.3%7 . 3 \%7.3% and 10%10 \%10% compared to 25×252 5 \times 2 525×25 . We attribute this improvement to reduced information loss compared to excessive downsampling, enhancing text comprehension and facilitating point cloud recovery/prediction. While further increasing the size of Ft\mathcal { F } _ { t }Ft might improve performance, we chose 50×505 0 \times 5 050×50 as a trade-off due to LLM processing length limitations.

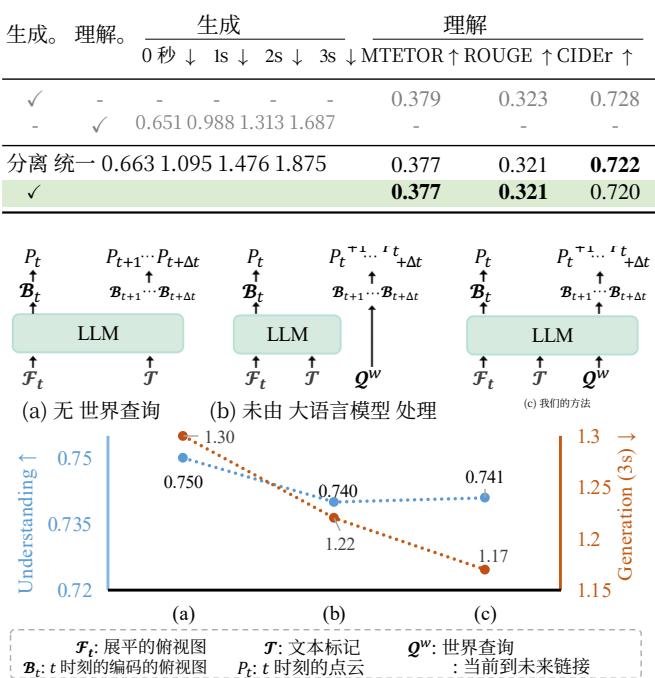

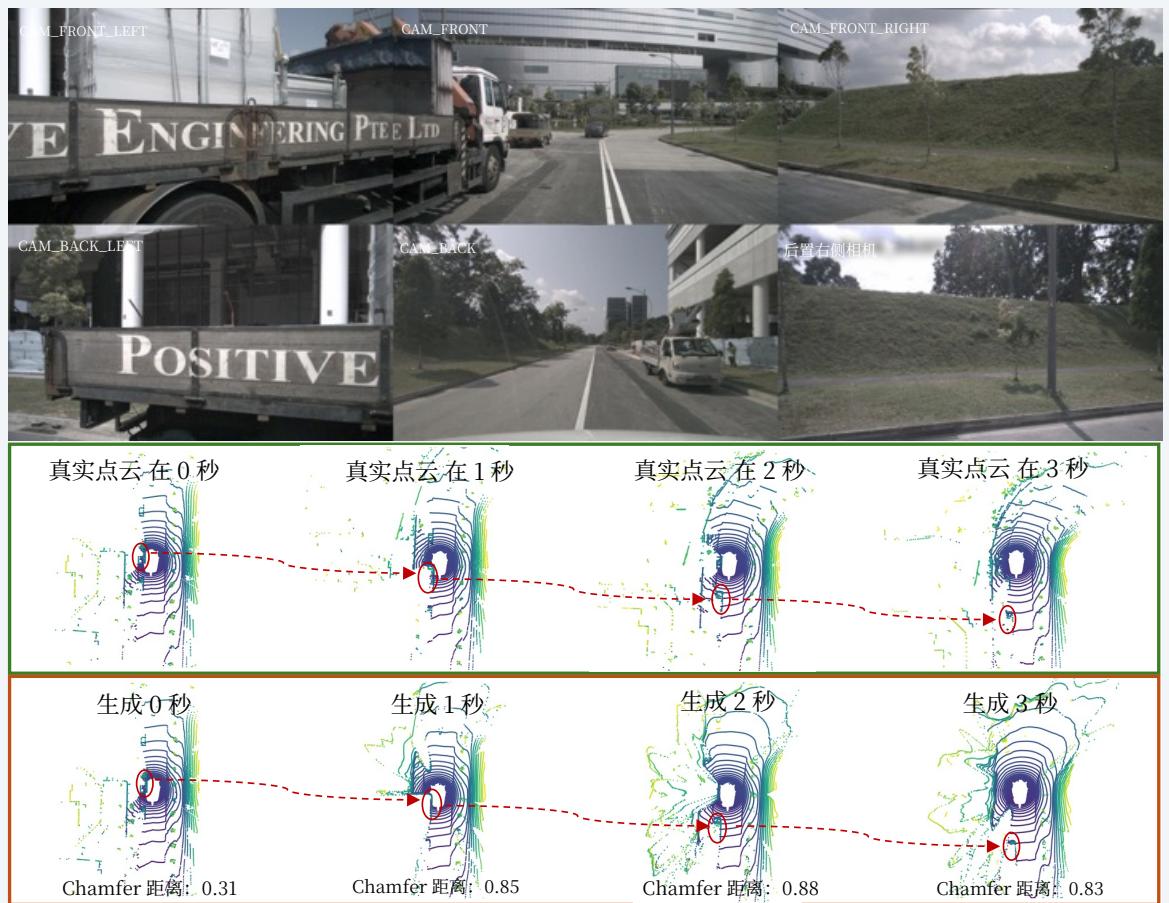

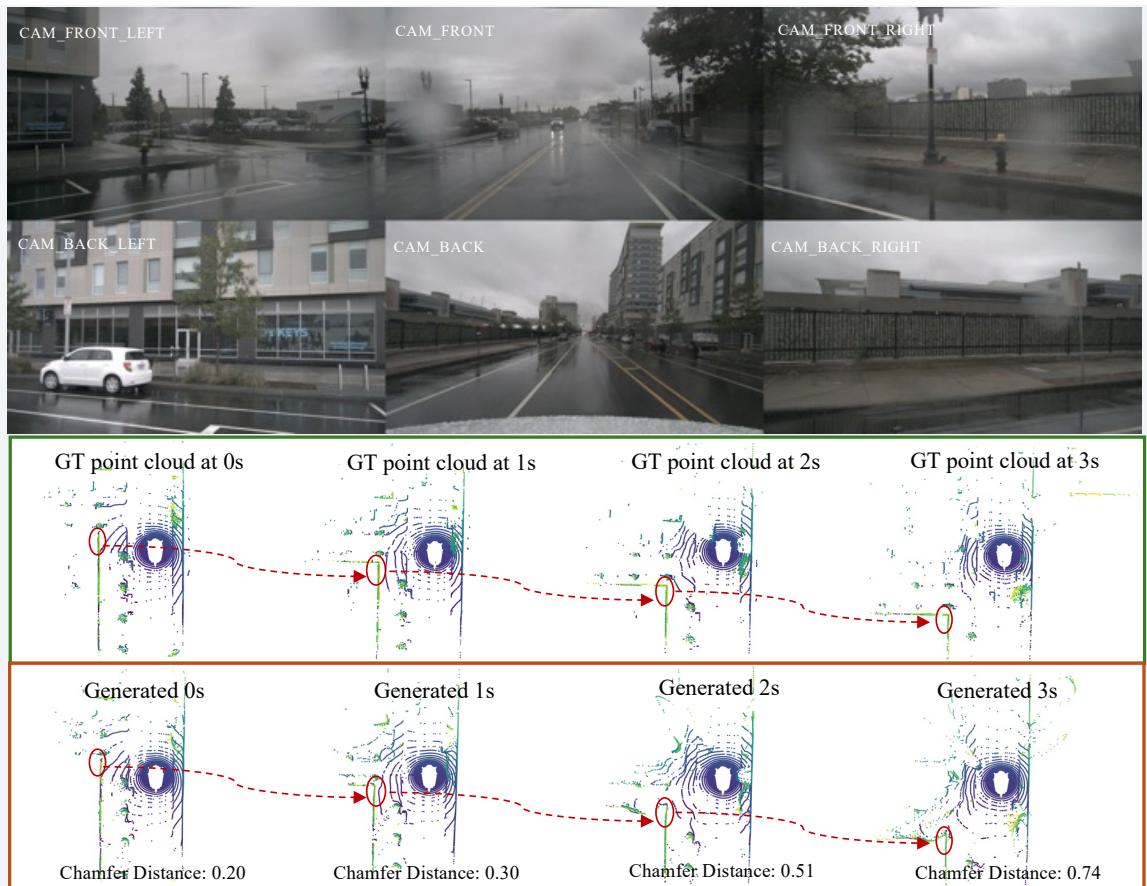

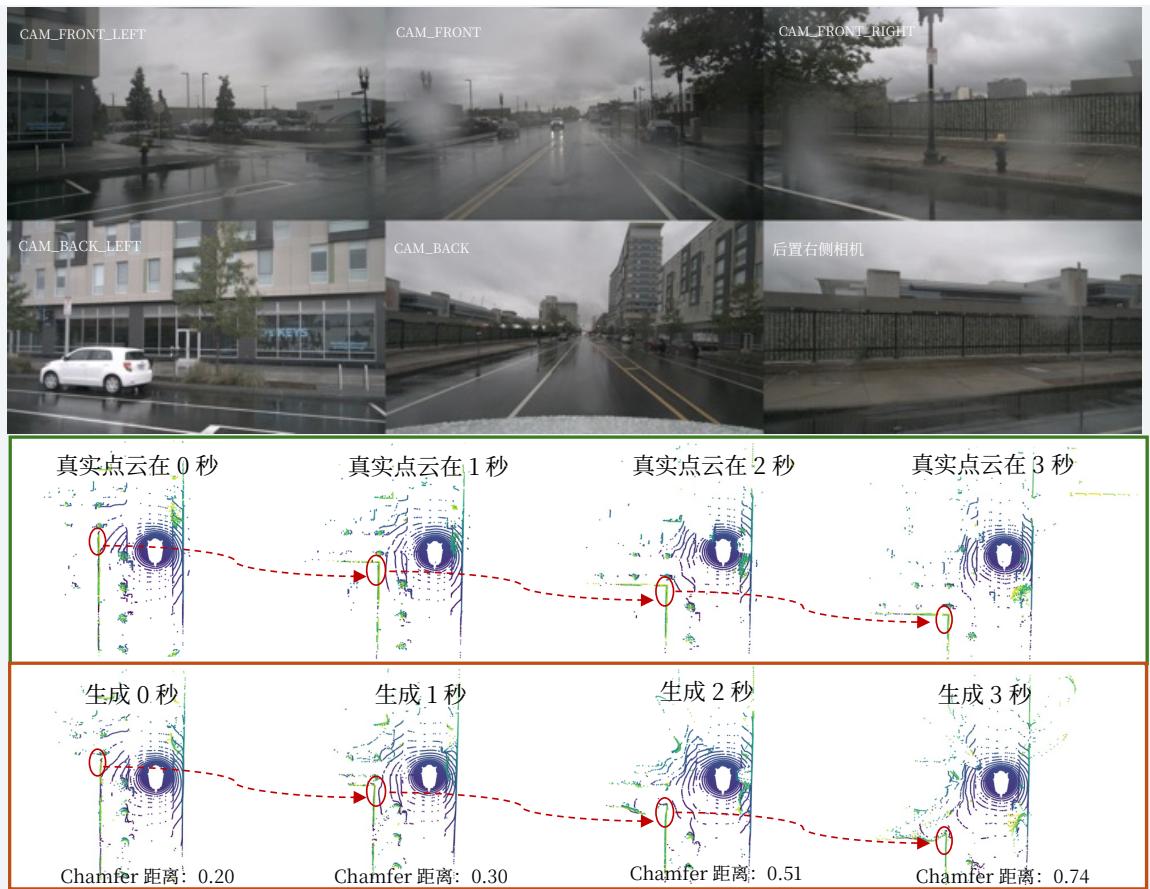

5.4. Qualitative Results

This section presents qualitative results on future generations and scene understanding, as illustrated in Fig. 4. Our 未能充分利用理解与生成之间的潜在交互,且分离阻 碍了优化并导致次优的性能。

表 2. 关于任务交互的消融研究。

图 3. 世界查询对理解(CIDEr)和生成(3 秒 Chamfer 距离)的影响,基于完整数据训练。

关于世界查询效果的分析。接着我们验证了世界查询( (Qw)( \pmb { \mathcal { Q } } ^ { w } )(Qw) )的有效性,如图3所示。没有世界查询(图3(a)),序列 Bt+1\pmb { \mathscr { B } } t { + } 1Bt+1 , · · · , Bt+Δt\pmb { { B } } _ { t + \Delta t }Bt+Δt 通过将未来自我运动直接整合到 Bt\pmb { { \cal B } } _ { t }Bt 来生成。可以发现,引入世界查询显著提升了未来生成能力,使 3 秒点云预测的 Chamfer距离降低了 10%1 0 \%10% 。尽管理解性能(CIDEr)略微下降了 1%1 \%1% ,我们认为这是由于向大语言模型添加新的信息参数后增加了优化难度。图3(b)‑© 的比较进一步表明, Qw\pmb { \mathcal { Q } } ^ { w }Qw 经由大语言模型处理后增强了生成性能,支持其在有效世界知识传递中的作用。

关于生成长度的分析。在表3中,我们讨论了未来场景的生成帧数。随着数量增加,生成结果略有下降,我们将其归因于大语言模型内部的优化困境。这表明未来可以探索更高效的交互方法。此外,我们评估了当前点云预测对未来生成的辅助作用,如表3的最后两行所示。预测当前帧对来自大语言模型输出的编码的俯视图 (Bt)( \pmb { { \cal B } } _ { t } )(Bt) )起到正则化作用,从而提升了未来生成结果。更重要的是,训练用于当前点云

Table 3. 消融 在 生成长度 上。

| 第二 生成 | 理解 | ||

| 01230秒↓ 1s↓ | 2s← 3秒 | ↓MTETOR ↑ROUGE↑CIDEr ↑ | |

| √√ , 0.607 0.944 - | 0.379 | 0.323 | 0.725 |

| √√√ - 0.632 0.9511.313 | 0.378 | 0.321 | 0.714 |

| - √√√ -1.078 1.3971.779 | 0.378 | 0.321 | 0.717 |

| √ | 0.377 | 0.321 | 0.720 |

表 4. 关于 world queries 源头的消融研究。

| 池化 | generation | understanding | |

| Os← 1s 2s← | 3秒MTETOR↑ROUGE↑CIDEr↑ | ||

| 注意力 | 0.656 1.0011.344 1.748 | 0.377 | 0.321 |

| Avg. | 0.660 0.996 1.348 1.741 | 0.376 | 0.712 0.715 |

| Max | 0.645 | 0.377 | 0.321 0.321 0.720 |

表5。展平的俯视图大小消融。

| 鸟瞰视图大 小 | 生成 | 理解 | |

| Os← | 1s↓2s↓3s↓MTETOR ↑ROUGE↑CIDEr↑ | ||

| 25 × 25 0.720 1.040 1.347 1.698 | 0.367 | 0.311 0.671 | |

| 50×50 | 0.377 | 0.321 0.720 | |

预测并不会为未来生成增加额外的推理负担,是一个实用的辅助任务。

关于世界查询来源的分析。我们还在表格中评估了世界查询的初始化。4,包括注意力池化 [42, 45], 自适应平均池化和最大池化。结果表明,从展平的俯视图的自适应最大池化中派生的世界查询 Ft\mathcal { F } _ { t }Ft 在3s上的Chamfer 距离表现更好。我们认为最大池化能有效捕捉来自 Ft\mathcal { F } _ { t }Ft 的峰值响应,而平均或注意力池化可能过分强调全局信息,可能受到背景噪声的影响。

关于展平的俯视图大小的分析。我们最后通过改变BEV 分词器的下采样倍数来分析展平俯视图 (Ft)( \mathcal { F } _ { t } )(Ft) )大小的影响,从而得到不同的特征长度。使用 8×8 \times8× 和 4×4 \times4× 下采样的实验产生了 Ft\mathcal { F } _ { t }Ft ,其空间分辨率分别为25和50。表5显示,相较于 25×252 5 \times 2 525×25 ,空间尺寸为 50×505 0 \times 5 050×50 显著提升了CIDEr和0 秒生成,分别提升了 7.3%7 . 3 \%7.3% 和 10%1 0 \%10% 。我们将这一改进归因于相对于过度下采样的信息损失减少,从而增强了文本理解并利于点云的恢复/预测。尽管进一步增大 Ft\mathcal { F } _ { t }Ft 的尺寸可能会提升性能,但考虑到大语言模型处理长度的限制,我们选择将F作为折中。

5.4. 定性结果

本节展示了关于未来生成和场景理解的定性结果,如图4所示。我们的

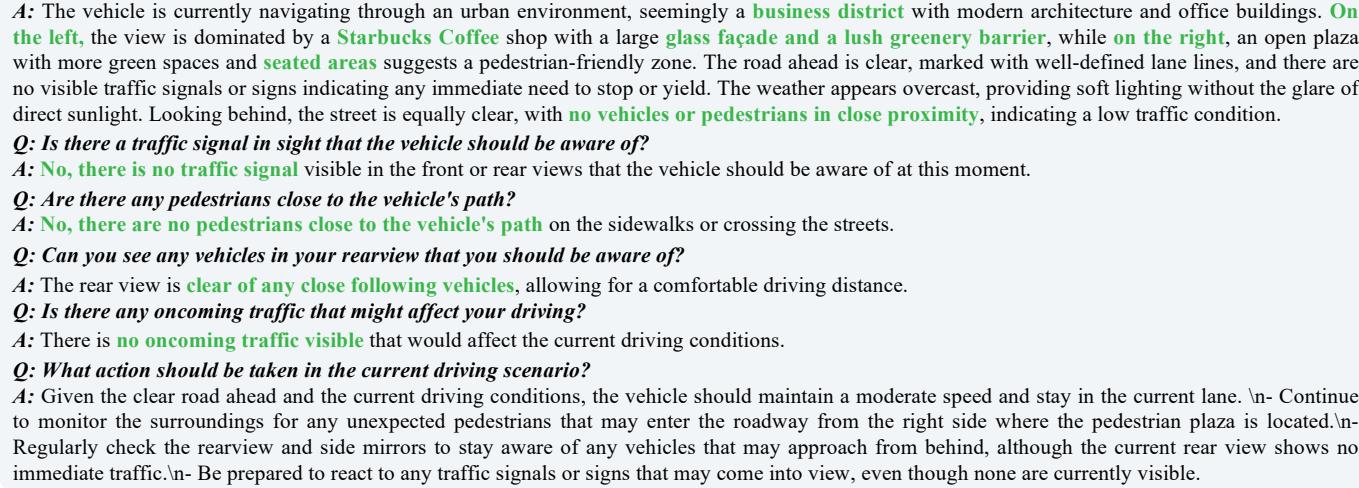

Q: Can you provide a summary of the current driving scenario based on the input images?

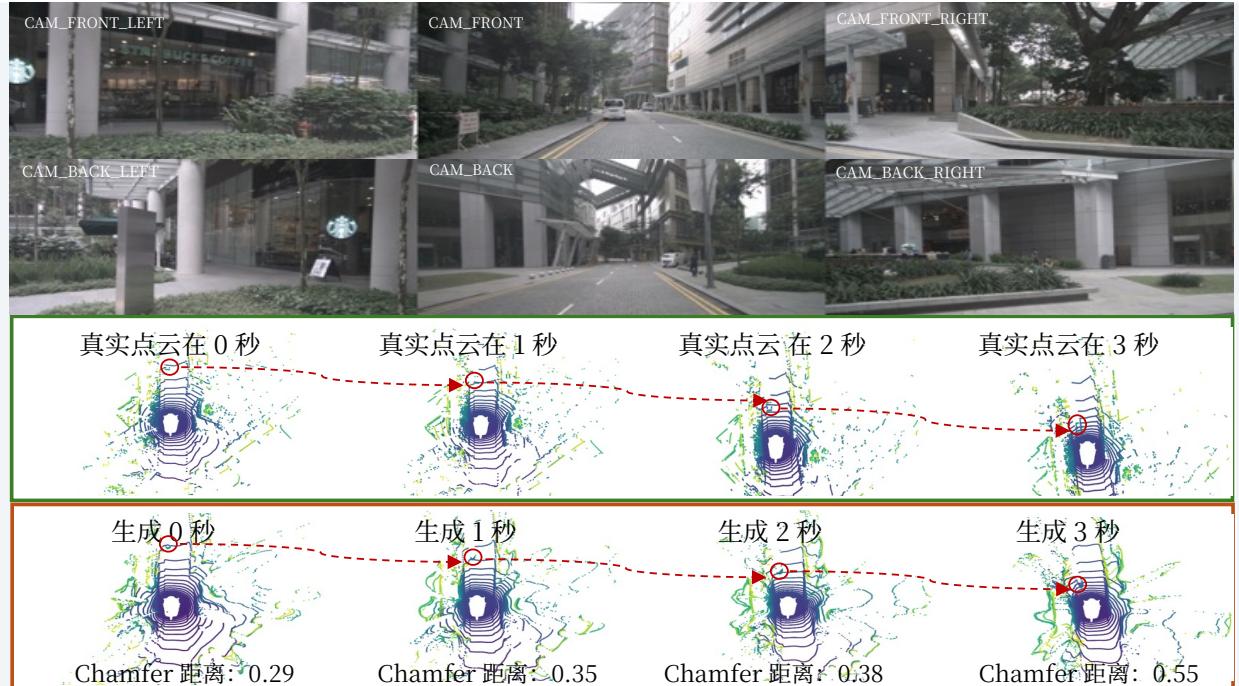

Figure 4. Qualitative results for future generation and scene understanding. From top to bottom, we display the multi-view input of the current scene, the ground truth scene evolution, the generated scene evolution, and the scene understanding result.

HERMES effectively captures future scene evolution, such as the van keeping close to the ego vehicle, noted in the red circle. Furthermore, HERMES exhibits a strong understanding of BEV inputs, as indicated by the green text, accurately identifying objects such as “Starbucks”.

6. Conclusion

This paper introduces HERMES, a simple yet effective unified Driving World Model that integrates 3D scene understanding and future scene generation within a single framework. We effectively bridge the gap between understanding and generation by leveraging a Bird’s-Eye View representation and incorporating world queries enhanced through large language models. Extensive experiments validate the effectiveness of the proposed HERMES, demonstrating significant improvements in future scene prediction accuracy and understanding metrics. We believe this work is a significant step toward a stronger unified Driving World Model.

Limitation. Although HERMES achieves promising results in unifying 3D scene understanding and generation, there are some limitations: 1) We have not explored perception tasks for autonomous driving within our framework. 2) Future image is also an important generation modality but is still under exploration. We left these in our future work.

Acknowledgment. This work was supported by the NSFC (62225603, 62441615, and 623B2038).

问:你能根据输入图像提供当前驾驶场景的总结吗?

A:该车辆目前正在穿行于一个城市环境中,似乎是一个 商业区 ,拥有现代建筑和写字楼。 在左侧,视野被一家 Starbucks Coffee 门店和一面大型 玻璃立面以及茂密的绿化隔离带所主导,而在右侧,一个带有更多绿地和 就座区的开放广场表明这是一个友好行人区。前方道路通畅,标有清晰的车道线,且没有可见的交通信号或标志表明有立即需要停车或让行的情况。天气看起来多云,柔和光照且无强烈直射阳光。回看后方,街道同样清晰, 附近没有车辆或行人接近,表明处于低交通流量状况。

问:视野内是否有车辆应关注的交通信号灯?

答: 不,当前前视或后视中没有可见的交通信号灯需要车辆在此刻关注。

问:是否有任何行人靠近车辆的行进路线?

图4。关于未来生成和场景理解的定性结果。从上到下,我们展示了当前场景的多视图输入、真实场景演化、生成的场景演化以及场景理解结果。

答: 不,人行道上或过街的地方没有靠近车辆行进路线的行人。

问:你能在后视中看到需要注意的车辆吗?

A:后视图没有任何紧随的近距离车辆,可以保持一个舒适的跟车距离。

Q: 有没有可能影响你驾驶的迎面来车?

A: 没有任何可见的迎面来车会影响当前的行驶条件。

Q: 在当前的驾驶场景中应采取什么动作?

A: 鉴于前方道路清晰以及当前的行驶条件,车辆应保持中等速度并停留在当前车道。 \n−\backslash \mathrm { n - }\n− 继续监控周围环境,注意可能从右侧行人广场进入道路的任何意外行人。\n‑定期检查后视和侧视镜,以便注意可能从后方接近的任何车辆,尽管当前后视图未显示有即时交通。\n‑ 即使当前未见任何交通信号或标志,也要做好应对任何可能进入视野的交通信号或标志的准备。

HERMES 有效地捕捉了未来场景演化,例如红圈中所示的厢式货车始终靠近自车。此外,正如绿色文本所示,HERMES 对 BEV 输入表现出较强的理解能力,能够准确识别诸如“Starbucks”等目标。

6. 结论

本文介绍了HERMES,一种简单但有效的统一驾驶世界模型,在单一框架内整合了 3D 场景理解与未来场景生成。我们通过利用鸟瞰图表示并引入经过增强的世界查询,有效地弥合了理解与生成之间的差距,

大型语言模型。大量实验验证了所提 HERMES 的有效性,显示在未来场景预测准确性和理解指标上有显著提升。我们认为这项工作是朝着更强大的统一驾驶世界模型迈出的重要一步。局限性。尽管HERMES 在统一三维场景理解和生成方面取得了令人鼓舞的结果,但仍存在一些局限:1)我们尚未在本框架内探索自动驾驶的感知任务。2)未来图像也是一种重要的生成模态,但仍在探索中。我们将这些工作留作未来工作。致谢。本工作得到国家自然科学基金委员会(62225603、62441615 和 623B2038)的支持。

HERMES: A Unified Self-Driving World Model for Simultaneous 3D Scene Understanding and Generation

Supplementary Material

S1. Additional Experiments

S1.1. Training Details

The BEV-based tokenizer utilizes the OpenCLIP ConNextL backbone [7, 33, 42], while other modules in the tokenizer and Render are trained from scratch. The LLM is derived from InternVL2-2B [5, 6]. The resolution of the input image is 1600×9001 6 0 0 \times 9 0 01600×900 , while the BEV-based world tokenizer adopts the same hyperparameters as BEVFormer v2- base [61], with the size of the encoded scene set to w=h=w = h =w=h= 200 and a BEV channel dimension of 256. The zzz and c′c ^ { \prime }c′ in the BEV-to-point clouds Render are set to 32. For future generation, we forecast scene evolution over 3 seconds, i.e., Δt=3\Delta t = 3Δt=3 . The frame-wise weights in Eq. 4 of the main paper are empirically defined by λi=1+0.5×i,i∈{0,⋯ ,3}\lambda _ { i } = 1 + 0 . 5 \times i , i \in \{ 0 , \cdots , 3 \}λi=1+0.5×i,i∈{0,⋯,3} , corresponding to the point clouds from 0 to 3s. The training of HERMES is structured into three stages and detailed below. Additional details are provided in Tab. S1.

Stage-1: Tokenizer Traning. In initial stage, we train the world tokenizer E\mathcal { E }E and Render R\mathcal { R }R to convert current images (It)( I _ { t } )(It) into point clouds (Pt)( P _ { t } )(Pt) , following Pt=R(E(It))P _ { t } = \mathcal { R } \left( \mathcal { E } \left( I _ { t } \right) \right)Pt=R(E(It)) . We utilize 12Hz1 2 \mathrm { H z }12Hz data from the nuScenes training set for the tokenizer and Render learning.

Stage-2: BEV-Text Alignment and Refinement. This stage encompasses BEV-Text alignment and refinement tuning phases. The alignment phase aims to establish vision-language alignment between the input and output BEV of the LLM, training only the in-projections for flattened BEV embeddings and out-projections for the encoded BEV. To alleviate data deprivation, we propose a simple data augmentation involving masking one of the multi-view images, splicing the caption from the visible view, and using the unprocessed multi-view scene descriptions. This approach increases the multi-view image-text pairs to ∼200K{ \sim } 2 0 0 \mathrm { K }∼200K , a sevenfold increase from the nuScenes keyframes. In the refinement phase, all parameters are unfrozen, and the LLM is fine-tuned using LoRA [17]. The alignment phase employs NuInteract [71] dense caption data, while the refinement phase adapts labeling styles using scene description data from OmniDrive-nuScenes [50].

Stage-3: Understanding and Generation Unification. Building on the understanding gained in the first two stages, we introduce future generation modules to generate point clouds at different moments. We train using nuScenes keyframes, descriptions, and general conversation annotations from OmniDrive-nuScenes.

Table S1. Training details of HERMES. −/−\mathrm { _ - } / \mathrm { _ - }−/− in Stage 2 indicates BEV-text alignment/refinement.

| Config | Stage 1 | Stage 2 | Stage 3 |

| Optimizer | AdamW | AdamW | AdamW |

| Learning Rate | 2e-4 | 2e-4/4e-4 | 4e-4 |

| Training Epochs | 6 | 3/6 | 36 |

| Learning Rate Scheduler | Cosine | Cosine | Cosine |

| Batch Size Per GPU | 1 | 4 | 4 |

| GPU Device | 32×NVIDIA H20 | ||

Table S2. Ablation on scaling potential of the LLM.

| # LLMParams | Generation | Understanding | ||

| Os↓1s↓2s↓3s↓MTETOR↑ROUGE↑CIDEr↑ | ||||

| 0.8B | 0.668 1.015 1.379 1.809 | 0.372 | 0.318 | 0.703 |

| 1.8B | 0.645 0.984 1.333 1.718 | 0.377 | 0.321 | 0.720 |

| 3.8B | 0.643 0.9911.3211.701 | 0.381 | 0.325 | 0.730 |

Table S3. Ablation on the number nnn of world queries.

| n | Generation | Understanding | |||||

| Os↓ | 1s↓ | 2s← | 3s↓ | MTETOR ↑ | ROUGE↑ | CIDEr 个 | |

| 1 | 0.658 | 0.996 | 1.328 | 1.725 | 0.376 | 0.320 | 0.712 |

| 2 | 0.656 | 0.995 | 1.324 | 1.720 | 0.377 | 0.321 | 0.714 |

| 4 | 0.645 | 0.984 | 1.333 | 1.718 | 0.377 | 0.321 | 0.720 |

| 8 | 0.667 | 1.028 | 1.361 | 1.744 | 0.376 | 0.321 | 0.713 |

| 16 | 0.658 | 0.999 | 1.354 | 1.748 | 0.378 | 0.321 | 0.716 |

S1.2. Additional Ablation Study

Unless otherwise specified, we perform ablation studies trained on a quarter of the nuScenes training scenes. Default settings are marked in green .

Analysis on the scaling potential of the LLM. We first explore the scaling potential of our HERMES, as shown in Tab. S2. Scaling up LLMs yields consistent gains in 3D scene understanding and point cloud generation, and we utilize the 1.8B LLM form InternVL2-2B [5, 6] as a trade-off. This indicates that the broader world knowledge acquired during pre-training enhances these tasks, suggesting potential benefits from further scaling.

Analysis on the number of world queries. The world queries facilitate knowledge transfer between the LLM and the Render for future scenarios. We then evaluate the impact of the number of queries nnn for each group, as shown in Tab. S3. We find that world queries do not adversely affect text understanding quality. However, increasing the

HERMES:用于同时进行三维场景理解与生成的统一自动驾驶世界模型

补充材料

S1. 额外实验

S1.1. 训练细节

基于 BEV 的分词器采用 OpenCLIP ConNext‑骨干网络 [7, 33, 42], ,而分词器和 Render 中的其他模块则从头训练。LLM 来源于 InternVL2‑2B [5, 6]∘6 ] _ { \circ }6]∘ 。输入图像的分辨率为 1600×9001 6 0 0 \times 9 0 01600×900 ,而基于 BEV 的世界分词器采用与 BEVFormer v2‑base 相同的超参数 [61], ,编码场景的大小设置为 w=h=200w = h = 2 0 0w=h=200 ,BEV 的通道维度为 256。Render 中的 zzz 和 c′c ^ { \prime }c′ 被设置为 32。对于未来生成,我们预测场景演变为 3 秒,即 Δt=3∘\Delta t = 3 _ { \circ }Δt=3∘ 。式子中逐帧的权重(主文献的式子4)由

λi=1+0.5×i i∈{0,⋅⋅⋅,3}\lambda _ { i } = 1 + 0 . 5 \times i \ i \in \{ 0 , \cdot \cdot \cdot , 3 \}λi=1+0.5×i i∈{0,⋅⋅⋅,3} ,经验性地定义,分别对应 0 到 3 秒的点云。HERMES 的训练分为三个阶段,下面详述。更多细节见表格S1。

Stage-1: Tokenizer Traning. 在初始阶段,我们训练世界分词器 E\mathcal { E }E 和 Render R\mathcal { R }R ,将当前图像 (It)\left( I _ { t } \right)(It) 转换为点云 (Pt)( P _ { t } )(Pt) ,方法遵循 Pt=R(E(It))∘P _ { t } = \mathcal { R } ( \mathcal { E } \left( I _ { t } \right) ) _ { \circ }Pt=R(E(It))∘ 。我们使用来自 nuScenes 训练集的 12Hz1 2 \mathrm { H z }12Hz 数据用于分词器和 Render 的学习。

Stage-2: BEV-Text AlignmentandRefinement. 该阶段包括 BEV‑文本对齐与精炼微调两个环节。对齐阶段旨在建立 LLM 输入与输出 BEV 之间的视觉‑语言对齐,仅训练用于平展 BEV 嵌入的 in‑projections 和用于编码 BEV 的 out‑projections。为缓解数据不足,我们提出一种简单的数据增强:屏蔽其中一张多视角图像、拼接可见视角的描述文本,并使用未处理的多视角场景描述。该方法将多视图图像‑文本对增加到∼200K{ \sim } 2 0 0 \mathrm { K }∼200K ,是 nuScenes 关键帧的七倍。在精炼阶段,所有参数解冻,使用 LoRA [17]对大模型进行微调。对齐阶段采用 NuInteract [71] 的密集描述数据,而精炼阶段则使用来自 OmniDrive‑nuScenes [50] 的场景描述数据来适配标注风格。

Stage-3: UnderstandingandGeneration Unification.在前两个阶段获得理解能力的基础上,我们引入未来生成模块以生成不同时间点的点云。我们使用nuScenes 的关键帧、描述以及来自OmniDrive‑nuScenes 的通用对话注释进行训练。

表 S1. HERMES 的训练细节。Stage 2 中的 −/−- / -−/− 表示 BEV‑文本对齐/精炼。

| 配置 | 阶段1 | 阶段2 | 阶段3 |

| 优化器 | AdamW | AdamW | AdamW |

| 学习率 | 2e-4 | 2e-4/4e-4 | 4e-4 |

| 训练轮次 | 6 | 3/6 | 36 |

| 学习率调度器 | 余弦 | 余弦 | 余弦 |

| 每个 GPU 的批量大小 | 1 | 4 32×NVIDIA H20 | 4 |

| GPU设备 | |||

表 S2。对大语言模型扩展潜力的消融研究。

表 S3。对世界查询数量的消融 nnn 。

| # LLM Params | 生成 | 理解 | ||

| 0秒↓Is↓2s↓3s↓MTETOR↑ROUGE ↑CIDEr ↑ | ||||

| 0.8B | 0.668 1.015 1.379 1.809 | 0.372 | 0.318 | 0.703 |

| 1.8B | 0.645 | 0.377 | 0.321 | 0.720 |

| 3.8B | 0.643 0.991 1.3211.701 | 0.381 | 0.325 | 0.730 |

| n | 生成 | 理解 | |

| Os↓ 1s↓ 2s↓ | MTETOR ↑ROUGE ↑CIDEr↑ | ||

| 1 | 3s↓ 0.658 0.996 1.328 1.725 | 0.376 | 0.320 0.712 |

| 2 | 0.656 0.995 1.324 1.720 | 0.377 0.321 | 0.714 |

| 4 | 0.377 | 0.321 0.720 | |

| 8 0.667 1.028 1.361 1.744 | 0.376 | 0.321 0.713 | |

| 16 0.658 0.999 1.354 1.748 | 0.378 | 0.321 0.716 |

S1.2. 额外的消融研究

除非另有说明,我们的消融研究是在 nuScenes 训练场景的四分之一上进行的。默认设置以绿色标出。

关于大语言模型扩展潜力的分析。我们首先探索了我们的HERMES的扩展潜力,如表S2所示。扩展大语言模型在3D场景理解和点云生成上带来了稳定的提升,我们将InternVL2‑2B中的18亿参数大语言模型作为权衡选择[5,6]。这表明在预训练期间获得的更广泛世界知识提升了这些任务,暗示进一步扩展可能带来更多好处。

关于 world queries 数量的分析。这些 worldqueries 促进了大语言模型与 Render 之间在未来场景下的知识迁移。然后我们评估了每组查询数量的影响 nnn ,如表S3所示。我们发现 world queries 并不会对文本理解质量产生不利影响。然而,增加

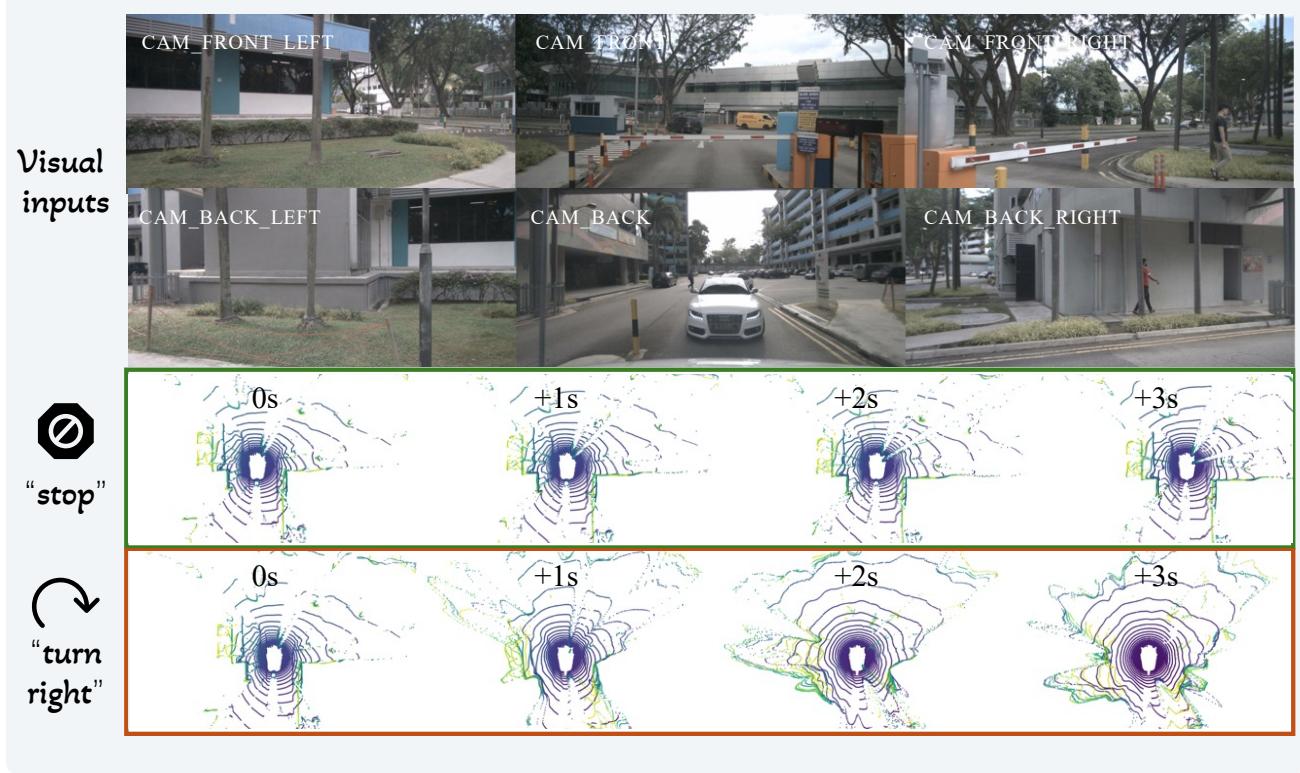

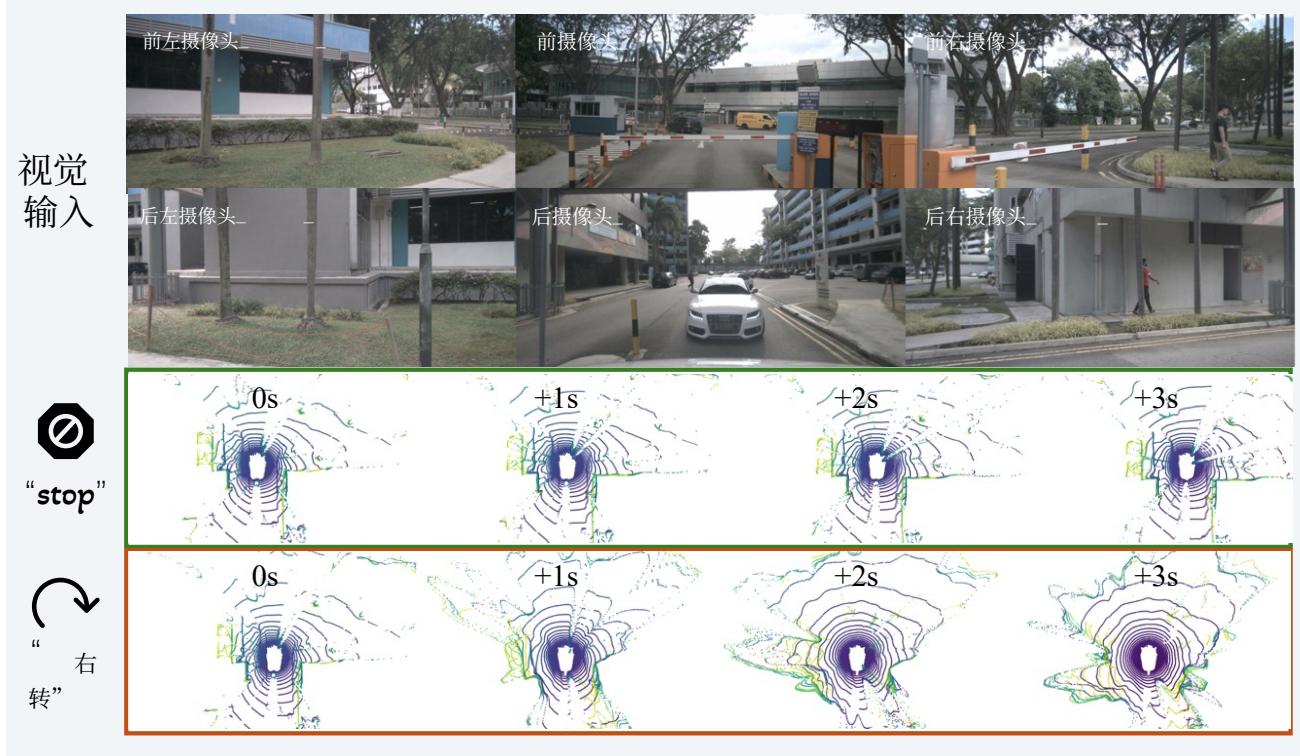

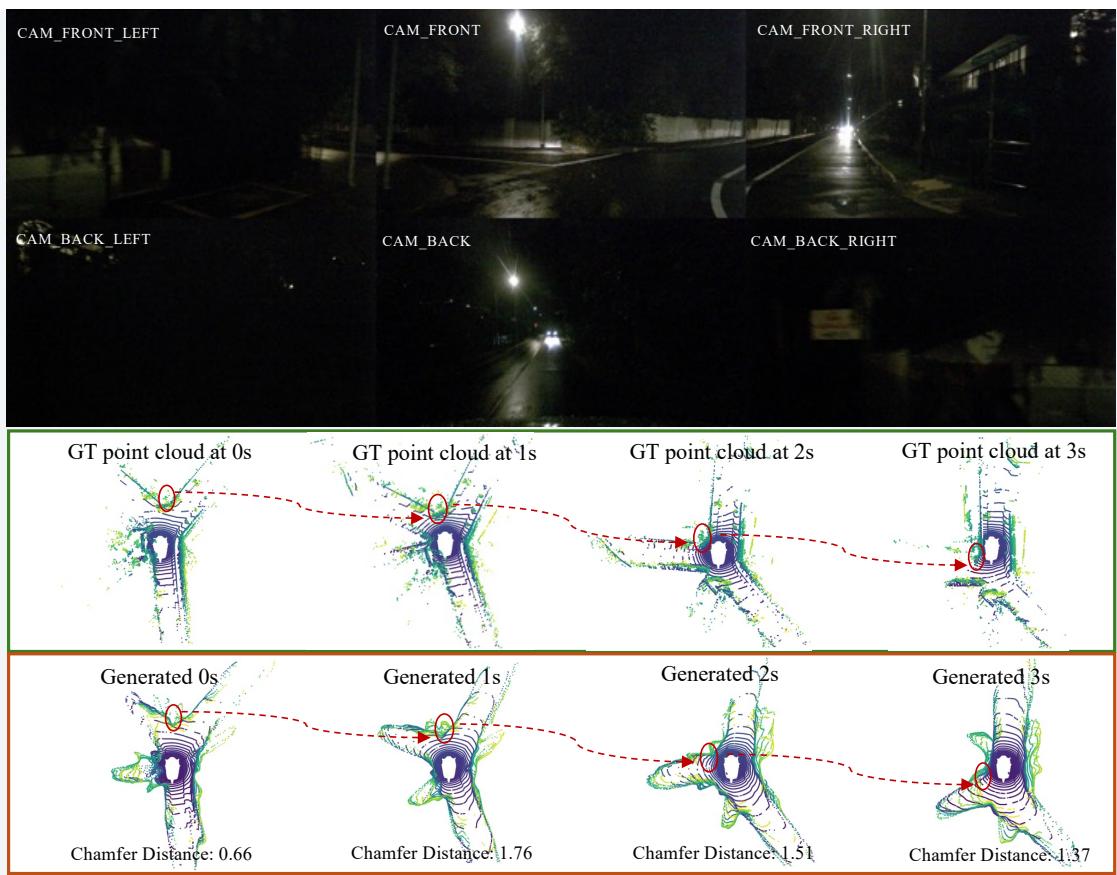

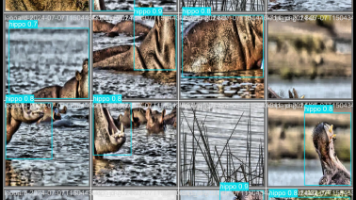

Figure S1. Qualitative results of HERMES conditioned on different future ego-motion conditions. From top to bottom, each sub-figure displays the multi-view input of the current scene, scene evolution predicted with a “stop” future ego-motion, and scene evolution predicted with a “turn right” ego-motion.

Table S4. Comparison of generation ability.

| Generation | Understanding | ||||||

| Os↓1s↓2s↓3s↓MTETOR↑ROUGE↑CIDEr↑ | |||||||

| Copy&Paste | - | 1.27 | 2.12 | 2.66 | |||

| ViDAR [65] | - | 1.12 | 1.38 | 1.73 | |||

| HERMES | 0.59 0.78 0.95 1.17 | 0.384 | 0.327 | 0.741 | |||

Table S5. VQA results on NuScenes-QA.

| Method | Reference | Modality | Acc. (%) ↑ |

| LLaVA [30] | NeurIPS 23 | Camera | 47.4 |

| LiDAR-LLM [64] | arXiv 23 | LiDAR | 48.6 |

| BEVDet+BUTD[41] | AAAI 24 | Camera | 57.0 |

| BEVDet+MCAN[41] | AAAI 24 | Camera | 57.9 |

| CenterPoint+BUTD [41] | AAAI 24 | LiDAR | 58.1 |

| CenterPoint+MCAN [41] | AAAI 24 | LiDAR | 59.5 |

| OmniDrive [50] | CVPR 25 | Camera | 59.2 |

| HERMES | Camera | 61.9 |

number of world queries leads to a decline in performance, likely due to redundant information and optimization challenges. Therefore, we choose to include four world queries per group for future generations.

Analysis on generation ability. We finally compare our future point cloud generation ability trained on the full training set against a Copy&Paste baseline, where Copy&Paste simply duplicates the current ground-truth point cloud for future observations. As shown in Tab. S4, this baseline fails to account for point cloud changes due to movement and occlusion, demonstrating that HERMES truly learns to understand 3D scenes and predict their future evolution.

S1.3. Understanding on NuScenes-QA

The NuScenes-QA [41] is another multi-modal VQA benchmark for driving scenarios, featuring primarily singleword answers focused on perception. We fine-tune HERMES on the NuScenes-QA training set to align with its style and length, and the results are shown in Tab. S5. HERMES achieves superior performance, outperforming LLaVA [30] by 14.5%1 4 . 5 \%14.5% and the point cloud method CenterPoint+MCAN [41] by 2.4%2 . 4 \%2.4% . This showcases HERMES’s strong 3D scene understanding capabilities via its unified BEV representation, especially considering it requires no 3D object detection supervision.

S2. Discussion

The integration of Bird’s-Eye View (BEV) representations as input for Large Language Models (LLMs) presents distinct advantages in our HERMES. Unlike conventional multi-view processing approaches that process individual

图 S1。HERMES 在不同未来自我位移条件下的定性结果。从上到下,每个子图显示当前场景的多视图输入、在“停止”未来自我位移下预测的场景演变,以及在“右转”自我位移下预测的场景演变。

表 S4。生成能力的比较。

| 生成 | 理解 | ||

| 0秒↓1s↓2s↓3s↓MTETOR↑ROUGE↑CIDEr↑ | |||

| 复制粘贴 | 1.27 2.12 2.66 | ||

| ViDAR[65] | 1.12 1.38 1.73 | ||

| HERMES 0.59 | 0.384 | 0.741 | |

表 S5。NuScenes‑QA 的 VQA 结果。

| 方法 | 参考文献 | 模态 | 准确率(%)↑ |

| LLaVA [30] | NeurIPS 2023 | 相机 | 47.4 |

| LiDAR-LLM [64] | arXiv 2023 | 激光雷达 | 48.6 |

| BEVDet+BUTD [41] | AAAI 24 | 相机 | 57.0 |

| BEVDet+MCAN [41] | AAAI 24 | 相机 | 57.9 |

| CenterPoint+BUTD [41] | AAAI 24 | 激光雷达 | 58.1 |

| CenterPoint+MCAN [41] | AAAI 24 | 激光雷达 | 59.5 |

| OmniDrive [50] | CVPR 25 | 相机 | 59.2 |

| HERMES | 相机 | 61.9 |

world queries 的数量会导致性能下降,可能是由于冗余信息和优化挑战所致。因此,我们选择在未来生成中每组包含四个 world queries。

生成能力分析。我们最终将训练于完整训练集的未来点云生成能力与一个 Copy&Paste 基线进行了比较,后者仅仅将当前的真实点云复制为未来的观测。

正如表S4所示,该基线无法考虑因运动和遮挡导致的点云变化,说明 HERMES 真正学会了理解三维场景并预测其未来演变。

S1.3. NuScenes‑QA 上的理解

NuScenes‑QA [41] 是另一个面向驾驶场景的多模态视觉问答基准,主要以侧重感知的单词答案为主。我们在NuScenes‑QA训练集上对HERMES进行了微调,以便与其风格和答案长度对齐,结果如表 S5 所示。HERMES取得了优异的表现,比LLaVA [30]高出14.5%1 4 . 5 \%14.5% ,比基于点云的CenterPoint+MCAN [41]高出2.4%2 . 4 \%2.4% 。这展示了HERMES通过其统一的BEV表示所具有的强大三维场景理解能力,特别是在无需三维目标检测监督的情况下。

S2. Discussion

将鸟瞰图(BEV)表示作为大语言模型(LLMs)的输入集成在我们的 HERMES 中带来了明显优势。与传统逐个处理摄像机流的多视角处理方法不同,基于 BEV 的标记化建立了统一的空间坐标系,能固有地保留跨视角的几何关系,同时维护对象交互模式。

camera streams independently, the BEV-based tokenization establishes a unified spatial coordinate system that inherently preserves geometric relationships across views while maintaining object interaction patterns. This spatial consolidation addresses the inherent limitations of visionlanguage models in interpreting multi-perspective scenarios, where disconnected 2D projections fail to capture the holistic 3D environment context. By strategically compressing high-resolution multi-view inputs 1600×9001 6 0 0 { \times } 9 0 01600×900 per view, for example) into a compact BEV latent space through our downsampling block, we achieve efficient token utilization (2,500 tokens vs. ∼47,000{ \sim } 4 7 { , } 0 0 0∼47,000 tokens for raw view processing) without exceeding standard LLM context windows. Crucially, the spatial-aware BEV features enable synergistic knowledge transfer between scene understanding and generation tasks through our world query mechanism, i.e., the positional correspondence between text descriptions and geometric features permits causal attention patterns that enrich future predictions with linguistic context. Our experiments on nuScenes demonstrate that this spatialtextual alignment contributes substantially to the 32.4%3 2 . 4 \%32.4% reduction in generation error and 8.0%8 . 0 \%8.0% CIDEr improvement, validating BEV’s dual role as both information compressor and cross-modal interface.

S3. More Qualitative Results

This section presents further qualitative results of HERMES on controllability and the unification ability of understanding and generation.

Potential for Controlled Scene Generation. As shown in Fig. S1, we observe the capability of HERMES to generate future point cloud evolution conditioned on specific egomotion information, such as “stop” or “turn right”. This showcases the potential of HERMES as world simulator and its ability to understand complex world scenarios deeply.

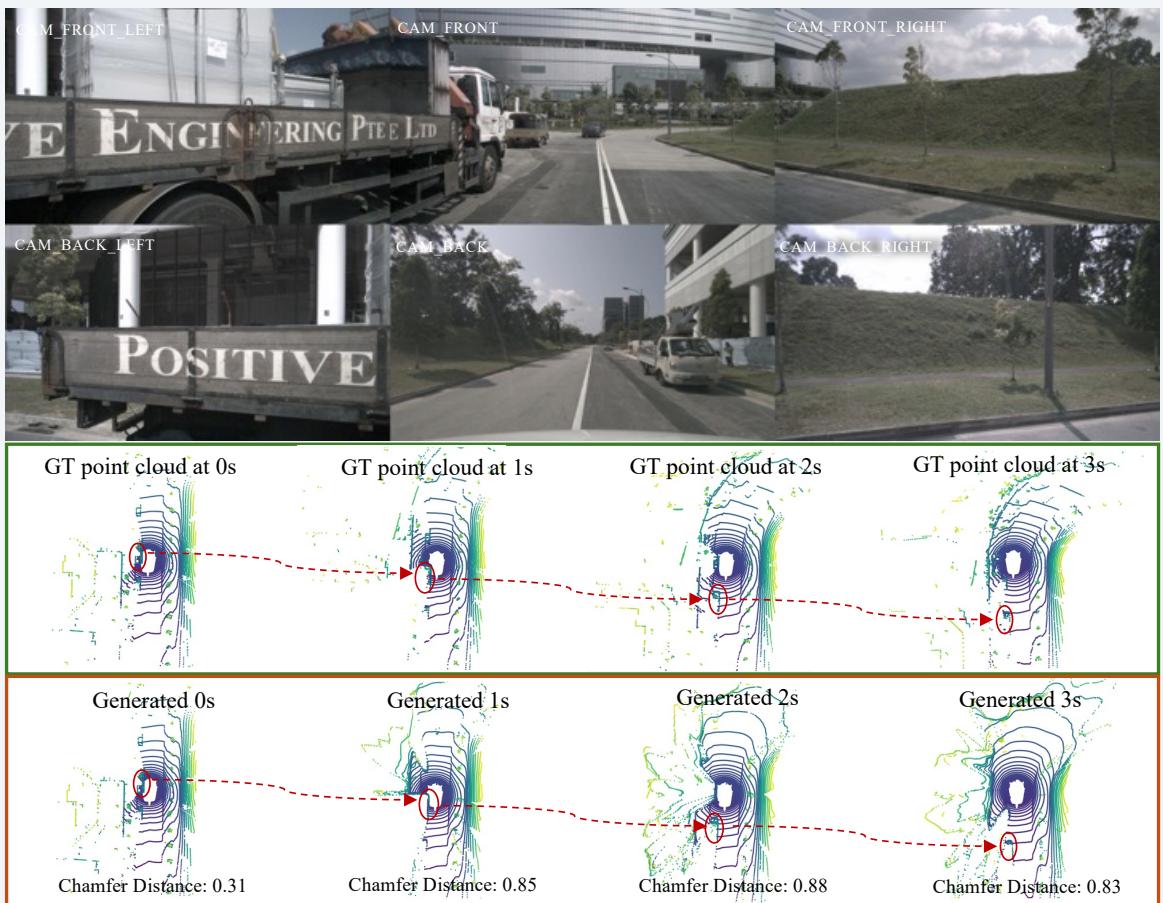

Unification of understanding and generation. More qualitative results on future generations and scene understanding are illustrated in Fig. S2. Our HERMES effectively captures future scene evolution (with the ground truth egomotion information for better comparison), such as the corner of the building keeps moving backward, noted in the red circle in Fig. S2b. While HERMES achieve an encouraging integration of understanding and generation, it faces challenges in complex scenes (e.g., significant left turns and occlusions as in Fig. S2a) and low-quality visible light conditions (e.g., nighttime driving as in Fig. S2c). Despite the complexity of the scenarios, HERMES still makes reasonable predictions about the emerging parts of future scenes.

这种空间整合解决了视觉‑文本模型在解释多视角场景时的固有局限,在那些孤立的二维投影无法捕捉整体三维环境上下文的情况下尤其明显。通过我们的下采样模块将高分辨率多视图输入(例如每视图 1600×9001 6 0 0 { \times } 9 0 01600×900 )策略性地压缩到紧凑的 BEV 潜在空间,我们实现了高效的标记利用(2,500 个标记 vs. 原始视图处理的 ∼47,000 个标记),且未超出标准 LLM 上下文窗口。关键是,空间感知 BEV 特征通过我们的世界查询机制使场景理解与生成任务之间能够实现协同的知识迁移,即文本描述与几何特征之间的位置对应允许因果注意力模式,用语言上下文丰富未来预测。我们在nuScenes 上的实验表明,这种空间‑文本对齐显著促成了生成误差降低 32.4%3 2 . 4 \%32.4% 和 CIDEr 提升 8.0%8 . 0 \%8.0% ,验证了 BEV 既作为信息压缩器又作为跨模态接口的双重作用。

S3。更多定性结果

本节展示了 HERMES 在可控性以及理解与生成统一能力方面的更多定性结果。

可控场景生成的潜力。如图S1所示,我们观察到HERMES 能够在特定自我运动信息(例如“stop”或“turn right”)的条件下生成未来点云演化。这展示了HERMES 作为世界模拟器的潜力以及其深入理解复杂世界场景的能力。

理解与生成的统一。 更多关于未来生成和场景理解的定性结果展示在图S2中。我们的 HERMES 有效地捕捉了未来场景演变(为便于比较使用了真实值自车运动信息),例如建筑物的角落持续向后移动,如图S2b中红圈所示。尽管 HERMES 在理解与生成的融合上取得了令人鼓舞的进展,但在复杂场景中(例如图S2a所示的大幅左转与遮挡)以及可见光质量较差的条件下(例如图S2c中的夜间驾驶)仍然面临挑战。即便在这些复杂场景中,HERMES 仍能对未来场景中新出现的部分做出合理的预测。

Q : Can you provide a summary of the current driving scenario based on the input images?

A : The vehicle is positioned on a clear, marked road during what appears to be daytime with good visibility and dry weather conditions . To the left, there’s a stationary construction vehicle , indicating potential work nearby or a temporary halt in traffic . Straight ahead, the road is free of obstructions with a few vehicles at a distance, suggesting a smooth flow of traffic . The road is bordered by greenery on both sides , with a grassy slope to the right and trees lining the road, contributing to a serene driving environment . In the rear view, there are no vehicles closely following , providing a sense of ease for potential maneuvers . The overall scene suggests a non -congested urban or suburban setting with minimal traffic activity .

Q : Is there a traffic signal or stop sign visible?

A : No , there is no traffic signal or stop sign visible in the current view .

Q : What type of vehicles are visible in the current driving scene?

A : There is a construction vehicle on the left side and a white SUV on the right side of the road .

Q : Are there any pedestrians or cyclists on the road?

No, there are no pedestrians or cyclists visible on the road in the current view .

Q : What is the condition of the road surface?

-

A : The road surface appears to be in good condition, with clear lane markings and no visible potholes or debris .

-

Are there any indications of construction work or road maintenance?

-

The presence of the construction vehicle and the fenced -off area to the left suggest ongoing construction or maintenance work .

Q: How would you describe the driving conditions based on the weather?

A : The driving conditions seem to be favorable, with clear weather and daylight providing good visibility .

Q : Based on the surroundings, what kind of area is the vehicle driving through?

A : The vehicle is driving through an urban area with modern buildings and well -maintained greenery, suggesting a well -developed and possibly a business or residential district .

A : The best driving practice would be to continue at a moderate speed, maintain a safe following distance from the vehicle ahead, and stay vigilant for any potential movements from the construction vehicle on the left .

ϱ\varrhoϱ : What action should be taken in the current driving scenario?

A : In this situation, the vehicle should continue at a moderate speed and maintain the current lane . \n⋅\backslash \mathbf { n } \cdot\n⋅ - Proceed straight, following the road markings and keeping a safe distance from the vehicles ahead . \n⋅\backslash \mathbf { n } \cdot\n⋅ - Be aware of potential movements from the construction vehicle on the left, in case it starts to move or workers step onto the road. \n\backslash \mathtt { n }\n - Regularly check the rearview mirror to stay informed about the traffic situation behind, even though there’s no immediate pressure from following vehicles . \n⋅\backslash \mathrm { n } \cdot\n⋅ - Since the road ahead is clear, focus on any unexpected obstacles that may appear from the sides of the road or from the construction area .

Q :可以 y o u p 提供一个 摘要 y o f 当 前 的驾驶 g\pmb { g }g 场景 ,基 于输入 的 p\pmb { p }p 图像 g e s ?

:该车辆位于一条清晰的有标记的道路上,似乎处于白天,能见度良好且为干燥的天气条件。左侧有一辆停着的施工车辆,表明附近可能有施工或交通临时停滞。前方道路无障碍物,远处有少量车辆,表明交通流畅。道路两侧有绿化,右侧有一处草坡和树木

路两旁种植着树木,营造出宁静的驾驶环境。在后视图中,没有紧跟的车辆,为潜在的变道或其他操作提供了从容感。总体场景表明这是一个车辆不拥挤的城市或郊区环境,交通活动很少。

问:能看到交通信号灯或停车标志吗?

答: 不 ,在 当前 视 野中 看 不到 交 通信号灯或 停车 标志。

问 : 当前行驶 场景 中能 看到什么类 型的 车 辆?

答: 左侧有一辆施工车辆 ,右侧道路上有一辆白色运动型多用途车。

问 : 道路上有 行人 或骑 行者吗?

答: 没有,在当前视野中道路上看不到行人或骑行者。

问 : 路面状况 如何 ?

答: 路 面看 起来 状 况良 好 ,具 有 清 晰的车 道 标线且没有可见的坑 洞或 碎片 。

问 : 是否有任 何施 工工 程或道路维 护的 迹 象?

答: 左 侧存 在 施 工 车辆和围挡 区域 ,表明 可 能正在进行施工或维 护工 作。

问 : 根据天气 ,你 如何 描述行驶条 件?

答: 行 驶条 件似 乎 良好 , 晴朗 天 气和白昼提 供了 良好的 能见 度 。

问:根据周围环境,该车正在穿过什么类型的区域?

答: 该车正在穿过一个城市区域,周围有 现代建筑和维护良好的绿化,表明该地区较为发达,可能是商务区或住宅区。

: 考虑到前 方道 路通 畅和交通状 况, 在 此情形下 的最佳驾驶做法是 什么?

答: 最佳的驾驶做法是以中等速度行驶,保持与前车的安全跟车距离,并对左侧施工车辆可能的动向保持警惕。