02 Agents: the create_agent function, model (str)

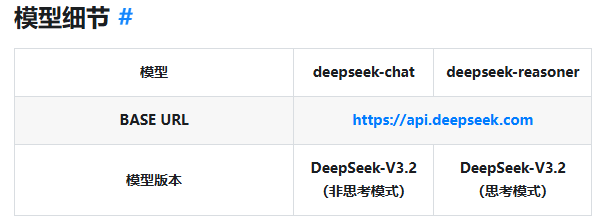

References: https://docs.langchain.com/oss/python/langchain/agents

https://reference.langchain.com/python/langchain/agents/#langchain.agents.create_agent

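LangChain uses the create_agent function to combine a large language model with tools and other components in order to build an agent.

This post looks at the function's inputs and what each of them means.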

    create_agent(
        model: str | BaseChatModel,
        tools: Sequence[BaseTool | Callable | dict[str, Any]] | None = None,
        *,
        system_prompt: str | SystemMessage | None = None,
        middleware: Sequence[AgentMiddleware[StateT_co, ContextT]] = (),
        response_format: ResponseFormat[ResponseT] | type[ResponseT] | None = None,
        state_schema: type[AgentState[ResponseT]] | None = None,
        context_schema: type[ContextT] | None = None,
        checkpointer: Checkpointer | None = None,
        store: BaseStore | None = None,
        interrupt_before: list[str] | None = None,
        interrupt_after: list[str] | None = None,
        debug: bool = False,
        name: str | None = None,
        cache: BaseCache | None = None,
    ) -> CompiledStateGraph[
        AgentState[ResponseT], ContextT, _InputAgentState, _OutputAgentState[ResponseT]
    ]

# model

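The large language model that drives the agent. It can be given either as a string or as a model instance.

When a string is used, the supported model providers and their integration packages are as follows (see https://reference.langchain.com/python/langchain/models/#langchain.chat_models.init_chat_model(model_provider)):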

- `openai` -> [`langchain-openai`](https://docs.langchain.com/oss/python/integrations/providers/openai)

- `anthropic` -> [`langchain-anthropic`](https://docs.langchain.com/oss/python/integrations/providers/anthropic)

- `azure_openai` -> [`langchain-openai`](https://docs.langchain.com/oss/python/integrations/providers/openai)

- `azure_ai` -> [`langchain-azure-ai`](https://docs.langchain.com/oss/python/integrations/providers/microsoft)

- `google_vertexai` -> [`langchain-google-vertexai`](https://docs.langchain.com/oss/python/integrations/providers/google)

- `google_genai` -> [`langchain-google-genai`](https://docs.langchain.com/oss/python/integrations/providers/google)

- `bedrock` -> [`langchain-aws`](https://docs.langchain.com/oss/python/integrations/providers/aws)

- `bedrock_converse` -> [`langchain-aws`](https://docs.langchain.com/oss/python/integrations/providers/aws)

- `cohere` -> [`langchain-cohere`](https://docs.langchain.com/oss/python/integrations/providers/cohere)

- `fireworks` -> [`langchain-fireworks`](https://docs.langchain.com/oss/python/integrations/providers/fireworks)

- `together` -> [`langchain-together`](https://docs.langchain.com/oss/python/integrations/providers/together)

- `mistralai` -> [`langchain-mistralai`](https://docs.langchain.com/oss/python/integrations/providers/mistralai)

- `huggingface` -> [`langchain-huggingface`](https://docs.langchain.com/oss/python/integrations/providers/huggingface)

- `groq` -> [`langchain-groq`](https://docs.langchain.com/oss/python/integrations/providers/groq)

- `ollama` -> [`langchain-ollama`](https://docs.langchain.com/oss/python/integrations/providers/ollama)

- `google_anthropic_vertex` -> [`langchain-google-vertexai`](https://docs.langchain.com/oss/python/integrations/providers/google)

- `deepseek` -> [`langchain-deepseek`](https://docs.langchain.com/oss/python/integrations/providers/deepseek)

- `ibm` -> [`langchain-ibm`](https://docs.langchain.com/oss/python/integrations/providers/ibm)

- `nvidia` -> [`langchain-nvidia-ai-endpoints`](https://docs.langchain.com/oss/python/integrations/providers/nvidia)

- `xai` -> [`langchain-xai`](https://docs.langchain.com/oss/python/integrations/providers/xai)

- `perplexity` -> [`langchain-perplexity`](https://docs.langchain.com/oss/python/integrations/providers/perplexity)

- `upstage` -> [`langchain-upstage`](https://docs.langchain.com/oss/python/integrations/providers/upstage)
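To make the provider list more concrete, here is a minimal sketch of how a model string could be mapped to a provider and then to the package that must be installed. It is an illustration only, not LangChain's actual code: `split_model_string`, `required_package`, and the prefix rules in `ASSUMED_PREFIXES` are hypothetical names and assumptions, and only a subset of the providers above is included.

```python
# Illustrative only: a simplified stand-in for resolving a model string.
# This is NOT LangChain's implementation.

# Provider -> integration package, copied from the list above (subset).
PROVIDER_PACKAGES = {
    "openai": "langchain-openai",
    "anthropic": "langchain-anthropic",
    "ollama": "langchain-ollama",
    "deepseek": "langchain-deepseek",
}

# Assumed prefix rules, used when no provider is given explicitly.
ASSUMED_PREFIXES = {
    "gpt-": "openai",
    "claude": "anthropic",
    "deepseek": "deepseek",
}


def split_model_string(model: str) -> tuple[str, str]:
    """Return (provider, model_name) for 'provider:model' or a bare model name."""
    if ":" in model:
        # Split on the first colon only, so names like "llama3:8b" survive.
        provider, _, name = model.partition(":")
        if provider in PROVIDER_PACKAGES:
            return provider, name
    for prefix, provider in ASSUMED_PREFIXES.items():
        if model.startswith(prefix):
            return provider, model
    raise ValueError(f"cannot infer a provider for {model!r}")


def required_package(model: str) -> str:
    """Look up the integration package for a model string."""
    provider, _ = split_model_string(model)
    return PROVIDER_PACKAGES[provider]


print(split_model_string("deepseek:deepseek-chat"))
print(required_package("deepseek-reasoner"))
```

Under these assumptions, both the explicit `provider:model` form and a bare model name such as `deepseek-reasoner` resolve to the `deepseek` provider, which is why `langchain-deepseek` has to be installed for the experiment that follows.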

Let's try it with DeepSeek:

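    import os

    from langchain.agents import create_agent
    from langchain.chat_models import init_chat_model  # only used by the commented-out alternative

    # Placeholder key; replace it with a real DeepSeek API key.
    os.environ["DEEPSEEK_API_KEY"] = "sk-XXXX"

    # Alternative: build the model instance first and pass it as `model`.
    # model = init_chat_model("deepseek-chat")

    agent = create_agent(
        model="deepseek-reasoner",
        tools=None,
    )

    # Run the agent and get the result
    result = agent.invoke(
        {
            "messages": [{"role": "user", "content": "what is the weather in sf"}]
        }
    )

    print(result)

Note that `model="deepseek-reasoner"` takes the model name. According to the DeepSeek website, two models are supported: deepseek-chat and deepseek-reasoner.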

Reference: https://api-docs.deepseek.com/zh-cn/quick_start/pricing
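Printing the whole result shows the full agent state, which is hard to read. The sketch below pulls out just the final reply, assuming the result is a dict whose `"messages"` list ends with the model's answer. `final_reply` is a hypothetical helper, and `fake_result` is a stand-in so the snippet runs without an API key; a real result holds LangChain message objects, and for some models `content` may be a list of content blocks rather than a plain string.

```python
from types import SimpleNamespace
from typing import Any


def final_reply(result: dict[str, Any]) -> str:
    """Return the content of the last message in an agent result."""
    messages = result.get("messages", [])
    if not messages:
        raise ValueError("result contains no messages")
    last = messages[-1]
    # Message objects expose `.content`; plain dicts use the "content" key.
    if isinstance(last, dict):
        return str(last["content"])
    return str(last.content)


# Stand-in for the value returned by agent.invoke(...); assumed shape only.
fake_result = {
    "messages": [
        SimpleNamespace(type="human", content="what is the weather in sf"),
        SimpleNamespace(type="ai", content="I cannot check live weather."),
    ]
}

print(final_reply(fake_result))
```

With a real agent, the same helper would be called as `final_reply(result)` in place of `print(result)`.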

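The experiment also raised an error at first, and the relevant library had to be installed (for DeepSeek, the provider list above points to `langchain-deepseek`).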
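A quick way to catch this kind of error before running the agent is to check whether the integration package can be imported. The following is a minimal sketch using only the standard library. `integration_installed` and `install_hint` are hypothetical helpers, and the rule that the importable module name is the pip package name with hyphens replaced by underscores is an assumption that holds for `langchain-deepseek` but may not hold for every package.

```python
import importlib.util


def integration_installed(module_name: str) -> bool:
    """True if `module_name` can be imported in the current environment."""
    return importlib.util.find_spec(module_name) is not None


def install_hint(package: str) -> str:
    """Describe whether `package` is installed, with a pip command if not."""
    # Assumption: the module name is the package name with "-" -> "_".
    module_name = package.replace("-", "_")
    if integration_installed(module_name):
        return f"{package} is already installed"
    return f"missing {package}: run `pip install {package}`"


print(install_hint("langchain-deepseek"))
```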
