[AI] 04 AI Agent: Get a LangGraph project running in ten minutes: calling an LLM + agent development + using LangSmith


1. Create a conda Python virtual environment

conda create --name new_langgrapg_env python=3.11

Hands-on

First, deactivate the existing LangChain virtual environment,

then create a new virtual environment for LangGraph with Python 3.11.

C:\Users\HP>conda activate zyyenv

(zyyenv) C:\Users\HP>python --version

Python 3.13.11

(zyyenv) C:\Users\HP>conda deactivate

C:\Users\HP>conda create --name new_langgrapg_env python=3.11

3 channel Terms of Service accepted

Retrieving notices: done

Channels:

- defaults

Platform: win-64

Collecting package metadata (repodata.json): done

Solving environment: done

## Package Plan ##

environment location: C:\Users\HP\miniconda3\envs\new_langgrapg_env

added / updated specs:

- python=3.11

The following packages will be downloaded:

package | build

---------------------------|-----------------

expat-2.7.3 | h885b0b7_4 31 KB

python-3.11.14 | h981015d_0 17.7 MB

setuptools-80.9.0 | py311haa95532_0 1.9 MB

wheel-0.45.1 | py311haa95532_0 182 KB

------------------------------------------------------------

Total: 19.8 MB

The following NEW packages will be INSTALLED:

bzip2 pkgs/main/win-64::bzip2-1.0.8-h2bbff1b_6

ca-certificates pkgs/main/win-64::ca-certificates-2025.12.2-haa95532_0

expat pkgs/main/win-64::expat-2.7.3-h885b0b7_4

libexpat pkgs/main/win-64::libexpat-2.7.3-h885b0b7_4

libffi pkgs/main/win-64::libffi-3.4.4-hd77b12b_1

libzlib pkgs/main/win-64::libzlib-1.3.1-h02ab6af_0

openssl pkgs/main/win-64::openssl-3.0.18-h543e019_0

pip pkgs/main/noarch::pip-25.3-pyhc872135_0

python pkgs/main/win-64::python-3.11.14-h981015d_0

setuptools pkgs/main/win-64::setuptools-80.9.0-py311haa95532_0

sqlite pkgs/main/win-64::sqlite-3.51.0-hda9a48d_0

tk pkgs/main/win-64::tk-8.6.15-hf199647_0

tzdata pkgs/main/noarch::tzdata-2025b-h04d1e81_0

ucrt pkgs/main/win-64::ucrt-10.0.22621.0-haa95532_0

vc pkgs/main/win-64::vc-14.3-h2df5915_10

vc14_runtime pkgs/main/win-64::vc14_runtime-14.44.35208-h4927774_10

vs2015_runtime pkgs/main/win-64::vs2015_runtime-14.44.35208-ha6b5a95_10

wheel pkgs/main/win-64::wheel-0.45.1-py311haa95532_0

xz pkgs/main/win-64::xz-5.6.4-h4754444_1

zlib pkgs/main/win-64::zlib-1.3.1-h02ab6af_0

Proceed ([y]/n)? y

Downloading and Extracting Packages:

python-3.11.14 | 17.7 MB | ############################################################################ | 100%

setuptools-80.9.0 | 1.9 MB | ############################################################################ | 100%

wheel-0.45.1 | 182 KB | ############################################################################ | 100%

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

#

# To activate this environment, use

#

# $ conda activate new_langgrapg_env

#

# To deactivate an active environment, use

#

# $ conda deactivate

C:\Users\HP>

Activate the virtual environment


C:\Users\HP>conda activate new_langgrapg_env

(new_langgrapg_env) C:\Users\HP>

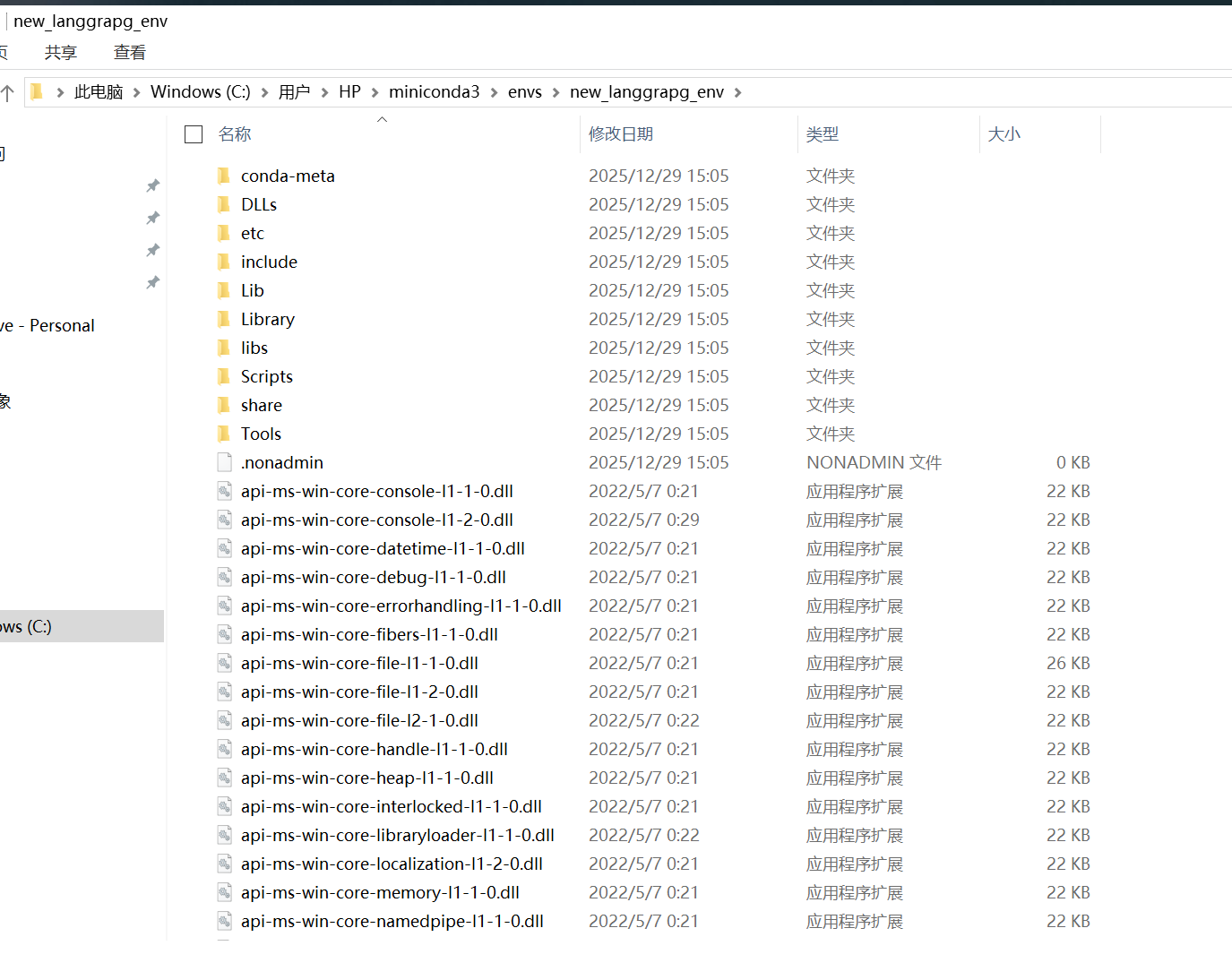

Actual location on disk:

C:\Users\HP\miniconda3\envs\new_langgrapg_env\Scripts
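To double-check that the shell is really using the new environment, a small script can report which interpreter is running. This is only a sketch: the helper name `is_expected_python` is made up for illustration, and the 3.11 target simply mirrors the `python=3.11` used in the `conda create` command above.

```python
import sys


def is_expected_python(version_info: tuple, major: int = 3, minor: int = 11) -> bool:
    """Return True when the interpreter version matches the wanted major.minor."""
    return tuple(version_info[:2]) == (major, minor)


# Inside the activated environment, both paths should point somewhere under
# ...\envs\new_langgrapg_env (the environment created above).
print("interpreter:", sys.executable)
print("environment prefix:", sys.prefix)
print("is Python 3.11:", is_expected_python(sys.version_info))
```

If the last line prints False, the most likely cause is that another environment (for example the earlier zyyenv) is still active.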

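2. Install langgraph-cli

This is the officially recommended command for installing langgraph-cli. No version is pinned, so pip installs the latest release.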

pip install --upgrade "langgraph-cli[inmem]"

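Hands-on: this installs a large number of packages.

Make sure they are installed into the target virtual environment.

Here that is the environment just created, so activate it first: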

conda activate new_langgrapg_env

C:\Users\HP\miniconda3\envs\new_langgrapg_env> conda activate new_langgrapg_env

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>pip install --upgrade "langgraph-cli[inmem]"

Collecting langgraph-cli[inmem]

Using cached langgraph_cli-0.4.11-py3-none-any.whl.metadata (4.0 kB)

Collecting click>=8.1.7 (from langgraph-cli[inmem])

Using cached click-8.3.1-py3-none-any.whl.metadata (2.6 kB)

Collecting langgraph-sdk>=0.1.0 (from langgraph-cli[inmem])

Using cached langgraph_sdk-0.3.1-py3-none-any.whl.metadata (1.6 kB)

Collecting langgraph-api<0.7.0,>=0.5.35 (from langgraph-cli[inmem])

Using cached langgraph_api-0.6.15-py3-none-any.whl.metadata (4.2 kB)

Collecting langgraph-runtime-inmem>=0.7 (from langgraph-cli[inmem])

Using cached langgraph_runtime_inmem-0.20.1-py3-none-any.whl.metadata (570 bytes)

Collecting python-dotenv>=0.8.0 (from langgraph-cli[inmem])

Using cached python_dotenv-1.2.1-py3-none-any.whl.metadata (25 kB)

Collecting cloudpickle>=3.0.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached cloudpickle-3.1.2-py3-none-any.whl.metadata (7.1 kB)

Collecting cryptography<47.0,>=42.0.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached cryptography-46.0.3-cp311-abi3-win_amd64.whl.metadata (5.7 kB)

Collecting grpcio-tools==1.75.1 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading grpcio_tools-1.75.1-cp311-cp311-win_amd64.whl.metadata (5.5 kB)

Collecting grpcio<2.0.0,>=1.75.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading grpcio-1.76.0-cp311-cp311-win_amd64.whl.metadata (3.8 kB)

Collecting httpx>=0.25.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached httpx-0.28.1-py3-none-any.whl.metadata (7.1 kB)

Collecting jsonschema-rs<0.30,>=0.20.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading jsonschema_rs-0.29.1-cp311-cp311-win_amd64.whl.metadata (14 kB)

Collecting langchain-core>=0.3.64 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached langchain_core-1.2.5-py3-none-any.whl.metadata (3.7 kB)

Collecting langgraph-checkpoint<5,>=3.0.1 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached langgraph_checkpoint-3.0.1-py3-none-any.whl.metadata (4.7 kB)

Collecting langgraph<2,>=0.4.10 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached langgraph-1.0.5-py3-none-any.whl.metadata (7.4 kB)

Collecting langsmith>=0.3.45 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached langsmith-0.5.1-py3-none-any.whl.metadata (15 kB)

Collecting opentelemetry-api>=1.37.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached opentelemetry_api-1.39.1-py3-none-any.whl.metadata (1.5 kB)

Collecting opentelemetry-exporter-otlp-proto-http>=1.37.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached opentelemetry_exporter_otlp_proto_http-1.39.1-py3-none-any.whl.metadata (2.4 kB)

Collecting opentelemetry-sdk>=1.37.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached opentelemetry_sdk-1.39.1-py3-none-any.whl.metadata (1.5 kB)

Collecting orjson>=3.9.7 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading orjson-3.11.5-cp311-cp311-win_amd64.whl.metadata (42 kB)

Collecting protobuf<7.0.0,>=6.32.1 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached protobuf-6.33.2-cp310-abi3-win_amd64.whl.metadata (593 bytes)

Collecting pyjwt>=2.9.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached PyJWT-2.10.1-py3-none-any.whl.metadata (4.0 kB)

Collecting sse-starlette<2.2.0,>=2.1.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached sse_starlette-2.1.3-py3-none-any.whl.metadata (5.8 kB)

Collecting starlette>=0.38.6 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached starlette-0.50.0-py3-none-any.whl.metadata (6.3 kB)

Collecting structlog<26,>=24.1.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached structlog-25.5.0-py3-none-any.whl.metadata (9.5 kB)

Collecting tenacity>=8.0.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached tenacity-9.1.2-py3-none-any.whl.metadata (1.2 kB)

Collecting truststore>=0.1 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached truststore-0.10.4-py3-none-any.whl.metadata (4.4 kB)

Collecting uuid-utils>=0.12.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached uuid_utils-0.12.0-cp39-abi3-win_amd64.whl.metadata (1.1 kB)

Collecting uvicorn>=0.26.0 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached uvicorn-0.40.0-py3-none-any.whl.metadata (6.7 kB)

Collecting watchfiles>=0.13 (from langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading watchfiles-1.1.1-cp311-cp311-win_amd64.whl.metadata (5.0 kB)

Requirement already satisfied: setuptools in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from grpcio-tools==1.75.1->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem]) (80.9.0)

Collecting cffi>=2.0.0 (from cryptography<47.0,>=42.0.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading cffi-2.0.0-cp311-cp311-win_amd64.whl.metadata (2.6 kB)

Collecting typing-extensions~=4.12 (from grpcio<2.0.0,>=1.75.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached typing_extensions-4.15.0-py3-none-any.whl.metadata (3.3 kB)

Collecting langgraph-prebuilt<1.1.0,>=1.0.2 (from langgraph<2,>=0.4.10->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached langgraph_prebuilt-1.0.5-py3-none-any.whl.metadata (5.2 kB)

Collecting pydantic>=2.7.4 (from langgraph<2,>=0.4.10->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached pydantic-2.12.5-py3-none-any.whl.metadata (90 kB)

Collecting xxhash>=3.5.0 (from langgraph<2,>=0.4.10->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading xxhash-3.6.0-cp311-cp311-win_amd64.whl.metadata (13 kB)

Collecting ormsgpack>=1.12.0 (from langgraph-checkpoint<5,>=3.0.1->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading ormsgpack-1.12.1-cp311-cp311-win_amd64.whl.metadata (3.3 kB)

Collecting blockbuster<2.0.0,>=1.5.24 (from langgraph-runtime-inmem>=0.7->langgraph-cli[inmem])

Using cached blockbuster-1.5.26-py3-none-any.whl.metadata (10 kB)

Collecting forbiddenfruit>=0.1.4 (from blockbuster<2.0.0,>=1.5.24->langgraph-runtime-inmem>=0.7->langgraph-cli[inmem])

Using cached forbiddenfruit-0.1.4.tar.gz (43 kB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

Collecting anyio (from sse-starlette<2.2.0,>=2.1.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached anyio-4.12.0-py3-none-any.whl.metadata (4.3 kB)

Collecting pycparser (from cffi>=2.0.0->cryptography<47.0,>=42.0.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached pycparser-2.23-py3-none-any.whl.metadata (993 bytes)

Collecting colorama (from click>=8.1.7->langgraph-cli[inmem])

Using cached colorama-0.4.6-py2.py3-none-any.whl.metadata (17 kB)

Collecting certifi (from httpx>=0.25.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached certifi-2025.11.12-py3-none-any.whl.metadata (2.5 kB)

Collecting httpcore==1.* (from httpx>=0.25.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached httpcore-1.0.9-py3-none-any.whl.metadata (21 kB)

Collecting idna (from httpx>=0.25.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached idna-3.11-py3-none-any.whl.metadata (8.4 kB)

Collecting h11>=0.16 (from httpcore==1.*->httpx>=0.25.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached h11-0.16.0-py3-none-any.whl.metadata (8.3 kB)

Collecting jsonpatch<2.0.0,>=1.33.0 (from langchain-core>=0.3.64->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached jsonpatch-1.33-py2.py3-none-any.whl.metadata (3.0 kB)

Collecting packaging<26.0.0,>=23.2.0 (from langchain-core>=0.3.64->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached packaging-25.0-py3-none-any.whl.metadata (3.3 kB)

Collecting pyyaml<7.0.0,>=5.3.0 (from langchain-core>=0.3.64->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading pyyaml-6.0.3-cp311-cp311-win_amd64.whl.metadata (2.4 kB)

Collecting jsonpointer>=1.9 (from jsonpatch<2.0.0,>=1.33.0->langchain-core>=0.3.64->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached jsonpointer-3.0.0-py2.py3-none-any.whl.metadata (2.3 kB)

Collecting requests-toolbelt>=1.0.0 (from langsmith>=0.3.45->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached requests_toolbelt-1.0.0-py2.py3-none-any.whl.metadata (14 kB)

Collecting requests>=2.0.0 (from langsmith>=0.3.45->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached requests-2.32.5-py3-none-any.whl.metadata (4.9 kB)

Collecting zstandard>=0.23.0 (from langsmith>=0.3.45->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading zstandard-0.25.0-cp311-cp311-win_amd64.whl.metadata (3.3 kB)

Collecting annotated-types>=0.6.0 (from pydantic>=2.7.4->langgraph<2,>=0.4.10->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached annotated_types-0.7.0-py3-none-any.whl.metadata (15 kB)

Collecting pydantic-core==2.41.5 (from pydantic>=2.7.4->langgraph<2,>=0.4.10->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading pydantic_core-2.41.5-cp311-cp311-win_amd64.whl.metadata (7.4 kB)

Collecting typing-inspection>=0.4.2 (from pydantic>=2.7.4->langgraph<2,>=0.4.10->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached typing_inspection-0.4.2-py3-none-any.whl.metadata (2.6 kB)

Collecting importlib-metadata<8.8.0,>=6.0 (from opentelemetry-api>=1.37.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached importlib_metadata-8.7.1-py3-none-any.whl.metadata (4.7 kB)

Collecting zipp>=3.20 (from importlib-metadata<8.8.0,>=6.0->opentelemetry-api>=1.37.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached zipp-3.23.0-py3-none-any.whl.metadata (3.6 kB)

Collecting googleapis-common-protos~=1.52 (from opentelemetry-exporter-otlp-proto-http>=1.37.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached googleapis_common_protos-1.72.0-py3-none-any.whl.metadata (9.4 kB)

Collecting opentelemetry-exporter-otlp-proto-common==1.39.1 (from opentelemetry-exporter-otlp-proto-http>=1.37.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached opentelemetry_exporter_otlp_proto_common-1.39.1-py3-none-any.whl.metadata (1.8 kB)

Collecting opentelemetry-proto==1.39.1 (from opentelemetry-exporter-otlp-proto-http>=1.37.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached opentelemetry_proto-1.39.1-py3-none-any.whl.metadata (2.3 kB)

Collecting opentelemetry-semantic-conventions==0.60b1 (from opentelemetry-sdk>=1.37.0->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached opentelemetry_semantic_conventions-0.60b1-py3-none-any.whl.metadata (2.4 kB)

Collecting charset_normalizer<4,>=2 (from requests>=2.0.0->langsmith>=0.3.45->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Downloading charset_normalizer-3.4.4-cp311-cp311-win_amd64.whl.metadata (38 kB)

Collecting urllib3<3,>=1.21.1 (from requests>=2.0.0->langsmith>=0.3.45->langgraph-api<0.7.0,>=0.5.35->langgraph-cli[inmem])

Using cached urllib3-2.6.2-py3-none-any.whl.metadata (6.6 kB)

Using cached langgraph_cli-0.4.11-py3-none-any.whl (41 kB)

Using cached langgraph_api-0.6.15-py3-none-any.whl (322 kB)

Downloading grpcio_tools-1.75.1-cp311-cp311-win_amd64.whl (1.2 MB)

---------------------------------------- 1.2/1.2 MB 28.9 MB/s 0:00:00

Using cached cryptography-46.0.3-cp311-abi3-win_amd64.whl (3.5 MB)

Downloading grpcio-1.76.0-cp311-cp311-win_amd64.whl (4.7 MB)

---------------------------------------- 4.7/4.7 MB 35.6 MB/s 0:00:00

Downloading jsonschema_rs-0.29.1-cp311-cp311-win_amd64.whl (1.9 MB)

---------------------------------------- 1.9/1.9 MB 100.6 MB/s 0:00:00

Using cached langgraph-1.0.5-py3-none-any.whl (157 kB)

Using cached langgraph_checkpoint-3.0.1-py3-none-any.whl (46 kB)

Using cached langgraph_prebuilt-1.0.5-py3-none-any.whl (35 kB)

Using cached langgraph_runtime_inmem-0.20.1-py3-none-any.whl (35 kB)

Using cached blockbuster-1.5.26-py3-none-any.whl (13 kB)

Using cached langgraph_sdk-0.3.1-py3-none-any.whl (66 kB)

Using cached protobuf-6.33.2-cp310-abi3-win_amd64.whl (436 kB)

Using cached sse_starlette-2.1.3-py3-none-any.whl (9.4 kB)

Using cached structlog-25.5.0-py3-none-any.whl (72 kB)

Using cached typing_extensions-4.15.0-py3-none-any.whl (44 kB)

Downloading cffi-2.0.0-cp311-cp311-win_amd64.whl (182 kB)

Using cached click-8.3.1-py3-none-any.whl (108 kB)

Using cached cloudpickle-3.1.2-py3-none-any.whl (22 kB)

Using cached httpx-0.28.1-py3-none-any.whl (73 kB)

Using cached httpcore-1.0.9-py3-none-any.whl (78 kB)

Using cached h11-0.16.0-py3-none-any.whl (37 kB)

Using cached langchain_core-1.2.5-py3-none-any.whl (484 kB)

Using cached jsonpatch-1.33-py2.py3-none-any.whl (12 kB)

Using cached langsmith-0.5.1-py3-none-any.whl (275 kB)

Using cached packaging-25.0-py3-none-any.whl (66 kB)

Using cached pydantic-2.12.5-py3-none-any.whl (463 kB)

Downloading pydantic_core-2.41.5-cp311-cp311-win_amd64.whl (2.0 MB)

---------------------------------------- 2.0/2.0 MB 116.6 MB/s 0:00:00

Downloading pyyaml-6.0.3-cp311-cp311-win_amd64.whl (158 kB)

Using cached tenacity-9.1.2-py3-none-any.whl (28 kB)

Using cached uuid_utils-0.12.0-cp39-abi3-win_amd64.whl (183 kB)

Using cached annotated_types-0.7.0-py3-none-any.whl (13 kB)

Using cached jsonpointer-3.0.0-py2.py3-none-any.whl (7.6 kB)

Using cached opentelemetry_api-1.39.1-py3-none-any.whl (66 kB)

Using cached importlib_metadata-8.7.1-py3-none-any.whl (27 kB)

Using cached opentelemetry_exporter_otlp_proto_http-1.39.1-py3-none-any.whl (19 kB)

Using cached opentelemetry_exporter_otlp_proto_common-1.39.1-py3-none-any.whl (18 kB)

Using cached opentelemetry_proto-1.39.1-py3-none-any.whl (72 kB)

Using cached googleapis_common_protos-1.72.0-py3-none-any.whl (297 kB)

Using cached opentelemetry_sdk-1.39.1-py3-none-any.whl (132 kB)

Using cached opentelemetry_semantic_conventions-0.60b1-py3-none-any.whl (219 kB)

Using cached requests-2.32.5-py3-none-any.whl (64 kB)

Downloading charset_normalizer-3.4.4-cp311-cp311-win_amd64.whl (106 kB)

Using cached idna-3.11-py3-none-any.whl (71 kB)

Using cached urllib3-2.6.2-py3-none-any.whl (131 kB)

Using cached certifi-2025.11.12-py3-none-any.whl (159 kB)

Downloading orjson-3.11.5-cp311-cp311-win_amd64.whl (133 kB)

Downloading ormsgpack-1.12.1-cp311-cp311-win_amd64.whl (115 kB)

Using cached PyJWT-2.10.1-py3-none-any.whl (22 kB)

Using cached python_dotenv-1.2.1-py3-none-any.whl (21 kB)

Using cached requests_toolbelt-1.0.0-py2.py3-none-any.whl (54 kB)

Using cached starlette-0.50.0-py3-none-any.whl (74 kB)

Using cached anyio-4.12.0-py3-none-any.whl (113 kB)

Using cached truststore-0.10.4-py3-none-any.whl (18 kB)

Using cached typing_inspection-0.4.2-py3-none-any.whl (14 kB)

Using cached uvicorn-0.40.0-py3-none-any.whl (68 kB)

Downloading watchfiles-1.1.1-cp311-cp311-win_amd64.whl (287 kB)

Downloading xxhash-3.6.0-cp311-cp311-win_amd64.whl (31 kB)

Using cached zipp-3.23.0-py3-none-any.whl (10 kB)

Downloading zstandard-0.25.0-cp311-cp311-win_amd64.whl (506 kB)

Using cached colorama-0.4.6-py2.py3-none-any.whl (25 kB)

Using cached pycparser-2.23-py3-none-any.whl (118 kB)

Building wheels for collected packages: forbiddenfruit

Building wheel for forbiddenfruit (pyproject.toml) ... done

Created wheel for forbiddenfruit: filename=forbiddenfruit-0.1.4-py3-none-any.whl size=21929 sha256=4fac7d335cb4afa51d74aace99e30eccdeb0d914ac5bb367589eb34bb30b6f7b

Stored in directory: c:\users\hp\appdata\local\pip\cache\wheels\a3\09\38\5a94e2df4ae8f2cfc8053997507ad46b07009af3afc34a9a9c

Successfully built forbiddenfruit

Installing collected packages: forbiddenfruit, zstandard, zipp, xxhash, uuid-utils, urllib3, typing-extensions, truststore, tenacity, structlog, pyyaml, python-dotenv, pyjwt, pycparser, protobuf, packaging, ormsgpack, orjson, jsonschema-rs, jsonpointer, idna, h11, colorama, cloudpickle, charset_normalizer, certifi, blockbuster, annotated-types, typing-inspection, requests, pydantic-core, opentelemetry-proto, jsonpatch, importlib-metadata, httpcore, grpcio, googleapis-common-protos, click, cffi, anyio, watchfiles, uvicorn, starlette, requests-toolbelt, pydantic, opentelemetry-exporter-otlp-proto-common, opentelemetry-api, httpx, grpcio-tools, cryptography, sse-starlette, opentelemetry-semantic-conventions, langsmith, langgraph-sdk, opentelemetry-sdk, langgraph-cli, langchain-core, opentelemetry-exporter-otlp-proto-http, langgraph-checkpoint, langgraph-prebuilt, langgraph, langgraph-runtime-inmem, langgraph-api

Successfully installed annotated-types-0.7.0 anyio-4.12.0 blockbuster-1.5.26 certifi-2025.11.12 cffi-2.0.0 charset_normalizer-3.4.4 click-8.3.1 cloudpickle-3.1.2 colorama-0.4.6 cryptography-46.0.3 forbiddenfruit-0.1.4 googleapis-common-protos-1.72.0 grpcio-1.76.0 grpcio-tools-1.75.1 h11-0.16.0 httpcore-1.0.9 httpx-0.28.1 idna-3.11 importlib-metadata-8.7.1 jsonpatch-1.33 jsonpointer-3.0.0 jsonschema-rs-0.29.1 langchain-core-1.2.5 langgraph-1.0.5 langgraph-api-0.6.15 langgraph-checkpoint-3.0.1 langgraph-cli-0.4.11 langgraph-prebuilt-1.0.5 langgraph-runtime-inmem-0.20.1 langgraph-sdk-0.3.1 langsmith-0.5.1 opentelemetry-api-1.39.1 opentelemetry-exporter-otlp-proto-common-1.39.1 opentelemetry-exporter-otlp-proto-http-1.39.1 opentelemetry-proto-1.39.1 opentelemetry-sdk-1.39.1 opentelemetry-semantic-conventions-0.60b1 orjson-3.11.5 ormsgpack-1.12.1 packaging-25.0 protobuf-6.33.2 pycparser-2.23 pydantic-2.12.5 pydantic-core-2.41.5 pyjwt-2.10.1 python-dotenv-1.2.1 pyyaml-6.0.3 requests-2.32.5 requests-toolbelt-1.0.0 sse-starlette-2.1.3 starlette-0.50.0 structlog-25.5.0 tenacity-9.1.2 truststore-0.10.4 typing-extensions-4.15.0 typing-inspection-0.4.2 urllib3-2.6.2 uuid-utils-0.12.0 uvicorn-0.40.0 watchfiles-1.1.1 xxhash-3.6.0 zipp-3.23.0 zstandard-0.25.0

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>

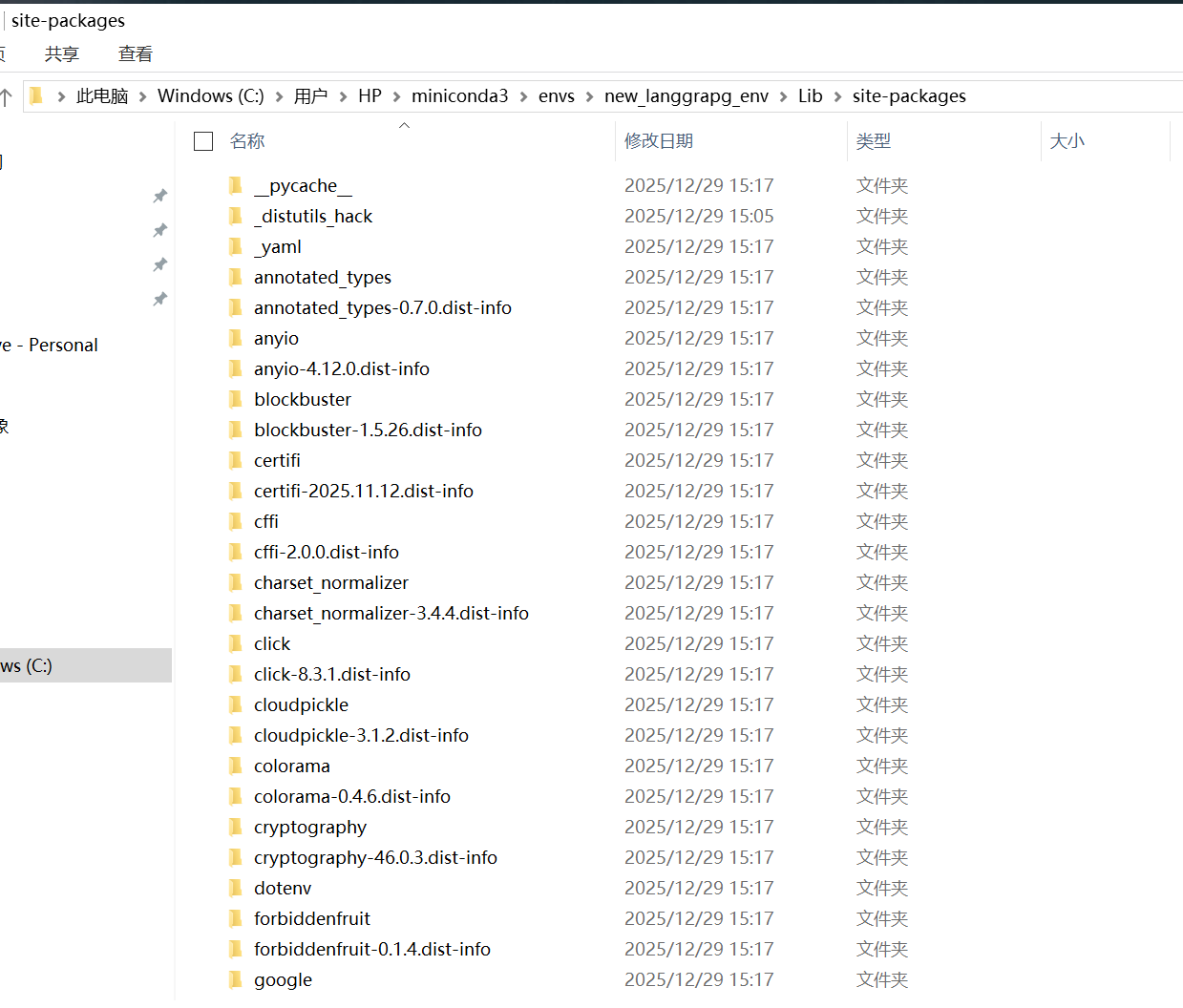

Actual location on disk (see the screenshot above).

You can also list the installed packages:

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>pip list

Package Version

---------------------------------------- ----------

annotated-types 0.7.0

anyio 4.12.0

blockbuster 1.5.26

certifi 2025.11.12

cffi 2.0.0

charset-normalizer 3.4.4

click 8.3.1

cloudpickle 3.1.2

colorama 0.4.6

cryptography 46.0.3

forbiddenfruit 0.1.4

googleapis-common-protos 1.72.0

grpcio 1.76.0

grpcio-tools 1.75.1

h11 0.16.0

httpcore 1.0.9

httpx 0.28.1

idna 3.11

importlib_metadata 8.7.1

jsonpatch 1.33

jsonpointer 3.0.0

jsonschema_rs 0.29.1

langchain-core 1.2.5

langgraph 1.0.5

langgraph-api 0.6.15

langgraph-checkpoint 3.0.1

langgraph-cli 0.4.11

langgraph-prebuilt 1.0.5

langgraph-runtime-inmem 0.20.1

langgraph-sdk 0.3.1

langsmith 0.5.1

opentelemetry-api 1.39.1

opentelemetry-exporter-otlp-proto-common 1.39.1

opentelemetry-exporter-otlp-proto-http 1.39.1

opentelemetry-proto 1.39.1

opentelemetry-sdk 1.39.1

opentelemetry-semantic-conventions 0.60b1

orjson 3.11.5

ormsgpack 1.12.1

packaging 25.0

pip 25.3

protobuf 6.33.2

pycparser 2.23

pydantic 2.12.5

pydantic_core 2.41.5

PyJWT 2.10.1

python-dotenv 1.2.1

PyYAML 6.0.3

requests 2.32.5

requests-toolbelt 1.0.0

setuptools 80.9.0

sse-starlette 2.1.3

starlette 0.50.0

structlog 25.5.0

tenacity 9.1.2

truststore 0.10.4

typing_extensions 4.15.0

typing-inspection 0.4.2

urllib3 2.6.2

uuid_utils 0.12.0

uvicorn 0.40.0

watchfiles 1.1.1

wheel 0.45.1

xxhash 3.6.0

zipp 3.23.0

zstandard 0.25.0

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>

The list shows that langchain-core, langgraph, and pip are all installed.

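3. Create an application with langgraph-cli

Hands-on

Note: activate the virtual environment first, then change to the directory where the project should live,

and only then run the command.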

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>cd C:\Users\HP\PycharmProjects

(new_langgrapg_env) C:\Users\HP\PycharmProjects>langgraph new langgraph_demo

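The CLI asks two questions.

First, it asks you to pick one of five templates.

Pressing Enter accepts the default, template 1: New LangGraph Project, a minimal chatbot with memory.

Second, it asks for the language.

Language: Python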

(new_langgrapg_env) C:\Users\HP\miniconda3\envs\new_langgrapg_env>cd C:\Users\HP\PycharmProjects

(new_langgrapg_env) C:\Users\HP\PycharmProjects>langgraph new langgraph_demo

🌟 Please select a template:

1. New LangGraph Project - A simple, minimal chatbot with memory.

2. ReAct Agent - A simple agent that can be flexibly extended to many tools.

3. Memory Agent - A ReAct-style agent with an additional tool to store memories for use across conversational threads.

4. Retrieval Agent - An agent that includes a retrieval-based question-answering system.

5. Data-enrichment Agent - An agent that performs web searches and organizes its findings into a structured format.

Enter the number of your template choice (default is 1):

You selected: New LangGraph Project - A simple, minimal chatbot with memory.

Choose language (1 for Python 🐍, 2 for JS/TS 🌐): 1

📥 Attempting to download repository as a ZIP archive...

URL: https://github.com/langchain-ai/new-langgraph-project/archive/refs/heads/main.zip

✅ Downloaded and extracted repository to C:\Users\HP\PycharmProjects\langgraph_demo

🎉 New project created at C:\Users\HP\PycharmProjects\langgraph_demo

(new_langgrapg_env) C:\Users\HP\PycharmProjects>

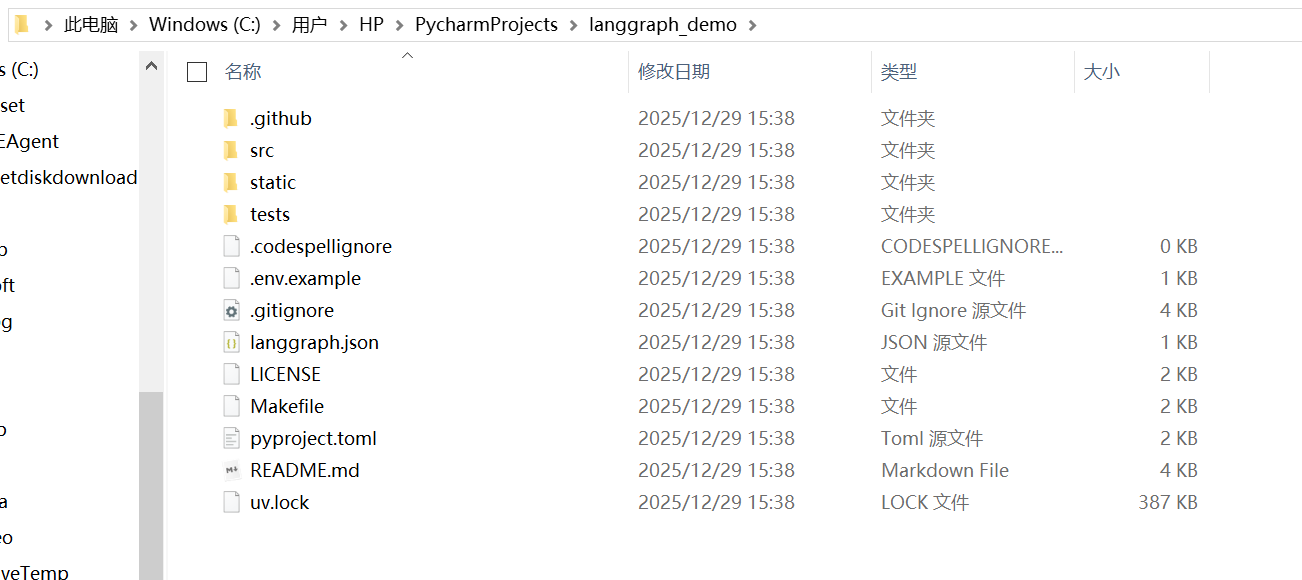

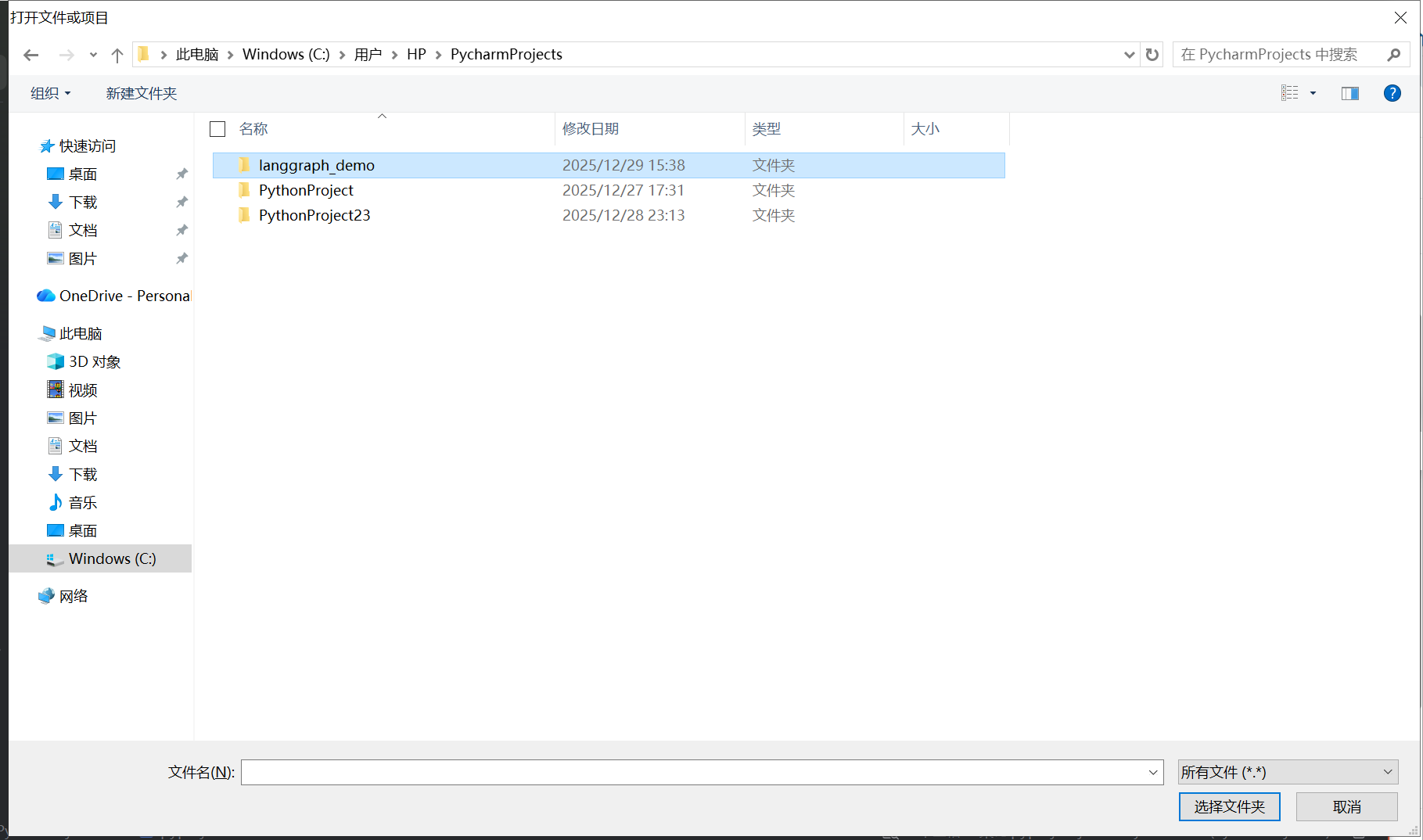

The project has been created in the target directory, from inside the virtual environment.

Open it directly in PyCharm.

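In summary, the CLI actually did the following:

Downloaded the official template from GitHub

https://github.com/langchain-ai/new-langgraph-project

Extracted it and generated the project at:

C:\Users\HP\PycharmProjects\langgraph_demo

Project creation finished successfully (New project created)

This confirms:

- langgraph-cli is installed correctly

- The Python environment new_langgrapg_env works

- Network / GitHub access works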
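The "download a ZIP, then extract it" step reported in the log can be sketched with the standard library. This is not the CLI's own code. It only illustrates the idea, assuming the usual GitHub archive layout in which every file sits under one top-level folder such as `new-langgraph-project-main/`. The function name `extract_without_top_dir` is hypothetical.

```python
import io
import zipfile
from pathlib import Path, PurePosixPath


def extract_without_top_dir(archive_bytes: bytes, target_dir: Path) -> list[Path]:
    """Extract a ZIP archive into target_dir, dropping the single top-level folder."""
    written: list[Path] = []
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        for info in archive.infolist():
            parts = PurePosixPath(info.filename).parts
            # Skip directory entries, top-level files, and unsafe ".." paths.
            if info.is_dir() or len(parts) < 2 or ".." in parts:
                continue
            destination = target_dir.joinpath(*parts[1:])
            destination.parent.mkdir(parents=True, exist_ok=True)
            destination.write_bytes(archive.read(info))
            written.append(destination)
    return written


# Usage idea (not executed here, needs network access):
#   from urllib.request import urlopen
#   url = "https://github.com/langchain-ai/new-langgraph-project/archive/refs/heads/main.zip"
#   extract_without_top_dir(urlopen(url).read(), Path("langgraph_demo"))
```

If GitHub is unreachable, downloading the ZIP by hand and extracting it this way yields the same project layout, although the CLI may perform extra steps that this sketch does not cover.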

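What is now on the machine

In this directory:

C:\Users\HP\PycharmProjects\langgraph_demo

there is a runnable LangGraph sample project, which typically contains:

- A basic LangGraph graph structure

- A chatbot with memory

- A Python project layout (can be opened directly in PyCharm)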

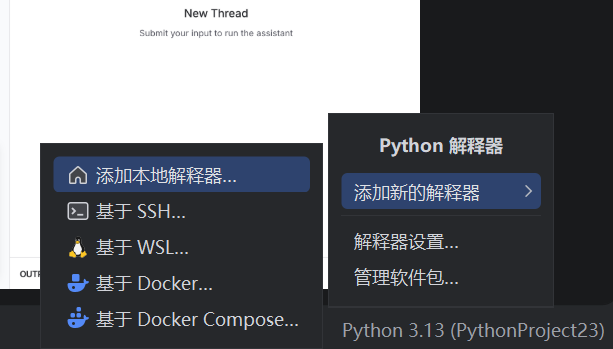

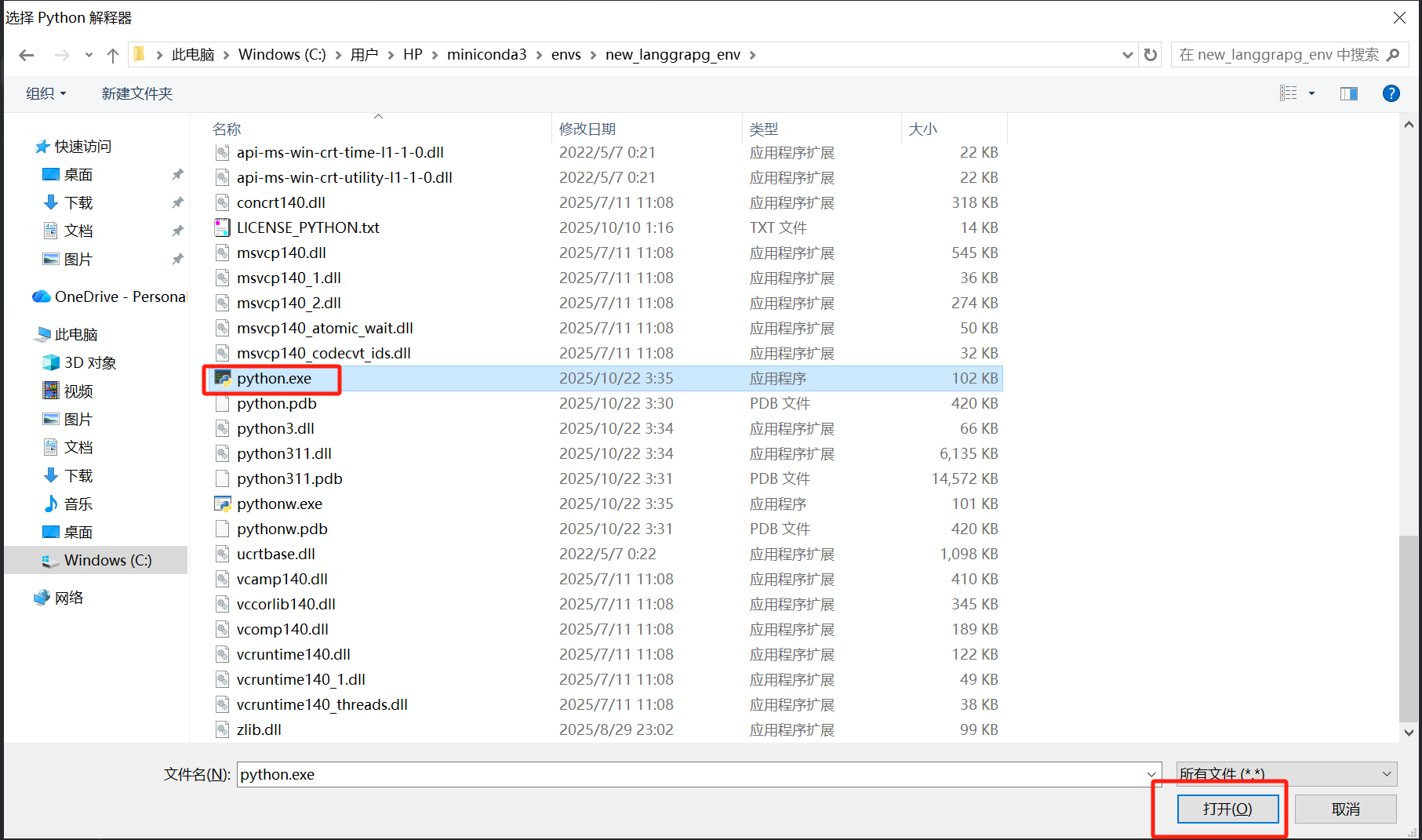

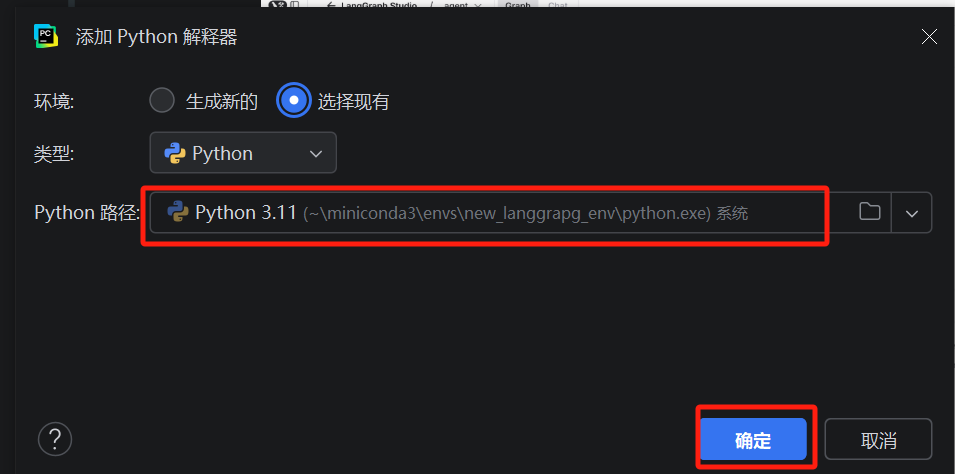

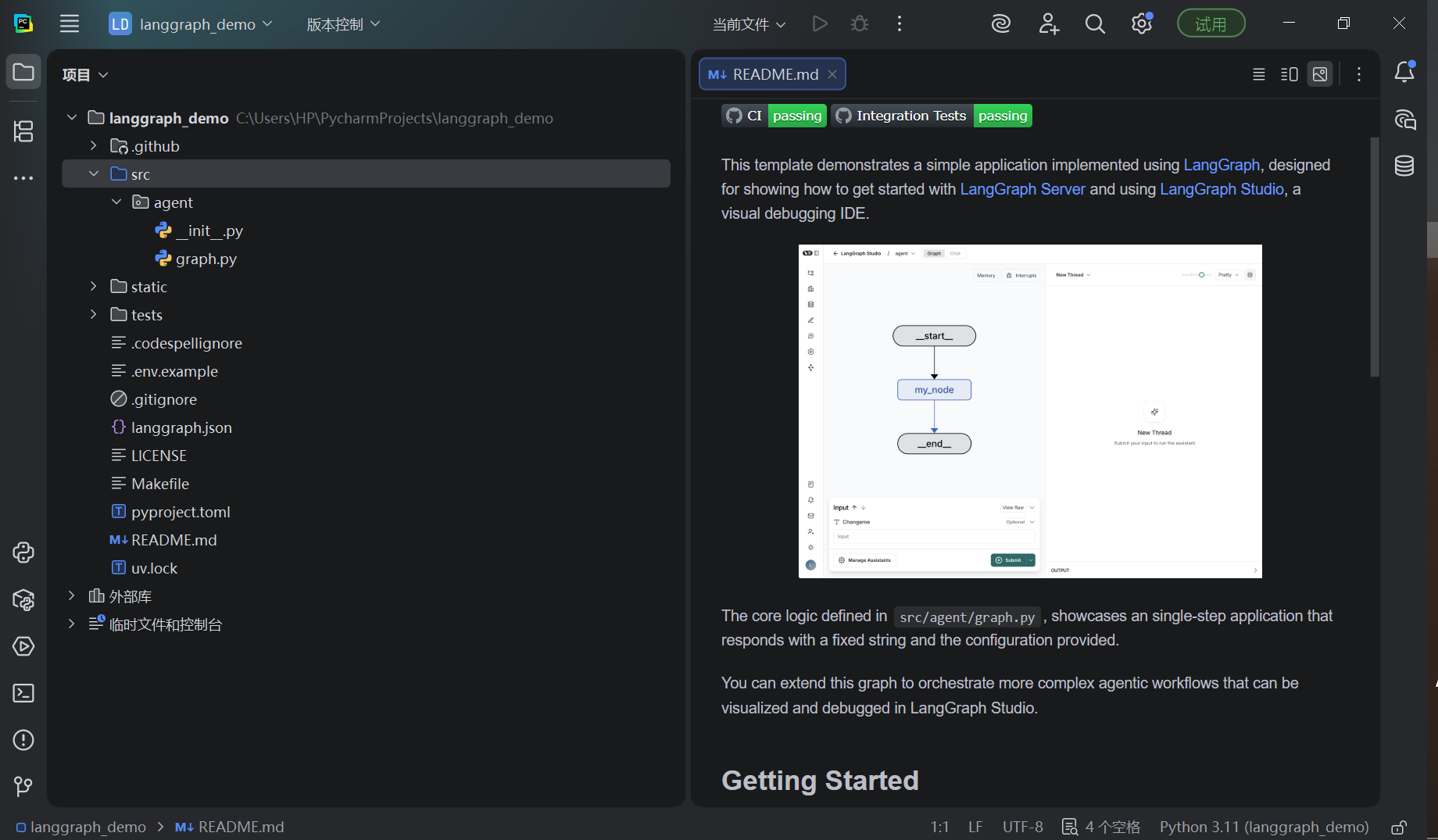

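5. Open the created project in PyCharm

You can manually set the project interpreter to the Python 3.11 interpreter of the virtual environment (the new_langgrapg_env environment created with conda in step 1).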

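6. Anatomy of the LangGraph project

Project structure overview

langgraph_demo/
├─ .github/            # CI / GitHub Actions (can be ignored for now)
├─ src/
│  └─ agent/           # ⭐ core LangGraph agent code
│     ├─ __init__.py
│     └─ graph.py      # ⭐⭐⭐ the most important file
├─ static/             # front-end / static assets (usually empty in the sample project)
├─ tests/              # test code
├─ .env.example        # ⭐⭐⭐ environment variable example (API keys): copy it to .env and fill in your own key
├─ langgraph.json      # LangGraph CLI configuration
├─ pyproject.toml      # Python project and dependency definitions
├─ uv.lock             # dependency lock file
├─ README.md           # usage instructions
├─ Makefile            # wrappers for common commands
└─ LICENSE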

The rest of this section breaks the project structure down layer by layer from an engineering perspective and points out which file to start reading from.

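I. Overall conclusion (the "navigation" summary)

There is a single core entry point:

src/agent/graph.py

Nearly all of the project's LangGraph logic lives in this file.

If time is short, reading this file alone is enough.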

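II. Project structure overview (what you see now)

langgraph_demo/
├─ .github/            # CI / GitHub Actions (can be ignored for now)
├─ src/
│  └─ agent/           # ⭐ core LangGraph agent code
│     ├─ __init__.py
│     └─ graph.py      # ⭐⭐⭐ the most important file
├─ static/             # front-end / static assets (usually empty in the sample project)
├─ tests/              # test code
├─ .env.example        # environment variable example (API keys)
├─ langgraph.json      # LangGraph CLI configuration
├─ pyproject.toml      # Python project and dependency definitions
├─ uv.lock             # dependency lock file
├─ README.md           # usage instructions
├─ Makefile            # wrappers for common commands
└─ LICENSE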

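III. The five files most worth reading (in order)

① README.md: how to run the project and what the template is for

Read this first to find out:

- how the project is started

- which environment variables it needs

- which kind of example it is (chat / agent / memory)

② .env.example: the LLM configuration template (very important)

It usually contains something like:

OPENAI_API_KEY=

What you need to do:

- Copy it to .env

- Fill in your own key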
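The copy step can also be scripted so that an existing `.env` is never overwritten. This is a minimal sketch using only the standard library. The function name `ensure_env_file` is made up for illustration, and the key still has to be filled in by hand afterwards.

```python
import shutil
from pathlib import Path


def ensure_env_file(project_dir: Path) -> Path:
    """Create .env from .env.example if .env does not exist yet, then return its path."""
    example = project_dir / ".env.example"
    target = project_dir / ".env"
    if not target.exists():
        # Copy the template once; an existing .env (with real keys) is left untouched.
        shutil.copyfile(example, target)
    return target


# Usage idea, run from the project root:
#   ensure_env_file(Path("."))
```

Keeping `.env` out of version control is the usual practice, since it ends up holding real API keys.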

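③ pyproject.toml: the environment configuration file (project dependencies and Python version)

From the root directory of your new LangGraph app, install the dependencies in editable mode so that the server uses your local changes.

In a LangGraph project, pyproject.toml replaces the traditional setup.py and requirements.txt, and may contain the following additional configuration:

- Dependency groups: for example, [project.optional-dependencies] defines dev (development tools) and test (test frameworks) dependencies.

- Version constraints: requires-python declares Python version compatibility (this project uses ">=3.10").

- CI/CD integration: [tool.*] tables hold tool settings used alongside GitHub Actions or GitLab CI.

Here you can confirm:

- which Python version is used

- whether the project depends on langgraph / langchain

- whether uv is used

This walkthrough uses conda + pip, which is fine.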

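[project]
# Project name (the Python package name)
# This is the name used by pip install -e .
name = "agent"
# Project version
version = "0.0.1"
# Project description (used in package metadata and the README)
description = "Starter template for making a new agent LangGraph."
# Author information (does not affect running the project)
authors = [
    { name = "William Fu-Hinthorn", email = "13333726+hinthornw@users.noreply.github.com" },
]
# Project documentation file
readme = "README.md"
# Open-source license
license = { text = "MIT" }
# Python version requirement (very important)
# Python 3.10 or later is required
requires-python = ">=3.10"
# Dependencies that must be installed at runtime (core)
dependencies = [
    "langgraph>=1.0.0",       # LangGraph core framework
    "python-dotenv>=1.0.1",   # loads environment variables from .env
]

[project.optional-dependencies]
# Dependencies for the development environment only
# Normally not deployed to production
dev = [
    "mypy>=1.11.1",   # static type checking
    "ruff>=0.6.1",    # code formatting / lint tool
]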

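CLI-related

Notes:

This project is built to be served by the LangGraph CLI

and uses the in-memory runtime mode (the [inmem] extra).

It was generated by the command run earlier:

langgraph new langgraph_demo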

[dependency-groups]

dev = [

"anyio>=4.7.0",

"langgraph-cli[inmem]>=0.4.7",

"mypy>=1.13.0",

"pytest>=8.3.5",

"ruff>=0.8.2",

]

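④ langgraph.json: the LangGraph project configuration file

This file is what lets the LangGraph CLI recognize the project. It usually contains:

- the agent (graph) definitions

- the startup entry point

- CLI / Studio related settings

It rarely needs manual edits, but it is important to know it exists.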

{

"$schema": "https://langgra.ph/schema.json",

"dependencies": ["."],

"graphs": {

"agent": "./src/agent/graph.py:graph"

},

"env": ".env",

"image_distro": "wolfi"

}

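{
  // Annotated copy of the file above. The // comments are for explanation only;
  // standard JSON does not allow comments.

  // Python dependencies to install when running the graph.
  // "." means: install the project root itself (i.e. pyproject.toml).
  // Equivalent to: pip install -e .
  "dependencies": ["."],

  // Entry points of the graphs.
  // key: graph name (used in the CLI / Studio)
  // value: the agent object, written as Python file path : variable name
  "graphs": {
    "agent": "./src/agent/graph.py:graph"
  },

  // Environment variable file loaded automatically at startup.
  // Typically holds OPENAI_API_KEY and similar keys.
  "env": ".env",

  // Base image distribution used when building containers / running remotely (Docker etc.).
  // wolfi = officially recommended, strong security posture.
  // Can mostly be ignored during local development.
  "image_distro": "wolfi"
}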
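The `"file path : variable name"` convention in the `graphs` entry can be made concrete with a few lines of Python. This sketch only parses the string. It does not reproduce how the CLI itself loads graphs, and `graph_entry_points` is a hypothetical helper name.

```python
import json

# Same content as the langgraph.json shown above (without comments).
LANGGRAPH_JSON = """
{
  "dependencies": ["."],
  "graphs": {"agent": "./src/agent/graph.py:graph"},
  "env": ".env",
  "image_distro": "wolfi"
}
"""


def graph_entry_points(config_text: str) -> dict[str, tuple[str, str]]:
    """Map each graph name to (python_file, variable_name)."""
    config = json.loads(config_text)
    entries: dict[str, tuple[str, str]] = {}
    for name, spec in config.get("graphs", {}).items():
        # Split on the last colon so the file path stays intact.
        file_path, _, variable = spec.rpartition(":")
        entries[name] = (file_path, variable)
    return entries


print(graph_entry_points(LANGGRAPH_JSON))
```

Read this way, the entry says: the graph named `agent` is the module-level variable `graph` defined in `src/agent/graph.py`.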

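⑤ ⭐ src/agent/graph.py: the actual "business logic"

This is the file to read closely.

It usually contains:

- the State definition (similar to a Java DTO)

- Nodes (node functions)

- the Graph (how the nodes are connected)

- the Memory / Checkpoint mechanism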

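IV. Understanding it from a Java / back-end engineering angle (important)

A useful analogy:

| LangGraph | Java back-end analogy |
|---|---|
| State | DTO / VO |
| Node | Service method |
| Edge | Flow control (if / switch) |
| Graph | Workflow / state machine |
| Memory | Session / Redis / DB |

In essence, LangGraph = LLM + state machine.

This is also why it tends to suit engineering work better than plain LangChain.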

This template is fairly simple. As next steps, you could:

- turn it into a REST API (FastAPI)

- add a Tool / DB / HTTP call

- build a multi-agent workflow

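7. pip install -e . reads pyproject.toml and installs the dependencies into the virtual environment

Install the Python project in the current directory into the active virtual environment in editable mode: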

pip install -e .

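Explanation of pip install -e .

What it does

- Installs the project in the current directory into the active virtual environment

- Uses editable mode (-e), so changes to the source take effect immediately

What happens in detail

- Reads pyproject.toml

- Installs the dependencies (such as langgraph and python-dotenv)

- Lets us import agent directly

When to run it again

- After changing code: not needed

- After changing pyproject.toml / dependencies: needed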

Microsoft Windows [Version 10.0.19045.6466]

(c) Microsoft Corporation. All rights reserved.

(new_langgrapg_env) C:\Users\HP\PycharmProjects\langgraph_demo>pip install -e .

Obtaining file:///C:/Users/HP/PycharmProjects/langgraph_demo

Installing build dependencies ... done

Checking if build backend supports build_editable ... done

Getting requirements to build editable ... done

Preparing editable metadata (pyproject.toml) ... done

Requirement already satisfied: langgraph>=1.0.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from agent==0.0.1) (1.0.5)

Requirement already satisfied: python-dotenv>=1.0.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from agent==0.0.1) (1.2.1)

Requirement already satisfied: langchain-core>=0.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph>=1.0.0->agent==0.0.1) (1.2.5)

Requirement already satisfied: langgraph-checkpoint<4.0.0,>=2.1.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph>=1.0.0->agent==0.0.1) (3.0.1)

Requirement already satisfied: langgraph-prebuilt<1.1.0,>=1.0.2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph>=1.0.0->agent==0.0.1) (1.0.5)

Requirement already satisfied: langgraph-sdk<0.4.0,>=0.3.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph>=1.0.0->agent==0.0.1) (0.3.1)

Requirement already satisfied: pydantic>=2.7.4 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph>=1.0.0->agent==0.0.1) (2.12.5)

Requirement already satisfied: xxhash>=3.5.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph>=1.0.0->agent==0.0.1) (3.6.0)

Requirement already satisfied: ormsgpack>=1.12.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph-checkpoint<4.0.0,>=2.1.0->langgraph>=1.0.0->agent==0.0.1) (1.12.1)

Requirement already satisfied: httpx>=0.25.2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (0.28.1)

Requirement already satisfied: orjson>=3.10.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (3.11.5)

Requirement already satisfied: anyio in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpx>=0.25.2->langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (4.12.0)

Requirement already satisfied: certifi in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpx>=0.25.2->langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (2025.11.12)

Requirement already satisfied: httpcore==1.* in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpx>=0.25.2->langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (1.0.9)

Requirement already satisfied: idna in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpx>=0.25.2->langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (3.11)

Requirement already satisfied: h11>=0.16 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpcore==1.*->httpx>=0.25.2->langgraph-sdk<0.4.0,>=0.3.0->langgraph>=1.0.0->agent==0.0.1) (0.16.0)

Requirement already satisfied: jsonpatch<2.0.0,>=1.33.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (1.33)

Requirement already satisfied: langsmith<1.0.0,>=0.3.45 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (0.5.1)

Requirement already satisfied: packaging<26.0.0,>=23.2.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (25.0)

Requirement already satisfied: pyyaml<7.0.0,>=5.3.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (6.0.3)

Requirement already satisfied: tenacity!=8.4.0,<10.0.0,>=8.1.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (9.1.2)

Requirement already satisfied: typing-extensions<5.0.0,>=4.7.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (4.15.0)

Requirement already satisfied: uuid-utils<1.0,>=0.12.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (0.12.0)

Requirement already satisfied: jsonpointer>=1.9 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from jsonpatch<2.0.0,>=1.33.0->langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (3.0.0)

Requirement already satisfied: requests-toolbelt>=1.0.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langsmith<1.0.0,>=0.3.45->langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (1.0.0)

Requirement already satisfied: requests>=2.0.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langsmith<1.0.0,>=0.3.45->langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (2.32.5)

Requirement already satisfied: zstandard>=0.23.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langsmith<1.0.0,>=0.3.45->langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (0.25.0)

Requirement already satisfied: annotated-types>=0.6.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from pydantic>=2.7.4->langgraph>=1.0.0->agent==0.0.1) (0.7.0)

Requirement already satisfied: pydantic-core==2.41.5 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from pydantic>=2.7.4->langgraph>=1.0.0->agent==0.0.1) (2.41.5)

Requirement already satisfied: typing-inspection>=0.4.2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from pydantic>=2.7.4->langgraph>=1.0.0->agent==0.0.1) (0.4.2)

Requirement already satisfied: charset_normalizer<4,>=2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from requests>=2.0.0->langsmith<1.0.0,>=0.3.45->langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (3.4.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from requests>=2.0.0->langsmith<1.0.0,>=0.3.45->langchain-core>=0.1->langgraph>=1.0.0->agent==0.0.1) (2.6.2)

Building wheels for collected packages: agent

Building editable for agent (pyproject.toml) ... done

Created wheel for agent: filename=agent-0.0.1-0.editable-py3-none-any.whl size=5144 sha256=a20865e802be5e6ebdd07270ad3ffcb8bc365c47d40879ff204dc7e1ada9c7af

Stored in directory: C:\Users\HP\AppData\Local\Temp\pip-ephem-wheel-cache-ayffcelk\wheels\ff\f9\32\fde3f2c0f36f1489ceb5a0b8240e369fdb64b13393b9bb691e

Successfully built agent

Installing collected packages: agent

Successfully installed agent-0.0.1

(new_langgrapg_env) C:\Users\HP\PycharmProjects\langgraph_demo>

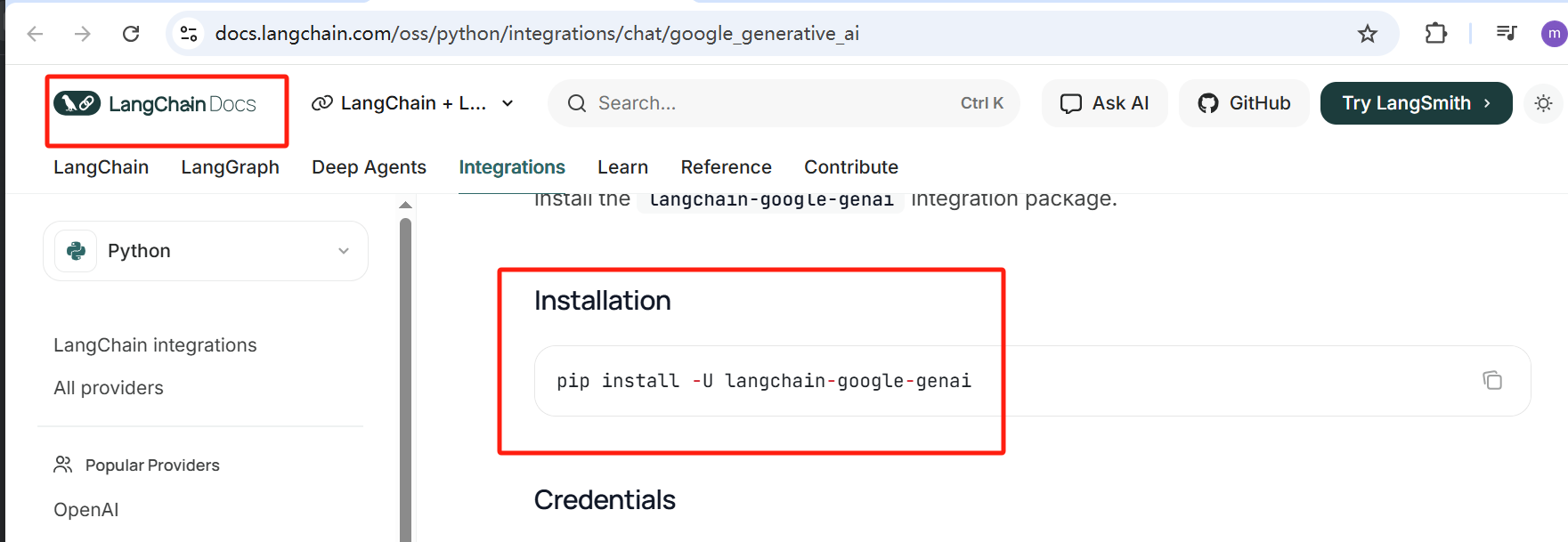

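8. .env environment file: setting the API key and installing the LangChain provider for the LLM

The LLM used here is Gemini 2.5 Flash.

According to the LangChain documentation, Gemini requires installing langchain-google-genai and authenticating through an environment variable such as GOOGLE_API_KEY or GEMINI_API_KEY.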

pip install -U langchain-google-genai

Hands-on

(new_langgrapg_env) C:\Users\HP\PycharmProjects\langgraph_demo>pip install -U langchain-google-genai

Collecting langchain-google-genai

Downloading langchain_google_genai-4.1.2-py3-none-any.whl.metadata (2.7 kB)

Collecting filetype<2.0.0,>=1.2.0 (from langchain-google-genai)

Downloading filetype-1.2.0-py2.py3-none-any.whl.metadata (6.5 kB)

Collecting google-genai<2.0.0,>=1.56.0 (from langchain-google-genai)

Using cached google_genai-1.56.0-py3-none-any.whl.metadata (53 kB)

Requirement already satisfied: langchain-core<2.0.0,>=1.2.2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-google-genai) (1.2.5)

Requirement already satisfied: pydantic<3.0.0,>=2.0.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-google-genai) (2.12.5)

Requirement already satisfied: anyio<5.0.0,>=4.8.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from google-genai<2.0.0,>=1.56.0->langchain-google-genai) (4.12.0)

Collecting google-auth<3.0.0,>=2.45.0 (from google-auth[requests]<3.0.0,>=2.45.0->google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached google_auth-2.45.0-py2.py3-none-any.whl.metadata (6.8 kB)

Requirement already satisfied: httpx<1.0.0,>=0.28.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from google-genai<2.0.0,>=1.56.0->langchain-google-genai) (0.28.1)

Requirement already satisfied: requests<3.0.0,>=2.28.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from google-genai<2.0.0,>=1.56.0->langchain-google-genai) (2.32.5)

Requirement already satisfied: tenacity<9.2.0,>=8.2.3 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from google-genai<2.0.0,>=1.56.0->langchain-google-genai) (9.1.2)

Collecting websockets<15.1.0,>=13.0.0 (from google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Downloading websockets-15.0.1-cp311-cp311-win_amd64.whl.metadata (7.0 kB)

Requirement already satisfied: typing-extensions<5.0.0,>=4.11.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from google-genai<2.0.0,>=1.56.0->langchain-google-genai) (4.15.0)

Collecting distro<2,>=1.7.0 (from google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached distro-1.9.0-py3-none-any.whl.metadata (6.8 kB)

Collecting sniffio (from google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached sniffio-1.3.1-py3-none-any.whl.metadata (3.9 kB)

Requirement already satisfied: idna>=2.8 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from anyio<5.0.0,>=4.8.0->google-genai<2.0.0,>=1.56.0->langchain-google-genai) (3.11)

Collecting cachetools<7.0,>=2.0.0 (from google-auth<3.0.0,>=2.45.0->google-auth[requests]<3.0.0,>=2.45.0->google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached cachetools-6.2.4-py3-none-any.whl.metadata (5.6 kB)

Collecting pyasn1-modules>=0.2.1 (from google-auth<3.0.0,>=2.45.0->google-auth[requests]<3.0.0,>=2.45.0->google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached pyasn1_modules-0.4.2-py3-none-any.whl.metadata (3.5 kB)

Collecting rsa<5,>=3.1.4 (from google-auth<3.0.0,>=2.45.0->google-auth[requests]<3.0.0,>=2.45.0->google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached rsa-4.9.1-py3-none-any.whl.metadata (5.6 kB)

Requirement already satisfied: certifi in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpx<1.0.0,>=0.28.1->google-genai<2.0.0,>=1.56.0->langchain-google-genai) (2025.11.12)

Requirement already satisfied: httpcore==1.* in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpx<1.0.0,>=0.28.1->google-genai<2.0.0,>=1.56.0->langchain-google-genai) (1.0.9)

Requirement already satisfied: h11>=0.16 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from httpcore==1.*->httpx<1.0.0,>=0.28.1->google-genai<2.0.0,>=1.56.0->langchain-google-genai) (0.16.0)

Requirement already satisfied: jsonpatch<2.0.0,>=1.33.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (1.33)

Requirement already satisfied: langsmith<1.0.0,>=0.3.45 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (0.5.1)

Requirement already satisfied: packaging<26.0.0,>=23.2.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (25.0)

Requirement already satisfied: pyyaml<7.0.0,>=5.3.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (6.0.3)

Requirement already satisfied: uuid-utils<1.0,>=0.12.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (0.12.0)

Requirement already satisfied: jsonpointer>=1.9 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from jsonpatch<2.0.0,>=1.33.0->langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (3.0.0)

Requirement already satisfied: orjson>=3.9.14 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langsmith<1.0.0,>=0.3.45->langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (3.11.5)

Requirement already satisfied: requests-toolbelt>=1.0.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langsmith<1.0.0,>=0.3.45->langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (1.0.0)

Requirement already satisfied: zstandard>=0.23.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from langsmith<1.0.0,>=0.3.45->langchain-core<2.0.0,>=1.2.2->langchain-google-genai) (0.25.0)

Requirement already satisfied: annotated-types>=0.6.0 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from pydantic<3.0.0,>=2.0.0->langchain-google-genai) (0.7.0)

Requirement already satisfied: pydantic-core==2.41.5 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from pydantic<3.0.0,>=2.0.0->langchain-google-genai) (2.41.5)

Requirement already satisfied: typing-inspection>=0.4.2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from pydantic<3.0.0,>=2.0.0->langchain-google-genai) (0.4.2)

Requirement already satisfied: charset_normalizer<4,>=2 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from requests<3.0.0,>=2.28.1->google-genai<2.0.0,>=1.56.0->langchain-google-genai) (3.4.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\hp\miniconda3\envs\new_langgrapg_env\lib\site-packages (from requests<3.0.0,>=2.28.1->google-genai<2.0.0,>=1.56.0->langchain-google-genai) (2.6.2)

Collecting pyasn1>=0.1.3 (from rsa<5,>=3.1.4->google-auth<3.0.0,>=2.45.0->google-auth[requests]<3.0.0,>=2.45.0->google-genai<2.0.0,>=1.56.0->langchain-google-genai)

Using cached pyasn1-0.6.1-py3-none-any.whl.metadata (8.4 kB)

Downloading langchain_google_genai-4.1.2-py3-none-any.whl (65 kB)

Downloading filetype-1.2.0-py2.py3-none-any.whl (19 kB)

Using cached google_genai-1.56.0-py3-none-any.whl (426 kB)

Using cached distro-1.9.0-py3-none-any.whl (20 kB)

Using cached google_auth-2.45.0-py2.py3-none-any.whl (233 kB)

Using cached cachetools-6.2.4-py3-none-any.whl (11 kB)

Using cached rsa-4.9.1-py3-none-any.whl (34 kB)

Downloading websockets-15.0.1-cp311-cp311-win_amd64.whl (176 kB)

Using cached pyasn1-0.6.1-py3-none-any.whl (83 kB)

Using cached pyasn1_modules-0.4.2-py3-none-any.whl (181 kB)

Using cached sniffio-1.3.1-py3-none-any.whl (10 kB)

Installing collected packages: filetype, websockets, sniffio, pyasn1, distro, cachetools, rsa, pyasn1-modules, google-auth, google-genai, langchain-google-genai

Successfully installed cachetools-6.2.4 distro-1.9.0 filetype-1.2.0 google-auth-2.45.0 google-genai-1.56.0 langchain-google-genai-4.1.2 pyasn1-0.6.1 pyasn1-modules-0.4.2 rsa-4.9.1 sniffio-1.3.1 websockets-15.0.1

(new_langgrapg_env) C:\Users\HP\PycharmProjects\langgraph_demo>

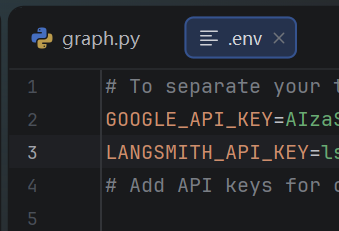

Next, configure the .env environment file.

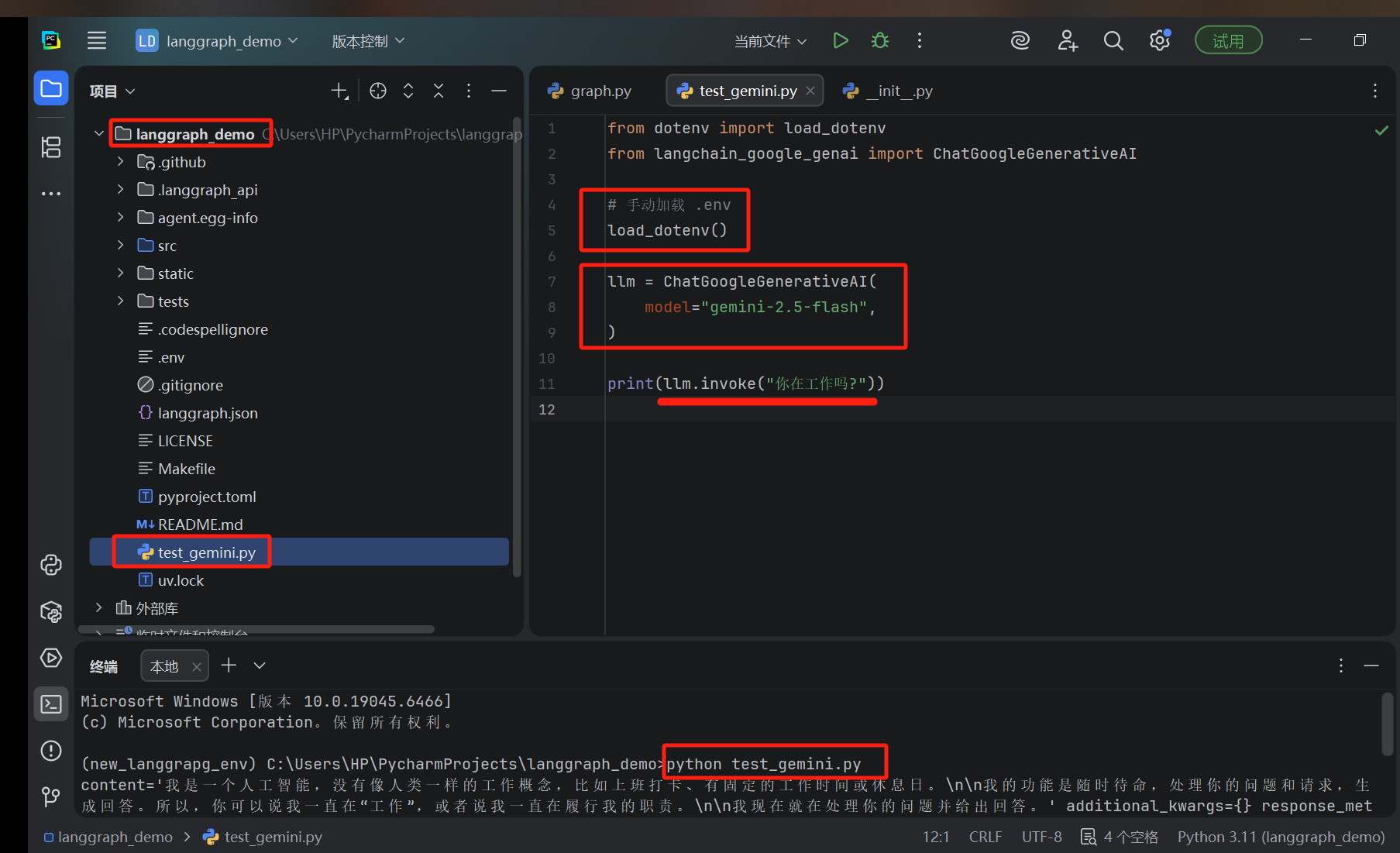

Put test_gemini.py in the project directory to check up front that the LLM call works.

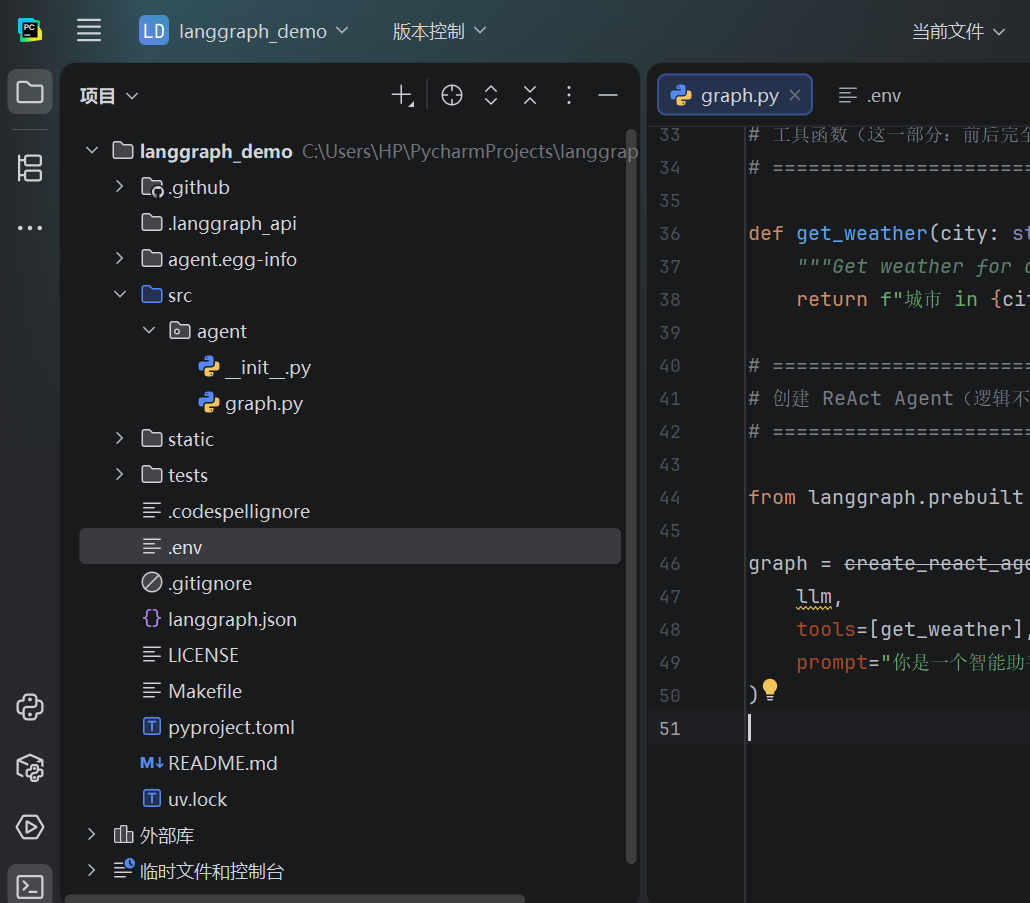
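Before calling the model, it can help to confirm that a key is actually visible to the process. The sketch below is not the test_gemini.py from the screenshot; it is a standard-library-only pre-flight check. The helper names `mask_secret` and `find_api_key` are hypothetical, and the lookup order of the two variable names is an assumption. Run it after `load_dotenv()` if the key lives in .env.

```python
import os
from typing import Mapping, Optional, Tuple

# Assumed lookup order; the article only says either variable can be used.
KEY_NAMES = ("GOOGLE_API_KEY", "GEMINI_API_KEY")


def mask_secret(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a secret."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def find_api_key(env: Mapping[str, str]) -> Optional[Tuple[str, str]]:
    """Return (variable name, masked value) for the first key that is set."""
    for name in KEY_NAMES:
        value = env.get(name, "").strip()
        if value:
            return name, mask_secret(value)
    return None


if __name__ == "__main__":
    found = find_api_key(os.environ)
    if found is None:
        print("No API key found; check the .env file.")
    else:
        print(f"{found[0]} is set: {found[1]}")
```

Printing only a masked value keeps the real key out of terminal logs and screenshots.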

9. graph.py: the AI agent's business code

Location in the project

# ============================================================
# Before: locally hosted private model (OpenAI-compatible protocol)
# ============================================================
# from langchain_openai import ChatOpenAI
#
# llm = ChatOpenAI(
#     model="qwen3-8b",                      # model name
#     temperature=0.8,                       # randomness; higher values give more varied answers
#     api_key="xx",                          # API key (any placeholder usually works locally)
#     base_url="http://localhost:6006/v1",   # OpenAI-compatible endpoint of the local model
#     extra_body={                           # extra parameters passed through to the model
#         "chat_template_kwargs": {
#             "enable_thinking": False       # turn off "thinking" output (Qwen template parameter)
#         }
#     },
# )

# ============================================================
# After: Gemini 2.5 Flash (official Gemini API + LangChain adapter)
# ============================================================
import os

from dotenv import load_dotenv
from langchain_google_genai import ChatGoogleGenerativeAI
from langgraph.prebuilt import create_react_agent

load_dotenv()  # read GOOGLE_API_KEY from .env

# Confirm the key was loaded without printing the secret itself
print("GOOGLE_API_KEY loaded:", bool(os.getenv("GOOGLE_API_KEY")))

llm = ChatGoogleGenerativeAI(
    model="gemini-2.5-flash",  # Gemini 2.5 Flash (note: "flash", not "fash")
    temperature=0.8,           # same meaning as for OpenAI / Qwen
    thinking_budget=0,         # ⭐ counterpart of enable_thinking=False (turns thinking off)
)

# ============================================================
# Tool function (identical before and after; no change needed)
# ============================================================
def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"城市 in {city},今天天气晴朗!"  # demo string: "City {city}: the weather is sunny today!"

# ============================================================
# Create the ReAct agent (same logic; only the LLM implementation changed)
# ============================================================
# The variable name `graph` matches langgraph.json: "agent": "./src/agent/graph.py:graph"
graph = create_react_agent(
    llm,                             # Gemini 2.5 Flash
    tools=[get_weather],             # tools the agent may call
    prompt="你是一个智能助手!",        # system prompt ("You are an intelligent assistant!")
)
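The tool above is a plain Python function, so what the model learns about it comes from its name, docstring, and type hints. The sketch below only inspects that metadata with the standard library; it is not LangChain's own conversion code, and `describe_tool` is a hypothetical helper introduced for illustration.

```python
import inspect
from typing import get_type_hints


def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"城市 in {city},今天天气晴朗!"


def describe_tool(func) -> dict:
    """Collect the metadata a function exposes: name, docstring, typed parameters."""
    hints = get_type_hints(func)
    params = {
        name: hints.get(name, object).__name__
        for name in inspect.signature(func).parameters
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": params,
        "returns": hints.get("return", object).__name__,
    }


print(describe_tool(get_weather))
```

A missing docstring or untyped parameter would show up here as `None` or `object`, which is a quick hint that the tool definition may be too vague for the model to use reliably.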

10. langgraph dev: starting the LangGraph development server

langgraph dev

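I. Purpose

- Starts the LangGraph server in in-memory mode
- Suited to development and debugging
- Not suited to production (data is not persisted)

II. Default behavior (no extra configuration needed)

- Configuration file: langgraph.json
- Listen address: 127.0.0.1
- Port: 2024
- Hot reload: enabled
- The browser opens automatically after startup (unless disabled)

After a successful start, the server is usually reachable at:

http://127.0.0.1:2024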

III. Common options (these few are enough to remember)

langgraph dev --host 0.0.0.0 --port 2024

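| Option | Type | Default | Description |
|---|---|---|---|
| --config | path | langgraph.json | Configuration file |
| --host | str | 127.0.0.1 | Listen address |
| --port | int | 2024 | Listen port |
| --no-reload | flag | false | Disable hot reload |
| --no-browser | flag | false | Do not open the browser |
| --debug-port | int | - | Debugger port |
| --wait-for-client | flag | false | Wait for a debugger client to attach |
| --n-jobs-per-worker | int | - | Number of concurrent jobs per worker |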
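If the server is launched from a script rather than typed by hand, the options can be assembled programmatically. This is a minimal sketch that uses only flags listed in the table above; `build_dev_command` is a hypothetical helper, and it only builds the argument list without running anything.

```python
from typing import List


def build_dev_command(
    host: str = "127.0.0.1",
    port: int = 2024,
    config: str = "langgraph.json",
    reload: bool = True,
    open_browser: bool = True,
) -> List[str]:
    """Build the argv list for `langgraph dev` from a few common options."""
    cmd = ["langgraph", "dev", "--config", config, "--host", host, "--port", str(port)]
    if not reload:
        cmd.append("--no-reload")    # turn hot reload off
    if not open_browser:
        cmd.append("--no-browser")   # do not open Studio automatically
    return cmd


print(" ".join(build_dev_command(host="0.0.0.0", open_browser=False)))
```

The returned list can be passed to `subprocess.run` from inside the activated environment.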

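IV. Recommended order of steps each time you start the project from now on

# 1. Enter the project directory

cd langgraph_demo

# 2. Make sure the project is installed

pip install -e .

# 3. Start the development server

langgraph dev

Summary in one sentence

langgraph dev starts the local LangGraph development server; it automatically reads langgraph.json and loads the graph you defined.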
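The link between langgraph.json and the code is the `"<file>:<variable>"` string. The sketch below parses such an entry with the standard library. Only the `"agent": "./src/agent/graph.py:graph"` entry comes from this article; the rest of `EXAMPLE_CONFIG` is an assumed minimal layout, and both helper functions are hypothetical.

```python
import json
from typing import Dict, Tuple

# Assumed minimal langgraph.json; only the "agent" entry is taken from the article.
EXAMPLE_CONFIG = """
{
  "dependencies": ["."],
  "graphs": {"agent": "./src/agent/graph.py:graph"},
  "env": ".env"
}
"""


def split_graph_spec(spec: str) -> Tuple[str, str]:
    """Split "path/to/file.py:variable" into (file path, variable name)."""
    path, sep, variable = spec.rpartition(":")
    if not sep or not path or not variable:
        raise ValueError(f"expected '<file>:<variable>', got {spec!r}")
    return path, variable


def graph_targets(config_text: str) -> Dict[str, Tuple[str, str]]:
    """Map each graph id to the file and variable it points at."""
    config = json.loads(config_text)
    return {gid: split_graph_spec(spec) for gid, spec in config.get("graphs", {}).items()}


print(graph_targets(EXAMPLE_CONFIG))
```

Renaming the `graph` variable in graph.py without updating this entry would leave the server unable to find the graph.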

Hands-on

Microsoft Windows [Version 10.0.19045.6466]

(c) Microsoft Corporation. All rights reserved.

(new_langgrapg_env) C:\Users\HP\PycharmProjects\langgraph_demo>langgraph dev

INFO:langgraph_api.cli:

Welcome to

╦ ┌─┐┌┐┌┌─┐╔═╗┬─┐┌─┐┌─┐┬ ┬

║ ├─┤││││ ┬║ ╦├┬┘├─┤├─┘├─┤

╩═╝┴ ┴┘└┘└─┘╚═╝┴└─┴ ┴┴ ┴ ┴

- 🚀 API: http://127.0.0.1:2024

- 🎨 Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

- 📚 API Docs: http://127.0.0.1:2024/docs

This in-memory server is designed for development and testing.

For production use, please use LangSmith Deployment.

2025-12-29T09:15:24.063012Z [info ] Using langgraph_runtime_inmem [langgraph_runtime] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:15:24.083068Z [info ] Using auth of type=noop [langgraph_api.auth.middleware] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:15:24.090068Z [info ] Starting In-Memory runtime with langgraph-api=0.6.15 and in-memory runtime=0.20.1 [langgraph_runtime_inmem.lifespan] api_variant=local_dev langgraph_api_version=0.6.15 langgraph_runtime_inmem_version=0.20.1 thread_name=asyncio_0 version=0.6.15

2025-12-29T09:15:24.431328Z [info ] Starting metadata loop [langgraph_api.metadata] api_variant=local_dev endpoint=https://api.smith.langchain.com/v1/metadata/submit langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:15:24.432255Z [info ] Getting auth instance: None [langgraph_api.auth.custom] api_variant=local_dev langgraph_api_version=0.6.15 langgraph_auth=None thread_name=MainThread

2025-12-29T09:15:24.439808Z [info ] Starting thread TTL sweeper with interval 5 minutes [langgraph_api.thread_ttl] api_variant=local_dev interval_minutes=5 langgraph_api_version=0.6.15 strategy=delete thread_name=asyncio_1

2025-12-29T09:15:24.673704Z [info ] HTTP Request: POST https://api.smith.langchain.com/v1/metadata/submit "HTTP/1.1 204 No Content" [httpx] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:15:24.720407Z [info ] Successfully submitted metadata to LangSmith instance [langgraph_api.metadata] api_variant=local_dev langgraph_api_version=0.6.15 n_nodes=0 n_runs=0 thread_name=MainThread

2025-12-29T09:15:25.977287Z [info ] Importing graph with id agent [langgraph_api.timing.timer] api_variant=local_dev elapsed_seconds=1.5456026000028942 graph_id=agent langgraph_api_version=0.6.15 module=None name=_graph_from_spec path=./src/agent/graph.py thread_name=asyncio_0

2025-12-29T09:15:25.978291Z [info ] Application started up in 2.250s [langgraph_api.timing.timer] api_variant=local_dev elapsed=2.25 langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:15:26.005472Z [info ] Starting 1 background workers [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=asyncio_2

2025-12-29T09:15:26.006576Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_0

Server started in 3.74s

2025-12-29T09:15:26.008285Z [info ] Server started in 3.74s [browser_opener] api_variant=local_dev langgraph_api_version=0.6.15 message='Server started in 3.74s' thread_name='Thread-2 (_open_browser)'

🎨 Opening Studio in your browser...

2025-12-29T09:15:26.009281Z [info ] 🎨 Opening Studio in your browser... [browser_opener] api_variant=local_dev langgraph_api_version=0.6.15 message='🎨 Opening Studio in your browser...' thread_name='Thread-2 (_open_browser)'

URL: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

2025-12-29T09:15:26.009281Z [info ] URL: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024 [browser_opener] api_variant=local_dev langgraph_api_version=0.6.15 message='URL: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024' thread_name='Thread-2 (_open_browser)'

2025-12-29T09:15:26.520774Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_2

2025-12-29T09:16:26.230347Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_0

2025-12-29T09:16:26.746978Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_2

2025-12-29T09:17:26.402147Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_0

2025-12-29T09:17:26.916070Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_2

2025-12-29T09:18:26.507434Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_0

2025-12-29T09:18:27.007509Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_2

2025-12-29T09:19:26.606802Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_0

2025-12-29T09:19:27.121037Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_2

2025-12-29T09:19:37.599623Z [info ] 4 changes detected [watchfiles.main] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:19:38.316082Z [info ] 1 change detected [watchfiles.main] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:20:24.950454Z [info ] HTTP Request: POST https://api.smith.langchain.com/v1/metadata/submit "HTTP/1.1 204 No Content" [httpx] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:20:24.953441Z [info ] Successfully submitted metadata to LangSmith instance [langgraph_api.metadata] api_variant=local_dev langgraph_api_version=0.6.15 n_nodes=0 n_runs=0 thread_name=MainThread

2025-12-29T09:20:26.805065Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_2

2025-12-29T09:20:27.306354Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_0

2025-12-29T09:21:27.089428Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_2

2025-12-29T09:21:27.588381Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_0

2025-12-29T09:22:27.178610Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_2

2025-12-29T09:22:27.682870Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_0

2025-12-29T09:23:27.454220Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_2

2025-12-29T09:23:27.952285Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_0

2025-12-29T09:24:27.504043Z [info ] Worker stats [langgraph_runtime_inmem.queue] active=0 api_variant=local_dev available=1 langgraph_api_version=0.6.15 max=1 thread_name=asyncio_2

2025-12-29T09:24:28.019370Z [info ] Queue stats [langgraph_runtime_inmem.queue] api_variant=local_dev langgraph_api_version=0.6.15 n_pending=0 n_running=0 pending_runs_wait_time_max_secs=None pending_runs_wait_time_med_secs=None thread_name=asyncio_0

2025-12-29T09:25:04.898115Z [info ] 4 changes detected [watchfiles.main] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

2025-12-29T09:25:05.252553Z [info ] 1 change detected [watchfiles.main] api_variant=local_dev langgraph_api_version=0.6.15 thread_name=MainThread

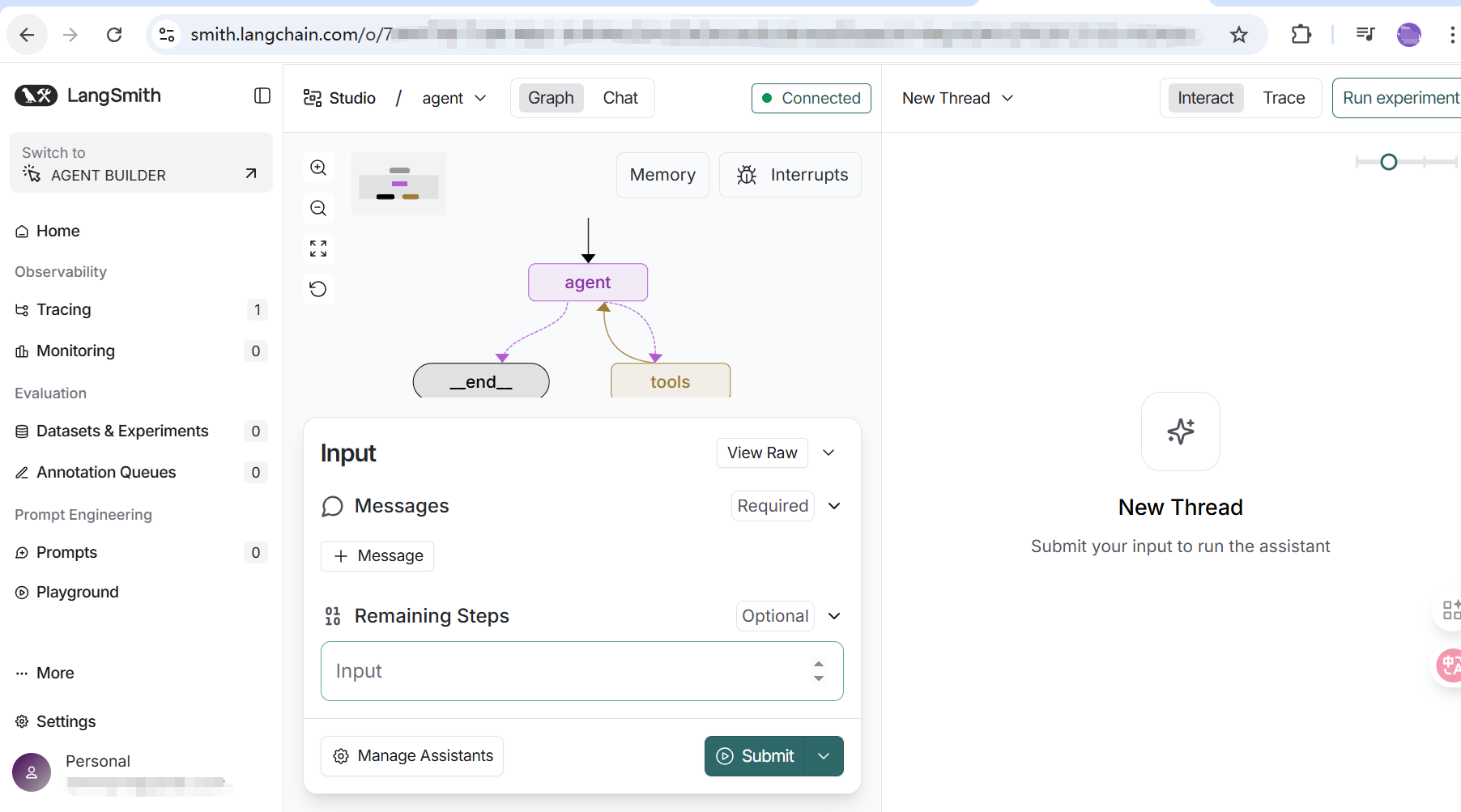

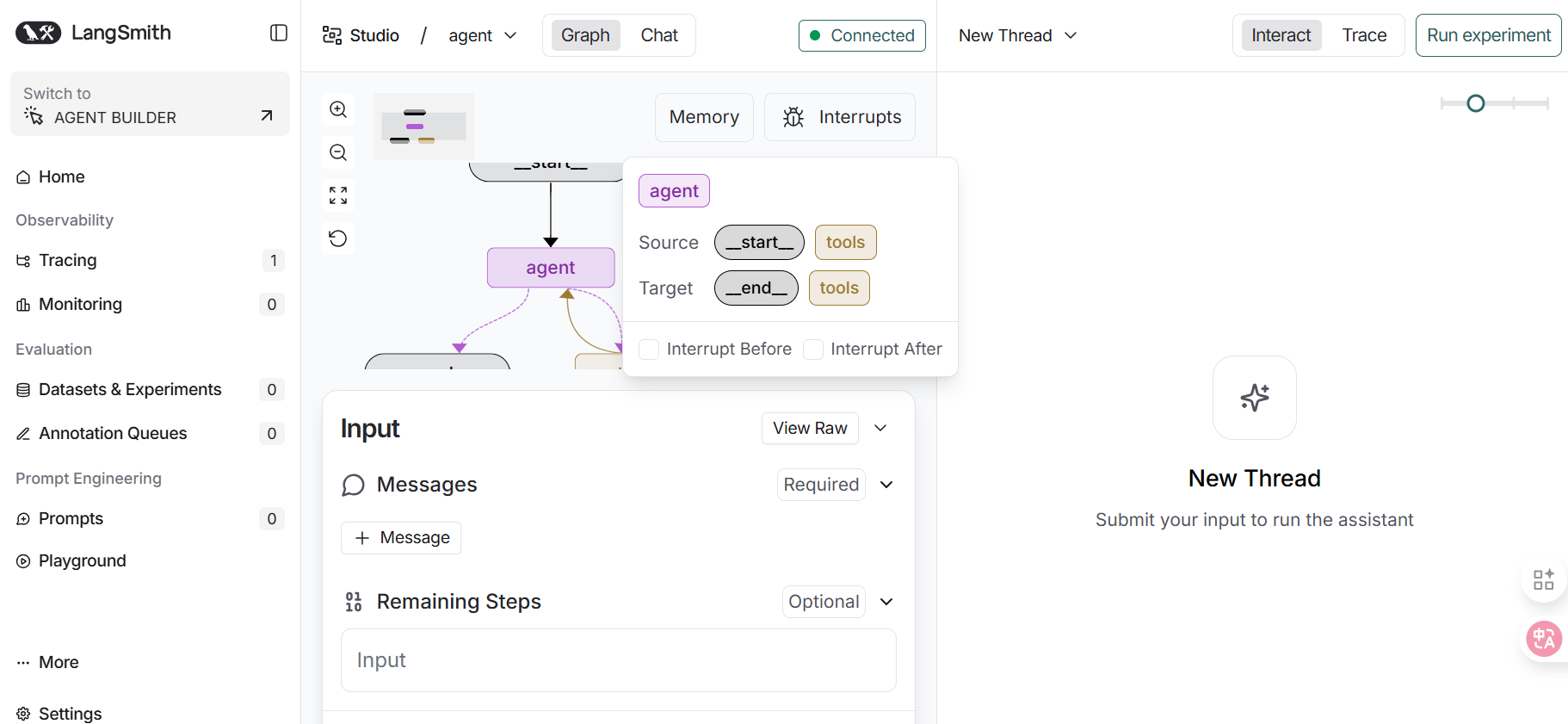

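11. Using LangSmith

Once the project has started successfully, LangSmith opens automatically.

The explanation below covers the graph in one pass, from the point of view of someone who is using LangGraph Studio right now.

I. What is this graph? (in one sentence)

It is the LangGraph execution graph of the current agent.

It shows the complete flow of one conversation: start → agent reasoning → tool call → end.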

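II. What each node in the graph means (top to bottom)

__start__ (entry point)

- Every New Thread (new conversation) starts here
- It corresponds to clicking Interact in the input box on the right

It cannot be modified; it is a built-in LangGraph node.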

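agent (core node) ⭐

- The agent itself
- What actually runs here:
  - Gemini (or whichever LLM you configured)
  - the prompt
  - the current messages
  - memory (if enabled)

In the code this corresponds to:

graph = create_react_agent(...)

Roughly 90% of the logic happens in this node.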

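tools (tool node)

- When the agent decides "I need to call a tool", execution moves from agent → tools
- The Python function you defined is executed, for example:

def get_weather(city: str) -> str:
    ...

After it finishes:

- The result is returned to agent
- The agent decides what to do next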

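The agent ↔ tools round-trip arrows (key point)

What you see is:

agent → tools → agent

This means:

- The agent runs in ReAct mode
- The agent can:
  - think
  - call a tool
  - think again
  - decide whether to continue

This loop is where the "intelligent behavior" comes from.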

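__end__ (exit point)

- When the agent decides "I can answer the user now", the flow arrives here
- The conversation turn ends and the result is returned to the UI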

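III. What do the dashed and solid lines in the graph mean?

- Solid arrow: a path that is always taken
- Dashed arrow: a conditional path (the agent decides)

For example:

- No tool needed → agent → __end__
- Tool needed → agent → tools → agent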
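The conditional (dashed) edge can be pictured as a small routing function that looks at the agent's latest message. This is a simplified stand-in written with plain dictionaries, not the prebuilt agent's internal code; `route_after_agent` and the message layout are assumptions for illustration.

```python
from typing import Any, Dict, List

END = "__end__"


def route_after_agent(messages: List[Dict[str, Any]]) -> str:
    """Pick the next node: "tools" if the last AI message requests a tool, else END."""
    last = messages[-1]
    if last.get("role") == "ai" and last.get("tool_calls"):
        return "tools"
    return END


wants_tool = [
    {"role": "human", "content": "今天广州的天气是什么"},
    {"role": "ai", "content": "",
     "tool_calls": [{"name": "get_weather", "args": {"city": "广州"}}]},
]
direct_answer = [
    {"role": "human", "content": "你好"},
    {"role": "ai", "content": "你好!"},
]

print(route_after_agent(wants_tool))     # next node when a tool is requested
print(route_after_agent(direct_answer))  # next node when the model answers directly
```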

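IV. How to read the buttons at the top

Graph / Chat

- Graph: view the flow structure (what you are looking at now)
- Chat: chat directly, as you would with ChatGPT

Memory

- Whether cross-turn conversation memory is enabled
- The graph shown here has no long-term memory (in-memory only)

Interrupts

- Manual interruption points
- Commonly used for:
  - human approval
  - semi-automatic agents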

V. What is New Thread on the right?

- One thread = one complete conversation
- Each thread has:
  - its own state
  - its own execution path
- Switching threads is like switching chat windows
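The idea that every thread carries its own state can be sketched as a dictionary keyed by thread id. This is only an in-memory illustration of the concept, not how the LangGraph server stores threads; `ThreadStore` and the thread ids are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class ThreadStore:
    """Keep one independent message list per thread id (in memory only)."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[str]] = defaultdict(list)

    def append(self, thread_id: str, message: str) -> None:
        self._threads[thread_id].append(message)

    def history(self, thread_id: str) -> List[str]:
        # Return a copy so callers cannot mutate stored state by accident.
        return list(self._threads[thread_id])


store = ThreadStore()
store.append("thread-a", "今天广州的天气是什么")
store.append("thread-b", "你好")
print(store.history("thread-a"))
```

Because the data lives only in process memory, restarting the process loses every thread, which matches the "data is not persisted" caveat of the development server.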

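VI. The one-sentence mental model

This graph = an LLM-driven state machine

- Node = a method
- Edge = if / while
- Tool = an external service
- Thread = the session context

VII. Testing and verifying the agent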

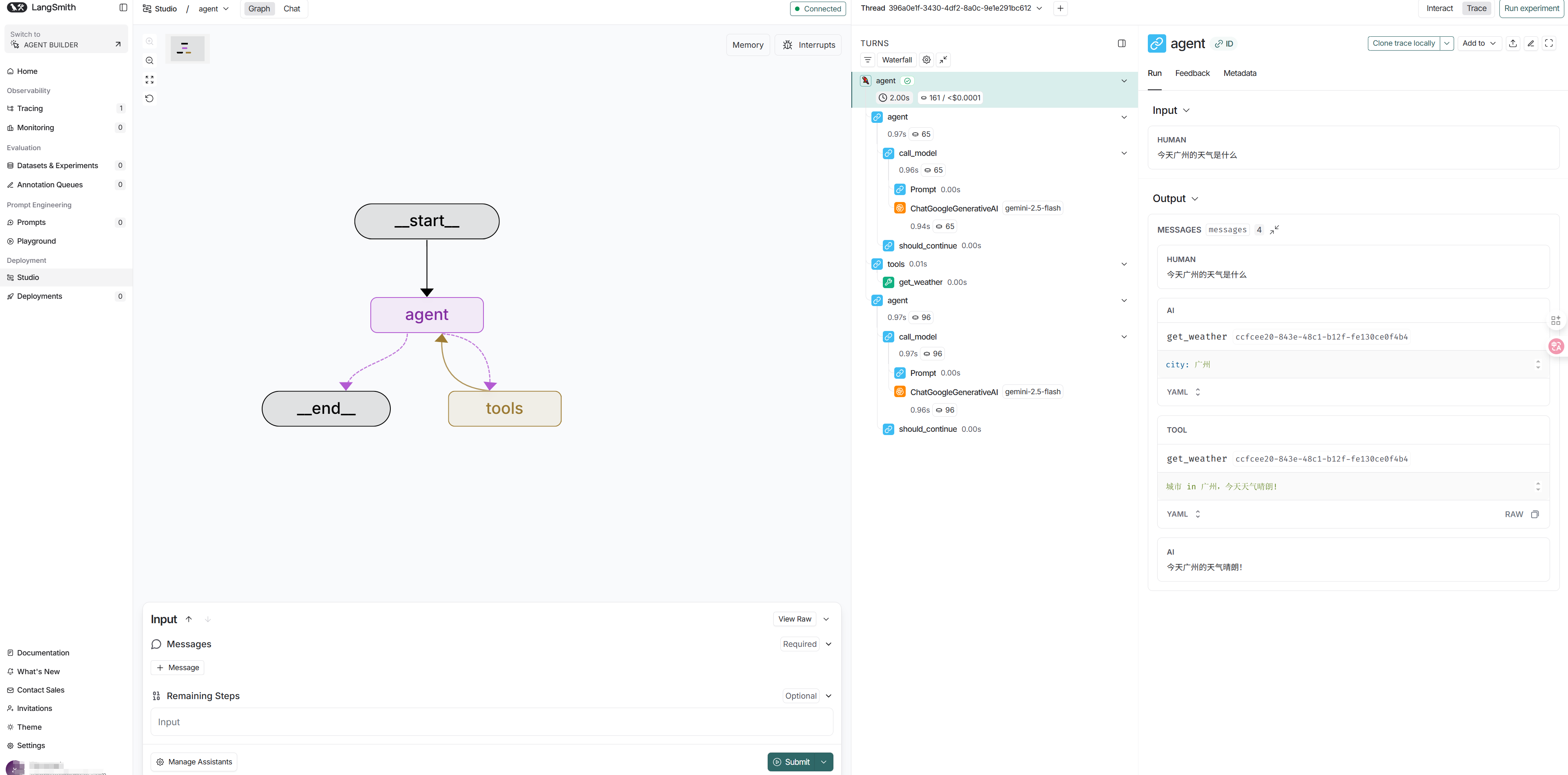

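12. Checking in LangSmith that the agent ran end to end

What to confirm:

- In the Graph view, check that the run went through: agent → tools → agent → end
- In Tracing, check that Gemini really called get_weather

This trace shows that LangGraph + Gemini + tool calling works end to end.

The execution chain is explained below from top to bottom and left to right.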

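I. Conclusion in one sentence

- Gemini understood the question
- It decided to call the get_weather tool
- The tool was actually executed
- The result was passed back to the agent
- The agent generated the final natural-language answer

This is a standard, correct, healthy ReAct agent trace.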

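II. Waterfall on the left (execution timeline)

This panel shows execution order and duration; top to bottom is chronological order.

1️⃣ agent (first pass)

agent 0.97s
└─ call_model (gemini-2.5-flash)
└─ should_continue

Meaning:

- The agent reads the user input
- Gemini reasons: "I need to call a tool"
- should_continue = True

👉 This step does not answer the user directly.

2️⃣ tools → get_weather

tools
└─ get_weather

On the right you can see:

AI → get_weather
city: 广州

This shows that:

- Gemini identified the parameter correctly
- It generated the tool call automatically
- The argument structure is fully correct (city = 广州, i.e. Guangzhou)

👉 This is the key evidence that tool calling succeeded.

3️⃣ TOOL returns its result

城市 in 广州,今天天气晴朗!

This is the return value of the Python function as it was actually executed:

def get_weather(city: str) -> str:
    return f"城市 in {city},今天天气晴朗!"

4️⃣ agent (second pass)

agent 0.97s
└─ call_model
└─ should_continue = False

Meaning:

- The agent receives the tool's return value
- Gemini decides it can finish
- It generates the final user-readable answer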

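III. Output on the right (the message stream, the most important part)

Message order (entirely standard)

1️⃣ HUMAN

今天广州的天气是什么 ("What is the weather in Guangzhou today?")

2️⃣ AI (tool-call intent)

get_weather(city=广州)

3️⃣ TOOL (actual execution result)

城市 in 广州,今天天气晴朗!

4️⃣ AI (final answer)

今天广州的天气晴朗! ("The weather in Guangzhou is sunny today!")

👉 This is exactly ReAct = Think → Act → Observe → Answer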
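The four-message sequence can be reproduced offline with a scripted stand-in for the model. The sketch below is not what `create_react_agent` does internally; `scripted_model` and `run_react` are hypothetical, and the "model" simply requests the tool once and then answers from its result.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"城市 in {city},今天天气晴朗!"


def scripted_model(messages: List[Message]) -> Message:
    """Stand-in for the LLM: request the tool once, then answer from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "ai", "content": f"Tool said: {last['content']}"}
    return {"role": "ai", "content": "",
            "tool_calls": [{"name": "get_weather", "args": {"city": "广州"}}]}


def run_react(question: str, tools: Dict[str, Callable[..., str]],
              max_steps: int = 5) -> List[Message]:
    """Loop think → act → observe until the model stops requesting tools."""
    messages: List[Message] = [{"role": "human", "content": question}]
    for _ in range(max_steps):
        reply = scripted_model(messages)           # think
        messages.append(reply)
        calls = reply.get("tool_calls")
        if not calls:                              # answer: no tool requested
            break
        for call in calls:                         # act, then observe
            result = tools[call["name"]](**call["args"])
            messages.append({"role": "tool", "content": result})
    return messages


trace = run_react("今天广州的天气是什么", {"get_weather": get_weather})
print([m["role"] for m in trace])
```

Comparing the roles printed here with the HUMAN / AI / TOOL / AI order in the Output panel is a quick way to judge whether a real trace followed the expected path.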

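IV. Why this trace is very "clean"

Key indicators:

- ✅ No retries
- ✅ No hallucination
- ✅ Parameters extracted correctly
- ✅ Finished after a single tool call
- ✅ Concise final answer

This suggests that:

- the prompt is reasonable
- the tool is clearly defined
- Gemini 2.5 Flash behaved stably in this run

At this point the following are done:

- ✅ The latest LangGraph
- ✅ Gemini 2.5 Flash
- ✅ ReAct agent
- ✅ Tool calling
- ✅ Reading a LangSmith trace
- ✅ The full Graph / State / Tool loop

A trace is the agent's "call stack + log".

You can now read it, assess it, and verify whether the agent is working correctly.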
