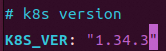

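Kubernetes Deployment Methods (Part 3): Deploying a v1.34.3 Cluster with kubeasz (the tool does not support 1.35 yet, and this post will be updated once it does; notable change in 1.35: only containerd 2.0 and later are supported)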

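This article walks through deploying a highly available Kubernetes cluster with kubeasz. kubeasz is an automated deployment tool built on ansible-playbook. It installs K8s from binaries, offers flexible configuration options, and supports several network plugins. The article covers the preparation work (system requirements, node planning, passwordless login, and so on) and the complete deployment flow, from downloading the helper script to the final cluster installation. It also compares common docker and containerd commands.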

I. Introduction to Deploying Clusters with kubeasz

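kubeasz aims to be a tool for quickly deploying highly available k8s clusters, and also a reference for practicing and using k8s. It deploys from binaries and automates the process with ansible-playbook. It provides a one-click install script, and you can also follow the installation guide to install each component step by step.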

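kubeasz assembles a complete cluster from individual components and offers maximum configuration flexibility: almost any parameter of any component can be set. It also ships a well-tested default configuration for new clusters, and can even set up a BGP Route Reflector network mode suited to large clusters automatically.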

- Cluster features: master HA, offline installation, multi-architecture support (amd64/arm64)
- Cluster versions: kubernetes v1.24, v1.25, v1.26, v1.27, v1.28, v1.29, v1.30, v1.31, v1.32, v1.33, v1.34
- Runtime: containerd v1.7.x, v2.0.x, v2.1.x
- Network: calico, cilium, flannel, kube-ovn, kube-router

[news] kubeasz has passed the CNCF conformance tests.

Recommended version mapping:

| Kubernetes | 1.23 | 1.24-1.28 | 1.29 | 1.30 | 1.31 | 1.32 | 1.33 | 1.34 |
|---|---|---|---|---|---|---|---|---|
| kubeasz | 3.2.0 | 3.6.2 | 3.6.3 | 3.6.4 | 3.6.5 | 3.6.6 | 3.6.7 | 3.6.8 |

Supported operating systems (as of December 26, 2025):

- Alibaba Linux 2.1903, 3.2104(notes)

- Alma Linux 8, 9

- Anolis OS 8.x RHCK, 8.x ANCK

- CentOS/RHEL 7, 8, 9

- Debian 10, 11(notes)

- Fedora 34, 35, 36, 37

- Kylin Linux Advanced Server V10 (Tercel, Lance)

- openEuler 22.03 LTS, 24.03 LTS(notes)

- openSUSE Leap 15.x(notes)

- Rocky Linux 8, 9

- Ubuntu 16.04, 18.04, 20.04, 22.04, 24.04

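It works on most Linux distributions that use systemd. If the installation runs into problems, check the documentation first. If a system that installs fine is missing from the list, PRs letting us know are welcome.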

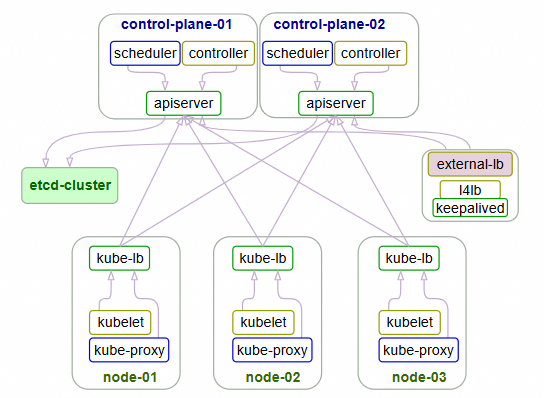

High-availability (HA) architecture

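- Note 1: make sure all nodes use the same time zone and that their clocks are synchronized. If your environment does not provide NTP time sync, installing chrony through kubeasz's built-in integration is recommended.
- Note 2: start from a clean system. Do not use an environment where kubeadm or another k8s distribution was previously installed.
- Note 3: upgrading the OS to a recent stable kernel is recommended; see the kernel upgrade documentation.
- Note 4: to create a multi-master cluster on a public cloud, also read "Deploying kubeasz on public clouds".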

Node requirements for an HA cluster:

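| Role | Count | Description |
|---|---|---|
| Deploy node | 1 | Runs the ansible/ezctl commands; usually the first master node is reused |
| etcd node | 3 | The etcd cluster needs an odd number of nodes (1, 3, 5, ...); usually co-located on the master nodes |
| master node | 2 | An HA cluster needs at least 2 master nodes |
| worker node | n | Runs application workloads; scale up the hardware or the node count as needed |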

Hardware sizing:

- master node: 4 CPU cores / 8 GB RAM / 50 GB disk
- worker node: 8 CPU cores / 32 GB RAM / 200 GB disk or more recommended

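Note: by default the container runtime and kubelet store their data under /var. If your disk partitioning is unusual, set the runtime and kubelet data directories in config.yml: CONTAINERD_STORAGE_DIR, DOCKER_STORAGE_DIR, KUBELET_ROOT_DIR.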
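As a minimal sketch of that change, the helper below rewrites one of those keys in place instead of opening an editor. It assumes config.yml stores each setting as a top-level `KEY: "value"` line (check with `grep` first) and that the file already exists, which is only the case after the cluster instance has been created with `ezctl new` in step 4.2. The function name `set_cfg` and the `/data/...` paths are hypothetical.

```shell
#!/usr/bin/env bash
# set_cfg FILE KEY VALUE
# Rewrites a top-level line of the form `KEY: ...` to `KEY: "VALUE"`.
# Assumption: the key appears at column 0, as in kubeasz's config.yml.
# VALUE must not contain '|' or '&' (they are special in the sed expression).
set_cfg() {
  local file=$1 key=$2 value=$3
  if ! grep -q "^${key}:" "$file"; then
    echo "key ${key} not found in ${file}" >&2
    return 1
  fi
  # '|' is the sed delimiter because VALUE is usually a path containing '/'
  sed -i "s|^${key}:.*|${key}: \"${value}\"|" "$file"
}

# Example (hypothetical target paths on a larger /data partition):
# CFG=/etc/kubeasz/clusters/k8s-01/config.yml
# set_cfg "$CFG" CONTAINERD_STORAGE_DIR /data/containerd
# set_cfg "$CFG" KUBELET_ROOT_DIR /data/kubelet
```

Running `grep -E 'STORAGE_DIR|ROOT_DIR' "$CFG"` afterwards shows whether the change landed.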

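In kubeasz 2.x, a multi-node HA cluster can be installed in two ways:

- 1. Follow the steps in this document: plan and prepare first, configure the node information in advance, then install the multi-node HA cluster directly.
- 2. Deploy a single-node cluster first (AllinOne deployment), then expand it into an HA cluster by adding nodes.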

Deployment steps

The example below creates a 4-node multi-master HA cluster. All commands in this document are assumed to run as root.

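1. Base system configuration

- 2 CPU cores / 4 GB RAM / 40 GB disk (for testing only)
- Minimal installation: Ubuntu 16.04 server or CentOS 7 Minimal
- Configure basic networking, package sources, SSH login, etc.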

2. Install dependencies on each node

Running ansible in Docker (the containerized approach) is recommended; it needs no extra dependencies.

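3. Set up passwordless SSH login

From the deploy node, configure passwordless SSH login to all nodes, and set up a python symlink on each node:

# $IP is each node's address, including the deploy node itself; answer yes and enter the root password when prompted
ssh-copy-id $IP
# Set up the python symlink on each node
ssh $IP ln -s /usr/bin/python3 /usr/bin/python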
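Before handing the hosts to ansible, it can help to confirm that every login really is passwordless. The sketch below is one way to do that and is not part of kubeasz. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a node whose key was not copied shows up as FAIL. The function name `check_ssh` and the addresses in the example are hypothetical, and setting `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# check_ssh HOST...: try a non-interactive root login to each host and report the result.
# Returns non-zero if any host fails.
check_ssh() {
  local ip rc=0
  for ip in "$@"; do
    # BatchMode=yes: never prompt, so a missing key fails immediately
    local cmd=(ssh -o BatchMode=yes -o ConnectTimeout=5 "root@${ip}" true)
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "${cmd[*]}"
      continue
    fi
    if "${cmd[@]}"; then
      echo "OK   ${ip}"
    else
      echo "FAIL ${ip}"
      rc=1
    fi
  done
  return "$rc"
}

# Example with hypothetical node addresses:
# check_ssh 192.168.1.1 192.168.1.2 192.168.1.3
```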

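4. Orchestrate the k8s installation from the deploy node

- 4.1 Download the project source, binaries, and offline images

Download the helper script ezdown (kubeasz 3.5.0 is used as the example version):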

export release=3.5.0

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

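Download the kubeasz code, binaries, and default container images (run ./ezdown without arguments to see more options):

# In mainland China
./ezdown -D
# Outside mainland China
#./ezdown -D -m standard

[Optional] Download extra container images (cilium, flannel, prometheus, etc.):

# Download as needed
./ezdown -X flannel
./ezdown -X prometheus
...

[Optional] Download offline OS packages (for environments that cannot use yum/apt repositories):

./ezdown -P

Once the script completes successfully, all files (kubeasz code, binaries, offline images) are placed in /etc/kubeasz.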

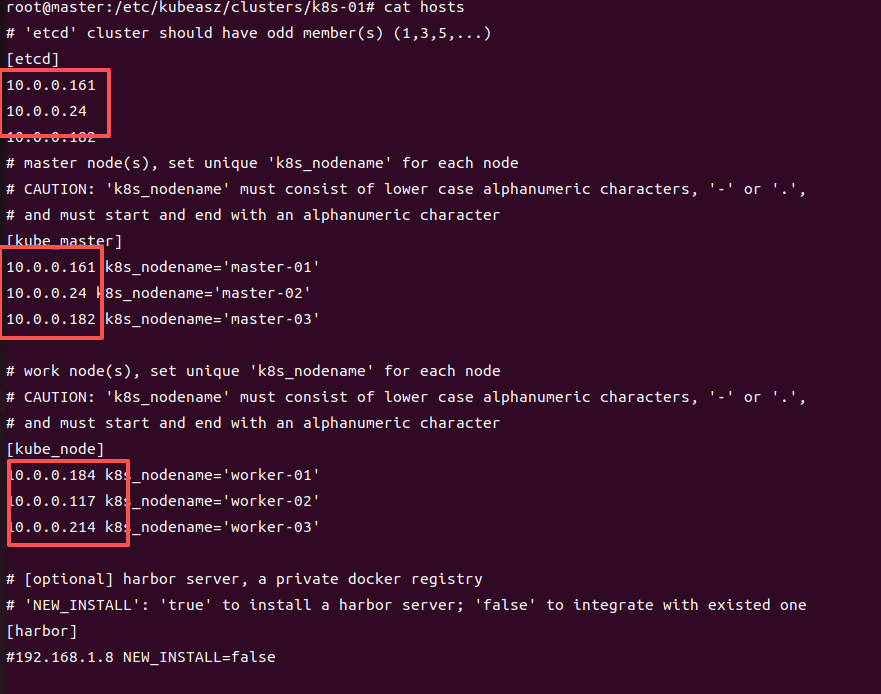

- 4.2 Create a cluster configuration instance

# Run kubeasz in a container
./ezdown -S
# Create a new cluster named k8s-01
docker exec -it kubeasz ezctl new k8s-01
2021-01-19 10:48:23 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01
2021-01-19 10:48:23 DEBUG set version of common plugins
2021-01-19 10:48:23 DEBUG cluster k8s-01: files successfully created.
2021-01-19 10:48:23 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'
2021-01-19 10:48:23 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

Then, as prompted, edit '/etc/kubeasz/clusters/k8s-01/hosts' and '/etc/kubeasz/clusters/k8s-01/config.yml'. Set the nodes and the main cluster-level options in the hosts file according to your node plan; settings for the other cluster components can be changed in config.yml.

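- 4.3 Start the installation. If you are not familiar with the installation flow, read the installation steps on the project homepage first, then install step by step and verify each step.

# Using an alias is recommended; ~/.bashrc should contain: alias dk='docker exec -it kubeasz'
source ~/.bashrc
# One-click install, equivalent to: docker exec -it kubeasz ezctl setup k8s-01 all
dk ezctl setup k8s-01 all
# Or install step by step; run `dk ezctl help setup` for help on the individual steps
# dk ezctl setup k8s-01 01
# dk ezctl setup k8s-01 02
# dk ezctl setup k8s-01 03
# dk ezctl setup k8s-01 04
...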
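For the step-by-step route, a small wrapper can run the numbered steps in order and stop at the first failure, so each stage can be verified before moving on. This is a sketch under several assumptions: the container is named `kubeasz` (as created by `./ezdown -S`), the cluster is `k8s-01`, and the standard steps are 01 to 07 as listed by `dk ezctl help setup` in kubeasz 3.x, so confirm the list on your version. The function name `run_steps` is hypothetical, and `DRY_RUN=1` prints the commands without running them.

```shell
#!/usr/bin/env bash
# run_steps CLUSTER STEP...: run kubeasz setup steps one at a time,
# stopping at the first failure so it can be fixed and the step rerun.
run_steps() {
  local cluster=$1 step
  shift
  for step in "$@"; do
    # -i (no -t) so the wrapper also works when stdin is not a terminal
    local cmd=(docker exec -i kubeasz ezctl setup "$cluster" "$step")
    echo ">>> ${cmd[*]}"
    [ "${DRY_RUN:-0}" = 1 ] && continue
    if ! "${cmd[@]}"; then
      echo "step ${step} failed; fix it and rerun from this step" >&2
      return 1
    fi
  done
}

# Example: the seven standard steps (verify with `dk ezctl help setup`):
# run_steps k8s-01 01 02 03 04 05 06 07
```

Running the steps separately costs a little more typing than `all`, but a failure points directly at the stage (etcd, runtime, master, and so on) that needs attention.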

For deployment with the docker runtime, see my 2022 article:

https://blog.csdn.net/m0_66705547/article/details/128603759?spm=1011.2415.3001.5331

II. Hands-on: Deploying a Highly Available Distributed Cluster

1. Deployment architecture: all hosts run Ubuntu 24.04 or Ubuntu 22.04, with the following roles:

| IP | Hostname | Role |
|---|---|---|
| 10.0.0.161 | master1 | K8s master node 1 and etcd node 1; 2 GB+ RAM recommended |
| 10.0.0.24 | master2 | K8s master node 2 and etcd node 2; 2 GB+ RAM recommended |
| 10.0.0.182 | master3 | K8s master node 3 and etcd node 3; 2 GB+ RAM recommended |
| 10.0.0.184 | node1 | K8s worker node 1 |
| 10.0.0.117 | node2 | K8s worker node 2 |
| 10.0.0.214 | node3 | K8s worker node 3 |

2. Preparation

1. Configure passwordless login to each host. The deployment control machine is 10.0.0.212.

On the control machine, run:

ssh-keygen

# Passwordless-login script
cat > copy_ssh_keys.sh << 'EOF'
#!/bin/bash
# Host list: IP -> "user port"
declare -A hosts=(
["10.0.0.161"]="root 22"
["10.0.0.24"]="root 22"
["10.0.0.182"]="root 22"
["10.0.0.184"]="root 22"
["10.0.0.117"]="root 22"
["10.0.0.214"]="root 22"
)
for ip in "${!hosts[@]}"; do
  # Split the value into user and port
  read -r user port <<< "${hosts[$ip]}"
  echo "=== Copying public key to ${user}@${ip}:${port} ==="
  if ssh-copy-id -p "$port" "${user}@${ip}"; then
    echo "  OK: public key copied to ${user}@${ip}:${port}"
  else
    echo "  FAILED: could not copy public key to ${user}@${ip}:${port}"
  fi
  echo "-----------------------------"
done
echo "Public key copy finished for all hosts."
EOF
chmod +x copy_ssh_keys.sh
echo "Script generated: copy_ssh_keys.sh"
echo "Usage: ./copy_ssh_keys.sh"
./copy_ssh_keys.sh

2. Download the ezdown helper script

export release=3.6.8

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

Edit the script to set the versions you need:

vim ./ezdown

Change the Kubernetes version to the one you want; the default is 1.34.1.
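Instead of editing by hand, the version can also be changed with `sed`. A minimal sketch, assuming ezdown keeps the default on a line beginning with `K8S_BIN_VER=` (confirm with `grep -n K8S_BIN_VER ./ezdown` before relying on it). The chosen version must exist as an `easzlab/kubeasz-k8s-bin` image tag, since that is where ezdown pulls the Kubernetes binaries from in the log below. The function name `set_k8s_ver` is hypothetical.

```shell
#!/usr/bin/env bash
# set_k8s_ver EZDOWN VERSION: change the default Kubernetes binary version in ezdown.
# Assumption: ezdown defines it on a line starting with K8S_BIN_VER=.
set_k8s_ver() {
  local script=$1 ver=$2
  # Accept "1.34.3" or "v1.34.3"; the kubeasz-k8s-bin image tags carry a leading "v"
  [[ $ver == v* ]] || ver="v${ver}"
  grep -q '^K8S_BIN_VER=' "$script" || { echo "K8S_BIN_VER not found in ${script}" >&2; return 1; }
  # -i.bak keeps the original script as ${script}.bak
  sed -i.bak "s/^K8S_BIN_VER=.*/K8S_BIN_VER=${ver}/" "$script"
  grep '^K8S_BIN_VER=' "$script"
}

# Example:
# set_k8s_ver ./ezdown 1.34.3
```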

Run:

./ezdown -D

If it fails with:

./ezdown -D

2025-12-17 17:00:54 [ezdown:786] INFO Action begin: download_all

2025-12-17 17:00:54 [ezdown:173] INFO downloading docker binaries, arch:x86_64, version:28.0.4

--2025-12-17 17:00:54-- https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/static/stable/x86_64/docker-28.0.4.tgz

Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.15.130, 2402:f000:1:400::2

Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.15.130|:443... connected.

HTTP request sent, awaiting response... 403 Forbidden

2025-12-17 17:00:54 ERROR 403: Forbidden.

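2025-12-17 17:00:54 [ezdown:175] ERROR downloading docker failed

By default the script downloads from the Tsinghua University (TUNA) mirror in China. Switch to another domestic mirror, for example Alibaba Cloud: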

sed -i 's/mirrors\.tuna\.tsinghua\.edu\.cn/mirrors.aliyun.com/g' ./ezdown

If it fails again with:

./ezdown -D

2025-12-17 17:09:55 [ezdown:786] INFO Action begin: download_all

2025-12-17 17:09:55 [ezdown:173] INFO downloading docker binaries, arch:x86_64, version:28.0.4

--2025-12-17 17:09:55-- https://mirrors.aliyun.com/docker-ce/linux/static/stable/x86_64/docker-28.0.4.tgz

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 47.123.18.240

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|47.123.18.240|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 78805317 (75M) [application/x-compressed-tar]

Saving to: ‘docker-28.0.4.tgz’

docker-28.0.4.tgz 100%[=================================================>] 75.15M 4.09MB/s in 19s

2025-12-17 17:10:17 (4.02 MB/s) - ‘docker-28.0.4.tgz’ saved [78805317/78805317]

2025-12-17 17:10:18 [ezdown:192] WARN docker is already running.

2025-12-17 17:10:18 [ezdown:279] INFO downloading kubeasz: 3.6.8

Error response from daemon: Get "https://registry-1.docker.io/v2/": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

2025-12-17 17:10:33 [ezdown:282] ERROR download failed!

2025-12-17 17:10:33 [ezdown:787] ERROR Action failed: download_all

Docker cannot reach Docker Hub (registry-1.docker.io), so pulling the kubeasz image times out.

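Although the Docker binary package (docker-28.0.4.tgz) was downloaded successfully from the Alibaba Cloud mirror, the Docker engine itself still pulls images from the official Docker Hub by default, and Docker Hub is very unreliable from mainland China: requests often time out or are blocked.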
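Registry mirrors are set through the `registry-mirrors` key in /etc/docker/daemon.json. If that file already exists (ezdown generates one when it installs Docker itself, as a later log in this post shows), writing a second JSON object into it makes the file invalid and dockerd will fail to start. A safer sketch merges the key into whatever is already there and keeps a backup. It assumes python3 is available on the deploy node; the function name `merge_mirrors` is hypothetical.

```shell
#!/usr/bin/env bash
# merge_mirrors FILE MIRROR...: add registry mirrors to a Docker daemon.json,
# keeping existing keys and mirrors and skipping duplicates.
# The file is created if it does not exist; an existing file is backed up to FILE.bak.
merge_mirrors() {
  local file=$1
  shift
  [ -f "$file" ] && cp -a "$file" "${file}.bak"
  python3 - "$file" "$@" <<'PY'
import json, os, sys

path, new = sys.argv[1], sys.argv[2:]
cfg = {}
if os.path.exists(path) and os.path.getsize(path) > 0:
    with open(path) as f:
        cfg = json.load(f)  # fails loudly if the file is already broken
mirrors = cfg.get("registry-mirrors", [])
for m in new:
    if m not in mirrors:
        mirrors.append(m)
cfg["registry-mirrors"] = mirrors
with open(path, "w") as f:
    json.dump(cfg, f, indent=2)
    f.write("\n")
PY
}

# Example, then restart Docker so the mirrors take effect:
# merge_mirrors /etc/docker/daemon.json https://docker.m.daocloud.io https://docker.nju.edu.cn
# systemctl restart docker
```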

Configure Docker registry mirrors manually:

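# This overwrites /etc/docker/daemon.json; back up an existing file first (appending a second JSON object would make it invalid)
tee /etc/docker/daemon.json << 'EOF'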

{ "registry-mirrors":

[ "https://docker.1panel.live",

"https://do.nark.eu.org",

"https://dc.j8.work",

"https://pilvpemn.mirror.aliyuncs.com",

"https://docker.m.daocloud.io",

"https://dockerproxy.com",

"https://docker.mirrors.ustc.edu.cn",

"https://docker.nju.edu.cn",

"https://mirror.ccs.tencentyun.com" ]

}

EOF
systemctl daemon-reload
systemctl restart docker

Run it again, and this time it succeeds:

./ezdown -D

2025-12-17 17:52:53 [ezdown:786] INFO Action begin: download_all

2025-12-17 17:52:53 [ezdown:171] WARN docker binaries already existed

2025-12-17 17:52:54 [ezdown:192] WARN docker is already running.

2025-12-17 17:52:54 [ezdown:279] INFO downloading kubeasz: 3.6.8

3.6.8: Pulling from easzlab/kubeasz

f56be85fc22e: Pull complete

ea5757f4b3f8: Pull complete

bd0557c686d8: Pull complete

37d4153ce1d0: Pull complete

b39eb9b4269d: Pull complete

a3cff94972c7: Pull complete

6b32640e894a: Pull complete

Digest: sha256:dbd989bea272280c4d3b22d1d0b469ce310f7fbbd65566d1cdcfaef6e2d7638b

Status: Downloaded newer image for easzlab/kubeasz:3.6.8

docker.io/easzlab/kubeasz:3.6.8

2025-12-17 17:54:31 [ezdown:288] DEBUG run a temporary container

3bd002a989fe9869ebba1b5bc681cc312526687c42f1e0aff8d478fe967f063e

2025-12-17 17:54:31 [ezdown:295] DEBUG cp kubeasz code from the temporary container

Successfully copied 15.4MB to /etc/kubeasz

2025-12-17 17:54:31 [ezdown:297] DEBUG stop&remove temporary container

temp_easz

2025-12-17 17:54:32 [ezdown:309] INFO downloading kubernetes: v1.34.2 binaries

v1.34.2: Pulling from easzlab/kubeasz-k8s-bin

1b7ca6aea1dd: Pull complete

86afb3d277cf: Pull complete

b66ae37c5414: Pull complete

Digest: sha256:1235371ef995800b45158b59b569c4a9c25eafc2e8f81b7596b6c0fefaafc605

Status: Downloaded newer image for easzlab/kubeasz-k8s-bin:v1.34.2

docker.io/easzlab/kubeasz-k8s-bin:v1.34.2

2025-12-17 17:57:04 [ezdown:313] DEBUG run a temporary container

e67dd6af21ce6ab9cd2896bd7a47700a678f1f7d711e6576ecd26fd2d2f5b521

2025-12-17 17:57:04 [ezdown:315] DEBUG cp k8s binaries

Successfully copied 368MB to /etc/kubeasz/k8s_bin_tmp

2025-12-17 17:57:05 [ezdown:318] DEBUG stop&remove temporary container

temp_k8s_bin

2025-12-17 17:57:05 [ezdown:326] INFO downloading extral binaries kubeasz-ext-bin:1.13.0

1.13.0: Pulling from easzlab/kubeasz-ext-bin

a88dc8b54e91: Pull complete

6184b75f4087: Pull complete

a6213fefeeee: Pull complete

78713b053946: Pull complete

bf6544ef2403: Pull complete

e7b92baaefa0: Pull complete

a4c3d25e2eec: Pull complete

Digest: sha256:767f44b870ba7be6448433a88499234d0f47a04f9a27f1fa6cda35c2b446c9e7

Status: Downloaded newer image for easzlab/kubeasz-ext-bin:1.13.0

docker.io/easzlab/kubeasz-ext-bin:1.13.0

2025-12-17 17:59:21 [ezdown:330] DEBUG run a temporary container

d5ec54b2c4de8e02d47a90c884e6e70c37910d2b0a9fd53f5241809576d70bcb

2025-12-17 17:59:21 [ezdown:332] DEBUG cp extral binaries

Successfully copied 756MB to /etc/kubeasz/extra_bin_tmp

2025-12-17 17:59:23 [ezdown:335] DEBUG stop&remove temporary container

temp_ext_bin

2: Pulling from library/registry

44cf07d57ee4: Pull complete

bbbdd6c6894b: Pull complete

8e82f80af0de: Pull complete

3493bf46cdec: Pull complete

6d464ea18732: Pull complete

Digest: sha256:a3d8aaa63ed8681a604f1dea0aa03f100d5895b6a58ace528858a7b332415373

Status: Downloaded newer image for registry:2

docker.io/library/registry:2

2025-12-17 17:59:42 [ezdown:656] INFO start local registry ...

f873c0e90d2caf14d65f3af0c6e4bb7bac3eab966f88d016ab42f6bd8fdc81ae

2025-12-17 17:59:43 [ezdown:369] INFO download default images, then upload to the local registry

v3.28.4: Pulling from calico/cni

2772ed331197: Pull complete

385e82df3dbc: Pull complete

Digest: sha256:77f4e494343f41763bb7438e1ab61d07094abe07584b56c01ab5c3fb0b9bb4de

Status: Downloaded newer image for calico/node:v3.28.4

docker.io/calico/node:v3.28.4

The push refers to repository [easzlab.io.local:5000/easzlab/node]

c679b3382fdd: Pushed

v3.28.4: digest: sha256:cec640f3131eb91fece8b7dc14f5241b5192fe7faa107f91e2497c09332b96c8 size: 530

1.12.4: Pulling from coredns/coredns

2e4cf50eeb92: Pull complete

56ce5a7a0a8c: Pull complete

e1089d61b200: Pull complete

0f8b424aa0b9: Pull complete

d557676654e5: Pull complete

d82bc7a76a83: Pull complete

b77b57d31f7f: Pull complete

d6accb83dc23: Pull complete

Digest: sha256:7d60df155cde82d04c93009ac97fa3e8c02e05d3fc6283a0832765b181537393

Status: Downloaded newer image for easzlab/k8s-dns-node-cache:1.26.4

docker.io/easzlab/k8s-dns-node-cache:1.26.4

The push refers to repository [easzlab.io.local:5000/easzlab/k8s-dns-node-cache]

bcbd50e29d07: Pushed

d23ed4180a23: Pushed

1.26.4: digest: sha256:422a07a940516af2363400de76d910c829d6319e9070cf7f48de10bcd51b784c size: 741

docker.io/coredns/coredns:1.12.4

The push refers to repository [easzlab.io.local:5000/easzlab/coredns]

44925e5e2cc9: Pushed

54559abf8a8c: Pushed

bfe9137a1b04: Pushed

f4aee9e53c42: Pushed

1a73b54f556b: Pushed

2a92d6ac9e4f: Pushed

bbb6cacb8c82: Pushed

6f1cdceb6a31: Pushed

af5aa97ebe6c: Pushed

4d049f83d9cf: Pushed

114dde0fefeb: Pushed

4840c7c54023: Pushed

8fa10c0194df: Pushed

bff7f7a9d443: Pushed

1.12.4: digest: sha256:5fe4ce2f40fba78ebd7941f205d5ba21058e6aebff00878325f3f2645d9d465c size: 3233

1.26.4: Pulling from easzlab/k8s-dns-node-cache

b77b57d31f7f: Downloading [===========> ] 2.699MB/11.53MB

d6accb83dc23: Download complete

35d697fe2738: Pull complete

bfb59b82a9b6: Pull complete

4eff9a62d888: Pull complete

a62778643d56: Pull complete

7c12895b777b: Pull complete

3214acf345c0: Pull complete

5664b15f108b: Pull complete

0bab15eea81d: Pull complete

4aa0ea1413d3: Pull complete

da7816fa955e: Pull complete

ddf74a63f7d8: Pull complete

6b6c881bc207: Pull complete

Digest: sha256:803cdfa3bcafcf988d5419669da336321977ad7a2371cb5a93316947486f3c58

Status: Downloaded newer image for easzlab/metrics-server:v0.8.0

docker.io/easzlab/metrics-server:v0.8.0

The push refers to repository [easzlab.io.local:5000/easzlab/metrics-server]

90c3da41cc55: Pushed

bfe9137a1b04: Mounted from easzlab/coredns

f4aee9e53c42: Mounted from easzlab/coredns

1a73b54f556b: Mounted from easzlab/coredns

2a92d6ac9e4f: Mounted from easzlab/coredns

bbb6cacb8c82: Mounted from easzlab/coredns

6f1cdceb6a31: Mounted from easzlab/coredns

af5aa97ebe6c: Mounted from easzlab/coredns

4d049f83d9cf: Mounted from easzlab/coredns

48c0fb67386e: Pushed

8fa10c0194df: Mounted from easzlab/coredns

f464af4b9b25: Pushed

v0.8.0: digest: sha256:d1a527deee93f23ffac97a2be308bf0bd5df3e686427ee447b9699e73049df72 size: 2814

3.10: Pulling from easzlab/pause

61d9e957431b: Pull complete

Digest: sha256:c7e33e8cea1c259324e8b20c62819b6a3703087088a8172d408d50e7c73099f4

Status: Downloaded newer image for easzlab/pause:3.10

docker.io/easzlab/pause:3.10

The push refers to repository [easzlab.io.local:5000/easzlab/pause]

d8bdedd33a4e: Pushed

3.10: digest: sha256:7faf0ab837630eb90a8e919f1ef2ba350609983bb001c4d76a27972c664a0dd9 size: 527

2025-12-17 18:15:41 [ezdown:788] INFO Action successed: download_all

Alternatively, ./ezdown -D may fail like this:

./ezdown -D

2025-12-21 11:16:53 [ezdown:786] INFO Action begin: download_all

2025-12-21 11:16:53 [ezdown:173] INFO downloading docker binaries, arch:x86_64, version:28.0.4

--2025-12-21 11:16:53-- https://mirrors.aliyun.com/docker-ce/linux/static/stable/x86_64/docker-28.0.4.tgz

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 36.147.34.23, 36.147.34.27, 36.147.34.26, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|36.147.34.23|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 78805317 (75M) [application/x-compressed-tar]

Saving to: ‘docker-28.0.4.tgz’

docker-28.0.4.tgz 100%[=======================================>] 75.15M 23.3MB/s in 3.3s

2025-12-21 11:16:57 (22.7 MB/s) - ‘docker-28.0.4.tgz’ saved [78805317/78805317]

Unit docker.service could not be found.

2025-12-21 11:16:59 [ezdown:194] DEBUG generate docker service file

2025-12-21 11:16:59 [ezdown:220] DEBUG generate docker config: /etc/docker/daemon.json

2025-12-21 11:16:59 [ezdown:222] DEBUG prepare register mirror for CN

2025-12-21 11:16:59 [ezdown:269] DEBUG enable and start docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /etc/systemd/system/docker.service.

Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xeu docker.service" for details.

2025-12-21 11:16:59 [ezdown:787] ERROR Action failed: download_all

root@ubuntu11:~# systemctl status docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/etc/systemd/system/docker.service; enabled; preset: enabled)

Active: activating (auto-restart) (Result: exit-code) since Sun 2025-12-21 11:17:46 UTC; 4s ago

Docs: http://docs.docker.io

Process: 1599 ExecStart=/opt/kube/bin/dockerd (code=killed, signal=TERM)

Process: 1603 ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT (code=exited, status=203/>

Main PID: 1599 (code=killed, signal=TERM)

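CPU: 5ms

This shows that the failing step is:

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT (code=exited, status=203/>

That is an iptables command. Exit status 203 means systemd could not execute the program at all, most often because the binary is missing, so check whether iptables is installed (or whether something else is wrong).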
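To confirm the diagnosis before changing anything, a short sketch can list every `Exec*=` command in the unit file and flag programs that are not executable. It checks only the first word of each line after stripping systemd's optional special prefixes (`-`, `@`, `:`, `+`, `!`), so it is a rough check rather than a full unit-file parser. The function name `check_exec_paths` is hypothetical.

```shell
#!/usr/bin/env bash
# check_exec_paths UNIT_FILE: report whether each Exec*= program in a systemd unit is executable.
# Returns non-zero if any program is missing (the usual cause of status=203/EXEC).
check_exec_paths() {
  local unit=$1 line cmd bin rc=0
  while IFS= read -r line; do
    cmd=${line#*=}   # drop "ExecStartPost=" and similar
    bin=${cmd%% *}   # the first word is the program
    # Strip systemd's optional special prefixes such as "-" (ignore failure)
    while :; do
      case $bin in
        [-@:+!]*) bin=${bin#?} ;;
        *) break ;;
      esac
    done
    if { [[ $bin == /* ]] && [ -x "$bin" ]; } || { [[ $bin != /* ]] && command -v "$bin" >/dev/null; }; then
      echo "ok: $bin"
    else
      echo "MISSING: $bin"
      rc=1
    fi
  done < <(grep -E '^Exec[A-Za-z]*=' "$unit")
  return "$rc"
}

# Example:
# check_exec_paths /etc/systemd/system/docker.service
```

After installing iptables or editing the unit, `systemctl daemon-reload` followed by `systemctl restart docker` picks up the change.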

Install iptables:

sudo apt install iptables # Ubuntu/Debian

# or

sudo yum install iptables # CentOS/RHEL

Alternatively, delete this ExecStartPost line from the service file, then run systemctl daemon-reload.

Then run ./ezdown -D again, and it succeeds.

5. Create the cluster configuration instance

./ezdown -S

docker exec -it kubeasz ezctl new k8s-01

# Edit the generated files

vim /etc/kubeasz/clusters/k8s-01/hosts

vim /etc/kubeasz/clusters/k8s-01/config.yml

# Adjust as needed
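For the six-host layout in the table in section II, the node groups of the hosts file can be previewed with a sketch like the one below before pasting them in. It assumes the group names `[etcd]`, `[kube_master]` and `[kube_node]` from the kubeasz hosts template and prints only those groups, so the other sections of the generated file (for example `[all:vars]`) stay as they are. The function name `gen_groups` is hypothetical.

```shell
#!/usr/bin/env bash
# Addresses from the host table; the masters also run etcd.
MASTERS=(10.0.0.161 10.0.0.24 10.0.0.182)
NODES=(10.0.0.184 10.0.0.117 10.0.0.214)

# gen_groups: print the [etcd], [kube_master] and [kube_node] groups of a kubeasz hosts file.
gen_groups() {
  # etcd needs an odd number of members (1, 3, 5, ...)
  (( ${#MASTERS[@]} % 2 == 1 )) || echo "warning: etcd needs an odd number of members" >&2
  echo "[etcd]"
  printf '%s\n' "${MASTERS[@]}"
  echo
  echo "[kube_master]"
  printf '%s\n' "${MASTERS[@]}"
  echo
  echo "[kube_node]"
  printf '%s\n' "${NODES[@]}"
}

# Preview, then copy the groups into /etc/kubeasz/clusters/k8s-01/hosts:
# gen_groups
```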

6. After editing the configuration, run:

docker exec -it kubeasz ezctl setup k8s-01 all

7. Appendix: docker vs. containerd command comparison

| Task | docker | crictl (recommended) | ctr |
|---|---|---|---|
| List containers | docker ps | crictl ps | ctr -n k8s.io c ls |
| Inspect a container | docker inspect | crictl inspect | ctr -n k8s.io c info |
| View container logs | docker logs | crictl logs | none |
| Run a command in a container | docker exec | crictl exec | none |
| Attach to a container | docker attach | crictl attach | none |
| Container resource usage | docker stats | crictl stats | none |
| Create a container | docker create | crictl create | ctr -n k8s.io c create |
| Start a container | docker start | crictl start | ctr -n k8s.io run |
| Stop a container | docker stop | crictl stop | none |
| Remove a container | docker rm | crictl rm | ctr -n k8s.io c del |
| List images | docker images | crictl images | ctr -n k8s.io i ls |
| Inspect an image | docker inspect | crictl inspecti | none |
| Pull an image | docker pull | crictl pull | ctr -n k8s.io i pull |
| Push an image | docker push | none | ctr -n k8s.io i push |
| Remove an image | docker rmi | crictl rmi | ctr -n k8s.io i rm |
| List pods | none | crictl pods | none |
| Inspect a pod | none | crictl inspectp | none |
| Start a pod | none | crictl runp | none |
| Stop a pod | none | crictl stopp | none |

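8. Installing nerdctl is recommended; its commands are largely compatible with docker

1. Standalone use

See: nerdctl: A Docker-Compatible containerd CLI (CSDN blog)

2. Using the nerdctl client in a Kubernetes cluster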

wget https://github.com/containerd/nerdctl/releases/download/v2.2.1/nerdctl-2.2.1-linux-amd64.tar.gz

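tar xf nerdctl-2.2.1-linux-amd64.tar.gz -C /usr/local/bin/

# Note: all Kubernetes containers live in the k8s.io namespace by default, so you must specify k8s.io to see them
# Add -n k8s.io to see the images and containers in the k8s namespace
# Method 1: list images in the k8s namespace
[root@node2 ~]#nerdctl -n k8s.io images
# List containers in the k8s namespace
[root@node2 ~]#nerdctl -n k8s.io ps
# Method 2: create a config file that makes k8s.io the default namespace, so -n k8s.io can be omitted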

[root@node2 ~]#mkdir /etc/nerdctl/

[root@node2 ~]#vim /etc/nerdctl/nerdctl.toml

namespace = "k8s.io" # 指定默认的名称空间

debug = false

debug_full = false

insecure_registry = true # enable insecure registries
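The same file can also be written non-interactively, which is convenient when preparing several nodes. A minimal sketch using exactly the keys shown above; the function name `write_nerdctl_toml` and its optional directory argument are hypothetical (the argument only exists so the snippet can be tried in a scratch directory).

```shell
#!/usr/bin/env bash
# write_nerdctl_toml [DIR]: write nerdctl.toml with k8s.io as the default namespace.
# DIR defaults to /etc/nerdctl; an existing nerdctl.toml there is overwritten.
write_nerdctl_toml() {
  local dir=${1:-/etc/nerdctl}
  mkdir -p "$dir"
  cat > "${dir}/nerdctl.toml" <<'EOF'
namespace = "k8s.io"      # default namespace, so -n k8s.io can be omitted
debug = false
debug_full = false
insecure_registry = true  # allow insecure (plain-HTTP or self-signed) registries
EOF
}

# Example: writes /etc/nerdctl/nerdctl.toml
# write_nerdctl_toml
```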

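# List images in an explicitly specified namespace
nerdctl -n k8s.io images
# List images in the default namespace
[root@node2 ~]#nerdctl images
# List containers in the default namespace
[root@node2 ~]#nerdctl ps
# Import an image into the default namespace
[root@master1 ~]#nerdctl load -i flannel-v0.26.7.tar
# Import an image into a specified namespace
[root@master1 ~]#nerdctl -n k8s.io load -i flannel-v0.26.7.tar
# If the CNI plugins were installed via apt, creating a container like this fails with an error
[root@node2 ~]#nerdctl run -d --name nginx -p 80:80 nginx:alpine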

3. Building images with nerdctl and buildkitd

wget https://github.com/moby/buildkit/releases/download/v0.26.3/buildkit-v0.26.3.linux-amd64.tar.gz
[root@master ~]# tar -zxvf buildkit-v0.26.3.linux-amd64.tar.gz
[root@master ~]# cd bin/
[root@master bin]# cp * /usr/local/bin/

# Create the systemd service unit

[root@master ~]# cat /etc/systemd/system/buildkitd.service

[Unit]

Description=BuildKit

Documentation=https://github.com/moby/buildkit

[Service]

ExecStart=/usr/local/bin/buildkitd --oci-worker=false --containerd-worker=true

[Install]

WantedBy=multi-user.target

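# Add a buildkitd config file for the registry (insecure = true skips TLS certificate verification; set http = true instead if the registry serves plain HTTP)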

[root@master bin]# vim /etc/buildkit/buildkitd.toml

[registry."harbor.zjx521.cn"]

http = false

insecure = true

# Start buildkitd

[root@master bin]# systemctl daemon-reload

[root@master bin]# systemctl start buildkitd

[root@master bin]# systemctl enable buildkitd

Build an image and test it:

[root@master ~]# cat Dockerfile

FROM busybox

CMD ["echo","hello","container"]

[root@master ~]# nerdctl build -t busybox:v1 .

[root@master ~]# nerdctl images

REPOSITORY    TAG    IMAGE ID        CREATED               PLATFORM       SIZE    BLOB SIZE
busybox       v1     fb6a2dfc7899    About a minute ago    linux/amd64    MiB     2.1 MiB

[root@master ~]# nerdctl run busybox:v1

hello container

# Push to the Harbor registry

[root@master ~]# nerdctl tag busybox:v1 harbor.zjx521.cn/example/busybox:v1

[root@master ~]# nerdctl push harbor.zjx521.cn/example/busybox:v1
