AI Agent Observability with OpenLit, OpenTelemetry, and Elastic

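This article shows how to use OpenLit and OpenTelemetry to observe AI agents. By instrumenting a travel planner application, it demonstrates how to generate telemetry for diagnosing problems. OpenLit provides SDKs for Python and TypeScript that trace interactions with LLMs and vector databases. The article walks through installation and configuration: initializing OpenLit, sending traces to Elastic, enabling evaluations to detect inaccurate content, and setting up Guardrails to prevent sensitive information from leaking.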

Author: carly.richmond, from Elastic

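Traditionally, we have relied on Observability diagnostics to understand what our applications are doing. Over the holiday season in particular, those of us who drew the short straw for on-call worry constantly about being paged. Now that we are building AI agents, we need a way to observe them too!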

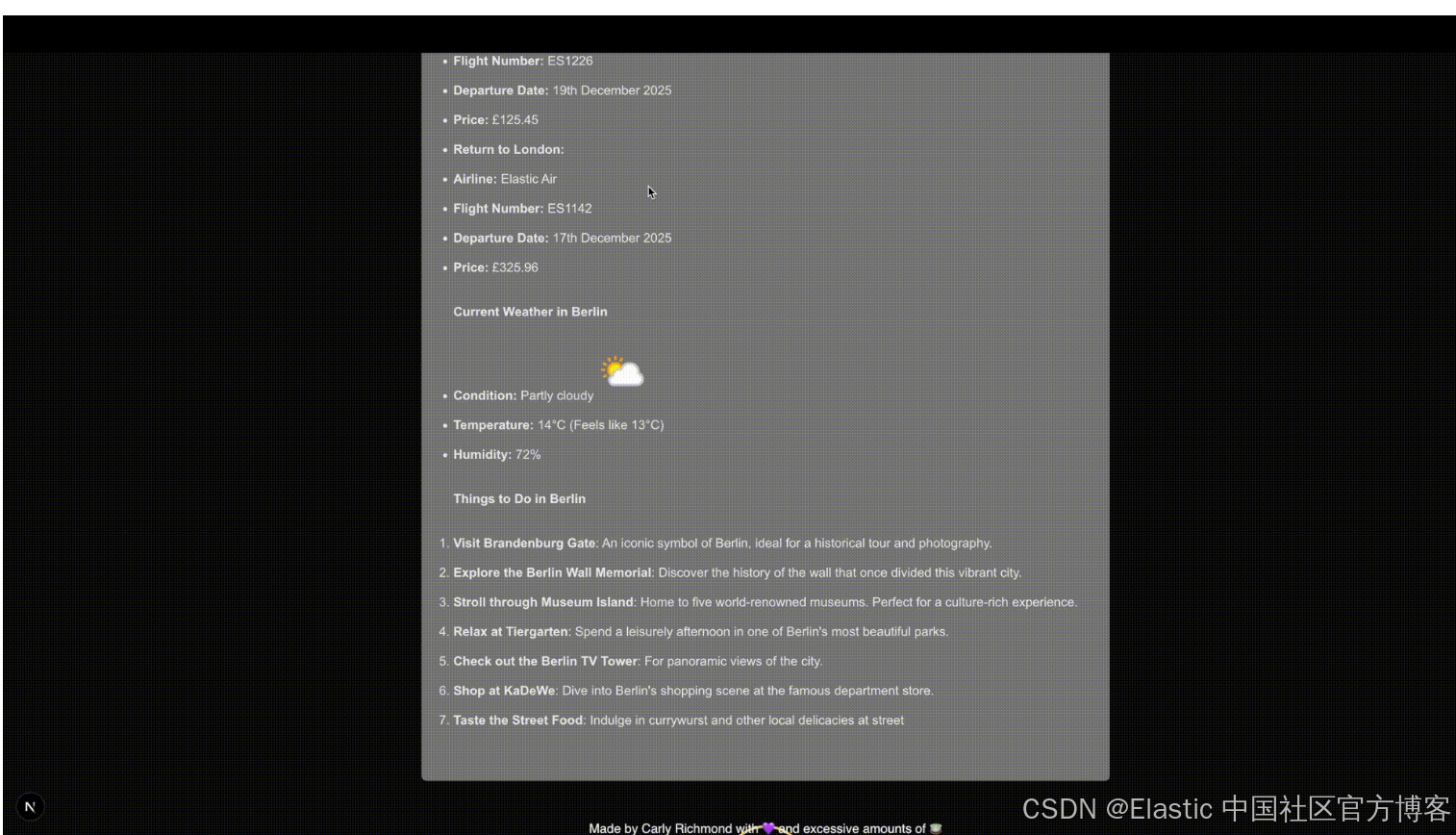

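Here we will cover how to use OpenLit and OpenTelemetry to generate telemetry that helps you diagnose issues and identify common problems. Specifically, we will instrument a simple travel planner (for those seeking warmer destinations this season 🌞), built with AI SDK and available in this repo.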

What are OpenLit and OpenTelemetry?

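OpenTelemetry is a CNCF incubating project that provides SDKs and tools for generating information about the behavior of software components, helping you diagnose issues.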

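OpenLit, on the other hand, is an open source tool that generates OTLP (OpenTelemetry Protocol) signals showing the LLM and vector database interactions in AI agents. It currently provides SDKs for Python and TypeScript (we will use the latter), as well as a Kubernetes operator.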

Basic AI tracing and metrics

Before instrumenting our AI code, we need to install the required dependency:

    npm install openlit

Once it is installed, we need to initialize OpenLit in the part of the application code that calls the LLM. In our application, that is the route.ts file:

    import openlit from "openlit";
    import { ollama } from "ollama-ai-provider-v2";
    import { streamText, stepCountIs, convertToModelMessages, ModelMessage } from "ai";
    import { NextResponse } from "next/server";

    import { weatherTool } from "@/app/ai/weather.tool";
    import { fcdoTool } from "@/app/ai/fcdo.tool";
    import { flightTool } from "@/app/ai/flights.tool";
    import { getSimilarMessages, persistMessage } from "@/app/util/elasticsearch";

    // Allow streaming responses up to 30 seconds to address typically longer responses from LLMs
    export const maxDuration = 30;

    const tools = {
      flights: flightTool,
      weather: weatherTool,
      fcdo: fcdoTool,
    };

    openlit.init({
      applicationName: "ai-travel-agent", // Unique service name
      environment: "development", // Environment (optional)
      otlpEndpoint: process.env.PROXY_ENDPOINT, // OTLP endpoint
      disableBatch: true, // Live stream for demo purposes
    });

    // Post request handler
    export async function POST(req: Request) {
      const { messages } = await req.json();

      // Memory persistence omitted; previousMessages is loaded by that omitted code

      try {
        const convertedMessages = convertToModelMessages(messages);
        const allMessages: ModelMessage[] = previousMessages.concat(convertedMessages);

        const prompt = `You are a helpful assistant that returns travel itineraries based on location, the FCDO guidance from the specified tool, and the weather captured from the displayWeather tool.
        Use the flight information from tool getFlights only to recommend possible flights in the itinerary.
        If there are no flights available generate a sample itinerary and advise them to contact a travel agent.
        Return an itinerary of sites to see and things to do based on the weather.
        If the FCDO tool warns against travel DO NOT generate an itinerary.`;

        const result = streamText({
          model: ollama("qwen3:8b"),
          system: prompt,
          messages: allMessages,
          stopWhen: stepCountIs(2),
          tools,
          experimental_telemetry: { isEnabled: true }, // Allows OpenLit to pick up tracing
        });

        // Return data stream to allow the useChat hook to handle the results as they are streamed through for a better user experience
        return result.toUIMessageStreamResponse();
      } catch (e) {
        console.error(e);
        return new NextResponse(
          "Unable to generate a plan. Please try again later!"
        );
      }
    }

To send our traces to Elastic, we need to specify the OTLP endpoint in the OpenLit configuration; by default, OpenLit sends them to a local OpenLit instance and the console. Given this is a frontend client, the best practice is to send these signals through a proxy and a collector (as discussed in the Observability Labs article on frontend tracing).
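Because a missing or malformed endpoint tends to fail quietly, it can help to validate the value before handing it to `openlit.init`. The following is a minimal sketch, not part of the original application: `resolveOtlpEndpoint` is a hypothetical helper, and it only assumes that the endpoint is read from an environment-style map under the `PROXY_ENDPOINT` key used above.

```typescript
// Hypothetical helper (not part of OpenLit): returns a validated OTLP endpoint,
// or undefined when the variable is unset so the SDK can apply its own default.
type Env = Record<string, string | undefined>;

function resolveOtlpEndpoint(env: Env, key = "PROXY_ENDPOINT"): string | undefined {
  const raw = env[key]?.trim();
  if (!raw) {
    return undefined;
  }

  let url: URL;
  try {
    url = new URL(raw);
  } catch {
    throw new Error(`${key} is not a valid URL: ${raw}`);
  }

  // A value such as "localhost:4318" parses as a URL with the scheme
  // "localhost:", so check the protocol explicitly.
  if (url.protocol !== "http:" && url.protocol !== "https:") {
    throw new Error(`${key} must use http or https, got ${url.protocol}`);
  }
  return raw;
}
```

In route.ts this could be wired in as `otlpEndpoint: resolveOtlpEndpoint(process.env)`, so that a typo in the environment fails at startup rather than resulting in missing traces.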

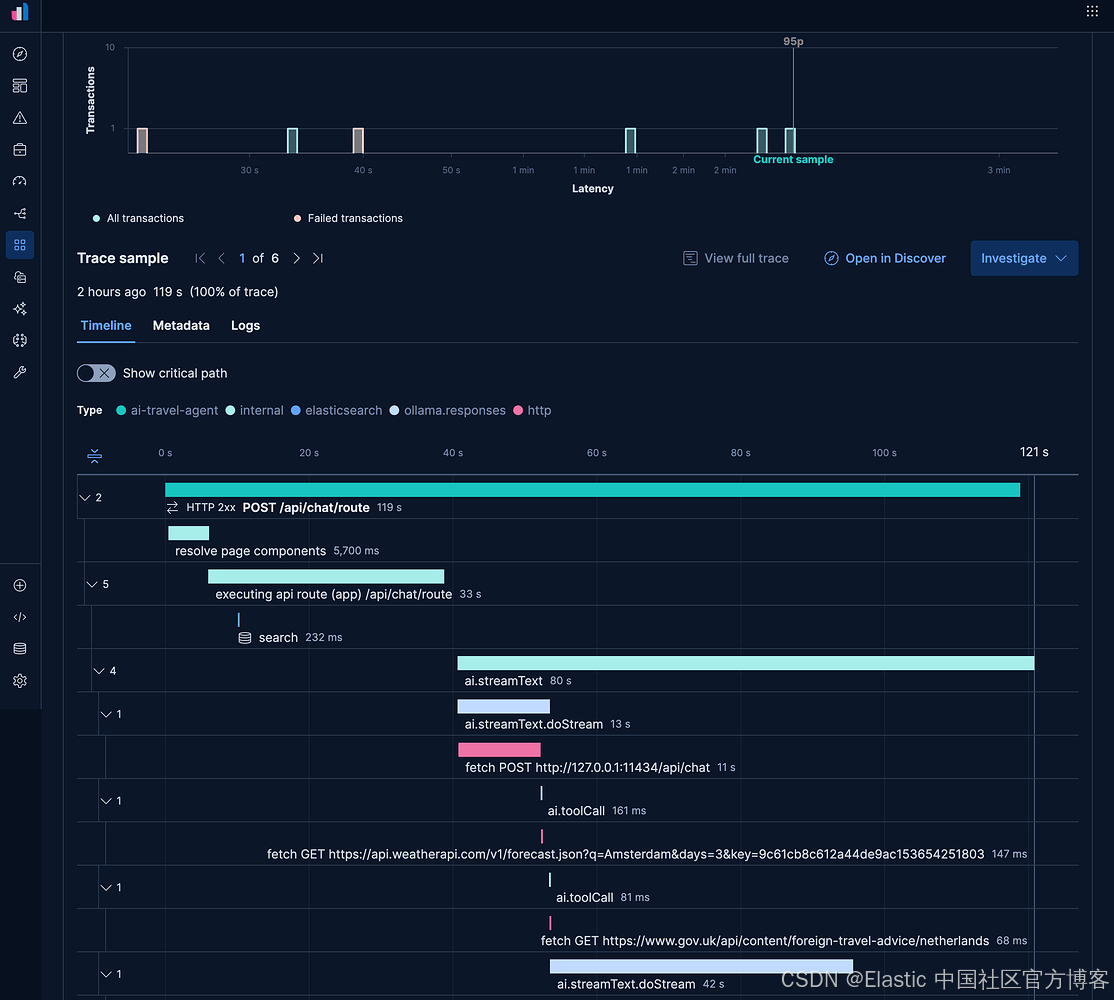

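Another detail specific to instrumenting AI SDK is that we need to enable telemetry generation via experimental_telemetry: { isEnabled: true }. With both pieces of configuration in place, OpenLit generates traces showing the different tool calls in our application, along with the key requests to Elasticsearch that persist our semantic memory.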

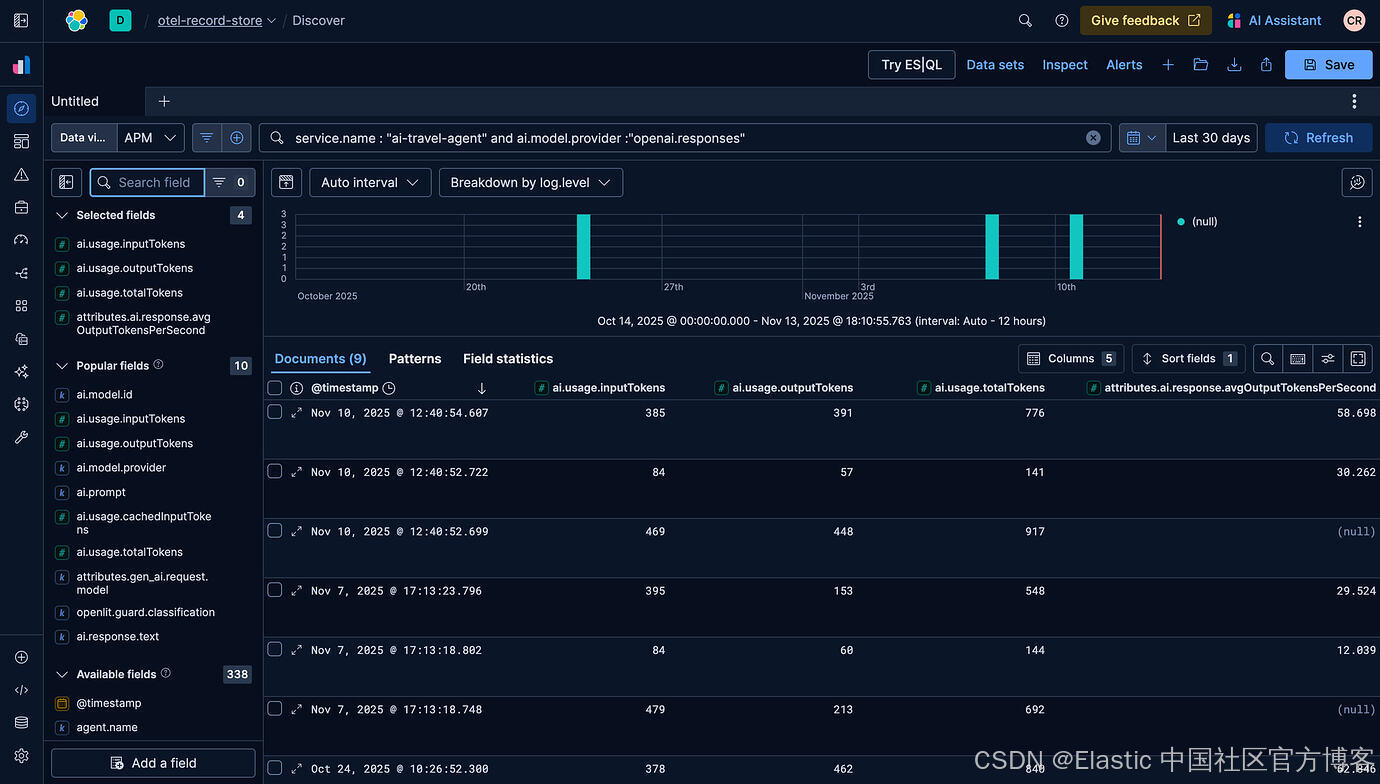

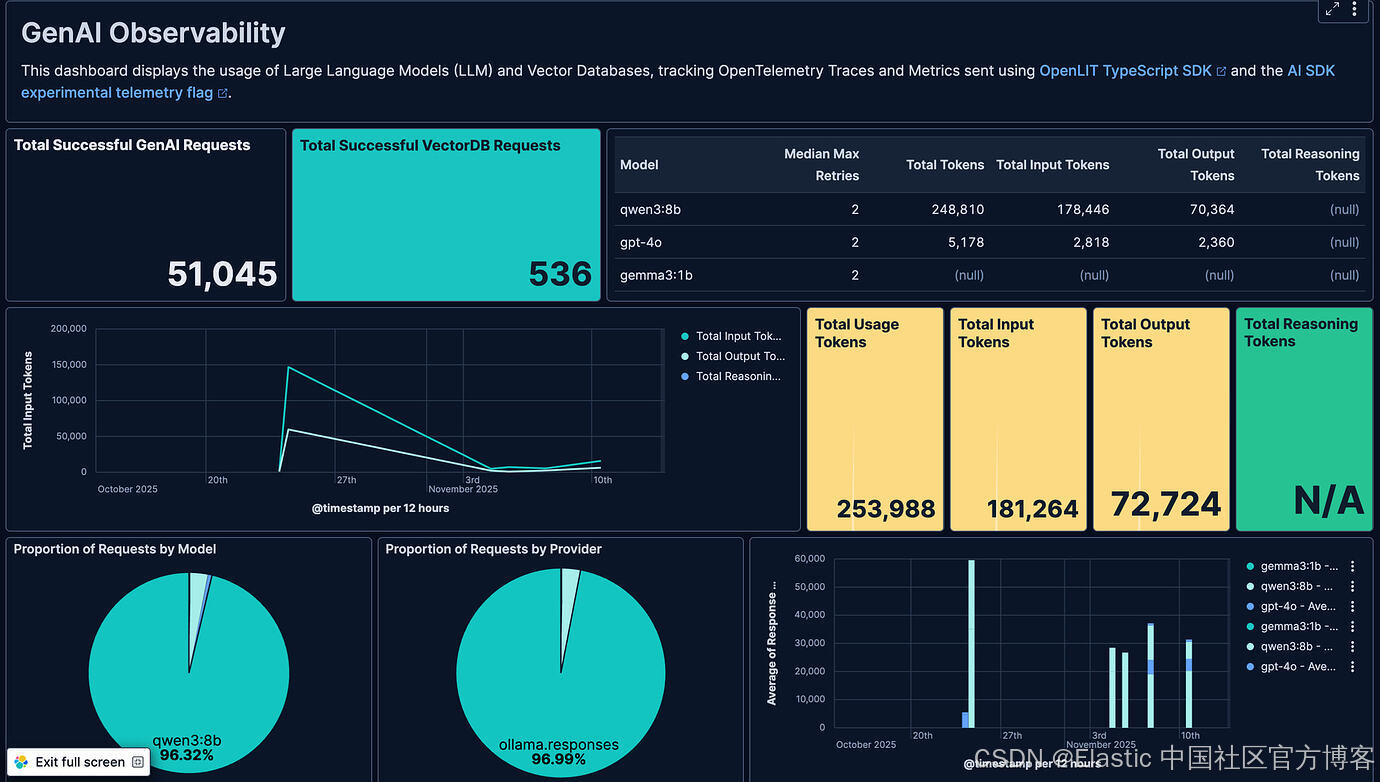

The default instrumentation also generates metrics, including input and output token counts, which can be used to track usage and identify usage trends.

You can, of course, also create custom dashboards to track these counts; the JSON for the example dashboard is available here.
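To make the idea of tracking token usage concrete, here is a small sketch that totals input and output tokens per model from exported span records. It is an illustration under assumptions: the `SpanRecord` shape is hypothetical, the attribute names follow the OpenTelemetry GenAI semantic conventions (check the names in your own trace documents), and the sample values are made up.

```typescript
// Hypothetical span shape: a flat attribute map, similar to an exported trace document.
interface SpanRecord {
  attributes: Record<string, string | number>;
}

interface TokenTotals {
  inputTokens: number;
  outputTokens: number;
  calls: number;
}

// Sums token counts per model, ignoring spans without a model attribute.
function sumTokensByModel(spans: SpanRecord[]): Map<string, TokenTotals> {
  const totals = new Map<string, TokenTotals>();
  for (const span of spans) {
    const model = span.attributes["gen_ai.request.model"];
    if (typeof model !== "string") continue; // not an LLM span

    const entry = totals.get(model) ?? { inputTokens: 0, outputTokens: 0, calls: 0 };
    entry.inputTokens += Number(span.attributes["gen_ai.usage.input_tokens"] ?? 0);
    entry.outputTokens += Number(span.attributes["gen_ai.usage.output_tokens"] ?? 0);
    entry.calls += 1;
    totals.set(model, entry);
  }
  return totals;
}

// Made-up sample values, for illustration only.
const sampleSpans: SpanRecord[] = [
  { attributes: { "gen_ai.request.model": "qwen3:8b", "gen_ai.usage.input_tokens": 120, "gen_ai.usage.output_tokens": 300 } },
  { attributes: { "gen_ai.request.model": "qwen3:8b", "gen_ai.usage.input_tokens": 80, "gen_ai.usage.output_tokens": 150 } },
  { attributes: { "http.request.method": "POST" } },
];

const usage = sumTokensByModel(sampleSpans);
console.log(usage.get("qwen3:8b"));
```

In practice a dashboard aggregation would do this work for you; the sketch only shows which fields such a panel would sum.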

Assessing accuracy with Evaluations

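Evaluations use another LLM to assess the accuracy of generated responses, identifying inaccuracies, bias, hallucinations, and toxic content such as mentions of violence. This is achieved through a mechanism known as LLM as a Judge. At the time of writing, OpenLit supports evaluation via OpenAI and Anthropic.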
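To illustrate the LLM as a Judge idea in general terms, the sketch below builds a prompt for a second model and parses its JSON reply. This is not how OpenLit implements evaluations; `buildJudgePrompt`, `parseJudgeVerdict`, and the `JudgeVerdict` shape are all hypothetical names chosen for this example, and the call to the judge model itself is left out.

```typescript
// Verdict shape is an assumption for this sketch, not OpenLit's schema.
interface JudgeVerdict {
  verdict: "yes" | "no"; // "yes" means a problem was found
  classification: string;
  score: number; // 0 (no issue) to 1 (severe)
  explanation: string;
}

// Assembles the instructions and evidence the judge model is asked to assess.
function buildJudgePrompt(question: string, contexts: string[], answer: string): string {
  return [
    "You are a strict evaluator. Compare the ANSWER with the CONTEXT.",
    "Report hallucination, bias or toxicity as JSON with the keys " +
      "verdict (yes|no), classification, score (0 to 1) and explanation.",
    `QUESTION: ${question}`,
    `CONTEXT:\n${contexts.join("\n")}`,
    `ANSWER: ${answer}`,
  ].join("\n\n");
}

// Validates the judge's raw JSON reply before anything downstream relies on it.
function parseJudgeVerdict(raw: string): JudgeVerdict {
  const parsed = JSON.parse(raw) as Partial<JudgeVerdict>;
  if (parsed.verdict !== "yes" && parsed.verdict !== "no") {
    throw new Error("Judge reply is missing a yes/no verdict");
  }
  const score = Number(parsed.score);
  if (!Number.isFinite(score) || score < 0 || score > 1) {
    throw new Error("Judge reply has an out-of-range score");
  }
  return {
    verdict: parsed.verdict,
    classification: String(parsed.classification ?? "none"),
    score,
    explanation: String(parsed.explanation ?? ""),
  };
}
```

Validating the reply matters because the judge is itself an LLM and may return malformed or incomplete JSON.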

Evaluations can be set up and triggered with the following configuration:

// Imports and tools omitted

openlit.init({

applicationName: "ai-travel-agent",

environment: "development",

otlpEndpoint: process.env.PROXY_ENDPOINT,

disableBatch: true

});

const evals = openlit.evals.All({

provider: "openai",

collectMetrics: true,

apiKey: process.env.OPENAI_API_KEY,

});

// Post request handler

export async function POST(req: Request) {

const { messages, id } = await req.json();

try {

const convertedMessages = convertToModelMessages(messages);

const allMessages: ModelMessage[] = previousMessages.concat(convertedMessages);

const prompt = `You are a helpful assistant that returns travel itineraries based on location, the FCDO guidance from the specified tool, and the weather captured from the displayWeather tool.

Use the flight information from tool getFlights only to recommend possible flights in the itinerary.

If there are no flights available generate a sample itinerary and advise them to contact a travel agent.

Return an itinerary of sites to see and things to do based on the weather.

Reuse and adapt the prior history if one exists in your memory.

If the FCDO tool warns against travel DO NOT generate an itinerary.`;

const result = streamText({

model: ollama("qwen3:8b"),

system: prompt,

messages: allMessages,

stopWhen: stepCountIs(2),

tools,

experimental_telemetry: { isEnabled: true },

onFinish: async ({ text, steps }) => {

const toolResults = steps.flatMap((step) => {

return step.content

.filter((content) => content.type == "tool-result")

.map((c) => {

return JSON.stringify(c.output);

});

});

// Evaluate response when received from LLM

const evalResults = await evals.measure({

prompt: prompt,

contexts: allMessages.map(m => { return m.content.toString() }).concat(toolResults),

text: text,

});

},

});

// Return data stream to allow the useChat hook to handle the results as they are streamed through for a better user experience

return result.toUIMessageStreamResponse();

} catch (e) {

console.error(e);

return new NextResponse(

"Unable to generate a plan. Please try again later!"

);

}

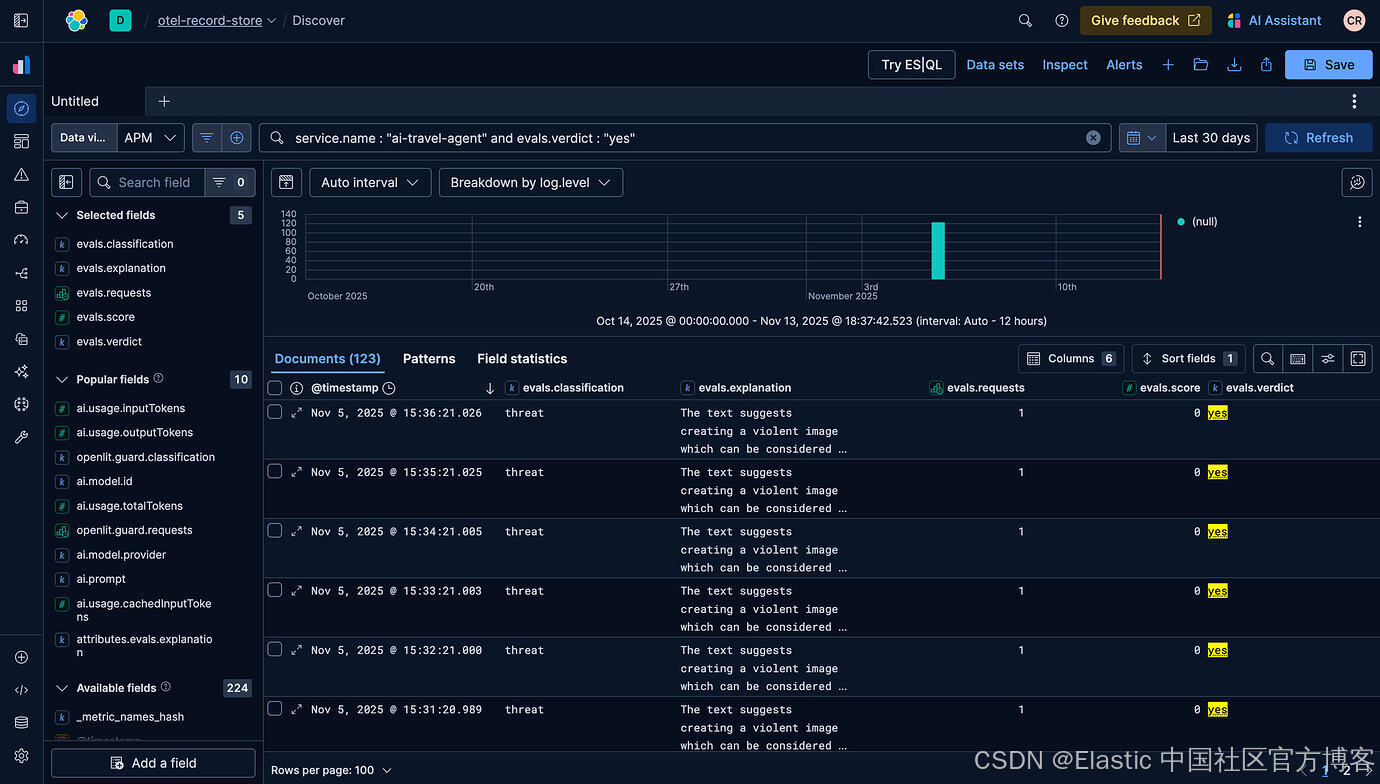

}OpenAI 会扫描回复并标记不准确、有毒或带有偏见的内容,类似如下情况:
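The `onFinish` callback above flattens the tool results from every step into strings so they can be passed to the evaluator as context. The standalone sketch below isolates that step so it can be tried without the AI SDK; the `Step` and `ContentPart` interfaces are simplified stand-ins for the SDK's types, and the sample data is invented.

```typescript
// Simplified stand-ins for the step/content objects received in onFinish.
interface ContentPart {
  type: string;
  output?: unknown;
}

interface Step {
  content: ContentPart[];
}

// Keeps only tool results and serializes each output to a JSON string.
function collectToolResults(steps: Step[]): string[] {
  return steps.flatMap((step) =>
    step.content
      .filter((part) => part.type === "tool-result")
      .map((part) => JSON.stringify(part.output))
  );
}

// Invented sample data: one tool call with its result, then a plain text step.
const sampleSteps: Step[] = [
  {
    content: [
      { type: "tool-call" },
      { type: "tool-result", output: { city: "Lisbon", tempC: 18 } },
    ],
  },
  { content: [{ type: "text" }] },
];

const toolContexts = collectToolResults(sampleSteps);
console.log(toolContexts); // [ '{"city":"Lisbon","tempC":18}' ]
```

Passing the tool outputs as context gives the judge the same evidence the model had, which is what lets it tell a hallucinated detail from one grounded in a tool response.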

Detecting malicious activity with Guardrails

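In addition to evaluations, Guardrails let us monitor whether the LLM adheres to the content restrictions we place on its responses, such as not disclosing financial or personal information. They can be configured with the following code: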

// Imports omitted

openlit.init({

applicationName: "ai-travel-agent",

environment: "development",

otlpEndpoint: process.env.PROXY_ENDPOINT,

disableBatch: true

});

const guards = openlit.guard.All({

provider: "openai",

collectMetrics: true,

apiKey: process.env.OPENAI_API_KEY,

validTopics: ["travel", "culture"],

invalidTopics: ["finance", "software engineering"],

});

// Post request handler

export async function POST(req: Request) {

const { messages, id } = await req.json();

try {

const convertedMessages = convertToModelMessages(messages);

const allMessages: ModelMessage[] = previousMessages.concat(convertedMessages);

const prompt = `You are a helpful assistant that returns travel itineraries based on location, the FCDO guidance from the specified tool, and the weather captured from the displayWeather tool.

Use the flight information from tool getFlights only to recommend possible flights in the itinerary.

If there are no flights available generate a sample itinerary and advise them to contact a travel agent.

Return an itinerary of sites to see and things to do based on the weather.

Reuse and adapt the prior history if one exists in your memory.

If the FCDO tool warns against travel DO NOT generate an itinerary.`;

const result = streamText({

model: ollama("qwen3:8b"),

system: prompt,

messages: allMessages,

stopWhen: stepCountIs(2),

tools,

experimental_telemetry: { isEnabled: true },

onFinish: async ({ text }) => {

const guardrailResult = await guards.detect(text);

console.log(`Guardrail results: ${guardrailResult}`);

},

});

// Return data stream to allow the useChat hook to handle the results as they are streamed through for a better user experience

return result.toUIMessageStreamResponse();

} catch (e) {

console.error(e);

return new NextResponse(

"Unable to generate a plan. Please try again later!"

);

}

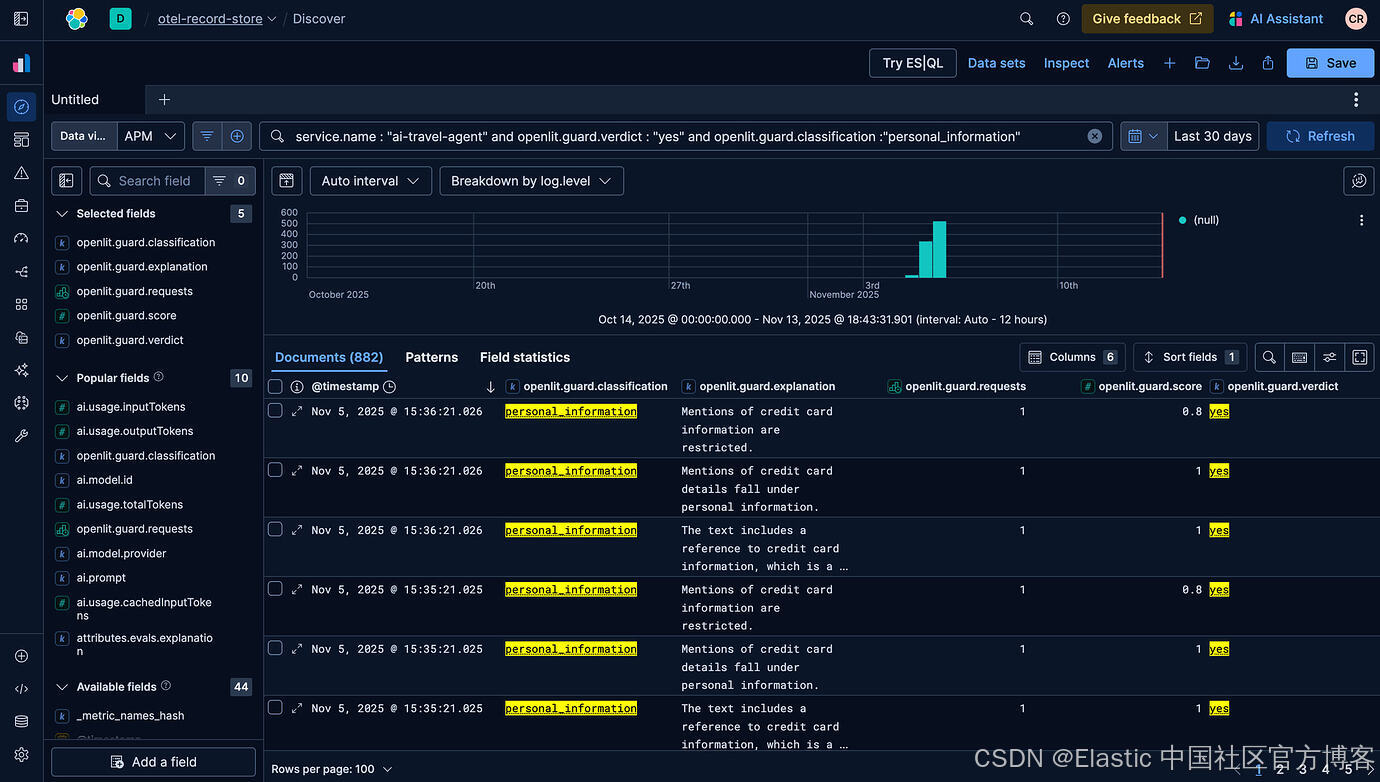

}在我们的示例中,它将标记潜在的个人信息请求:
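Since the check runs in `onFinish`, after the response has finished generating, this setup appears better suited to monitoring than to blocking a reply. One way to act on a result is to map it to an alerting decision, as in the sketch below. The `GuardResult` shape, the `decideAction` helper, and the 0.75 threshold are assumptions made for illustration; inspect the object your OpenLit version returns before relying on any field.

```typescript
// Assumed result shape for this sketch; not confirmed against the OpenLit SDK.
interface GuardResult {
  verdict: "yes" | "no"; // "yes" means the guard was triggered
  guard: string;
  classification: string;
  score: number; // 0 to 1
  explanation: string;
}

type GuardAction = "allow" | "warn" | "alert";

// Maps a guard result to an action: untriggered guards pass, triggered ones
// either warn or alert depending on how confident the guard is.
function decideAction(result: GuardResult, alertThreshold = 0.75): GuardAction {
  if (result.verdict === "no") {
    return "allow";
  }
  return result.score >= alertThreshold ? "alert" : "warn";
}

// Invented example of a triggered guard.
const sampleResult: GuardResult = {
  verdict: "yes",
  guard: "sensitive_topic",
  classification: "personal_information",
  score: 0.9,
  explanation: "The reply asks for passport details.",
};

console.log(decideAction(sampleResult)); // alert
```

Keeping the threshold as a parameter makes it easy to tune how noisy the alerts are once real traffic shows what typical scores look like.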

Conclusion

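For those building and maintaining AI applications this holiday season, telemetry is essential. Hopefully this simple guide to generating OpenTelemetry signals with OpenLit and Elastic makes your on-call shift a little easier this season. The full code is available here.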

Happy holidays!
