Today's AI is only 50% of the way to AGI: Google DeepMind CEO Demis Hassabis says AI scaling 'must be pushed to the maximum'

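Summary: Google DeepMind CEO Demis Hassabis believes the scaling of AI systems must be pushed to the maximum, and that this may be the key path to artificial general intelligence (AGI). He says scaling current systems will at minimum be a core component of AGI and could even be the whole solution. Relying on data and compute alone, however, runs into the limit of publicly available data and carries environmental costs. Meanwhile, experts such as Yann LeCun, Meta's former chief AI scientist, question whether scaling is sustainable and argue for "world models" built on spatial data as an alternative. The industry…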

https://www.youtube.com/watch?v=tDSDR7QILLg

https://www.businessinsider.com/demis-hassabis-ai-scaling-pushed-to-maximum-data-2025-12

Google DeepMind CEO Demis Hassabis, whose company just released Gemini 3 to widespread acclaim, has made it clear where he stands on the issue.

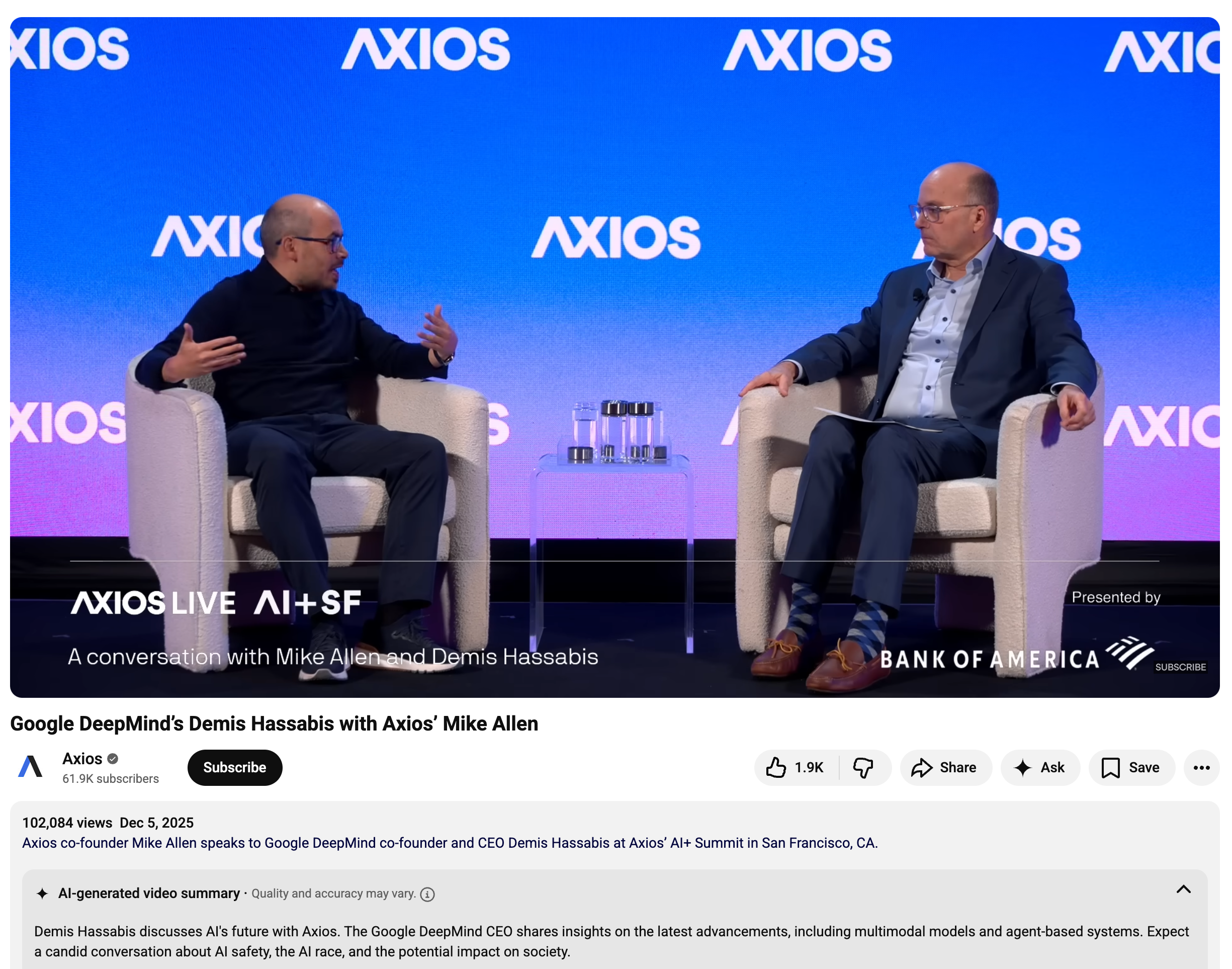

"The scaling of the current systems, we must push that to the maximum, because at the minimum, it will be a key component of the final AGI system," he said at Axios' AI+ Summit in San Francisco last week. "It could be the entirety of the AGI system."

AGI, or artificial general intelligence, is a still-theoretical version of AI that reasons as well as humans. It's the goal all the leading AI companies are competing to reach, fueling huge amounts of spending on infrastructure and talent.

AI scaling laws suggest that the more data and compute an AI model is given, the smarter it will get.
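One common way to write this idea down is as a power law in model size and training data: predicted loss falls as either grows, but each extra tenfold increase buys less than the last. The sketch below assumes that general form. The function name `predicted_loss` and every constant in it are illustrative placeholders, not fitted values and not figures from Hassabis or the article.

```python
def predicted_loss(params: float, tokens: float,
                   floor: float = 1.7,
                   a: float = 400.0, alpha: float = 0.34,
                   b: float = 400.0, beta: float = 0.28) -> float:
    """Illustrative power-law loss (all constants are made-up placeholders).

    floor  : loss that no amount of scale removes
    a, b   : how much model size and data each contribute
    alpha, beta : how quickly those contributions shrink with scale
    """
    return floor + a / params**alpha + b / tokens**beta


if __name__ == "__main__":
    # Grow model size and data together by 10x at each step.
    scales = [(1e9, 2e10), (1e10, 2e11), (1e11, 2e12), (1e12, 2e13)]
    losses = [predicted_loss(n, d) for n, d in scales]
    for (n, d), loss in zip(scales, losses):
        print(f"params={n:.0e} tokens={d:.0e} loss={loss:.3f}")
```

Under these assumed constants the loss keeps dropping with scale but never goes below `floor`, and each step improves on the previous one by a smaller margin, which is the pattern critics point to when they talk about diminishing returns.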

Hassabis said that scaling alone will likely get the industry to AGI, but that he suspects there will need to be "one or two" other breakthroughs as well.

The problem with scaling alone is that there is a limit to publicly available data, and adding compute means building data centers, which is expensive and taxing on the environment.

Some AI watchers are also concerned that the AI companies behind the leading large language models are beginning to see diminishing returns on their massive investments in scaling.

Researchers like Yann LeCun, the chief AI scientist at Meta who recently announced he was leaving to run his own startup, believe the industry needs to consider another way.

"Most interesting problems scale extremely badly," he said at the National University of Singapore in April. "You cannot just assume that more data and more compute means smarter AI."

LeCun is leaving Meta to work on building world models, an alternative to large language models that relies on collecting spatial data rather than language-based data.

For years, the world's top AI tech talent has spent billions of dollars developing LLMs, which underpin the most widely used chatbots.

The ultimate goal of many of the companies behind these AI models, however, is to develop AGI, a still-theoretical version of AI that reasons like humans. And there's growing concern that LLMs may be nearing a plateau, far short of a technology capable of evolving into AGI.

AI thinkers who have long held this belief were once written off as cynical. But since the release of OpenAI's GPT-5, which, despite improvements, didn't live up to OpenAI's own hype, the doomers are lining up to say, "I told you so."

Principal among them is perhaps Gary Marcus, an AI leader and best-selling author. Since GPT-5's release, he's taken his criticism to new heights.
