[Worth Bookmarking] GraphRAG: Fixing Three Flaws of Traditional RAG, and the Secret to a 108% Gain in LLM Q&A Accuracy

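GraphRAG upgrades traditional vector RAG with three layers of technology (entity resolution, graph construction, and community detection) to address three core flaws: failed multi-hop reasoning, weak entity disambiguation, and poor handling of theme-level questions. In production, GraphRAG raised accuracy on complex multi-hop questions from 43% to 91% and cut query cost by 97%, which makes it a good fit for high-risk domains such as finance and healthcare. This article walks through an engineering implementation and a cost-benefit analysis, and stresses that GraphRAG is not a silver bullet but a necessary solution for specific complex scenarios.

In enterprise AI question-answering systems, traditional vector-based retrieval-augmented generation (RAG) repeatedly breaks down on complex queries. After a wrong answer to a compliance question led to a customer audit incident, we realized that retrieval based purely on semantic similarity has fundamental flaws when it faces logical dependencies, entity disambiguation, and theme-level questions. This article examines a GraphRAG architecture running in production and shows how entity resolution, graph construction, and community detection raised accuracy on complex multi-hop questions from 43% to 91% while cutting query cost by 97%. We go beyond the concepts to cover the engineering details that decide success or failure.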

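Three Structural Flaws of Traditional Vector RAG

Before turning to GraphRAG, it helps to pin down exactly where traditional vector RAG fails. These are not edge cases; they are among the most common requirements in enterprise settings.

- Multi-hop reasoning fails

Consider the following document content:

"Product A uses Component X. Component X requires Certification Y. Certification Y expires after 2 years."

When a user asks "How often do we need to recertify Product A?", a vector RAG system typically retrieves only the passages that mention Product A or Component X and cannot chain the three separate facts together. Vector similarity finds semantically related passages, but it cannot follow logical dependencies across chunk and document boundaries.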
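To make the missing step concrete, here is a minimal sketch of chaining those three facts once they are stored as (source, relation, target) triples. The triple names and the helper functions `build_adjacency` and `follow_chain` are illustrative assumptions, not part of the production system described in this article.

```python
# Toy triples mirroring the three sentences above (names are illustrative).
TRIPLES = [
    ("Product A", "uses", "Component X"),
    ("Component X", "requires", "Certification Y"),
    ("Certification Y", "expires_after", "2 years"),
]


def build_adjacency(triples):
    """Index triples by source node so outgoing edges can be looked up."""
    adjacency = {}
    for source, relation, target in triples:
        adjacency.setdefault(source, []).append((relation, target))
    return adjacency


def follow_chain(adjacency, start, max_hops=3):
    """Depth-first walk returning every edge path of 1..max_hops edges."""
    paths = []

    def walk(node, path, seen):
        if path:
            paths.append(list(path))
        if len(path) == max_hops:
            return
        for relation, target in adjacency.get(node, []):
            if target not in seen:  # avoid cycles
                path.append((node, relation, target))
                walk(target, path, seen | {target})
                path.pop()

    walk(start, [], {start})
    return paths


adjacency = build_adjacency(TRIPLES)
paths = follow_chain(adjacency, "Product A")
longest = max(paths, key=len)   # the full three-hop chain
answer = longest[-1][2]         # target of the last edge
print(" -> ".join([longest[0][0]] + [target for _, _, target in longest]))
```

Each hop is a separate fact, possibly from a separate chunk; the traversal is what connects them, which similarity search alone does not do.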

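- No entity disambiguation

Suppose "Dr. Smith" appears 47 times in a document set: 23 mentions refer to the oncologist Sarah Smith and 24 to the cardiologist Michael Smith. Traditional RAG treats every mention as the same, equally relevant entity, which leads to serious errors. In a financial compliance system, we saw sanctions data for "John Miller" (a sanctioned entity) wrongly attached to queries about "John Miller" (an employee). The problem gets much worse when the dataset spans multiple languages and contains transliterated names.

- Theme-level questions cannot be answered

When a user asks "Which compliance issues recur across all our audit reports?", vector RAG can only retrieve the 5-10 passages most similar to the query, typically ones that contain words like "compliance" and "issue". It cannot synthesize patterns across 1,000 documents, because each chunk is processed independently and there is no global view.

Traditional RAG fails systematically in all three scenarios, which calls for a structural fix.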

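GraphRAG Core Architecture: Three Layers of Technology

Production-grade GraphRAG is not just "using a graph". It consists of three tightly coupled subsystems: an entity resolution layer, a relation extraction and graph construction layer, and a community detection and hierarchical summarization layer.

Entity Resolution and Canonicalization: The Foundation of the Graph

High-quality entity resolution is a precondition for GraphRAG. When entity resolution accuracy falls below 85%, the whole system becomes unreliable. Below is the entity resolution implementation from our production environment: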

```python
import json
from difflib import SequenceMatcher

import numpy as np
from anthropic import Anthropic
from sklearn.cluster import DBSCAN

client = Anthropic(api_key="your-key")


class EntityResolver:
    """Production entity resolution with context-aware disambiguation"""

    def __init__(self):
        self.entity_cache = {}
        self.canonical_map = {}

    def extract_entities_with_context(self, text, chunk_id):
        """Extract entities with surrounding context for disambiguation"""
        prompt = f"""Extract ALL entities from this text. For each entity, provide:
1. Entity surface form (exact text)
2. Entity type (Person, Organization, Location, Product, Concept)
3. Surrounding context (the sentence containing the entity)
4. Disambiguation features (titles, roles, dates, locations mentioned nearby)

Text: {text}

Return JSON array:
[
  {{
    "surface_form": "Dr. Smith",
    "type": "Person",
    "context": "Dr. Smith performed the cardiac surgery on Tuesday",
    "features": {{"specialty": "cardiology", "title": "doctor"}}
  }}
]"""
        response = client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=2000,
            messages=[{"role": "user", "content": prompt}]
        )
        # Assumes the reply is bare JSON; json.loads raises otherwise
        entities = json.loads(response.content[0].text)

        # Store with context
        for entity in entities:
            entity['chunk_id'] = chunk_id
        return entities

    def compute_entity_similarity(self, entity1, entity2):
        """Compute similarity considering both text and semantic context"""
        # Exact match gets high score
        if entity1['surface_form'].lower() == entity2['surface_form'].lower():
            base_score = 0.9
        else:
            # Fuzzy match on surface form
            base_score = SequenceMatcher(
                None,
                entity1['surface_form'].lower(),
                entity2['surface_form'].lower()
            ).ratio()

        # Type mismatch penalty
        if entity1['type'] != entity2['type']:
            base_score *= 0.3

        # Context similarity boost
        if 'features' in entity1 and 'features' in entity2:
            shared_features = set(entity1['features'].keys()) & set(entity2['features'].keys())
            if shared_features:
                # Features match increases confidence
                feature_match_score = sum(
                    1 for k in shared_features
                    if entity1['features'][k] == entity2['features'][k]
                ) / len(shared_features)
                base_score = 0.7 * base_score + 0.3 * feature_match_score

        return base_score

    def resolve_entities(self, all_entities, similarity_threshold=0.75):
        """Cluster entities into canonical forms using DBSCAN"""
        n = len(all_entities)
        if n == 0:
            return {}

        # Build similarity matrix
        similarity_matrix = np.zeros((n, n))
        for i in range(n):
            for j in range(i + 1, n):
                sim = self.compute_entity_similarity(all_entities[i], all_entities[j])
                similarity_matrix[i, j] = sim
                similarity_matrix[j, i] = sim
        # Each mention is identical to itself, so its self-distance must be 0;
        # otherwise unmatched mentions fall outside their own eps-neighborhood,
        # get the DBSCAN noise label (-1), and are merged into one bogus cluster
        np.fill_diagonal(similarity_matrix, 1.0)

        # Convert similarity to distance for DBSCAN
        distance_matrix = 1 - similarity_matrix

        # Cluster entities
        clustering = DBSCAN(
            eps=1 - similarity_threshold,
            min_samples=1,
            metric='precomputed'
        ).fit(distance_matrix)

        # Create canonical entities
        canonical_entities = {}
        for cluster_id in set(clustering.labels_):
            cluster_members = [
                all_entities[i]
                for i, label in enumerate(clustering.labels_)
                if label == cluster_id
            ]

            # Most common surface form becomes canonical
            surface_forms = [e['surface_form'] for e in cluster_members]
            canonical_form = max(set(surface_forms), key=surface_forms.count)

            # NOTE: both dicts below are keyed by surface form alone, so two
            # clusters that share a surface form (e.g. two different
            # "Dr. Smith") overwrite each other; a per-cluster ID is needed
            # to keep them apart downstream
            canonical_entities[canonical_form] = {
                'canonical_name': canonical_form,
                'type': cluster_members[0]['type'],
                'variant_forms': list(set(surface_forms)),
                'occurrences': len(cluster_members),
                'contexts': [e['context'] for e in cluster_members[:5]]  # Sample contexts
            }

            # Map all variants to canonical form
            for variant in surface_forms:
                self.canonical_map[variant] = canonical_form

        return canonical_entities

    def get_canonical_form(self, surface_form):
        """Get canonical entity name for any surface form"""
        return self.canonical_map.get(surface_form, surface_form)
```

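This code handles entity resolution through the following mechanisms:

- Context-aware extraction: captures the surrounding context as well as the entity name

- Feature-based disambiguation: uses features such as title, specialty, and dates to tell apart entities that share a name

- Clustering with a configurable threshold: uses DBSCAN to group variant forms

- Canonical mapping: gives graph construction a single identifier per entity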
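The scoring and clustering rules can be checked by hand on the "Dr. Smith" case. The sketch below re-implements the same scoring formula with the standard library only, then groups mentions by linking every pair whose similarity reaches the threshold. That linking step is an assumption-level stand-in: with `min_samples=1` and zero self-distance, DBSCAN should behave like connected components over the eps-neighborhood graph, but this union-find version is a simplification for illustration, not the production code. The three mentions are made up.

```python
from difflib import SequenceMatcher


def similarity(e1, e2):
    """Same scoring rule as compute_entity_similarity in the article's code."""
    a, b = e1["surface_form"].lower(), e2["surface_form"].lower()
    score = 0.9 if a == b else SequenceMatcher(None, a, b).ratio()
    if e1["type"] != e2["type"]:
        score *= 0.3  # type mismatch penalty
    shared = set(e1.get("features", {})) & set(e2.get("features", {}))
    if shared:
        match = sum(e1["features"][k] == e2["features"][k] for k in shared) / len(shared)
        score = 0.7 * score + 0.3 * match
    return score


def cluster(mentions, threshold=0.75):
    """Link every pair with similarity >= threshold (union-find)."""
    parent = list(range(len(mentions)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(len(mentions)):
        for j in range(i + 1, len(mentions)):
            if similarity(mentions[i], mentions[j]) >= threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(mentions)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


mentions = [
    {"surface_form": "Dr. Smith", "type": "Person", "features": {"specialty": "oncology"}},
    {"surface_form": "Dr. Smith", "type": "Person", "features": {"specialty": "cardiology"}},
    {"surface_form": "Dr. Smith", "type": "Person", "features": {"specialty": "oncology"}},
]
same_specialty = similarity(mentions[0], mentions[2])   # 0.7 * 0.9 + 0.3 * 1.0
diff_specialty = similarity(mentions[0], mentions[1])   # 0.7 * 0.9 + 0.3 * 0.0
clusters = cluster(mentions)
print(round(same_specialty, 2), round(diff_specialty, 2), clusters)
```

With the default threshold of 0.75, identical names with conflicting features stay apart, while identical names with matching features merge. Because linking is transitive, one borderline pair can chain two otherwise distinct groups together, which is a reason to tune the threshold on labeled data.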

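Triple Extraction and Graph Construction: Building the Logical Links

Once entities are resolved, the next step is to extract the relationships between them and build the knowledge graph: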

```python
import json
from collections import deque

import networkx as nx

# Reuses `client` (the Anthropic client) defined in the entity-resolution code above


class GraphConstructor:
    """Build knowledge graph with resolved entities"""

    def __init__(self, entity_resolver):
        self.resolver = entity_resolver
        self.graph = nx.MultiDiGraph()
        self.entity_to_chunks = {}

    def extract_relationships(self, text, entities_in_chunk):
        """Extract relationships between resolved entities"""
        # Get canonical forms
        canonical_entities = [
            self.resolver.get_canonical_form(e['surface_form'])
            for e in entities_in_chunk
        ]
        if len(canonical_entities) < 2:
            return []

        prompt = f"""Given these entities: {', '.join(canonical_entities)}

Analyze this text and extract relationships:

Text: {text}

Return JSON array of relationships:
[
  {{
    "source": "Entity A",
    "relation": "employed_by",
    "target": "Entity B",
    "evidence": "specific sentence showing relationship",
    "confidence": 0.95
  }}
]

Only extract relationships explicitly stated in the text."""
        response = client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=1500,
            messages=[{"role": "user", "content": prompt}]
        )
        relationships = json.loads(response.content[0].text)

        # Canonicalize entity names in relationships
        for rel in relationships:
            rel['source'] = self.resolver.get_canonical_form(rel['source'])
            rel['target'] = self.resolver.get_canonical_form(rel['target'])
        return relationships

    def add_to_graph(self, chunk_id, chunk_text, entities, relationships):
        """Add entities and relationships to graph"""
        # Add entity nodes
        for entity in entities:
            canonical = self.resolver.get_canonical_form(entity['surface_form'])
            if canonical not in self.graph:
                self.graph.add_node(
                    canonical,
                    type=entity['type'],
                    contexts=[],
                    chunk_ids=[]
                )

            # Track which chunks mention this entity
            if canonical not in self.entity_to_chunks:
                self.entity_to_chunks[canonical] = []
            self.entity_to_chunks[canonical].append(chunk_id)

            # Add context
            self.graph.nodes[canonical]['contexts'].append(entity['context'])
            self.graph.nodes[canonical]['chunk_ids'].append(chunk_id)

        # Add relationship edges (only between entities already in the graph)
        for rel in relationships:
            if rel['source'] in self.graph and rel['target'] in self.graph:
                self.graph.add_edge(
                    rel['source'],
                    rel['target'],
                    relation=rel['relation'],
                    evidence=rel['evidence'],
                    confidence=rel.get('confidence', 0.8),
                    chunk_id=chunk_id
                )

    def get_entity_neighborhood(self, entity_name, hops=2):
        """Get N-hop neighborhood for an entity"""
        canonical = self.resolver.get_canonical_form(entity_name)
        if canonical not in self.graph:
            return None

        # BFS to collect neighborhood
        visited = set()
        queue = deque([(canonical, 0)])
        neighborhood = {
            'nodes': [],
            'edges': [],
            'chunks': set()
        }

        while queue:
            node, depth = queue.popleft()
            if node in visited or depth > hops:
                continue
            visited.add(node)

            # Add node data
            node_data = self.graph.nodes[node]
            neighborhood['nodes'].append({
                'name': node,
                'type': node_data['type'],
                'chunks': node_data.get('chunk_ids', [])
            })

            # Add outgoing edges (MultiDiGraph: one entry per parallel edge)
            for neighbor in self.graph.neighbors(node):
                edge_data = self.graph.get_edge_data(node, neighbor)
                for key, attrs in edge_data.items():
                    neighborhood['edges'].append({
                        'source': node,
                        'target': neighbor,
                        'relation': attrs['relation'],
                        'evidence': attrs['evidence']
                    })
                    neighborhood['chunks'].add(attrs.get('chunk_id'))

                if depth < hops:
                    queue.append((neighbor, depth + 1))

        return neighborhood
```

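This component addresses three key challenges:

- Relation extraction with evidence tracking: only relationships stated explicitly in the document are extracted, and the evidence sentence is kept

- Multi-hop graph traversal: supports reasoning along paths that span several relationships

- Provenance: tracks the source chunk of every entity and relationship for later citation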
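As a rough illustration of how the stored evidence can be surfaced as citations, the sketch below turns a neighborhood dictionary (same shape as the one returned by `get_entity_neighborhood`) into numbered lines. The helper `format_evidence` and the sample data are hypothetical and only show one possible formatting.

```python
def format_evidence(neighborhood, max_items=10):
    """Turn neighborhood edges into numbered, de-duplicated citation lines."""
    seen = set()
    lines = []
    for edge in neighborhood["edges"]:
        key = (edge["source"], edge["relation"], edge["target"])
        if key in seen:  # parallel edges can repeat the same triple
            continue
        seen.add(key)
        lines.append(
            f"[{len(lines) + 1}] {edge['source']} -[{edge['relation']}]-> "
            f"{edge['target']} (evidence: {edge['evidence']})"
        )
        if len(lines) == max_items:
            break
    return lines


# Sample data in the shape produced by get_entity_neighborhood (values made up)
neighborhood = {
    "nodes": [],
    "chunks": {"doc1_chunk_0", "doc2_chunk_3"},
    "edges": [
        {"source": "Product A", "relation": "uses", "target": "Component X",
         "evidence": "Product A uses Component X."},
        {"source": "Component X", "relation": "requires", "target": "Certification Y",
         "evidence": "Component X requires Certification Y."},
        {"source": "Product A", "relation": "uses", "target": "Component X",
         "evidence": "Product A uses Component X."},  # duplicate triple
        {"source": "Certification Y", "relation": "expires_after", "target": "2 years",
         "evidence": "Certification Y expires after 2 years."},
    ],
}
citations = format_evidence(neighborhood)
print("\n".join(citations))
```

Lines like these can be placed in the answer prompt so that each step of a multi-hop answer points back to a sentence in the source documents.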

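Hierarchical Community Detection: Understanding Global Themes

GraphRAG uses the Leiden community detection algorithm to cluster densely connected entities into thematic groups, so the system can understand global themes that span documents. Note that the implementation below calls the Louvain algorithm from the python-louvain package (`community_louvain`), a closely related modularity-based method, rather than Leiden itself: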

```python
from collections import defaultdict

import networkx as nx
from community import community_louvain  # provided by the python-louvain package

# Reuses `client` (the Anthropic client) defined in the entity-resolution code above


class CommunityAnalyzer:
    """Detect and summarize communities in knowledge graph"""

    def __init__(self, graph):
        self.graph = graph
        self.communities = {}
        self.summaries = {}

    def detect_communities(self):
        """Apply the Louvain algorithm for community detection"""
        # Collapse the MultiDiGraph into a simple undirected graph so the
        # partitioner sees one edge per connected pair
        undirected = nx.Graph(self.graph.to_undirected())

        # Detect communities using Louvain algorithm
        partition = community_louvain.best_partition(undirected)

        # Group entities by community
        communities = defaultdict(list)
        for entity, comm_id in partition.items():
            communities[comm_id].append(entity)

        self.communities = dict(communities)
        return self.communities

    def summarize_community(self, community_id, entities):
        """Generate natural language summary of community"""
        # Collect all relationships within community
        internal_edges = []
        for source in entities:
            for target in entities:
                if self.graph.has_edge(source, target):
                    edge_data = self.graph.get_edge_data(source, target)
                    for key, attrs in edge_data.items():
                        internal_edges.append({
                            'source': source,
                            'relation': attrs['relation'],
                            'target': target
                        })

        # Collect entity types
        entity_info = []
        for entity in entities:
            node_data = self.graph.nodes[entity]
            entity_info.append(f"{entity} ({node_data['type']})")

        # Cap the prompt at the first 20 relationships
        edge_lines = "\n".join(
            f"- {e['source']} {e['relation']} {e['target']}"
            for e in internal_edges[:20]
        )
        prompt = f"""Summarize this knowledge community:

Community {community_id}:
Entities: {', '.join(entity_info)}

Key Relationships:
{edge_lines}

Provide a 2-3 sentence summary describing:
1. The main theme connecting these entities
2. The domain or topic area
3. Key relationships and patterns"""
        response = client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=500,
            messages=[{"role": "user", "content": prompt}]
        )
        summary = response.content[0].text

        self.summaries[community_id] = {
            'summary': summary,
            'size': len(entities),
            'entities': entities,
            'edge_count': len(internal_edges)
        }
        return summary

    def build_hierarchical_summaries(self):
        """Generate multi-level summaries"""
        communities = self.detect_communities()

        # Level 1: Individual community summaries
        for comm_id, entities in communities.items():
            self.summarize_community(comm_id, entities)

        # Level 2: Meta-communities (cluster of communities)
        if len(communities) > 5:
            # Build community similarity graph
            comm_similarity = nx.Graph()
            for c1 in communities:
                for c2 in communities:
                    if c1 >= c2:
                        continue
                    # Measure inter-community edges
                    cross_edges = sum(
                        1
                        for e1 in communities[c1]
                        for e2 in communities[c2]
                        if self.graph.has_edge(e1, e2) or self.graph.has_edge(e2, e1)
                    )
                    if cross_edges > 0:
                        comm_similarity.add_edge(c1, c2, weight=cross_edges)

            # Detect meta-communities
            meta_partition = community_louvain.best_partition(comm_similarity)
            meta_communities = defaultdict(list)
            for comm_id, meta_id in meta_partition.items():
                meta_communities[meta_id].append(comm_id)

            # Summarize meta-communities
            for meta_id, community_ids in meta_communities.items():
                all_summaries = [self.summaries[cid]['summary'] for cid in community_ids]
                summary_lines = "\n".join(
                    f"Community {i}: {s}"
                    for i, s in zip(community_ids, all_summaries)
                )
                meta_prompt = f"""Synthesize these related community summaries into a high-level theme:

{summary_lines}

Provide a 2-3 sentence synthesis."""
                response = client.messages.create(
                    model="claude-sonnet-4-20250514",
                    max_tokens=400,
                    messages=[{"role": "user", "content": meta_prompt}]
                )
                self.summaries[f"meta_{meta_id}"] = {
                    'summary': response.content[0].text,
                    'sub_communities': community_ids,
                    'type': 'meta'
                }

        return self.summaries
```

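This hierarchy lets the system answer theme-level questions such as "Which compliance issues recur across our audit reports?" by synthesizing at the level of community summaries instead of relying on individual text chunks.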
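The article does not show the global-search step itself, so the following is only a hedged sketch of one way it might work: pick the community summaries that overlap most with the question and pack them into a single synthesis prompt. The helpers `select_summaries` and `build_global_prompt`, the keyword-overlap ranking, and the sample summaries are all assumptions for illustration; a real system would more likely rank by embeddings or ask the LLM to score each summary.

```python
import re


def tokens(text):
    """Lowercased word set used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_summaries(summaries, query, top_n=2):
    """Rank community summaries by word overlap with the query, then by size."""
    query_tokens = tokens(query)
    scored = []
    for key, info in summaries.items():
        overlap = len(query_tokens & tokens(info["summary"]))
        if overlap:
            # str(key) keeps the sort stable when int and "meta_N" keys are mixed
            scored.append((-overlap, -info.get("size", 0), str(key), key))
    scored.sort(key=lambda item: item[:3])
    return [item[3] for item in scored[:top_n]]


def build_global_prompt(summaries, selected, query):
    """Assemble the synthesis prompt from the selected summaries."""
    body = "\n".join(f"Community {key}: {summaries[key]['summary']}" for key in selected)
    return f"Question: {query}\n\nCommunity summaries:\n{body}\n\nAnswer using only these summaries."


# Made-up summaries in the same shape as CommunityAnalyzer.summaries
summaries = {
    0: {"summary": "Access control reviews were missing or late in several audit reports.", "size": 12},
    1: {"summary": "Vendor contracts and renewal dates for the procurement team.", "size": 7},
    2: {"summary": "Audit findings on data retention policy violations recur across business units.", "size": 9},
}
query = "Which compliance issues recur across our audit reports?"
selected = select_summaries(summaries, query)
prompt = build_global_prompt(summaries, selected, query)
print(prompt)
```

The point of the sketch is the unit of retrieval: the prompt is built from summaries that each already condense many documents, not from a handful of raw chunks.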

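The Core Production Challenge: Keeping Three Indexes in Sync

A key challenge that most tutorials leave out is that a GraphRAG system has to keep three different indexes in sync: a text index (exact match), a vector index (embeddings), and a structural index (graph patterns). When a document changes, all three must be updated atomically, and this synchronization logic is a common cause of production failures.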

```python
import sqlite3
import time
from dataclasses import dataclass

import faiss
import numpy as np


@dataclass
class DocumentVersion:
    """Track document versions for consistent updates"""
    doc_id: str
    version: int
    chunk_ids: list
    entity_ids: list
    update_timestamp: float


class SynchronizedIndexManager:
    """Manage synchronized updates across text, vector, and graph indexes"""

    def __init__(self, db_path="graphrag.db"):
        # Text index (SQLite FTS5)
        self.text_conn = sqlite3.connect(db_path)
        self.text_conn.execute("""
            CREATE VIRTUAL TABLE IF NOT EXISTS chunks_fts
            USING fts5(chunk_id, text, doc_id)
        """)

        # Vector index (FAISS)
        self.vector_dim = 1536  # text-embedding-3-small dimension
        self.vector_index = faiss.IndexFlatL2(self.vector_dim)
        self.chunk_id_to_vector_idx = {}

        # Graph index (NetworkX persisted)
        self.graph_constructor = None  # Will be injected

        # Version tracking
        self.versions = {}

    def atomic_update(self, doc_id, new_chunks, new_embeddings):
        """Update all three indexes for one document.

        NOTE: only the SQLite changes are rolled back on failure; the FAISS
        index and the graph may keep partial changes.
        """
        version = self.versions.get(doc_id, DocumentVersion(doc_id, 0, [], [], 0))
        new_version = version.version + 1
        graph = self.graph_constructor.graph

        try:
            # Step 1: Remove old data
            if version.chunk_ids:
                # Remove from text index
                placeholders = ','.join('?' * len(version.chunk_ids))
                self.text_conn.execute(
                    f"DELETE FROM chunks_fts WHERE chunk_id IN ({placeholders})",
                    version.chunk_ids
                )

                # Vectors are not removed here (rebuild the index periodically);
                # dropping the mapping keeps stale hits out of query results
                for chunk_id in version.chunk_ids:
                    self.chunk_id_to_vector_idx.pop(chunk_id, None)

                # Remove from graph (disconnect old entities)
                for entity_id in version.entity_ids:
                    if graph.has_node(entity_id):
                        # Keep node but remove edges from this doc
                        edges_to_remove = [
                            (u, v, k)
                            for u, v, k, d in graph.edges(entity_id, keys=True, data=True)
                            if d.get('chunk_id') in version.chunk_ids
                        ]
                        for u, v, k in edges_to_remove:
                            graph.remove_edge(u, v, k)

            # Step 2: Add new data
            new_chunk_ids = []
            new_entity_ids = []
            for i, (chunk_text, embedding) in enumerate(zip(new_chunks, new_embeddings)):
                chunk_id = f"{doc_id}_chunk_{new_version}_{i}"
                new_chunk_ids.append(chunk_id)

                # Add to text index
                self.text_conn.execute(
                    "INSERT INTO chunks_fts VALUES (?, ?, ?)",
                    (chunk_id, chunk_text, doc_id)
                )

                # Add to vector index (FAISS expects float32 rows)
                vector_idx = self.vector_index.ntotal
                self.vector_index.add(
                    np.asarray(embedding, dtype='float32').reshape(1, -1)
                )
                self.chunk_id_to_vector_idx[chunk_id] = vector_idx

                # Extract and add to graph
                entities = self.graph_constructor.resolver.extract_entities_with_context(
                    chunk_text, chunk_id
                )
                relationships = self.graph_constructor.extract_relationships(
                    chunk_text, entities
                )
                self.graph_constructor.add_to_graph(
                    chunk_id, chunk_text, entities, relationships
                )
                new_entity_ids.extend([
                    self.graph_constructor.resolver.get_canonical_form(e['surface_form'])
                    for e in entities
                ])

            # Step 3: Commit transaction
            self.text_conn.commit()

            # Update version tracking
            self.versions[doc_id] = DocumentVersion(
                doc_id, new_version, new_chunk_ids,
                list(set(new_entity_ids)), time.time()
            )
            return True

        except Exception as e:
            # Rollback on failure (SQLite only, see docstring)
            self.text_conn.rollback()
            print(f"Update failed: {e}")
            return False

    def query_all_indexes(self, query_text, query_embedding, k=5):
        """Query across all three indexes with fusion"""
        results = {
            'text_matches': [],
            'vector_matches': [],
            'graph_matches': []
        }

        # Text search (keyword)
        cursor = self.text_conn.execute(
            "SELECT chunk_id, text FROM chunks_fts WHERE chunks_fts MATCH ? LIMIT ?",
            (query_text, k)
        )
        results['text_matches'] = [
            {'chunk_id': row[0], 'text': row[1], 'score': 1.0}
            for row in cursor.fetchall()
        ]

        # Vector search (semantic)
        if self.vector_index.ntotal > 0:
            distances, indices = self.vector_index.search(
                np.asarray(query_embedding, dtype='float32').reshape(1, -1), k
            )
            reverse_map = {idx: cid for cid, idx in self.chunk_id_to_vector_idx.items()}
            results['vector_matches'] = [
                {
                    'chunk_id': reverse_map[int(idx)],
                    'score': 1 / (1 + float(dist))
                }
                for dist, idx in zip(distances[0], indices[0])
                if int(idx) in reverse_map  # skips padding (-1) and stale vectors
            ]

        # Graph search (entities mentioned in query)
        query_entities = self.graph_constructor.resolver.extract_entities_with_context(
            query_text, "query"
        )
        for entity in query_entities:
            canonical = self.graph_constructor.resolver.get_canonical_form(
                entity['surface_form']
            )
            neighborhood = self.graph_constructor.get_entity_neighborhood(
                canonical, hops=2
            )
            if neighborhood:
                results['graph_matches'].extend([
                    {'chunk_id': chunk_id, 'score': 0.9, 'entity': canonical}
                    for chunk_id in neighborhood['chunks']
                ])

        # Fusion: combine scores
        weights = {'text_matches': 0.2, 'vector_matches': 0.4, 'graph_matches': 0.4}
        all_chunks = {}
        for source, matches in results.items():
            for match in matches:
                chunk_id = match['chunk_id']
                score = match['score'] * weights[source]
                if chunk_id not in all_chunks:
                    all_chunks[chunk_id] = {
                        'chunk_id': chunk_id,
                        'total_score': 0,
                        'sources': []
                    }
                all_chunks[chunk_id]['total_score'] += score
                all_chunks[chunk_id]['sources'].append(source)

        # Sort by fused score
        ranked = sorted(
            all_chunks.values(),
            key=lambda x: x['total_score'],
            reverse=True
        )
        return ranked[:k]
```
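The fusion step at the end of `query_all_indexes` is easy to verify by hand. The sketch below isolates it with the same weights (0.2 text, 0.4 vector, 0.4 graph) and runs it on made-up matches; the standalone function `fuse` and the sample scores are illustrative only.

```python
WEIGHTS = {"text_matches": 0.2, "vector_matches": 0.4, "graph_matches": 0.4}


def fuse(results, k=5):
    """Weighted-sum fusion: add weight * score per source for each chunk."""
    fused = {}
    for source, matches in results.items():
        for match in matches:
            entry = fused.setdefault(
                match["chunk_id"],
                {"chunk_id": match["chunk_id"], "total_score": 0.0, "sources": []},
            )
            entry["total_score"] += match["score"] * WEIGHTS[source]
            entry["sources"].append(source)
    return sorted(fused.values(), key=lambda e: e["total_score"], reverse=True)[:k]


# Made-up matches from the three indexes
results = {
    "text_matches": [{"chunk_id": "c1", "score": 1.0}],
    "vector_matches": [{"chunk_id": "c1", "score": 0.5}, {"chunk_id": "c2", "score": 0.8}],
    "graph_matches": [{"chunk_id": "c3", "score": 0.9}, {"chunk_id": "c2", "score": 0.9}],
}
ranked = fuse(results)
# c2: 0.4*0.8 + 0.4*0.9 = 0.68, c1: 0.2*1.0 + 0.4*0.5 = 0.40, c3: 0.4*0.9 = 0.36
for entry in ranked:
    print(entry["chunk_id"], round(entry["total_score"], 2), entry["sources"])
```

A chunk found by two indexes outranks one found by a single index even when the single hit scores higher. One caveat of this scheme: if several query entities reach the same chunk, that chunk is added to `graph_matches` more than once and its score grows accordingly, so de-duplicating per source may be worth considering.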

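In production, this component combines a search engine, an ETL pipeline, and a graph analytics system, which is a long way from a simple "RAG demo with an LLM".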
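For the in-memory indexes, one possible way to approximate all-or-nothing updates is to stage every change on copies and swap them in only after the last step succeeds. The sketch below uses plain dictionaries as stand-ins for the vector map and the graph; the class `StagedIndexes`, its fields, and the `extract` callback are hypothetical and much simpler than FAISS or NetworkX, so treat it as an outline of the idea under those assumptions.

```python
class StagedIndexes:
    """Toy stand-in for the vector map and graph: plain dicts (assumption)."""

    def __init__(self):
        self.vector_map = {}   # chunk_id -> embedding (list of floats)
        self.graph_edges = {}  # chunk_id -> list of (source, relation, target)

    def update(self, doc_id, version, old_chunk_ids, new_chunks, extract):
        # Work on shallow copies so a failure leaves the live state untouched
        staged_vectors = dict(self.vector_map)
        staged_edges = dict(self.graph_edges)

        for chunk_id in old_chunk_ids:
            staged_vectors.pop(chunk_id, None)
            staged_edges.pop(chunk_id, None)

        new_ids = []
        for i, (embedding, text) in enumerate(new_chunks):
            chunk_id = f"{doc_id}_chunk_{version}_{i}"
            staged_vectors[chunk_id] = embedding
            staged_edges[chunk_id] = extract(text)  # may raise (e.g. LLM error)
            new_ids.append(chunk_id)

        # Swap only after every step succeeded
        self.vector_map, self.graph_edges = staged_vectors, staged_edges
        return new_ids


def failing_extract(text):
    raise RuntimeError("relation extraction failed")


indexes = StagedIndexes()
first_ids = indexes.update(
    "doc1", 1, [], [([0.1, 0.2], "A uses B")], lambda text: [("A", "uses", "B")]
)
try:
    indexes.update("doc1", 2, first_ids, [([0.3, 0.4], "A uses C")], failing_extract)
except RuntimeError:
    pass  # the failed update must not have changed anything
print(sorted(indexes.vector_map), indexes.graph_edges)
```

Copying whole indexes does not scale to large graphs, so a real system would more likely stage per-document deltas, but the commit-at-the-end structure is the part that matters.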

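Cost-Benefit Analysis: When to Choose GraphRAG

From an engineering standpoint, the question is whether GraphRAG's incremental value over traditional RAG justifies its additional architectural complexity and cost.

When GraphRAG is not a good fit

- Simple FAQ retrieval

- Single-document Q&A

- Latency-sensitive real-time chat

- Projects with a monthly budget under CNY 5,000

When GraphRAG is necessary

- Multi-hop reasoning across documents

- Scenarios where entity disambiguation is business-critical (compliance, healthcare, legal)

- Users ask theme-level questions ("What are the patterns?")

- Wrong answers carry legal or financial consequences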

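Production Data

| Metric | GraphRAG | Traditional vector RAG |
|---|---|---|
| Multi-hop question accuracy | 85-95% | 40-60% |
| Indexing cost per 1,000 documents (unit not stated) | 2.00 | 0.10 |
| Cost per global-search query (unit not stated) | 0.05 | 0.01 |
| Time to production-grade implementation | 2-4 weeks | 1-3 days |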

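Figures from our 1,500-document system in financial services:

- Indexing cost: USD 7.13 (one-time)

- Query cost: local search [value missing in source] per query, global search 0.02 per query

- Return on investment: when a single error costs USD 50,000 in audit expenses, preventing 2-3 errors is enough to cover the cost of the system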
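The arithmetic behind these figures can be spelled out in a few lines. The inputs below come from the list above; the break-even range is only what the "2-3 errors" statement implies, since the article does not state the total system cost.

```python
# Inputs taken from the figures above
index_cost_usd = 7.13      # one-time indexing cost
documents = 1500
error_cost_usd = 50_000    # audit cost of a single wrong answer

# Indexing cost per document
cost_per_doc = index_cost_usd / documents
print(f"Indexing cost per document: ${cost_per_doc:.4f}")

# "Preventing 2-3 errors covers the system" implies a total system cost
# somewhere in this range (an inference, not a number from the article)
break_even_low = 2 * error_cost_usd
break_even_high = 3 * error_cost_usd
print(f"Implied system cost: ${break_even_low:,} to ${break_even_high:,}")
```

The indexing cost per document works out to about $0.0048, so the LLM indexing bill is negligible next to the implied engineering cost of the system.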

Production Deployment Architecture and Key Metrics

The architecture of a production-grade GraphRAG system looks like this:

```text
┌─────────────────────────────────────────────┐
│             Document Ingestion              │
│   (PDF, DOCX, HTML → Raw Text + Metadata)   │
└──────────────────┬──────────────────────────┘
                   │
                   ▼
┌─────────────────────────────────────────────┐
│          Chunking + Preprocessing           │
│   (Semantic chunking, overlap, metadata)    │
└──────────────────┬──────────────────────────┘
                   │
                   ▼
┌─────────────────────────────────────────────┐
│         Entity Resolution Pipeline          │
│   (Extract → Disambiguate → Canonicalize)   │
└──────────────────┬──────────────────────────┘
                   │
        ┌──────────┴──────────┐
        │                     │
        ▼                     ▼
 ┌─────────────┐     ┌─────────────────┐
 │ Text Index  │     │  Vector Index   │
 │  (SQLite)   │     │     (FAISS)     │
 └─────────────┘     └─────────────────┘
        │                     │
        └──────────┬──────────┘
                   │
                   ▼
┌─────────────────────────────────────────────┐
│             Graph Construction              │
│   (NetworkX + Entity/Relation Extraction)   │
└──────────────────┬──────────────────────────┘
                   │
                   ▼
┌─────────────────────────────────────────────┐
│             Community Detection             │
│      (Leiden Algorithm + Hierarchical)      │
└──────────────────┬──────────────────────────┘
                   │
                   ▼
┌─────────────────────────────────────────────┐
│             Summary Generation              │
│     (LLM generates community summaries)     │
└──────────────────┬──────────────────────────┘
                   │
                   ▼
┌─────────────────────────────────────────────┐
│               Query Interface               │
│   Local Search (entity) / Global (themes)   │
└─────────────────────────────────────────────┘
```
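The last box in the diagram splits queries into local (entity) and global (theme) search, but the article does not say how a query is routed. As a purely hypothetical illustration, the simplest rule is to check whether the query mentions any known canonical entity; the function `route_query` and the entity list are assumptions, and a production router would more plausibly use the entity extractor or an LLM classifier.

```python
def route_query(query, known_entities):
    """Return ("local", matched_entities) if the query names a known entity,
    otherwise ("global", [])."""
    lowered = query.lower()
    hits = [entity for entity in known_entities if entity.lower() in lowered]
    return ("local", hits) if hits else ("global", [])


# Hypothetical canonical entity names taken from the earlier example
known_entities = ["Product A", "Component X", "Certification Y"]

local_route = route_query("How often do we need to recertify Product A?", known_entities)
global_route = route_query("Which compliance issues recur across our audit reports?", known_entities)
print(local_route)
print(global_route)
```

Local queries would then go through the entity-neighborhood lookup, and global ones through the community summaries.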

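Key Performance Indicators

| Category | Metric | Production target | Observed in practice |
|---|---|---|---|
| Accuracy | Multi-hop reasoning accuracy | >85% | 91% |
| Accuracy | Entity disambiguation accuracy | >90% | 94% |
| Accuracy | Hallucination rate (per 100 queries) | <2% | 0.8% |
| Cost | Average cost per query | <$0.05 | $0.008 (local) |
| Cost | Indexing cost per document | <$0.01 | $0.0048 |
| Performance | P95 query latency (local / global) | <3s | 1.8s / 4.2s |
| Performance | Indexing throughput (documents/hour) | >500 | 680 |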
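A table like this is most useful when it is checked automatically. The sketch below encodes the targets and observed values from the table and reports which metrics miss their target. The metric names are made up for the example, and applying the single "<3s" latency target to both local and global search is an assumption, since the table gives one target for the pair.

```python
import operator

# (comparison, limit) per metric, taken from the "Production target" column
TARGETS = {
    "multi_hop_accuracy_pct": (operator.gt, 85),
    "entity_disambiguation_pct": (operator.gt, 90),
    "hallucination_rate_pct": (operator.lt, 2),
    "avg_query_cost_usd": (operator.lt, 0.05),
    "index_cost_per_doc_usd": (operator.lt, 0.01),
    "p95_latency_local_s": (operator.lt, 3),
    "p95_latency_global_s": (operator.lt, 3),   # assumption: same target as local
    "index_throughput_docs_per_hour": (operator.gt, 500),
}

# Values from the "Observed in practice" column
OBSERVED = {
    "multi_hop_accuracy_pct": 91,
    "entity_disambiguation_pct": 94,
    "hallucination_rate_pct": 0.8,
    "avg_query_cost_usd": 0.008,
    "index_cost_per_doc_usd": 0.0048,
    "p95_latency_local_s": 1.8,
    "p95_latency_global_s": 4.2,
    "index_throughput_docs_per_hour": 680,
}


def failing_metrics(observed, targets):
    """Return the names of metrics whose observed value misses the target."""
    return [
        name
        for name, (compare, limit) in targets.items()
        if not compare(observed[name], limit)
    ]


failing = failing_metrics(OBSERVED, TARGETS)
print("Metrics missing target:", failing)
```

Under that assumption, global search latency (4.2s) is the one figure in the table that does not meet its target, which matches the earlier advice against using GraphRAG for latency-sensitive chat.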

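Conclusion: A Rational Choice Beyond the Hype

GraphRAG is not a silver bullet; it is a complex architecture aimed at specific scenarios. It pays off only when understanding relationships and multi-hop reasoning matter more than simplicity.

What is truly exciting is not the technology itself but the system's ability to answer questions that used to be out of reach. When a user asks "What organizational patterns exist in our compliance violations?" and GraphRAG can synthesize themes from 10,000 documents, the investment makes sense.

Do not build GraphRAG because it is trendy. Build it because:

- Your users ask questions that vector similarity cannot answer

- Entity relationships are critical in your domain

- Wrong answers have serious consequences

Start small:

- First test entity resolution and bring its accuracy to 90% or higher

- Then build the graph

- Then add community detection

- Finally, deploy with monitoring in place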
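The first step needs a way to measure entity resolution quality. The article does not define its accuracy metric, so the sketch below uses pairwise precision and recall against hand-labeled mentions, which is one common choice and an assumption here. The labels are made up.

```python
from itertools import combinations


def same_cluster_pairs(labels):
    """All index pairs (i, j), i < j, that share a cluster label."""
    return {
        (i, j)
        for i, j in combinations(range(len(labels)), 2)
        if labels[i] == labels[j]
    }


def pairwise_scores(predicted, gold):
    """Pairwise precision and recall of predicted clusters against gold labels."""
    predicted_pairs = same_cluster_pairs(predicted)
    gold_pairs = same_cluster_pairs(gold)
    true_positives = len(predicted_pairs & gold_pairs)
    precision = true_positives / len(predicted_pairs) if predicted_pairs else 1.0
    recall = true_positives / len(gold_pairs) if gold_pairs else 1.0
    return precision, recall


# Five "Dr. Smith" mentions: gold identity vs. cluster ID assigned by the resolver
gold = ["sarah", "michael", "sarah", "sarah", "michael"]
predicted = [0, 1, 0, 1, 1]   # mention 3 was wrongly grouped with Michael

precision, recall = pairwise_scores(predicted, gold)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision means different people are being merged, and low recall means one person is being split; in compliance settings the first kind of error is usually the more dangerous one.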

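Most important of all: measure everything. GraphRAG without metrics is just an expensive experiment. When accuracy jumps from 43% to 91%, cost drops by 97%, and there are zero hallucinations over two months, those numbers are the strongest evidence you can put in front of decision-makers.

In high-risk domains such as finance and healthcare, where wrong answers carry legal consequences, GraphRAG is not optional but necessary. Its complexity exists to match the complexity of the real world.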

普通人如何抓住AI大模型的风口?

领取方式在文末

为什么要学习大模型?

目前AI大模型的技术岗位与能力培养随着人工智能技术的迅速发展和应用 , 大模型作为其中的重要组成部分 , 正逐渐成为推动人工智能发展的重要引擎 。大模型以其强大的数据处理和模式识别能力, 广泛应用于自然语言处理 、计算机视觉 、 智能推荐等领域 ,为各行各业带来了革命性的改变和机遇 。

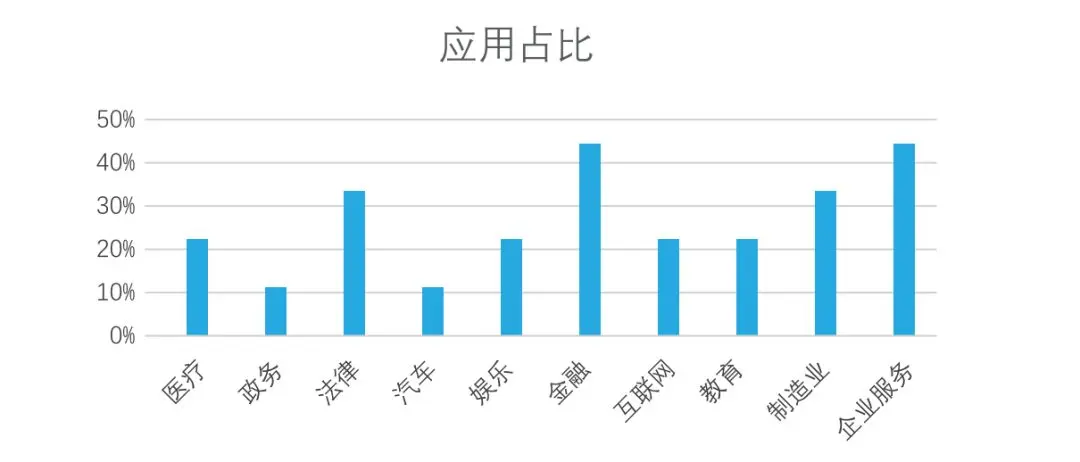

目前,开源人工智能大模型已应用于医疗、政务、法律、汽车、娱乐、金融、互联网、教育、制造业、企业服务等多个场景,其中,应用于金融、企业服务、制造业和法律领域的大模型在本次调研中占比超过 30%。

随着AI大模型技术的迅速发展,相关岗位的需求也日益增加。大模型产业链催生了一批高薪新职业:

人工智能大潮已来,不加入就可能被淘汰。如果你是技术人,尤其是互联网从业者,现在就开始学习AI大模型技术,真的是给你的人生一个重要建议!

最后

只要你真心想学习AI大模型技术,这份精心整理的学习资料我愿意无偿分享给你,但是想学技术去乱搞的人别来找我!

在当前这个人工智能高速发展的时代,AI大模型正在深刻改变各行各业。我国对高水平AI人才的需求也日益增长,真正懂技术、能落地的人才依旧紧缺。我也希望通过这份资料,能够帮助更多有志于AI领域的朋友入门并深入学习。

真诚无偿分享!!!

vx扫描下方二维码即可

加上后会一个个给大家发

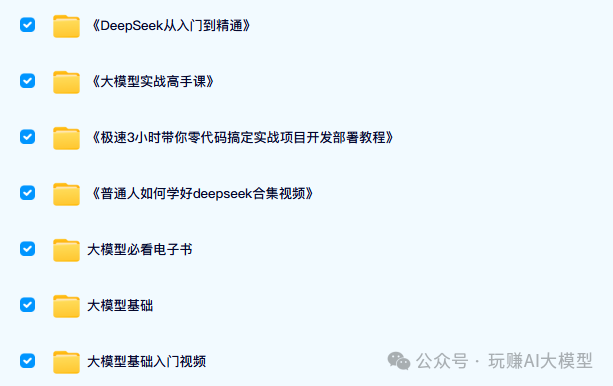

大模型全套学习资料展示

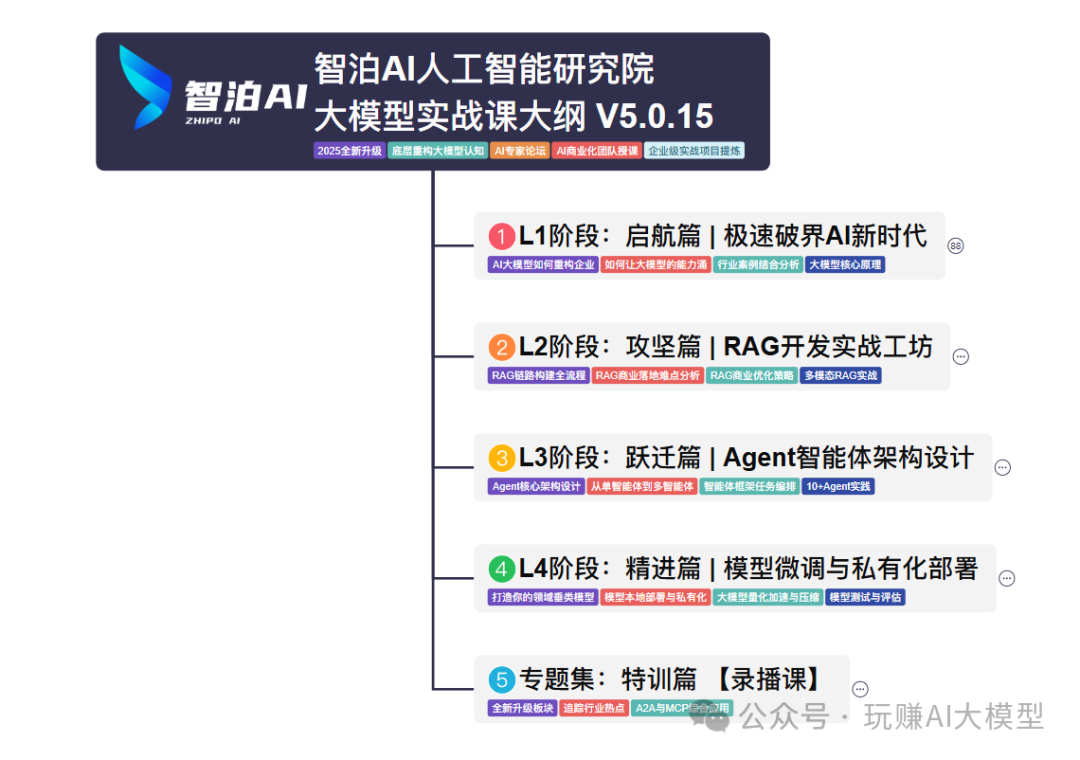

自我们与MoPaaS魔泊云合作以来,我们不断打磨课程体系与技术内容,在细节上精益求精,同时在技术层面也新增了许多前沿且实用的内容,力求为大家带来更系统、更实战、更落地的大模型学习体验。

希望这份系统、实用的大模型学习路径,能够帮助你从零入门,进阶到实战,真正掌握AI时代的核心技能!

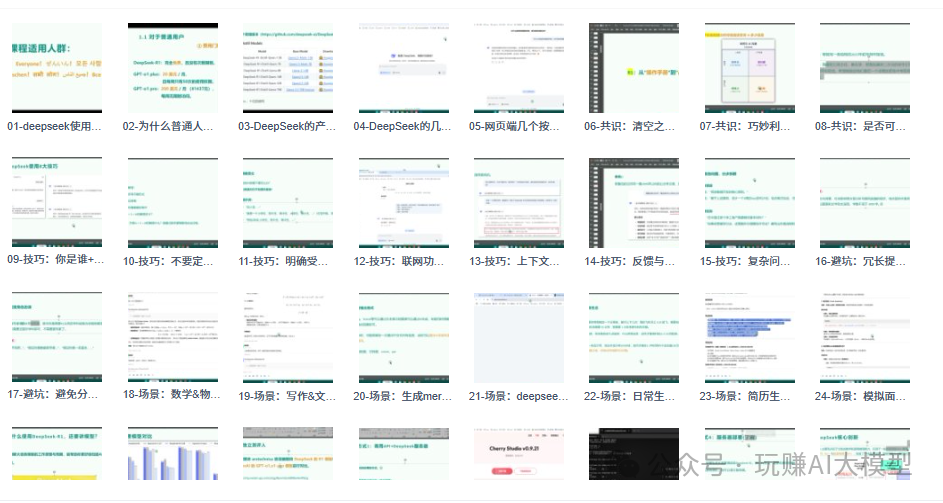

01 教学内容

-

从零到精通完整闭环:【基础理论 →RAG开发 → Agent设计 → 模型微调与私有化部署调→热门技术】5大模块,内容比传统教材更贴近企业实战!

-

大量真实项目案例: 带你亲自上手搞数据清洗、模型调优这些硬核操作,把课本知识变成真本事!

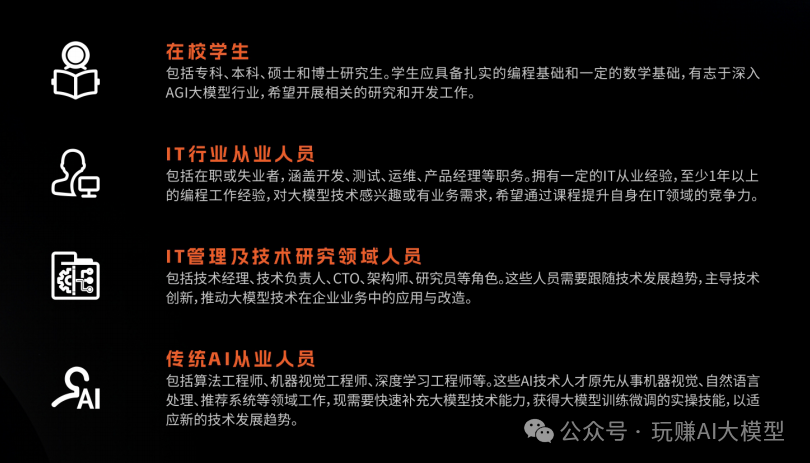

02适学人群

应届毕业生: 无工作经验但想要系统学习AI大模型技术,期待通过实战项目掌握核心技术。

零基础转型: 非技术背景但关注AI应用场景,计划通过低代码工具实现“AI+行业”跨界。

业务赋能突破瓶颈: 传统开发者(Java/前端等)学习Transformer架构与LangChain框架,向AI全栈工程师转型。

vx扫描下方二维码即可

本教程比较珍贵,仅限大家自行学习,不要传播!更严禁商用!

03 入门到进阶学习路线图

大模型学习路线图,整体分为5个大的阶段:

04 视频和书籍PDF合集

从0到掌握主流大模型技术视频教程(涵盖模型训练、微调、RAG、LangChain、Agent开发等实战方向)

新手必备的大模型学习PDF书单来了!全是硬核知识,帮你少走弯路(不吹牛,真有用)

05 行业报告+白皮书合集

收集70+报告与白皮书,了解行业最新动态!

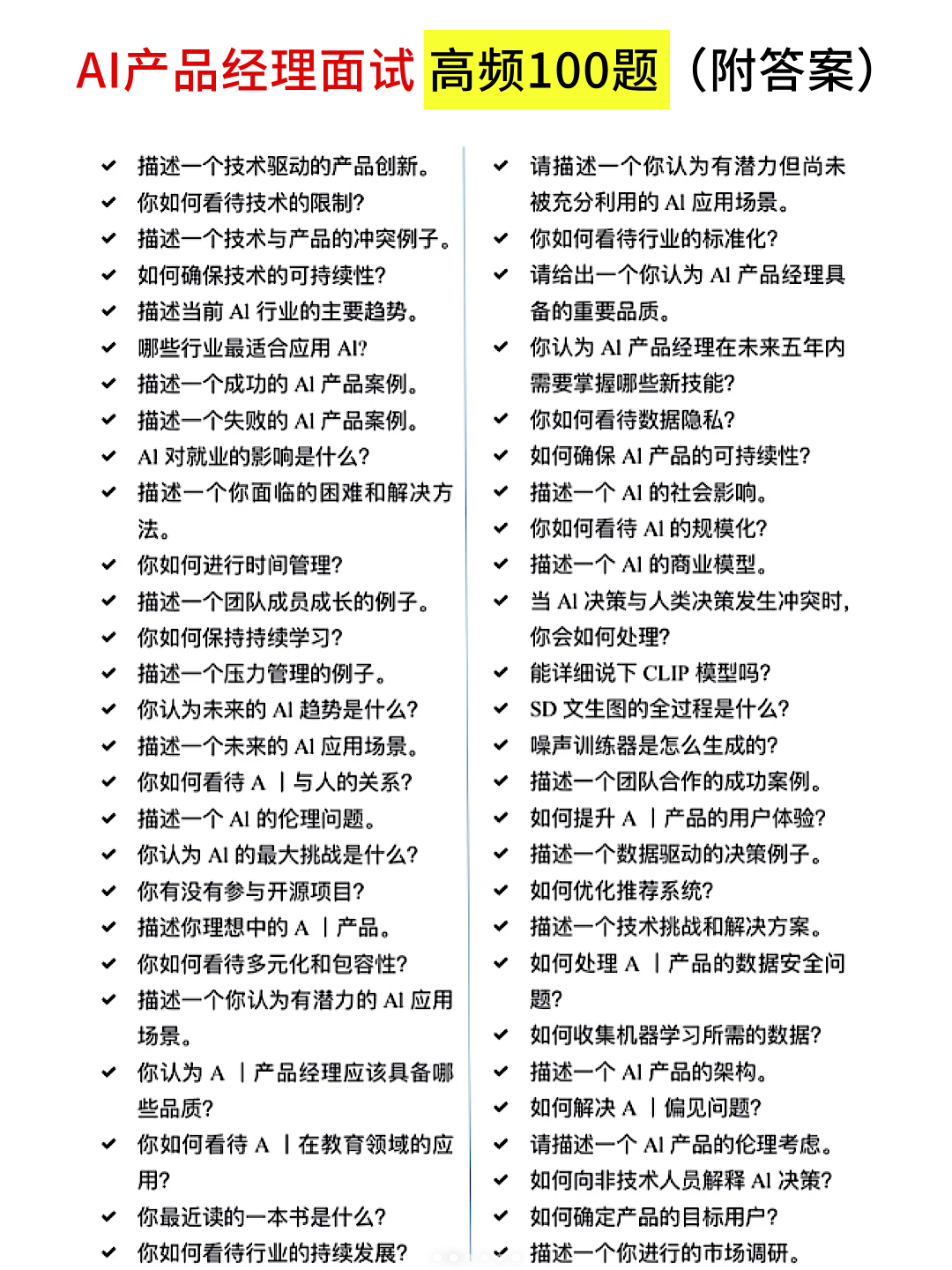

06 90+份面试题/经验

AI大模型岗位面试经验总结(谁学技术不是为了赚$呢,找个好的岗位很重要)

07 deepseek部署包+技巧大全

由于篇幅有限

只展示部分资料

并且还在持续更新中…

真诚无偿分享!!!

vx扫描下方二维码即可

加上后会一个个给大家发

更多推荐

已为社区贡献489条内容

已为社区贡献489条内容

所有评论(0)