【生成式AI】Cross-Attention:从理论到实践的全面突破(下篇)

【生成式AI】Cross-Attention:从理论到实践的全面突破(下篇)

·

【生成式AI】Cross-Attention:从理论到实践的全面突破(下篇)

【生成式AI】Cross-Attention:从理论到实践的全面突破(下篇)

文章目录

欢迎铁子们点赞、关注、收藏!

祝大家逢考必过!逢投必中!上岸上岸上岸!upupup

大多数高校硕博生毕业要求需要参加学术会议,发表EI或者SCI检索的学术论文会议论文。详细信息可扫描博文下方二维码 “

学术会议小灵通”或参考学术信息专栏:https://ais.cn/u/mmmiUz

前言

- 深入探索Cross-Attention的先进变体、优化策略及其在复杂任务中的前沿应用

- 在上篇中,我们深入探讨了Cross-Attention的基础理论、数学原理和在Transformer中的标准实现。现在,让我们继续这个技术之旅,探索Cross-Attention的高级变体、优化技术以及在实际复杂系统中的应用。

五、 Cross-Attention的先进变体与改进

5.1 高效Cross-Attention变体

- 随着序列长度的增加,标准Cross-Attention的计算复杂度成为瓶颈,催生了许多高效变体:

class EfficientCrossAttentionVariants:

"""高效Cross-Attention变体"""

def __init__(self, d_model, n_heads):

self.d_model = d_model

self.n_heads = n_heads

def linear_attention(self, Q, K, V):

"""线性注意力:通过核函数近似实现线性复杂度"""

# 使用特征映射将点积注意力转化为线性形式

from functools import partial

def elu_feature_map(x):

return F.elu(x) + 1

Q_mapped = elu_feature_map(Q)

K_mapped = elu_feature_map(K)

# 线性注意力计算

KV = torch.einsum('bshd,bshv->bhdv', K_mapped, V)

Z = 1.0 / (torch.einsum('bshd,bhd->bsh', Q_mapped, K_mapped.sum(dim=1)) + 1e-6)

V_att = torch.einsum('bshd,bhdv,bsh->bshv', Q_mapped, KV, Z)

return V_att

def sliding_window_attention(self, Q, K, V, window_size=512):

"""滑动窗口注意力:局部注意力机制"""

batch_size, seq_len, d_model = Q.shape

# 将序列分块

chunks = seq_len // window_size

if seq_len % window_size != 0:

chunks += 1

outputs = []

for i in range(chunks):

start_idx = i * window_size

end_idx = min((i + 1) * window_size, seq_len)

# 当前窗口的Query

Q_chunk = Q[:, start_idx:end_idx, :]

# Key和Value的窗口(可包含上下文)

context_start = max(0, start_idx - window_size // 2)

context_end = min(seq_len, end_idx + window_size // 2)

K_chunk = K[:, context_start:context_end, :]

V_chunk = V[:, context_start:context_end, :]

# 计算窗口内注意力

scores = torch.matmul(Q_chunk, K_chunk.transpose(-2, -1)) / math.sqrt(d_model)

attn_weights = F.softmax(scores, dim=-1)

chunk_output = torch.matmul(attn_weights, V_chunk)

outputs.append(chunk_output)

return torch.cat(outputs, dim=1)

def low_rank_attention(self, Q, K, V, rank=64):

"""低秩注意力:通过低秩近似减少计算"""

# 投影到低维空间

U_q = nn.Linear(self.d_model, rank, bias=False)

U_k = nn.Linear(self.d_model, rank, bias=False)

Q_low = U_q(Q) # [batch, seq_len, rank]

K_low = U_k(K) # [batch, seq_len, rank]

# 在低维空间计算注意力

scores_low = torch.matmul(Q_low, K_low.transpose(-2, -1)) / math.sqrt(rank)

attn_weights_low = F.softmax(scores_low, dim=-1)

# 应用注意力到原始Value

output = torch.matmul(attn_weights_low, V)

return output

class AttentionVariantComparison:

"""不同注意力变体比较"""

def compare_variants(self, sequence_lengths=[256, 512, 1024, 2048]):

"""比较不同变体在计算效率和效果上的表现"""

comparison_results = {}

for seq_len in sequence_lengths:

# 计算复杂度比较

complexities = {

'standard': seq_len ** 2,

'linear': seq_len,

'sliding_window': seq_len * 512, # 假设窗口大小为512

'low_rank': seq_len * 64 * 2 + 64 * seq_len # 假设秩为64

}

# 内存使用比较

memory_usage = {

'standard': seq_len ** 2,

'linear': seq_len,

'sliding_window': seq_len * 512,

'low_rank': seq_len * 64 * 3

}

comparison_results[seq_len] = {

'complexity': complexities,

'memory': memory_usage,

'recommendation': self.get_recommendation(seq_len, complexities)

}

return comparison_results

def get_recommendation(self, seq_len, complexities):

"""根据序列长度给出推荐"""

if seq_len <= 512:

return "标准注意力(效果最好)"

elif seq_len <= 2048:

return "滑动窗口注意力(平衡效率与效果)"

else:

return "线性注意力或低秩注意力(最高效)"

5.2 结构化Cross-Attention

class StructuredCrossAttention:

"""结构化Cross-Attention:融入先验知识"""

def __init__(self, d_model, n_heads, structure_type='hierarchical'):

self.d_model = d_model

self.n_heads = n_heads

self.structure_type = structure_type

def hierarchical_attention(self, Q, K, V, hierarchy_levels):

"""层次化注意力:在不同粒度级别计算注意力"""

hierarchical_outputs = []

for level in hierarchy_levels:

# 对Query和Key进行池化

Q_pooled = self.pool_sequence(Q, level)

K_pooled = self.pool_sequence(K, level)

V_pooled = self.pool_sequence(V, level)

# 在池化后的序列上计算注意力

level_output = self.compute_attention(Q_pooled, K_pooled, V_pooled)

# 上采样回原始分辨率

level_output_upsampled = self.upsample_sequence(level_output, Q.shape[1])

hierarchical_outputs.append(level_output_upsampled)

# 融合不同层次的结果

fused_output = self.fuse_hierarchical_outputs(hierarchical_outputs)

return fused_output

def syntactic_attention(self, Q, K, V, syntactic_tree):

"""句法注意力:基于语法树结构"""

# 根据语法树构建注意力掩码

syntactic_mask = self.build_syntactic_mask(syntactic_tree, Q.shape[1], K.shape[1])

# 应用句法约束的注意力

scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(self.d_model)

scores = scores.masked_fill(syntactic_mask == 0, -1e9)

attn_weights = F.softmax(scores, dim=-1)

return torch.matmul(attn_weights, V)

def spatial_attention(self, Q, K, V, spatial_relations):

"""空间注意力:基于空间关系"""

# 构建空间关系偏置

spatial_bias = self.compute_spatial_bias(spatial_relations)

scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(self.d_model)

scores = scores + spatial_bias

attn_weights = F.softmax(scores, dim=-1)

return torch.matmul(attn_weights, V)

class StructuredAttentionApplications:

"""结构化注意力应用场景"""

def application_scenarios(self):

scenarios = {

'文档理解': {

'structure': '文档层次结构(章节、段落、句子)',

'attention_type': '层次化注意力',

'benefit': '同时捕获局部和全局信息'

},

'代码生成': {

'structure': '抽象语法树',

'attention_type': '句法注意力',

'benefit': '保持代码结构正确性'

},

'视觉推理': {

'structure': '空间关系图',

'attention_type': '空间注意力',

'benefit': '理解物体间空间关系'

},

'分子建模': {

'structure': '分子图结构',

'attention_type': '图注意力',

'benefit': '准确建模分子性质'

}

}

return scenarios

六、 Cross-Attention的优化策略

6.1 训练稳定性优化

class CrossAttentionOptimization:

"""Cross-Attention训练优化策略"""

def __init__(self):

self.optimization_techniques = {}

def gradient_checkpointing(self, attention_layer, Q, K, V):

"""梯度检查点:节省内存"""

# 使用torch的梯度检查点功能

from torch.utils.checkpoint import checkpoint

def custom_forward(*inputs):

Q, K, V = inputs

return attention_layer(Q, K, V)

return checkpoint(custom_forward, Q, K, V, use_reentrant=False)

def mixed_precision_training(self, attention_layer, Q, K, V):

"""混合精度训练"""

from torch.cuda.amp import autocast

with autocast():

output = attention_layer(Q, K, V)

return output

def attention_dropout_strategies(self, attention_weights, dropout_rate=0.1, strategy='standard'):

"""注意力Dropout策略"""

if strategy == 'standard':

# 标准dropout

return F.dropout(attention_weights, p=dropout_rate)

elif strategy == 'head_dropout':

# 多头dropout:随机丢弃整个注意力头

batch, heads, seq_len, _ = attention_weights.shape

head_mask = torch.bernoulli(torch.ones(heads) * (1 - dropout_rate))

head_mask = head_mask.view(1, heads, 1, 1).to(attention_weights.device)

return attention_weights * head_mask

elif strategy == 'token_dropout':

# token级dropout:随机丢弃某些token的注意力

batch, heads, seq_len, _ = attention_weights.shape

token_mask = torch.bernoulli(torch.ones(seq_len) * (1 - dropout_rate))

token_mask = token_mask.view(1, 1, seq_len, 1).to(attention_weights.device)

return attention_weights * token_mask

class AttentionStabilityAnalysis:

"""注意力稳定性分析"""

def analyze_training_stability(self, attention_weights_history):

"""分析训练过程中注意力的稳定性"""

stability_metrics = {}

# 注意力权重变化分析

weight_variance = torch.var(attention_weights_history, dim=0)

stability_metrics['weight_stability'] = 1.0 / (1.0 + weight_variance.mean())

# 注意力分布一致性

entropy_history = [self.compute_attention_entropy(w) for w in attention_weights_history]

entropy_variance = torch.var(torch.stack(entropy_history))

stability_metrics['distribution_stability'] = 1.0 / (1.0 + entropy_variance)

# 梯度分析

gradient_norms = [w.grad.norm().item() for w in attention_weights_history if w.grad is not None]

if gradient_norms:

gradient_stability = 1.0 / (1.0 + np.var(gradient_norms))

stability_metrics['gradient_stability'] = gradient_stability

return stability_metrics

def compute_attention_entropy(self, attention_weights):

"""计算注意力分布的熵"""

# 避免log(0)

attention_weights = attention_weights + 1e-8

attention_weights = attention_weights / attention_weights.sum(dim=-1, keepdim=True)

entropy = -torch.sum(attention_weights * torch.log(attention_weights), dim=-1)

return entropy.mean()

6.2 推理优化技术

class InferenceOptimization:

"""Cross-Attention推理优化"""

def __init__(self, model):

self.model = model

self.optimization_cache = {}

def kv_caching(self, past_key_values, current_input):

"""Key-Value缓存:避免重复计算"""

if past_key_values is None:

# 第一次推理,计算并缓存KV

output = self.model(current_input)

new_key_values = output.past_key_values

return output, new_key_values

else:

# 使用缓存的KV,只计算当前步骤

output = self.model(current_input, past_key_values=past_key_values)

updated_key_values = output.past_key_values

return output, updated_key_values

def dynamic_sequence_length(self, input_sequences, max_length=4096, chunk_size=512):

"""动态序列长度处理"""

processed_outputs = []

for sequence in input_sequences:

if len(sequence) <= max_length:

# 直接处理短序列

output = self.model(sequence)

processed_outputs.append(output)

else:

# 长序列分块处理

chunk_outputs = []

for i in range(0, len(sequence), chunk_size):

chunk = sequence[i:i+chunk_size]

chunk_output = self.model(chunk)

chunk_outputs.append(chunk_output)

# 合并块结果

merged_output = self.merge_chunk_outputs(chunk_outputs)

processed_outputs.append(merged_output)

return processed_outputs

def attention_sparsification(self, attention_scores, sparsity_threshold=0.01):

"""注意力稀疏化:移除不重要的连接"""

# 基于阈值进行稀疏化

sparse_mask = attention_scores > sparsity_threshold

# 确保每行至少有一个非零元素

row_has_values = sparse_mask.sum(dim=-1) > 0

sparse_mask = sparse_mask | (~row_has_values.unsqueeze(-1) & (attention_scores == attention_scores.max(dim=-1, keepdim=True).values))

# 应用稀疏掩码

sparse_scores = attention_scores.masked_fill(~sparse_mask, -1e9)

sparse_weights = F.softmax(sparse_scores, dim=-1)

return sparse_weights

class ModelCompressionForAttention:

"""注意力模型压缩"""

def knowledge_distillation(self, teacher_model, student_model, inputs, temperature=3.0):

"""知识蒸馏:用大模型指导小模型"""

with torch.no_grad():

teacher_outputs = teacher_model(inputs)

teacher_attention = teacher_outputs.attention_weights

student_outputs = student_model(inputs)

student_attention = student_outputs.attention_weights

# 注意力分布蒸馏损失

attention_loss = F.kl_div(

F.log_softmax(student_attention / temperature, dim=-1),

F.softmax(teacher_attention / temperature, dim=-1),

reduction='batchmean'

) * (temperature ** 2)

return attention_loss

def attention_head_pruning(self, model, pruning_ratio=0.3):

"""注意力头剪枝:移除不重要的注意力头"""

importance_scores = self.compute_head_importance(model)

# 根据重要性排序并剪枝

num_heads_to_prune = int(len(importance_scores) * pruning_ratio)

heads_to_prune = importance_scores.argsort()[:num_heads_to_prune]

pruned_model = self.prune_attention_heads(model, heads_to_prune)

return pruned_model

def compute_head_importance(self, model):

"""计算注意力头的重要性"""

importance_scores = {}

for name, module in model.named_modules():

if hasattr(module, 'attention_heads'):

# 基于注意力权重的方差计算重要性

for head_idx, head in enumerate(module.attention_heads):

head_variance = head.attention_weights.var().item()

importance_scores[f"{name}.head_{head_idx}"] = head_variance

return importance_scores

七、 Cross-Attention在复杂系统中的应用

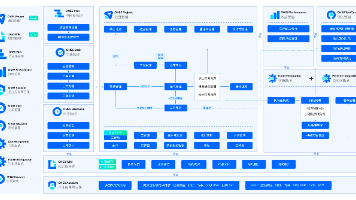

7.1 多模态融合系统

class MultimodalFusionWithCrossAttention:

"""基于Cross-Attention的多模态融合"""

def __init__(self, text_dim, image_dim, audio_dim, fusion_dim=512):

self.text_dim = text_dim

self.image_dim = image_dim

self.audio_dim = audio_dim

self.fusion_dim = fusion_dim

# 跨模态注意力模块

self.text_to_image_attention = CrossAttentionLayer(fusion_dim, 8)

self.image_to_text_attention = CrossAttentionLayer(fusion_dim, 8)

self.audio_to_multimodal_attention = CrossAttentionLayer(fusion_dim, 8)

# 模态编码器

self.text_encoder = nn.Linear(text_dim, fusion_dim)

self.image_encoder = nn.Linear(image_dim, fusion_dim)

self.audio_encoder = nn.Linear(audio_dim, fusion_dim)

def forward(self, text_features, image_features, audio_features):

"""多模态特征融合"""

# 编码到统一空间

text_encoded = self.text_encoder(text_features)

image_encoded = self.image_encoder(image_features)

audio_encoded = self.audio_encoder(audio_features)

# 文本-图像交叉注意力

text_enhanced_by_image = self.text_to_image_attention(

text_encoded, image_encoded, image_encoded

)

image_enhanced_by_text = self.image_to_text_attention(

image_encoded, text_encoded, text_encoded

)

# 音频-多模态交叉注意力

multimodal_context = torch.cat([text_enhanced_by_image, image_enhanced_by_text], dim=1)

audio_enhanced = self.audio_to_multimodal_attention(

audio_encoded, multimodal_context, multimodal_context

)

# 最终融合

fused_features = torch.cat([

text_enhanced_by_image.mean(dim=1),

image_enhanced_by_text.mean(dim=1),

audio_enhanced.mean(dim=1)

], dim=1)

return fused_features

class CrossModalRetrievalSystem:

"""基于Cross-Attention的跨模态检索"""

def __init__(self, query_modality='text', key_modality='image'):

self.query_modality = query_modality

self.key_modality = key_modality

self.cross_attention = CrossAttentionLayer(512, 8)

def compute_cross_modal_similarity(self, query_features, key_features):

"""计算跨模态相似度"""

# 使用cross-attention计算模态间对齐

aligned_query = self.cross_attention(query_features, key_features, key_features)

# 计算相似度分数

similarity_scores = F.cosine_similarity(

aligned_query.mean(dim=1),

key_features.mean(dim=1),

dim=-1

)

return similarity_scores

def retrieve_cross_modal(self, query, key_database, top_k=10):

"""跨模态检索"""

similarities = []

for key_id, key_features in key_database.items():

similarity = self.compute_cross_modal_similarity(query, key_features)

similarities.append((key_id, similarity.item()))

# 按相似度排序

similarities.sort(key=lambda x: x[1], reverse=True)

return similarities[:top_k]

7.2 层次化Cross-Attention架构

class HierarchicalCrossAttention:

"""层次化Cross-Attention架构"""

def __init__(self, num_levels=3, d_model=512, n_heads=8):

self.num_levels = num_levels

self.d_model = d_model

self.n_heads = n_heads

# 不同层次的注意力模块

self.level_attentions = nn.ModuleList([

CrossAttentionLayer(d_model, n_heads) for _ in range(num_levels)

])

# 层次融合模块

self.fusion_attention = CrossAttentionLayer(d_model, n_heads)

def forward(self, source_sequences, target_sequences, hierarchies):

"""

source_sequences: 源序列的多层次表示列表

target_sequences: 目标序列的多层次表示列表

hierarchies: 层次结构信息

"""

level_outputs = []

for level in range(self.num_levels):

# 当前层次的cross-attention

level_output = self.level_attentions[level](

target_sequences[level],

source_sequences[level],

source_sequences[level]

)

level_outputs.append(level_output)

# 跨层次注意力融合

fused_output = self.fuse_hierarchical_outputs(level_outputs, hierarchies)

return fused_output

def fuse_hierarchical_outputs(self, level_outputs, hierarchies):

"""融合多层次输出"""

# 将所有层次的输出拼接

all_outputs = torch.cat(level_outputs, dim=1)

# 使用self-attention进行层次间信息交换

fused = self.fusion_attention(all_outputs, all_outputs, all_outputs)

return fused

class MultiScaleCrossAttention:

"""多尺度Cross-Attention"""

def __init__(self, scales=[1, 2, 4], d_model=512):

self.scales = scales

self.d_model = d_model

self.scale_attentions = nn.ModuleList([

CrossAttentionLayer(d_model, 8) for _ in range(len(scales))

])

def forward(self, queries, keys, values):

"""多尺度处理"""

scale_outputs = []

for i, scale in enumerate(self.scales):

# 尺度调整

Q_scaled = self.resize_sequence(queries, scale)

K_scaled = self.resize_sequence(keys, scale)

V_scaled = self.resize_sequence(values, scale)

# 尺度特定的cross-attention

scale_output = self.scale_attentions[i](Q_scaled, K_scaled, V_scaled)

scale_outputs.append(scale_output)

# 多尺度融合

fused_output = self.fuse_multiscale_outputs(scale_outputs)

return fused_output

def resize_sequence(self, sequence, scale_factor):

"""调整序列尺度"""

if scale_factor == 1:

return sequence

batch_size, seq_len, d_model = sequence.shape

if scale_factor > 1:

# 下采样

new_len = seq_len // scale_factor

return F.adaptive_avg_pool1d(sequence.transpose(1,2), new_len).transpose(1,2)

else:

# 上采样

new_len = int(seq_len * abs(scale_factor))

return F.interpolate(sequence.transpose(1,2), size=new_len, mode='linear').transpose(1,2)

八、 未来展望与研究前沿

8.1 技术发展趋势

class CrossAttentionFutureTrends:

"""Cross-Attention技术发展趋势"""

def emerging_research_directions(self):

directions = {

'efficient_attention': {

'trend': '高效注意力机制',

'focus': '线性复杂度、稀疏注意力、分块计算',

'potential': '处理极长序列(>10k tokens)'

},

'dynamic_attention': {

'trend': '动态注意力机制',

'focus': '自适应计算、条件计算、可学习结构',

'potential': '根据输入动态调整计算路径'

},

'explainable_attention': {

'trend': '可解释注意力',

'focus': '注意力可视化、归因分析、因果推理',

'potential': '增强模型透明度和可信度'

},

'multimodal_unification': {

'trend': '多模态统一注意力',

'focus': '跨模态通用表示、统一注意力机制',

'potential': '真正的多模态理解与生成'

}

}

return directions

def predicted_breakthroughs(self, timeline='5年'):

"""预测技术突破"""

breakthroughs = {

'理论突破': [

'注意力复杂度的理论下界证明',

'注意力与记忆机制的神经科学基础',

'注意力最优架构的数学理论'

],

'技术突破': [

'千倍序列长度的高效注意力',

'完全可解释的注意力机制',

'通用多模态注意力架构'

],

'应用突破': [

'实时长文档理解系统',

'创造性跨模态生成',

'人机协同注意力系统'

]

}

return breakthroughs

8.2 挑战与机遇

class CrossAttentionChallenges:

"""Cross-Attention面临的挑战"""

def identify_key_challenges(self):

challenges = {

'computational_bottleneck': {

'challenge': '计算复杂度瓶颈',

'impact': '限制序列长度和模型规模',

'research_direction': '高效注意力算法'

},

'interpretability_issues': {

'challenge': '可解释性不足',

'impact': '黑箱决策,难以信任',

'research_direction': '可解释注意力机制'

},

'generalization_limits': {

'challenge': '泛化能力限制',

'impact': '域外表现下降',

'research_direction': '元学习注意力'

},

'multimodal_alignment': {

'challenge': '多模态对齐困难',

'impact': '跨模态理解不准确',

'research_direction': '统一表示学习'

}

}

return challenges

def potential_solutions(self):

"""潜在解决方案"""

solutions = {

'硬件算法协同设计': {

'approach': '针对注意力优化的专用硬件',

'potential': '数量级性能提升',

'timeline': '3-5年'

},

'神经符号融合': {

'approach': '符号推理与神经注意力的结合',

'potential': '增强推理能力和可解释性',

'timeline': '5-7年'

},

'生物启发注意力': {

'approach': '借鉴人类视觉注意力的机制',

'potential': '更高效和鲁棒的注意力',

'timeline': '长期研究'

}

}

return solutions

九、 总结

- Cross-Attention作为现代深度学习的核心组件,已经从最初的自然语言处理工具,发展成为连接多模态智能的通用桥梁。通过我们的深入探索,可以看到:

技术演进的核心脉络:

- 从简单的序列对齐到复杂的多模态理解

- 从固定计算模式到自适应动态架构

- 从黑箱操作到可解释、可控的注意力机制

关键技术洞察:

- 注意力作为信息路由:Cross-Attention本质上是动态的信息路由机制

- 多尺度的重要性:结合不同粒度的注意力可以获得更丰富的表示

- 效率与效果的平衡:需要在计算复杂度和模型性能之间找到最优平衡点

实践应用建议:

- 根据任务特点选择合适的注意力变体

- 重视注意力可视化和解释性分析

- 在模型设计中充分考虑计算效率约束

- 充分利用预训练模型的注意力知识

Cross-Attention的发展远未结束,随着计算硬件的进步、理论理解的深入以及新应用场景的出现,这一技术将继续演进,为人工智能的发展提供强大动力。

在智能系统的构建中,Cross-Attention不仅是技术组件,更是实现真正理解和创造的认知桥梁——它让机器能够像人类一样,在不同的信息源之间建立有意义的连接,从而产生真正的智能行为。

更多推荐

已为社区贡献21条内容

已为社区贡献21条内容

所有评论(0)