It's Not That OpenAI Is Unaffordable, SiliconFlow Is Just Better Value: A Guide to Calling GPT from Every Language

Welcome to 小灰灰's blog!

Blog home: IT·小灰灰

Afdian: 小灰灰's Afdian page

Areas of interest: front end (HTML), back end (PHP), artificial intelligence, cloud services


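An earlier post covered calling AI models through SiliconFlow's China platform. The popular GPT models are not offered there because they have no mainland China filing. While browsing recently, though, I stumbled on SiliconFlow's international platform. After logging in with a GitHub account, I found that it does list OpenAI's GPT models (the examples below use openai/gpt-oss-20b) and gives new accounts 1 USD of trial credit. The price is fairly low at 0.18 USD per million tokens, slightly cheaper than 模力方舟. The trade-offs: top-ups require a bank card, with no WeChat Pay or Alipay, and there is no invite-a-friend credit like on the China platform, whereas 模力方舟 accepts both WeChat Pay and Alipay. This post collects the pitfalls I hit and the code that actually ran, in the hope of saving you a few detours.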

一、准备工作

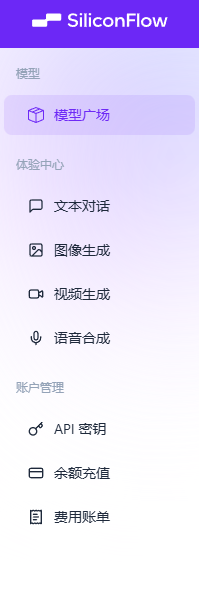

首先访问硅基流动国际平台,选择谷歌或github账号登录。

进入API密钥中,创建新密钥。

准备工作到这里完成了就,非常简单。
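Once the key exists, it is worth loading it from the environment rather than pasting it into source files. Below is a minimal sketch, assuming the variable name SILICONFLOW_API_KEY used in the Python example; the helper names `load_api_key` and `auth_headers` are hypothetical and not part of any SDK.

```python
import os

PLACEHOLDER = "YOUR_API_KEY_HERE"  # the dummy value used in this post's examples


def load_api_key(env=None, var="SILICONFLOW_API_KEY"):
    """Return the API key from the environment, or raise if it is missing."""
    env = os.environ if env is None else env
    key = env.get(var, "").strip()
    if not key or key == PLACEHOLDER:
        raise RuntimeError(f"Set the {var} environment variable to a real API key")
    return key


def auth_headers(key):
    """Build the JSON content-type and Bearer authorization headers."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {key}",
    }
```

Failing fast on a missing or placeholder key tends to give a clearer error than a 401 from the server.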

2. Calling the API from multiple languages

2.1 Python

    import os

    import requests

    # Prefer reading the API key from an environment variable
    api_key = os.getenv("SILICONFLOW_API_KEY", "YOUR_API_KEY_HERE")
    url = "https://api.siliconflow.com/v1/chat/completions"

    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}"
    }

    data = {
        "model": "openai/gpt-oss-20b",
        "messages": [
            {"role": "user", "content": "Hello, can you explain the basic principles of quantum mechanics?"}
        ],
        "temperature": 0.7,
        "max_tokens": 500
    }

    response = requests.post(url, headers=headers, json=data, timeout=30)

    if response.status_code == 200:
        reply = response.json()["choices"][0]["message"]["content"]
        print("Model reply:", reply)
    else:
        print(f"Request failed, status code: {response.status_code}")
        print("Error details:", response.text)

2.2 curl

    curl "https://api.siliconflow.com/v1/chat/completions" \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer YOUR_API_KEY_HERE" \
      -d '{
        "model": "openai/gpt-oss-20b",
        "messages": [{"role": "user", "content": "Hello, can you explain the basic principles of quantum mechanics?"}],
        "temperature": 0.7,
        "max_tokens": 500
      }'

2.3 JavaScript

    const apiKey = 'YOUR_API_KEY_HERE';
    const url = 'https://api.siliconflow.com/v1/chat/completions';

    const requestData = {
      model: 'openai/gpt-oss-20b',
      messages: [
        { role: 'user', content: 'Hello, can you explain the basic principles of quantum mechanics?' }
      ],
      temperature: 0.7,
      max_tokens: 500
    };

    fetch(url, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${apiKey}`
      },
      body: JSON.stringify(requestData)
    })
      .then(response => {
        if (!response.ok) {
          throw new Error(`HTTP error! Status code: ${response.status}`);
        }
        return response.json();
      })
      .then(data => {
        const reply = data.choices[0].message.content;
        console.log('Model reply:', reply);
      })
      .catch(error => {
        console.error('Request failed:', error);
      });

2.4 Go

    package main

    import (
        "bytes"
        "encoding/json"
        "fmt"
        "io"
        "net/http"
        "time"
    )

    func main() {
        apiKey := "YOUR_API_KEY_HERE"
        url := "https://api.siliconflow.com/v1/chat/completions"

        requestData := map[string]interface{}{
            "model": "openai/gpt-oss-20b",
            "messages": []map[string]string{
                {"role": "user", "content": "Hello, can you explain the basic principles of quantum mechanics?"},
            },
            "temperature": 0.7,
            "max_tokens":  500,
        }
        jsonData, err := json.Marshal(requestData)
        if err != nil {
            panic(err)
        }

        req, err := http.NewRequest("POST", url, bytes.NewBuffer(jsonData))
        if err != nil {
            panic(err)
        }
        req.Header.Set("Content-Type", "application/json")
        req.Header.Set("Authorization", "Bearer "+apiKey)

        // A timeout keeps a stalled request from hanging forever
        client := &http.Client{Timeout: 30 * time.Second}
        resp, err := client.Do(req)
        if err != nil {
            panic(err)
        }
        defer resp.Body.Close()

        body, err := io.ReadAll(resp.Body)
        if err != nil {
            panic(err)
        }
        if resp.StatusCode != http.StatusOK {
            fmt.Printf("Request failed, status code: %d\nResponse body: %s\n", resp.StatusCode, string(body))
            return
        }

        // Decode only the fields we need instead of chaining type assertions
        var result struct {
            Choices []struct {
                Message struct {
                    Content string `json:"content"`
                } `json:"message"`
            } `json:"choices"`
        }
        if err := json.Unmarshal(body, &result); err != nil {
            panic(err)
        }
        if len(result.Choices) == 0 {
            fmt.Println("Response contained no choices")
            return
        }
        fmt.Println("Model reply:", result.Choices[0].Message.Content)
    }

2.5 Java

    import java.net.URI;
    import java.net.http.*;
    import java.time.Duration;
    import java.util.*;
    import com.fasterxml.jackson.databind.*;

    // Requires Java 11+ (java.net.http) and Jackson databind on the classpath
    public class SiliconFlowDemo {
        public static void main(String[] args) throws Exception {
            String apiKey = "YOUR_API_KEY_HERE";
            String url = "https://api.siliconflow.com/v1/chat/completions";

            // Build the request body
            Map<String, Object> body = new HashMap<>();
            body.put("model", "openai/gpt-oss-20b");
            List<Map<String, String>> messages = List.of(
                Map.of("role", "user", "content", "Hello, can you explain the basic principles of quantum mechanics?")
            );
            body.put("messages", messages);
            body.put("temperature", 0.7);
            body.put("max_tokens", 500);

            ObjectMapper mapper = new ObjectMapper();
            String jsonBody = mapper.writeValueAsString(body);

            HttpClient client = HttpClient.newHttpClient();
            HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create(url))
                .header("Content-Type", "application/json")
                .header("Authorization", "Bearer " + apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .timeout(Duration.ofSeconds(30))
                .build();

            HttpResponse<String> response = client.send(request,
                HttpResponse.BodyHandlers.ofString());

            if (response.statusCode() == 200) {
                JsonNode root = mapper.readTree(response.body());
                String reply = root.path("choices").get(0).path("message").path("content").asText();
                System.out.println("Model reply: " + reply);
            } else {
                System.out.println("Request failed, status code: " + response.statusCode());
                System.out.println("Response body: " + response.body());
            }
        }
    }

2.6 PHP (cURL extension)

    <?php
    $url = 'https://api.siliconflow.com/v1/chat/completions';
    $apiKey = 'YOUR_API_KEY_HERE';

    $data = [
        'model' => 'openai/gpt-oss-20b',
        'messages' => [
            ['role' => 'user', 'content' => 'Hello, can you explain the basic principles of quantum mechanics?']
        ],
        'temperature' => 0.7,
        'max_tokens' => 500
    ];

    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_POST, true);
    curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($data));
    curl_setopt($ch, CURLOPT_HTTPHEADER, [
        'Content-Type: application/json',
        'Authorization: Bearer ' . $apiKey
    ]);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

    $response = curl_exec($ch);
    $httpCode = curl_getinfo($ch, CURLINFO_HTTP_CODE);
    curl_close($ch);

    if ($httpCode === 200) {
        $result = json_decode($response, true);
        $reply = $result['choices'][0]['message']['content'];
        echo "Model reply: " . $reply . "\n";
    } else {
        echo "Request failed, status code: $httpCode\n";
        echo "Response body: $response\n";
    }
    ?>

2.7 C#

    // C# (HttpClient + System.Text.Json)
    // Requires .NET 5+ or .NET Core 3.0+
    using System;
    using System.Net.Http;
    using System.Text;
    using System.Text.Json;
    using System.Threading.Tasks;

    class SiliconFlowDemo
    {
        static async Task Main()
        {
            string apiKey = "YOUR_API_KEY_HERE";
            string url = "https://api.siliconflow.com/v1/chat/completions";

            var requestData = new
            {
                model = "openai/gpt-oss-20b",
                messages = new[]
                {
                    new { role = "user", content = "Hello, can you explain the basic principles of quantum mechanics?" }
                },
                temperature = 0.7,
                max_tokens = 500
            };

            var json = JsonSerializer.Serialize(requestData);
            var content = new StringContent(json, Encoding.UTF8, "application/json");

            using (var client = new HttpClient())
            {
                client.DefaultRequestHeaders.Authorization =
                    new System.Net.Http.Headers.AuthenticationHeaderValue("Bearer", apiKey);

                var response = await client.PostAsync(url, content);
                var responseString = await response.Content.ReadAsStringAsync();

                if (response.IsSuccessStatusCode)
                {
                    var responseJson = JsonDocument.Parse(responseString);
                    var reply = responseJson.RootElement
                        .GetProperty("choices")[0]
                        .GetProperty("message")
                        .GetProperty("content")
                        .GetString();
                    Console.WriteLine("Model reply: " + reply);
                }
                else
                {
                    Console.WriteLine($"Request failed, status code: {(int)response.StatusCode}");
                    Console.WriteLine("Response body: " + responseString);
                }
            }
        }
    }

2.8 C++

    // C++ (libcurl + nlohmann/json)
    #include <iostream>
    #include <string>
    #include <curl/curl.h>        // needs the libcurl development package
    #include <nlohmann/json.hpp>  // needs the single-header nlohmann/json library

    // Callback that appends each received chunk to a std::string
    static size_t WriteCallback(void* contents, size_t size, size_t nmemb, void* userp)
    {
        ((std::string*)userp)->append((char*)contents, size * nmemb);
        return size * nmemb;
    }

    int main()
    {
        // Pair the global init with the curl_global_cleanup() call at the end
        curl_global_init(CURL_GLOBAL_DEFAULT);
        CURL* curl = curl_easy_init();
        if (!curl) {
            std::cerr << "Failed to initialize libcurl" << std::endl;
            curl_global_cleanup();
            return 1;
        }

        std::string apiKey = "YOUR_API_KEY_HERE";
        std::string url = "https://api.siliconflow.com/v1/chat/completions";

        // Build the JSON request body
        nlohmann::json requestData = {
            {"model", "openai/gpt-oss-20b"},
            {"messages", nlohmann::json::array({
                {{"role", "user"}, {"content", "Hello, can you explain the basic principles of quantum mechanics?"}}
            })},
            {"temperature", 0.7},
            {"max_tokens", 500}
        };
        std::string jsonBody = requestData.dump();
        std::string response_str;

        // Set the request headers
        struct curl_slist* headers = nullptr;
        headers = curl_slist_append(headers, "Content-Type: application/json");
        headers = curl_slist_append(headers, ("Authorization: Bearer " + apiKey).c_str());

        curl_easy_setopt(curl, CURLOPT_URL, url.c_str());
        curl_easy_setopt(curl, CURLOPT_HTTPHEADER, headers);
        curl_easy_setopt(curl, CURLOPT_POSTFIELDS, jsonBody.c_str());
        curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, WriteCallback);
        curl_easy_setopt(curl, CURLOPT_WRITEDATA, &response_str);
        curl_easy_setopt(curl, CURLOPT_TIMEOUT, 30L);

        CURLcode res = curl_easy_perform(curl);
        if (res == CURLE_OK) {
            long http_code = 0;
            curl_easy_getinfo(curl, CURLINFO_RESPONSE_CODE, &http_code);
            if (http_code == 200) {
                try {
                    auto response_json = nlohmann::json::parse(response_str);
                    std::string reply = response_json["choices"][0]["message"]["content"];
                    std::cout << "Model reply: " << reply << std::endl;
                } catch (const std::exception& e) {
                    std::cerr << "Failed to parse JSON: " << e.what() << std::endl;
                }
            } else {
                std::cout << "Request failed, status code: " << http_code << std::endl;
                std::cout << "Response body: " << response_str << std::endl;
            }
        } else {
            std::cerr << "Request could not be performed: " << curl_easy_strerror(res) << std::endl;
        }

        curl_slist_free_all(headers);
        curl_easy_cleanup(curl);
        curl_global_cleanup();
        return 0;
    }

    // Dependencies: libcurl, nlohmann/json (single header)
    // Example build (Linux/macOS):
    //   g++ -std=c++17 siliconflow_demo.cpp -lcurl -o demo
    // If json.hpp is not on the default include path:
    //   g++ -std=c++17 siliconflow_demo.cpp -I/path/to/nlohmann -lcurl -o demo

2.9 Important reminders

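- Replace YOUR_API_KEY_HERE in every example with your real key.
- In production, always manage the API key through environment variables.
- The C++ example needs the libcurl development package and the nlohmann/json.hpp header.
- The C# example needs .NET 5+ or .NET Core 3.0+.
- None of the examples configure a proxy, so your network must be able to reach api.siliconflow.com directly.
- For streaming responses, set "stream": true in any of the languages and parse the SSE-formatted data chunks.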

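3. Extended topics

3.1 Handling streaming responses

Streaming is close to a necessity in chat scenarios. With "stream": true set, the API returns data in Server-Sent Events (SSE) format: each chunk is a standalone JSON fragment on a line that starts with data: .

A Python implementation has to read line by line and cope with malformed or interrupted chunks: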

    import json
    import os

    import requests

    def stream_chat():
        api_key = os.getenv("SILICONFLOW_API_KEY")
        url = "https://api.siliconflow.com/v1/chat/completions"
        data = {
            "model": "openai/gpt-oss-20b",
            "messages": [{"role": "user", "content": "Write a short prose piece about autumn"}],
            "stream": True,
            "temperature": 0.8
        }

        response = requests.post(url, headers={"Authorization": f"Bearer {api_key}"},
                                 json=data, stream=True)

        for line in response.iter_lines():
            if line.startswith(b"data: "):
                chunk = line[6:]  # strip the "data: " prefix
                if chunk == b"[DONE]":  # end-of-stream marker
                    break
                try:
                    event = json.loads(chunk)
                    choices = event.get("choices") or []
                    if not choices:
                        continue  # skip chunks that carry no choices
                    content = choices[0]["delta"].get("content") or ""
                    print(content, end="", flush=True)
                except json.JSONDecodeError:
                    continue  # ignore lines that fail to parse

    if __name__ == "__main__":
        stream_chat()

JavaScript's fetch API has no built-in SSE parsing, so a library such as eventsource-parser is recommended:

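    // Uses the callback-style createParser(onParse) API from eventsource-parser v1;
    // later major versions changed this signature, so check the version you install.
    import { createParser } from 'eventsource-parser';

    // apiKey and url are defined as in section 2.3
    const messages = [{ role: 'user', content: 'Write a short prose piece about autumn' }];

    const response = await fetch(url, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${apiKey}`
      },
      body: JSON.stringify({ model: 'openai/gpt-oss-20b', messages, stream: true })
    });

    const reader = response.body.getReader();
    const decoder = new TextDecoder();

    const parser = createParser(event => {
      if (event.type === 'event' && event.data !== '[DONE]') {
        const data = JSON.parse(event.data);
        const content = data.choices[0].delta.content || '';
        process.stdout.write(content);
      }
    });

    while (true) {
      const { done, value } = await reader.read();
      if (done) break;
      // stream: true keeps multi-byte characters intact across chunk boundaries
      parser.feed(decoder.decode(value, { stream: true }));
    }

3.2 Error handling and retry strategy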

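In real deployments, network jitter, rate limiting, and temporary service outages are the norm. A robust calling layer handles errors by class:

    import logging
    import random
    import time

    import requests

    logger = logging.getLogger(__name__)

    def call_with_retry(func, max_retries=3, backoff_factor=2):
        for attempt in range(max_retries):
            try:
                return func()
            except requests.exceptions.HTTPError as e:
                status = e.response.status_code
                last_attempt = attempt == max_retries - 1
                if status == 429 and not last_attempt:
                    # Rate limited: wait for Retry-After (assumed to be in seconds)
                    wait = int(e.response.headers.get("Retry-After", 60))
                    time.sleep(wait)
                elif 500 <= status < 600 and not last_attempt:
                    # Server errors: exponential backoff with jitter
                    wait_time = backoff_factor ** attempt + random.uniform(0, 1)
                    time.sleep(wait_time)
                else:
                    # Other client errors, or retries exhausted: do not retry
                    raise

    # Usage example (url, headers, and data as defined in section 2.1)
    def make_request():
        resp = requests.post(url, headers=headers, json=data)
        resp.raise_for_status()
        return resp.json()

    try:
        result = call_with_retry(make_request)
    except Exception as e:
        # Log the failure and trigger an alert
        logger.error(f"API call failed: {e}")

3.3 Configuration management in production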

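Hard-coding the API key is where disasters begin. Keep configuration isolated per environment:

    # config.py
    # pydantic v1 style; on pydantic v2, install pydantic-settings and
    # import BaseSettings from pydantic_settings instead.
    from pydantic import BaseSettings

    class Settings(BaseSettings):
        siliconflow_api_key: str = ""
        api_base_url: str = "https://api.siliconflow.com/v1"
        max_tokens: int = 800
        timeout: int = 30

        class Config:
            env_file = ".env"

    # Usage
    settings = Settings()

The matching .env file (keep it in .gitignore):

    SILICONFLOW_API_KEY=sk-abc123...
    MAX_TOKENS=1000

For a Kubernetes deployment: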

    # deployment.yaml
    env:
      - name: SILICONFLOW_API_KEY
        valueFrom:
          secretKeyRef:
            name: siliconflow-secret
            key: api-key

3.4 Tuning advanced parameter combinations

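Beyond the basic temperature and max_tokens, a few less-used parameters matter a lot:

- top_p=0.9 + temperature=0.4: suited to factual Q&A, where lower randomness tends to reduce hallucinations
- frequency_penalty=0.5: discourages repeated wording; the effect is most noticeable in long-form generation
- presence_penalty=0.3: encourages new topics and keeps multi-turn conversations varied
- stop=["\n\n", "Human:", "AI:"]: custom stop sequences for controlling the output format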

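    # Configuration aimed at generating technical documentation
    data = {
        "model": "openai/gpt-oss-20b",
        "messages": [{"role": "user", "content": "Explain the TCP three-way handshake"}],
        "temperature": 0.3,
        "max_tokens": 1200,
        "top_p": 0.85,
        "frequency_penalty": 0.4,
        "presence_penalty": 0.2,
        # Stop at the next section marker; bare digits such as "2" would cut
        # the answer off at the first number it contains
        "stop": ["\n\n###"]
    }

3.5 Cost monitoring and alerting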

The usage field in each response is the basis for billing, and it is worth persisting to a time-series database:
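As a rough illustration of the arithmetic, the sketch below turns a usage object into a dollar estimate and tracks it against a daily budget. It assumes the 0.18 USD per million tokens quoted in the introduction applies equally to prompt and completion tokens, which may not match the actual price list; `estimate_cost_usd` and `BudgetTracker` are hypothetical names.

```python
def estimate_cost_usd(usage, price_per_million=0.18):
    """Estimate the cost of one call from its usage dict.

    Assumption: a single flat price for prompt and completion tokens.
    """
    prompt = usage.get("prompt_tokens", 0)
    completion = usage.get("completion_tokens", 0)
    return (prompt + completion) * price_per_million / 1_000_000


class BudgetTracker:
    """Accumulate estimated spend and report when a daily budget is exceeded."""

    def __init__(self, daily_budget_usd):
        self.daily_budget_usd = daily_budget_usd
        self.spent_usd = 0.0

    def add(self, usage):
        """Record one call; return True once the budget has been exceeded."""
        self.spent_usd += estimate_cost_usd(usage)
        return self.spent_usd > self.daily_budget_usd
```

For example, a call with 1,000 prompt tokens and 500 completion tokens comes to 1,500 × 0.18 / 1,000,000 = 0.00027 USD under this assumption.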

    # Record the token consumption of each call.
    # metrics, alert, and accumulated_cost stand in for your own monitoring stack.
    def log_usage(response):
        usage = response.get('usage', {})
        prompt_tokens = usage.get('prompt_tokens', 0)
        completion_tokens = usage.get('completion_tokens', 0)
        # Example unit prices per 1,000 tokens; substitute the real price list
        total_cost = (prompt_tokens * 0.001 + completion_tokens * 0.002) / 1000
        metrics.send({
            'metric': 'api_token_cost',
            'value': total_cost,
            'tags': {'model': response['model']}
        })

    # Daily budget alert
    DAILY_BUDGET = 10.0  # USD
    if accumulated_cost > DAILY_BUDGET:
        alert.send("API budget exceeded")

3.6 Compatibility with the OpenAI SDK

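If your existing code is built on the OpenAI Python client, the change is minimal:

    import os

    from openai import OpenAI

    # Only base_url and api_key need to change
    client = OpenAI(
        api_key=os.getenv("SILICONFLOW_API_KEY"),
        base_url="https://api.siliconflow.com/v1"
    )

    response = client.chat.completions.create(
        model="openai/gpt-oss-20b",
        messages=[{"role": "user", "content": "Hello"}],
        temperature=0.7
    )

    print(response.choices[0].message.content)

This approach relies on the endpoint being compatible with the OpenAI SDK's interface. Not every feature is necessarily supported, though; function calling, for example, should be verified against what the server actually implements.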

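3.7 Troubleshooting checklist

- 401: check that the key was copied in full and that there is a space after Bearer
- 403: insufficient balance or the model is not enabled for your account; confirm in the console
- 429: rate limited; check the X-RateLimit-Remaining response header
- 502/503: a server-side problem; retrying is usually enough, and it helps to run critical jobs in off-peak hours
- Truncated response: max_tokens is set too low, or an undocumented length limit was hit; try generating in two stages
- Garbled Chinese text: make sure both the request headers and the response use UTF-8; most HTTP libraries handle this correctly by default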
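The status-code part of this checklist can be folded into a small lookup so logs carry a hint alongside the raw code. This is only a sketch based on the meanings listed above; `explain_status` and `should_retry` are hypothetical helpers, and the platform may use these codes differently.

```python
# Hints taken from the checklist above; not an official error reference.
STATUS_HINTS = {
    401: "check that the API key is complete and follows 'Bearer ' with a space",
    403: "insufficient balance or model not enabled; confirm in the console",
    429: "rate limited; slow down and inspect X-RateLimit-Remaining",
}


def explain_status(status):
    """Return a human-readable hint for an HTTP status code."""
    if status in STATUS_HINTS:
        return STATUS_HINTS[status]
    if 500 <= status < 600:
        return "server-side problem; retry with backoff"
    if 200 <= status < 300:
        return "ok"
    return "unexpected status; inspect the response body"


def should_retry(status):
    """Only rate limits and server errors are worth retrying."""
    return status == 429 or 500 <= status < 600
```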

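3.8 Debugging tips

Adding {"stream": true, "echo": true} to the request is meant to echo the request content back in the response, which helps with debugging. Note that echo comes from OpenAI's legacy completions API, so confirm that the chat endpoint accepts it before relying on it. For complicated problems, drop max_tokens to 50 to verify connectivity quickly, so each test does not burn many tokens.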
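The quick connectivity check can be wrapped in a tiny probe. The sketch below assumes the same endpoint and model as the earlier examples and takes the HTTP function as a parameter (for instance `requests.post`) so it can be exercised without network access; `build_probe_payload` and `probe` are hypothetical names.

```python
DEFAULT_URL = "https://api.siliconflow.com/v1/chat/completions"


def build_probe_payload(model="openai/gpt-oss-20b", max_tokens=50):
    """Smallest useful request: one short message and a low token cap."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": "ping"}],
        "max_tokens": max_tokens,
    }


def probe(post, api_key, url=DEFAULT_URL, timeout=30):
    """Send the probe with the given post function; return (ok, status_code)."""
    resp = post(
        url,
        headers={"Authorization": f"Bearer {api_key}"},
        json=build_probe_payload(),
        timeout=timeout,
    )
    return resp.status_code == 200, resp.status_code
```

With the requests library installed, `probe(requests.post, api_key)` performs the real call.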

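4. Things the docs don't spell out but you need to know

- Cost awareness: the usage field in every response tells you how many tokens were spent. I log it in the test environment to avoid surprises on the monthly bill.
- Rate limiting: in my experience the limit is about 60 requests per minute, and going over returns 429. For batch jobs, simple throttling with time.Sleep() (Go) is enough.
- Network timeouts: a default timeout of 30 seconds is a sensible choice, since the model occasionally has latency spikes. In Go, setting client.Timeout = 30 * time.Second saved me from several zombie requests.
- Error codes: 401 means the key is wrong, 403 means insufficient balance, 429 means too many requests, and 500 means the service is misbehaving. The first three are yours to fix; for the last one, just retry.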
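The simple throttling mentioned in the rate-limiting item can be sketched in Python as a minimum-interval limiter. It assumes the roughly 60 requests per minute observed above, which is an impression rather than a documented limit; `MinIntervalThrottle` is a hypothetical helper, and the clock and sleep functions are injectable purely to make it testable.

```python
import time


class MinIntervalThrottle:
    """Space calls at least 60 / max_per_minute seconds apart."""

    def __init__(self, max_per_minute=60, clock=time.monotonic, sleep=time.sleep):
        self.interval = 60.0 / max_per_minute
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None  # earliest time the next call may start

    def wait(self):
        """Block until the next call is allowed; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._next_allowed is not None and now < self._next_allowed:
            delay = self._next_allowed - now
            self._sleep(delay)
            now = self._clock()
        self._next_allowed = now + self.interval
        return delay
```

Calling `throttle.wait()` before each request in a batch loop keeps the request rate under the assumed limit without any extra dependencies.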

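Conclusion

Getting the endpoint to respond is only the start. Making the model answer well comes from continually adjusting the context in messages, experimenting with parameter combinations, and, most importantly, watching feedback from real users. There is no standard answer for balancing rate limits against token consumption; you find the point that suits your scenario one request at a time.

If you still have questions, go straight to the documentation and read the latest changelog. With APIs, even three-month-old experience can be out of date. May your model soon speak fluently.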
