Kimi AI: A Comprehensive Analysis of Technical Architecture and Market Potential

1. Executive Summary

1.1 Overview of Kimi AI and Moonshot AI

Kimi AI is a state-of-the-art artificial intelligence system developed by Moonshot AI (月之暗面科技有限公司), a Beijing-based startup founded in March 2023 by Yang Zhilin, a distinguished alumnus of Tsinghua University and a former researcher at Baidu and Google. The company has rapidly emerged as a significant player in the global AI landscape, with a strategic focus on open-weight large language models (LLMs) that excel in agentic intelligence, complex reasoning, and real-world task execution. Backed by major investors such as Alibaba, Moonshot AI has positioned itself as a competitor to established Western AI labs, aiming to democratize access to powerful AI through open releases and cost-effective solutions. The latest iteration, Kimi K2, is the culmination of these efforts, combining a Mixture-of-Experts (MoE) architecture with a novel training methodology that has drawn international attention. The company’s mission extends beyond conversational AI: it aims to build autonomous agents capable of planning, using tools, and solving complex, multi-step problems.

The development of Kimi AI is underpinned by a commitment to both fundamental research and practical application. The team has introduced significant innovations in model optimization, such as the MuonClip optimizer, which kept training stable at a scale of 1 trillion parameters. This technical work is complemented by a market strategy built on open-weight model releases, which fosters a developer community and encourages adoption. By making its models accessible and affordable, Moonshot AI is challenging the dominance of proprietary systems and catalyzing innovation in AI development globally. The company’s rapid ascent and the capabilities of Kimi K2 signal a shift in the AI landscape: high-performance models are no longer the exclusive domain of a few large corporations but are increasingly available to a broader ecosystem of researchers, developers, and enterprises.

1.2 Key Findings on Kimi K2’s Performance and Market Position

Kimi K2 has demonstrated strong performance across a wide range of industry-standard benchmarks, often surpassing leading models from OpenAI, Anthropic, and Meta, particularly in domains requiring agentic capabilities, coding, and complex reasoning. Its Mixture-of-Experts (MoE) architecture, with 1 trillion total parameters and 32 billion activated parameters, provides a powerful yet efficient foundation. The model’s results on SWE-Bench (65.8%), LiveCodeBench (53.7%), and Humanity’s Last Exam (44.9% with tools) highlight its problem-solving and tool-use abilities. These results translate into practical advantages in real-world applications, from autonomous software engineering to data analysis and research assistance. The model’s ability to execute hundreds of sequential tool calls without human intervention marks a significant step toward truly autonomous AI agents.

From a market perspective, Kimi K2’s open-weight and cost-effective strategy positions it as a highly disruptive force. By offering a model that rivals the performance of closed-source competitors at a fraction of the cost, Moonshot AI is targeting a broad market segment that includes price-sensitive startups, academic researchers, and enterprises concerned with data sovereignty and customization. The company’s API pricing is estimated to be 30-40% lower than that of comparable models such as GPT-5, making advanced AI capabilities more accessible. This approach has already attracted attention, with some U.S. companies beginning to adopt Chinese AI models such as Kimi for their performance and cost advantages. The strategic backing from Alibaba further strengthens its market position, providing infrastructure and potential integration channels to scale deployment and challenge the existing market leaders.

1.3 Strategic Implications for the AI Search Landscape

The emergence of Kimi K2 has profound strategic implications for the AI search and assistant landscape, signaling a move towards more specialized, agentic, and open models. Unlike traditional search engines or general-purpose chatbots, Kimi K2 is designed to be an active agent that can interact with its environment, use tools, and complete complex tasks. This capability blurs the lines between information retrieval and task execution, setting a new standard for what users can expect from an AI assistant. Its success challenges the prevailing model of closed, proprietary systems and demonstrates that an open-source approach can foster rapid innovation and achieve state-of-the-art performance. This could accelerate a trend where developers and enterprises favor open models that they can customize, audit, and deploy on their own infrastructure, thereby gaining greater control over their data and applications.

Furthermore, Kimi K2’s strong performance in coding and reasoning tasks, combined with its long-context processing capabilities, makes it a formidable competitor in the enterprise market. It can serve as a powerful tool for software development, data analysis, and research, potentially displacing incumbent solutions that lack its level of integration and autonomy. The model’s ability to handle complex, multi-step workflows autonomously also opens up new possibilities for automating business processes and enhancing productivity. As the AI landscape continues to evolve, Kimi K2’s success is likely to inspire further investment in agentic AI and open-source development, leading to a more diverse and competitive ecosystem. The strategic decisions made by companies like Moonshot AI will shape the future of AI, pushing the boundaries of what is possible and making advanced AI capabilities more accessible and impactful across a wide range of industries and applications.

2. Technical Architecture of Kimi K2

2.1 Mixture-of-Experts (MoE) Model Design

The technical foundation of Kimi K2 is built upon a sophisticated Mixture-of-Experts (MoE) architecture, a design choice that enables the model to achieve a remarkable balance between immense scale and computational efficiency. This architecture is a significant departure from traditional dense models, where all parameters are active during every computation. Instead, the MoE model consists of a large number of “expert” sub-networks, and for any given input, only a small subset of these experts is activated. This sparse activation mechanism is the key to Kimi K2’s ability to handle a massive number of parameters without incurring the prohibitive computational costs associated with dense models of a similar size. The MoE design allows Kimi K2 to effectively leverage its vast knowledge base, which is distributed across its numerous experts, to provide highly specialized and accurate responses to a wide range of queries. This architectural choice is particularly well-suited for the diverse and complex tasks that Kimi AI is designed to handle, from intricate programming challenges to nuanced data analysis and creative content generation.

The implementation of the MoE architecture in Kimi K2 is further enhanced by a custom training framework that supports both data and model parallelism. This framework is crucial for efficiently training a model of this scale, as it allows for the distribution of the computational load across multiple processing units, significantly reducing the time required for training. The combination of the MoE architecture and this advanced training framework provides Kimi K2 with a powerful and scalable foundation, enabling it to continuously learn and adapt to new information and tasks. This architectural design not only contributes to the model’s impressive performance on various benchmarks but also ensures its long-term viability and potential for future enhancements. As the field of AI continues to evolve, the MoE architecture provides a flexible and efficient framework for developing even larger and more capable models, positioning Kimi AI at the forefront of this technological advancement.

2.1.1 Scale and Efficiency: 1 Trillion Total Parameters, 32 Billion Activated

Kimi K2 has a total of 1 trillion parameters, which places it among the largest AI models in existence. This capacity allows the model to represent knowledge across a wide range of domains, from natural language and programming languages to scientific concepts and cultural knowledge. Model size does not translate into a proportional increase in computational cost, however, thanks to the Mixture-of-Experts (MoE) architecture, which ensures that only a fraction of the total parameters is used during any given inference step. Specifically, Kimi K2 activates only 32 billion parameters for a given input, about 3.2% of its 1 trillion total. This sparse activation is the key to the model’s efficiency: Kimi K2 draws on the capacity of a massive model while keeping a per-token compute footprint comparable to that of a much smaller dense model.
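The ratio between total and activated parameters can be checked with a few lines of arithmetic. The sketch below uses only the two figures quoted in the text; the "2 FLOPs per active parameter per token" rule of thumb is an assumption introduced for illustration and ignores attention and other overheads.

```python
# Figures quoted in the text.
TOTAL_PARAMS = 1_000_000_000_000   # 1 trillion total parameters
ACTIVE_PARAMS = 32_000_000_000     # 32 billion activated per token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Rule of thumb (an assumption, not from the source): a forward pass costs
# about 2 FLOPs per active parameter per token.
FLOPS_PER_PARAM = 2
sparse_flops_per_token = FLOPS_PER_PARAM * ACTIVE_PARAMS
dense_flops_per_token = FLOPS_PER_PARAM * TOTAL_PARAMS
compute_ratio = dense_flops_per_token / sparse_flops_per_token

print(f"active fraction: {active_fraction:.1%}")
print(f"dense / sparse compute per token: {compute_ratio:.2f}x")
```

Under this simplification, a hypothetical dense model with the same total parameter count would need roughly 31 times the compute per token.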

This balance between scale and efficiency is a critical factor in the practical deployment of Kimi K2. It enables the model to be served at a reasonable cost, making its capabilities accessible to a broader audience. This is a significant advantage over dense models, in which per-token compute grows roughly in proportion to parameter count: a dense model with the same total size would need on the order of 30 times the compute per token. The MoE architecture therefore offers a scalable and efficient route to increasingly capable AI systems. The combination of a trillion-parameter scale and a 32-billion-parameter activation budget allows Kimi K2 to deliver state-of-the-art performance while remaining economically viable for a wide range of applications.

2.1.2 Dynamic Expert Activation for Task-Specific Processing

The core innovation of the Mixture-of-Experts (MoE) architecture in Kimi K2 lies in its dynamic expert activation mechanism. This system intelligently routes each input to a select group of specialized “expert” sub-networks within the model, ensuring that the most relevant knowledge and computational resources are applied to the task at hand. This process is not static; it is a dynamic and adaptive system that learns to identify which experts are best suited for a particular type of input, whether it’s a complex mathematical problem, a piece of code, or a natural language query. This dynamic routing is managed by a “gating network,” which analyzes the input and determines the optimal combination of experts to activate. This allows Kimi K2 to effectively leverage its vast and diverse knowledge base, which is distributed across its numerous experts, to provide highly accurate and contextually relevant responses.

The benefits of this dynamic activation are twofold. First, it significantly improves the model’s efficiency by avoiding the need to process every input through the entire network of 1 trillion parameters. By activating only a small subset of experts, Kimi K2 can deliver its powerful capabilities at a fraction of the computational cost of a dense model of a similar size. Second, it enhances the model’s performance by allowing for specialization. Each expert can be trained to excel in a specific domain or task, and the dynamic activation mechanism ensures that the most appropriate experts are always engaged. This specialization leads to a higher degree of accuracy and a deeper understanding of the nuances of different types of information. The dynamic expert activation mechanism is, therefore, a cornerstone of Kimi K2’s design, enabling it to achieve a remarkable balance of power, efficiency, and precision.
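The routing idea described above can be sketched as top-k gating. This is a minimal illustration, not Kimi K2’s actual router: the function names, the softmax-over-selected-experts normalization, the toy experts, and the gate scores are all assumptions made for the example.

```python
import math

def route(gate_logits, k):
    """Pick the k highest-scoring experts and normalise their weights.

    gate_logits: one score per expert for a single token.
    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected experts only (subtract the max for stability).
    m = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, gate_logits, experts, k):
    """Weighted sum of the outputs of the selected experts for input x."""
    return sum(w * experts[i](x) for i, w in route(gate_logits, k))

# Toy usage: four hypothetical "experts" that are just scalar functions.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_logits = [0.1, 2.0, 1.5, -1.0]  # would come from a learned gating network
selected = route(gate_logits, k=2)
output = moe_forward(3.0, gate_logits, experts, k=2)
```

Only the two selected experts are evaluated for this input; the other two contribute no compute, which is the source of the efficiency gain described in the text.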

2.2 Core Innovations in Model Training

The development of Kimi K2 was not just about scaling up the model architecture; it also involved innovations in the training process that were crucial for performance and stability. One of the most significant is the MuonClip optimizer, developed by the Moonshot AI team. It is an enhanced version of the Muon optimizer, which is known for its efficiency in training large-scale models. The key improvement in MuonClip is a technique called QK-clip, which is designed to address the training instability that often arises in large-scale models. Such instability, typically manifested as “loss spikes,” is a major challenge in developing large language models: it can degrade model performance and even cause training to fail. QK-clip mitigates the problem and allowed the model to be trained on 15.5 trillion tokens without any loss spikes.

The stability provided by the MuonClip optimizer was a critical factor in the successful training of Kimi K2. It allowed the research team to push the limits of model scale and data size without being hampered by training instabilities. This, in turn, enabled the model to learn a richer representation of language and knowledge, which is reflected in its performance on a wide range of benchmarks. By addressing the fundamental challenge of training stability, MuonClip has not only enabled the development of Kimi K2 but has also given the broader AI research community a useful tool. The QK-clip technique and its implementation in MuonClip are described in the technical report on Kimi K2, which offers insight into the techniques used to train models at this scale.

2.2.1 The MuonClip Optimizer for Enhanced Training Stability

The MuonClip optimizer is a cornerstone of the Kimi K2 training process, representing a significant advancement in the field of large-scale model optimization. It is an evolution of the Muon optimizer, which has gained recognition for its effectiveness in training deep neural networks. The primary innovation of MuonClip lies in its ability to maintain training stability even at the massive scale of Kimi K2. This is a critical achievement, as training instability is a common and often debilitating problem in the development of large language models. Instabilities can manifest as sudden spikes in the loss function, which can derail the training process and lead to suboptimal model performance. The Moonshot AI team identified this challenge and developed the MuonClip optimizer as a solution, enabling them to train Kimi K2 on a dataset of 15.5 trillion tokens without encountering a single loss spike. This level of stability is a testament to the robustness of the optimizer and a key factor in the model’s success.

The enhanced stability of the MuonClip optimizer is not just a technical achievement; it has profound practical implications for the development of large-scale AI models. By mitigating the risk of training instabilities, the optimizer allows researchers to explore larger and more complex model architectures with greater confidence. It also reduces the need for extensive hyperparameter tuning and manual intervention during the training process, which can be both time-consuming and resource-intensive. The ability to train a model as large as Kimi K2 without any loss spikes is a significant step forward in the field of AI, and it opens up new possibilities for the development of even more powerful and capable models in the future. The MuonClip optimizer is a key example of the kind of foundational research that is needed to drive progress in AI, and its development is a major contribution of the Moonshot AI team to the broader research community. The details of the optimizer’s design and implementation are a valuable resource for anyone interested in the technical challenges of training large-scale AI models.

2.2.2 QK-Clip Technology to Mitigate Training Instabilities

At the heart of the MuonClip optimizer is QK-clip, a technique designed to address training instabilities in large-scale models. The “QK” refers to the query and key projections in the attention mechanism of the transformer architecture, which is the foundation of most modern language models. Attention allows the model to weigh the importance of different parts of the input sequence when making predictions. In very large models, however, the attention logits (the scaled dot products of queries and keys) can grow very large during training, which destabilizes the softmax and can cause loss spikes. As described in the Kimi K2 technical report, QK-clip addresses this by rescaling the query and key projection weights after an optimizer update whenever the largest attention logit observed for a head exceeds a chosen threshold, which keeps the logits within a bounded range. The constraint is applied to the weights rather than by clamping the scores in the forward pass.

The QK-clip technique is central to MuonClip’s ability to keep training stable. By preventing numerical instabilities in the attention mechanism, it makes the training process more robust and reliable. This is particularly important for a model like Kimi K2, which has a massive number of parameters and is trained on a very large dataset. The fact that the model was trained without any loss spikes is a strong indication of the technique’s effectiveness. It offers a practical solution to a long-standing problem in training large-scale models, and it has the potential to benefit the wider AI research community as well as Moonshot AI.
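One way to keep attention logits bounded, following the public description of QK-clip, is to rescale a head’s query and key weights whenever its largest observed logit exceeds a threshold. The sketch below is a simplified, single-head illustration in plain Python; the helper names, the toy matrices, and the threshold value `tau` are assumptions, and the version used in Kimi K2 includes details (such as per-head handling inside MLA) that are not modeled here.

```python
import math

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def max_attention_logit(x, w_q, w_k):
    """Largest scaled dot product q.k / sqrt(d_head) over all token pairs."""
    q, k = matmul(x, w_q), matmul(x, w_k)
    d_head = len(w_q[0])
    return max(sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_head)
               for qi in q for kj in k)

def qk_clip(w_q, w_k, s_max, tau):
    """Rescale one head's query/key weights so its max logit is at most tau.

    With gamma = tau / s_max, each matrix is scaled by sqrt(gamma), so every
    logit (a product of one query and one key) is scaled by gamma.
    """
    if s_max <= tau:
        return w_q, w_k  # already within bounds: leave the weights untouched
    scale = math.sqrt(tau / s_max)
    return ([[v * scale for v in row] for row in w_q],
            [[v * scale for v in row] for row in w_k])

# Toy head: 3 tokens, d_model = 2, d_head = 2. All values are made up.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q = [[4.0, 0.0], [0.0, 4.0]]
w_k = [[5.0, 0.0], [0.0, 5.0]]
tau = 10.0  # hypothetical threshold

before = max_attention_logit(x, w_q, w_k)
w_q_clipped, w_k_clipped = qk_clip(w_q, w_k, before, tau)
after = max_attention_logit(x, w_q_clipped, w_k_clipped)
```

Splitting the correction evenly between the two matrices (a square root on each) is one natural choice; heads whose logits stay below the threshold are not modified at all.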

2.3 Advanced Attention Mechanisms

Kimi K2’s ability to handle long-context windows and perform complex reasoning tasks owes much to its attention design. The model employs Multi-head Latent Attention (MLA), which is designed to improve inference efficiency and make long sequences practical to process. This mechanism allows Kimi K2 to maintain a high level of performance with context windows of up to 256,000 tokens, beyond the range of many other language models. MLA works by projecting the keys and values for each token into a compact latent vector; that vector is what is cached during generation, and per-head keys and values are reconstructed from it when attention is computed. Because the cache grows with a small latent dimension rather than with the full set of per-head keys and values, memory use and memory traffic at long context lengths drop substantially.

The use of advanced attention mechanisms is a key differentiator for Kimi K2, as it allows the model to excel in a wide range of tasks that require a deep understanding of long and complex texts. For example, the model can be used to analyze entire books, summarize lengthy documents, or engage in extended conversations without losing track of the context. This is a significant advantage over models that are limited to shorter context windows, as it allows for a more natural and intuitive interaction with the AI. The development of advanced attention mechanisms like MLA is a testament to Moonshot AI’s commitment to pushing the boundaries of AI research and a key factor in the success of Kimi K2. As the demand for AI systems that can handle long and complex texts continues to grow, these advanced attention mechanisms are likely to become increasingly important in the development of next-generation AI models.

2.3.1 Multi-head Latent Attention (MLA) for Inference Efficiency

Multi-head Latent Attention (MLA) is a key component of Kimi K2’s architecture, designed to improve inference efficiency and enable long-context processing. Standard multi-head attention is computationally expensive and memory-intensive on long sequences, in large part because the keys and values of every previous token must be cached for every head and layer. MLA addresses this by compressing each token’s keys and values into a low-dimensional “latent” vector and caching only that vector; per-head keys and values are recovered from it through learned up-projections. The compression is a learned low-rank projection applied to every token, so it reduces the memory cost of attention without dropping any tokens from the context.

The latent representation is the central idea of MLA. In long-context settings the key-value cache, rather than the model weights, often becomes the limiting factor for memory and throughput, so shrinking the cached state per token directly raises the context length and batch size that a given deployment can serve. This allows Kimi K2 to maintain a high level of performance with context windows of up to 256,000 tokens, which is a key differentiator for the model. The adoption of MLA reflects Moonshot AI’s focus on inference efficiency and is an important factor in Kimi K2’s success.
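The effect of caching a compressed latent vector instead of per-head keys and values can be illustrated with back-of-the-envelope arithmetic. The layer count, head count, head dimension, and latent dimension below are hypothetical values chosen to make the numbers concrete; they are not Kimi K2’s published configuration, and the sketch ignores any extra per-token state a real implementation may keep (for example for positional encoding).

```python
def kv_cache_bytes_mha(n_layers, n_heads, d_head, seq_len, bytes_per_value=2):
    """Standard multi-head attention: cache a key and a value per head."""
    return 2 * n_layers * n_heads * d_head * seq_len * bytes_per_value

def kv_cache_bytes_latent(n_layers, d_latent, seq_len, bytes_per_value=2):
    """Latent caching: one shared compressed vector per token per layer."""
    return n_layers * d_latent * seq_len * bytes_per_value

# Hypothetical configuration, chosen only to make the arithmetic concrete.
cfg = dict(n_layers=60, n_heads=64, d_head=128)
seq_len = 256_000   # context length quoted in the text
d_latent = 512      # assumed latent width

full = kv_cache_bytes_mha(seq_len=seq_len, **cfg)
latent = kv_cache_bytes_latent(cfg["n_layers"], d_latent, seq_len)

print(f"per-head K/V cache: {full / 1e9:.1f} GB")
print(f"latent cache:       {latent / 1e9:.1f} GB")
print(f"reduction:          {full / latent:.0f}x")
```

The reduction factor depends only on the ratio `2 * n_heads * d_head / d_latent`, which is why a narrow latent vector pays off most at long context lengths where the cache dominates memory.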

2.3.2 Handling Long-Context Windows (128K+ Tokens)

Kimi K2’s ability to handle long-context windows of up to 256,000 tokens is one of its most notable features, and it is made possible by a combination of its architecture and training techniques. The Mixture-of-Experts (MoE) design contributes by keeping per-token compute low, since only a subset of experts runs for each token, which makes very long inputs more affordable to process than they would be in a dense model of comparable total size. In addition, the Multi-head Latent Attention (MLA) mechanism is designed to handle long sequences efficiently by caching a compressed representation of each token’s keys and values.

The ability to handle long-context windows has a number of important implications for the use of Kimi K2. It allows the model to be used for a wide range of tasks that were previously difficult or impossible for AI models to perform, such as analyzing entire books, summarizing lengthy legal documents, or engaging in extended conversations without losing track of the context. This is a significant step forward in the development of AI systems that can understand and interact with the world in a more human-like way. The development of Kimi K2’s long-context capabilities is a testament to Moonshot AI’s commitment to pushing the boundaries of AI research and a key factor in the model’s success. As the demand for AI systems that can handle long and complex texts continues to grow, these advanced attention mechanisms are likely to become increasingly important in the development of next-generation AI models.

3. Core Algorithms and Implementation

3.1 Multi-Stage Training Pipeline

The development of Kimi K2’s advanced capabilities is the result of a meticulously designed multi-stage training pipeline that combines large-scale unsupervised learning with targeted fine-tuning and reinforcement learning. This comprehensive approach ensures that the model not only possesses a broad and deep understanding of language and knowledge but also excels at specific, complex tasks that require reasoning, tool use, and adherence to human preferences. The training process is divided into two main phases: a pre-training stage, where the model learns from a massive and diverse dataset, and a post-training stage, where its capabilities are refined and specialized through a combination of supervised fine-tuning, reinforcement learning from human feedback (RLHF), and training for agentic behaviors. This multi-faceted approach allows Kimi K2 to achieve a high level of performance across a wide range of benchmarks and real-world applications, making it a versatile and powerful AI assistant.

The pre-training stage is the foundation of Kimi K2’s knowledge and capabilities. During this phase, the model is exposed to an enormous corpus of text and code, allowing it to learn the statistical patterns, grammatical structures, and semantic relationships that underpin human language and programming. This unsupervised learning process provides the model with a rich and nuanced understanding of the world, which serves as the basis for its more specialized skills. The post-training stage then builds upon this foundation, using a variety of techniques to refine the model’s performance and align it with human values and preferences. This includes supervised fine-tuning on high-quality, task-specific datasets, as well as reinforcement learning from human feedback, which helps the model to generate more helpful, harmless, and honest responses. The final stage of the training pipeline focuses on developing the model’s agentic capabilities, teaching it how to use external tools, browse the web, and perform complex, multi-step tasks. This comprehensive and multi-stage training process is the key to Kimi K2’s impressive performance and its ability to function as a truly agentic AI.

3.1.1 Pre-training on a Massive Dataset (15.5 Trillion Tokens)

The pre-training stage of Kimi K2 is a monumental undertaking, involving a very large dataset of 15.5 trillion tokens. This corpus of text and code serves as the raw material from which the model learns the fundamental principles of language, reasoning, and knowledge. The dataset is curated to be diverse and representative, encompassing scientific literature, technical documentation, literary works, and open-source code repositories. This diversity is crucial for ensuring that the model develops a broad and well-rounded understanding of the world, rather than being limited to a narrow set of topics. The scale of the dataset allows Kimi K2 to capture subtle nuances and complex patterns in language that would be missed with less data, resulting in a more capable AI assistant.

The pre-training process itself is a complex and computationally intensive task, requiring a training framework that can efficiently handle the massive dataset and the model’s 1 trillion parameters. Moonshot AI has developed a custom training framework that leverages both data and model parallelism to distribute the computational load across a large cluster of GPUs, significantly accelerating the training process. The MuonClip optimizer further ensures the stability and efficiency of training, allowing the model to converge to a high-quality solution in a reasonable amount of time. The result of this extensive pre-training is a model with a deep and comprehensive understanding of language and knowledge, which serves as the foundation for its more specialized capabilities. This massive-scale pre-training is a key differentiator for Kimi K2, giving it an advantage in breadth of knowledge and in its ability to handle a wide range of complex tasks.

3.1.2 Post-training with Reinforcement Learning (RL) and Human Feedback (RLHF)

Following the extensive pre-training phase, Kimi K2 undergoes a rigorous post-training process designed to refine its capabilities and align its behavior with human preferences. A key component of this phase is Reinforcement Learning from Human Feedback (RLHF), a sophisticated technique that uses human evaluations to guide the model’s learning process. In this stage, human annotators are presented with multiple responses generated by the model for a given prompt and are asked to rank them based on criteria such as helpfulness, accuracy, and safety. This feedback is then used to train a reward model, which in turn is used to fine-tune the language model, encouraging it to generate responses that are more aligned with human values. This iterative process of human evaluation and model fine-tuning is crucial for ensuring that Kimi K2 is not only a powerful AI but also a safe and reliable one.
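The step from human rankings to a reward model is commonly implemented with a pairwise preference loss. The sketch below shows that general RLHF recipe, not Moonshot AI’s specific training code; the function names and the example scores are assumptions made for illustration.

```python
import math

def pairwise_preference_loss(r_preferred, r_rejected):
    """-log(sigmoid(r_preferred - r_rejected)), computed stably."""
    margin = r_preferred - r_rejected
    # softplus(-margin) = log(1 + exp(-margin)), arranged to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return [(ranked_responses[i], ranked_responses[j])
            for i in range(len(ranked_responses))
            for j in range(i + 1, len(ranked_responses))]

# Hypothetical reward-model scores for three responses ranked A > B > C.
scores = {"A": 1.8, "B": 0.4, "C": -0.9}
pairs = ranking_to_pairs(["A", "B", "C"])
mean_loss = sum(pairwise_preference_loss(scores[p], scores[r])
                for p, r in pairs) / len(pairs)
```

The loss is small when the reward model already scores the preferred response higher and grows when the order is reversed, so minimizing it pushes the reward model toward the annotators’ rankings.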

In addition to RLHF, the post-training phase also includes a focus on developing the model’s agentic capabilities. This involves training the model to use external tools, such as search APIs, to gather information and perform complex tasks. By integrating with the web and other external resources, Kimi K2 can access up-to-date information and provide more comprehensive and accurate responses. This training for tool use is a critical step in transforming Kimi K2 from a passive information provider into a proactive agent that can execute tasks and solve problems on behalf of the user. The combination of RLHF and agentic training in the post-training phase is what gives Kimi K2 its unique blend of power, safety, and utility, making it a truly advanced and capable AI assistant.

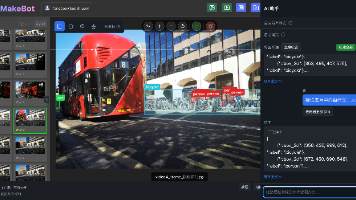

3.2 Agentic AI and Tool Integration

A defining feature of Kimi K2 is its “agentic” nature, which enables it to go beyond simple question-answering and actively perform tasks on behalf of the user. This capability is made possible through the model’s sophisticated integration with external tools and its ability to engage in multi-step reasoning. Unlike traditional AI models that are limited to the knowledge they were trained on, Kimi K2 can dynamically access and utilize information from the web and other external sources, allowing it to provide up-to-date and contextually relevant responses. This agentic behavior is not an innate property of the model but is the result of a specialized training process that teaches it how to use tools effectively and how to break down complex tasks into a series of manageable steps. This training is a key differentiator for Kimi K2, positioning it as a proactive and powerful assistant that can help users to be more productive and efficient.

The integration of external tools is a cornerstone of Kimi K2’s agentic capabilities. The model is trained to interact with a variety of APIs, including search engines, databases, and other software applications, allowing it to retrieve information, perform calculations, and execute commands. This ability to seamlessly integrate with the digital ecosystem is what enables Kimi K2 to perform a wide range of tasks, from booking a flight to analyzing a dataset to debugging a piece of code. The model’s training for tool use involves a combination of supervised learning, where it is shown examples of how to use different tools, and reinforcement learning, where it is rewarded for successfully completing tasks using the available tools. This comprehensive training approach ensures that Kimi K2 is not only proficient in using individual tools but also capable of orchestrating them in a coordinated manner to achieve complex goals.
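As a rough illustration of what interacting with tools involves on the application side, the sketch below dispatches a JSON tool call emitted by a model to a registered function. The tool names, the JSON shape, and the `dispatch` helper are all assumptions made for this example, not Moonshot AI’s actual interface.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools; a real deployment would wrap search APIs, databases, etc.
def add(a: float, b: float) -> float:
    return a + b


def lookup_capital(country: str) -> str:
    capitals = {"France": "Paris", "Japan": "Tokyo"}
    return capitals.get(country, "unknown")


TOOLS: Dict[str, Callable[..., Any]] = {
    "add": add,
    "lookup_capital": lookup_capital,
}


def dispatch(tool_call_json: str) -> Dict[str, Any]:
    """Parse a tool call of the form {"name": ..., "arguments": {...}} and run it."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        result = TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:
        # Wrong or missing arguments are reported back instead of crashing.
        return {"ok": False, "error": str(exc)}
    return {"ok": True, "result": result}


print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
print(dispatch('{"name": "lookup_capital", "arguments": {"country": "Japan"}}'))
```

Returning errors as structured results lets the model see what went wrong and retry, which is one plausible way to support the coordinated, multi-tool behavior described above.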

3.2.1 Synthesizing Data for Agentic Capabilities

A cornerstone of Kimi K2’s advanced agentic capabilities is the use of a large-scale agentic data synthesis pipeline during its post-training phase. This pipeline is designed to generate a vast and diverse set of training examples that are specifically tailored to teach the model how to act as an autonomous agent. The process involves creating complex, multi-step tasks that require the model to use a variety of tools, such as web search, code execution, and database queries, to achieve a specific goal. By training on this synthetic data, the model learns how to break down a high-level task into a series of smaller, more manageable sub-tasks, and how to orchestrate the use of different tools to complete each sub-task. This is a significant departure from traditional language models, which are primarily trained on passive text data and are not equipped to handle interactive and dynamic tasks.

The agentic data synthesis pipeline is a key innovation that sets Kimi K2 apart from other language models. It exposes the model to the core elements of agentic behavior, such as planning, reasoning, and tool use. The pipeline is also designed to be scalable, allowing for the generation of a large number of training examples with minimal manual intervention. This is crucial for training a model as large and complex as Kimi K2, as it ensures that the model is exposed to a wide variety of agentic scenarios during the training process. The open-weight release of the model gives the research community an opportunity to study the behavior this pipeline produces and to develop new methods for training agentic AI systems.
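A toy version of such a pipeline can make the idea concrete. The sketch below enumerates task specifications by combining goal templates, topics, and tool subsets; the templates, topics, and the `synthesize_tasks` function are invented for illustration, and a production pipeline would add many further stages (for example simulated environments and quality filtering) that are not described in the source.

```python
import itertools
import random

# Tool names follow the examples given in the text; everything else is hypothetical.
TOOLS = ["web_search", "code_execution", "database_query"]
GOAL_TEMPLATES = [
    "Summarize recent findings about {topic}",
    "Compute and report statistics for {topic}",
]
TOPICS = ["renewable energy", "sales data"]


def synthesize_tasks(tools, templates, topics, max_tools=2, seed=0):
    """Generate task specs pairing each goal with every tool subset up to max_tools."""
    rng = random.Random(seed)
    tasks = []
    for template, topic in itertools.product(templates, topics):
        for k in range(1, max_tools + 1):
            for combo in itertools.combinations(tools, k):
                tasks.append(
                    {
                        "goal": template.format(topic=topic),
                        "required_tools": list(combo),
                    }
                )
    rng.shuffle(tasks)  # Shuffle so training batches mix goals and tools.
    return tasks


tasks = synthesize_tasks(TOOLS, GOAL_TEMPLATES, TOPICS)
print(len(tasks), tasks[0])
```

Because the task list is generated combinatorially, adding one more tool or template multiplies the number of scenarios without any manual authoring, which is the scalability property the text emphasizes.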

3.2.2 Training for Real-Time Web Search and Tool Use

A critical aspect of Kimi K2’s agentic training is its ability to perform real-time web search and effectively utilize the information it retrieves. This capability is essential for providing users with accurate and up-to-date information, as the model’s pre-trained knowledge has a fixed cutoff date. By integrating with search APIs, Kimi K2 can access the latest news, research, and data from the web, ensuring that its responses are always current and relevant. The training for this capability involves teaching the model how to formulate effective search queries, how to evaluate the credibility of different sources, and how to synthesize information from multiple sources into a coherent and comprehensive answer. This is a complex task that requires a deep understanding of natural language, as well as the ability to reason about the quality and relevance of different pieces of information.

The training process for web search and tool use is a multi-faceted one, involving a combination of different techniques. The model is first trained on a large dataset of examples that demonstrate how to use search APIs and other tools to complete specific tasks. This supervised learning phase provides the model with a foundational understanding of how to interact with the digital world. This is followed by a reinforcement learning phase, where the model is given a set of goals and is rewarded for successfully achieving them using the available tools. This allows the model to learn through trial and error, developing a more robust and flexible understanding of how to use tools in a variety of contexts. The result of this extensive training is a model that is not only proficient at retrieving information from the web but also capable of using that information to perform complex, multi-step tasks, making it a truly powerful and versatile AI assistant.
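The trial-and-error element of the reinforcement learning phase can be illustrated with a deliberately simplified sketch. It treats tool choice as a multi-armed bandit with an epsilon-greedy policy and a success-or-failure reward; this is a stand-in chosen for brevity, not the algorithm used to train Kimi K2, and the tool names and success rates are hypothetical.

```python
import random


def learn_tool_values(success_rate, episodes=100, epsilon=0.1, seed=0):
    """Estimate how often each tool completes the task, learning from rewards only."""
    rng = random.Random(seed)
    tools = list(success_rate)
    value = {tool: 0.0 for tool in tools}
    pulls = {tool: 0 for tool in tools}

    def attempt(tool):
        # Reward is 1.0 when the simulated task succeeds with this tool, else 0.0.
        reward = 1.0 if rng.random() < success_rate[tool] else 0.0
        pulls[tool] += 1
        value[tool] += (reward - value[tool]) / pulls[tool]  # running mean

    for tool in tools:  # try every tool once before exploiting
        attempt(tool)
    for _ in range(episodes):
        if rng.random() < epsilon:
            tool = rng.choice(tools)  # explore
        else:
            tool = max(tools, key=value.get)  # exploit the best estimate so far
        attempt(tool)
    return value


# Hypothetical task where only web search can succeed.
print(learn_tool_values({"web_search": 1.0, "calculator": 0.0}))
```

The policy is never told which tool is correct; it converges on the useful one purely from the reward signal, which is the core idea behind rewarding successful task completion.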

3.3 Memory and Context Management

A key challenge in developing advanced AI assistants is the ability to maintain context and remember information over long conversations and complex tasks. Kimi K2 addresses this challenge through a sophisticated memory and context management system that allows it to understand and respond to user queries in a more intelligent and personalized way. This system is designed to handle long-context windows, enabling the model to process and retain information from lengthy documents, extended conversations, and multi-step tasks. This long-context capability is a significant advantage over many other AI models, which are often limited to a much smaller context window, restricting their ability to handle complex and detailed information. By effectively managing context and memory, Kimi K2 can provide more coherent, relevant, and helpful responses, making it a more effective and reliable assistant.

The memory and context management system in Kimi K2 is built upon a combination of advanced architectural features and specialized training techniques. The model’s architecture is designed to efficiently process long sequences of text, allowing it to maintain a coherent understanding of the conversation or task at hand. This is complemented by a training process that emphasizes the importance of long-term context, teaching the model to identify and retain key pieces of information over time. The result is a model that can not only remember what was said earlier in a conversation but also use that information to inform its future responses. This ability to maintain and utilize long-term context is a critical component of Kimi K2’s agentic capabilities, as it allows the model to perform complex, multi-step tasks that require a deep understanding of the user’s goals and preferences.

3.3.1 Episodic Memory for Long-Term Context Understanding

A key innovation in Kimi K2’s memory system is the use of episodic memory, a mechanism that allows the model to store and retrieve information from past interactions in a structured and efficient manner. This is analogous to the way humans remember past events and experiences, allowing the model to build a coherent and evolving understanding of the user’s needs and preferences over time. The episodic memory system is designed to be both scalable and efficient, allowing the model to handle a large volume of information without a significant increase in computational cost. This is achieved through a combination of advanced indexing and retrieval techniques, which allow the model to quickly and accurately access relevant information from its memory when needed.

The implementation of episodic memory in Kimi K2 is a significant step forward in the development of more human-like AI assistants. It allows the model to move beyond simple stateless interactions and engage in more meaningful and context-aware conversations. By remembering past interactions, the model can provide more personalized and relevant responses, making it a more effective and valuable assistant. The episodic memory system is also a key enabler of Kimi K2’s agentic capabilities, as it allows the model to maintain a long-term memory of the tasks it is performing and the information it has gathered. This is crucial for performing complex, multi-step tasks that require a deep understanding of the user’s goals and preferences. The development of episodic memory in Kimi K2 is a testament to Moonshot AI’s commitment to pushing the boundaries of AI research and a key factor in the model’s success.
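The source does not describe how this memory is implemented. As a purely illustrative sketch of indexing and retrieval, the class below stores past interactions and uses an inverted index over words to find the episodes that best match a query; the class name, scoring rule, and sample episodes are all assumptions.

```python
from collections import defaultdict


class EpisodicMemory:
    """Toy episodic store with keyword indexing (not Kimi K2's actual mechanism)."""

    def __init__(self):
        self.episodes = []
        self.index = defaultdict(set)  # word -> ids of episodes containing it

    def add(self, text: str) -> int:
        episode_id = len(self.episodes)
        self.episodes.append(text)
        for word in set(text.lower().split()):
            self.index[word].add(episode_id)
        return episode_id

    def retrieve(self, query: str, top_k: int = 2):
        """Return up to top_k episodes ranked by word overlap, newest first on ties."""
        scores = defaultdict(int)
        for word in set(query.lower().split()):
            for episode_id in self.index.get(word, ()):
                scores[episode_id] += 1
        ranked = sorted(scores, key=lambda i: (-scores[i], -i))
        return [self.episodes[i] for i in ranked[:top_k]]


memory = EpisodicMemory()
memory.add("user prefers python for data analysis")
memory.add("user is planning a trip to kyoto")
memory.add("user asked about solar panel costs")
print(memory.retrieve("python data analysis", top_k=1))
```

Because lookup goes through the index rather than scanning every stored episode, retrieval cost depends on the query terms instead of the total memory size, which mirrors the efficiency goal described above.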

3.3.2 Multi-turn Search and Reasoning Capabilities

Kimi K2’s advanced memory and context management system is a key enabler of its multi-turn search and reasoning capabilities. This allows the model to engage in complex, multi-step tasks that require a deep understanding of the user’s goals and the ability to reason about the information it has gathered. For example, a user could ask Kimi K2 to “research the latest trends in renewable energy and create a summary report.” In response, the model could perform a series of web searches, analyze the information it finds, and then synthesize that information into a coherent and well-structured report. This is a significant step forward from traditional AI assistants, which are often limited to single-turn interactions and are not capable of performing such complex, multi-step tasks.

The multi-turn search and reasoning capabilities of Kimi K2 are a direct result of its agentic training and its ability to maintain long-term context. The model is trained to break down complex tasks into a series of smaller, more manageable sub-tasks, and to use a variety of tools, such as web search and code execution, to complete each sub-task. The model’s long-context memory allows it to keep track of the information it has gathered and the progress it has made, ensuring that it can complete the task in a coherent and efficient manner. This combination of agentic training and long-term memory is what gives Kimi K2 its powerful multi-turn search and reasoning capabilities, making it a truly versatile and valuable AI assistant.
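The renewable energy example can be sketched as a short multi-turn loop in which later searches depend on what earlier ones returned and all findings are carried forward in a running context. The `stub_search` function and its placeholder results are invented stand-ins for a real search API.

```python
def stub_search(query):
    """Stand-in for a search API; returns placeholder findings only."""
    pages = {
        "renewable energy trends": ["solar", "offshore wind"],
        "solar latest developments": ["placeholder finding about solar"],
        "offshore wind latest developments": ["placeholder finding about offshore wind"],
    }
    return pages.get(query, [])


def research(topic, search):
    """Run an initial search, follow up on each result, then write a report."""
    context = []  # running memory of (query, findings) across turns

    first_query = f"{topic} trends"
    trends = search(first_query)
    context.append((first_query, trends))

    # Follow-up queries are derived from the first turn's results.
    for trend in trends:
        query = f"{trend} latest developments"
        context.append((query, search(query)))

    lines = [f"Summary report: {topic}"]
    for query, findings in context:
        lines.append(f"- {query}: {', '.join(findings) or 'no results'}")
    return "\n".join(lines)


print(research("renewable energy", stub_search))
```

The key point is that the second and third searches could not have been written in advance: they exist only because the accumulated context from the first turn was retained and used.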

4. Performance Evaluation and Benchmarks

4.1 Superior Performance in Coding and Reasoning

Kimi K2 has demonstrated exceptional performance in coding and reasoning tasks, as evidenced by its impressive scores on a variety of industry-standard benchmarks. This superior performance is a direct result of its sophisticated architecture, massive pre-training dataset, and specialized post-training for agentic tasks. The model’s ability to understand complex programming problems, generate correct and efficient code, and reason through multi-step logic problems sets it apart from many other language models. This makes Kimi K2 a valuable tool for developers, researchers, and anyone who needs to perform complex cognitive tasks. The model’s success on these benchmarks is a testament to the effectiveness of Moonshot AI’s approach to model development and a clear indication of the model’s potential to revolutionize a wide range of industries.

The model’s performance in coding and reasoning is not just a matter of academic interest; it has significant practical implications. For developers, Kimi K2 can be a powerful assistant, helping them to write code faster, debug more effectively, and learn new programming concepts. For researchers, the model can be a valuable tool for analyzing data, generating hypotheses, and writing research papers. For businesses, Kimi K2 can be used to automate a wide range of tasks, from customer service to data analysis, leading to increased productivity and efficiency. The model’s open-source nature also means that it can be integrated into a variety of applications and workflows, making it accessible to a wide range of users. The superior performance of Kimi K2 in coding and reasoning is a key factor in its potential to become a leading AI assistant in the years to come.

4.1.1 LiveCodeBench v6 and OJBench Results

On LiveCodeBench v6, a challenging benchmark for evaluating code generation capabilities, Kimi K2-Instruct achieved a score of 53.7%, significantly outperforming other leading models such as OpenAI’s GPT-4.1 (44.7%) and DeepSeek’s V3 model (46.9%). This result highlights Kimi K2’s ability to understand complex programming problems and generate correct and efficient code. The model’s performance on OJBench, another competitive programming benchmark, was also strong, with a score of 27.1%, further supporting its position as a top-tier coding assistant. These benchmarks are designed to test a model’s ability to handle a wide range of coding challenges, from simple algorithmic problems to more complex software engineering tasks, and Kimi K2’s results on both indicate its versatility in this domain.

The model’s success on these benchmarks can be attributed to its massive pre-training on a large corpus of code data, as well as its specialized post-training for agentic tasks. The pre-training provided the model with a deep understanding of programming languages and concepts, while the post-training taught it how to apply this knowledge to solve real-world problems. The model’s ability to use tools, such as a code interpreter, also plays a crucial role in its coding performance, as it allows the model to test and debug its own code, leading to more accurate and reliable results. The strong performance of Kimi K2 on coding benchmarks has significant implications for its practical applications, as it suggests that the model can be a valuable tool for software developers, helping them to write code faster, debug more effectively, and learn new programming concepts. The model’s open-source nature also means that it can be integrated into a variety of development environments and workflows, making it accessible to a wide range of users.

4.1.2 SWE-Bench Verified and Multilingual Performance

Kimi K2’s prowess in software engineering is further demonstrated by its outstanding performance on the SWE-Bench benchmarks, which are designed to evaluate a model’s ability to resolve real-world issues in code repositories. On the SWE-Bench Verified tests, which focus on a curated set of real-world GitHub issues, Kimi K2-Instruct achieved a remarkable 65.8% accuracy. This score significantly outperforms OpenAI’s GPT-4.1 (54.6%) and other prominent models, highlighting Kimi K2’s superior capabilities in understanding bug reports, generating code fixes, and passing software unit tests. The model’s ability to perform these tasks autonomously is a key aspect of its agentic intelligence and a major differentiator from other language models. The SWE-Bench results are a strong indicator of Kimi K2’s potential to be a powerful tool for software developers, helping them to automate the process of resolving code issues and improving the overall quality of their software.

In addition to its strong performance on English-language benchmarks, Kimi K2 also demonstrates impressive multilingual capabilities. The model was trained on a diverse dataset that includes a significant amount of text in Chinese, which gives it a distinct advantage in understanding and processing the Chinese language. This is a key part of Moonshot AI’s market positioning, as it allows the company to cater to the large and growing Chinese market. The model’s multilingual performance is a testament to the quality and diversity of its pre-training data, as well as the effectiveness of its training pipeline. The ability to perform well on both English and Chinese benchmarks is a significant advantage for Kimi K2, as it allows the model to be used in a wide range of global applications. This multilingual capability is a key factor in the model’s potential to become a leading AI assistant on the world stage.

4.2 Excellence in Mathematical and General Knowledge Tasks

Beyond its prowess in coding and reasoning, Kimi K2 has also demonstrated excellence in mathematical and general knowledge tasks. The model’s strong performance on a variety of benchmarks in these domains is a testament to its broad and deep understanding of the world, which is a result of its massive pre-training dataset and sophisticated architecture. The model’s ability to solve complex mathematical problems, answer challenging questions about a wide range of topics, and demonstrate a graduate-level understanding of various subjects sets it apart from many other language models. This makes Kimi K2 a valuable tool for students, researchers, and anyone who needs to access and process a large amount of information. The model’s excellence in these areas is a key factor in its potential to become a leading AI assistant for a wide range of educational and professional applications.

The model’s performance in mathematical and general knowledge tasks is not just a matter of memorizing facts; it is a reflection of its ability to reason, understand, and apply its knowledge in a flexible and intelligent way. The model’s training on a diverse dataset that includes a large amount of scientific and academic literature has given it a strong foundation in these areas. The model’s post-training, which includes reinforcement learning from human feedback, has further refined its ability to provide accurate and helpful responses to a wide range of questions. The combination of a strong foundation and targeted fine-tuning is what gives Kimi K2 its excellence in mathematical and general knowledge tasks. This is a key differentiator for the model and a major contributor to its overall success.

4.2.1 AIME 2025 and GPQA-Diamond Scores

Kimi K2’s mathematical reasoning capabilities are particularly impressive, as demonstrated by its performance on the AIME 2025 benchmark, a challenging math competition. The model achieved a score of 49.5%, significantly outperforming OpenAI’s GPT-4.1, which scored 37.0%. This result highlights Kimi K2’s ability to solve complex mathematical problems that require a deep understanding of mathematical concepts and a high degree of logical reasoning. The model’s performance on the AIME 2025 is a clear indication of its strong foundation in STEM fields and its potential to be a valuable tool for students and researchers in these areas. The model’s ability to excel in such a challenging benchmark is a testament to the effectiveness of its training pipeline and the sophistication of its architecture.

In addition to its strong performance on the AIME 2025, Kimi K2 also excels in general knowledge tasks, as evidenced by its score on the GPQA-Diamond benchmark. This benchmark assesses a model’s graduate-level question-answering abilities across a range of subjects, including physics, chemistry, and biology. Kimi K2 achieved a score of 75.1% on this benchmark, surpassing GPT-4.1’s score of 66.3%. This result demonstrates the model’s broad and deep understanding of a wide range of academic subjects and its ability to apply that knowledge to answer complex and challenging questions. The model’s performance on the GPQA-Diamond benchmark is a strong indicator of its potential to be a powerful tool for research and education, as it can provide accurate and insightful answers to a wide range of questions. The combination of strong mathematical reasoning and broad general knowledge makes Kimi K2 a truly versatile and capable AI assistant.

4.2.2 Humanity’s Last Exam (HLE) Performance

Kimi K2’s advanced reasoning capabilities are further showcased by its performance on Humanity’s Last Exam (HLE), a challenging benchmark designed to test a model’s ability to perform complex, multi-step reasoning tasks. On this benchmark, Kimi K2 achieved a state-of-the-art score of 44.9% with tools, outperforming OpenAI’s GPT-5, which scored 41.7%. This result is particularly noteworthy as it demonstrates the model’s ability to effectively use external tools, such as web search and code execution, to solve complex problems. The HLE benchmark is designed to be a “last exam” for AI models, testing their ability to reason, plan, and act in a way that is comparable to human intelligence. Kimi K2’s strong performance on this benchmark is a clear indication of its advanced agentic capabilities and its potential to be a powerful tool for a wide range of complex tasks.

The model’s performance on the HLE benchmark is a testament to the effectiveness of its agentic training and its ability to integrate with external tools. The model is trained to break down complex problems into a series of smaller, more manageable sub-tasks, and to use a variety of tools to complete each sub-task. The model’s ability to perform an average of 23 reasoning steps and explore over 200 URLs per task on the HLE benchmark is a clear demonstration of its capacity for deep, structured reasoning and long-horizon problem-solving. This is a key differentiator from simpler, single-shot AI models and a major contributor to Kimi K2’s success. The model’s performance on the HLE benchmark is a strong indicator of its potential to be a leading AI assistant in the years to come.

4.3 Comparative Analysis with Leading Models

Kimi K2’s performance on a wide range of benchmarks has been compared to that of other leading AI models, including OpenAI’s GPT series and Anthropic’s Claude. These comparisons have consistently shown that Kimi K2 is a highly competitive model, often outperforming its rivals in key areas such as coding, reasoning, and agentic tasks. The model’s superior performance is a result of its innovative architecture, massive pre-training dataset, and specialized post-training for agentic behaviors. This makes Kimi K2 a compelling alternative to other leading models, particularly for users who require a high degree of performance in these areas. The model’s open-source nature and competitive pricing further enhance its appeal, making it an attractive option for a wide range of users, from individual developers to large enterprises.

The comparative analysis of Kimi K2 and other leading models is not just a matter of academic interest; it has significant practical implications for the AI market. The success of Kimi K2 in outperforming established models from well-funded Western AI labs is a clear indication of the growing competitiveness of the global AI landscape. It demonstrates that it is possible to develop state-of-the-art AI models without the massive resources of a large tech company, and it highlights the importance of innovation and a focus on practical applications. The comparative analysis also provides valuable insights for users who are looking to choose the best AI model for their specific needs. By understanding the strengths and weaknesses of different models, users can make more informed decisions and select the model that is best suited to their particular use case.

4.3.1 Outperforming GPT-4.1 and GPT-4o on Key Benchmarks

Kimi K2 has consistently demonstrated its ability to outperform leading models from OpenAI, including GPT-4.1 and GPT-4o, on a variety of key benchmarks. In the domain of coding, Kimi K2 achieved a score of 53.7% on the LiveCodeBench v6, significantly higher than GPT-4.1’s 44.7%. Similarly, on the SWE-Bench Verified, a benchmark for real-world software engineering tasks, Kimi K2 scored 65.8%, compared with GPT-4.1’s 54.6%. These results highlight Kimi K2’s superior capabilities in code generation, debugging, and autonomous problem-solving. In mathematical reasoning, Kimi K2 also excels, scoring 49.5% on the AIME 2025, compared to GPT-4.1’s 37.0%. These achievements underscore the model’s strong foundation in STEM fields and its ability to tackle complex quantitative challenges.

The model’s performance on these benchmarks is a clear indication of its advanced cognitive abilities and its potential to become a preferred tool for developers, researchers, and professionals who require high-precision AI assistance. The model’s strength in these areas is a direct result of its sophisticated training pipeline, which includes pre-training on a massive dataset of 15.5 trillion tokens and a post-training phase that incorporates reinforcement learning from human feedback (RLHF) to refine its performance on specific tasks. The fact that Kimi K2, an open-weight model, can outperform proprietary models like GPT-4.1 is a significant development in the AI landscape, as it challenges the notion that only closed-source models can reach the pinnacle of AI performance.
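For a quick side-by-side view, the sketch below computes the percentage-point gap between Kimi K2 and GPT-4.1 using only scores quoted in Section 4 of this report (with the GPT-4.1 SWE-Bench Verified figure of 54.6% taken from Section 4.1.2). The dictionary layout and function name are illustrative choices.

```python
# (Kimi K2 score, GPT-4.1 score) in percent, as quoted in Section 4.
SCORES = {
    "LiveCodeBench v6": (53.7, 44.7),
    "SWE-Bench Verified": (65.8, 54.6),
    "AIME 2025": (49.5, 37.0),
    "GPQA-Diamond": (75.1, 66.3),
}


def margins(scores):
    """Return Kimi K2's lead over GPT-4.1 in percentage points, per benchmark."""
    return {name: round(k2 - gpt, 1) for name, (k2, gpt) in scores.items()}


for name, gap in margins(SCORES).items():
    print(f"{name}: +{gap} points")
```

On these figures the lead ranges from 8.8 points (GPQA-Diamond) to 12.5 points (AIME 2025).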

4.3.2 Competitive Edge in Agentic and Tool-Use Scenarios

Kimi K2’s most significant competitive edge lies in its agentic and tool-use capabilities, which are a key differentiator from many other leading AI models. The model’s ability to perform complex, multi-step tasks by integrating with external tools and information sources sets it apart from traditional, passive information retrieval systems. This is clearly demonstrated by its performance on benchmarks such as Tau2-Bench (66.1%) and SWE-Bench Verified (65.8%), which evaluate a model’s ability to use tools and interact with software environments. The model’s ability to execute hundreds of sequential tool calls without human intervention is a significant step forward in the development of truly autonomous AI agents. This capability is a direct result of the model’s specialized post-training, which includes a large-scale agentic data synthesis pipeline and reinforcement learning from human feedback.

The competitive edge that Kimi K2 has in agentic and tool-use scenarios has significant implications for the future of AI. As the industry moves beyond simple question-answering towards more complex, autonomous systems that can perform multi-step tasks, Kimi K2’s capabilities become increasingly critical. The model’s ability to seamlessly integrate with external tools, perform real-time web searches, and execute code makes it a powerful engine for building sophisticated AI agents for a wide range of industries, from finance and healthcare to software development and scientific research. The open-source nature of Kimi K2 further enhances its appeal in this area, as it allows developers to build upon and customize the model for their specific needs. This combination of superior performance, agentic capabilities, and an open-source strategy positions Kimi K2 as a key player in the evolving AI landscape.

5. Market Potential and Strategic Positioning

5.1 Open-Weight and Open-Source Strategy

Moonshot AI’s decision to release Kimi K2 as an open-weight model under a permissive license is a key part of its market strategy and a major differentiator from many of its competitors. This open-source approach has a number of significant advantages, both for the company and for the broader AI community. By making the model’s weights freely available, Moonshot AI is fostering a vibrant and collaborative developer ecosystem, which can accelerate the pace of innovation and drive the adoption of its technology. This approach also allows for greater transparency and customization, which is a key requirement for many enterprise customers who are concerned about data privacy and security. The open-source strategy is a clear indication of Moonshot AI’s commitment to democratizing access to advanced AI and its belief in the power of community-driven development.

The open-weight and open-source strategy of Kimi K2 has a number of important implications for the AI market. It challenges the prevailing model of closed, proprietary systems and demonstrates that an open-source approach can be a viable and successful business strategy. It also puts pressure on other AI companies to adopt a more open and collaborative approach, which could lead to a more diverse and competitive market. The open-source nature of Kimi K2 is also likely to accelerate the development of new and innovative applications, as developers are free to experiment with and build upon the model without the constraints of a proprietary license. This could lead to a new wave of innovation in the AI space, as a broader range of developers and researchers are able to contribute to the development of new and exciting AI-powered tools and services.

5.1.1 Fostering a Developer Ecosystem

A key benefit of Moonshot AI’s open-weight strategy is its ability to foster a vibrant and active developer ecosystem. By making the Kimi K2 model freely available, the company is empowering a global community of developers, researchers, and enthusiasts to experiment with, build upon, and contribute to the model’s development. This collaborative approach can accelerate the pace of innovation, as a diverse range of perspectives and expertise can be brought to bear on the challenges of developing and improving AI models. The developer ecosystem can also play a crucial role in driving the adoption of Kimi K2, as developers create new and innovative applications that showcase the model’s capabilities and make it more accessible to a wider audience. This can create a virtuous cycle of innovation and adoption, as a larger and more active developer ecosystem leads to more and better applications, which in turn attracts more users and developers to the platform.

The developer ecosystem around Kimi K2 is already showing signs of significant growth and activity. The model has become one of the fastest-downloaded models on Hugging Face, a popular platform for sharing and collaborating on AI models. This indicates a high level of interest and enthusiasm from the developer community, which is a positive sign for the future of the model. The open-weight release also allows for greater transparency and collaboration, as developers can inspect the model’s weights and released code and share their own improvements and innovations with the community. This can lead to a more robust and reliable model, as a larger number of eyes can help to identify and fix bugs and other issues. The fostering of a strong developer ecosystem is a key part of Moonshot AI’s long-term strategy, and it is a major factor in the company’s potential for success in the competitive AI market.

5.1.2 Driving Research and Innovation in Agentic AI

The open-weight release of Kimi K2 is also a major catalyst for driving research and innovation in the field of agentic AI. By providing a state-of-the-art, open-weight model with strong agentic capabilities, Moonshot AI is enabling researchers and developers to explore new frontiers in AI and to develop new and innovative applications that were previously not possible. The open release allows for greater transparency and reproducibility in research, as researchers can access the model’s weights to verify and build upon existing work. This can accelerate the pace of scientific discovery and lead to a deeper understanding of the principles of agentic AI. The availability of a powerful and accessible model like Kimi K2 can also lower the barrier to entry for researchers who are interested in working on agentic AI, as they no longer need the massive computational resources and proprietary datasets required to train such a model from scratch in order to make meaningful contributions to the field.

The potential for Kimi K2 to drive research and innovation in agentic AI is significant. The model’s advanced capabilities in areas such as tool use, multi-step reasoning, and long-term memory provide a powerful platform for developing new and more sophisticated AI agents. Researchers can use the model as a starting point for their own work, building upon its existing capabilities to create new and innovative applications. The open-source nature of the model also encourages collaboration and knowledge sharing, as researchers can share their own improvements and innovations with the community. This can lead to a more rapid and efficient development of new agentic AI technologies, as a global community of researchers can work together to solve the challenges of creating truly autonomous and intelligent AI systems. The open-weight release of Kimi K2 is a major contribution to the field of AI, and it is likely to have a lasting impact on the future of agentic AI research and development.

5.2 Competitive and Disruptive Pricing Model

In addition to its open-source strategy, Moonshot AI has adopted a competitive and disruptive pricing model for its API services, designed to make advanced AI capabilities accessible to a wider range of users. The company’s API pricing is significantly lower than that of its Western counterparts, with costs as low as $0.15 per million tokens. This aggressive pricing is a key part of the company’s effort to challenge established players in the AI market and to attract a broad user base, from individual developers to large enterprises. The combination of high performance, open-source availability, and low-cost API access is a strong value proposition for many of these users.

The disruptive pricing model of Kimi K2 has a number of important implications for the AI market. It puts significant pressure on other AI companies to lower their prices, which could lead to a more competitive and affordable market for AI services. It also makes advanced AI capabilities more accessible to a wider range of users, including startups, small businesses, and individual developers who may have been priced out of the market for cutting-edge AI. This could lead to a new wave of innovation, as a broader range of users are able to experiment with and build upon advanced AI technologies. The disruptive pricing model of Kimi K2 is a clear indication of Moonshot AI’s commitment to democratizing access to AI and its ambition to become a leading player in the global AI market.

5.2.1 Low-Cost API Pricing to Attract Enterprise Adoption

A key component of Moonshot AI’s pricing strategy is its low-cost API pricing, which is designed to attract enterprise adoption. The company’s API pricing is estimated to be 30-40% lower than that of comparable models like GPT-5, making it an attractive option for businesses looking to integrate advanced AI capabilities into their products and services. For enterprises with high token volumes, a saving of this size translates directly into lower operating costs. Usage-based pricing at this level also makes it feasible to experiment with and deploy AI-powered solutions without a significant upfront investment, which can speed adoption of AI across the enterprise market.
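To make the 30-40% estimate concrete, the arithmetic can be sketched as follows. This is an illustrative calculation only: the reference price of $1.00 per million tokens and the monthly volume of 500 million tokens are hypothetical placeholders, not published figures for any provider, and the function names are invented for this example.

```python
def monthly_cost(tokens: int, price_per_million: float) -> float:
    """Return the monthly API spend in dollars for a given token volume."""
    return tokens / 1_000_000 * price_per_million


def discounted_price(reference_price: float, discount: float) -> float:
    """Apply a fractional discount (0.30 means 30% cheaper) to a reference price."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return reference_price * (1.0 - discount)


# Hypothetical inputs, chosen only to illustrate the calculation.
REFERENCE_PRICE = 1.00          # assumed competitor price, $ per million tokens
MONTHLY_TOKENS = 500_000_000    # assumed enterprise workload

if __name__ == "__main__":
    baseline = monthly_cost(MONTHLY_TOKENS, REFERENCE_PRICE)
    for discount in (0.30, 0.40):
        price = discounted_price(REFERENCE_PRICE, discount)
        cost = monthly_cost(MONTHLY_TOKENS, price)
        print(f"{discount:.0%} lower: ${cost:,.2f} per month "
              f"(saves ${baseline - cost:,.2f})")
```

The saving scales linearly with volume, so the same percentage matters far more to a high-traffic enterprise deployment than to an individual developer.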

The low-cost API pricing of Kimi K2 is a key part of Moonshot AI’s strategy to challenge established players in the AI market. By offering a high-performance, open-weight model at a lower price than its competitors, the company provides a compelling alternative for enterprises seeking a more cost-effective and flexible AI solution. This is particularly appealing to businesses concerned about data privacy and security: because the weights are openly available, they can deploy Kimi K2 on their own infrastructure and keep greater control over their data. The combination of low-cost API pricing, open-weight availability, and high performance is likely to be attractive to a wide range of enterprise customers.

5.2.2 Free Tier to Lower Barrier to Entry for Individual Users

In addition to its low-cost API pricing, Moonshot AI also offers a free tier for its Kimi AI platform, which is designed to lower the barrier to entry for individual users. The free tier provides users with unlimited access to the Kimi AI chatbot, allowing them to experience the power of the model without any cost. This is a key part of the company’s strategy to build a large and active user base, as it allows individuals to try out the model and see its value for themselves. The free tier also serves as a powerful marketing tool, as it can generate a great deal of buzz and excitement around the Kimi AI platform. This can help to attract more users and developers to the platform, which can in turn lead to a more vibrant and active community.

The free tier of Kimi AI is a major differentiator from many of its competitors, which often require users to pay a subscription fee to access their services. This makes Kimi AI a more accessible and attractive option for individual users, particularly those who are new to AI and are not yet ready to commit to a paid plan. The free tier also allows users to experiment with the model and to explore its capabilities without any financial risk. This can lead to a more engaged and active user base, as users are more likely to try out new features and to provide feedback to the company. The free tier is a key part of Moonshot AI’s strategy to democratize access to AI and to build a strong and loyal community of users.

5.3 Foundational Strengths of Moonshot AI

The success of Kimi AI is not just a result of its advanced technology; it is also a reflection of the foundational strengths of Moonshot AI as a company. The company is led by a team of experienced and visionary leaders who have a deep understanding of the AI landscape and a clear vision for the future of the company. The company has also been successful in attracting significant funding from major investors, which has provided it with the resources it needs to develop and deploy its advanced AI models. These foundational strengths are a key factor in the company’s ability to compete with established players in the AI market and to achieve its ambitious goals.

The foundational strengths of Moonshot AI are a testament to the company’s commitment to excellence and its long-term vision for the future of AI. The company’s leadership team has a proven track record of success in the AI industry, and they have a deep understanding of the challenges and opportunities that lie ahead. The company’s ability to attract significant funding is a clear indication of the confidence that investors have in the company’s vision and its ability to execute on its plans. These foundational strengths provide Moonshot AI with a solid platform for future growth and success, and they are a key factor in the company’s potential to become a leading player in the global AI market.

5.3.1 Leadership and Vision of Founder Yang Zhilin

A key foundational strength of Moonshot AI is the leadership and vision of its founder, Yang Zhilin. As a prominent AI researcher with a PhD from Carnegie Mellon University and experience at Google Brain and Facebook AI Research, Yang brings a deep understanding of the technical and strategic challenges of developing advanced AI models. His vision for Moonshot AI is to build foundational models that pave the way to Artificial General Intelligence (AGI) while democratizing access to powerful AI tools. This vision is reflected in the company’s commitment to open-source development and its focus on creating AI that can handle complex, real-world tasks. Yang’s leadership has been instrumental in guiding the company’s rapid growth and in establishing its reputation as a key innovator in the AI industry.

The leadership of Yang Zhilin is a key factor in the success of Moonshot AI. His deep technical expertise and his clear vision for the future of the company have been instrumental in attracting top talent and securing significant funding. His commitment to open-source development and his focus on creating AI that is both powerful and accessible have resonated with a wide range of users and developers. Yang’s leadership has also been crucial in navigating the complex and rapidly evolving AI landscape, as he has been able to anticipate market trends and to position the company for future success. The leadership and vision of Yang Zhilin are a major asset for Moonshot AI, and they are a key factor in the company’s potential to become a leading player in the global AI market.

5.3.2 Rapid Growth and Significant Funding

Another key foundational strength of Moonshot AI is its rapid growth and its ability to attract significant funding. The company has achieved a valuation of $3.3 billion after a recent funding round, with backing from major investors like Alibaba. This funding provides the company with the resources it needs to develop and deploy its advanced AI models and to compete with established players in the AI market. The company’s rapid growth, from a 20-person startup to a platform with over 100 million users in just over a year, is a clear indication of the market’s demand for its products and services. This growth has also helped to establish the company’s reputation as a key innovator in the AI industry and has attracted a great deal of attention from the media and the investment community.

The rapid growth and significant funding of Moonshot AI are a testament to the company’s strong execution and its ability to deliver on its promises. The company has been able to develop and release a series of highly capable AI models in a short period of time, which has helped to build a strong and loyal user base. The company’s ability to attract significant funding is a clear indication of the confidence that investors have in the company’s vision and its ability to execute on its plans. This rapid growth and significant funding provide Moonshot AI with a solid platform for future growth and success, and they are a key factor in the company’s potential to become a leading player in the global AI market.

6. Comparative Analysis with Other AI Search Tools

6.1 Kimi AI vs. Perplexity AI

Kimi AI and Perplexity AI are two of the leading AI-powered search and answer engines, but they have different architectural approaches, performance focuses, and market strategies. While both aim to provide users with direct, accurate, and well-sourced answers to their queries, they do so in different ways and with different strengths. A comparative analysis of the two platforms reveals a number of key differences that are important for users to consider when choosing the best tool for their specific needs. This analysis will delve into the architectural differences between the two platforms, their respective performance focuses, and their different market strategies.

The comparison between Kimi AI and Perplexity AI is not just a matter of technical specifications; it is also a reflection of different philosophies about the future of AI-powered search. Kimi AI’s focus on agentic AI and its open-source approach represent a more collaborative and community-driven vision for the future of AI. Perplexity AI’s proprietary, multi-model approach, on the other hand, represents a more centralized and controlled vision. The choice between the two platforms is not just a matter of which one is “better”; it is also a matter of which vision for the future of AI is more appealing to the user.

6.1.1 Architectural Differences: MoE vs. Multi-Model Approach

The most significant architectural difference between Kimi AI and Perplexity AI lies in their underlying models. Kimi AI is built on a single, powerful Mixture-of-Experts (MoE) model, Kimi K2, which is designed to handle a wide range of tasks with a high degree of efficiency and specialization. In an MoE model, a gating network activates only a small subset of expert sub-networks for each token, so Kimi AI can draw on the knowledge of a massive model while keeping the computational cost per token reasonable. Perplexity AI, on the other hand, employs a multi-model approach, using a variety of models from different providers, including OpenAI, Anthropic, and Meta, to generate its answers. This approach lets Perplexity AI choose the best model for a particular task, but it can also be more complex and less efficient than a single-model approach.
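The contrast between the two architectures can be sketched in a few lines. The following is a toy illustration under stated assumptions, not Kimi K2’s or Perplexity AI’s actual implementation: the experts are plain Python functions, the gate is a dot product followed by a softmax, and the backend names in the router are hypothetical. It shows top-k expert routing inside one model next to request-level routing across whole models.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Callable[[Sequence[float]], float]],
    k: int = 2,
) -> Tuple[float, List[int]]:
    """Route x to the top-k experts and mix their outputs by gate probability."""
    # One gate score per expert: the dot product of the input and that expert's gate vector.
    scores = [sum(xi * wi for xi, wi in zip(x, w)) for w in gate_weights]
    probs = softmax(scores)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts only
    output = sum(probs[i] / norm * experts[i](x) for i in top)
    return output, top


def route_to_model(task_type: str) -> str:
    """Multi-model routing: pick one whole backend per request (names are hypothetical)."""
    backends = {"code": "backend-a", "search": "backend-b"}
    return backends.get(task_type, "backend-default")


if __name__ == "__main__":
    toy_experts = [
        lambda v: sum(v),
        lambda v: 2 * sum(v),
        lambda v: -sum(v),
        lambda v: 0.0,
    ]
    toy_gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    out, chosen = moe_forward([1.0, 2.0], toy_gate, toy_experts, k=2)
    print("experts used:", chosen, "output:", round(out, 3))
    print("multi-model choice:", route_to_model("search"))
```

In the MoE case only k of the experts run for each input, which is where the efficiency comes from; in the multi-model case the routing decision happens once per request and the chosen model does all of the work.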

The architectural differences between Kimi AI and Perplexity AI have a number of important implications for their performance and capabilities. Kimi AI’s single-model approach allows for a more consistent and integrated user experience, as the model is able to maintain a coherent understanding of the conversation and the user’s intent. Perplexity AI’s multi-model approach, on the other hand, can lead to a more fragmented and less consistent user experience, as the different models may have different strengths and weaknesses. The choice between the two architectural approaches is a trade-off between consistency and flexibility, and the best choice for a particular user will depend on their specific needs and preferences.

6.1.2 Performance Focus: Agentic Reasoning vs. Real-Time Retrieval

Another key difference between Kimi AI and Perplexity AI is their respective performance focuses. Kimi AI’s primary focus is on agentic reasoning, which is the ability to perform complex, multi-step tasks by integrating with external tools and information sources. This is reflected in the model’s strong performance on benchmarks such as SWE-Bench and Tau2-Bench, which evaluate a model’s ability to use tools and interact with software environments. Perplexity AI, on the other hand, is primarily focused on real-time retrieval, which is the ability to provide users with up-to-date and accurate information from the web. This is reflected in the platform’s strong integration with search engines and its ability to provide users with a list of relevant sources for its answers.
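The agentic pattern described here, in which a model repeatedly chooses a tool, observes the result, and decides the next step, can be sketched as a simple loop. This is a minimal sketch under stated assumptions and not Moonshot AI’s API: the scripted policy stands in for the model, and the tool names, the `example-model` identifier, and the price table are all hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

History = List[Tuple[str, Any]]


def lookup_price(model_name: str) -> float:
    """Hypothetical tool: return a price in dollars per million tokens."""
    prices = {"example-model": 0.15}  # illustrative table, not live data
    return prices[model_name]


def multiply(a: float, b: float) -> float:
    """Hypothetical tool: multiply two numbers."""
    return a * b


def scripted_policy(history: History) -> Tuple[str, Any]:
    """Stand-in for the model: choose the next action from the history so far."""
    if len(history) == 1:                      # only the task has been seen
        return "lookup_price", ("example-model",)
    if len(history) == 2:                      # price is known, compute the total
        return "multiply", (history[-1][1], 10)
    return "final", f"Estimated cost: ${history[-1][1]:.2f}"


def run_agent(
    policy: Callable[[History], Tuple[str, Any]],
    tools: Dict[str, Callable[..., Any]],
    task: str,
    max_steps: int = 5,
) -> Tuple[str, History]:
    """Loop: ask the policy for an action, run the tool, record the observation."""
    history: History = [("task", task)]
    for _ in range(max_steps):
        action, payload = policy(history)
        if action == "final":
            return payload, history
        history.append((action, tools[action](*payload)))
    raise RuntimeError("step budget exhausted before a final answer")


if __name__ == "__main__":
    tools = {"lookup_price": lookup_price, "multiply": multiply}
    answer, trace = run_agent(scripted_policy, tools, "Cost of 10 million tokens?")
    print(answer)
    print(trace)
```

A retrieval-focused system would typically stop after a single search-and-summarize step; the loop with a step budget is what distinguishes the multi-step, tool-using behavior that benchmarks such as SWE-Bench and Tau2-Bench are described as measuring.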

The different performance focuses of Kimi AI and Perplexity AI reflect their different goals and target audiences. Kimi AI is designed to be a versatile AI assistant that makes users more productive by automating a wide range of tasks. Perplexity AI, on the other hand, is designed to work more like a search engine, giving users direct, sourced answers to their questions. Users who want an assistant that can automate complex, multi-step tasks will likely prefer Kimi AI, while users who mainly want quick, well-sourced answers from the web will likely prefer Perplexity AI.

6.1.3 Market Strategy: Open-Source vs. Proprietary Model

The final key difference between Kimi AI and Perplexity AI is their market strategy. Kimi AI has adopted an open-source strategy, releasing Kimi K2 as an open-weight model under a permissive license. This approach is designed to foster a vibrant and collaborative developer ecosystem and to accelerate the pace of innovation in the AI community. Perplexity AI, on the other hand, has adopted a proprietary model, keeping its technology and models closed-source. This approach allows the company to maintain greater control over its technology and to generate revenue through a subscription-based model.