Building an Intelligent Traffic Analysis System: YOLOv8/YOLO11 and Multi-Object Tracking in Practice


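1. Introduction: Why Intelligent Transportation Is Needed

As urbanization accelerates, traffic management faces mounting pressure. Manual monitoring can no longer keep up with the demands of modern traffic management, which is why intelligent transportation systems have emerged. Computer-vision-based traffic scene analysis automatically detects and tracks vehicles, pedestrians, and other targets, supporting applications such as traffic flow counting, violation detection, and accident early warning.

This article describes how to build a complete object detection and tracking system for traffic scenes. It combines YOLO-family detectors with trackers such as DeepSORT and FairMOT, and presents a system architecture with separate frontend and backend.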

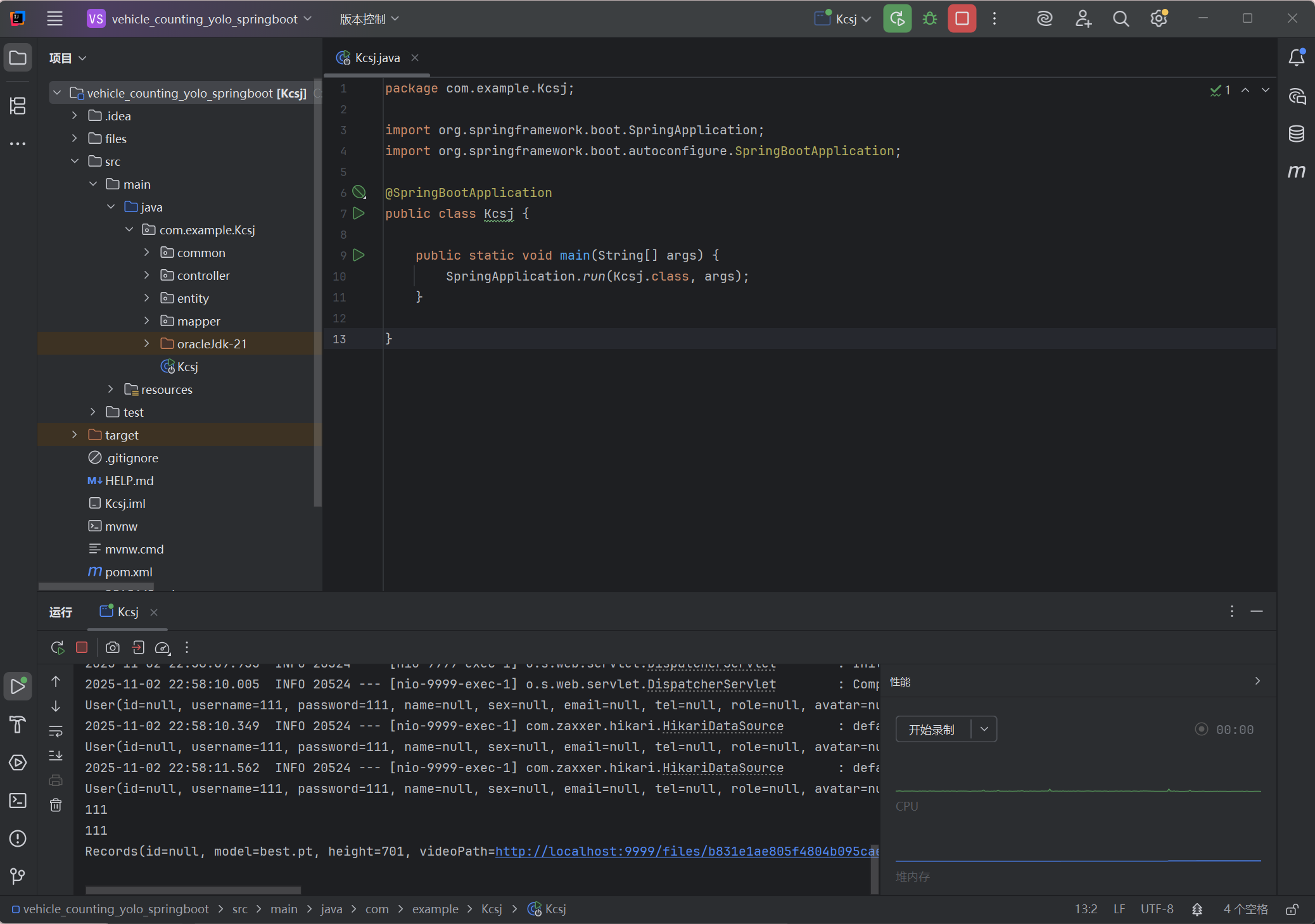

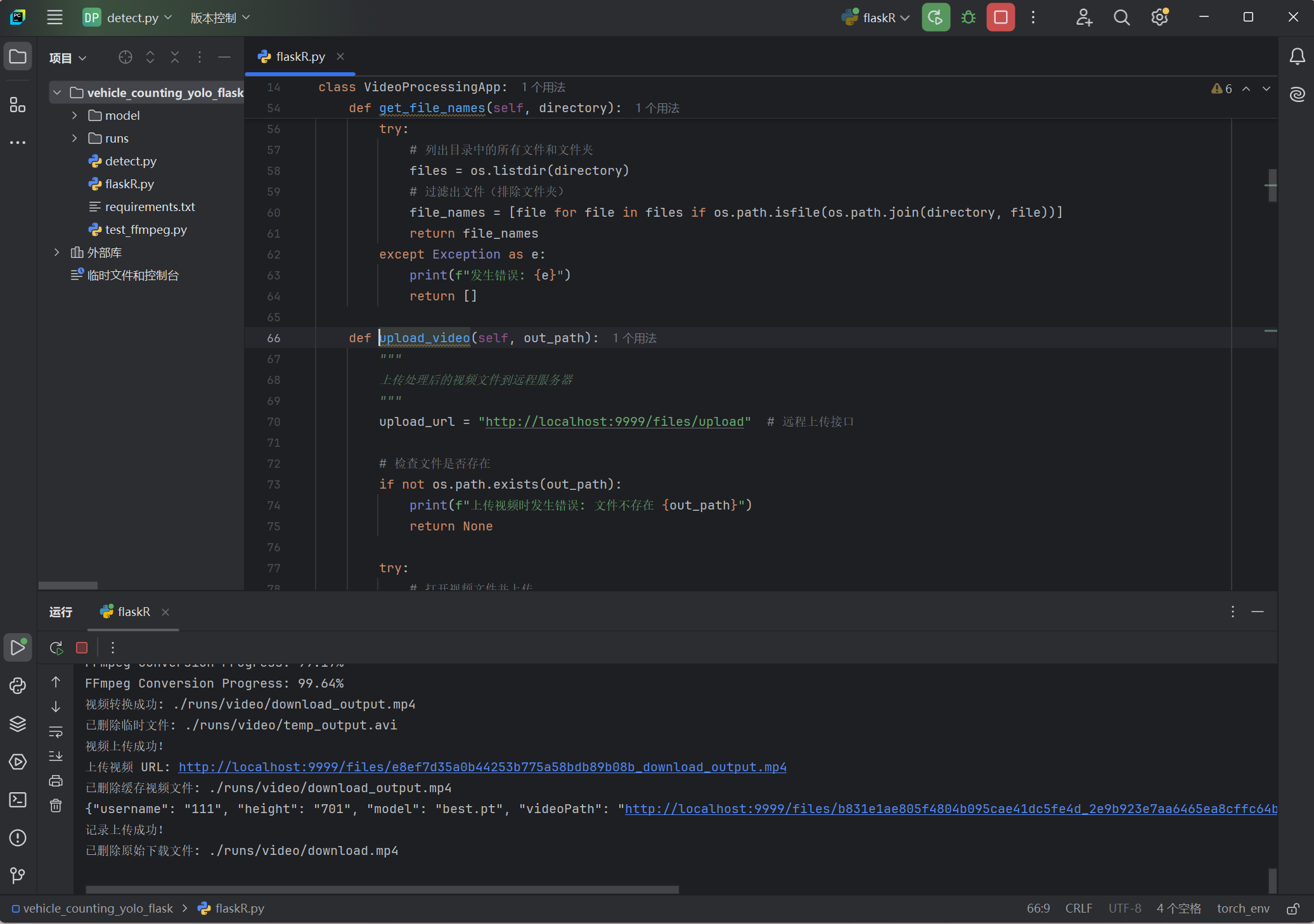

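2. System Architecture Design

The intelligent traffic analysis system uses a typical architecture with separate frontend and backend layers, plus a dedicated inference layer: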

```text
┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
│ Frontend layer  │    │ Backend service  │    │ Inference layer │
│                 │    │                  │    │                 │
│ TypeScript/     │───▶│ Java Spring Boot │───▶│ Python Flask    │
│ JavaScript      │    │ REST API         │    │ YOLO +          │
│ React/Vue       │    │ Business logic   │    │ tracking        │
│                 │    │                  │    │                 │
└─────────────────┘    └──────────────────┘    └─────────────────┘
```

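2.1 Rationale for the Technology Stack

- Frontend: TypeScript provides type safety, and React and Vue both have rich ecosystems.
- Backend: Java Spring Boot is stable and reliable, and well suited to enterprise applications.
- Algorithms: Python has a mature AI ecosystem, which makes integrating the algorithms straightforward.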

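3. Core Algorithms in Depth

3.1 YOLOv8/YOLO11 Object Detection

The YOLO (You Only Look Once) family is known for its strong balance between speed and accuracy.

Key improvements in YOLOv8:

- An anchor-free design: boxes are predicted directly relative to each feature-map location rather than as offsets from predefined anchor boxes
- A more efficient feature pyramid network
- A more precise bounding-box regression mechanism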
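To make the anchor-free idea concrete, here is a minimal sketch of how the output of such a head can be decoded. It assumes the common formulation in which each feature-map cell predicts four distances (left, top, right, bottom) from its center to the box edges, measured in units of the stride. The function name `decode_anchor_free` and the example numbers are illustrative and are not taken from the YOLOv8 source.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def decode_anchor_free(
    cell_xy: Tuple[int, int],
    distances: Tuple[float, float, float, float],
    stride: int,
) -> Box:
    """Turn per-cell (left, top, right, bottom) distances into a pixel box.

    cell_xy   -- (column, row) index of the cell on the feature map
    distances -- predicted distances to the box edges, in stride units
    stride    -- downsampling factor of this feature map (e.g. 8, 16, 32)
    """
    col, row = cell_xy
    # The reference point is the cell center, not a predefined anchor box.
    cx, cy = col + 0.5, row + 0.5
    left, top, right, bottom = distances
    x1 = (cx - left) * stride
    y1 = (cy - top) * stride
    x2 = (cx + right) * stride
    y2 = (cy + bottom) * stride
    return (x1, y1, x2, y2)


box = decode_anchor_free(cell_xy=(10, 6), distances=(2.0, 1.5, 3.0, 2.5), stride=8)
print(box)
```

Because the box is expressed relative to a point instead of a set of anchor shapes, there are no anchor sizes to tune for a particular dataset.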

```python
# YOLOv8 inference example
from ultralytics import YOLO
import cv2


class TrafficDetector:
    def __init__(self, model_path='yolov8n.pt'):
        self.model = YOLO(model_path)
        # Traffic-related COCO class IDs:
        # 0 person, 2 car, 3 motorcycle, 5 bus, 7 truck (ID 1 is bicycle)
        self.traffic_classes = [0, 2, 3, 5, 7]

    def detect_traffic_objects(self, image):
        results = self.model(image, classes=self.traffic_classes)
        detections = []
        for result in results:
            for box in result.boxes:
                class_id = int(box.cls[0])
                detections.append({
                    'bbox': box.xyxy[0].tolist(),  # [x1, y1, x2, y2]
                    'confidence': float(box.conf[0]),
                    'class_id': class_id,
                    'class_name': self.model.names[class_id],
                })
        return detections


# Usage example
detector = TrafficDetector()
image = cv2.imread('traffic_scene.jpg')
detections = detector.detect_traffic_objects(image)
```

3.2 Multi-Object Tracking Algorithms Compared

DeepSORT: the peak of the classical approach

DeepSORT extends SORT with deep appearance features, which substantially reduces identity (ID) switches.
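The role of the appearance features can be illustrated with a small association sketch. It is a simplification under stated assumptions, not DeepSORT itself: the real algorithm uses a Kalman filter with Mahalanobis gating and a matching cascade solved by the Hungarian algorithm, whereas this sketch uses a plain IoU gate and greedy matching. The function names and the thresholds `max_cos_dist` and `min_iou` are illustrative.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """1 - cosine similarity; small values mean similar appearance."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return 1.0 - dot / norm if norm > 0 else 1.0


def associate(tracks, detections, max_cos_dist=0.3, min_iou=0.1):
    """Greedily match detections to tracks by appearance, gated by IoU.

    tracks     -- {track_id: (box, embedding)}
    detections -- [(box, embedding), ...]
    Returns {detection_index: track_id}.
    """
    candidates = []
    for track_id, (t_box, t_emb) in tracks.items():
        for det_idx, (d_box, d_emb) in enumerate(detections):
            if iou(t_box, d_box) < min_iou:
                continue  # spatially implausible pair
            dist = cosine_distance(t_emb, d_emb)
            if dist <= max_cos_dist:
                candidates.append((dist, det_idx, track_id))

    matches, used_tracks = {}, set()
    for dist, det_idx, track_id in sorted(candidates):
        if det_idx in matches or track_id in used_tracks:
            continue
        matches[det_idx] = track_id
        used_tracks.add(track_id)
    return matches


# Two overlapping vehicles. IoU alone would pair detection 0 with track 1,
# but its appearance embedding matches track 2.
tracks = {
    1: ((100, 100, 200, 200), [1.0, 0.0]),
    2: ((120, 100, 220, 200), [0.0, 1.0]),
}
detections = [
    ((104, 100, 204, 200), [0.1, 0.9]),
    ((116, 100, 216, 200), [0.9, 0.1]),
]
matches = associate(tracks, detections)
print(matches)
```

In this toy case the appearance term keeps the two identities apart even though the boxes overlap heavily, which is the situation where a purely IoU-based tracker tends to swap IDs.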

```python
import numpy as np
from deep_sort import DeepSort
from deep_sort.utils.parser import get_config


class DeepSortTracker:
    def __init__(self, config_path="deep_sort.yaml"):
        cfg = get_config()
        cfg.merge_from_file(config_path)
        self.tracker = DeepSort(
            cfg.DEEPSORT.REID_CKPT,
            max_dist=cfg.DEEPSORT.MAX_DIST,
            min_confidence=cfg.DEEPSORT.MIN_CONFIDENCE,
            nms_max_overlap=cfg.DEEPSORT.NMS_MAX_OVERLAP,
            max_iou_distance=cfg.DEEPSORT.MAX_IOU_DISTANCE,
            max_age=cfg.DEEPSORT.MAX_AGE,
            n_init=cfg.DEEPSORT.N_INIT,
            nn_budget=cfg.DEEPSORT.NN_BUDGET,
            use_cuda=True,
        )

    def update(self, detections, image):
        if not detections:
            return []

        xyxy = np.array([det['bbox'] for det in detections], dtype=float)
        confidences = np.array([det['confidence'] for det in detections])
        class_ids = np.array([det['class_id'] for det in detections])

        # Convert [x1, y1, x2, y2] to center-based [cx, cy, w, h].
        # Assumption: this matches deep_sort_pytorch-style trackers; check the
        # box format and update() signature of the DeepSort build you use.
        wh = xyxy[:, 2:] - xyxy[:, :2]
        bboxes = np.hstack([xyxy[:, :2] + wh / 2, wh])

        return self.tracker.update(bboxes, confidences, class_ids, image)
```

FairMOT: end-to-end multi-object tracking

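FairMOT performs detection and re-identification (re-ID) jointly in a single network, striking a better balance between accuracy and speed.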
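As a rough illustration of what "joint" means here: a shared backbone feeds both a detection branch and a re-ID branch, and the identity embedding of each object is read from the re-ID feature map at the object's center. The sketch below shows only that lookup step on toy data. The function name `embeddings_at_centers`, the stride of 4, and the tiny feature map are assumptions made for illustration.

```python
from typing import List, Tuple

FeatureMap = List[List[List[float]]]  # indexed as [row][col][channel]


def embeddings_at_centers(
    reid_map: FeatureMap,
    boxes: List[Tuple[float, float, float, float]],
    stride: int = 4,
) -> List[List[float]]:
    """Read one re-ID embedding per detection at the box center.

    reid_map -- re-ID branch output, indexed as [row][col][channel]
    boxes    -- detections as (x1, y1, x2, y2) in input-image pixels
    stride   -- ratio between input resolution and feature-map resolution
    """
    rows, cols = len(reid_map), len(reid_map[0])
    embeddings = []
    for x1, y1, x2, y2 in boxes:
        # Map the box center from image pixels to feature-map coordinates.
        col = int((x1 + x2) / 2 / stride)
        row = int((y1 + y2) / 2 / stride)
        # Clamp so boxes touching the image border stay inside the map.
        col = min(max(col, 0), cols - 1)
        row = min(max(row, 0), rows - 1)
        embeddings.append(reid_map[row][col])
    return embeddings


# Toy 2x3 feature map with 2-dimensional embeddings.
reid_map = [
    [[0.0, 0.1], [0.2, 0.3], [0.4, 0.5]],
    [[0.6, 0.7], [0.8, 0.9], [1.0, 1.1]],
]
boxes = [(0, 0, 6, 6), (8, 4, 12, 8)]
result = embeddings_at_centers(reid_map, boxes)
print(result)
```

Since the embeddings come from the same forward pass as the detections, no separate re-ID network has to be run on cropped boxes, which is where the speed advantage over a two-stage pipeline comes from.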

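```python
# Structural sketch: the `fairmot` module and the preprocess, postprocess and
# matching helpers are placeholders for your FairMOT code base (not shown here).
import torch
from fairmot import FairMOT


class FairMOTTracker:
    def __init__(self, opt, model_path='fairmot_dla34.pth'):
        # opt: the options/config object expected by the model constructor
        self.model = FairMOT(opt)
        self.model.load_state_dict(torch.load(model_path, map_location='cpu'))
        self.model.eval()
        self.model.cuda()

    def track(self, image):
        with torch.no_grad():
            # Preprocessing
            input_tensor = self.preprocess(image)
            # Inference
            output = self.model(input_tensor)
            # Post-processing
            detections, embeddings = self.postprocess(output)
            # Tracking
            tracks = self.matching(detections, embeddings)
        return tracks
```

4. System Implementation Details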

4.1 Backend Java Service Implementation

```java
// Detection result DTOs (@Data is the Lombok annotation)
@Data
public class DetectionResult {
    private List<Detection> detections;
    private String imageId;
    private Long processingTime;
}

@Data
public class Detection {
    private Double[] bbox;       // [x1, y1, x2, y2]
    private Double confidence;
    private Integer classId;
    private String className;
    private Integer trackId;     // tracking ID
}

// Spring Boot controller.
// AnalysisResult, PythonIntegrationService, saveUploadedFile and
// setupRealtimeProcessing are not shown here.
@RestController
@RequestMapping("/api/traffic")
@CrossOrigin(origins = "*") // permissive; restrict the allowed origins in production
public class TrafficAnalysisController {

    @Autowired
    private PythonIntegrationService pythonService;

    @PostMapping("/analyze")
    public ResponseEntity<AnalysisResult> analyzeTraffic(
            @RequestParam("video") MultipartFile videoFile) {
        try {
            // Save the uploaded file
            String filePath = saveUploadedFile(videoFile);
            // Call the Python algorithm service
            AnalysisResult result = pythonService.analyzeVideo(filePath);
            return ResponseEntity.ok(result);
        } catch (Exception e) {
            return ResponseEntity.status(500).build();
        }
    }

    @GetMapping("/realtime")
    public SseEmitter setupRealtimeAnalysis() {
        SseEmitter emitter = new SseEmitter();
        // Set up real-time video stream processing
        setupRealtimeProcessing(emitter);
        return emitter;
    }
}
```

4.2 Python Algorithm Service

```python
import base64
import time
import uuid

import cv2
import numpy as np
from flask import Flask, jsonify, request
from werkzeug.utils import secure_filename

# TrafficDetector and DeepSortTracker are the classes from section 3;
# TrafficStatistics and TrafficAnalyzer.analyze_video are not shown here.


class TrafficAnalyzer:
    def __init__(self):
        self.detector = TrafficDetector()
        self.tracker = DeepSortTracker()
        self.traffic_stats = TrafficStatistics()

    def analyze_single_frame(self, image):
        # Object detection
        detections = self.detector.detect_traffic_objects(image)
        # Multi-object tracking
        tracks = self.tracker.update(detections, image)
        # Traffic statistics
        stats = self.traffic_stats.update(tracks)
        return {
            'detections': detections,
            # NumPy arrays are not JSON-serializable, so convert to lists
            'tracks': np.asarray(tracks).tolist(),
            'statistics': stats,
            'frame_info': {
                'timestamp': time.time(),
                'object_count': len(tracks),
            },
        }


app = Flask(__name__)
analyzer = TrafficAnalyzer()


@app.route('/analyze/frame', methods=['POST'])
def analyze_frame():
    data = request.get_json()
    # Accept raw base64 or a data URL ("data:image/jpeg;base64,...")
    image_data = data['image'].split(',', 1)[-1]
    # Decode the base64 image
    image_bytes = base64.b64decode(image_data)
    nparr = np.frombuffer(image_bytes, np.uint8)
    image = cv2.imdecode(nparr, cv2.IMREAD_COLOR)
    if image is None:
        return jsonify({'status': 'error', 'message': 'invalid image'}), 400
    # Run the analysis
    result = analyzer.analyze_single_frame(image)
    return jsonify(result)


@app.route('/analyze/video', methods=['POST'])
def analyze_video():
    video_file = request.files['video']
    # secure_filename prevents path traversal through the uploaded name
    video_path = f"/tmp/{secure_filename(video_file.filename)}"
    video_file.save(video_path)
    # Video analysis
    results = analyzer.analyze_video(video_path)
    return jsonify({
        'status': 'success',
        'analysis_id': uuid.uuid4().hex,
        'results': results,
    })
```

4.3 Frontend TypeScript Implementation

```tsx
import React, { useEffect, useRef, useState } from 'react';
import { analyzeFrame, uploadVideo } from '../services/trafficApi';

// Type definitions.
// TrafficStatistics, FrameInfo, StatisticsDisplay, ObjectList and drawTracks
// are not shown here.
interface Detection {
  bbox: [number, number, number, number];
  confidence: number;
  classId: number;
  className: string;
  trackId?: number;
}

interface AnalysisResult {
  detections: Detection[];
  tracks: any[];
  statistics: TrafficStatistics;
  frameInfo: FrameInfo;
}

// Video analysis component
const TrafficAnalysis: React.FC = () => {
  const videoRef = useRef<HTMLVideoElement>(null);
  const canvasRef = useRef<HTMLCanvasElement>(null);
  const [isAnalyzing, setIsAnalyzing] = useState(false);
  const [results, setResults] = useState<AnalysisResult[]>([]);

  const handleVideoUpload = async (file: File) => {
    try {
      const response = await uploadVideo(file);
      setResults(response.data.results);
    } catch (error) {
      console.error('Video analysis failed:', error);
    }
  };

  const processFrame = async () => {
    if (!videoRef.current || !canvasRef.current) return;
    const video = videoRef.current;
    const canvas = canvasRef.current;
    const context = canvas.getContext('2d');
    if (!context) return;

    // Draw the current frame
    context.drawImage(video, 0, 0, canvas.width, canvas.height);
    // Get the image data as a base64 data URL
    const imageData = canvas.toDataURL('image/jpeg');

    try {
      const result = await analyzeFrame(imageData);
      setResults(prev => [...prev, result]);
      // Draw the detection results on the canvas
      drawDetections(context, result.detections);
      drawTracks(context, result.tracks);
    } catch (error) {
      console.error('Frame analysis failed:', error);
    }
  };

  // Sample frames while real-time analysis is on (200 ms is an arbitrary interval)
  useEffect(() => {
    if (!isAnalyzing) return;
    const timer = window.setInterval(processFrame, 200);
    return () => window.clearInterval(timer);
  }, [isAnalyzing]);

  const drawDetections = (context: CanvasRenderingContext2D, detections: Detection[]) => {
    detections.forEach(det => {
      const [x1, y1, x2, y2] = det.bbox;
      context.strokeStyle = '#FF0000';
      context.lineWidth = 2;
      context.strokeRect(x1, y1, x2 - x1, y2 - y1);
      // Draw the label
      context.fillStyle = '#FF0000';
      context.fillText(
        `${det.className} ${(det.confidence * 100).toFixed(1)}%`,
        x1, y1 - 5
      );
    });
  };

  return (
    <div className="traffic-analysis">
      <div className="video-section">
        <video ref={videoRef} width="800" height="450" controls />
        <canvas ref={canvasRef} width="800" height="450" />
      </div>
      <div className="controls">
        <input
          type="file"
          accept="video/*"
          onChange={(e) => e.target.files && handleVideoUpload(e.target.files[0])}
        />
        <button onClick={() => setIsAnalyzing(!isAnalyzing)}>
          {isAnalyzing ? 'Stop analysis' : 'Start real-time analysis'}
        </button>
      </div>
      <div className="results">
        <h3>Analysis results</h3>
        <StatisticsDisplay stats={results[results.length - 1]?.statistics} />
        <ObjectList detections={results.flatMap(r => r.detections)} />
      </div>
    </div>
  );
};

export default TrafficAnalysis;
```

5. Performance Optimization and Practical Tips

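5.1 Model Optimization Strategies

1. Model quantization

```python
# PyTorch dynamic quantization
import torch
from ultralytics import YOLO

model = YOLO('yolov8n.pt')
# Dynamic quantization only converts the listed layer types (here nn.Linear).
# YOLOv8 is almost entirely convolutional, so expect limited gains from this step.
model.model = torch.quantization.quantize_dynamic(
    model.model, {torch.nn.Linear}, dtype=torch.qint8
)
```

2. TensorRT acceleration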

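```python
# Convert to a TensorRT engine via the Ultralytics exporter (used here instead
# of torch2trt, since calling the YOLO wrapper returns Results objects rather
# than raw tensors).
from ultralytics import YOLO

model = YOLO('yolov8n.pt')

# Requires an NVIDIA GPU with TensorRT installed; half=True enables FP16
engine_path = model.export(format='engine', imgsz=640, half=True)

# Load the exported engine for inference
model_trt = YOLO(engine_path)
```

5.2 System-Level Optimization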

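1. Asynchronous processing architecture

```java
// Requires @EnableAsync on a configuration class and an Executor bean named "taskExecutor"
@Service
public class AsyncAnalysisService {

    @Autowired
    private PythonIntegrationService pythonService;

    @Async("taskExecutor")
    public CompletableFuture<AnalysisResult> processVideoAsync(String videoPath) {
        // Runs the video analysis on the executor's thread pool
        AnalysisResult result = pythonService.analyzeVideo(videoPath);
        return CompletableFuture.completedFuture(result);
    }
}
```

2. Result caching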

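```java
// Requires @EnableCaching on a configuration class
@Service
public class AnalysisCacheService {

    @Autowired
    private PythonIntegrationService pythonService;

    // On a cache hit Spring returns the stored value without running the body;
    // on a miss the body runs and its result is cached under videoHash.
    @Cacheable(value = "analysisResults", key = "#videoHash")
    public AnalysisResult getCachedResult(String videoHash, String videoPath) {
        return pythonService.analyzeVideo(videoPath);
    }
}
```

6. Practical Application Scenarios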

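6.1 Traffic Flow Statistics

The system counts vehicles on the road in real time, breaks them down by type, and generates traffic flow heat maps.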
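One common way to turn tracks into flow counts is a virtual counting line: a vehicle is counted once when its tracked center crosses the line. The sketch below assumes a horizontal line at `line_y` and tracks given as `(track_id, class_name, cx, cy)` tuples per frame. The class `LineCounter` and this input format are illustrative and are not part of the system described above.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple

Track = Tuple[int, str, float, float]  # (track_id, class_name, cx, cy)


class LineCounter:
    """Counts each track once when its center crosses a horizontal line."""

    def __init__(self, line_y: float) -> None:
        self.line_y = line_y
        self.last_y: Dict[int, float] = {}  # last seen center y per track
        self.counted: Set[int] = set()      # track IDs already counted
        self.counts: Counter = Counter()    # crossings per class name

    def update(self, tracks: Iterable[Track]) -> None:
        for track_id, class_name, _cx, cy in tracks:
            prev_y = self.last_y.get(track_id)
            self.last_y[track_id] = cy
            if prev_y is None or track_id in self.counted:
                continue
            # A crossing happens when the line lies between two consecutive positions.
            crossed = (prev_y < self.line_y <= cy) or (cy <= self.line_y < prev_y)
            if crossed:
                self.counted.add(track_id)
                self.counts[class_name] += 1


counter = LineCounter(line_y=300)
frames = [
    [(1, 'car', 100, 280), (2, 'truck', 400, 350)],
    [(1, 'car', 102, 305), (2, 'truck', 398, 320)],  # car crosses downward
    [(1, 'car', 104, 330), (2, 'truck', 396, 295)],  # truck crosses upward
]
for frame_tracks in frames:
    counter.update(frame_tracks)
print(dict(counter.counts))
```

Counting by track ID rather than by per-frame detections is what keeps a vehicle that stays in view for many frames from being counted more than once.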

6.2 Violation Detection

- Red-light running detection
- Wrong-way driving detection
- Illegal parking detection
- Speeding estimation
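Two of these checks can be derived directly from a track's recent positions. The sketch below assumes track centers in pixels, a known lane direction vector, a constant frame rate, and a single `meters_per_pixel` scale factor. A real deployment would need camera calibration (for example a homography to the road plane), and all names and thresholds here are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def is_wrong_way(positions: List[Point], lane_direction: Point,
                 min_travel_px: float = 20.0) -> bool:
    """Flag a track whose net displacement opposes the lane direction."""
    if len(positions) < 2:
        return False
    dx = positions[-1][0] - positions[0][0]
    dy = positions[-1][1] - positions[0][1]
    if math.hypot(dx, dy) < min_travel_px:
        return False  # too little movement to judge (e.g. a stopped vehicle)
    # A negative dot product means motion against the allowed direction.
    return dx * lane_direction[0] + dy * lane_direction[1] < 0


def estimate_speed_kmh(positions: List[Point], fps: float,
                       meters_per_pixel: float) -> float:
    """Average speed over the track window, from summed per-frame displacement."""
    if len(positions) < 2:
        return 0.0
    distance_px = sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(positions, positions[1:])
    )
    seconds = (len(positions) - 1) / fps
    return distance_px * meters_per_pixel / seconds * 3.6


# A vehicle moving up the image (decreasing y) in a lane that flows downward.
track = [(100.0, 400.0), (100.0, 380.0), (100.0, 360.0), (100.0, 340.0)]
wrong_way = is_wrong_way(track, lane_direction=(0.0, 1.0))
speed = estimate_speed_kmh(track, fps=25.0, meters_per_pixel=0.05)
print(wrong_way, round(speed, 1))
```

The same position history can feed an illegal-parking check (a track that stays within a small radius inside a no-parking zone for longer than a time limit), so it is worth storing once per track.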

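6.3 Accident Early Warning

By analyzing anomalies in vehicle trajectories, such as sudden stops, the system can warn of potential accidents early.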
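A sudden stop can be approximated as a large drop in a track's speed within a short time window. The sketch below assumes per-frame speeds in km/h are already available for each track (for instance from calibrated positions). The function name and the three thresholds are illustrative and would need tuning on real footage.

```python
from typing import List, Optional


def find_sudden_stop(speeds_kmh: List[float], fps: float,
                     window_s: float = 1.0,
                     min_drop_kmh: float = 30.0,
                     stop_speed_kmh: float = 5.0) -> Optional[int]:
    """Return the first frame index at which a sudden stop is detected.

    A frame qualifies when the speed has fallen by at least min_drop_kmh
    compared with window_s seconds earlier and is now at or below
    stop_speed_kmh. Returns None if no frame qualifies.
    """
    window = max(1, round(window_s * fps))
    for i in range(window, len(speeds_kmh)):
        drop = speeds_kmh[i - window] - speeds_kmh[i]
        if drop >= min_drop_kmh and speeds_kmh[i] <= stop_speed_kmh:
            return i
    return None


# Sampled at 5 fps: hard braking versus a gradual, controlled stop.
hard_braking = [50, 50, 50, 48, 40, 30, 20, 10, 3, 0, 0]
gradual_stop = [50, 45, 40, 35, 30, 25, 20, 15, 10, 5, 0]
hard_index = find_sudden_stop(hard_braking, fps=5)
gradual_index = find_sudden_stop(gradual_stop, fps=5)
print(hard_index, gradual_index)
```

Comparing against a window rather than the previous frame makes the check less sensitive to frame-to-frame noise in the speed estimate.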

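7. Summary and Outlook

This article walked through a complete implementation of an intelligent traffic scene analysis system based on YOLOv8/YOLO11 and DeepSORT/FairMOT. The system illustrates the strong potential of modern computer vision in traffic management.

Directions for future improvement:

- Multimodal fusion: combine data from multiple sensors such as radar and LiDAR
- 3D detection: introduce 3D object detection to improve spatial awareness
- Prediction models: integrate trajectory prediction algorithms for smarter early warning
- Edge computing: optimize the models for deployment on edge devices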
