[LLM Agent Speedrun Series] Reading the Fudan–miHoYo Survey, Part 2: The Original Introduction

🧩 Section 1: Introduction

“If they find a parrot who could answer to everything, I would claim it to be an intelligent being without hesitation.”

— Denis Diderot, 1875

(Note: Diderot lived from 1713 to 1784, so the 1875 in the attribution is presumably the date of a later edition of his works, not of the remark itself.)

🧠 Keywords: philosophical origins | definition of intelligence | the language-response hypothesis of intelligence

🔹 1. From Philosophy to AI

Artificial Intelligence (AI) is a field dedicated to designing and developing systems that can replicate human-like intelligence and abilities [1]. As early as the 18th century, philosopher Denis Diderot introduced the idea that if a parrot could respond to every question, it could be considered intelligent [2]. While Diderot was referring to living beings, his notion highlights the profound concept that a highly intelligent organism could resemble human intelligence. In the 1950s, Alan Turing expanded this notion to artificial entities and proposed the renowned Turing Test [3].

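In other words, Artificial Intelligence (AI) is the field devoted to designing and building systems that reproduce human intelligence and abilities [1].

Diderot's 18th-century parrot remark [2] was about living creatures, but it carries a deeper claim: intelligence shows itself in the ability to respond to, and understand, language.

In the 1950s, Alan Turing carried the idea over to artificial entities with the well-known Turing Test [3].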

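🔍 Logic note:

Diderot → language ability as a sign of intelligence; Turing → whether a machine can demonstrate intelligence through its behavior.

📎 MoA4NAD takeaway: agent testing can include a "language-behavior mapping" verification mechanism.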

🔹 2. The Agent as a Core Concept

These AI entities are often termed “agents”, forming the essential building blocks of AI systems. Typically in AI, an agent refers to an artificial entity capable of perceiving its surroundings using sensors, making decisions, and then taking actions in response using actuators [1; 4].

Put simply, **agents** are the core building blocks of AI systems: artificial entities that sense their environment through sensors, make decisions, and act through actuators [1; 4].

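🧠 Keywords: agent = perception + decision + action

📎 MoA4NAD takeaway: this matches the three-layer structure you designed: a perception layer (pcap → JSONL), a decision layer (the analysis Agent), and an action layer (asset output and decision execution).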
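The perception + decision + action definition can be sketched as a tiny loop. This is only an illustration, not code from the survey or from MoA4NAD: `ReplayEnvironment`, `ThresholdAgent`, the `packets_per_s` field, and the limit of 100 are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


class ReplayEnvironment:
    """Toy environment that replays pre-parsed traffic records (hypothetical)."""

    def __init__(self, records: Iterable[Dict[str, int]]) -> None:
        self._records = iter(records)
        self.applied: List[str] = []  # actions the agent has taken so far

    def observe(self) -> Dict[str, int]:
        # Perception input: hand the next record to the agent.
        return next(self._records)

    def apply(self, action: str) -> None:
        # Action output: here we only record what the agent decided to do.
        self.applied.append(action)


@dataclass
class ThresholdAgent:
    """Hypothetical agent that flags records above a packet-rate limit."""

    limit: int = 100

    def perceive(self, env: ReplayEnvironment) -> Dict[str, int]:
        return env.observe()

    def decide(self, percept: Dict[str, int]) -> str:
        return "alert" if percept["packets_per_s"] > self.limit else "ignore"

    def act(self, env: ReplayEnvironment, action: str) -> None:
        env.apply(action)

    def step(self, env: ReplayEnvironment) -> str:
        """Run one perception -> decision -> action cycle."""
        percept = self.perceive(env)
        action = self.decide(percept)
        self.act(env, action)
        return action


env = ReplayEnvironment([{"packets_per_s": 10}, {"packets_per_s": 500}])
agent = ThresholdAgent(limit=100)
actions = [agent.step(env) for _ in range(2)]
print(actions)  # ['ignore', 'alert']
```

Keeping `perceive`, `decide`, and `act` as separate methods means each layer can be swapped independently, for instance replacing the threshold rule with an LLM call without touching the other two.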

🔹 3. Philosophical Roots → Computational Transition

The concept of agents originated in Philosophy, with roots tracing back to Aristotle and Hume [5]. It describes entities possessing desires, beliefs, intentions, and the ability to take actions [5]. This idea transitioned into computer science, intending to enable computers to understand users’ interests and autonomously perform actions on their behalf [6-8]. As AI advanced, the term “agent” depicted entities with autonomy, reactivity, pro-activeness and social ability [4; 9].

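In short, the notion of an "agent" comes from philosophy, going back to Aristotle and Hume [5], where it describes an entity with desires, beliefs, intentions, and the capacity to act.

Computer science later adopted the idea with the aim of having computers understand what users want and carry out tasks autonomously on their behalf [6-8].

As AI matured, "agent" came to mean an entity with autonomy, reactivity, pro-activeness, and social ability [4; 9].

🔍 Logic note: "intentionality" in philosophy → "autonomous execution" in computing.

📎 MoA4NAD takeaway: the BDI (Belief-Desire-Intention) model is a useful reference for designing the Agent's decision logic.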
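A BDI-style decision loop might look roughly like the sketch below. It is a deliberately simplified reading of Belief-Desire-Intention, in which beliefs are a dictionary updated from percepts, desires are candidate goals with a fixed priority, and the intention is the highest-priority desire that currently applies. The class names, the `anomaly` belief, and the plan steps are assumptions made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Desire:
    """A candidate goal: when it applies, how much it matters, how to pursue it."""

    name: str
    priority: int
    is_applicable: Callable[[Dict[str, object]], bool]
    plan: List[str]


@dataclass
class BDIAgent:
    desires: List[Desire]
    beliefs: Dict[str, object] = field(default_factory=dict)
    intention: Optional[Desire] = None

    def revise_beliefs(self, percept: Dict[str, object]) -> None:
        # Beliefs: merge the newest observation into what the agent holds true.
        self.beliefs.update(percept)

    def deliberate(self) -> None:
        # Intention: commit to the highest-priority desire that applies now.
        options = [d for d in self.desires if d.is_applicable(self.beliefs)]
        self.intention = max(options, key=lambda d: d.priority, default=None)

    def step(self, percept: Dict[str, object]) -> List[str]:
        """Revise beliefs, pick an intention, and return its plan steps."""
        self.revise_beliefs(percept)
        self.deliberate()
        return list(self.intention.plan) if self.intention else []


bdi_agent = BDIAgent(
    desires=[
        Desire("monitor", 1, lambda b: True, ["keep_watching"]),
        Desire(
            "contain_threat",
            2,
            lambda b: bool(b.get("anomaly")),
            ["isolate_host", "notify_analyst"],
        ),
    ]
)

quiet_plan = bdi_agent.step({"anomaly": False})
alarm_plan = bdi_agent.step({"anomaly": True})
print(quiet_plan, alarm_plan)
```

Full BDI architectures also handle plan failure and intention reconsideration; the sketch leaves those out to keep the three concepts visible.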

🔹 4. Towards AGI

AI agents are now acknowledged as a pivotal stride towards achieving Artificial General Intelligence (AGI) [4; 11; 12].

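Put differently, agents are now treated as a key step on the road **toward Artificial General Intelligence (AGI)** [4; 11; 12].

🧠 Keywords: AGI = general intelligence | agent = the path to get there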

🔹 5. Limitations of Traditional Agents

From the mid-20th century on, AI agents mainly focused on symbolic reasoning or specific tasks like Go and Chess [19-21]. These studies emphasized algorithms and training strategies but ignored inherent general abilities like knowledge memory, long-term planning, generalization and interaction [22; 23].

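In short, from the mid-20th century onward, agent research concentrated on symbolic reasoning or on narrow tasks such as Go and chess [19-21].

That work prized algorithms and training strategies while neglecting the model's own general abilities: knowledge memory, long-term planning, generalization, and interaction [22; 23].

🔍 Logic note: pointing out the limits of older AI agents sets up the argument for LLMs.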

🔹 6. The Rise of LLMs as Sparks for AGI

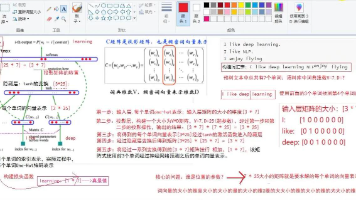

The development of large language models (LLMs) has brought hope for agents [24-26]. According to the World Scope (WS) framework [30] with five levels — Corpus, Internet, Perception, Embodiment, Social — LLMs now occupy Level 2 (Internet). Despite this, they show strong abilities in knowledge acquisition, instruction comprehension, planning and reasoning, earning the title of “sparks for AGI” [31].

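In short, LLMs have given agent research new hope [24-26].

The World Scope framework [30] describes five levels:

1️⃣ Corpus 2️⃣ Internet 3️⃣ Perception 4️⃣ Embodiment 5️⃣ Social.

LLMs currently sit at level 2 (text input and output at Internet scale), yet they already show strong knowledge acquisition, instruction comprehension, reasoning, and planning, which is why they are called "sparks for AGI" [31].

📎 MoA4NAD takeaway: the LLM can serve as the core of the "brain" layer, with tools (Function Call / API / Sensors) extending perception and action to levels 3–4.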
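One way to picture "LLM as brain, tools as perception and action" is a small dispatcher: the model emits a structured tool request and plain code executes it. The sketch below makes several assumptions. `fake_llm` is a stand-in that returns canned JSON and is not any real model API, and the tool names `read_sensor` and `send_alert` and the JSON shape are invented for the example.

```python
import json
from typing import Callable, Dict

# Registry of callable tools, keyed by function name.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Decorator that registers a function as a tool the brain may call."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def read_sensor(name: str) -> str:
    # Hypothetical perception tool; a real one would query a device or API.
    readings = {"temperature": "21.5 C"}
    return readings.get(name, "unknown sensor")


@tool
def send_alert(message: str) -> str:
    # Hypothetical action tool; a real one would notify someone.
    return f"alert sent: {message}"


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: returns a JSON tool request as text."""
    if "temperature" in prompt:
        return json.dumps({"tool": "read_sensor", "args": {"name": "temperature"}})
    return json.dumps({"tool": "send_alert", "args": {"message": prompt}})


def run_agent_turn(prompt: str, llm: Callable[[str], str] = fake_llm) -> str:
    """Ask the brain what to do, then execute the requested tool."""
    request = json.loads(llm(prompt))
    fn = TOOLS.get(request["tool"])
    if fn is None:
        return f"unknown tool: {request['tool']}"
    return fn(**request["args"])


print(run_agent_turn("what is the temperature?"))
print(run_agent_turn("disk full on host-7"))
```

Validating the tool name before calling it matters in practice, since a model may request a tool that does not exist.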

🔹 7. From LLM to Agent Society

If we elevate LLMs to agents and equip them with expanded perception and action spaces, they can reach Level 3–4 (Perception & Embodiment). Placed together, cooperative or competitive agents may form social phenomena, approaching Level 5 (Social). Figure 1 envisions a harmonious society of AI agents and humans.

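In short, turning an LLM into an agent with broader perception and action spaces moves it toward levels 3 and 4 (Perception and Embodiment).

When multiple LLM agents interact, social phenomena may emerge, bringing them close to level 5 (Social).

Figure 1 depicts a harmonious society in which humans and AI agents coexist.

🧠 Keywords: multi-agent interaction | emergent behavior | agent society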

🔹 8. Structure of This Survey

In this paper, we present a comprehensive survey on LLM-based agents.

- § 2 traces the origin of agents and their philosophical foundation.

- § 3 proposes a conceptual framework with three parts: brain, perception, action.

- § 4 reviews applications in single-agent, multi-agent and human-agent settings.

- § 5 discusses the Agent Society concept and emergent social behaviors.

- § 6 covers key topics and open problems — evaluation, risks, scaling, and the path to AGI.

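In short, the survey moves from the philosophical and technical origins of agents (§ 2), through a general brain-perception-action framework (§ 3) and applications in single-agent, multi-agent, and human-agent settings (§ 4), to the agent society and its group behavior (§ 5), and closes with open problems such as evaluation, risks, scaling, and the path to AGI (§ 6).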

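📚 Summary of Core Ideas

| Topic | Key point | Takeaway |
|---|---|---|
| Philosophical origins | Intelligence = perception + understanding + response | An LLM's language ability can be mapped onto a perception-action loop |
| Evolution of AI | From symbolic AI → data-driven LLMs | The focus shifts from algorithms to model capabilities |
| Definition of agent | Autonomy, reactivity, pro-activeness, social ability | Design coordination rules for multiple Agents |
| LLM breakthrough | LLMs as sparks for AGI | Use the LLM as the brain; extend perception and action |
| Research roadmap | Brain–Perception–Action → Application → Society → Open Problems | Directly applicable guidance for the MoA4NAD framework |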
