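Integrating AI Real-Time Matting into Unity: A Detailed Solution That Works Without a Green Screen

VideoKit is a multimedia solution for the Unity engine. It provides real-time matting without a green screen, video recording (MP4, WEBM, and other formats), camera control, audio processing, and social sharing, and it supports cross-platform deployment. Getting started involves registering on the official website, generating an API key, and configuring the VideoKitCameraManager class in Unity.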

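Preface

VideoKit is a feature-rich multimedia toolkit. This article introduces what it offers and walks through using it for real-time matting in Unity.

I. What is VideoKit?

VideoKit is a full-featured user-generated content solution built specifically for the Unity engine and written in C#. It is currently in alpha, so the C# API may change.

Features

- Video recording: supports MP4, WEBM, ProRes, and other formats, and can also generate animated images.

- Camera: gives fine-grained control over camera parameters such as focus, exposure, and zoom.

- Audio processing: controls the microphone audio format and provides echo cancellation.

- Social sharing: provides a native share sheet for saving videos and images to the camera roll or sharing them to social platforms.

- Cross-platform support: build once and deploy to Android, iOS, macOS, WebGL, and Windows.

- Use cases: suited to game development, education apps, social media apps, and similar scenarios. In a game, players can record and share highlights; in education, teachers can create video tutorials and students can record feedback or coursework.

II. Usage steps

1. Register a VideoKit account on the official website

Open the registration page on the official VideoKit website.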

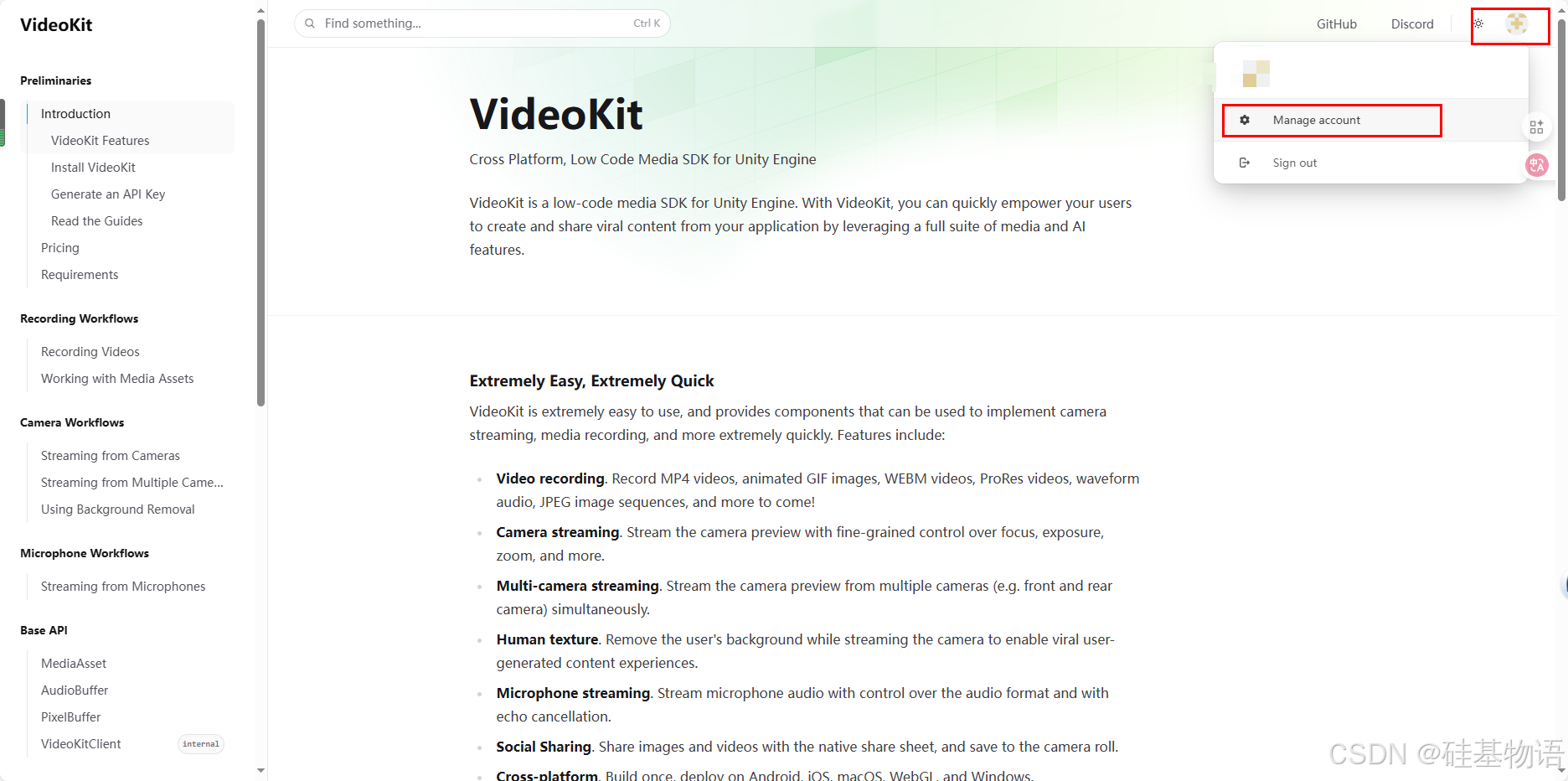

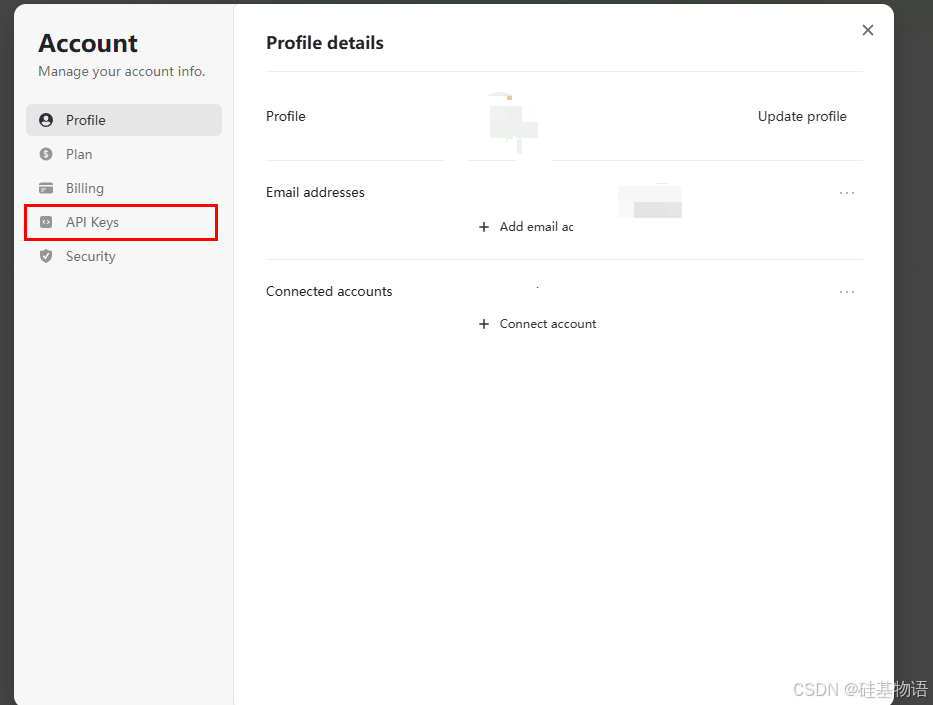

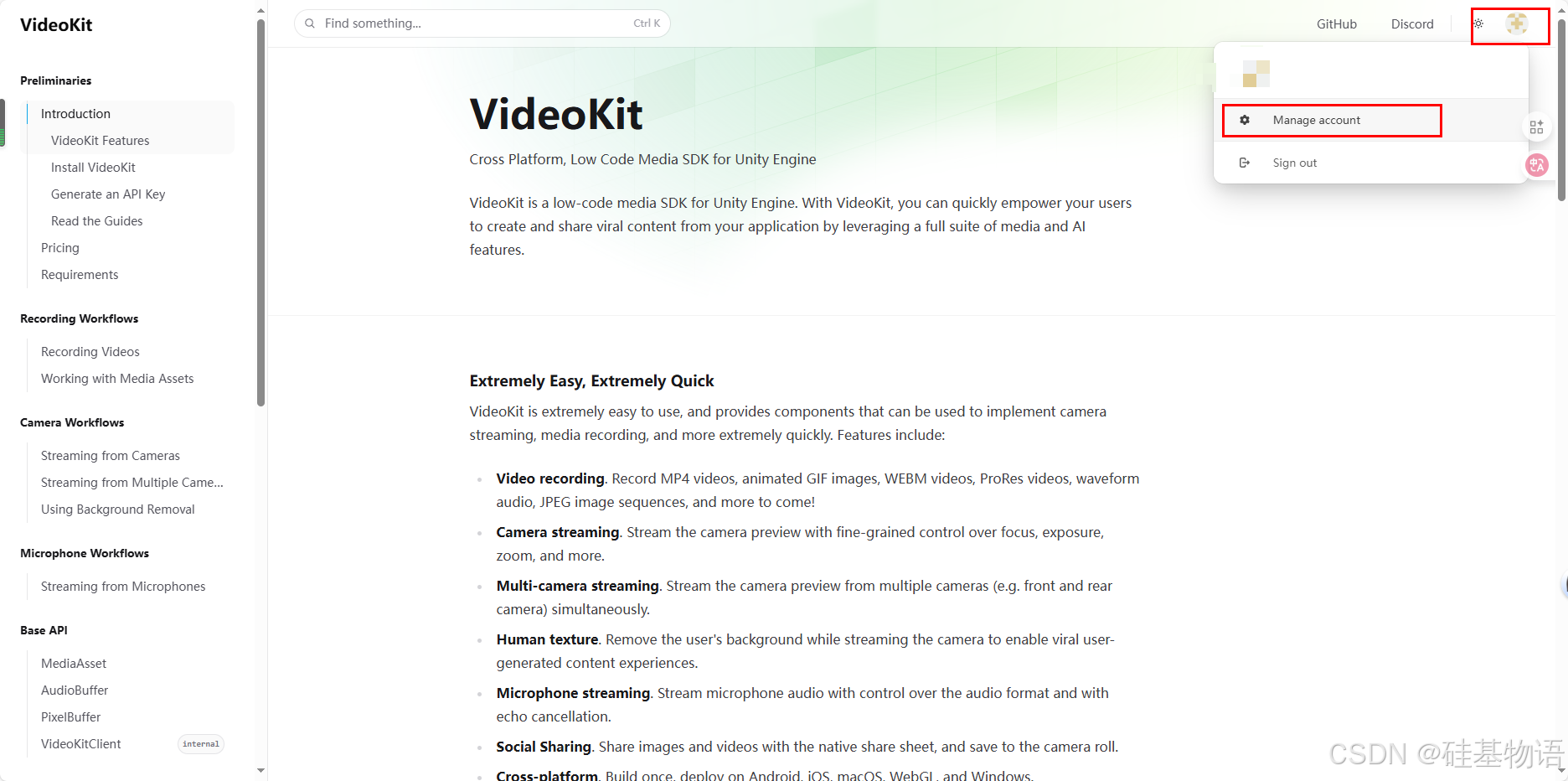

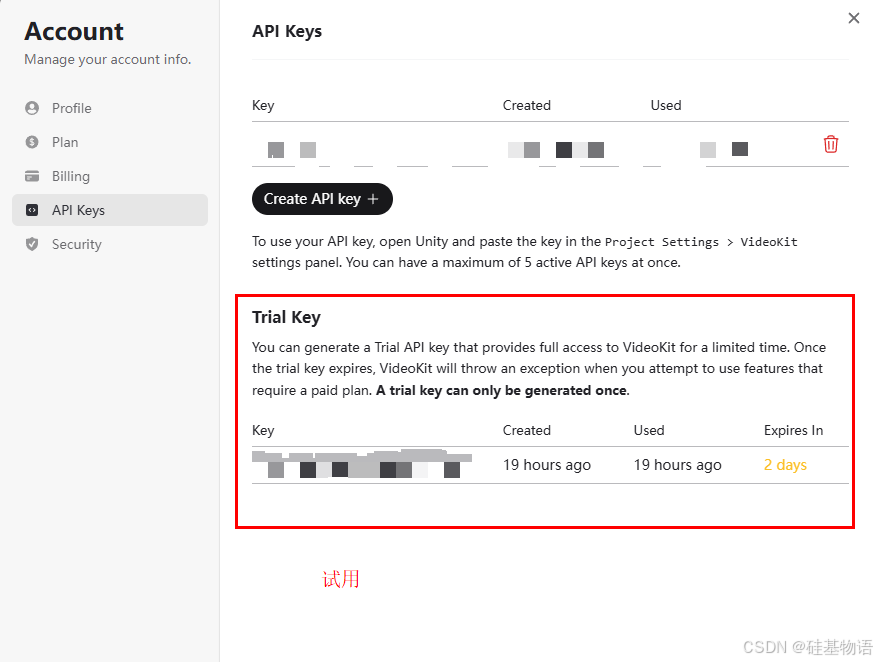

2. Generate an API key

III. Running the project

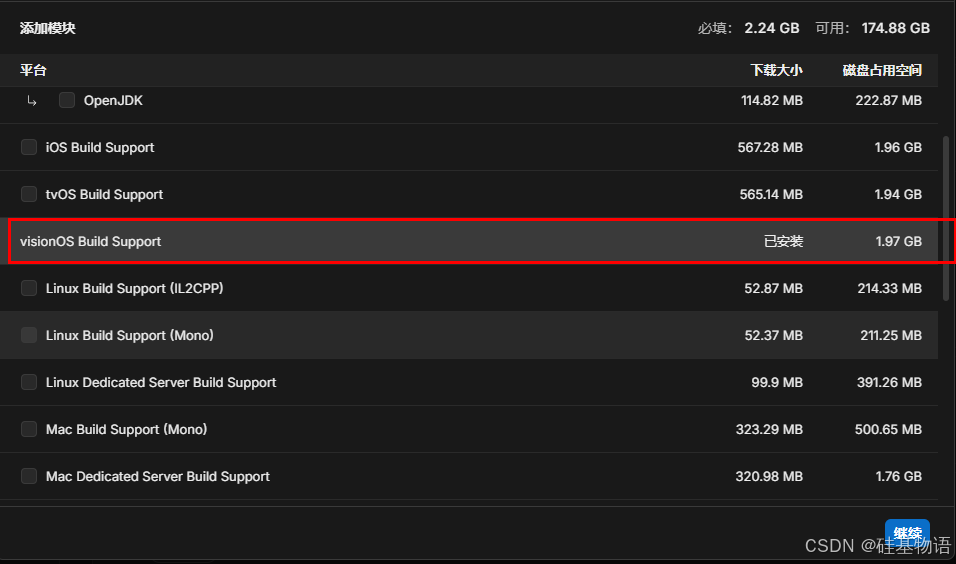

1. With Unity 2023 or later, the visionOS platform module must be installed alongside the editor; otherwise the project reports errors.

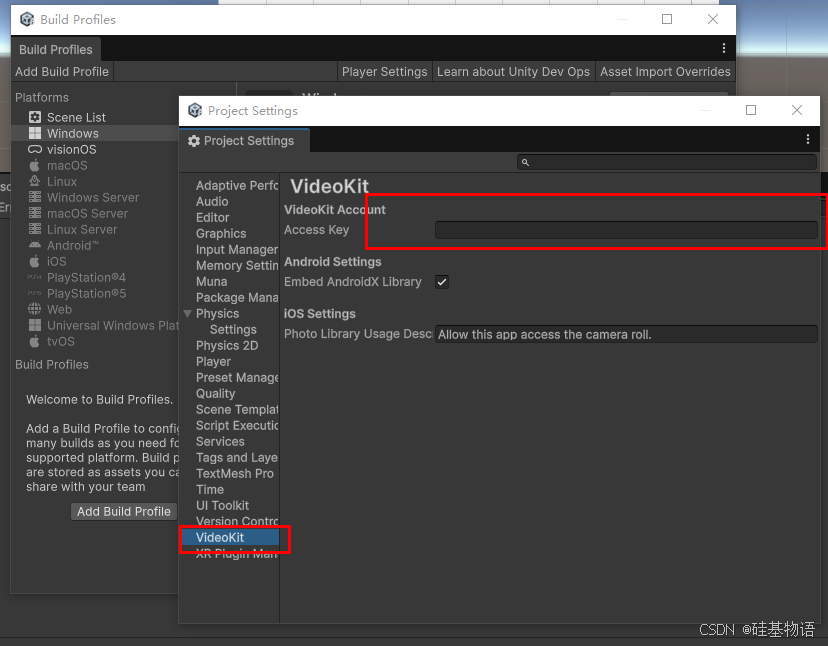

2. Enter the VideoKit access key

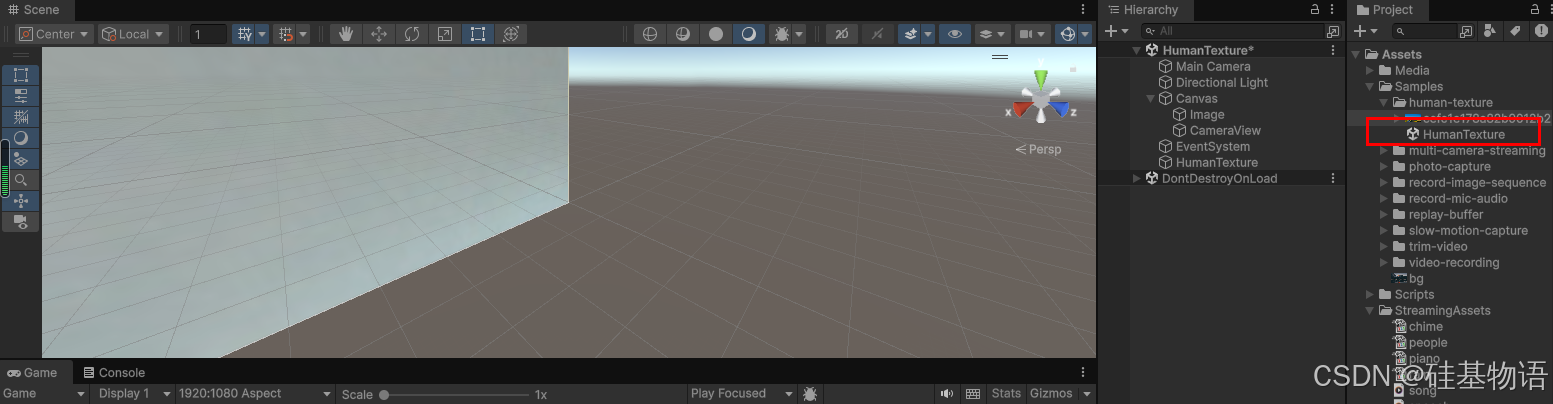

3. On to the code. The two classes that matter are the official VideoKitCameraManager and VideoKitCameraView.

```csharp
namespace VideoKit {

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Linq;
    using System.Threading.Tasks;
    using UnityEngine;
    using Internal;

    /// <summary>
    /// VideoKit camera manager for streaming video from camera devices.
    /// </summary>
    [Tooltip(@"VideoKit camera manager for streaming video from camera devices.")]
    [HelpURL(@"https://videokit.ai/reference/videokitcameramanager")]
    [DisallowMultipleComponent]
    public sealed class VideoKitCameraManager : MonoBehaviour {

        #region --Enumerations--
        /// <summary>
        /// Camera manager capabilities.
        /// </summary>
        [Flags]
        public enum Capabilities : int {
            /// <summary>
            /// Stream depth data along with the camera preview data.
            /// This flag adds a minimal performance cost, so enable it only when necessary.
            /// This flag is only supported on iOS and Android.
            /// </summary>
            Depth = 0b0001,
            /// <summary>
            /// Generate a human texture from the camera preview stream.
            /// This flag adds a variable performance cost, so enable it only when necessary.
            /// </summary>
            HumanTexture = 0b00110,
        }

        /// <summary>
        /// Camera facing.
        /// </summary>
        [Flags]
        public enum Facing : int {
            /// <summary>
            /// User-facing camera.
            /// </summary>
            User = 0b10,
            /// <summary>
            /// World-facing camera.
            /// </summary>
            World = 0b01,
        }

        /// <summary>
        /// Camera resolution presets.
        /// </summary>
        public enum Resolution : int {
            /// <summary>
            /// Use the default camera resolution.
            /// With this preset, the camera resolution will not be set.
            /// </summary>
            Default = 0,
            /// <summary>
            /// Lowest resolution supported by the camera device.
            /// </summary>
            Lowest = 1,
            /// <summary>
            /// SD resolution.
            /// </summary>
            _640x480 = 2,
            /// <summary>
            /// HD resolution.
            /// </summary>
            [InspectorName(@"1280x720 (HD)")]
            _1280x720 = 3,
            /// <summary>
            /// Full HD resolution.
            /// </summary>
            [InspectorName(@"1920x1080 (Full HD)")]
            _1920x1080 = 4,
            /// <summary>
            /// 2K WQHD resolution.
            /// </summary>
            [InspectorName(@"2560x1440 (2K)")]
            _2560x1440 = 6,
            /// <summary>
            /// 4K UHD resolution.
            /// </summary>
            [InspectorName(@"3840x2160 (4K)")]
            _3840x2160 = 5,
            /// <summary>
            /// Highest resolution supported by the camera device.
            /// Using this resolution is strongly not recommended.
            /// </summary>
            Highest = 10
        }

        /// <summary>
        /// Camera frame rate presets.
        /// </summary>
        public enum FrameRate : int {
            /// <summary>
            /// Use the default camera frame rate.
            /// With this preset, the camera frame rate will not be set.
            /// </summary>
            Default = 0,
            /// <summary>
            /// Use the lowest frame rate supported by the camera.
            /// </summary>
            Lowest = 1,
            /// <summary>
            /// 15FPS.
            /// </summary>
            _15 = 15,
            /// <summary>
            /// 30FPS.
            /// </summary>
            _30 = 30,
            /// <summary>
            /// 60FPS.
            /// </summary>
            _60 = 60,
            /// <summary>
            /// 120FPS.
            /// </summary>
            _120 = 120,
            /// <summary>
            /// 240FPS.
            /// </summary>
            _240 = 240
        }
        #endregion

        #region --Inspector--
        [Header(@"Configuration")]
        /// <summary>
        /// Desired camera capabilities.
        /// </summary>
        [Tooltip(@"Desired camera capabilities.")]
        public Capabilities capabilities = 0;

        /// <summary>
        /// Whether to start the camera preview as soon as the component awakes.
        /// </summary>
        [Tooltip(@"Whether to start the camera preview as soon as the component awakes.")]
        public bool playOnAwake = true;

        [Header(@"Camera Selection")]
        /// <summary>
        /// Desired camera facing.
        /// </summary>
        [SerializeField, Tooltip(@"Desired camera facing.")]
        private Facing _facing = Facing.User;

        /// <summary>
        /// Whether the specified facing is required.
        /// When false, the camera manager will fallback to a default camera when a camera with the requested facing is not available.
        /// </summary>
        [Tooltip(@"Whether the specified facing is required. When false, the camera manager will fallback to a default camera when a camera with the requested facing is not available.")]
        public bool facingRequired = false;

        [Header(@"Camera Settings")]
        /// <summary>
        /// Desired camera resolution.
        /// </summary>
        [Tooltip(@"Desired camera resolution.")]
        public Resolution resolution = Resolution._1280x720;

        /// <summary>
        /// Desired camera frame rate.
        /// </summary>
        [Tooltip(@"Desired camera frame rate.")]
        public FrameRate frameRate = FrameRate._30;

        /// <summary>
        /// Desired camera focus mode.
        /// </summary>
        [Tooltip(@"Desired camera focus mode.")]
        public CameraDevice.FocusMode focusMode = CameraDevice.FocusMode.Continuous;

        /// <summary>
        /// Desired camera exposure mode.
        /// </summary>
        [Tooltip(@"Desired camera exposure mode.")]
        public CameraDevice.ExposureMode exposureMode = CameraDevice.ExposureMode.Continuous;
        #endregion

        #region --Client API--
        /// <summary>
        /// Get or set the camera device used for streaming.
        /// </summary>
        public MediaDevice? device {
            get => _device;
            set {
                // Switch cameras without disposing output
                // We deliberately skip configuring the camera like we do in `StartRunning`
                if (running) {
                    _device!.StopRunning();
                    _device = value;
                    if (_device != null)
                        StartRunning(_device, OnCameraBuffer);
                }
                // Handle trivial case
                else
                    _device = value;
            }
        }

        /// <summary>
        /// Get or set the desired camera facing.
        /// </summary>
        public Facing facing {
            get => _facing;
            set {
                if (_facing == value)
                    return;
                device = GetDefaultDevice(devices, _facing = value, facingRequired);
            }
        }

        /// <summary>
        /// Whether the camera is running.
        /// </summary>
        public bool running => _device?.running ?? false;

        /// <summary>
        /// Event raised when a new pixel buffer is provided by the camera device.
        /// NOTE: This event is invoked on a dedicated camera thread, not the Unity main thread.
        /// </summary>
        public event Action<CameraDevice, PixelBuffer>? OnPixelBuffer;

        /// <summary>
        /// Start the camera preview.
        /// </summary>
        public async void StartRunning() => await StartRunningAsync();

        /// <summary>
        /// Start the camera preview.
        /// </summary>
        public async Task StartRunningAsync() {
            // Check
            if (!isActiveAndEnabled)
                throw new InvalidOperationException(@"VideoKit: Camera manager failed to start running because component is disabled");
            // Check
            if (running)
                return;
            // Request camera permissions
            var permissions = await CameraDevice.CheckPermissions(request: true);
            if (permissions != MediaDevice.PermissionStatus.Authorized)
                throw new InvalidOperationException(@"VideoKit: User did not grant camera permissions");
            // Check device
            devices = await GetAllDevices();
            _device ??= GetDefaultDevice(devices, _facing, facingRequired);
            if (_device == null)
                throw new InvalidOperationException(@"VideoKit: Camera manager failed to start running because no camera device is available");
            // Configure camera(s)
            foreach (var cameraDevice in EnumerateCameraDevices(_device)) {
                if (resolution != Resolution.Default)
                    cameraDevice.previewResolution = GetResolutionFrameSize(resolution);
                if (frameRate != FrameRate.Default)
                    cameraDevice.frameRate = (int)frameRate;
                if (cameraDevice.IsFocusModeSupported(focusMode))
                    cameraDevice.focusMode = focusMode;
                if (cameraDevice.IsExposureModeSupported(exposureMode))
                    cameraDevice.exposureMode = exposureMode;
            }
            // Preload human texture predictor
            var muna = VideoKitClient.Instance!.muna;
            if (capabilities.HasFlag(Capabilities.HumanTexture)) {
                try { await muna.Predictions.Create(HumanTextureTag, new()); }
                catch { // CHECK // REMOVE
                    var predictorCachePath = Path.Join(Application.persistentDataPath, @"fxn", @"predictors");
                    if (Directory.Exists(predictorCachePath))
                        Directory.Delete(predictorCachePath, recursive: true);
                }
                await muna.Predictions.Create(HumanTextureTag, new());
            }
            // Start running
            StartRunning(_device, OnCameraBuffer);
            // Listen for events
            var events = VideoKitEvents.Instance;
            events.onPause += OnPause;
            events.onResume += OnResume;
        }

        /// <summary>
        /// Stop the camera preview.
        /// </summary>
        public void StopRunning() {
            var events = VideoKitEvents.OptionalInstance;
            if (events != null) {
                events.onPause -= OnPause;
                events.onResume -= OnResume;
            }
            _device?.StopRunning();
        }
        #endregion

        #region --Operations--
        private MediaDevice[]? devices;
        private MediaDevice? _device;
        public const string HumanTextureTag = @"@videokit/human-texture";

        private void Awake() {
            if (playOnAwake)
                StartRunning();
        }

        private static void StartRunning(
            MediaDevice device,
            Action<CameraDevice, PixelBuffer> handler
        ) {
            if (device is CameraDevice cameraDevice)
                cameraDevice.StartRunning(pixelBuffer => handler(cameraDevice, pixelBuffer));
            else if (device is MultiCameraDevice multiCameraDevice)
                multiCameraDevice.StartRunning(handler);
            else
                throw new InvalidOperationException($"Cannot start running because media device has unsupported type: {device.GetType()}");
        }

        private unsafe void OnCameraBuffer(
            CameraDevice cameraDevice,
            PixelBuffer pixelBuffer
        ) => OnPixelBuffer?.Invoke(cameraDevice, pixelBuffer);

        private void OnPause() => _device?.StopRunning();

        private void OnResume() {
            if (_device != null)
                StartRunning(_device, OnCameraBuffer);
        }

        private void OnDestroy() => StopRunning();
        #endregion

        #region --Utilities--
        internal static IEnumerable<CameraDevice> EnumerateCameraDevices(MediaDevice? device) {
            if (device is CameraDevice cameraDevice)
                yield return cameraDevice;
            else if (device is MultiCameraDevice multiCameraDevice)
                foreach (var camera in multiCameraDevice.cameras)
                    yield return camera;
            else
                yield break;
        }

        internal static Facing GetCameraFacing(MediaDevice mediaDevice) => mediaDevice switch {
            CameraDevice cameraDevice => cameraDevice.frontFacing ? Facing.User : Facing.World,
            MultiCameraDevice multiCameraDevice => multiCameraDevice.cameras.Select(GetCameraFacing).Aggregate((a, b) => a | b),
            _ => 0,
        };

        private static async Task<MediaDevice[]> GetAllDevices() {
            var cameraDevices = await CameraDevice.Discover(); // MUST always come before multi-cameras
            var multiCameraDevices = await MultiCameraDevice.Discover();
            var result = cameraDevices.Cast<MediaDevice>().Concat(multiCameraDevices).ToArray();
            return result;
        }

        private static MediaDevice? GetDefaultDevice(
            MediaDevice[]? devices,
            Facing facing,
            bool facingRequired
        ) {
            facing &= Facing.User | Facing.World;
            var fallbackDevice = facingRequired ? null : devices?.FirstOrDefault();
            var requestedDevice = devices?.FirstOrDefault(device => GetCameraFacing(device).HasFlag(facing));
            return requestedDevice ?? fallbackDevice;
        }

        private static (int width, int height) GetResolutionFrameSize(Resolution resolution) => resolution switch {
            Resolution.Lowest => (176, 144),
            Resolution._640x480 => (640, 480),
            Resolution._1280x720 => (1280, 720),
            Resolution._1920x1080 => (1920, 1080),
            Resolution._2560x1440 => (2560, 1440),
            Resolution._3840x2160 => (3840, 2160),
            Resolution.Highest => (5120, 2880),
            _ => (1280, 720),
        };
        #endregion
    }
}
```
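The doc comment on the manager's `OnPixelBuffer` event says it is raised on a dedicated camera thread rather than the Unity main thread, so a handler should not touch Unity objects directly. One way to consume it safely is sketched below. The component name `CameraFrameCounter` is hypothetical, and the sketch assumes `VideoKitCameraManager`, `CameraDevice`, and `PixelBuffer` are all reachable through `using VideoKit;` in the installed version.

```csharp
using System.Threading;
using UnityEngine;
using VideoKit; // assumption: all three VideoKit types used below live in this namespace

// Hypothetical helper: counts camera frames without touching Unity objects off the main thread.
public sealed class CameraFrameCounter : MonoBehaviour {

    [SerializeField] private VideoKitCameraManager cameraManager; // assign in the Inspector

    private int pendingFrames; // incremented on the camera thread, drained on the main thread

    private void OnEnable() => cameraManager.OnPixelBuffer += HandlePixelBuffer;

    private void OnDisable() => cameraManager.OnPixelBuffer -= HandlePixelBuffer;

    // Runs on the camera thread: only do thread-safe work here.
    private void HandlePixelBuffer(CameraDevice device, PixelBuffer buffer) =>
        Interlocked.Increment(ref pendingFrames);

    // Runs on the Unity main thread: safe to log or update UI.
    private void Update() {
        int frames = Interlocked.Exchange(ref pendingFrames, 0);
        if (frames > 0)
            Debug.Log($"Received {frames} camera frame(s) since the last Update");
    }
}
```

The handler only bumps a counter with `Interlocked`, and `Update` drains it on the main thread. The same hand-off pattern applies to any per-frame state that has to reach Unity APIs.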

The VideoKitCameraView class follows (the enclosing namespace declaration is not shown in this excerpt):

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.CompilerServices;
using UnityEngine;
using UnityEngine.Events;
using UnityEngine.EventSystems;
using UnityEngine.Serialization;
using UnityEngine.UI;
using Unity.Collections.LowLevel.Unsafe;
using Muna;
using Internal;
using Facing = VideoKitCameraManager.Facing;
using Image = Muna.Image;

/// <summary>
/// VideoKit UI component for displaying the camera preview from a camera manager.
/// </summary>
[Tooltip(@"VideoKit UI component for displaying the camera preview from a camera manager.")]
[RequireComponent(typeof(RawImage), typeof(AspectRatioFitter), typeof(EventTrigger))]
[HelpURL(@"https://videokit.ai/reference/videokitcameraview")]
[DisallowMultipleComponent]
public sealed partial class VideoKitCameraView : MonoBehaviour, IPointerUpHandler, IBeginDragHandler, IDragHandler {

    #region --Enumerations--
    /// <summary>
    /// View mode.
    /// </summary>
    public enum ViewMode : int {
        /// <summary>
        /// Display the camera texture.
        /// </summary>
        CameraTexture = 0,
        /// <summary>
        /// Display the human texture.
        /// </summary>
        HumanTexture = 1,
    }

    /// <summary>
    /// Gesture mode.
    /// </summary>
    public enum GestureMode : int {
        /// <summary>
        /// Do not respond to gestures.
        /// </summary>
        None = 0,
        /// <summary>
        /// Detect tap gestures.
        /// </summary>
        Tap = 1,
        /// <summary>
        /// Detect two-finger pinch gestures.
        /// </summary>
        Pinch = 2,
        /// <summary>
        /// Detect single-finger drag gestures.
        /// This gesture mode is recommended when the user is holding a button to record a video.
        /// </summary>
        Drag = 3,
    }
    #endregion

    #region --Inspector--
    [Header(@"Configuration")]
    /// <summary>
    /// VideoKit camera manager.
    /// </summary>
    [Tooltip(@"VideoKit camera manager.")]
    public VideoKitCameraManager? cameraManager;

    /// <summary>
    /// Desired camera facing to display.
    /// </summary>
    [Tooltip(@"Desired camera facing to display.")]
    public Facing facing = Facing.User | Facing.World;

    /// <summary>
    /// View mode of the view.
    /// </summary>
    [Tooltip(@"View mode of the view.")]
    public ViewMode viewMode = ViewMode.CameraTexture;

    [Header(@"Gestures")]
    /// <summary>
    /// Focus gesture.
    /// </summary>
    [Tooltip(@"Focus gesture."), FormerlySerializedAs(@"focusMode")]
    public GestureMode focusGesture = GestureMode.None;

    /// <summary>
    /// Exposure gesture.
    /// </summary>
    [Tooltip(@"Exposure gesture."), FormerlySerializedAs(@"exposureMode")]
    public GestureMode exposureGesture = GestureMode.None;

    /// <summary>
    /// Zoom gesture.
    /// </summary>
    [Tooltip(@"Zoom gesture."), FormerlySerializedAs(@"zoomMode")]
    public GestureMode zoomGesture = GestureMode.None;

    [Header(@"Events")]
    /// <summary>
    /// Event raised when a new camera frame is available in the preview texture.
    /// </summary>
    [Tooltip(@"Event raised when a new camera frame is available.")]
    public UnityEvent? OnCameraFrame;
    #endregion

    #region --Client API--
    /// <summary>
    /// Get the camera device that this view displays.
    /// </summary>
    internal CameraDevice? device => VideoKitCameraManager // CHECK // Should we make this public??
        .EnumerateCameraDevices(cameraManager?.device)
        .FirstOrDefault(device => facing.HasFlag(VideoKitCameraManager.GetCameraFacing(device)));

    /// <summary>
    /// Get the camera preview texture.
    /// </summary>
    public Texture2D? texture { get; private set; }

    /// <summary>
    /// Get or set the camera preview rotation.
    /// This is automatically reset when the component is enabled.
    /// </summary>
    public PixelBuffer.Rotation rotation { get; set; }

    /// <summary>
    /// Event raised when a new pixel buffer is available.
    /// Unlike the `VideoKitCameraManager`, this pixel buffer has an `RGBA8888` format and is rotated upright.
    /// This event is invoked on a dedicated camera thread, NOT the Unity main thread.
    /// </summary>
    public event Action<PixelBuffer>? OnPixelBuffer;
    #endregion

    #region --Operations--
    private PixelBuffer pixelBuffer;
    private RawImage rawImage;
    private AspectRatioFitter aspectFitter;
    private readonly object fence = new();
    private static readonly List<RuntimePlatform> OrientationSupport = new() {
        RuntimePlatform.Android,
        RuntimePlatform.IPhonePlayer
    };

    private void Reset() {
        cameraManager = FindFirstObjectByType<VideoKitCameraManager>();
    }

    private void Awake() {
        rawImage = GetComponent<RawImage>();
        aspectFitter = GetComponent<AspectRatioFitter>();
    }

    private void OnEnable() {
        rotation = GetPreviewRotation(Screen.orientation);
        if (cameraManager != null)
            cameraManager.OnPixelBuffer += OnCameraBuffer;
    }

    private unsafe void Update() {
        bool upload = false;
        lock (fence) {
            if (pixelBuffer == IntPtr.Zero)
                return;
            if (
                texture != null &&
                (texture.width != pixelBuffer.width || texture.height != pixelBuffer.height)
            ) {
                Texture2D.Destroy(texture);
                texture = null;
            }
            if (texture == null)
                texture = new Texture2D(
                    pixelBuffer.width,
                    pixelBuffer.height,
                    TextureFormat.RGBA32,
                    false
                );
            if (viewMode == ViewMode.CameraTexture) {
                using var buffer = new PixelBuffer(
                    texture.width,
                    texture.height,
                    PixelBuffer.Format.RGBA8888,
                    texture.GetRawTextureData<byte>(),
                    mirrored: false
                );
                pixelBuffer.CopyTo(buffer);
                upload = true;
            } else if (viewMode == ViewMode.HumanTexture) {
                var muna = VideoKitClient.Instance!.muna;
                var prediction = muna.Predictions.Create(
                    tag: VideoKitCameraManager.HumanTextureTag,
                    inputs: new () {
                        ["image"] = new Image(
                            (byte*)pixelBuffer.data.GetUnsafePtr(),
                            pixelBuffer.width,
                            pixelBuffer.height,
                            4
                        )
                    }
                ).Throw().Result;
                var image = (Image)prediction.results![0]!;
                image.ToTexture(texture);
                upload = true;
            }
        }
        if (upload)
            texture.Apply();
        rawImage.texture = texture;
        aspectFitter.aspectRatio = (float)texture.width / texture.height;
        OnCameraFrame?.Invoke();
    }

    private unsafe void OnCameraBuffer(
        CameraDevice cameraDevice,
        PixelBuffer cameraBuffer
    ) {
        if ((VideoKitCameraManager.GetCameraFacing(cameraDevice) & facing) == 0)
            return;
        var (width, height) = GetPreviewTextureSize(
            cameraBuffer.width,
            cameraBuffer.height,
            rotation
        );
        lock (fence) {
            if (
                pixelBuffer != IntPtr.Zero &&
                (pixelBuffer.width != width || pixelBuffer.height != height)
            ) {
                pixelBuffer.Dispose();
                pixelBuffer = default;
            }
            if (pixelBuffer == IntPtr.Zero)
                pixelBuffer = new PixelBuffer(
                    width,
                    height,
                    PixelBuffer.Format.RGBA8888,
                    mirrored: true
                );
            cameraBuffer.CopyTo(pixelBuffer, rotation: rotation);
        }
        OnPixelBuffer?.Invoke(pixelBuffer);
    }

    private void OnDisable() {
        if (cameraManager != null)
            cameraManager.OnPixelBuffer -= OnCameraBuffer;
    }

    private void OnDestroy() {
        pixelBuffer.Dispose();
        pixelBuffer = default;
    }
    #endregion

    #region --Handlers--
    void IPointerUpHandler.OnPointerUp(PointerEventData data) {
        // Check device
        var device = this.device;
        if (device == null)
            return;
        // Check focus mode
        if (focusGesture != GestureMode.Tap && exposureGesture != GestureMode.Tap)
            return;
        // Get press position
        var rectTransform = transform as RectTransform;
        if (!RectTransformUtility.ScreenPointToLocalPointInRectangle(
            rectTransform,
            data.position,
            data.pressEventCamera, // or `enterEventCamera`
            out var localPoint
        ))
            return;
        // Focus
        var point = Rect.PointToNormalized(rectTransform!.rect, localPoint);
        if (device.focusPointSupported && focusGesture == GestureMode.Tap)
            device.SetFocusPoint(point.x, point.y);
        if (device.exposurePointSupported && exposureGesture == GestureMode.Tap)
            device.SetExposurePoint(point.x, point.y);
    }

    void IBeginDragHandler.OnBeginDrag(PointerEventData data) {
    }

    void IDragHandler.OnDrag(PointerEventData data) {
    }
    #endregion

    #region --Utilities--
    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static PixelBuffer.Rotation GetPreviewRotation(ScreenOrientation orientation) => orientation switch {
        var _ when !OrientationSupport.Contains(Application.platform) => PixelBuffer.Rotation._0,
        ScreenOrientation.LandscapeLeft => PixelBuffer.Rotation._0,
        ScreenOrientation.Portrait => PixelBuffer.Rotation._90,
        ScreenOrientation.LandscapeRight => PixelBuffer.Rotation._180,
        ScreenOrientation.PortraitUpsideDown => PixelBuffer.Rotation._270,
        _ => PixelBuffer.Rotation._0
    };

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static (int width, int height) GetPreviewTextureSize(
        int width,
        int height,
        PixelBuffer.Rotation rotation
    ) => rotation == PixelBuffer.Rotation._90 || rotation == PixelBuffer.Rotation._270 ?
        (height, width) :
        (width, height);
    #endregion
}
```
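The two classes test the `Facing` flags in two different ways. The view accepts a frame when the camera's facing overlaps its mask (`&` compared with zero), while the manager's `GetDefaultDevice` requires the device to carry every requested bit (`HasFlag`). The standalone sketch below illustrates the difference. It uses a local copy of the enum with the same values and needs no Unity or VideoKit types; `FacingDemo`, `Overlaps`, and `HasAll` are hypothetical names.

```csharp
using System;

// Local copy of the enum for illustration only; values match the listing above.
[Flags]
enum Facing : int { User = 0b10, World = 0b01 }

static class FacingDemo {

    // View-style filter: true when the camera's facing shares any bit with the mask.
    static bool Overlaps(Facing mask, Facing cameraFacing) => (cameraFacing & mask) != 0;

    // Manager-style selection: true when the device carries every requested bit.
    static bool HasAll(Facing deviceFacing, Facing requested) => deviceFacing.HasFlag(requested);

    static void Main() {
        var both = Facing.User | Facing.World;
        Console.WriteLine(Overlaps(both, Facing.User));         // True: default view mask accepts a front camera
        Console.WriteLine(Overlaps(Facing.World, Facing.User)); // False: world-only view ignores a front camera
        Console.WriteLine(HasAll(both, Facing.User));           // True: a multi-camera device satisfies a User request
        Console.WriteLine(HasAll(Facing.User, both));           // False: a single front camera cannot satisfy User | World
    }
}
```

In practice this means a view left at its default `User | World` mask displays whichever camera is running, whereas requesting both facings on the manager only matches a multi-camera device.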

IV. Real-time matting result
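To get the matting result from the two components listed earlier, the view has to be switched to its `HumanTexture` mode, and the manager's `HumanTexture` capability lets it preload the predictor before the first frame. The following is a minimal sketch using only members visible in those listings. It assumes both components are in the scene, `playOnAwake` is unticked on the manager, the view's `cameraManager` field is already assigned in the Inspector, and both classes are reachable through `using VideoKit;` (adjust the namespace to the installed version). `MattingBootstrap` is a hypothetical name.

```csharp
using System;
using UnityEngine;
using VideoKit; // assumption: adjust if the installed version places the view in another namespace

// Hypothetical bootstrap component that enables green-screen-free matting at startup.
public sealed class MattingBootstrap : MonoBehaviour {

    [SerializeField] private VideoKitCameraManager cameraManager; // playOnAwake assumed to be off
    [SerializeField] private VideoKitCameraView cameraView;       // its cameraManager set in the Inspector

    private async void Start() {
        // Ask the manager to preload the human texture predictor when it starts.
        cameraManager.capabilities |= VideoKitCameraManager.Capabilities.HumanTexture;
        // Make the view display the matted human texture instead of the raw camera image.
        cameraView.viewMode = VideoKitCameraView.ViewMode.HumanTexture;
        try {
            await cameraManager.StartRunningAsync();
        } catch (InvalidOperationException ex) {
            // Thrown by the manager when permissions are denied or no camera is available.
            Debug.LogError($"Could not start the camera preview: {ex.Message}");
        }
    }
}
```

Starting the manager manually, instead of relying on `playOnAwake`, keeps the order deterministic: the capability flag is set before `StartRunningAsync` checks it.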

# Summary


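That covers today's topic. This article only touched on basic VideoKit usage; the library offers considerably more than what is shown here. In the Unity engine, VideoKit is C#-based and provides video recording (multiple formats plus animated image generation), fine-grained camera control, audio processing (including echo cancellation), and cross-platform social sharing, which suits game development, education apps, and similar scenarios. The name VideoKit is also used by other products: an Android SDK that provides low-latency video processing and live streaming through five modules (core, recording, player, editing, and live streaming), a Huawei product focused on playback and editing, and a mobile app focused on video compression and transcoding.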

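A note on the module error under Unity 2023 and later mentioned in the run steps: in the setup used here, the project reported errors until the visionOS platform module was installed. If errors persist after that, check which platform modules are installed for the editor version, check the plugin's dependencies, and try a fresh project template.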

Thanks for reading!
