Deploying on Huawei NPU cards

Large model deployment

300I DUO

Reference: https://blog.csdn.net/weixin_45724963/article/details/149979566?utm_medium=distribute.pc_relevant.none-task-blog-2defaultbaidujs_utm_term~default-0-149979566-blog-148839465.235

Base image

swr.cn-south-1.myhuaweicloud.com/ascendhub/mindie:1.0.0-300I-Duo-py311-openeuler24.03-lts

1) Start a container from the image

docker run -it --net=host --shm-size=1g \
  --name Qwen2.5-14B-Instruct \
  --device=/dev/davinci_manager \
  --device=/dev/hisi_hdc \
  --device=/dev/devmm_svm \
  --device=/dev/davinci6 \
  --device=/dev/davinci7 \
  -v /usr/local/Ascend/driver:/usr/local/Ascend/driver:ro \
  -v /usr/local/sbin:/usr/local/sbin:ro \
  -v /root/hw/Qwen/Qwen2.5-14B-Instruct:/model:ro \
  -v /root/hw:/root/hw \
  harbor.huaweisoft.com/huaweisoft/ai/mindie:1.0.0-300I-Duo-py311-openeuler24.03-lts bash

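Two things to note here. First, -v /root/hw/Qwen/Qwen2.5-14B-Instruct:/model:ro maps the model directory directly to /model.

Second, use -v /root/hw:/root/hw. Do not write -v /root:/root, because that overwrites /root inside the container.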
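
When the NPU IDs differ between servers, the --device flags are the only part of the command that changes. As a minimal sketch (the helper name npu_device_flags is hypothetical, not part of any Huawei tool), the flags used in the docker run command above can be generated from a list of host NPU IDs:

```shell
#!/usr/bin/env bash
# Print the --device flags for the given host NPU IDs (e.g. 6 7).
# The three management devices are the ones used in the docker run command above.
npu_device_flags() {
  local flags="--device=/dev/davinci_manager --device=/dev/hisi_hdc --device=/dev/devmm_svm"
  local id
  for id in "$@"; do
    case "$id" in
      ''|*[!0-9]*) echo "invalid NPU id: $id" >&2; return 1 ;;
    esac
    flags="$flags --device=/dev/davinci$id"
  done
  printf '%s\n' "$flags"
}
```

For example, `docker run -it $(npu_device_flags 6 7) ...` would expand to the same five --device flags as above.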

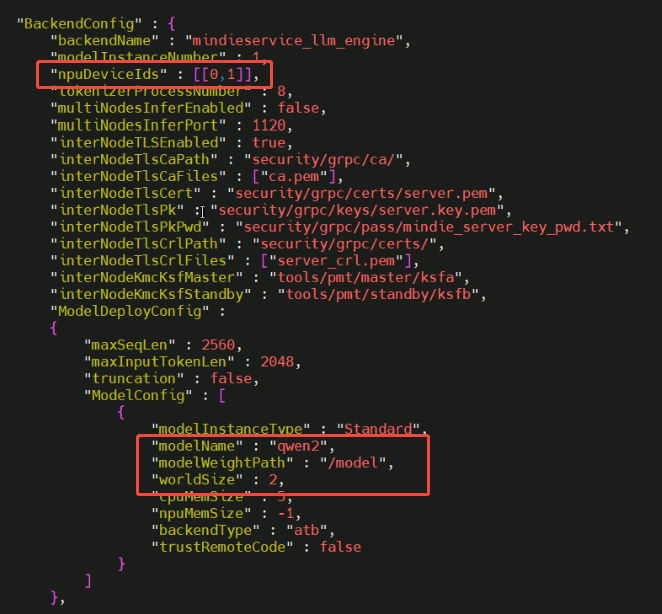

2) Edit the configuration

- In the model weights' config.json, change the torch_dtype field to float16

- chmod 750 /model/config.json

- Edit the MindIE configuration file: vim /usr/local/Ascend/mindie/latest/mindie-service/conf/config.json
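
The torch_dtype change can also be scripted. The following is only a sketch: the function name set_torch_dtype_fp16 is hypothetical, and it assumes the field appears once, on a single line, as in a typical Hugging Face style config.json. Because the container mounts the model directory read-only (:ro), it would probably have to be run on the host against /root/hw/Qwen/Qwen2.5-14B-Instruct/config.json rather than against /model/config.json inside the container.

```shell
#!/usr/bin/env bash
# Set torch_dtype to float16 in a model config.json, then apply the same
# permissions as the chmod step in the list above.
set_torch_dtype_fp16() {
  local cfg="$1"
  local tmp="${cfg}.tmp"
  # Replace only the quoted value that follows the "torch_dtype" key.
  sed -E 's/("torch_dtype"[[:space:]]*:[[:space:]]*)"[^"]*"/\1"float16"/' "$cfg" > "$tmp" || return 1
  # Write back into the existing file so its owner is preserved.
  cat "$tmp" > "$cfg" && rm -f "$tmp"
  chmod 750 "$cfg"
}
```

Usage on the host: `set_torch_dtype_fp16 /root/hw/Qwen/Qwen2.5-14B-Instruct/config.json`.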

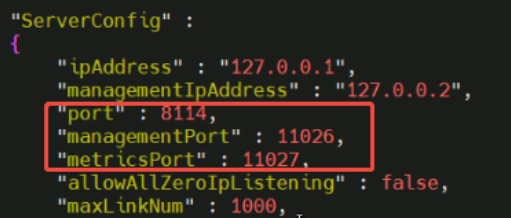

① Change the three listening ports. Otherwise, when several models are deployed on the same Huawei server, the ports will conflict

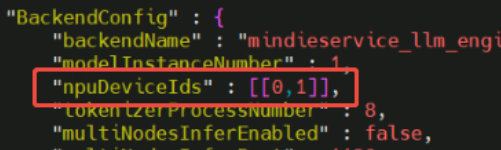

② Set the NPU devices: host devices 6 and 7 are visible inside the container as 0 and 1, so use those IDs and set worldSize to 2

③ Change the model path
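
After editing with vim, it can help to print just the settings touched in ① to ③ and review them in one place. This is a hedged sketch: only worldSize is named in this post, while the other key names (port, managementPort, metricsPort, npuDeviceIds, modelWeightPath) are my recollection of the MindIE Service config and should be checked against the file in your image. The function name show_mindie_settings is hypothetical.

```shell
#!/usr/bin/env bash
# Print, with line numbers, the config lines for the ports, NPU IDs,
# worldSize and model path. Key names other than worldSize are assumptions.
show_mindie_settings() {
  local cfg="${1:-/usr/local/Ascend/mindie/latest/mindie-service/conf/config.json}"
  grep -nE '"(port|managementPort|metricsPort|npuDeviceIds|worldSize|modelWeightPath)"[[:space:]]*:' "$cfg"
}
```

Run it inside the container with no arguments, or pass another path to inspect a copy of the file.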

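3) Once the changes are made, commit the image and start the service from it

Use docker commit to save the container as an image, then recreate the container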
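
The commit step is not spelled out in the post, so here is a minimal sketch of it. The container name and the -qwen image tag are taken from the docker run commands in this post; the function name commit_and_remove and the DRY_RUN switch are hypothetical. The old container is removed because the new one reuses the same --name.

```shell
#!/usr/bin/env bash
# Names taken from the docker run commands in this post.
CONTAINER="Qwen2.5-14B-Instruct"
BASE_IMAGE="harbor.huaweisoft.com/huaweisoft/ai/mindie:1.0.0-300I-Duo-py311-openeuler24.03-lts"
COMMITTED_IMAGE="${BASE_IMAGE}-qwen"

# Save the configured container as a new image, then remove the container
# so that its name can be reused. DRY_RUN=1 only prints the commands.
commit_and_remove() {
  local run=""
  if [ "${DRY_RUN:-0}" = "1" ]; then run="echo"; fi
  $run docker commit "$CONTAINER" "$COMMITTED_IMAGE" || return 1
  $run docker rm -f "$CONTAINER"
}
```

Running `DRY_RUN=1 commit_and_remove` first shows the two docker commands without executing them.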

docker run -itd --restart always --net=host --shm-size=1g \
  --name Qwen2.5-14B-Instruct \
  --device=/dev/davinci_manager \
  --device=/dev/hisi_hdc \
  --device=/dev/devmm_svm \
  --device=/dev/davinci6 \
  --device=/dev/davinci7 \
  -v /usr/local/Ascend/driver:/usr/local/Ascend/driver:ro \
  -v /usr/local/sbin:/usr/local/sbin:ro \
  -v /root/hw/Qwen/Qwen2.5-14B-Instruct:/model:ro \
  -v /root/hw:/root/hw \
  harbor.huaweisoft.com/huaweisoft/ai/mindie:1.0.0-300I-Duo-py311-openeuler24.03-lts-qwen bash /root/hw/run_qwen2.sh
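
The contents of /root/hw/run_qwen2.sh are not shown in this post. The following is a hypothetical sketch of what such a script might contain, not the author's actual file. The mindie-service directory and the mindieservice_daemon binary name appear elsewhere in this post; the two set_env.sh paths are assumptions about a typical Ascend installation and are sourced only if they exist.

```shell
#!/usr/bin/env bash
# Hypothetical start script; adjust paths to match your image.
start_mindie() {
  local home="${MINDIE_SERVICE_HOME:-/usr/local/Ascend/mindie/latest/mindie-service}"
  # Assumed environment scripts; skipped silently when absent.
  local env_scripts="${ENV_SCRIPTS-/usr/local/Ascend/ascend-toolkit/set_env.sh /usr/local/Ascend/mindie/set_env.sh}"
  local f
  for f in $env_scripts; do
    if [ -f "$f" ]; then . "$f"; fi
  done
  if [ ! -x "$home/bin/mindieservice_daemon" ]; then
    echo "daemon not found: $home/bin/mindieservice_daemon" >&2
    return 1
  fi
  cd "$home/bin" || return 1
  # Run in the foreground so that the container keeps running.
  ./mindieservice_daemon
}
```

The script would end with a plain `start_mindie` call. Keeping the daemon in the foreground matters here because the container exits as soon as the command given to docker run returns.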

Common errors

1) Error while loading conda entry point: conda-anaconda-tos (No module named 'pydantic_core._pydantic_core')

[Cause] The -v /root:/root mount overwrote /root inside the container

[Fix] Remove that mount

2)

[root@localhost bin]# ./mindieservice_daemon

LogConfig: [json.exception.out_of_range.403] key 'LogConfig' not found

ERR: Failed to init endpoint! Please check the service log or console output.

Killed

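[Cause] The container's config.json was overwritten with cp /root/hw/config.json /usr/local/Ascend/mindie/latest/mindie-service/conf/config.json. Judging from the error message, the copied file lacked the LogConfig key that this MindIE version expects.

[Fix] Edit the file in place with vim instead of replacing it with cp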
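
A small guard can catch this before the daemon is started. The sketch below only looks for the LogConfig key named in the error message above; the function names has_log_config and backup_config are hypothetical, and passing the check does not guarantee that the rest of the file is valid.

```shell
#!/usr/bin/env bash
# Succeeds if the config file contains the LogConfig key that the daemon
# complained about in the error above.
has_log_config() {
  grep -q '"LogConfig"' "$1"
}

# Keep a timestamped copy next to the file before editing it with vim.
backup_config() {
  cp -p "$1" "$1.bak.$(date +%Y%m%d%H%M%S)"
}
```

For example, `has_log_config /root/hw/config.json || echo "do not copy this file over the container's config"`.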

3)

EE1001: [PID: 202] 2025-08-19-10:43:30.610.367 The argument is invalid.Reason: Set device failed, invalid device, set device=3, valid device range is [0, 2)

Solution: 1.Check the input parameter range of the function. 2.Check the function invocation relationship.

TraceBack (most recent call last):

rtSetDevice execute failed, reason=[device id error][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:53]

open device 3 failed, runtime result = 107001.[FUNC:ReportCallError][FILE:log_inner.cpp][LINE:161]

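[Cause] The NPUs visible inside the container are numbered 0 and 1, but the configuration referenced other IDs (device 3 in the log above)

[Fix] Correct the NPU device IDs in the configuration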
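
The valid range reported in the log, [0, 2), matches the number of davinciN device nodes mapped into the container. As a rough sketch (check_npu_ids is a hypothetical helper, and counting device nodes is an assumption about how the range is derived), the configured IDs can be checked against that count before starting the service:

```shell
#!/usr/bin/env bash
# usage: check_npu_ids DEV_DIR ID...
# Counts davinciN nodes in DEV_DIR (normally /dev) and verifies that every
# given ID lies in [0, count). davinci_manager is not matched by the pattern.
check_npu_ids() {
  local dev_dir="$1"; shift
  local count=0 dev id
  for dev in "$dev_dir"/davinci[0-9]*; do
    if [ -e "$dev" ]; then count=$((count + 1)); fi
  done
  for id in "$@"; do
    if [ "$id" -lt 0 ] || [ "$id" -ge "$count" ]; then
      echo "device id $id is outside [0, $count)" >&2
      return 1
    fi
  done
  echo "ok: ids $* are within [0, $count)"
}
```

Inside the container from this post, `check_npu_ids /dev 0 1` should pass, while `check_npu_ids /dev 3` should be rejected in the same way as in the log.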

4) The model loads successfully, but the MindIE service fails at the "start HTTP listening port" stage.

[2025-08-19 10:44:57,046] [802] [281456198218080] [llm] [INFO] [logging.py-331] : >>>>>>id of kcache is 281462178701744 id of vcache is 281462178700016

2025-08-19 10:45:10,179 [INFO] standard_model.py:155 - >>>rank:0 done ibis manager to device

2025-08-19 10:45:10,179 [INFO] npu_compile.py:20 - 310P,some op does not support

2025-08-19 10:45:10,179 [INFO] standard_model.py:172 - >>>rank:0: return initialize success result: {'status': 'ok', 'npuBlockNum': '1695', 'cpuBlockNum': '426', 'maxPositionEmbeddings': '32768'}

2025-08-19 10:45:10,191 [INFO] standard_model.py:155 - >>>rank:1 done ibis manager to device

2025-08-19 10:45:10,192 [INFO] npu_compile.py:20 - 310P,some op does not support

2025-08-19 10:45:10,192 [INFO] standard_model.py:172 - >>>rank:1: return initialize success result: {'status': 'ok', 'npuBlockNum': '1656', 'cpuBlockNum': '426', 'maxPositionEmbeddings': '32768'}

ERR: Failed to init endpoint! Please check the service log or console output.

Killed

[Cause] The listening ports conflict with another service. [Fix] Change the listening ports
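
Because the container runs with --net=host, the ports can be checked on the host before choosing new values. The sketch below parses the output of `ss -ltn`; the function name port_is_listening is hypothetical, and the port number in the usage note is only an example value.

```shell
#!/usr/bin/env bash
# Reads `ss -ltn` output on stdin and succeeds if some socket is listening
# on the given port. Column 4 is the local address, e.g. 0.0.0.0:1025.
port_is_listening() {
  local port="$1"
  awk -v p=":$port" '
    NR > 1 {
      n = length($4) - length(p) + 1
      if (n > 0 && substr($4, n) == p) found = 1
    }
    END { exit found ? 0 : 1 }
  '
}
```

Usage: `ss -ltn | port_is_listening 1025 && echo "port 1025 is already in use"`.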
