OpenStack Victoria Cluster Deployment - Prometheus Monitoring Integration - Ubuntu 20.04


This article is only a reference for monitoring with Prometheus, not a complete monitoring setup. Adjust it to your own environment.

1 Environment preparation

#System environment
root@prometheus113:~# lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description: Ubuntu 20.04.2 LTS
Release: 20.04
Codename: focal

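#Install base software & synchronize time with NTP
#Switch the APT sources to the USTC mirror
sed -i 's/security.ubuntu.com/mirrors.ustc.edu.cn/g' /etc/apt/sources.list
sed -i 's/archive.ubuntu.com/mirrors.ustc.edu.cn/g' /etc/apt/sources.list
#Refresh the package index
apt-get update
#Install base packages
apt install net-tools wget vim bash-completion lrzsz unzip zip ntpdate -y
#NTP time synchronization
#Set the time zone
sudo cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
ntpdate ntp3.aliyun.com
#Re-sync every 3 minutes with cron. Cron runs jobs with /bin/sh and a minimal PATH, so use the full path to ntpdate and POSIX redirection (&> only works in bash)
#Note: crontab /tmp/crontab replaces root's entire crontab
echo "*/3 * * * * /usr/sbin/ntpdate ntp3.aliyun.com > /dev/null 2>&1" > /tmp/crontab
crontab /tmp/crontab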
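The `crontab /tmp/crontab` command above replaces root's whole crontab. If the host already has other cron jobs, the following minimal sketch merges the NTP entry in instead. The helper `merge_cron_line` is hypothetical. It reads the current crontab on stdin, drops any older line that contains the match text, and appends the new entry. The `/usr/sbin/ntpdate` path is where Ubuntu's `ntpdate` package normally installs the binary; confirm it with `command -v ntpdate`.

```shell
# Hypothetical helper: merge one cron entry into an existing crontab.
# stdin : current crontab text (may be empty)
# $1    : the full cron line to install
# $2    : fixed string identifying older versions of the same job
merge_cron_line() {
  # Keep every line that does not mention the job; grep exits 1 when
  # nothing is left, which is fine here, so mask that status.
  grep -vF -- "$2" || true
  printf '%s\n' "$1"
}

NTP_MATCH='ntpdate ntp3.aliyun.com'
NTP_LINE='*/3 * * * * /usr/sbin/ntpdate ntp3.aliyun.com > /dev/null 2>&1'

# Usage on the host (as root):
#   crontab -l 2>/dev/null | merge_cron_line "$NTP_LINE" "$NTP_MATCH" | crontab -
```

The match text is a substring of the new line. Running the command again therefore replaces the entry instead of duplicating it.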

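#Install Docker
#Option 1: Docker's get.docker.com install script, downloading from the Aliyun mirror
curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun
#Option 2: Ubuntu's own package. Install docker.io; the Ubuntu package named "docker" is an unrelated system-tray tool
apt install docker.io -y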

2 Configure and install Prometheus (local monitoring)

#Create prometheus.yml in /data/prometheus. This configuration only has Prometheus scrape its own metrics (localhost:9090)
mkdir -p /data/prometheus
#vim /data/prometheus/prometheus.yml
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']
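Typing YAML by hand in vim is where indentation most often goes wrong, and Prometheus will not start with an invalid config file. The following minimal sketch assumes the same `/data/prometheus` directory. The hypothetical function `write_prometheus_yml` writes a trimmed version of the config above through a quoted heredoc, so the indentation comes out exactly as written. The comments and the empty alerting and rule sections are left out on purpose.

```shell
# Hypothetical helper: write a minimal prometheus.yml into directory $1
# (default /data/prometheus). The quoted 'EOF' stops the shell from
# expanding anything inside the YAML.
write_prometheus_yml() {
  local dir=${1:-/data/prometheus}
  mkdir -p "$dir" || return 1
  cat > "$dir/prometheus.yml" <<'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']
EOF
}

# Usage:
#   write_prometheus_yml /data/prometheus
# Optional syntax check with promtool, which ships in the prom/prometheus image:
#   docker run --rm --entrypoint /bin/promtool \
#     -v /data/prometheus:/config:ro prom/prometheus \
#     check config /config/prometheus.yml
```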

#Start the Prometheus container and mount the file created above into it
docker run -d --name=prometheus113 --restart=always \
  -p 9090:9090 \
  -v /data/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml \
  prom/prometheus
#Check the container status
root@prometheus113:/data/prometheus# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
969f0d86a540 prom/prometheus "/bin/prometheus --c…" About a minute ago Up About a minute 0.0.0.0:9090->9090/tcp prometheus113

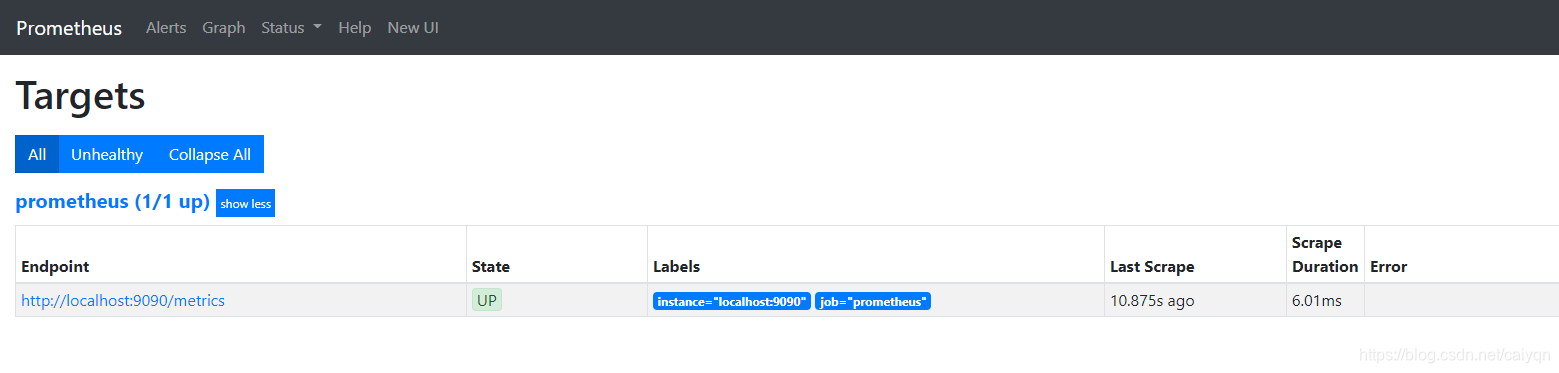

#Open the web UI to confirm that Prometheus is up
#http://192.168.3.113:9090
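To check from a script instead of the browser, here is a minimal sketch. It assumes `curl` is installed (it is pulled in by Docker option 1 in section 1). The hypothetical helper `wait_for_http` polls a URL until it answers successfully or the retry budget runs out. The endpoints in the usage lines are Prometheus's `/-/ready` and Grafana's `/api/health`.

```shell
# Hypothetical helper: poll a URL until curl gets a non-error HTTP answer,
# or give up after $2 attempts (default 30, one second apart).
wait_for_http() {
  local url=$1 tries=${2:-30} i=1
  while [ "$i" -le "$tries" ]; do
    # -f: treat HTTP >= 400 as failure; --max-time: don't hang forever
    if curl -fsS --max-time 2 -o /dev/null "$url"; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  return 1
}

# Usage:
#   wait_for_http http://192.168.3.113:9090/-/ready && echo "Prometheus is ready"
#   wait_for_http http://192.168.3.113:3000/api/health && echo "Grafana is ready"
```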

3 Grafana deployment

#Start Grafana; no tag is given, so the latest image is used
docker run -d --name=grafana113 --restart=always -p 3000:3000 grafana/grafana
#Check that the container started correctly
root@prometheus113:/data/prometheus# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
f870f773bbf8 grafana/grafana "/run.sh" 26 seconds ago Up 25 seconds 0.0.0.0:3000->3000/tcp grafana113
969f0d86a540 prom/prometheus "/bin/prometheus --c…" 13 minutes ago Up 13 minutes 0.0.0.0:9090->9090/tcp prometheus113

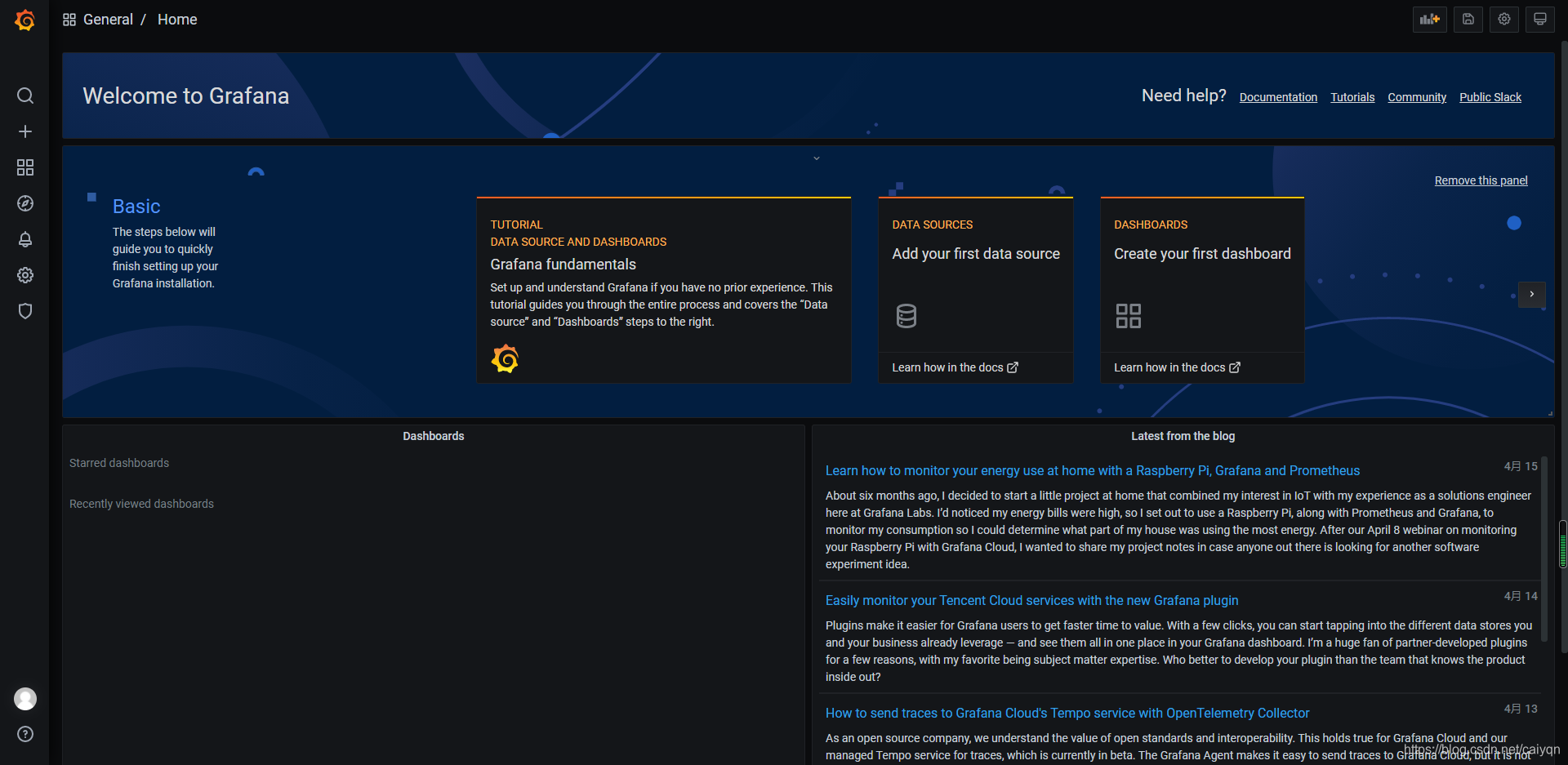

#Log in to the web UI; the default username and password are both admin
#http://192.168.3.113:3000/

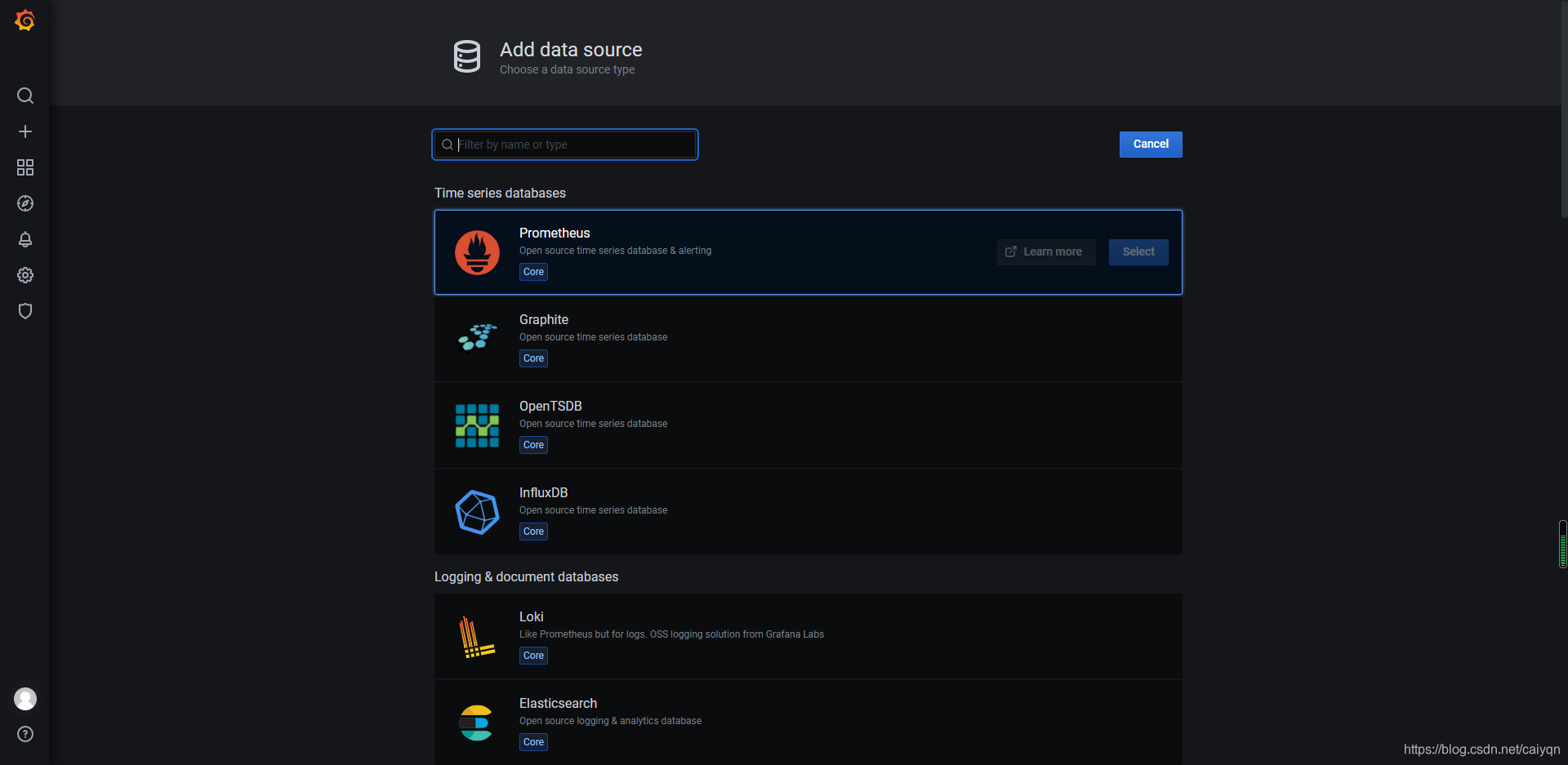

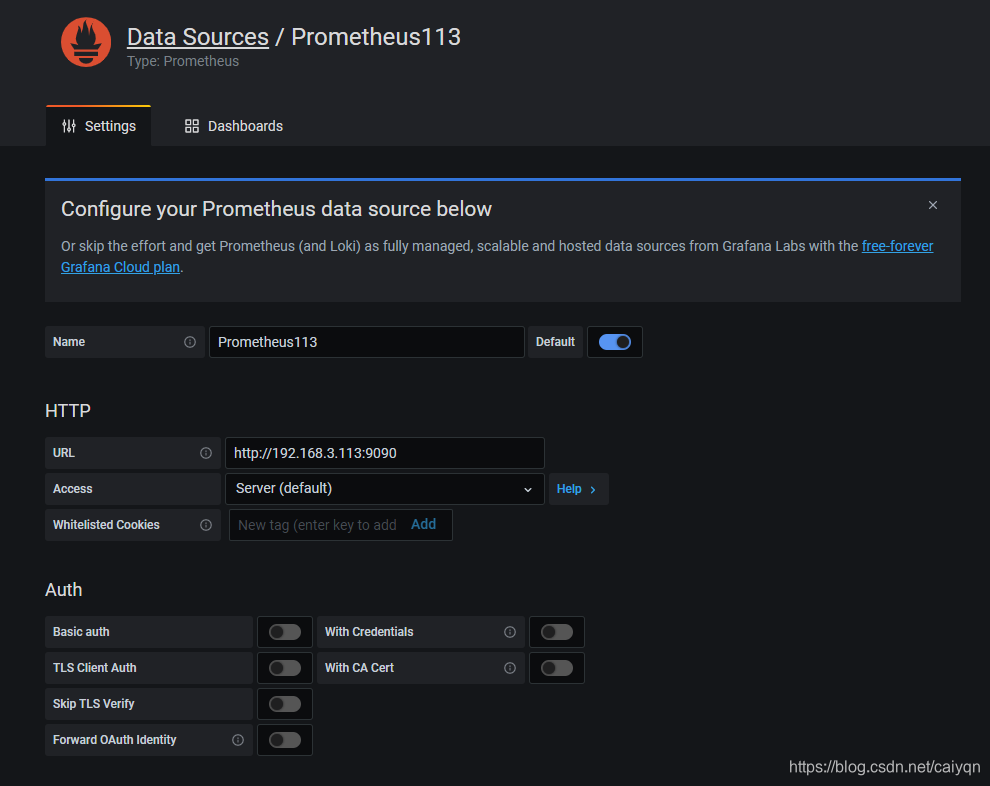

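#Add Prometheus as a data source
#Enter its URL, then click save and test. Use the host IP, not localhost: inside the Grafana container, localhost is the container itself
#http://192.168.3.113:9090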
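The data source can also be created through Grafana's HTTP API (`POST /api/datasources`) instead of the web form. The following minimal sketch builds the JSON body with a hypothetical helper, `datasource_json`. The name "Prometheus" and `isDefault: true` are illustrative choices, not values taken from the screenshots.

```shell
# Hypothetical helper: print the JSON body for Grafana's data source API.
# $1 = data source name, $2 = Prometheus URL. Values are inserted as-is,
# so they must not contain double quotes.
datasource_json() {
  printf '{"name":"%s","type":"prometheus","url":"%s","access":"proxy","isDefault":true}\n' "$1" "$2"
}

# Usage (admin/admin are Grafana's default credentials):
#   curl -fsS -u admin:admin -H 'Content-Type: application/json' \
#     -X POST http://192.168.3.113:3000/api/datasources \
#     -d "$(datasource_json Prometheus http://192.168.3.113:9090)"
```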

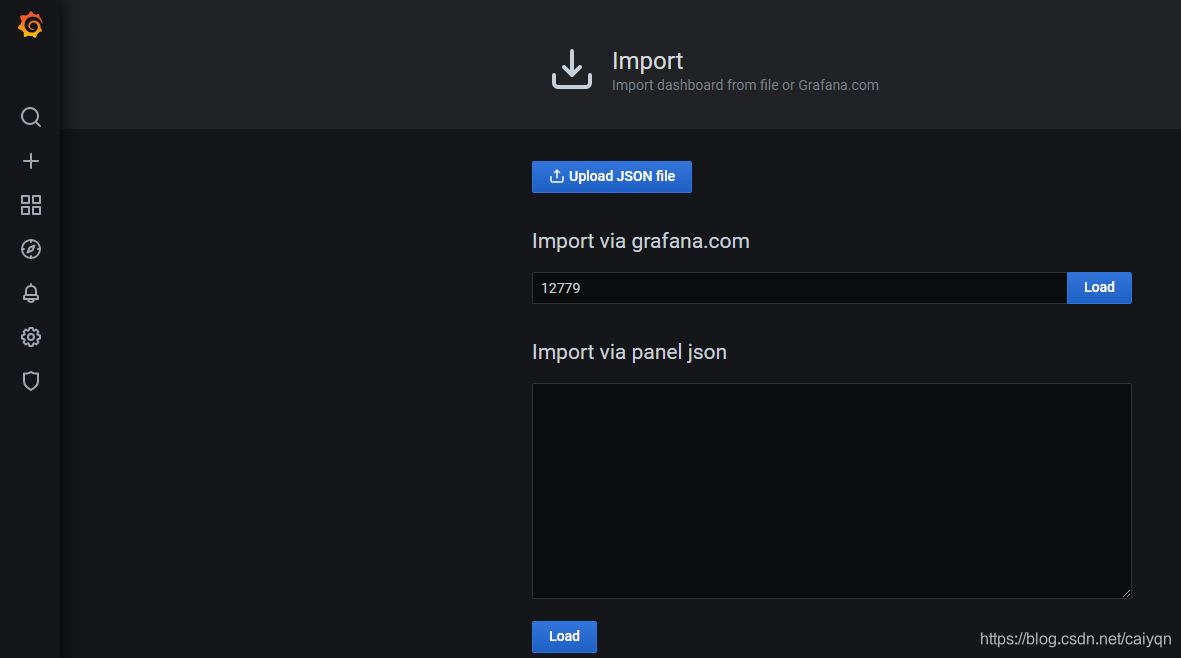

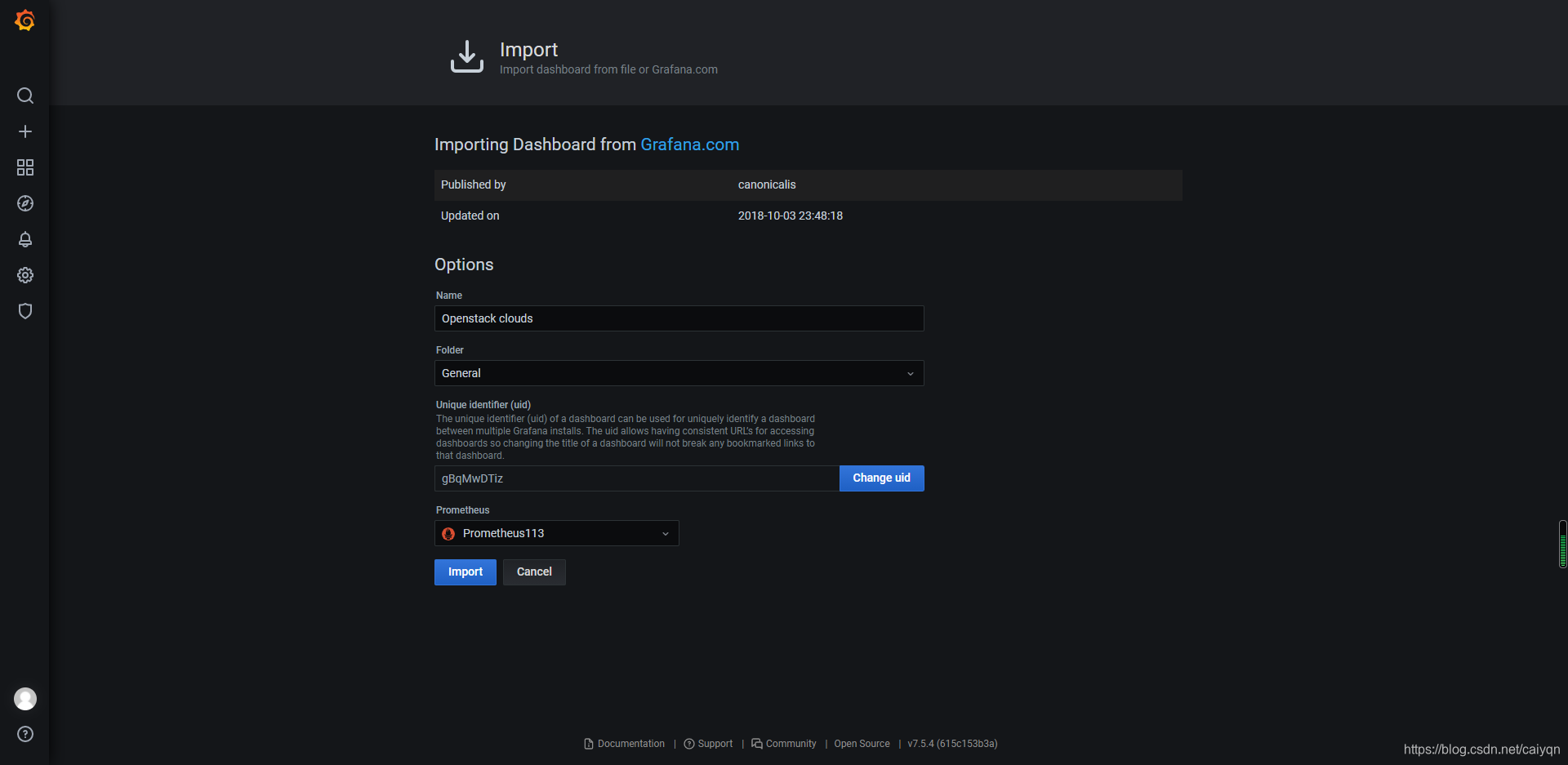

#Import a dashboard

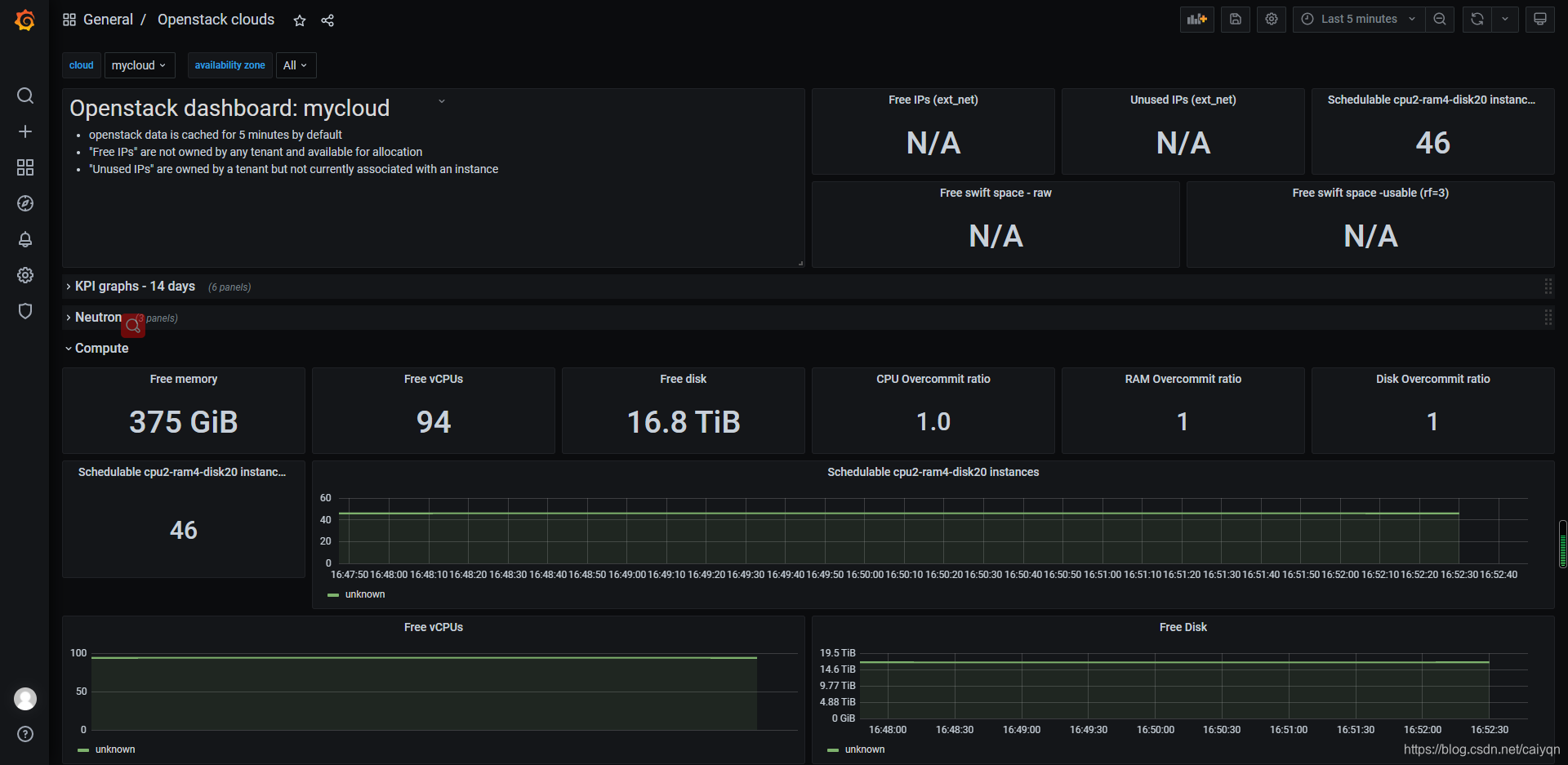

4 OpenStack monitoring integration

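#Create the credentials file for the exporter (Docker --env-file format: plain KEY=value lines, no export, no quotes)
#vim /data/prometheus/admin.prometheus
OS_PROJECT_DOMAIN_NAME=Default
OS_USER_DOMAIN_NAME=Default
OS_PROJECT_NAME=admin
OS_USERNAME=admin
OS_PASSWORD=admin.123
OS_IDENTITY_API_VERSION=3
OS_AUTH_URL=http://192.168.1.100:5000/v3/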
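Docker's `--env-file` reads each line literally. Quotes are kept as part of the value, and shell syntax such as `export` does not work there. A file copied from an OpenStack `openrc` script therefore often needs cleaning. The following minimal sketch checks the file before starting the container. The helper `check_env_file` is hypothetical, and its list of required keys simply mirrors the file above.

```shell
# Hypothetical helper: sanity-check the file passed to docker --env-file.
# Returns non-zero (with messages on stderr) if a key is missing or empty,
# or if any line uses "export" or a quoted value.
check_env_file() {
  local file=$1 key rc=0
  for key in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
             OS_USER_DOMAIN_NAME OS_PROJECT_DOMAIN_NAME OS_IDENTITY_API_VERSION; do
    if ! grep -q "^${key}=." "$file"; then
      echo "missing or empty: $key" >&2
      rc=1
    fi
  done
  # Docker would keep the quotes literally, so flag them here.
  if grep -qE '^export |^[A-Z_]+=["'\'']' "$file"; then
    echo "use plain KEY=value lines (no export, no quotes)" >&2
    rc=1
  fi
  return "$rc"
}

# Usage:
#   check_env_file /data/prometheus/admin.prometheus && echo "env file looks fine"
```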

#Start the OpenStack exporter container
docker run -itd \
  --name=openstack100 \
  --net=host \
  --env-file=/data/prometheus/admin.prometheus \
  --restart=unless-stopped moghaddas/prom-openstack-exporter
#Check that it is running
root@prometheus113:/data/prometheus# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
7ce37f1965e3 moghaddas/prom-openstack-exporter "/bin/sh /wrapper.sh" About a minute ago Up About a minute openstack100
f870f773bbf8 grafana/grafana "/run.sh" About an hour ago Up About an hour 0.0.0.0:3000->3000/tcp grafana113
969f0d86a540 prom/prometheus "/bin/prometheus --c…" 2 hours ago Up 49 minutes 0.0.0.0:9090->9090/tcp prometheus113

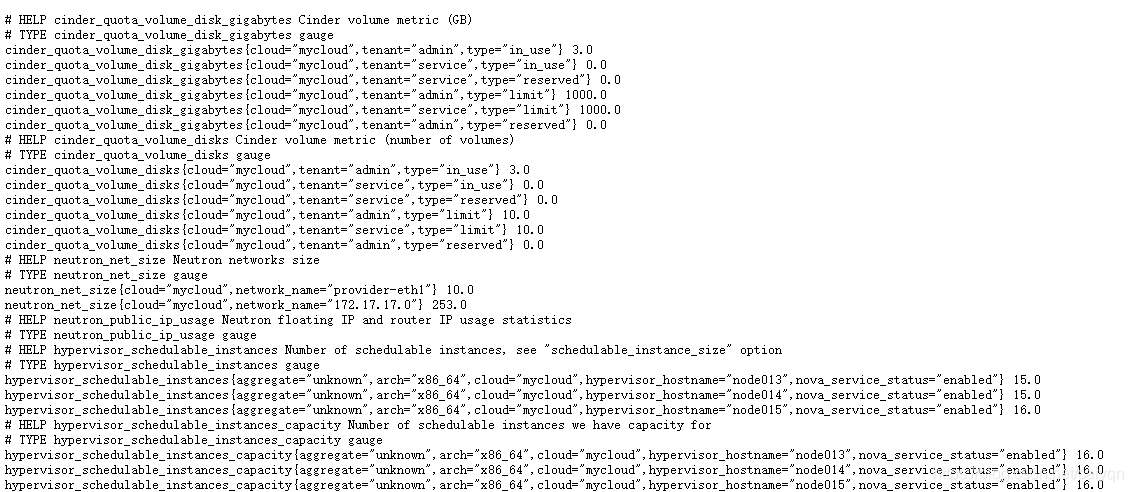

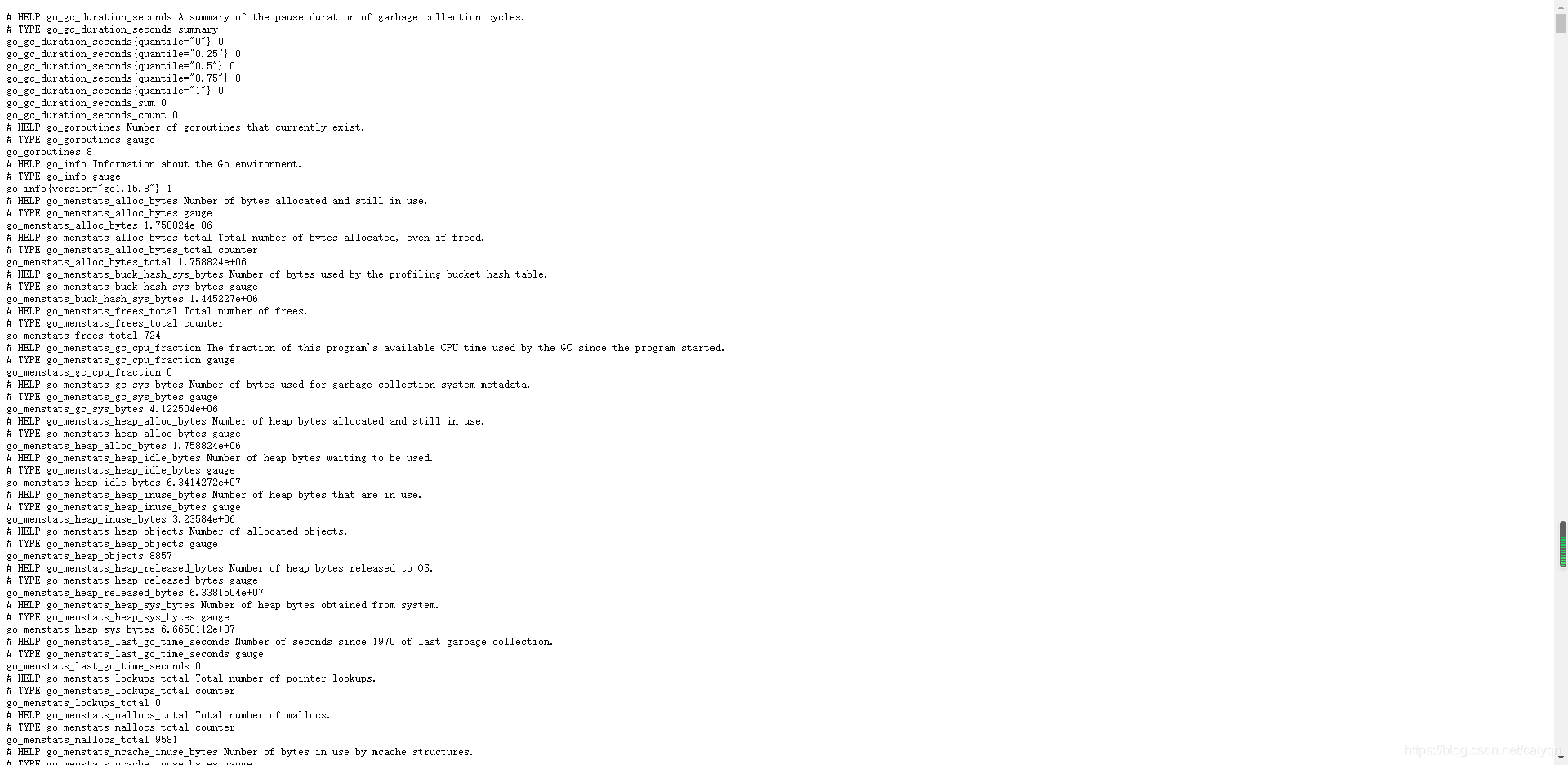

#Check whether metrics are being collected
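To see what the exporter actually exposes without scrolling through the raw page, here is a minimal sketch. The filter `metric_names` is hypothetical. It reads Prometheus text-format output on stdin and prints each metric name once. It assumes the standard layout, where comment lines start with `#` and each sample line begins with the metric name followed by `{` or a space.

```shell
# Hypothetical helper: list the distinct metric names in Prometheus
# text-format output read from stdin.
metric_names() {
  # Skip "# HELP"/"# TYPE" comments and blank lines, cut each sample line
  # at its first '{' or space, then de-duplicate.
  grep -v -e '^#' -e '^[[:space:]]*$' | sed 's/[{ ].*//' | sort -u
}

# Usage (the exporter uses the host network; 9183 is the port scraped below):
#   curl -s http://192.168.1.113:9183/metrics | metric_names | head
```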

#Add a job for the exporter to the Prometheus server configuration
#vim /data/prometheus/prometheus.yml
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'openstack100'
    static_configs:
      - targets: ['192.168.1.113:9183']
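Each new exporter means another job appended to `scrape_configs`. The following minimal sketch assumes `scrape_configs` is the last top-level section of `prometheus.yml` and that its items are indented by two spaces, as in the file above. The helper `append_scrape_job` is hypothetical. It refuses duplicate job names because Prometheus rejects a config that contains them.

```shell
# Hypothetical helper: append a static scrape job to prometheus.yml.
# $1 = config file, $2 = job name, $3 = host:port target.
append_scrape_job() {
  local file=$1 job=$2 target=$3
  # Prometheus will not load a config with two jobs of the same name.
  if grep -qF "job_name: '$job'" "$file"; then
    echo "job '$job' already exists in $file" >&2
    return 1
  fi
  cat >> "$file" <<EOF

  - job_name: '$job'
    static_configs:
      - targets: ['$target']
EOF
}

# Usage, then restart the server so it reloads the file:
#   append_scrape_job /data/prometheus/prometheus.yml openstack100 192.168.1.113:9183
#   docker restart prometheus113
```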

#Restart the Prometheus server so it loads the new configuration
docker restart prometheus113

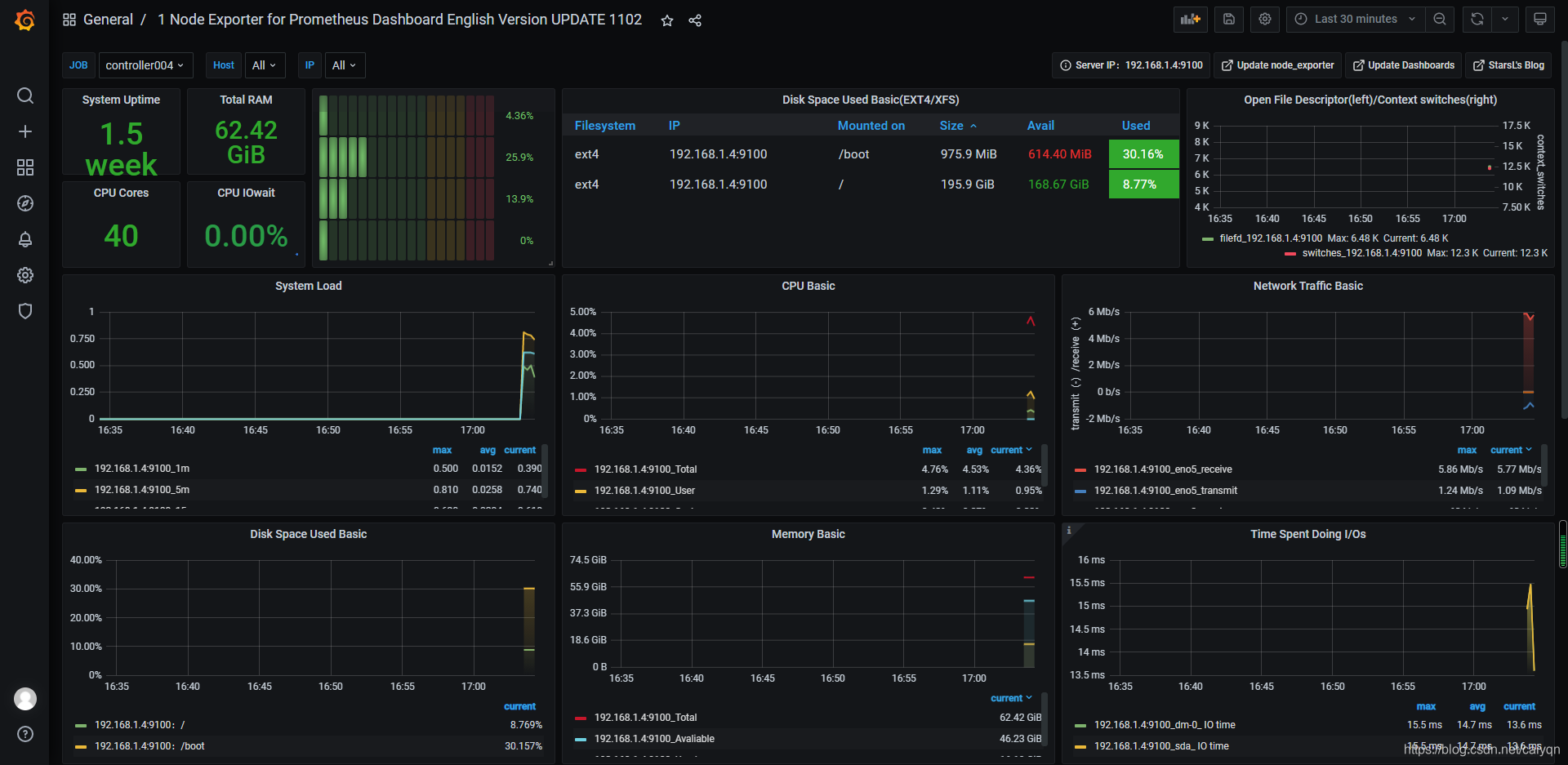

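#Import dashboard 7924 from grafana.com. It is used unmodified here; many of its panels need metrics you have to collect with your own scripts, so adapt it to what you actually use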

5 OpenStack host monitoring

#Install Docker on each host as described in section 1
#Run node-exporter with Docker
docker run -d \
  --net="host" \
  --pid="host" \
  --name controller004 --restart=always \
  -v "/:/host:ro,rslave" \
  quay.io/prometheus/node-exporter \
  --path.rootfs /host
#Check that it is running on the host
root@controller004:~# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
b3214aaf0d96 quay.io/prometheus/node-exporter "/bin/node_exporter …" About a minute ago Up About a minute controller004

#Check that metrics are being collected
#http://192.168.1.4:9100/metrics
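To spot-check a single value from the command line, here is a minimal sketch. The helper `metric_value` is hypothetical. It prints the value of every sample with exactly the given metric name, labelled or not. It assumes the samples carry no trailing timestamp, which is the normal case for node-exporter output. `node_load1` (1-minute load average) is a standard node-exporter metric.

```shell
# Hypothetical helper: print the value of every sample named exactly $1
# from Prometheus text-format output on stdin (assumes no timestamps).
metric_value() {
  awk -v name="$1" '
    /^#/ { next }              # skip HELP/TYPE comments
    NF == 0 { next }           # skip blank lines
    {
      n = $1
      sub(/[{].*/, "", n)      # strip the label set, if any
      if (n == name) print $NF # the value is the last field
    }'
}

# Usage:
#   curl -s http://192.168.1.4:9100/metrics | metric_value node_load1
```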

#Add a job under scrape_configs in the Prometheus server configuration, then restart the container
  - job_name: 'controller004'
    static_configs:
      - targets: ['192.168.1.4:9100']
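A cluster usually has several controller and compute hosts, and one job can list all of them. The following minimal sketch uses a hypothetical helper, `node_targets`, which builds the `targets` list for a given port. Any address other than 192.168.1.4 in the usage line is a made-up example.

```shell
# Hypothetical helper: build a YAML flow list of host:port targets.
# $1 is the port; the remaining arguments are host names or IPs.
node_targets() {
  local port=$1 out='' host
  shift
  for host in "$@"; do
    # Prefix ", " before every entry except the first.
    out="${out}${out:+, }'${host}:${port}'"
  done
  printf '[%s]\n' "$out"
}

# Usage (192.168.1.5 is a hypothetical second host):
#   node_targets 9100 192.168.1.4 192.168.1.5
#   -> ['192.168.1.4:9100', '192.168.1.5:9100']
```

The printed list can be pasted after `- targets:` in a single job, so every host shares one job label.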

#Import dashboard 11207

X. That's all.
