[Learning LangChain4j with Spring Boot]

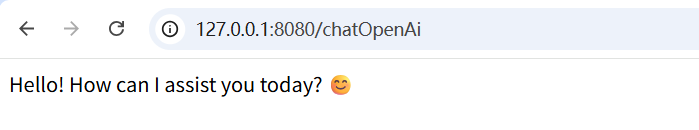

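This article shows how to integrate LangChain4j with Spring Boot. It covers: 1) version requirements (JDK 17 and Spring Boot 3.x); 2) adding the core dependencies (LangChain4j, the OpenAI starter, and so on); 3) configuring the LLM parameters (setting the API URL and key in application.properties); 4) building a simple demo (a chat endpoint backed by OpenAiChatModel). It ends by showing a …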


Learning LangChain4j with Spring Boot, Part 01


Preface

These are notes from my own learning process.

I. Integrating with Spring Boot

- Version requirements for integrating LangChain4j

| JDK version | Spring Boot version |
|---|---|
| 17 | 3.x |
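The JDK requirement in the table can be checked at startup. The following is only a minimal sketch using the standard library; the class name `JavaVersionCheck` and the constant `MIN_JAVA_FEATURE` are illustrative and not part of LangChain4j or Spring Boot.

```java
// Minimal sketch: fail fast when the JVM is older than the Java 17 baseline.
// JavaVersionCheck and MIN_JAVA_FEATURE are hypothetical names used for illustration.
class JavaVersionCheck {

    // Minimum Java feature release, taken from the version table.
    static final int MIN_JAVA_FEATURE = 17;

    // Returns true when the given feature release (for example 17 or 21) meets the baseline.
    static boolean isSupported(int featureVersion) {
        return featureVersion >= MIN_JAVA_FEATURE;
    }

    public static void main(String[] args) {
        // Runtime.version().feature() is available since Java 10.
        int running = Runtime.version().feature();
        if (!isSupported(running)) {
            throw new IllegalStateException(
                    "Java " + MIN_JAVA_FEATURE + "+ is required, but this JVM is Java " + running);
        }
        System.out.println("Java " + running + " meets the requirement");
    }
}
```

In practice Maven usually enforces this at build time through the project's Java version setting, so a runtime check like this is optional.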

- Add the dependencies

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>dev.langchain4j</groupId>
                <artifactId>langchain4j-bom</artifactId>
                <version>1.4.0</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-open-ai-spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>

        <!-- for Flux<String> support -->
        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-reactor</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-webflux</artifactId>
        </dependency>

        <!-- observability -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-actuator</artifactId>
        </dependency>
        <dependency>
            <groupId>io.micrometer</groupId>
            <artifactId>micrometer-tracing-bridge-otel</artifactId>
        </dependency>
        <dependency>
            <groupId>io.opentelemetry</groupId>
            <artifactId>opentelemetry-exporter-zipkin</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-aop</artifactId>
        </dependency>
    </dependencies>

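- Configure the LLM (in application.properties)

    langchain4j.open-ai.chat-model.base-url=https://dashscope.aliyuncs.com/compatible-mode/v1
    langchain4j.open-ai.chat-model.api-key=xxxxxx
    langchain4j.open-ai.chat-model.model-name=deepseek-v3.1

PS: For now this points at Bailian (Alibaba Cloud), accessed through its OpenAI-compatible endpoint; change the configuration later as your needs require.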
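To make the three properties less abstract, here is a rough sketch of the kind of HTTP request an OpenAI-compatible chat call turns into: the base URL gets `/chat/completions` appended, the API key travels as a Bearer token, and the model name goes into the JSON body. This is an illustration of the wire format only, not LangChain4j's actual implementation. The class name, the hand-rolled `escape` helper, and the environment variable `DASHSCOPE_API_KEY` are assumptions made for the example.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch of how base-url, api-key and model-name map onto an OpenAI-compatible request.
// All names in this class are hypothetical; this is not what the starter runs internally.
class OpenAiCompatibleRequestSketch {

    // Minimal JSON string escaping (backslash and double quote only); enough for a demo.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Builds the JSON body with a single user message.
    static String jsonBody(String modelName, String userMessage) {
        return "{\"model\":\"" + escape(modelName) + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + escape(userMessage) + "\"}]}";
    }

    // Builds (but does not send) the request. Assumes baseUrl has no trailing slash.
    static HttpRequest build(String baseUrl, String apiKey, String modelName, String userMessage) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/chat/completions"))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody(modelName, userMessage)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // DASHSCOPE_API_KEY is an assumed variable name; use whatever your environment defines.
        String apiKey = System.getenv("DASHSCOPE_API_KEY");
        HttpRequest request = build(
                "https://dashscope.aliyuncs.com/compatible-mode/v1",
                apiKey == null ? "xxxxxx" : apiKey,
                "deepseek-v3.1",
                "Hello");
        System.out.println(request.method() + " " + request.uri());

        // Only go over the network when a real key is available.
        if (apiKey != null) {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode());
            System.out.println(response.body());
        }
    }
}
```

Reading the key from the environment, as `main` does here, also avoids committing a real key to version control.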

- Write a simple demo to try it out

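    import dev.langchain4j.model.openai.OpenAiChatModel;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ChatAgentController {

        // Auto-configured by the OpenAI starter from the langchain4j.open-ai.chat-model.* properties
        @Autowired
        private OpenAiChatModel openAiChatModel;

        // GET /chatOpenAi?message=... sends the message to the model and returns its reply as plain text
        @GetMapping("/chatOpenAi")
        public String openAi(@RequestParam(value = "message", defaultValue = "Hello") String message) {
            return openAiChatModel.chat(message);
        }
    }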

Run it, and that's it.
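Once the application is running, the `/chatOpenAi` endpoint can be called from a browser or from a small client. The sketch below uses the JDK's built-in `HttpClient`; it assumes the app listens on Spring Boot's default port 8080 on localhost, and the class name `ChatEndpointClient` is made up for this example.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Sketch of a client for the demo endpoint. Host and port are assumptions (Spring Boot defaults).
class ChatEndpointClient {

    // Builds the request URI, URL-encoding the message so spaces and symbols survive the query string.
    static URI chatUri(String host, String message) {
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8);
        return URI.create(host + "/chatOpenAi?message=" + encoded);
    }

    public static void main(String[] args) throws Exception {
        // Falls back to the same default the controller uses when no argument is given.
        String message = args.length > 0 ? args[0] : "Hello";

        HttpRequest request = HttpRequest.newBuilder(chatUri("http://localhost:8080", message))
                .GET()
                .build();

        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());

        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

If the configured API key or model name is wrong, the model call fails inside the controller, so the status code printed here is a quick first thing to check.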
