昇腾AI创新大赛-算子挑战赛(S5赛季)【挑战性能命题】Lcm赛题

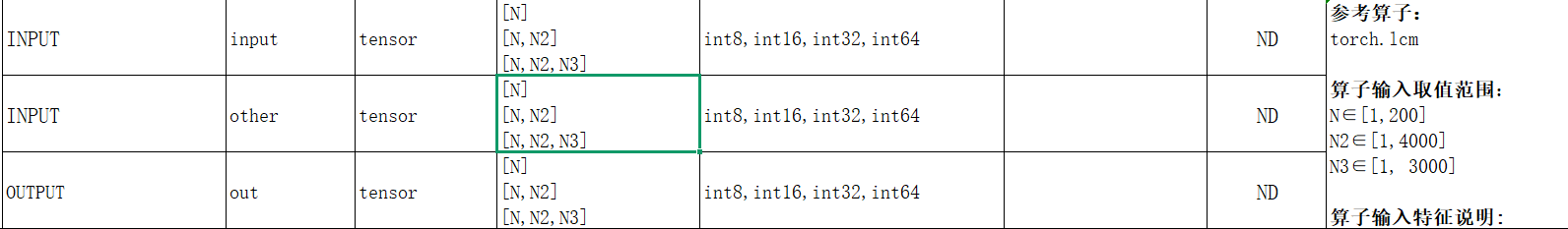

具体描述是:参考算子:torch.lcm算子输入取值范围:N∈[1,200]算子输入特征说明:输入x2应与x1 Shape一致,如果不一致,则对应的维度为1,可以广播成与x1 Shape一致,需要考虑广播实现。N~N3均可能为非32的整倍数,需要考虑非对齐场景。int64类型数据取值会超出int32取值范围,注意不要使用Cast强转int64到int32参考实现是。

题目

具体描述是:

参考算子:

torch.lcm

算子输入取值范围:

N∈[1,200]

N2∈[1,4000]

N3∈[1, 3000]

算子输入特征说明:

输入x2应与x1 Shape一致,如果不一致,则对应的维度为1,可以广播成与x1 Shape一致,需要考虑广播实现。

N~N3均可能为非32的整倍数,需要考虑非对齐场景。

int64类型数据取值会超出int32取值范围,注意不要使用Cast强转int64到int32

参考实现是torch.lcm

分析

运算本身

首先基本计算是 l c m ( x , y ) = x ∗ y / h c d ( x , y ) lcm(x,y)=x*y/hcd(x,y) lcm(x,y)=x∗y/hcd(x,y)

乘法部分我们可以调用向量接口。

关键就是计算 g c d gcd gcd。这里只能用类辗转相除的做法来。这样实现的话,对于每对 x , y x,y x,y,辗转相除的过程,进行的操作都是不同的。很难用向量接口,只能逐个计算。

广播

实际上还有一个隐藏难点,广播。就是可能出现两个张量大小不同的情况,但此时不一定无法计算。如果满足广播的条件,也就是x在一个维度可以是任意值,y要么这个维度大小是1,要么没有这个维度,就可以广播。

广播有两种实现,一种是我们全都交给一个核,然后这个核心获得全部数据,也就是不进行tiling,然后枚举结果张量每个维度的下标,相当于遍历整个结果张量,然后用广播规则去计算这个结果位置,分别是由x,y张量的哪两个位置运算得到的。

这样优点是简洁,缺点是慢,毕竟没有利用多核。

另一种实现是,对结果张量tiling,每个核心分配到结果张量的一段区间,然后我们枚举这个区间的下标,仍然去计算每个位置,是由xy的哪两个位置计算出来的。

(理论上还有一种,是对于xy进行tiling,也就是计算出每个核心,需要的x,y的区间,然后分配给核心,但这太难算)

溢出

x ∗ y x*y x∗y这里可能会溢出,然后这个处理器的溢出规则,和c++,torch的规则不太一样,需要手动去尝试,找到规则,然后特判。具体来说,int32和in64都需要先让他们自然溢出,然后取绝对值。

优化

求gcd,辗转相除里的取模运算太多了,取模实际上是一个很慢的东西,需要的时钟周期比乘法加法多一个数量级。考虑减少取模运算,那么有没有不用取模的求gcd算法呢,还真有。

就是二进制gcd/stein算法,可以全程只用if+位运算。具体可以看

这篇博客

大概实现就是这样

inline int gcd(int a, int b)

{

register int az=__builtin_ctz(a),bz=__builtin_ctz(b),z=az>bz?bz:az,diff;

b>>=bz;

while(a)

{

a>>=az;

diff=b-a;

az=__builtin_ctz(diff);

if(a<b)b=a;

a=diff<0?-diff:diff;

}

return b<<z;

}

这里的__builtin都是优化过的库函数,不是 O ( l o g ) O(log) O(log)而是 O ( 1 ) O(1) O(1)的

然后由于ascendC是继承了很多C标准库,所以这个也能用。

另外,对于多次查询,求gcd这种问题,一个经典的优化是,打表。可以把一些比较小的 ( x , y ) (x,y) (x,y)的结果预处理出来。

网上找的开源实现更进一步,他没有用 g c d [ x ] [ y ] gcd[x][y] gcd[x][y]保存预处理的 g c d ( x , y ) gcd(x,y) gcd(x,y),因为这样的话数据量还是有点大了,他是用的位运算,表的数据量只有 64 个 u i n t 64 64个uint64 64个uint64,实际上是把小于 64 64 64的每个数字用一个二进制位来表示,这样仍然存了 64 ∗ 64 64*64 64∗64的信息,查表时用位运算即可

有tiling的实现

op_kernel/lcm.cpp

#include "kernel_operator.h"

using namespace AscendC;

constexpr uint64_t pre[65] = {0, 1ull,3ull,5ull,11ull,17ull,39ull,65ull,139ull,261ull,531ull,1025ull,2095ull,4097ull,8259ull,16405ull,32907ull,65537ull,131367ull,262145ull,524827ull,1048645ull,2098179ull,4194305ull,8390831ull,16777233ull,33558531ull,67109125ull,134225995ull,268435457ull,536887863ull,1073741825ull,2147516555ull,4294968325ull,8590000131ull,17179869265ull,34359871791ull,68719476737ull,137439215619ull,274877911045ull,549756338843ull,1099511627777ull,2199024312423ull,4398046511105ull,8796095120395ull,17592186061077ull,35184376283139ull,70368744177665ull,140737496778927ull,281474976710721ull,562949970199059ull,1125899906908165ull,2251799847243787ull,4503599627370497ull,9007199321981223ull,18014398509483025ull,36028797153190091ull,72057594038190085ull,144115188344291331ull,288230376151711745ull,576460752840837695ull,1152921504606846977ull,2305843010287435779ull,4611686018428436805ull,9223372039002292363ull};

template<typename T> class BruteForce {

public:

__aicore__ inline BruteForce() {}

__aicore__ inline void Init(GM_ADDR x1, GM_ADDR x2, GM_ADDR y, uint32_t n1[3], uint32_t n2[3], uint32_t ny[3], uint32_t size, uint32_t length,uint32_t abs_flag,uint32_t dim_num) {

//ASSERT(GetBlockNum() != 0 && "block dim can not be zero!");

uint32_t total1 = 1, total2 = 1, totaly = 1;

for (int i = 0; i < dim_num; ++i) {

this->n1[i] = n1[i];

this->n2[i] = n2[i];

this->ny[i] = ny[i];

total1 *= n1[i];

total2 *= n2[i];

totaly *= ny[i];

}

x1Gm.SetGlobalBuffer((__gm__ T*)x1, total1);

x2Gm.SetGlobalBuffer((__gm__ T*)x2, total2);

const unsigned num_cores = GetBlockNum();

unsigned L = GetBlockIdx() * length;

unsigned R = (GetBlockIdx() + 1) * length;

if (R > size) {

R = size;

}

this->L = L;

this->R = R ;

yGm.SetGlobalBuffer((__gm__ T*)y, totaly);

this->abs_flag=abs_flag;

this->dim_num=dim_num;

}

__aicore__ inline void Process() {

if (L >= R) return; // 空区间直接返回

// --------------------------

// 1. 初始化:仅计算一次初始索引(含除法/取模,仅执行1次)

// --------------------------

uint32_t indices[3]; // 5维索引

uint32_t temp = L;

// 初始计算L对应的5维索引(仅此处用除法/取模)

for (int j = dim_num-1; j >= 0; --j) {

indices[j] = temp % ny[j];

temp = temp / ny[j];

}

// 初始计算idx1和idx2(x1和x2的索引)

uint32_t idx1 = 0, idx2 = 0;

for (int j = 0; j < dim_num; ++j) {

idx1 = idx1 * n1[j] + (indices[j] % n1[j]);

idx2 = idx2 * n2[j] + (indices[j] % n2[j]);

}

// --------------------------

// 2. 处理第一个元素(i=L)

// --------------------------

int64_t a = x1Gm.GetValue(idx1);

int64_t b = x2Gm.GetValue(idx2);

if (a == 0 || b == 0) {

yGm.SetValue(L, 0);

} else {

a = (a > 0 ? a : -a);

b = (b > 0 ? b : -b);

int64_t aa = a, bb = b;

int64_t shift = ScalarGetSFFValue<1>(a | b);

a >>= ScalarGetSFFValue<1>(a);

do {

b >>= ScalarGetSFFValue<1>(b);

if (a <= 64 && b <= 64) {

a = 64 - ScalarCountLeadingZero(pre[a] & pre[b]);

break;

}

if (a > b) {

a ^= b ^= a ^= b;

}

b -= a;

} while (b);

a = (a << shift);

T ans = aa / a * bb;

if (ans < 0 && abs_flag) {

ans = -ans;

}

yGm.SetValue(L, ans);

}

// --------------------------

// 3. 处理剩余元素(i从L+1到R-1):纯加法/乘法增量更新

// --------------------------

for (int i = L + 1; i < R; ++i) {

// --------------------------

// 3.1 增量更新indices(用加法+进位,无除法/取模)

// --------------------------

int carry = 1; // 从最后一维开始+1(模拟i递增1)

for (int j = dim_num-1; j >= 0 && carry; --j) {

indices[j] += carry; // 当前维度+1

if (indices[j] >= ny[j]) {

// 溢出:归零并向前进位

indices[j] = 0;

carry = 1;

} else {

// 未溢出:停止进位

carry = 0;

}

}

uint32_t idx1 = 0, idx2 = 0;

for (int j = 0; j < dim_num; ++j) {

int ind=indices[j];

idx1 = idx1 * n1[j] + (ind>=n1[j]?ind%n1[j]:ind); // 广播映射x1

idx2 = idx2 * n2[j] + (ind>=n2[j]?ind%n2[j]:ind); // 广播映射x2

}

// --------------------------

// 3.3 计算并写入当前元素(逻辑同前)

// --------------------------

a = x1Gm.GetValue(idx1);

b = x2Gm.GetValue(idx2);

if (a == 0 || b == 0) {

yGm.SetValue(i, 0);

} else {

a = (a > 0 ? a : -a);

b = (b > 0 ? b : -b);

int64_t aa = a, bb = b;

int64_t shift = ScalarGetSFFValue<1>(a | b);

a >>= ScalarGetSFFValue<1>(a);

do {

b >>= ScalarGetSFFValue<1>(b);

if (a <= 64 && b <= 64) {

a = 64 - ScalarCountLeadingZero(pre[a] & pre[b]);

break;

}

if (a > b) {

a ^= b ^= a ^= b;

}

b -= a;

} while (b);

a = (a << shift);

T ans = aa / a * bb;

if (ans < 0 && abs_flag) {

ans = -ans;

}

yGm.SetValue(i, ans);

}

}

}

private:

GlobalTensor<T> x1Gm, x2Gm, yGm;

uint32_t n1[3], n2[3], ny[3];

uint32_t L, R;

uint32_t abs_flag;

uint32_t dim_num;

};

template<typename T> class LCMKernalFast {

public:

__aicore__ inline LCMKernalFast() {}

__aicore__ inline void Init(GM_ADDR x1, GM_ADDR x2, GM_ADDR y, uint32_t size, uint32_t length,uint32_t abs_flag) {

//ASSERT(GetBlockNum() != 0 && "block dim can not be zero!");

const unsigned num_cores = GetBlockNum();

unsigned L = GetBlockIdx() * length;

unsigned R = (GetBlockIdx() + 1) * length;

if (R > size) {

R = size;

}

this->L = 0;

this->R = R - L;

x1Gm.SetGlobalBuffer((__gm__ T*)x1 + L, length);

x2Gm.SetGlobalBuffer((__gm__ T*)x2 + L, length);

yGm.SetGlobalBuffer((__gm__ T*)y + L, length);

this->abs_flag=abs_flag;

}

__aicore__ inline void Process() {

for (int i = L; i < R; ++i) {

int64_t a = x1Gm.GetValue(i);

int64_t b = x2Gm.GetValue(i);

if (a == 0||b==0){

yGm.SetValue(i, 0);

}

else {

a = (a > 0 ? a : -a);

b = (b > 0 ? b : -b);

int64_t aa=a,bb=b;

int64_t shift = ScalarGetSFFValue<1>(a | b);

a >>= ScalarGetSFFValue<1>(a);

do {

b >>= ScalarGetSFFValue<1>(b);

if(a <= 64 && b <= 64){

a = 64 - ScalarCountLeadingZero(pre[a] & pre[b]);

break;

}

if (a > b) {

a ^= b ^= a ^= b;

}

b -= a;

} while (b);

a =(a<<shift);

T ans=aa/a*bb;

if (ans<0 && abs_flag) {

ans=-ans;

}

yGm.SetValue(i, ans);

}

}

}

private:

GlobalTensor<T> x1Gm, x2Gm, yGm;

uint32_t L, R;

uint32_t abs_flag;

};

extern "C" __global__ __aicore__ void lcm(GM_ADDR input, GM_ADDR other, GM_ADDR out, GM_ADDR workspace, GM_ADDR tiling) {

GET_TILING_DATA(tiling_data, tiling);

if (tiling_data.status == 0) {

BruteForce<DTYPE_INPUT> op;

op.Init(input, other, out, tiling_data.n1, tiling_data.n2, tiling_data.ny,tiling_data.size,tiling_data.length,tiling_data.abs_flag,tiling_data.dim_num);

op.Process();

}

else {

LCMKernalFast<DTYPE_INPUT> op;

op.Init(input, other, out, tiling_data.size, tiling_data.length,tiling_data.abs_flag);

op.Process();

}

}

op_host/lcm.cpp

#include "lcm_tiling.h"

#include "register/op_def_registry.h"

#include "tiling/platform/platform_ascendc.h"

#include <iostream>

#include <algorithm>

namespace optiling {

static ge::graphStatus TilingFunc(gert::TilingContext* context)

{

LcmTilingData tiling;

auto ascendcPlatform = platform_ascendc::PlatformAscendC(context->GetPlatformInfo());

auto num_cores = ascendcPlatform.GetCoreNum();

uint32_t sizeofdatatype,tot=16;

auto dt = context->GetInputTensor(0)->GetDataType();

if (dt == ge::DT_INT16) {

sizeofdatatype = 2;

}

else if (dt == ge::DT_INT8) {

sizeofdatatype = 1;

tot=64;

}

else if (dt == ge::DT_INT32) {

sizeofdatatype = 4;

tiling.set_abs_flag(1);

}

else {

sizeofdatatype = 8;

tiling.set_abs_flag(1);

}

const uint32_t alignment = tot / sizeofdatatype;

const gert::StorageShape* x1_shape = context->GetInputShape(0);

auto dim1 = x1_shape->GetStorageShape().GetDimNum();

uint32_t n1[3] = {1, 1, 1};

for (int i = 0; i < dim1; ++i) {

n1[i] = x1_shape->GetStorageShape().GetDim(i);

}

const gert::StorageShape* x2_shape = context->GetInputShape(1);

auto dim2 = x2_shape->GetStorageShape().GetDimNum();

uint32_t n2[3] = {1, 1, 1};

for (int i = 0; i < dim2; ++i) {

n2[i + (dim1 - dim2)] = x2_shape->GetStorageShape().GetDim(i);

}

int dim = std::max(dim1, dim2);

tiling.set_dim_num(dim);

uint32_t ny[3] = {1, 1, 1};

uint32_t size = 1;

for (int i = 0; i < dim; ++i) {

ny[i] = std::max(n1[i], n2[i]);

size *= ny[i];

}

tiling.set_n1(n1);

tiling.set_n2(n2);

tiling.set_ny(ny);

//std::cout << "n1: " << n1[0] << " " << n1[1] << " " << n1[2] << " " << n1[3] << " " << n1[4] << " " << std::endl;

//std::cout << "n2: " << n2[0] << " " << n2[1] << " " << n2[2] << " " << n2[3] << " " << n2[4] << " " << std::endl;

//std::cout << "ny: " << ny[0] << " " << ny[1] << " " << ny[2] << " " << ny[3] << " " << ny[4] << " " << std::endl;

int status = 2;

for (int i = 0; i < 3; ++i) {

if (n1[i] != n2[i]) {

status = 0;

}

}

if(size <= num_cores * alignment) status = 0;

tiling.set_status(status);

tiling.set_size(size);

unsigned length = (size - 1) / num_cores + 1;

while (length % alignment != 0) length += 1;

tiling.set_length(length);

if (status == 0) {

//std::cout<<"Broadcast"<<std::endl;

context->SetBlockDim(num_cores);

}

else {

//std::cout << "Multicore" << std::endl;

context->SetBlockDim(num_cores);

}

tiling.SaveToBuffer(context->GetRawTilingData()->GetData(), context->GetRawTilingData()->GetCapacity());

context->GetRawTilingData()->SetDataSize(tiling.GetDataSize());

return ge::GRAPH_SUCCESS;

}

}

namespace ge {

static ge::graphStatus InferShape(gert::InferShapeContext* context) {

const gert::Shape* x1_shape = context->GetInputShape(0);

const gert::Shape* x2_shape = context->GetInputShape(1);

gert::Shape* y_shape = context->GetOutputShape(0);

// 1. 计算广播后的维度(与TilingFunc中ny的逻辑一致)

int dim1 = x1_shape->GetDimNum();

int dim2 = x2_shape->GetDimNum();

int max_dim = std::max(dim1, dim2);

std::vector<int64_t> broadcast_dims; // 存储广播后的维度

for (int i = 0; i < max_dim; ++i) {

int64_t d1 = (i >= max_dim - dim1) ? x1_shape->GetDim(i - (max_dim - dim1)) : 1;

int64_t d2 = (i >= max_dim - dim2) ? x2_shape->GetDim(i - (max_dim - dim2)) : 1;

if (d1 != d2 && d1 != 1 && d2 != 1) {

std::cerr << "Broadcast failed: incompatible dims " << d1 << " vs " << d2 << std::endl;

return ge::GRAPH_FAILED;

}

broadcast_dims.push_back(std::max(d1, d2));

}

// 2. 关键修改:通过默认构造+AddDim添加维度(避开initializer_list)

gert::Shape new_shape; // 默认构造空Shape

new_shape.SetDimNum(broadcast_dims.size());

for (int i=0;i<broadcast_dims.size();i++) {

new_shape.SetDim(i,broadcast_dims[i]); // 逐个添加维度(假设Shape类有AddDim方法)

}

*y_shape = new_shape; // 赋值给输出形状

return ge::GRAPH_SUCCESS;

}

static ge::graphStatus InferDataType(gert::InferDataTypeContext *context)

{

const auto inputDataType = context->GetInputDataType(0);

context->SetOutputDataType(0, inputDataType);

return ge::GRAPH_SUCCESS;

}

}

namespace ops {

class Lcm : public OpDef {

public:

explicit Lcm(const char* name) : OpDef(name)

{

this->Input("input")

.ParamType(REQUIRED)

.DataType({ge::DT_INT8, ge::DT_INT16, ge::DT_INT32, ge::DT_INT64})

.Format({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND})

.UnknownShapeFormat({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND});

this->Input("other")

.ParamType(REQUIRED)

.DataType({ge::DT_INT8, ge::DT_INT16, ge::DT_INT32, ge::DT_INT64})

.Format({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND})

.UnknownShapeFormat({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND});

this->Output("out")

.ParamType(REQUIRED)

.DataType({ge::DT_INT8, ge::DT_INT16, ge::DT_INT32, ge::DT_INT64})

.Format({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND})

.UnknownShapeFormat({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND});

this->SetInferShape(ge::InferShape).SetInferDataType(ge::InferDataType);

this->AICore()

.SetTiling(optiling::TilingFunc);

this->AICore().AddConfig("ascend910b");

}

};

OP_ADD(Lcm);

}

op_host/lcm_tiling.h

#include "register/tilingdata_base.h"

namespace optiling {

BEGIN_TILING_DATA_DEF(LcmTilingData)

TILING_DATA_FIELD_DEF_ARR(uint32_t, 3, n1);

TILING_DATA_FIELD_DEF_ARR(uint32_t, 3, n2);

TILING_DATA_FIELD_DEF_ARR(uint32_t, 3, ny);

TILING_DATA_FIELD_DEF(uint32_t, status);

TILING_DATA_FIELD_DEF(uint32_t, size);

TILING_DATA_FIELD_DEF(uint32_t, length);

TILING_DATA_FIELD_DEF(uint32_t, abs_flag);

TILING_DATA_FIELD_DEF(uint32_t, dim_num);

END_TILING_DATA_DEF;

REGISTER_TILING_DATA_CLASS(Lcm, LcmTilingData)

}

无tiling的实现

op_kernel/lcm.cpp

#include "kernel_operator.h"

using namespace AscendC;

constexpr uint64_t pre[65] = {0, 1ull,3ull,5ull,11ull,17ull,39ull,65ull,139ull,261ull,531ull,1025ull,2095ull,4097ull,8259ull,16405ull,32907ull,65537ull,131367ull,262145ull,524827ull,1048645ull,2098179ull,4194305ull,8390831ull,16777233ull,33558531ull,67109125ull,134225995ull,268435457ull,536887863ull,1073741825ull,2147516555ull,4294968325ull,8590000131ull,17179869265ull,34359871791ull,68719476737ull,137439215619ull,274877911045ull,549756338843ull,1099511627777ull,2199024312423ull,4398046511105ull,8796095120395ull,17592186061077ull,35184376283139ull,70368744177665ull,140737496778927ull,281474976710721ull,562949970199059ull,1125899906908165ull,2251799847243787ull,4503599627370497ull,9007199321981223ull,18014398509483025ull,36028797153190091ull,72057594038190085ull,144115188344291331ull,288230376151711745ull,576460752840837695ull,1152921504606846977ull,2305843010287435779ull,4611686018428436805ull,9223372039002292363ull};

template<typename T> class BruteForce {

public:

__aicore__ inline BruteForce() {}

__aicore__ inline void Init(GM_ADDR x1, GM_ADDR x2, GM_ADDR y, uint32_t n1[5], uint32_t n2[5], uint32_t ny[5]) {

ASSERT(GetBlockNum() != 0 && "block dim can not be zero!");

uint32_t total1 = 1, total2 = 1, totaly = 1;

for (int i = 0; i < 5; ++i) {

this->n1[i] = n1[i];

this->n2[i] = n2[i];

this->ny[i] = ny[i];

total1 *= n1[i];

total2 *= n2[i];

totaly *= ny[i];

}

x1Gm.SetGlobalBuffer((__gm__ T*)x1, total1);

x2Gm.SetGlobalBuffer((__gm__ T*)x2, total2);

yGm.SetGlobalBuffer((__gm__ T*)y, totaly);

}

__aicore__ inline void Process() {

for (uint32_t i0 = 0; i0 < ny[0]; ++i0) {

for (uint32_t i1 = 0; i1 < ny[1]; ++i1) {

for (uint32_t i2 = 0; i2 < ny[2]; ++i2) {

for (uint32_t i3 = 0; i3 < ny[3]; ++i3) {

for (uint32_t i4 = 0; i4 < ny[4]; ++i4) {

uint32_t indices[5] = {i0, i1, i2, i3, i4};

uint32_t idx1 = 0, idx2 = 0, idxy = 0;

for (int j = 0; j < 5; ++j) {

idx1 = idx1 * n1[j] + indices[j] % n1[j];

idx2 = idx2 * n2[j] + indices[j] % n2[j];

idxy = idxy * ny[j] + indices[j];

}

int64_t a = x1Gm.GetValue(idx1);

int64_t b = x2Gm.GetValue(idx2);

if (a < 0) {

a = -a;

}

if (b < 0) {

b = -b;

}

int64_t aa=a,bb=b;

while (b) {

int64_t A = b;

int64_t B = a % b;

a = A;

b = B;

}

T ans=aa/a*bb;

if constexpr (std::is_same<T, int64_t>::value) {

if(ans<0)ans=-ans;

}

if constexpr (std::is_same<T, int32_t>::value) {

if(ans<0)ans=-ans;

}

yGm.SetValue(idxy, ans);

}

}

}

}

}

}

private:

GlobalTensor<T> x1Gm, x2Gm, yGm;

uint32_t n1[5], n2[5], ny[5];

};

template<typename T> class GCDKernalFast {

public:

__aicore__ inline GCDKernalFast() {}

__aicore__ inline void Init(GM_ADDR x1, GM_ADDR x2, GM_ADDR y, uint32_t size, uint32_t length) {

ASSERT(GetBlockNum() != 0 && "block dim can not be zero!");

const unsigned num_cores = GetBlockNum();

unsigned L = GetBlockIdx() * length;

unsigned R = (GetBlockIdx() + 1) * length;

if (R > size) {

R = size;

}

this->L = 0;

this->R = R - L;

x1Gm.SetGlobalBuffer((__gm__ T*)x1 + L, length);

x2Gm.SetGlobalBuffer((__gm__ T*)x2 + L, length);

yGm.SetGlobalBuffer((__gm__ T*)y + L, length);

}

__aicore__ inline void Process() {

for (int i = L; i < R; ++i) {

int64_t a = x1Gm.GetValue(i);

int64_t b = x2Gm.GetValue(i);

a = (a > 0 ? a : -a);

b = (b > 0 ? b : -b);

int64_t aa=a,bb=b;

if (a == 0){

yGm.SetValue(i, b);

}

else if (b == 0) {

yGm.SetValue(i, a);

}

else {

int64_t shift = ScalarGetSFFValue<1>(a | b);

a >>= ScalarGetSFFValue<1>(a);

do {

b >>= ScalarGetSFFValue<1>(b);

if(a <= 64 && b <= 64){

a = 64 - ScalarCountLeadingZero(pre[a] & pre[b]);

break;

}

if (a > b) {

a ^= b ^= a ^= b;

}

b -= a;

} while (b);

a =(a<<shift);

T ans=aa/a*bb;

if constexpr (std::is_same<T, int64_t>::value) {

if(ans<0)ans=-ans;

}

if constexpr (std::is_same<T, int32_t>::value) {

if(ans<0)ans=-ans;

}

yGm.SetValue(i, ans);

}

}

}

private:

GlobalTensor<T> x1Gm, x2Gm, yGm;

uint32_t L, R;

};

extern "C" __global__ __aicore__ void lcm(GM_ADDR input, GM_ADDR other, GM_ADDR out, GM_ADDR workspace, GM_ADDR tiling) {

GET_TILING_DATA(tiling_data, tiling);

if (tiling_data.status == 0) {

BruteForce<DTYPE_INPUT> op;

op.Init(input, other, out, tiling_data.n1, tiling_data.n2, tiling_data.ny);

op.Process();

}

else {

GCDKernalFast<DTYPE_INPUT> op;

op.Init(input, other, out, tiling_data.size, tiling_data.length);

op.Process();

}

// BruteForce<DTYPE_INPUT> op;

// op.Init(input, other, out, tiling_data.n1, tiling_data.n2, tiling_data.ny);

// op.Process();

}

op_host/lcm.cpp

#include "lcm_tiling.h"

#include "register/op_def_registry.h"

#include "tiling/platform/platform_ascendc.h"

#include <iostream>

#include <algorithm>

namespace optiling {

static ge::graphStatus TilingFunc(gert::TilingContext* context)

{

LcmTilingData tiling;

auto ascendcPlatform = platform_ascendc::PlatformAscendC(context->GetPlatformInfo());

auto num_cores = ascendcPlatform.GetCoreNum();

uint32_t sizeofdatatype = 2;

auto dt = context->GetInputTensor(0)->GetDataType();

if (dt == ge::DT_INT16) {

sizeofdatatype = 2;

}

else if (dt == ge::DT_INT32) {

sizeofdatatype = 4;

}

else {

sizeofdatatype = 8;

}

const uint32_t alignment = 64 / sizeofdatatype;

const gert::StorageShape* x1_shape = context->GetInputShape(0);

auto dim1 = x1_shape->GetStorageShape().GetDimNum();

uint32_t n1[5] = {1, 1, 1, 1, 1};

for (int i = 0; i < dim1; ++i) {

n1[i] = x1_shape->GetStorageShape().GetDim(i);

}

const gert::StorageShape* x2_shape = context->GetInputShape(1);

auto dim2 = x2_shape->GetStorageShape().GetDimNum();

uint32_t n2[5] = {1, 1, 1, 1, 1};

for (int i = 0; i < dim2; ++i) {

n2[i + (dim1 - dim2)] = x2_shape->GetStorageShape().GetDim(i);

}

int dim = std::max(dim1, dim2);

uint32_t ny[5] = {1, 1, 1, 1, 1};

uint32_t size = 1;

for (int i = 0; i < dim; ++i) {

ny[i] = std::max(n1[i], n2[i]);

size *= ny[i];

}

tiling.set_n1(n1);

tiling.set_n2(n2);

tiling.set_ny(ny);

std::cout << "n1: " << n1[0] << " " << n1[1] << " " << n1[2] << " " << n1[3] << " " << n1[4] << " " << std::endl;

std::cout << "n2: " << n2[0] << " " << n2[1] << " " << n2[2] << " " << n2[3] << " " << n2[4] << " " << std::endl;

std::cout << "ny: " << ny[0] << " " << ny[1] << " " << ny[2] << " " << ny[3] << " " << ny[4] << " " << std::endl;

int status = 2;

for (int i = 0; i < 5; ++i) {

if (n1[i] != n2[i]) {

status = 0;

}

}

if(size <= num_cores * alignment) status = 0;

tiling.set_status(status);

tiling.set_size(size);

unsigned length = (size - 1) / num_cores + 1;

while (length % alignment != 0) length += 1;

tiling.set_length(length);

if (status == 0) {

context->SetBlockDim(1);

}

else {

std::cout << "Multicore" << std::endl;

context->SetBlockDim(num_cores);

}

tiling.SaveToBuffer(context->GetRawTilingData()->GetData(), context->GetRawTilingData()->GetCapacity());

context->GetRawTilingData()->SetDataSize(tiling.GetDataSize());

return ge::GRAPH_SUCCESS;

}

}

namespace ge {

static ge::graphStatus InferShape(gert::InferShapeContext* context)

{

const gert::Shape* x1_shape = context->GetInputShape(0);

gert::Shape* y_shape = context->GetOutputShape(0);

*y_shape = *x1_shape;

return GRAPH_SUCCESS;

}

static ge::graphStatus InferDataType(gert::InferDataTypeContext *context)

{

const auto inputDataType = context->GetInputDataType(0);

context->SetOutputDataType(0, inputDataType);

return ge::GRAPH_SUCCESS;

}

}

namespace ops {

class Lcm : public OpDef {

public:

explicit Lcm(const char* name) : OpDef(name)

{

this->Input("input")

.ParamType(REQUIRED)

.DataType({ge::DT_INT8, ge::DT_INT16, ge::DT_INT32, ge::DT_INT64})

.Format({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND})

.UnknownShapeFormat({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND});

this->Input("other")

.ParamType(REQUIRED)

.DataType({ge::DT_INT8, ge::DT_INT16, ge::DT_INT32, ge::DT_INT64})

.Format({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND})

.UnknownShapeFormat({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND});

this->Output("out")

.ParamType(REQUIRED)

.DataType({ge::DT_INT8, ge::DT_INT16, ge::DT_INT32, ge::DT_INT64})

.Format({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND})

.UnknownShapeFormat({ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND, ge::FORMAT_ND});

this->SetInferShape(ge::InferShape).SetInferDataType(ge::InferDataType);

this->AICore()

.SetTiling(optiling::TilingFunc);

this->AICore().AddConfig("ascend910b");

}

};

OP_ADD(Lcm);

}

op_host/lcm_tiling.h

#include "register/tilingdata_base.h"

namespace optiling {

BEGIN_TILING_DATA_DEF(LcmTilingData)

TILING_DATA_FIELD_DEF_ARR(uint32_t, 5, n1);

TILING_DATA_FIELD_DEF_ARR(uint32_t, 5, n2);

TILING_DATA_FIELD_DEF_ARR(uint32_t, 5, ny);

TILING_DATA_FIELD_DEF(uint32_t, status);

TILING_DATA_FIELD_DEF(uint32_t, size);

TILING_DATA_FIELD_DEF(uint32_t, length);

END_TILING_DATA_DEF;

REGISTER_TILING_DATA_CLASS(Lcm, LcmTilingData)

}

环境配置

运行脚本安装的ascendC不是在用户目录下的,所以重启就没了。需要每次重启之后都重新运行脚本安装

创建算子工程

可以用msoopgen一件创建,具体见文档

大概用法是

msopgen gen -i {*.json} -f {framework type} -c {Compute Resource} -lan cpp -out {Output Path}

其中.json文件是算子工程的配置文件,需要声明算子的名称,参数的名称,类型

[

{

"op": "BitwiseLeftShift",

"language": "cpp",

"input_desc": [

{

"name": "input",

"param_type": "required",

"format": [

"ND",

"ND",

"ND",

"ND"

],

"type": [

"int8",

"int16",

"int32",

"int64"

]

},

{

"name": "other",

"param_type": "required",

"format": [

"ND",

"ND",

"ND",

"ND"

],

"type": [

"int8",

"int16",

"int32",

"int64"

]

}

],

"output_desc": [

{

"name": "out",

"param_type": "required",

"format": [

"ND",

"ND",

"ND",

"ND"

],

"type": [

"int8",

"int16",

"int32",

"int64"

]

}

]

}

]

-c格式为ai_core-{soc version},如果用的是910b,就是ai_core-910B

-o可以没有,没有的话就默认在当前目录创建工程

编译

算子工程目录下有个build.sh,直接运行即可。成功后会得到一个可执行文件build_out/custom_opp_euleros_aarch64.run,

运行后会把当前算子安装到ascendC算子库里。

这里注意,这种方式同时只能安装一个算子,因为安装时的名称都是一样的,后面的会覆盖前面的。

测试

比赛给的压缩包里,首先运行init_pybind.sh,安装环境,这个只用安装一次就行了。

后面进入对应赛题的文件夹,例如Lcm赛题就是case_910b/Lcm,里面有一个run.sh脚本,执行bash run.sh x,即可运行test_op.py里定义的第x个测试用例。如果运行时检测不到算子的.wheel文件,会先编译得到并安装。

如果修改了算子实现,想重新测试,需要删掉dist和build两个文件夹,否则不会重新安装.wheel,也就无法测试最新实现

更多推荐

已为社区贡献5条内容

已为社区贡献5条内容

所有评论(0)