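【AI】Technical Challenges of Large Language Models: A Comprehensive Analysis from Theory to Practice

This article examines the multi-dimensional technical challenges facing large language models. On the infrastructure side, parameter counts have grown exponentially (GPT-3 has 175 billion parameters), driving up compute requirements, training costs (millions of dollars), and energy use (GPT-3's training carbon footprint is estimated at hundreds of tonnes of CO2). On the data side, training corpora suffer from uneven temporal distribution (40% of the data dates from 2020 onward in the article's illustrative example) as well as gender and socioeconomic biases. These challenges expose key bottlenecks in current LLM development, including resource consumption, environmental impact, and ethical risk, offering a reference point for the sustainable development of AI technology.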


💻 Introduction

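In the current wave of artificial intelligence, large language models (LLMs) are among the brightest stars. From early RNNs to today's GPT and BERT models, these systems have shown impressive abilities to understand and generate language. Beneath the polished surface, however, LLMs face many technical challenges. In this article, Lao Cao analyzes these challenges from several angles to give readers a more complete and objective picture.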

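🏠 1. Infrastructure Challenges

✅1.1 Exponential Growth in Compute Requirements

The core of an LLM is its enormous parameter count. GPT-3, for example, has 175 billion parameters. GPT-4 is rumored to reach the trillion-parameter scale, although OpenAI has not published the figure. Growth at this scale creates significant computational challenges: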

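```python
import numpy as np


class ModelScalingChallenge:
    def __init__(self):
        # Parameter counts of representative models
        self.models = {
            "GPT-2": 1.5e9,    # 1.5 billion parameters
            "GPT-3": 175e9,    # 175 billion parameters
            "PaLM": 540e9,     # 540 billion parameters
            "LLaMA-2": 70e9,   # 70 billion parameters
        }
        # Release years, in the same order as self.models
        self.years = [2019, 2020, 2022, 2023]

    def compute_growth_rate(self):
        """Estimate how fast parameter counts grow per year."""
        params = np.array(list(self.models.values()))
        years = np.array(self.years)
        # Simplified exponential model: fit a straight line to log(params) vs. year
        slope = np.polyfit(years, np.log(params), 1)[0]
        return np.exp(slope)  # annual growth factor (multiplier per year), not a percentage

    def resource_impact(self, model_name):
        """Estimate the resource requirements of a given model."""
        params = self.models[model_name]
        bytes_per_param = 4  # FP32 uses 4 bytes per parameter
        total_bytes = params * bytes_per_param
        return {
            "parameters": params,
            # Weights only (no activations or optimizer state), in GiB
            "memory_gb": total_bytes / (1024**3),
            # Crude heuristic of $0.0001-$0.001 per parameter, not a sourced cost figure
            "training_cost_estimate": (
                f"${params * 1e-4 / 1e6:,.1f}M - ${params * 1e-3 / 1e6:,.1f}M"
            ),
        }


# Example usage
challenge = ModelScalingChallenge()
gpt3_analysis = challenge.resource_impact("GPT-3")
print(f"GPT-3 memory requirement (FP32 weights): {gpt3_analysis['memory_gb']:.2f} GB")
```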
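The FP32 figure above covers only the weights. As a rough sketch of how numeric precision changes that number, the helpers below tabulate weight memory at common precisions. They also add the commonly cited figure of about 16 bytes per parameter for mixed-precision Adam training. That figure breaks down as 2 bytes of FP16 weights, 2 bytes of FP16 gradients, and 12 bytes of FP32 master weights plus the two Adam moments, the accounting used in the ZeRO paper. Activations, KV caches, and framework overhead are ignored, so real requirements are higher. The function names are hypothetical.

```python
# Bytes per parameter for common numeric formats
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Mixed-precision Adam training: fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights, momentum and variance (4 + 4 + 4) = 16 bytes/param
TRAINING_BYTES_PER_PARAM = 16.0


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Memory for the model weights only, in GiB (no activations or KV cache)."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


def training_memory_gib(num_params: float) -> float:
    """Weights + gradients + Adam optimizer state under mixed precision, in GiB."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1024**3


if __name__ == "__main__":
    n = 175e9  # GPT-3 parameter count
    for p in BYTES_PER_PARAM:
        print(f"{p:>5}: {weight_memory_gib(n, p):8.1f} GiB")
    print(f"train: {training_memory_gib(n):8.1f} GiB")
```

Even at int4, GPT-3's weights alone exceed the memory of a single common accelerator. The training-time figure is roughly four times the FP32 weight size, which is why large models must be sharded across many devices.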

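✅1.2 Training Time and Energy Consumption

Training a large model requires not only massive capital investment but also enormous amounts of energy: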

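```javascript
// Rough estimate of training compute, energy, and carbon footprint.
// Hardware and grid figures are illustrative assumptions, not measured values.
function calculateTrainingEnergy(modelParams, trainingTokens, {
  gpuPeakFlops = 150e12,      // assumed per-GPU throughput: ~150 TFLOPS
  parallelEfficiency = 0.7,   // assumed ~70% effective utilization at scale
  gpuPowerWatts = 400,        // assumed average power draw per GPU (W)
  gridCarbonKgPerKWh = 0.48,  // assumed grid carbon intensity: 480 g CO2/kWh
} = {}) {
  // Common approximation: training costs ~6 FLOPs per parameter per token
  const totalFlops = 6 * modelParams * trainingTokens;

  // GPU-hours at peak throughput, then adjusted for parallel efficiency.
  // Wall-clock time = actualGpuHours / number of GPUs.
  const theoreticalGpuHours = totalFlops / gpuPeakFlops / 3600;
  const actualGpuHours = theoreticalGpuHours / parallelEfficiency;

  // Energy = GPU-hours x power per GPU, in kWh
  const energyKWh = actualGpuHours * gpuPowerWatts / 1000;

  return {
    totalFlops,
    theoreticalGpuHours,
    actualGpuHours,
    energyMWh: energyKWh / 1000,
    carbonFootprintTons: energyKWh * gridCarbonKgPerKWh / 1000, // tonnes CO2
  };
}

// GPT-3 estimate: 175B parameters, ~300B training tokens (per the GPT-3 paper)
const gpt3Energy = calculateTrainingEnergy(175e9, 300e9);
console.log(`GPT-3 carbon footprint estimate: ${gpt3Energy.carbonFootprintTons.toFixed(2)} tons CO2`);
```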
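The estimate above rests on the widely used approximation that training a dense Transformer costs about 6 FLOPs per parameter per training token (C ≈ 6·N·D). A minimal sketch of that arithmetic follows, converting total FLOPs into GPU-days. The 150 TFLOPS throughput and 70% efficiency mirror the assumptions in the JavaScript example. The 1,024-GPU cluster size is a hypothetical value chosen for illustration, and the function names are also hypothetical.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * num_params * num_tokens


def gpu_days(total_flops: float, flops_per_gpu: float = 150e12,
             utilization: float = 0.7) -> float:
    """GPU-days needed at a given per-GPU peak throughput and utilization."""
    seconds = total_flops / (flops_per_gpu * utilization)
    return seconds / 86_400


if __name__ == "__main__":
    # GPT-3: 175B parameters trained on ~300B tokens
    c = training_flops(175e9, 300e9)
    days = gpu_days(c)
    print(f"Training compute: {c:.2e} FLOPs")
    print(f"GPU-days: {days:,.0f}; wall-clock on 1,024 GPUs: {days / 1024:.1f} days")
```

Under these assumptions, a thousand-GPU cluster needs roughly a month. That is why training time, and with it energy use, scales directly with both model size and dataset size.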

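⚡ 2. Data Quality and Bias Challenges

✅2.1 Limitations of Training Data

An LLM's "intelligence" comes from its training data, but that data has many problems of its own: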

```java
import java.util.*;

public class TrainingDataChallenges {
    private final Map<String, List<String>> dataSources = new HashMap<>();
    private final Set<String> knownBiases = new HashSet<>();

    public TrainingDataChallenges() {
        initializeDataSources();
        initializeKnownBiases();
    }

    private void initializeDataSources() {
        dataSources.put("webText", Arrays.asList(
            "CommonCrawl", "WebText", "BooksCorpus", "Wikipedia"));
        dataSources.put("academic", Arrays.asList(
            "arXiv", "PubMed", "JSTOR"));
        dataSources.put("socialMedia", Arrays.asList(
            "Twitter", "Reddit", "Facebook"));
    }

    private void initializeKnownBiases() {
        knownBiases.addAll(Arrays.asList(
            "genderBias", "racialBias", "socioeconomicBias",
            "geographicBias", "temporalBias", "culturalBias"));
    }

    /**
     * Analyze temporal skew in the training data.
     * The percentages are illustrative, not measured from a real corpus.
     */
    public Map<String, Object> analyzeTemporalBias() {
        Map<String, Integer> timeDistribution = new LinkedHashMap<>();
        timeDistribution.put("2010-2015", 15);
        timeDistribution.put("2015-2020", 45);
        timeDistribution.put("2020-2023", 40);

        // Bias index: standard deviation from a uniform split (100% / number of periods)
        double uniformShare = 100.0 / timeDistribution.size();
        double variance = timeDistribution.values().stream()
            .mapToDouble(v -> Math.pow(v - uniformShare, 2))
            .average()
            .orElse(0.0);

        return Map.of(
            "distribution", timeDistribution,
            "biasIndex", Math.sqrt(variance),
            "recentBias", timeDistribution.get("2020-2023") > 35);
    }

    /**
     * Detect potential bias patterns (naive keyword heuristic, for illustration only).
     */
    public List<String> detectPotentialBiases(String inputText) {
        List<String> detectedBiases = new ArrayList<>();
        String text = inputText.toLowerCase();
        // Word boundaries so that "woman" does not count as a match for "man"
        if (text.matches("(?s).*\\bman\\b.*") && text.contains("doctor")) {
            detectedBiases.add("genderRoleBias");
        }
        if (text.contains("poor") && text.contains("lazy")) {
            detectedBiases.add("socioeconomicBias");
        }
        return detectedBiases;
    }
}
```
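The Java bias index measures the spread around a uniform split using a standard deviation. A different measure is normalized Shannon entropy, sketched here as an assumption rather than the author's method. A value of 1.0 means the data is spread evenly across time periods, and values closer to 0 mean it is concentrated in a few periods. The example reuses the article's illustrative 15/45/40 split.

```python
import math


def normalized_entropy(shares: dict[str, float]) -> float:
    """Shannon entropy of a distribution divided by its maximum, log(#bins)."""
    if len(shares) < 2:
        return 1.0  # a single bin is trivially "even"
    total = sum(shares.values())
    probs = [v / total for v in shares.values() if v > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return entropy / math.log(len(shares))


if __name__ == "__main__":
    # Illustrative time distribution from the Java example (percent of data)
    time_distribution = {"2010-2015": 15, "2015-2020": 45, "2020-2023": 40}
    print(f"Normalized entropy: {normalized_entropy(time_distribution):.3f}")
```

Unlike a raw standard deviation, this score does not depend on the number of periods or on whether the inputs are percentages or raw document counts.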

✅2.2 The Bias Amplification Effect

Models not only learn the biases present in their data but can also amplify them:

```python
class BiasAmplificationAnalyzer:
    def __init__(self):
        self.bias_categories = [
            'gender', 'race', 'age', 'socioeconomic', 'geographic'
        ]

    def measure_bias_amplification(self, original_data_bias, model_output_bias):
        """
        Measure bias amplification as (model output bias) / (training data bias).
        Categories missing from either input are skipped instead of raising KeyError.
        """
        amplification_factor = {}
        for category in self.bias_categories:
            if category not in original_data_bias or category not in model_output_bias:
                continue  # no measurement for this category
            if original_data_bias[category] != 0:
                amplification_factor[category] = (
                    model_output_bias[category] / original_data_bias[category]
                )
            else:
                # Ratio is undefined when the data shows no bias; treat as unchanged
                amplification_factor[category] = 1.0
        return amplification_factor

    def generate_bias_report(self, amplification_factors):
        """
        Generate a bias report.
        """
        report = {
            "amplified_biases": [],
            "reduced_biases": [],
            "unchanged_biases": []
        }
        for category, factor in amplification_factors.items():
            if factor > 1.2:    # amplified by more than 20%
                report["amplified_biases"].append((category, factor))
            elif factor < 0.8:  # reduced by more than 20%
                report["reduced_biases"].append((category, factor))
            else:
                report["unchanged_biases"].append((category, factor))
        return report


# Example usage (bias scores are illustrative)
analyzer = BiasAmplificationAnalyzer()
original_bias = {'gender': 0.3, 'race': 0.25, 'age': 0.15}
model_bias = {'gender': 0.45, 'race': 0.35, 'age': 0.12}
amplification = analyzer.measure_bias_amplification(original_bias, model_bias)
report = analyzer.generate_bias_report(amplification)
print("Bias amplification report:", report)
```
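The analyzer above takes bias scores as given. One common way to obtain such scores is to count how often an occupation co-occurs with a gendered term, sketched here under simplifying assumptions. The bias is the male share of that occupation's mentions. Amplification is the difference between that share in model outputs and the share in the training data, loosely following the idea behind the bias-amplification metric of Zhao et al. (2017). The pairs below are made-up toy data, and the function names are hypothetical.

```python
from collections import Counter


def male_share(pairs: list[tuple[str, str]], occupation: str) -> float:
    """Fraction of an occupation's gendered mentions that are 'male'."""
    counts = Counter(gender for occ, gender in pairs if occ == occupation)
    total = counts["male"] + counts["female"]
    return counts["male"] / total if total else 0.0


def bias_amplification(data_pairs, output_pairs, occupation: str) -> float:
    """Positive values mean model outputs are more skewed than the training data."""
    return male_share(output_pairs, occupation) - male_share(data_pairs, occupation)


if __name__ == "__main__":
    # Toy (occupation, gender) co-occurrences; not real measurements
    data = [("doctor", "male")] * 6 + [("doctor", "female")] * 4
    outputs = [("doctor", "male")] * 8 + [("doctor", "female")] * 2
    print(f"Amplification for 'doctor': {bias_amplification(data, outputs, 'doctor'):+.2f}")
```

Here a 60/40 skew in the data becomes 80/20 in the outputs, which is the kind of amplification the section describes.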

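❓ 3. Unpredictable Model Behavior

✅3.1 The Hallucination Problem

One of the most troublesome problems with LLMs is "hallucination": generating content that sounds plausible but is actually wrong or fabricated: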

```javascript
class HallucinationDetector {
  constructor() {
    // Candidate fact-checking sources (not queried by this simplified sketch)
    this.factCheckingSources = [
      'wikipedia', 'wikidata', 'openstreetmap', 'dbpedia'
    ];
  }

  /**
   * Detect potentially hallucinated content (heuristic scores in [0, 1]).
   */
  detectHallucination(generatedText, context = "") {
    const hallucinationIndicators = {
      // Overly specific, hard-to-verify details
      specificDetails: this.checkSpecificDetails(generatedText),
      // Logical inconsistency
      logicalInconsistency: this.checkLogicalConsistency(generatedText),
      // Unverifiable claims
      unverifiableClaims: this.checkUnverifiableClaims(generatedText),
      // Timeline errors
      temporalErrors: this.checkTemporalErrors(generatedText)
    };

    const values = Object.values(hallucinationIndicators);
    const hallucinationScore = values.reduce((sum, val) => sum + val, 0) / values.length;

    return {
      score: hallucinationScore,
      indicators: hallucinationIndicators,
      isHallucination: hallucinationScore > 0.6
    };
  }

  checkSpecificDetails(text) {
    // Card-number-like digit groups and email addresses as examples of overly specific details
    const specificDetailPattern = /\b\d{4}[-\s]\d{4}[-\s]\d{4}[-\s]\d{4}\b|[\w.-]+@[\w.-]+\.\w+/g;
    const matches = text.match(specificDetailPattern) || [];
    return Math.min(matches.length * 0.2, 1.0); // capped at 1.0
  }

  checkLogicalConsistency(text) {
    // Simplified logical consistency check
    const contradictionPatterns = [
      /(\w+) is (\w+) and (\w+) is not \2/,
      /earlier.*later|later.*earlier/
    ];
    const lower = text.toLowerCase();
    for (const pattern of contradictionPatterns) {
      if (pattern.test(lower)) {
        return 0.8;
      }
    }
    return 0.0;
  }

  checkUnverifiableClaims(text) {
    // Vague appeals to authority; the i flag lets "According to..." match as well
    const unverifiablePatterns = [
      /according to.*study/i, /research shows.*significant/i,
      /experts agree.*majority/i, /it is known that.*widely accepted/i
    ];
    let score = 0;
    for (const pattern of unverifiablePatterns) {
      if (pattern.test(text)) {
        score += 0.25;
      }
    }
    return Math.min(score, 1.0);
  }

  checkTemporalErrors(text) {
    // Flag "in <year>" references to years after the current year
    const currentYear = new Date().getFullYear();
    const years = [...text.matchAll(/\bin (\d{4})\b/g)].map(m => Number(m[1]));
    return years.some(year => year > currentYear) ? 0.5 : 0.0;
  }
}

// Example usage
const detector = new HallucinationDetector();
const sampleText = "According to a 2023 study by Dr. Smith at MIT, 95% of AI researchers believe that artificial general intelligence will be achieved by 2025.";
const result = detector.detectHallucination(sampleText);
console.log("Hallucination detection result:", result);
```
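Surface-pattern heuristics like those above miss most real hallucinations. Another approach is to ask the model the same question several times and measure how much the answers agree. It is sketched here in the spirit of sampling-based consistency checks such as SelfCheckGPT. Facts a model actually knows tend to be reproduced consistently, while fabricated details tend to vary between samples. The sampled answers below are hard-coded stand-ins; a real system would obtain them from repeated model calls. The 0.5 threshold is an arbitrary assumption.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Crude normalization so trivially different phrasings compare equal."""
    return " ".join(answer.lower().strip(" .").split())


def consistency_score(samples: list[str]) -> float:
    """Share of samples that agree with the most common (normalized) answer."""
    if not samples:
        return 0.0
    counts = Counter(normalize(s) for s in samples)
    return counts.most_common(1)[0][1] / len(samples)


def flag_possible_hallucination(samples: list[str], threshold: float = 0.5) -> bool:
    """Flag answers the model cannot reproduce consistently."""
    return consistency_score(samples) < threshold


if __name__ == "__main__":
    # Hypothetical answers from five samples of the same prompt
    stable = ["Paris", "paris.", "Paris", "Paris", "Paris"]
    unstable = ["1987", "1992", "1987", "2001", "1979"]
    print(consistency_score(stable), flag_possible_hallucination(stable))
    print(consistency_score(unstable), flag_possible_hallucination(unstable))
```

Exact string matching only suits short factual answers. For longer text, the comparison step would need a semantic similarity measure instead.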

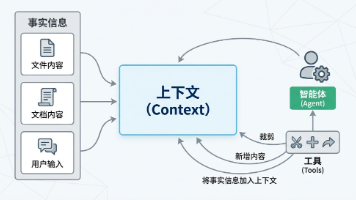

✅3.2 Limits of Context Understanding

Although LLMs have improved at handling long text, understanding complex context remains a challenge:

```java
import java.util.ArrayList;
import java.util.List;

public class ContextUnderstandingChallenge {
    // Example context window limit in tokens (actual limits vary by model)
    private static final int MAX_CONTEXT_LENGTH = 4096;

    public static class ContextAnalysisResult {
        public final boolean contextOverload;
        public final int tokenCount;
        public final List<String> lostContexts;
        public final double coherenceScore;

        public ContextAnalysisResult(boolean overload, int tokens,
                                     List<String> lost, double coherence) {
            this.contextOverload = overload;
            this.tokenCount = tokens;
            this.lostContexts = lost;
            this.coherenceScore = coherence;
        }
    }

    /**
     * Analyze context-understanding challenges for a conversation.
     */
    public ContextAnalysisResult analyzeContext(String conversation) {
        int tokenCount = estimateTokenCount(conversation);
        boolean isOverloaded = tokenCount > MAX_CONTEXT_LENGTH;
        List<String> lostContexts = identifyLostContexts(conversation);
        double coherence = calculateCoherence(conversation, lostContexts);
        return new ContextAnalysisResult(isOverloaded, tokenCount, lostContexts, coherence);
    }

    private int estimateTokenCount(String text) {
        // Rough heuristic: about 4 characters per token for English text
        return text.length() / 4;
    }

    private List<String> identifyLostContexts(String conversation) {
        List<String> lostContexts = new ArrayList<>();
        // Information mentioned early may not be referenced correctly later
        String[] sentences = conversation.split("[.!?]+");
        if (sentences.length > 10) {
            // In long conversations, early information may be lost
            for (int i = 0; i < Math.min(3, sentences.length / 4); i++) {
                lostContexts.add("Early context: " + sentences[i].trim());
            }
        }
        return lostContexts;
    }

    private double calculateCoherence(String conversation, List<String> lostContexts) {
        // Simplified coherence score
        double baseScore = 1.0;
        if (conversation.length() > MAX_CONTEXT_LENGTH * 4) {
            baseScore -= 0.3; // length penalty (text longer than ~the token limit)
        }
        baseScore -= lostContexts.size() * 0.1; // penalty per lost context item
        return Math.max(0.0, baseScore);
    }
}
```
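When a conversation exceeds the context window, applications commonly keep the most recent turns that fit and drop or summarize older ones, which is exactly how early context gets lost. The following is a minimal sketch of that trimming. It reuses the article's rough estimate of 4 characters per token and its 4,096-token limit as assumptions; real systems count tokens with the model's own tokenizer. `trim_history` and `estimate_tokens` are hypothetical helper names.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: ~4 characters per token (English heuristic)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[str], max_tokens: int = 4096,
                 reserved_for_reply: int = 512) -> list[str]:
    """Keep the newest messages whose combined estimate fits the budget."""
    budget = max_tokens - reserved_for_reply
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message; stop at the first one that does not fit
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [f"turn {i}: " + "x" * 400 for i in range(20)]
    window = trim_history(history, max_tokens=1024, reserved_for_reply=0)
    print(f"kept {len(window)} of {len(history)} turns; first kept: {window[0][:7]}")
```

In this example the first ten turns are silently dropped. Any fact stated only in those turns is invisible to the model, which is the "lost context" effect the Java analyzer tries to approximate.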

🤖 4. Security and Ethical Challenges

✅4.1 Risk of Malicious Use

The power of LLMs also brings a risk of malicious use:

```python
class MisuseRiskAssessment:
    def __init__(self):
        # Illustrative risk scores (0-1) per misuse category, not measured values
        self.risk_categories = {
            'disinformation': 0.8,
            'phishing': 0.7,
            'malware_generation': 0.6,
            'social_engineering': 0.9,
            'impersonation': 0.85
        }

    def assess_risk_level(self, use_case):
        """
        Assess the risk level of a specific use case.
        """
        if use_case in self.risk_categories:
            risk_score = self.risk_categories[use_case]
            if risk_score >= 0.8:
                return "HIGH"
            elif risk_score >= 0.6:
                return "MEDIUM"
            else:
                return "LOW"
        return "UNKNOWN"

    def generate_mitigation_strategies(self, risk_level):
        """
        Generate risk mitigation strategies.
        """
        strategies = {
            "HIGH": [
                "Enforce strict access control",
                "Add content moderation mechanisms",
                "Deploy abuse detection systems",
                "Limit the length and complexity of generated content"
            ],
            "MEDIUM": [
                "Add user identity verification",
                "Monitor usage",
                "Provide an abuse reporting channel"
            ],
            "LOW": [
                "Basic monitoring",
                "User education"
            ]
        }
        return strategies.get(risk_level, ["Baseline security measures"])


# Risk assessment example
assessor = MisuseRiskAssessment()
risk = assessor.assess_risk_level('disinformation')
mitigations = assessor.generate_mitigation_strategies(risk)
print(f"Risk level: {risk}")
print(f"Mitigation strategies: {mitigations}")
```
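Access control and abuse detection usually start with per-user rate limiting. Rate limiting caps how fast any single account can generate content, and generating content at scale is a prerequisite for mass disinformation or phishing. A minimal token-bucket sketch follows. The capacity and refill rate are arbitrary illustrative values, and the clock is passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Allow bursts up to `capacity`, refilling `refill_per_sec` tokens per second."""
    capacity: float
    refill_per_sec: float
    tokens: float = 0.0
    last_time: float = 0.0

    def __post_init__(self):
        self.tokens = self.capacity  # start with a full bucket

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill based on elapsed time, never exceeding capacity
        elapsed = max(0.0, now - self.last_time)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last_time = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=3, refill_per_sec=0.5)
    # Five requests at t=0 s: the first three pass, the rest are throttled
    print([bucket.allow(now=0.0) for _ in range(5)])
    # Two seconds later, one token (2 s x 0.5/s) has been refilled
    print(bucket.allow(now=2.0), bucket.allow(now=2.0))
```

A token bucket tolerates short bursts from legitimate users while bounding the sustained rate. The `cost` parameter can be set proportional to the length of the generated output.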

✅4.2 Privacy Protection Challenges

Privacy protection is an important consideration during both training and use:

```javascript
class PrivacyProtectionChallenge {
  constructor() {
    this.privacyRisks = [
      'dataLeakage',
      'inferenceAttack',
      'membershipInference',
      'attributeInference'
    ];
  }

  /**
   * Assess privacy risk (all scores are illustrative heuristics).
   */
  assessPrivacyRisk(modelArchitecture, trainingDataSources) {
    const riskFactors = {
      // Risk from data sources
      dataSourceRisk: this.evaluateDataSourceRisk(trainingDataSources),
      // Risk from the model architecture
      architectureRisk: this.evaluateArchitectureRisk(modelArchitecture),
      // Risk from the training process
      trainingRisk: this.evaluateTrainingRisk()
    };

    // Overall risk score: mean of the individual factors
    const values = Object.values(riskFactors);
    const totalRisk = values.reduce((sum, risk) => sum + risk, 0) / values.length;

    return {
      riskFactors,
      overallRisk: totalRisk,
      riskLevel: this.determineRiskLevel(totalRisk)
    };
  }

  evaluateDataSourceRisk(sources) {
    let risk = 0;
    if (sources.includes('personalData')) risk += 0.4;
    if (sources.includes('sensitiveData')) risk += 0.3;
    if (sources.includes('publicData')) risk += 0.1;
    return Math.min(risk, 1.0);
  }

  evaluateArchitectureRisk(architecture) {
    // Assumed privacy risk per architecture (illustrative values)
    const riskMap = {
      'transformer': 0.7,
      'rnn': 0.5,
      'cnn': 0.3
    };
    return riskMap[architecture] || 0.5;
  }

  evaluateTrainingRisk() {
    // Privacy risk during training
    return 0.6; // default: medium risk
  }

  determineRiskLevel(score) {
    if (score >= 0.7) return 'HIGH';
    if (score >= 0.4) return 'MEDIUM';
    return 'LOW';
  }

  suggestPrivacyProtectionMethods(riskAssessment) {
    const methods = [];
    if (riskAssessment.riskFactors.dataSourceRisk > 0.5) {
      methods.push('Data anonymization');
      methods.push('Differential privacy');
    }
    if (riskAssessment.riskFactors.architectureRisk > 0.5) {
      methods.push('Federated learning');
      methods.push('Secure multi-party computation');
    }
    if (riskAssessment.overallRisk > 0.6) {
      methods.push('Model watermarking');
      methods.push('Access control mechanisms');
    }
    return methods;
  }
}

// Example usage
const privacyChallenge = new PrivacyProtectionChallenge();
const riskAssessment = privacyChallenge.assessPrivacyRisk('transformer', ['publicData', 'personalData']);
const protectionMethods = privacyChallenge.suggestPrivacyProtectionMethods(riskAssessment);
console.log('Privacy risk assessment:', riskAssessment);
console.log('Suggested protection methods:', protectionMethods);
```
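Differential privacy, one of the methods suggested above, is easiest to see on a simple counting query. Adding Laplace noise with scale sensitivity/ε to the true answer makes the released value ε-differentially private. The sketch below shows only that mechanism. It samples the noise as the difference of two i.i.d. exponential variables, which is Laplace-distributed. Differentially private training of language models, such as DP-SGD, is considerably more involved. The counts and ε values are illustrative.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale): difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Release a count with epsilon-DP (one person changes the count by at most 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)
    # Smaller epsilon = stronger privacy = noisier answers
    for eps in (0.1, 1.0, 10.0):
        samples = [private_count(1000, eps, rng) for _ in range(2000)]
        mean_abs_err = sum(abs(s - 1000) for s in samples) / len(samples)
        print(f"epsilon={eps:>4}: mean absolute error ~ {mean_abs_err:.2f}")
```

The mean absolute error of Laplace noise equals its scale, so the printed errors should come out near 10, 1 and 0.1. This makes the privacy/utility trade-off controlled by ε directly visible.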

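📺 5. Deployment and Application Challenges

✅5.1 Inference Efficiency

Although training can draw on massive compute resources, inference efficiency is a key concern in real-world deployment: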

```java
import java.util.ArrayList;
import java.util.List;

public class InferenceEfficiencyChallenge {
    public static class ModelPerformanceMetrics {
        public double latency;           // latency (ms)
        public double throughput;        // throughput (queries/sec)
        public double memoryUsage;       // memory usage (MB)
        public double energyConsumption; // energy consumption (mWh)

        public ModelPerformanceMetrics(double lat, double thr, double mem, double energy) {
            this.latency = lat;
            this.throughput = thr;
            this.memoryUsage = mem;
            this.energyConsumption = energy;
        }
    }

    /**
     * Estimate inference performance with a toy scaling model
     * (baseline values are illustrative, not benchmark results).
     */
    public ModelPerformanceMetrics evaluatePerformance(String modelType, long parameterCount) {
        double baseLatency = 100.0;   // baseline latency (ms)
        double baseThroughput = 10.0; // baseline throughput (qps)
        double baseMemory = 1000.0;   // baseline memory (MB)
        double baseEnergy = 50.0;     // baseline energy (mWh)

        // Scale with log10 of the parameter count in billions.
        // parameterCount is a long because values such as 175e9 overflow an int.
        double scale = Math.log10(parameterCount / 1e9 + 1);
        double latency = baseLatency * (1 + scale * 2);
        double throughput = baseThroughput / (1 + scale * 1.5);
        double memory = baseMemory * (1 + scale * 3);
        double energy = baseEnergy * (1 + scale * 2);

        return new ModelPerformanceMetrics(latency, throughput, memory, energy);
    }

    /**
     * Suggest optimizations based on the estimated metrics.
     */
    public List<String> suggestOptimizations(String modelType, ModelPerformanceMetrics metrics) {
        List<String> optimizations = new ArrayList<>();
        if (metrics.latency > 200) {
            optimizations.add("Quantization");
            optimizations.add("Knowledge Distillation");
        }
        if (metrics.memoryUsage > 5000) {
            optimizations.add("Model Pruning");
            optimizations.add("Sparsity");
        }
        if (metrics.energyConsumption > 100) {
            optimizations.add("Edge deployment");
            optimizations.add("Model compression");
        }
        return optimizations;
    }
}
```
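Quantization, the first optimization suggested above, stores weights in fewer bits. A minimal sketch of symmetric per-tensor int8 quantization follows. Each float is divided by a scale of max|w|/127 and rounded to an integer in [-127, 127]. At inference time the integers are multiplied back by the same scale. This is a simplified illustration on plain Python lists; production schemes (per-channel scales, calibration data, 4-bit formats) are more elaborate.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to int8 values in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.9, -0.5]
    q, scale = quantize_int8(w)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, restored))
    print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
    # Each value now needs 1 byte instead of 4 (FP32): a 4x memory reduction
```

The rounding error per weight is at most half the scale. A single large outlier weight inflates the scale for the whole tensor, which is why practical schemes use finer-grained scales.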

✅5.2 Continual Learning and Updating

The real world keeps changing, so models need to keep learning and being updated:

```python
class ContinualLearningChallenge:
    def __init__(self):
        self.challenges = [
            'catastrophic_forgetting',
            'data_efficiency',
            'concept_drift',
            'computational_cost'
        ]

    def evaluate_continual_learning_capability(self, model_architecture):
        """
        Evaluate a model's continual-learning profile.
        Illustrative scores in [0, 1]; higher means, respectively: more forgetting,
        better data efficiency, more sensitivity to concept drift, higher cost.
        """
        capabilities = {
            'transformer': {
                'catastrophic_forgetting': 0.3,  # relatively low forgetting
                'data_efficiency': 0.4,          # moderate data efficiency
                'concept_drift': 0.5,            # moderate sensitivity to concept drift
                'computational_cost': 0.8        # high computational cost
            },
            'lstm': {
                'catastrophic_forgetting': 0.6,
                'data_efficiency': 0.3,
                'concept_drift': 0.4,
                'computational_cost': 0.5
            },
            'rnn': {
                'catastrophic_forgetting': 0.7,
                'data_efficiency': 0.2,
                'concept_drift': 0.3,
                'computational_cost': 0.4
            }
        }
        return capabilities.get(model_architecture, capabilities['transformer'])

    def suggest_continual_learning_strategies(self, capability_scores):
        """
        Suggest continual-learning strategies based on the scores.
        """
        strategies = []
        if capability_scores['catastrophic_forgetting'] > 0.5:
            strategies.append("Elastic Weight Consolidation (EWC)")
            strategies.append("Generative Replay")
        if capability_scores['data_efficiency'] < 0.5:
            strategies.append("Meta-Learning")
            strategies.append("Active Learning")
        if capability_scores['concept_drift'] > 0.4:
            strategies.append("Online Learning")
            strategies.append("Adaptive learning rate")
        if capability_scores['computational_cost'] > 0.6:
            strategies.append("Incremental Learning")
            strategies.append("Model distillation")
        return strategies


# Continual-learning capability assessment
cl_challenge = ContinualLearningChallenge()
transformer_capabilities = cl_challenge.evaluate_continual_learning_capability('transformer')
strategies = cl_challenge.suggest_continual_learning_strategies(transformer_capabilities)
print("Transformer continual-learning profile:", transformer_capabilities)
print("Suggested strategies:", strategies)
```
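Elastic Weight Consolidation (EWC), one of the strategies listed above, counters catastrophic forgetting by adding a quadratic penalty that anchors the parameters that mattered for earlier tasks: L = L_new + (λ/2) Σᵢ Fᵢ (θᵢ - θ*ᵢ)². Here θ* are the weights after the old task, and Fᵢ is a diagonal Fisher-information estimate of each weight's importance. The sketch below computes only that penalty term on plain lists. The Fisher values and λ are made-up numbers.

```python
def ewc_penalty(params: list[float], old_params: list[float],
                fisher: list[float], lam: float) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2"""
    if not (len(params) == len(old_params) == len(fisher)):
        raise ValueError("params, old_params and fisher must have equal length")
    return 0.5 * lam * sum(
        f * (p - p_old) ** 2 for p, p_old, f in zip(params, old_params, fisher)
    )


if __name__ == "__main__":
    old = [1.0, -0.5, 2.0]     # weights after training on the old task
    fisher = [10.0, 0.1, 5.0]  # importance of each weight to the old task
    # Moving an important weight costs far more than moving an unimportant one
    print(ewc_penalty([1.5, -0.5, 2.0], old, fisher, lam=1.0))  # change weight 0
    print(ewc_penalty([1.0, 0.0, 2.0], old, fisher, lam=1.0))   # change weight 1
```

During training on the new task, this penalty is added to the new task's loss. Unimportant weights stay free to adapt, while important ones are held near their old values.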

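🦌 6. Evaluation and Benchmarking Challenges

✅6.1 Limitations of Evaluation Metrics

Traditional evaluation metrics often fail to reflect a model's true capabilities: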

```javascript
class ModelEvaluationChallenge {
  constructor() {
    this.evaluationMetrics = {
      // Traditional metrics
      'accuracy': { weight: 0.3, limitation: 'Does not reflect generation quality' },
      'perplexity': { weight: 0.2, limitation: 'Measures next-token prediction, not task quality' },
      'bleu': { weight: 0.2, limitation: 'Cannot assess creativity' },
      'rouge': { weight: 0.15, limitation: 'Designed mainly for summarization' },
      // Emerging metrics
      'factuality': { weight: 0.1, limitation: 'Hard to evaluate automatically' },
      'coherence': { weight: 0.05, limitation: 'Highly subjective' }
    };
  }

  /**
   * Map a raw metric value to [0, 1], where higher is better.
   * Perplexity is unbounded and lower-is-better, so it is transformed with
   * 1 / (1 + ln(ppl)); this particular mapping is an illustrative choice.
   */
  normalizeMetric(metric, value) {
    if (metric === 'perplexity') {
      return 1 / (1 + Math.log(Math.max(value, 1)));
    }
    return value;
  }

  /**
   * Combine automatic metrics with human evaluation.
   */
  comprehensiveEvaluation(traditionalScores, humanEvaluation) {
    // Weighted average over the metrics actually provided
    let weightedSum = 0;
    let weightTotal = 0;
    for (const [metric, config] of Object.entries(this.evaluationMetrics)) {
      if (traditionalScores[metric] !== undefined) {
        weightedSum += this.normalizeMetric(metric, traditionalScores[metric]) * config.weight;
        weightTotal += config.weight;
      }
    }
    const traditionalScore = weightTotal > 0 ? weightedSum / weightTotal : 0;

    // Blend in human evaluation
    const humanWeight = 0.4;
    const finalScore = traditionalScore * (1 - humanWeight) +
      humanEvaluation.overall * humanWeight;

    return {
      traditionalScore,
      humanScore: humanEvaluation.overall,
      finalScore,
      reliability: this.calculateReliability(traditionalScore, humanEvaluation.overall)
    };
  }

  calculateReliability(autoScore, humanScore) {
    // Agreement between automatic and human evaluation
    const difference = Math.abs(autoScore - humanScore);
    if (difference < 0.1) return 'HIGH';
    if (difference < 0.3) return 'MEDIUM';
    return 'LOW';
  }

  suggestImprovementAreas(evaluationResult) {
    const areas = [];
    if (evaluationResult.reliability === 'LOW') {
      areas.push('Improve automatic evaluation metrics');
      areas.push('Increase the share of human evaluation');
    }
    if (evaluationResult.finalScore < 0.7) {
      areas.push('Optimize the model architecture');
      areas.push('Improve training data quality');
    }
    return areas;
  }
}

// Evaluation example
const evaluator = new ModelEvaluationChallenge();
const traditionalScores = {
  'accuracy': 0.85,
  'perplexity': 15.2,
  'bleu': 0.28
};
const humanEvaluation = {
  overall: 0.78,
  factuality: 0.72,
  coherence: 0.85
};
const evaluation = evaluator.comprehensiveEvaluation(traditionalScores, humanEvaluation);
const improvementAreas = evaluator.suggestImprovementAreas(evaluation);
console.log('Comprehensive evaluation result:', evaluation);
console.log('Suggested improvements:', improvementAreas);
```
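Perplexity, one of the traditional metrics weighted above, is the exponential of the average negative log-likelihood per token: PPL = exp(-(1/N) Σ log p(tokenᵢ | context)). Lower is better. A perplexity of k means the model is, on average, as uncertain as a uniform choice among k tokens. This is why a raw value such as 15.2 cannot be averaged directly with accuracy or BLEU scores in [0, 1]. A minimal sketch follows, assuming the per-token probabilities have already been obtained from a model; the probabilities shown are hypothetical.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability assigned to each actual next token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


if __name__ == "__main__":
    # Hypothetical probabilities a model assigned to each token of a sentence
    confident = [0.9, 0.8, 0.95, 0.85]
    uncertain = [0.1, 0.05, 0.2, 0.1]
    print(f"{perplexity(confident):.2f} vs {perplexity(uncertain):.2f}")
```

Perplexity only scores how well the model predicts a reference text. A model can achieve low perplexity and still produce unhelpful or factually wrong answers, which is the limitation noted in the metric table.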

✅6.2 Representativeness of Benchmarks

Existing benchmarks may not fully represent real-world usage scenarios:

```java
import java.util.*;

public class BenchmarkRepresentationChallenge {
    private final Map<String, Double> benchmarkWeights = new HashMap<>();

    public BenchmarkRepresentationChallenge() {
        // Illustrative weights for each task category (they sum to 1.0)
        benchmarkWeights.put("questionAnswering", 0.25);
        benchmarkWeights.put("textSummarization", 0.15);
        benchmarkWeights.put("translation", 0.15);
        benchmarkWeights.put("codeGeneration", 0.20);
        benchmarkWeights.put("creativeWriting", 0.10);
        benchmarkWeights.put("reasoning", 0.15);
    }

    public static class BenchmarkAnalysis {
        public final double coverageScore;
        public final List<String> missingCategories;
        public final Map<String, Double> categoryScores;

        public BenchmarkAnalysis(double coverage, List<String> missing,
                                 Map<String, Double> scores) {
            this.coverageScore = coverage;
            this.missingCategories = missing;
            this.categoryScores = scores;
        }
    }

    /**
     * Analyze how representative the available benchmarks are
     * (weighted share of task categories that are covered).
     */
    public BenchmarkAnalysis analyzeBenchmarkCoverage(Set<String> availableBenchmarks) {
        List<String> missingCategories = new ArrayList<>();
        Map<String, Double> categoryScores = new HashMap<>();
        double totalWeight = 0.0;
        double coveredWeight = 0.0;

        for (String category : benchmarkWeights.keySet()) {
            double weight = benchmarkWeights.get(category);
            totalWeight += weight;
            if (availableBenchmarks.contains(category)) {
                coveredWeight += weight;
                categoryScores.put(category, 1.0);
            } else {
                missingCategories.add(category);
                categoryScores.put(category, 0.0);
            }
        }

        double coverageScore = totalWeight > 0 ? coveredWeight / totalWeight : 0.0;
        return new BenchmarkAnalysis(coverageScore, missingCategories, categoryScores);
    }

    /**
     * Suggest additional benchmarks for missing categories.
     */
    public List<String> suggestAdditionalBenchmarks(BenchmarkAnalysis analysis) {
        List<String> suggestions = new ArrayList<>();
        for (String missing : analysis.missingCategories) {
            double weight = benchmarkWeights.get(missing);
            if (weight > 0.1) { // only higher-importance categories (weight above 0.10)
                suggestions.add(getBenchmarkSuggestion(missing));
            }
        }
        return suggestions;
    }

    private String getBenchmarkSuggestion(String category) {
        Map<String, String> suggestions = Map.of(
            "creativeWriting", "Add poetry and story-writing benchmarks",
            "reasoning", "Add logical reasoning and math problem-solving tests",
            "codeGeneration", "Extend code-generation tests to more programming languages");
        return suggestions.getOrDefault(category, "Develop benchmarks for " + category);
    }
}
```
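Coverage only asks whether a category is tested at all. A complementary check compares the share of each task type in a benchmark suite with the share observed in real usage logs. It is sketched here as an assumption rather than an established benchmarking method. The comparison uses total variation distance, which is half the sum of absolute differences: 0 means identical task mixes and 1 means no overlap. Both distributions below are hypothetical.

```python
def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """0.5 * sum |p(x) - q(x)| over the union of categories (inputs normalized first)."""
    p_total, q_total = sum(p.values()), sum(q.values())
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0) / p_total - q.get(k, 0) / q_total) for k in keys)


if __name__ == "__main__":
    # Hypothetical task mix of a benchmark suite vs. observed user requests
    benchmark_mix = {"questionAnswering": 50, "reasoning": 30, "translation": 20}
    usage_mix = {"questionAnswering": 30, "codeGeneration": 40,
                 "creativeWriting": 20, "translation": 10}
    print(f"Total variation distance: {total_variation(benchmark_mix, usage_mix):.2f}")
```

A suite can cover several categories and still differ substantially from real usage if its weights are skewed. A distance of 0.6 in this toy example signals exactly that mismatch.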

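🚀 7. Future Directions and Solutions

✅7.1 Technical Optimization Directions

To address the challenges above, researchers are exploring a variety of solutions: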

```python
class FutureDevelopmentDirections:
    def __init__(self):
        self.directions = {
            'efficient_architectures': {
                'description': 'More efficient model architectures',
                'challenges_addressed': ['Compute resources', 'Inference efficiency'],
                'current_approaches': ['MoE', 'Sparse attention', 'Low-rank decomposition']
            },
            'better_training_methods': {
                'description': 'Improved training methods',
                'challenges_addressed': ['Data quality', 'Bias'],
                'current_approaches': ['Contrastive learning', 'Reinforcement learning', 'Human feedback']
            },
            'improved_evaluation': {
                'description': 'Better evaluation methods',
                'challenges_addressed': ['Limitations of evaluation', 'Benchmarking'],
                'current_approaches': ['Multi-dimensional evaluation', 'Dynamic benchmarks', 'Human evaluation']
            },
            'ethical_ai': {
                'description': 'Ethical AI development',
                'challenges_addressed': ['Security risks', 'Privacy protection'],
                'current_approaches': ['Explainable AI', 'Fairness constraints', 'Privacy-preserving techniques']
            }
        }

    def get_development_roadmap(self):
        """
        Generate a development roadmap.
        """
        roadmap = {}
        for direction, info in self.directions.items():
            roadmap[direction] = {
                'short_term': self.get_short_term_goals(info),
                'medium_term': self.get_medium_term_goals(info),
                'long_term': self.get_long_term_goals(info)
            }
        return roadmap

    def get_short_term_goals(self, direction_info):
        # Goals achievable within 1 year
        return [f"Prototype and refine: {direction_info['description']}"]

    def get_medium_term_goals(self, direction_info):
        # Goals for 1-3 years
        return [f"Achieve breakthroughs in: {direction_info['description']}"]

    def get_long_term_goals(self, direction_info):
        # Goals for 3-5 years or longer
        return [f"Industrial-scale adoption of: {direction_info['description']}"]


# Generate the development roadmap
development = FutureDevelopmentDirections()
roadmap = development.get_development_roadmap()
for direction, timeline in roadmap.items():
    print(f"{direction}:")
    for phase, goals in timeline.items():
        print(f"  {phase}: {goals}")
```
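Mixture-of-Experts (MoE), listed above among the efficient architectures, cuts per-token compute by routing each token to only a few expert sub-networks. A minimal sketch of top-k gating follows. It applies a softmax over the router logits, keeps the k largest gate values, renormalizes them, and combines only the selected experts' outputs. The logits and the toy "experts" are made-up stand-ins. Real MoE layers learn the router jointly with the experts and add load-balancing losses.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(logits: list[float], k: int) -> dict[int, float]:
    """Return {expert_index: weight} for the k highest-scoring experts, renormalized."""
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x: float, experts, logits: list[float], k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; other experts are never run."""
    gates = top_k_gate(logits, k)
    return sum(w * experts[i](x) for i, w in gates.items())


if __name__ == "__main__":
    # Four toy "experts"; only two are evaluated per input
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
    router_logits = [0.1, 2.0, 1.5, -1.0]  # hypothetical router scores for one token
    print(top_k_gate(router_logits, k=2))
    print(moe_forward(3.0, experts, router_logits, k=2))
```

Total parameters grow with the number of experts, but per-token compute grows only with k. This is how MoE models add capacity without a proportional increase in inference cost.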

✅7.2 Industry Application Strategy

At the industry level, technical, business, and ethical factors all need to be weighed together:

```javascript
class IndustryApplicationStrategy {
  constructor() {
    this.applicationDomains = [
      'customerService',
      'contentCreation',
      'education',
      'healthcare',
      'finance'
    ];
    this.riskMitigation = [
      'regulatoryCompliance',
      'userPrivacy',
      'biasMitigation',
      'securityMeasures'
    ];
  }

  /**
   * Develop a strategy for a given application domain.
   */
  developApplicationStrategy(domain) {
    return {
      domain: domain,
      implementationApproach: this.getImplementationApproach(domain),
      riskConsiderations: this.identifyRisks(domain),
      successMetrics: this.defineSuccessMetrics(domain)
    };
  }

  getImplementationApproach(domain) {
    const approaches = {
      'customerService': 'Phased rollout, starting with simple queries',
      'contentCreation': 'Human-AI collaboration with human review retained',
      'education': 'Teaching aid, not a replacement for teachers',
      'healthcare': 'Limited use under strict regulation',
      'finance': 'High security requirements; compliance first'
    };
    return approaches[domain] || 'Customized implementation strategy';
  }

  identifyRisks(domain) {
    const domainRisks = {
      'customerService': ['Misunderstanding user intent', 'Privacy leakage'],
      'contentCreation': ['Copyright issues', 'Misinformation'],
      'education': ['Misleading students', 'Over-reliance'],
      'healthcare': ['Diagnostic errors', 'Legal liability'],
      'finance': ['Fraud risk', 'Market manipulation']
    };
    return domainRisks[domain] || ['General risks'];
  }

  defineSuccessMetrics(domain) {
    const metrics = {
      'customerService': ['User satisfaction', 'Resolution rate', 'Response time'],
      'contentCreation': ['Content quality score', 'User engagement', 'Originality'],
      'education': ['Learning outcome improvement', 'Teacher satisfaction', 'Student acceptance'],
      'healthcare': ['Diagnostic accuracy', 'Patient satisfaction', 'Compliance'],
      'finance': ['Risk control', 'Compliance', 'Operational efficiency']
    };
    return metrics[domain] || ['Basic performance metrics'];
  }

  /**
   * Create a risk management plan.
   */
  createRiskManagementPlan(domain) {
    const risks = this.identifyRisks(domain);
    const mitigationStrategies = risks.map(risk => this.getMitigationStrategy(risk));
    return {
      identifiedRisks: risks,
      mitigationStrategies: mitigationStrategies,
      monitoringPlan: this.createMonitoringPlan(domain)
    };
  }

  getMitigationStrategy(risk) {
    const strategies = {
      'Misunderstanding user intent': 'Improve context understanding and add clarification prompts',
      'Privacy leakage': 'End-to-end encryption and data minimization',
      'Copyright issues': 'Track content provenance and licensing',
      'Misinformation': 'Integrate fact-checking and credibility scoring',
      'Misleading students': 'Keep AI in an assisting role with the teacher in charge',
      'Diagnostic errors': 'Use only as an aid; physicians make the final decision',
      'Legal liability': 'Define responsibility boundaries and disclaimers',
      'Fraud risk': 'Multi-factor verification and anomaly detection',
      'Market manipulation': 'Trade monitoring and compliance review'
    };
    return strategies[risk] || 'Generic risk mitigation measures';
  }

  createMonitoringPlan(domain) {
    return {
      frequency: 'Real-time monitoring + periodic audits',
      keyIndicators: this.defineSuccessMetrics(domain),
      reporting: 'Regular reports to management and regulators',
      incidentResponse: 'Rapid response and remediation process'
    };
  }
}

// Application strategy example
const strategy = new IndustryApplicationStrategy();
const customerServiceStrategy = strategy.developApplicationStrategy('customerService');
const riskManagement = strategy.createRiskManagementPlan('customerService');
console.log('Customer service application strategy:', customerServiceStrategy);
console.log('Risk management plan:', riskManagement);
```

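📚 Conclusion

✅1. Challenging: As a major breakthrough in artificial intelligence, large language models have demonstrated impressive capabilities. As analyzed in detail in this article, however, these models face serious challenges across technical, ethical, security, and application dimensions.

✅2. Unpredictable: The challenges range from the exponential growth in compute and the enormous energy cost of training, through the inheritance and amplification of data bias and the unpredictability of model behavior, to security risks and privacy protection. Each of them calls for a rigorous, scientific approach.

✅3. Worth the wait: None of these challenges is insurmountable. Through technical innovation, interdisciplinary collaboration, the establishment of ethical norms, and joint effort across industry, there is good reason to believe LLMs will become more efficient, reliable, and responsible.

✅4. Dual character: For practitioners, understanding these challenges helps them apply existing technology more effectively and can guide future research. For ordinary users, knowing these limitations supports a more rational view of AI capabilities and helps avoid blind trust or over-reliance.

✅5. Integration: Ultimately, LLM development must strike a balance between technical progress and social responsibility, so that this powerful technology genuinely benefits society rather than becoming a new source of problems. This requires the participation of academia, industry, policymakers, and society as a whole.

⛄ Lao Cao believes that as the technology continues to evolve, more innovative solutions will emerge, making large language models a truly powerful tool for advancing human knowledge and social development.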
