Fourier Transform / Fourier Neural Operator

Based on the paper FOURIER NEURAL OPERATOR FOR PARAMETRIC PARTIAL DIFFERENTIAL EQUATIONS

FOURIER NEURAL OPERATOR FOR PARAMETRIC PARTIAL DIFFERENTIAL EQUATIONS

Peng Jian

1. Abstract

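Neural operators learn mappings between function spaces.

The paper formulates a new neural operator by parameterizing the integral kernel directly in Fourier (frequency) space.

$f:\mathbb{R}\to\mathbb{R}$, $\mathcal{F}:f\mapsto g$, $g:k\ (\text{frequency})\to\mathbb{C}$, i.e. the Fourier transform $\mathcal{F}$ maps a real-valued function $f$ of space to a complex-valued function $g$ of the frequency $k$.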

2. Introduction

There are two mainstream neural-network-based approaches for PDEs: finite-dimensional operators and Neural-FEM.

In the proposed method, the integral operator is restricted to a convolution and instantiated through a linear transformation in the Fourier domain.

The proposed framework can approximate complex operators arising in PDEs that are highly non-linear, with high-frequency modes and slow energy decay.

Advantages:

Neural operators learn an entire family of PDEs, in contrast to classical methods, which solve one instance of the equation.

Up to three orders of magnitude faster than traditional PDE solvers.

Superior accuracy compared to previous learning-based solvers at a fixed resolution.

3. Learning Operators

3.1 Notation

Bounded domain: $D\subset\mathbb{R}^d$

Separable Banach spaces of functions: $\mathcal{A}=\mathcal{A}(D;\mathbb{R}^{d_a})$ and $\mathcal{U}=\mathcal{U}(D;\mathbb{R}^{d_u})$

Observed function pairs: $\{a_j,u_j\}_{j=1}^N$, where $a_j\sim\mu$ is an i.i.d. sequence ($\mu$ a probability measure supported on $\mathcal{A}$)

Goal: learn a mapping between two infinite-dimensional spaces from a finite collection of observed input-output pairs.

Non-linear map: $G^\dagger:\mathcal{A}\to\mathcal{U}$, with $u_j=G^\dagger(a_j)$

We aim to build an approximation of $G^\dagger$ by constructing a parametric map:

Parametric map: $G:\mathcal{A}\times\Theta\to\mathcal{U}$, or equivalently, $G_\theta:\mathcal{A}\to\mathcal{U}$, $\theta\in\Theta$

Cost function: $C:\mathcal{U}\times\mathcal{U}\to\mathbb{R}$

We seek a minimizer of the problem

$$\min_{\theta\in\Theta}\mathbb{E}_{a\sim\mu}\big[C\big(G(a,\theta),G^\dagger(a)\big)\big]$$
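As a rough illustration of this objective, the expectation over $\mu$ can be estimated by an average over the $N$ observed pairs. The sketch below assumes a relative $L^2$ cost on a shared grid and a toy linear "operator"; `relative_l2`, `empirical_risk` and the data are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def relative_l2(pred, target):
    """Assumed cost C(pred, target) = ||pred - target|| / ||target|| on a shared grid."""
    return np.linalg.norm(pred - target) / np.linalg.norm(target)

def empirical_risk(model, pairs):
    """Monte Carlo estimate of E_{a~mu}[C(G(a, theta), G_dagger(a))] from N observed pairs."""
    return float(np.mean([relative_l2(model(a), u) for a, u in pairs]))

# Toy data (hypothetical): 8 inputs a_j ~ N(0, I) on 64 grid points, true operator G_dagger(a) = 2a
rng = np.random.default_rng(0)
pairs = [(a, 2.0 * a) for a in rng.standard_normal((8, 64))]

theta = 1.5
risk = empirical_risk(lambda a: theta * a, pairs)   # G_theta(a) = theta * a
print(risk)   # approximately 0.25, i.e. |theta - 2| / 2
```

Training would then adjust $\theta$ (here a single scalar) to drive this estimate down.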

3.2 Discretization

Since our data $a_j, u_j$ are, in general, functions, to work with them numerically we assume access only to point-wise evaluations.

Let $D_j=\{x_1,\ldots,x_n\}\subset D$ be an $n$-point discretization of the domain $D$, so that

$$a_j|_{D_j}\in\mathbb{R}^{n\times d_a},\qquad u_j|_{D_j}\in\mathbb{R}^{n\times d_u}.$$

To be discretization-invariant, the neural operator must be able to produce an answer $u(x)$ for any $x\in D$, potentially $x\notin D_j$.

4. Neural Operator

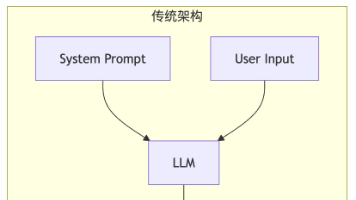

The essence of a neural operator: an iterative architecture

$$v_0\mapsto v_1\mapsto\ldots\mapsto v_T$$

where $v_j$ for $j=0,1,\ldots,T-1$ is a sequence of functions, each taking values in $\mathbb{R}^{d_v}$.

Lifting: the input $a(x)$ is first lifted to a higher-dimensional representation $v_0(x)=P(a(x))$, where $P$ is a (shallow) fully connected neural network applied pointwise.

Projection: the output is $u(x)=Q(v_T(x))$, where $Q:\mathbb{R}^{d_v}\to\mathbb{R}^{d_u}$ is again a local (pointwise) transformation.
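Because $P$ and $Q$ act on one point $x$ at a time, on a grid they reduce to the same small map applied to every row. A minimal NumPy sketch, assuming single affine layers with random hypothetical weights (`W_P`, `b_P`, `W_Q`, `b_Q`), which is simpler than the shallow networks used in the paper; the Fourier layers between them are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d_a, d_v, d_u = 128, 1, 16, 1       # hypothetical sizes

# Hypothetical weights: P and Q are single affine layers here (the paper uses shallow FC networks)
W_P, b_P = rng.standard_normal((d_a, d_v)), rng.standard_normal(d_v)
W_Q, b_Q = rng.standard_normal((d_v, d_u)), rng.standard_normal(d_u)

def lift(a):
    """P: R^{d_a} -> R^{d_v}, applied independently at every grid point; a has shape (n, d_a)."""
    return a @ W_P + b_P

def project(v):
    """Q: R^{d_v} -> R^{d_u}, applied independently at every grid point; v has shape (n, d_v)."""
    return v @ W_Q + b_Q

a = rng.standard_normal((n, d_a))      # a(x) sampled on n points
v0 = lift(a)
u = project(v0)                        # the iterative layers v_0 -> ... -> v_T are skipped here
print(v0.shape, u.shape)               # (128, 16) (128, 1)
```

Since each row is processed on its own, lifting a subset of points gives exactly the corresponding rows of the full result.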

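The power of the neural operator lies in combining linear, global integral operators (implemented via the Fourier transform) with non-linear, local activation functions, in the same way that standard neural networks approximate highly non-linear functions by combining linear multiplications with non-linear activations.

The iterative update $v_t\mapsto v_{t+1}$:

$$v_{t+1}(x)=\sigma\Big(Wv_t(x)+\int_D\kappa\big(x,y,a(x),a(y);\phi\big)v_t(y)\,\mathrm{d}y\Big)$$

where $W:\mathbb{R}^{d_v}\to\mathbb{R}^{d_v}$ is a linear transformation, $\sigma$ is a non-linear activation function applied component-wise, and $\kappa(\cdot;\phi)$ is a kernel parameterized by $\phi$. The integral term is the kernel integral operator $\mathcal{K}(a;\phi)$ applied to $v_t$.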
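To make the update concrete, here is a minimal NumPy sketch of one step on a uniform grid of $[0,1)$, with the integral replaced by a Riemann sum $\frac1n\sum_j\kappa(x_i,y_j)v(y_j)$. The kernel $\kappa(x,y)=e^{-(x-y)^2/\ell}A$ is a hypothetical choice that ignores $a(x)$ and $a(y)$; `kernel_integral_layer`, `A`, `length` and `tanh` as $\sigma$ are all assumptions for illustration.

```python
import numpy as np

def kernel_integral_layer(v, x, W, A, length=0.01, act=np.tanh):
    """One update v_t -> v_{t+1} on a uniform grid x of [0, 1); v has shape (n, d_v).

    Hypothetical kernel: kappa(x, y) = exp(-(x - y)^2 / length) * A (a(x), a(y) ignored).
    """
    n = x.shape[0]
    k = np.exp(-((x[:, None] - x[None, :]) ** 2) / length)  # (n, n) scalar kernel values
    integral = (k @ v) @ A.T / n                            # row i: (1/n) sum_j k_ij A v_j
    return act(v @ W.T + integral)                          # pointwise nonlinearity

rng = np.random.default_rng(0)
n, d_v = 50, 3
x = np.arange(n) / n
v = rng.standard_normal((n, d_v))
W = rng.standard_normal((d_v, d_v))
A = rng.standard_normal((d_v, d_v))
v_next = kernel_integral_layer(v, x, W, A)
print(v_next.shape)  # (50, 3)
```

The dense $(n\times n)$ kernel matrix makes each step cost $O(n^2)$, which is the expense the Fourier parameterization in Section 5 avoids.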

This iteration is what the architecture figure at the start of this section depicts.

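5. Fourier Neural Operator

Further improvement: replace the kernel integral operator of the neural operator with a convolution operator, evaluated via the Fourier transform. Accordingly, the working domain changes: the kernel integral operator is written in terms of the spatial variable $x$, whereas the convolution is parameterized in terms of the frequency variable $k$.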

Fourier transform:

$$(\mathcal{F}f)_j(k)=\int_D f_j(x)\,e^{-2i\pi\langle x,k\rangle}\,\mathrm{d}x$$

Inverse:

$$(\mathcal{F}^{-1}f)_j(x)=\int_D f_j(k)\,e^{2i\pi\langle x,k\rangle}\,\mathrm{d}k$$

Modification: restrict the kernel to depend only on the difference of its arguments, $\kappa_\phi(x,y,a(x),a(y))=\kappa_\phi(x-y)$.

Then the kernel integral operator becomes a convolution: $(\mathcal{K}_\phi v_t)(x)=(\kappa_\phi*v_t)(x)$.

By the convolution theorem, $\mathcal{F}(\kappa_\phi*v_t)=\mathcal{F}(\kappa_\phi)\cdot\mathcal{F}(v_t)$, i.e. convolution in physical space becomes pointwise multiplication in Fourier space.

Therefore: $\kappa_\phi*v_t=\mathcal{F}^{-1}\mathcal{F}(\kappa_\phi*v_t)=\mathcal{F}^{-1}\big(\mathcal{F}(\kappa_\phi)\cdot\mathcal{F}(v_t)\big)$

Finally:

$$\big(\mathcal{K}(a;\phi)v_t\big)(x)=\mathcal{F}^{-1}\big(\mathcal{F}(\kappa_\phi)\cdot\mathcal{F}(v_t)\big)(x),\quad\forall x\in D.$$
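The discrete analogue of this identity can be checked numerically: on a periodic grid of $n$ points, circular convolution equals inverse DFT of the product of DFTs. A small NumPy sketch; the function names are hypothetical, and the grid-spacing factors that relate the discrete sums to the continuous integrals are left out.

```python
import numpy as np

def circular_conv_direct(kappa, v):
    """(kappa * v)[i] = sum_j kappa[(i - j) mod n] * v[j]: O(n^2) on a periodic grid."""
    n = len(v)
    return np.array([sum(kappa[(i - j) % n] * v[j] for j in range(n)) for i in range(n)])

def circular_conv_fft(kappa, v):
    """The same convolution computed as F^{-1}(F(kappa) . F(v)): O(n log n)."""
    return np.fft.ifft(np.fft.fft(kappa) * np.fft.fft(v)).real   # real inputs give a real result

rng = np.random.default_rng(0)
kappa, v = rng.standard_normal(32), rng.standard_normal(32)
print(np.allclose(circular_conv_direct(kappa, v), circular_conv_fft(kappa, v)))  # True
```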

We directly parameterize $\kappa_\phi$ in Fourier space, letting $R_\phi=\mathcal{F}(\kappa_\phi)$.

Hence:

$$v_{t+1}(x)=\sigma\Big(Wv_t(x)+\mathcal{F}^{-1}\big(R_\phi\cdot(\mathcal{F}v_t)\big)(x)\Big)$$
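A minimal 1-D NumPy sketch of one such Fourier layer, assuming a uniform periodic grid, a real-valued $v_t$ of shape `(n, d_v)`, a complex weight tensor `R` of shape `(modes, d_v, d_v)` for the kept low modes, and `tanh` as $\sigma$. `fourier_layer` and its arguments are hypothetical names, not the paper's implementation.

```python
import numpy as np

def fourier_layer(v, R, W, modes, act=np.tanh):
    """v_{t+1} = act(W v_t + F^{-1}(R . F v_t)) on a uniform periodic 1-D grid.

    v: (n, d_v) real, R: (modes, d_v, d_v) complex, W: (d_v, d_v) real.
    """
    n = v.shape[0]
    v_hat = np.fft.rfft(v, axis=0)                  # non-negative modes only: (n//2 + 1, d_v)
    out_hat = np.zeros_like(v_hat)                  # higher modes stay zero (truncation)
    out_hat[:modes] = np.einsum("klj,kj->kl", R, v_hat[:modes])  # R(k) @ v_hat(k) per mode
    spectral = np.fft.irfft(out_hat, n=n, axis=0)   # back to the n grid points, real-valued
    return act(v @ W.T + spectral)

rng = np.random.default_rng(1)
n, d_v, modes = 64, 4, 8
v = rng.standard_normal((n, d_v))
R = rng.standard_normal((modes, d_v, d_v)) + 1j * rng.standard_normal((modes, d_v, d_v))
W = rng.standard_normal((d_v, d_v))
out = fourier_layer(v, R, W, modes)
print(out.shape)  # (64, 4)
```

Using `rfft`/`irfft` keeps only non-negative frequencies and lets the inverse transform fill in the negative ones by conjugate symmetry, so the kernel term comes back real.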

In Fourier space the variable is the frequency mode $k$.

Therefore $(\mathcal{F}v_t)(k)\in\mathbb{C}^{d_v}$ and $R_\phi(k)\in\mathbb{C}^{d_v\times d_v}$ (cf. the Abstract).

Since $\kappa_\phi$ is assumed to be periodic, it admits a Fourier series expansion, so we may work with the discrete modes $k\in\mathbb{Z}^d$.

Truncating the Fourier series:

We pick a finite-dimensional parameterization by truncating the Fourier series at a maximal number of modes

$$k_{\max}=|Z_{k_{\max}}|:=\big|\{k\in\mathbb{Z}^d:|k_j|\le k_{\max,j},\ j=1,\ldots,d\}\big|,\qquad Z_{k_{\max}}:=\{k\in\mathbb{Z}^d:|k_j|\le k_{\max,j},\ j=1,\ldots,d\}.$$
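For example, with $d=2$ and per-dimension limits $k_{\max,1}=2$, $k_{\max,2}=3$, the set $Z_{k_{\max}}$ has $(2\cdot2+1)(2\cdot3+1)=35$ modes. A small sketch that enumerates the set and counts the real parameters of the resulting complex tensor; the sizes and `truncated_modes` are hypothetical.

```python
import itertools

def truncated_modes(k_max_per_dim):
    """Enumerate Z_kmax = {k in Z^d : |k_j| <= k_max_per_dim[j] for every j}."""
    ranges = [range(-m, m + 1) for m in k_max_per_dim]
    return list(itertools.product(*ranges))

Z = truncated_modes((2, 3))             # d = 2, k_max,1 = 2, k_max,2 = 3
k_max = len(Z)                          # (2*2 + 1) * (2*3 + 1) = 35
d_v = 32                                # hypothetical channel width
n_real_params = 2 * k_max * d_v * d_v   # complex entries: real + imaginary parts
print(k_max, n_real_params)             # 35 71680
```

The count depends only on the chosen mode limits and $d_v$, not on the grid resolution.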

Since $R_\phi(k)\in\mathbb{C}^{d_v\times d_v}$, we have $R_\phi(Z_{k_{\max}})\in\mathbb{C}^{k_{\max}\times d_v\times d_v}$. We therefore parameterize $R_\phi$ directly as a complex-valued $(k_{\max}\times d_v\times d_v)$-tensor, and the subscript $\phi$ can be dropped.

Summary: periodic function → Fourier expansion → represent the frequency components with discrete frequency modes → choose a maximal number of modes → truncate the Fourier series → approximation of the periodic function → $R_\phi$ → complex-valued tensor (the parameterization).

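Conjugate symmetry: since $\kappa$ is real-valued, its Fourier transform $R_\phi$ is conjugate symmetric, $R_\phi(-k)=\overline{R_\phi(k)}$: a negative-frequency mode has the same amplitude as the corresponding positive-frequency mode and the opposite phase. In practice this saves computation: only the Fourier coefficients of the non-negative frequency modes need to be computed, and those of the negative modes follow from conjugate symmetry.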
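A quick NumPy check of this property on a discretized real kernel (random values, purely illustrative): the DFT satisfies $R[-k]=\overline{R[k]}$, `np.fft.rfft` stores only the $n/2+1$ non-negative modes, and `np.fft.irfft` rebuilds the real signal from them.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 16
kappa = rng.standard_normal(n)            # a real-valued (discretized) kernel
R = np.fft.fft(kappa)                     # all n modes

# Conjugate symmetry: R[-k] == conj(R[k]), where index -k means (n - k) mod n
k = np.arange(n)
assert np.allclose(R[(-k) % n], np.conj(R))

# rfft keeps only the non-negative modes 0..n//2 ...
R_half = np.fft.rfft(kappa)
print(R_half.shape)  # (9,)

# ... and irfft recovers the real kernel from them
assert np.allclose(np.fft.irfft(R_half, n=n), kappa)
```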

5.1 The discrete case and the FFT

Suppose the domain $D$ is discretized with $n$ points. Then $v_t\in\mathbb{R}^{n\times d_v}$ and $\mathcal{F}(v_t)\in\mathbb{C}^{n\times d_v}$. Since we convolve $v_t$ with a function that has only $k_{\max}$ Fourier modes, we can simply truncate the higher modes to obtain $\mathcal{F}(v_t)\in\mathbb{C}^{k_{\max}\times d_v}$.

Multiplying by the weight tensor $R$ then gives

$$\big(R\cdot(\mathcal{F}v_t)\big)_{k,l}=\sum_{j=1}^{d_v}R_{k,l,j}(\mathcal{F}v_t)_{k,j},\qquad k=1,\ldots,k_{\max},\quad l=1,\ldots,d_v,$$

a $k_{\max}\times d_v$ matrix in which each mode $k$ is mixed across channels by its own $d_v\times d_v$ matrix. The inverse transform maps this matrix back to the $n$ grid points, and each row of the resulting $n\times d_v$ array (one point $x$) is the kernel term that enters the activation in $v_{t+1}(x)=\sigma\big(Wv_t(x)+(\mathcal{K}(a;\phi)v_t)(x)\big)$.
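The mode-wise product above is a single tensor contraction. A small NumPy sketch with random (hypothetical) `R` and truncated `Fv`, comparing `np.einsum` against the explicit triple loop that mirrors the formula index by index.

```python
import numpy as np

rng = np.random.default_rng(0)
k_max, d_v = 5, 3
R = rng.standard_normal((k_max, d_v, d_v)) + 1j * rng.standard_normal((k_max, d_v, d_v))
Fv = rng.standard_normal((k_max, d_v)) + 1j * rng.standard_normal((k_max, d_v))  # truncated F(v_t)

# (R . F v_t)_{k,l} = sum_j R_{k,l,j} (F v_t)_{k,j}: one d_v x d_v matrix-vector product per mode k
out_einsum = np.einsum("klj,kj->kl", R, Fv)

# The same contraction written as explicit loops, for comparison
out_loop = np.zeros((k_max, d_v), dtype=complex)
for k in range(k_max):
    for l in range(d_v):
        for j in range(d_v):
            out_loop[k, l] += R[k, l, j] * Fv[k, j]

print(out_einsum.shape)  # (5, 3)
```

An equivalent batched form is `(R @ Fv[..., None])[..., 0]`, which treats the mode index as a batch dimension.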

When the discretization is uniform with resolution $s_1\times\cdots\times s_d=n$, where $d=\dim(D)$, $\mathcal{F}$ can be replaced by the fast Fourier transform (FFT).

Fast Fourier transform: for $f\in\mathbb{R}^{n\times d_v}$, $k=(k_1,\ldots,k_d)\in\mathbb{Z}_{s_1}\times\cdots\times\mathbb{Z}_{s_d}$ and $x=(x_1,\ldots,x_d)\in D$, denote the FFT by $\hat{\mathcal{F}}$ and its inverse by $\hat{\mathcal{F}}^{-1}$:

$$
\begin{aligned}
(\hat{\mathcal{F}}f)_l(k)&=\sum_{x_1=0}^{s_1-1}\cdots\sum_{x_d=0}^{s_d-1}f_l(x_1,\ldots,x_d)\,e^{-2i\pi\sum_{j=1}^d\frac{x_jk_j}{s_j}},\\
(\hat{\mathcal{F}}^{-1}f)_l(x)&=\frac{1}{s_1\cdots s_d}\sum_{k_1=0}^{s_1-1}\cdots\sum_{k_d=0}^{s_d-1}f_l(k_1,\ldots,k_d)\,e^{2i\pi\sum_{j=1}^d\frac{x_jk_j}{s_j}}
\end{aligned}
$$

for $l=1,\ldots,d_v$.
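These sums can be written out literally for $d=2$ and compared with NumPy's FFT, which uses the same sign convention (unnormalized forward, $1/(s_1s_2)$ on the inverse). A sketch for checking the formulas only; `dft2` is a hypothetical helper and costs far more than an FFT.

```python
import numpy as np

def dft2(f, inverse=False):
    """Literal 2-D DFT of f with shape (s1, s2, d_v), each channel l transformed separately.

    Forward: sum_x f_l(x) exp(-2 i pi sum_j x_j k_j / s_j)
    Inverse: (1 / (s1 s2)) sum_k f_l(k) exp(+2 i pi sum_j x_j k_j / s_j)
    """
    s1, s2 = f.shape[:2]
    sign = 1.0 if inverse else -1.0
    i1, i2 = np.arange(s1), np.arange(s2)
    E1 = np.exp(sign * 2j * np.pi * np.outer(i1, i1) / s1)  # E1[k1, x1]
    E2 = np.exp(sign * 2j * np.pi * np.outer(i2, i2) / s2)  # E2[k2, x2]
    out = np.einsum("pa,qb,abc->pqc", E1, E2, f)           # sum over both grid axes
    return out / (s1 * s2) if inverse else out

rng = np.random.default_rng(0)
f = rng.standard_normal((6, 8, 3))       # s1 = 6, s2 = 8, d_v = 3
F_direct = dft2(f)
print(np.allclose(F_direct, np.fft.fftn(f, axes=(0, 1))))   # True
print(np.allclose(dft2(F_direct, inverse=True), f))         # True
```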

In this case, $R$ is treated as an $(s_1\times\cdots\times s_d\times d_v\times d_v)$-tensor.
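Because $R$ lives on frequency modes rather than grid points, the same weights can be applied at different resolutions, which connects back to the discretization invariance of Section 3.2. A 1-D NumPy sketch, assuming a band-limited input (only modes below the truncation) and random hypothetical weights: the truncated spectral convolution on 32 and 64 points gives matching values at the shared points.

```python
import numpy as np

def spectral_conv_1d(v, R, modes):
    """Truncated spectral convolution F^{-1}(R . F v) on a uniform periodic grid; v: (n, d_v)."""
    n = v.shape[0]
    v_hat = np.fft.rfft(v, axis=0)
    out_hat = np.zeros_like(v_hat)
    out_hat[:modes] = np.einsum("klj,kj->kl", R, v_hat[:modes])
    return np.fft.irfft(out_hat, n=n, axis=0)   # the 1/n here cancels the n-scaling of rfft

rng = np.random.default_rng(0)
modes, d_v = 4, 2
R = rng.standard_normal((modes, d_v, d_v)) + 1j * rng.standard_normal((modes, d_v, d_v))

def sample(n):
    """Evaluate a band-limited 2-channel input on n uniform points of [0, 1)."""
    x = np.arange(n) / n
    return np.stack([np.sin(2 * np.pi * x), 0.5 * np.cos(4 * np.pi * x)], axis=1)

coarse = spectral_conv_1d(sample(32), R, modes)
fine = spectral_conv_1d(sample(64), R, modes)
# Same weights R at two resolutions: fine-grid values at the coarse points agree
print(np.allclose(coarse, fine[::2]))   # True
```

For inputs with energy above the kept modes the two results would still agree on the retained low-frequency part, but exact pointwise agreement as above relies on the band-limited assumption.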
