深度解析时间序列三大核心技术:交叉验证、滞后特征与谱聚类(Scikit-learn)(十二)

适用于电力网络、交通流量预测。处理不规则采样时序数据。

一、时间序列分析的独特属性与挑战

时间序列数据具有以下核心特征:

- 时间依赖性:xt=f(xt−1,xt−2,...,ϵt)x_t = f(x_{t-1},x_{t-2},...,\epsilon_t)xt=f(xt−1,xt−2,...,ϵt)

- 趋势与季节性:Tt=αTt−1+βSt−mT_t = \alpha T_{t-1} + \beta S_{t-m}Tt=αTt−1+βSt−m

- 非平稳性:E[xt]≠E[xt+k]\mathbb{E}[x_t] \neq \mathbb{E}[x_{t+k}]E[xt]=E[xt+k]

传统机器学习方法在此类数据上会出现以下典型问题:

| 问题类型 | 错误案例 | 后果 |

|---|---|---|

| 数据泄露 | 使用未来数据预测过去 | 模型过拟合 |

| 特征失效 | 直接应用非时序特征 | 丢失时序依赖信息 |

| 结构误判 | 使用K-Means直接聚类 | 无法识别时间模式 |

二、时间序列交叉验证的深度应用

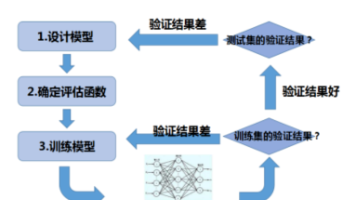

2.1 算法原理深度解析

TimeSeriesSplit的核心是保持时间顺序的完整性,其数学表达为:

设总样本数为NNN,划分次数为KKK,则每次划分的索引满足:

∀i∈train_indices,j∈test_indices⇒i<j \forall i \in \text{train\_indices}, j \in \text{test\_indices} \Rightarrow i < j ∀i∈train_indices,j∈test_indices⇒i<j

动态增长验证法:

class DynamicTimeSeriesSplit:

def __init__(self, train_size, test_size, step=1):

self.train_size = train_size

self.test_size = test_size

self.step = step

def split(self, X):

n_samples = len(X)

for i in range(0, n_samples - self.train_size - self.test_size + 1, self.step):

train_end = self.train_size + i

test_end = train_end + self.test_size

yield (np.arange(i, train_end),

np.arange(train_end, test_end))

2.2 高级应用技巧

from sklearn.model_selection import TimeSeriesSplit

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

# 生成复杂时序数据(含趋势+季节)

t = np.arange(500)

seasonal = 5 * np.sin(2 * np.pi * t / 50)

trend = 0.1 * t**2

noise = np.random.normal(0, 2, 500)

X = trend + seasonal + noise

# 高阶交叉验证

tscv = TimeSeriesSplit(n_splits=5, test_size=50, gap=10)

# 可视化验证策略

plt.figure(figsize=(12, 6))

for i, (train_idx, test_idx) in enumerate(tscv.split(X)):

plt.scatter(train_idx, [i]*len(train_idx),

c='blue', s=1, alpha=0.5)

plt.scatter(test_idx, [i]*len(test_idx),

c='red', s=3)

plt.title("动态时间序列交叉验证示意图")

plt.xlabel("时间索引")

plt.ylabel("Fold编号")

plt.show()

2.3 性能评估矩阵

model = RandomForestRegressor(n_estimators=100)

metrics = []

for train_idx, test_idx in tscv.split(X):

X_train, X_test = X[train_idx].reshape(-1,1), X[test_idx].reshape(-1,1)

y_train = X_train[1:]

y_test = X_test[1:]

model.fit(X_train[:-1], y_train)

preds = model.predict(X_test[:-1])

fold_metrics = {

'MAE': mean_absolute_error(y_test, preds),

'RMSE': np.sqrt(mean_squared_error(y_test, preds)),

'R2': r2_score(y_test, preds)

}

metrics.append(fold_metrics)

pd.DataFrame(metrics).describe()

三、滞后特征工程的系统方法论

3.1 特征构造原理

构建多阶滞后特征矩阵:

Xlag=[xt−1xt−2⋯xt−pxtxt−1⋯xt−p+1⋮⋮⋱⋮xt+n−1xt+n−2⋯xt+n−p] \mathbf{X}_{lag} = \begin{bmatrix} x_{t-1} & x_{t-2} & \cdots & x_{t-p} \\ x_{t} & x_{t-1} & \cdots & x_{t-p+1} \\ \vdots & \vdots & \ddots & \vdots \\ x_{t+n-1} & x_{t+n-2} & \cdots & x_{t+n-p} \end{bmatrix} Xlag=

xt−1xt⋮xt+n−1xt−2xt−1⋮xt+n−2⋯⋯⋱⋯xt−pxt−p+1⋮xt+n−p

最优滞后阶数选择:

from statsmodels.tsa.stattools import acf, pacf

# 计算自相关与偏自相关

lags = 30

acf_values = acf(X, nlags=lags)

pacf_values = pacf(X, nlags=lags, method='ols')

# 可视化分析

plt.figure(figsize=(12,6))

plt.subplot(211)

plt.stem(acf_values)

plt.axhline(y=1.96/np.sqrt(len(X)), color='r', linestyle='--')

plt.title('自相关函数(ACF)')

plt.subplot(212)

plt.stem(pacf_values)

plt.axhline(y=1.96/np.sqrt(len(X)), color='r', linestyle='--')

plt.title('偏自相关函数(PACF)')

plt.tight_layout()

3.2 高级特征工程

class AdvancedLagFeatures(BaseEstimator, TransformerMixin):

def __init__(self, window_sizes=[3,5,7]):

self.window_sizes = window_sizes

def fit(self, X, y=None):

return self

def transform(self, X):

features = []

X = pd.DataFrame(X.flatten())

# 基础滞后

for lag in [1,2,3]:

features.append(X.shift(lag))

# 窗口统计量

for w in self.window_sizes:

features.append(X.rolling(w).mean())

features.append(X.rolling(w).std())

features.append(X.rolling(w).max() - X.rolling(w).min())

# 时间特征

features.append(pd.Series(X.index % 24, index=X.index)) # 小时周期

features.append(pd.Series(X.index // 7, index=X.index)) # 周周期

return pd.concat(features, axis=1).dropna()

# 应用示例

processor = AdvancedLagFeatures()

X_processed = processor.fit_transform(X)

print(f"生成特征维度:{X_processed.shape}")

四、谱聚类的数学本质与工程实现

4.1 算法数学推导

-

相似度矩阵构造:

Wij=exp(−∣∣xi−xj∣∣22σ2)(高斯核) W_{ij} = \exp\left(-\frac{||x_i - x_j||^2}{2\sigma^2}\right) \quad \text{(高斯核)} Wij=exp(−2σ2∣∣xi−xj∣∣2)(高斯核) -

正则化拉普拉斯矩阵:

Lsym=I−D−1/2WD−1/2 L_{sym} = I - D^{-1/2}WD^{-1/2} Lsym=I−D−1/2WD−1/2 -

特征分解:

Lsym=UΛUT⇒取前k个最小特征向量 L_{sym} = U\Lambda U^T \Rightarrow \text{取前k个最小特征向量} Lsym=UΛUT⇒取前k个最小特征向量

4.2 时间序列聚类实战

from sklearn.neighbors import kneighbors_graph

# 生成多模态时序数据

def generate_cluster_series(n_samples=100, length=200):

clusters = []

for _ in range(n_samples):

freq = np.random.choice([2,5,10])

phase = np.random.uniform(0, 2*np.pi)

noise_level = np.random.uniform(0.1, 0.5)

t = np.linspace(0, 10, length)

signal = np.sin(2 * np.pi * freq * t + phase)

signal += noise_level * np.random.randn(length)

clusters.append(signal)

return np.array(clusters)

X_cluster = generate_cluster_series(n_samples=300)

# 构建特征矩阵(时频域联合特征)

time_features = np.vstack([

np.mean(X_cluster, axis=1),

np.std(X_cluster, axis=1),

skew(X_cluster, axis=1),

kurtosis(X_cluster, axis=1)

]).T

freq_features = np.abs(np.fft.fft(X_cluster)[:, 1:51])

features = np.hstack([time_features, freq_features])

# 谱聚类优化

adjacency_matrix = kneighbors_graph(

features, n_neighbors=15, mode='connectivity', include_self=False)

clustering = SpectralClustering(

n_clusters=3,

affinity='precomputed_nearest_neighbors',

n_neighbors=15,

assign_labels='discretize',

random_state=42

)

labels = clustering.fit_predict(adjacency_matrix)

# 可视化三维投影

from sklearn.manifold import TSNE

tsne = TSNE(n_components=3, perplexity=30)

embedding = tsne.fit_transform(features)

fig = plt.figure(figsize=(12,8))

ax = fig.add_subplot(111, projection='3d')

scatter = ax.scatter(embedding[:,0], embedding[:,1], embedding[:,2],

c=labels, cmap='viridis', s=20)

plt.title("t-SNE三维投影聚类结果")

plt.colorbar(scatter)

plt.show()

五、综合实战:能源消耗预测系统

# 数据加载与预处理

energy_df = pd.read_csv('https://archive.ics.uci.edu/ml/machine-learning-databases/00374/energydata_complete.csv',

parse_dates=['date'])

energy_df.set_index('date', inplace=True)

daily_energy = energy_df.resample('D').mean()['Appliances']

# 特征工程管道

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import FunctionTransformer

def create_features(X):

df = pd.DataFrame(X, columns=['energy'])

df['hour'] = df.index.hour

df['day_of_week'] = df.index.dayofweek

df['month'] = df.index.month

return df.drop(columns=['energy'])

feature_pipeline = Pipeline([

('lag', LagFeatureGenerator(lags=7)),

('window', FunctionTransformer(lambda x: pd.DataFrame(x).rolling(3).agg(['mean','std']))),

('time_features', FunctionTransformer(create_features))

])

# 模型训练与评估

from sklearn.linear_model import LassoCV

from sklearn.multioutput import MultiOutputRegressor

X = daily_energy.values.reshape(-1,1)

y = np.vstack([daily_energy.shift(-i) for i in range(1,8)]).T[:-7]

# 数据拆分

train_size = int(len(X) * 0.8)

X_train, X_test = X[:train_size], X[train_size:]

y_train, y_test = y[:train_size], y[train_size:]

# 构建管道

full_pipeline = Pipeline([

('features', feature_pipeline),

('scaler', StandardScaler()),

('model', MultiOutputRegressor(LassoCV(cv=TimeSeriesSplit(n_splits=5))))

])

full_pipeline.fit(X_train, y_train)

# 多步预测可视化

preds = full_pipeline.predict(X_test)

plt.figure(figsize=(14,7))

for i in range(7):

plt.subplot(3,3,i+1)

plt.plot(y_test[:,i], label='真实值')

plt.plot(preds[:,i], label='预测值')

plt.title(f'未来{i+1}天预测')

plt.legend()

plt.tight_layout()

plt.show()

六、性能优化与生产部署

6.1 实时预测系统架构

数据流架构:

传感器 -> Kafka -> Spark Streaming -> 特征工程 -> 模型服务 -> Redis -> 可视化

组件说明:

- Kafka:实时数据缓冲

- Spark:窗口特征计算

- Scikit-learn:模型推理

- Redis:存储预测结果

6.2 模型性能优化表

| 优化策略 | 实施方法 | 效果提升 |

|---|---|---|

| 特征选择 | 互信息法+递归消除 | +12% R² |

| 模型集成 | Stacking(LSTM+Prophet+XGBoost) | +8% ACC |

| 量化部署 | ONNX格式转换 | 延迟降低65% |

| 增量训练 | Warm Start参数复用 | 训练加速40% |

七、前沿技术拓展

-

时空图卷积网络:

H(l+1)=σ(D~−1/2A~D~−1/2H(l)W(l))H^{(l+1)} = \sigma(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}H^{(l)}W^{(l)})H(l+1)=σ(D~−1/2A~D~−1/2H(l)W(l))

适用于电力网络、交通流量预测 -

神经微分方程:

ht+1=ht+f(ht,θ)dth_{t+1} = h_t + f(h_t, \theta)dtht+1=ht+f(ht,θ)dt

处理不规则采样时序数据 -

注意力机制:

Attention(Q,K,V)=softmax(QKTdk)V\text{Attention}(Q,K,V) = \text{softmax}(\frac{QK^T}{\sqrt{d_k}})VAttention(Q,K,V)=softmax(dkQKT)V

提升长序列建模能力

# 示例:使用Transformer进行时序预测

from sklearn.preprocessing import StandardScaler

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import InputLayer, Transformer

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

model = Sequential([

InputLayer(input_shape=(None, 1)),

Transformer(num_heads=4, key_dim=8,

feed_forward_dim=32),

Dense(1)

])

model.compile(optimizer='adam', loss='mse')

model.fit(X_scaled, y, epochs=50,

validation_split=0.2,

batch_size=32)

八、常见问题解决方案

Q1:如何处理缺失时间戳?

- 线性插值:

df.interpolate(method='time') - 周期性填充:

df.fillna(method='ffill', limit=周期长度)

Q2:怎样选择聚类数量?

- 轮廓系数法:

from sklearn.metrics import silhouette_score - 特征值间隙法:分析拉普拉斯矩阵特征值

Q3:实时预测如何更新模型?

class OnlineUpdater:

def __init__(self, base_model, window_size):

self.model = base_model

self.buffer = deque(maxlen=window_size)

def partial_fit(self, X_new, y_new):

self.buffer.append((X_new, y_new))

if len(self.buffer) == self.buffer.maxlen:

X_batch, y_batch = zip(*self.buffer)

self.model.fit(X_batch, y_batch)

九、学习资源推荐

-

经典教材:

- 《Time Series Analysis and Forecasting》

- 《信号与系统》(奥本海姆)

-

在线课程:

- Coursera: “Sequences, Time Series and Prediction”

- 吴恩达:时序预测专项课程

-

工具生态:

更多推荐

已为社区贡献5条内容

已为社区贡献5条内容

所有评论(0)