MMDetection Installation and Usage Tutorial for Beginners (Endless Pitfalls)


Preface

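MMDetection is an open-source object detection project released by SenseTime and The Chinese University of Hong Kong. Built on PyTorch, it implements a large number of object detection algorithms, such as fast_rcnn, faster_rcnn and detr.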


I. Installing MMDetection

1. Installing torch + torchvision

First, install CUDA and the PyTorch framework, which MMDetection uses for GPU training and inference. On my RTX 3060 (12 GB) I installed CUDA 11.3 + torch 1.11.0 + torchvision 0.12.0.

For installing CUDA 11.3, please refer to other tutorials. Link: link

I recommend creating a new virtual environment for the installation. Open Anaconda Prompt:

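```
# Create a virtual environment
conda create -n <env_name> python=X.X
```

Using Python 3.9 as an example (use your own Python version):

```
conda create -n pytorch2 python=3.9
```

Activate the environment:

```
conda activate pytorch2
```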

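Next, download the torch 1.11.0 and torchvision 0.12.0 wheel (.whl) files from the following link:

Link: link

Make sure you pick the files that match your Python version and the Windows platform.

Scroll down to find the matching torchvision version: torch 1.11 corresponds to torchvision 0.12.0.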
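Because the wheel must match both your Python version and your platform, it can help to work out the exact file name to look for. The sketch below assumes the naming pattern used for PyTorch wheels, for example `torch-1.11.0+cu113-cp39-cp39-win_amd64.whl`, and CPython 3.8 or newer (older versions add an `m` suffix to the ABI tag). `wheel_name` is a hypothetical helper, not part of pip or PyTorch.

```python
import sys
import sysconfig


def wheel_name(package, version, py=None, platform=None):
    """Build the wheel file name to look for on the download page.

    py defaults to the running interpreter's (major, minor) version and
    platform defaults to sysconfig.get_platform(), e.g. 'win-amd64'.
    Assumes CPython >= 3.8, where the ABI tag equals the Python tag.
    """
    major, minor = py or sys.version_info[:2]
    # Wheel platform tags use underscores instead of '-' and '.'
    plat = (platform or sysconfig.get_platform()).replace("-", "_").replace(".", "_")
    tag = f"cp{major}{minor}"
    return f"{package}-{version}-{tag}-{tag}-{plat}.whl"


if __name__ == "__main__":
    print(wheel_name("torch", "1.11.0+cu113"))
    print(wheel_name("torchvision", "0.12.0+cu113"))
```

Run it with the interpreter of the activated environment, then look for the printed names on the download page.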

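Open the folder containing the two downloaded files and copy their full paths.

Go back to the virtual environment and install torch by running pip install followed by the path of the torch .whl file.

Install torchvision the same way.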

After installation, run pip list to check that both packages are listed:

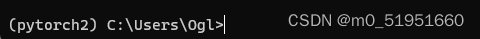

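Then test the torch installation (typically by checking `torch.cuda.is_available()`): if it prints True, torch has been installed successfully.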
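The check itself only appears as a screenshot here. A minimal sketch of such a check, assuming the usual `torch.cuda.is_available()` test, is shown below; `check_torch_install` is a hypothetical helper that also reports the installed versions and simply returns empty fields if torch is not installed in the active environment.

```python
import importlib.util


def check_torch_install():
    """Report torch/torchvision versions and whether CUDA is usable."""
    report = {"torch": None, "torchvision": None, "cuda_available": False}
    if importlib.util.find_spec("torch") is None:
        # torch is not installed in the active environment
        return report
    import torch

    report["torch"] = torch.__version__
    # True means PyTorch can see a CUDA-capable GPU
    report["cuda_available"] = bool(torch.cuda.is_available())
    if importlib.util.find_spec("torchvision") is not None:
        import torchvision

        report["torchvision"] = torchvision.__version__
    return report


if __name__ == "__main__":
    print(check_torch_install())
```

If `cuda_available` is False even though the GPU driver is installed, a frequent cause is that a CPU-only wheel was installed instead of a `+cu113` one.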

2. Installing MMDetection

The official installation guide is here:

https://mmdetection.readthedocs.io/zh_CN/latest/get_started.html

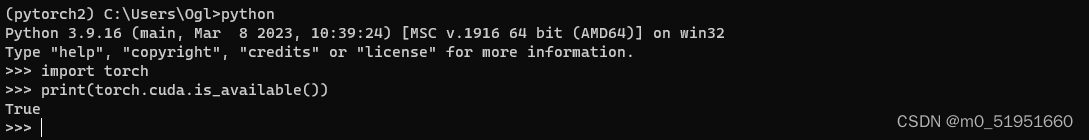

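GPU note: for RTX 30-series cards, installing CUDA 11.x is recommended.

The official docs give the basic installation steps, as shown in the figure.

In step 1, I recommend option a (installing from source). If you use option b and install mmdet as a third-party package, you may later find that parts of the project cannot be edited.

The official docs also describe installing with pip; either method works. Here we again run pip install inside our virtual environment: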

```
# Install mmengine
pip install mmengine

# Install MMCV (example for CUDA 11.3 + torch 1.11)
pip install "mmcv>=2.0.0" -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.11.0/index.html

# Install mmdet
git clone https://github.com/open-mmlab/mmdetection.git
cd mmdetection
pip install -v -e .
# "-v" means verbose, i.e. more output
# "-e" installs the project in editable mode, so any local code changes take effect without reinstalling
```

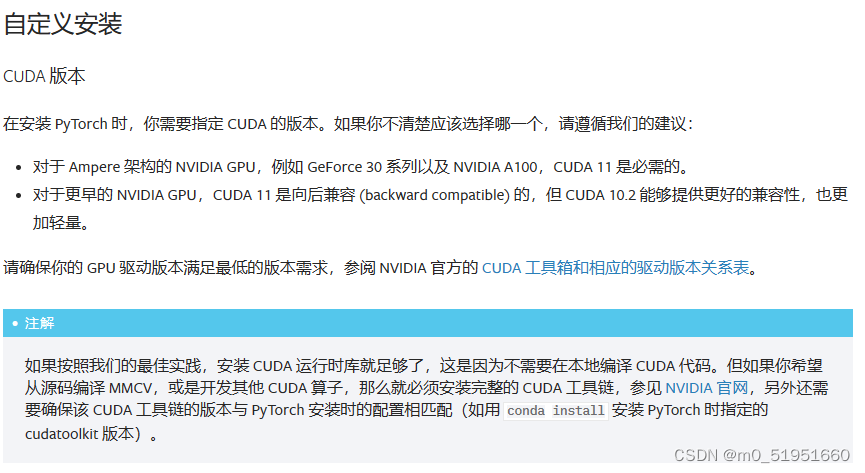

After installation, pip list should show the three libraries mmengine, mmcv and mmdet.
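To check the same thing from Python (for example in PyCharm's console), the sketch below reads the installed versions with the standard library's `importlib.metadata`. `installed_versions` is a hypothetical helper, and it assumes the distribution names are exactly `mmengine`, `mmcv` and `mmdet`.

```python
from importlib.metadata import PackageNotFoundError, version

REQUIRED = ("mmengine", "mmcv", "mmdet")


def installed_versions(packages=REQUIRED):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:10s} {ver or 'NOT INSTALLED'}")
```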

If you later find that some basic libraries are missing when running the project, just install them with pip install. For example, to install cv2 (OpenCV):

```
pip install opencv-python
```

II. Using MMDetection

1. Downloading the project files

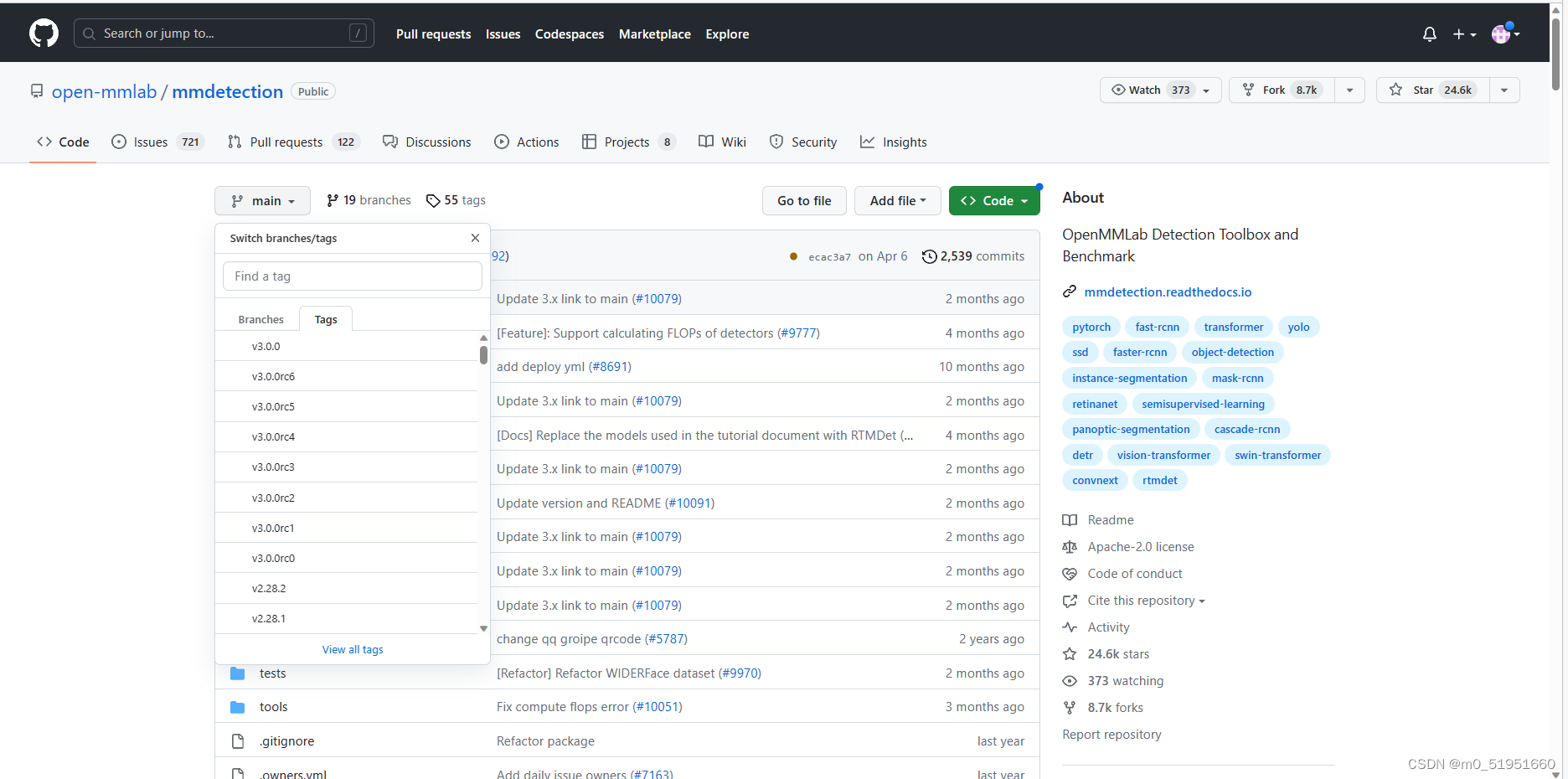

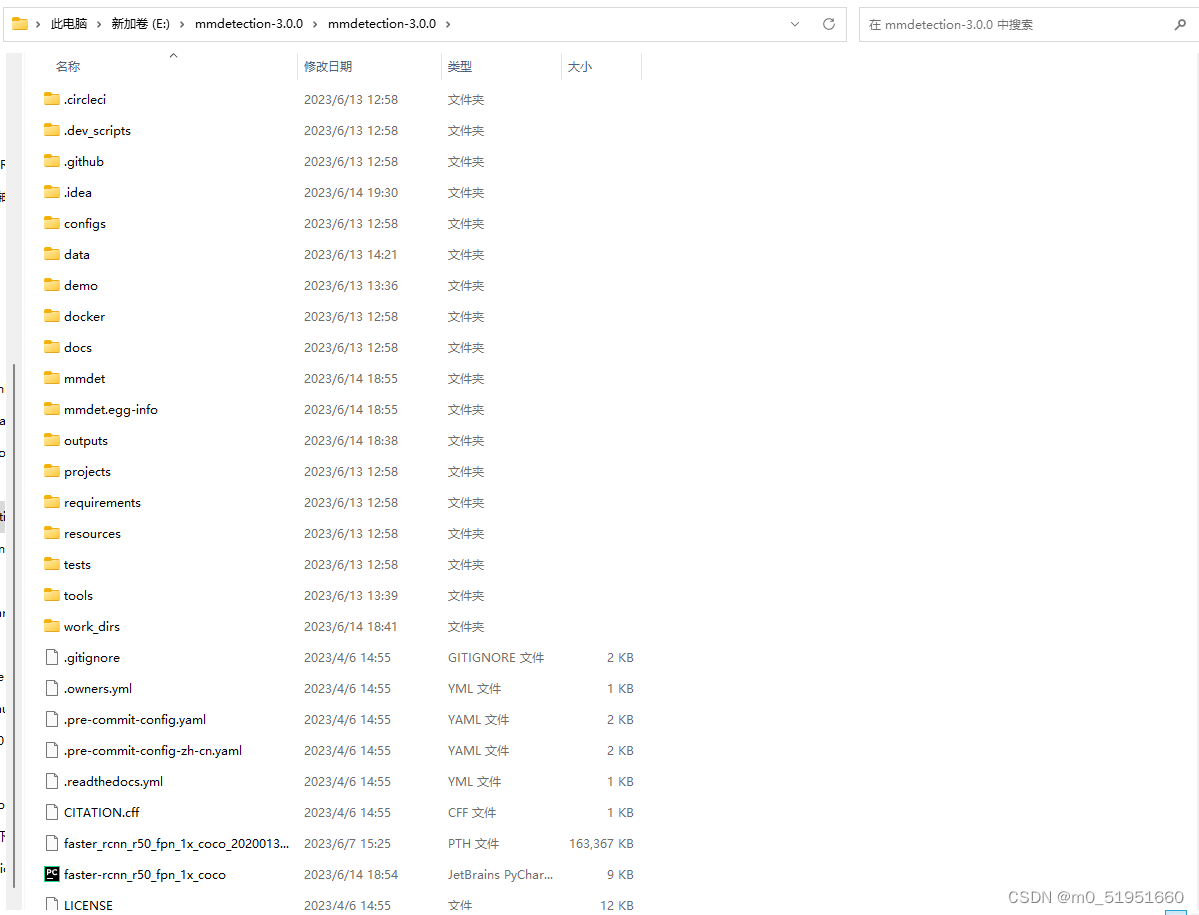

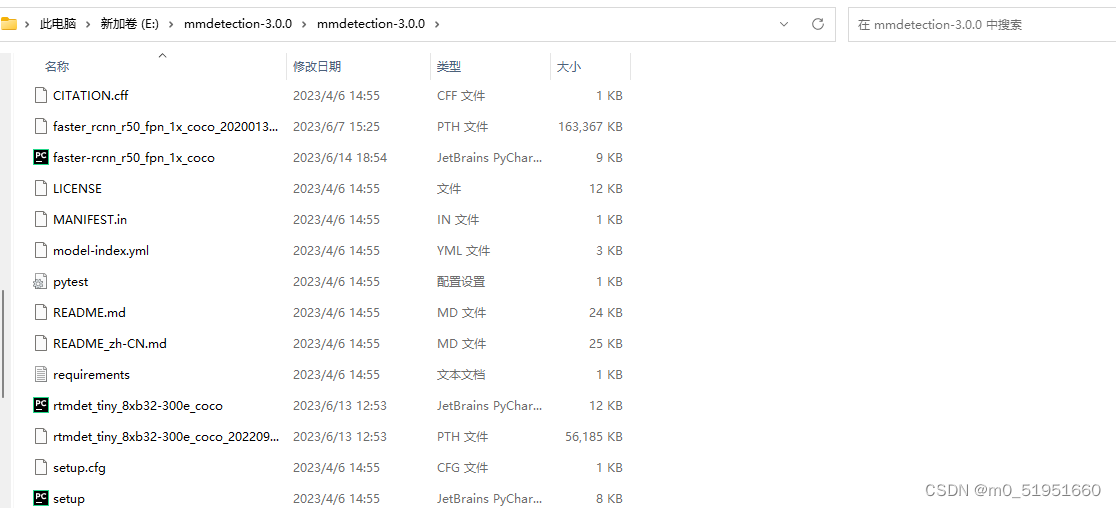

I downloaded the MMDetection 3.0.0 project files, as shown in the figure.

Under Tags, select the v3.0.0 version; the link is:

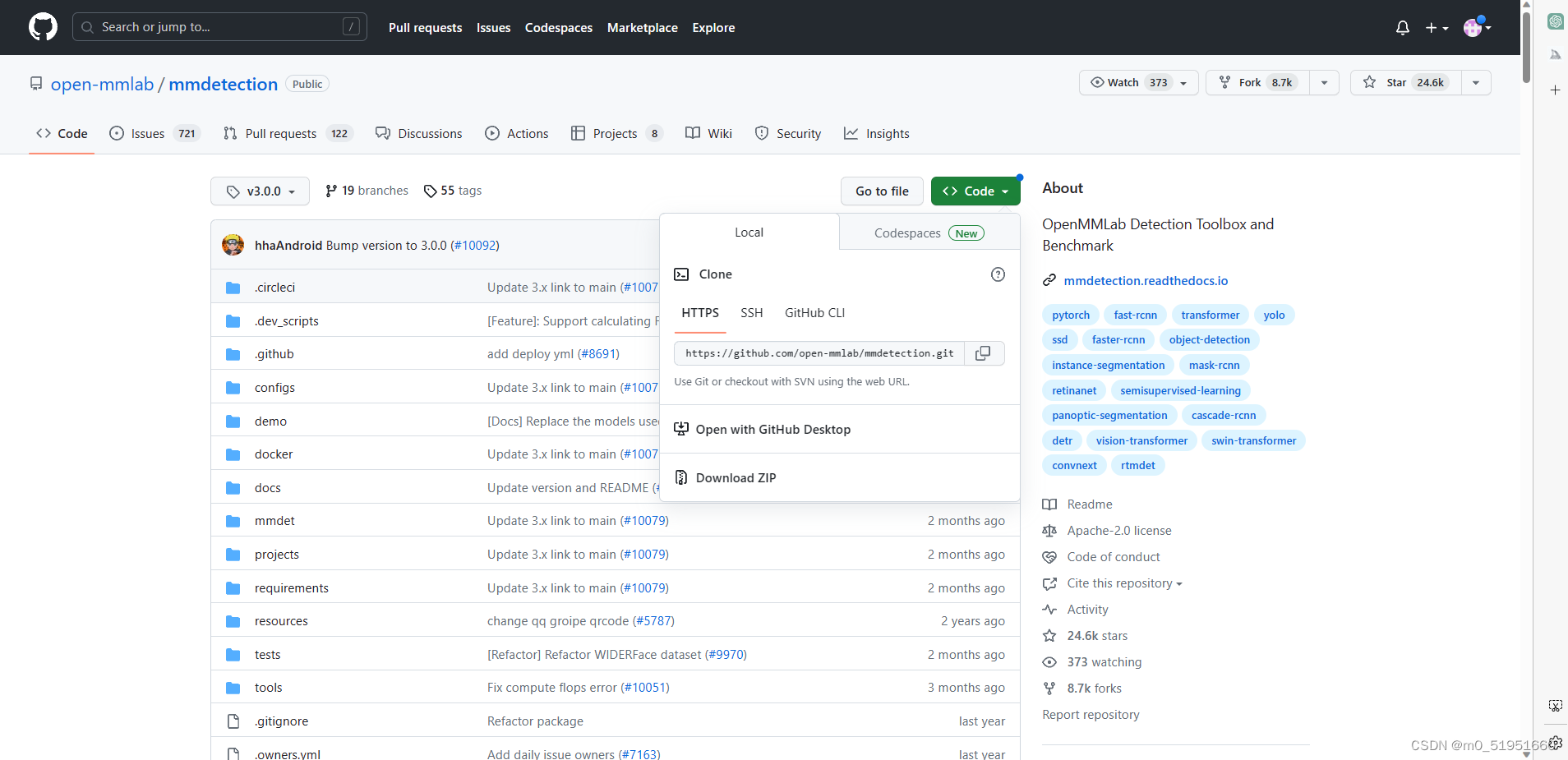

https://github.com/open-mmlab/mmdetection/tree/v3.0.0

Then click Download ZIP to download it locally.

2. Usage tutorial

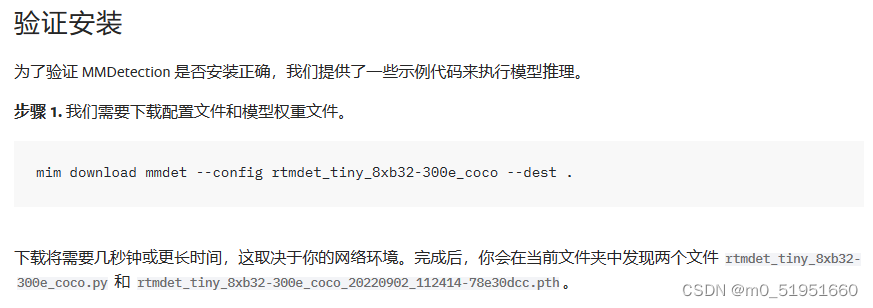

2.1 Verifying the installation

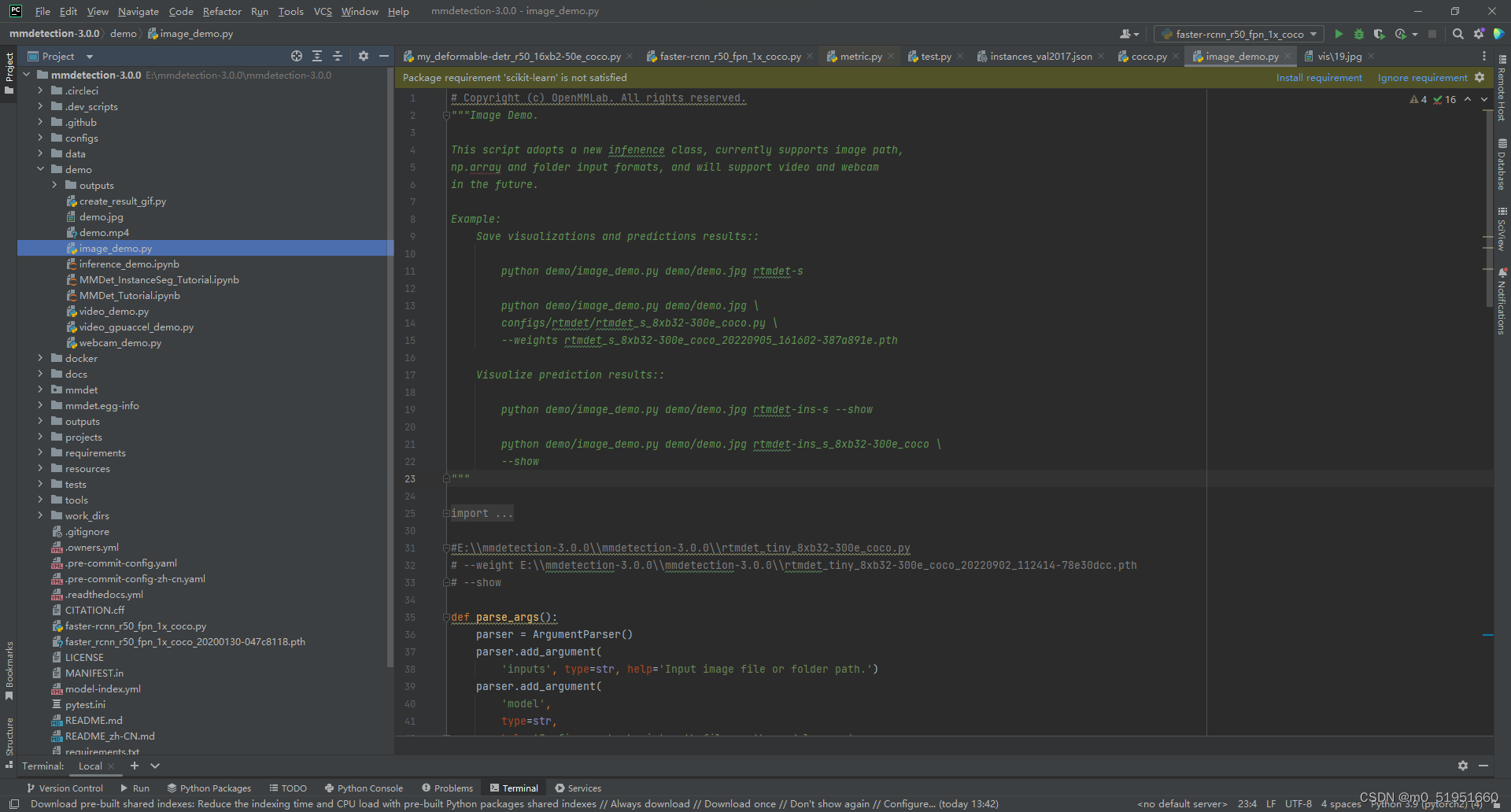

Open the project in PyCharm (make sure the interpreter is set to the virtual environment you created) and verify the installation with image_demo.py.
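A quick way to confirm that PyCharm really uses the virtual environment is to run a tiny script from the project. This is a sketch that assumes the environment is named `pytorch2` (the name shown in the terminal prompt later in this article); `interpreter_info` is a hypothetical helper. Because PyCharm may not set `CONDA_DEFAULT_ENV`, it also checks the folder name of `sys.prefix`.

```python
import os
import sys


def interpreter_info(expected_env="pytorch2"):
    """Describe the running interpreter and whether it looks like the expected conda env."""
    env_name = os.environ.get("CONDA_DEFAULT_ENV")
    # sys.prefix is the environment root, e.g. ...\anaconda3\envs\pytorch2
    prefix_name = os.path.basename(os.path.normpath(sys.prefix))
    return {
        "executable": sys.executable,
        "conda_env": env_name,
        "matches": expected_env in (env_name, prefix_name),
    }


if __name__ == "__main__":
    print(interpreter_info())
```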

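The official docs describe the verification procedure, but we can also verify inside PyCharm. First, download the required config file and weights file as described in the docs (the `mim` command comes from the `openmim` package, installed with `pip install -U openmim`):

```
mim download mmdet --config rtmdet_tiny_8xb32-300e_coco --dest .
```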

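Run the command above in cmd. Since `--dest .` saves to the current directory and cmd usually opens in your user folder (C:\Users\Ogl in my case), that is where the files typically end up. Move the two downloaded files into the mmdetection-3.0.0 project folder.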

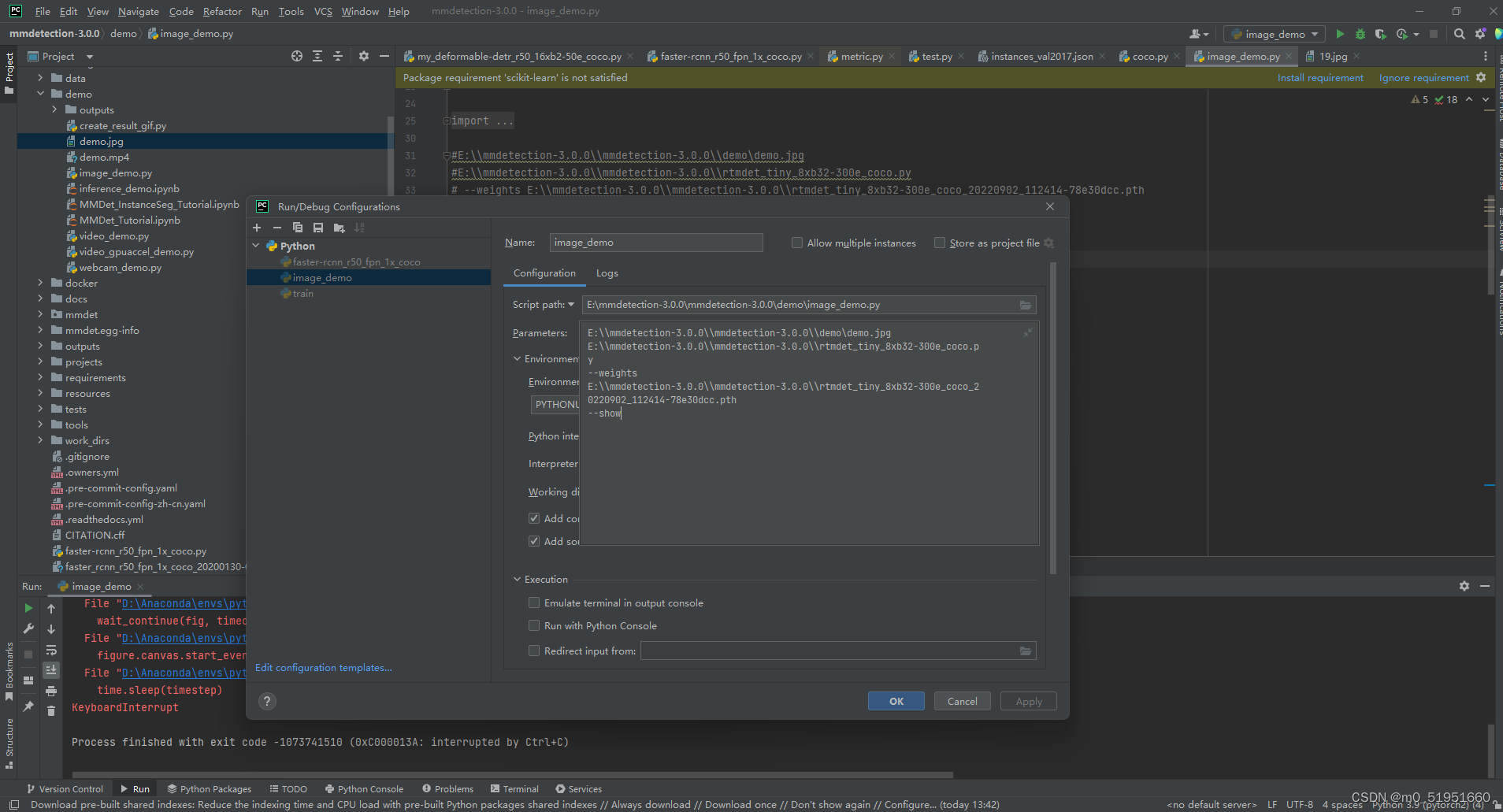

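Next, before running image_demo.py in PyCharm, pass the paths of these two files and of the demo image to it as arguments.

Copy the absolute paths of the three files. The arguments are, in order: the image path, the model config path, `--weights` followed by the weights file path, and finally `--show` (note the double backslashes \\):

```
E:\\mmdetection-3.0.0\\mmdetection-3.0.0\\demo\\demo.jpg E:\\mmdetection-3.0.0\\mmdetection-3.0.0\\rtmdet_tiny_8xb32-300e_coco.py --weights E:\\mmdetection-3.0.0\\mmdetection-3.0.0\\rtmdet_tiny_8xb32-300e_coco_20220902_112414-78e30dcc.pth --show
```

Paste these into the Parameters field of the run configuration.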

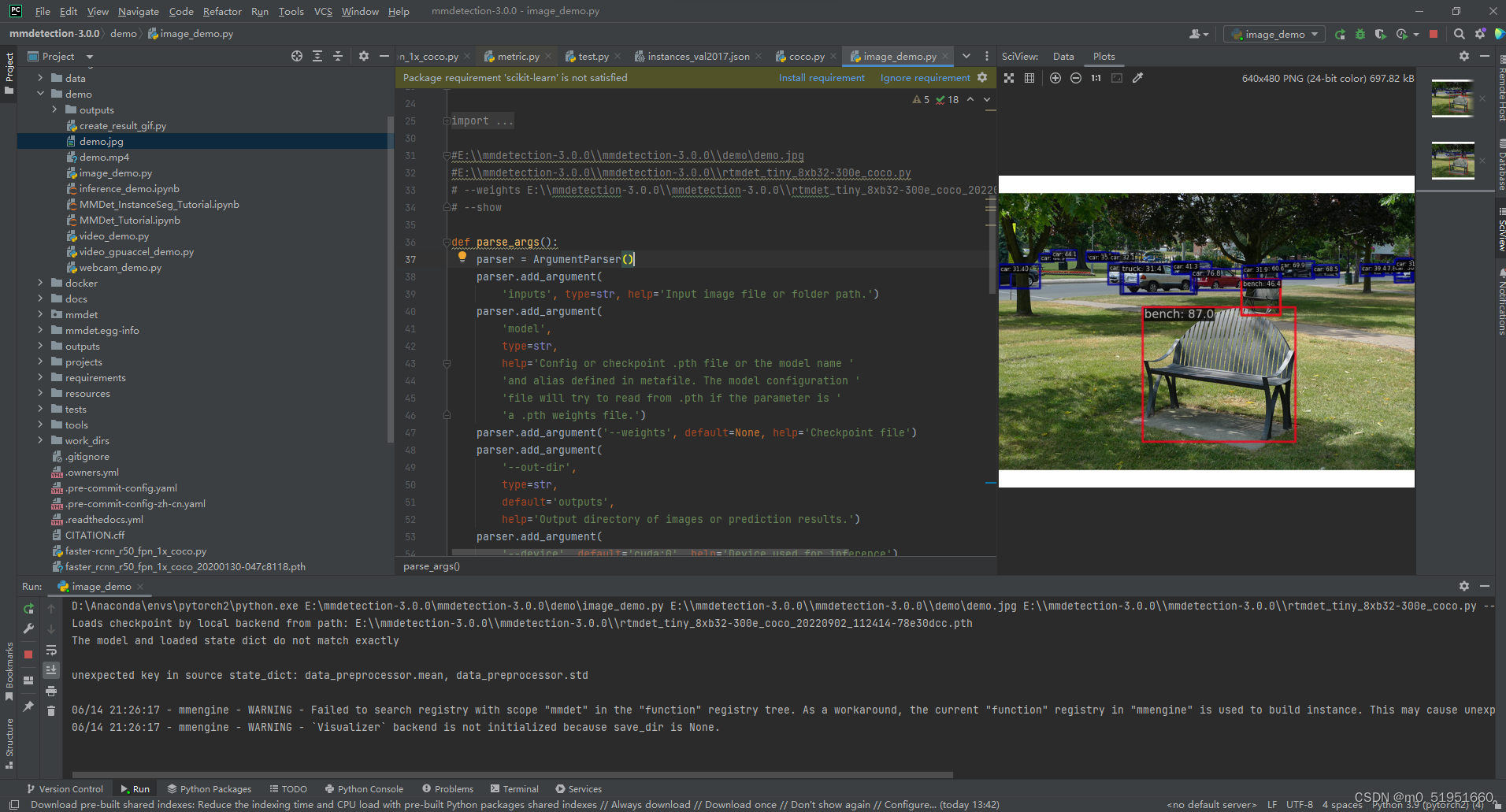

Then simply run image_demo.py.

If the objects in demo.jpg are detected and displayed, MMDetection has been installed and runs successfully.
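Besides image_demo.py, the same check can be done from Python. The sketch below assumes the mmdet 3.x APIs `init_detector` and `inference_detector` (whose result exposes `pred_instances.bboxes`, `.scores` and `.labels`) and reuses the example paths above. `keep_confident`, `run_inference` and the 0.3 score threshold are my own illustrative choices. Using `pathlib` with raw strings also avoids having to double every backslash.

```python
from pathlib import Path

# Example locations from above; adjust them to your machine.
PROJECT_ROOT = Path(r"E:\mmdetection-3.0.0\mmdetection-3.0.0")
CONFIG = PROJECT_ROOT / "rtmdet_tiny_8xb32-300e_coco.py"
CHECKPOINT = PROJECT_ROOT / "rtmdet_tiny_8xb32-300e_coco_20220902_112414-78e30dcc.pth"
IMAGE = PROJECT_ROOT / "demo" / "demo.jpg"


def keep_confident(bboxes, scores, labels, score_thr=0.3):
    """Return (bbox, score, label) triples whose score is at least score_thr."""
    return [
        (list(box), float(score), int(lab))
        for box, score, lab in zip(bboxes, scores, labels)
        if float(score) >= score_thr
    ]


def run_inference(device="cuda:0", score_thr=0.3):
    # Imported here so keep_confident can be used without mmdet installed.
    from mmdet.apis import inference_detector, init_detector

    model = init_detector(str(CONFIG), str(CHECKPOINT), device=device)
    result = inference_detector(model, str(IMAGE))  # DetDataSample in mmdet 3.x
    pred = result.pred_instances
    return keep_confident(
        pred.bboxes.tolist(), pred.scores.tolist(), pred.labels.tolist(), score_thr
    )
```

Usage: call `run_inference()` from a script run with the pytorch2 interpreter and print the returned list; pass `device="cpu"` if no GPU is available.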

2.2 Choosing a model for training and inference on the COCO dataset

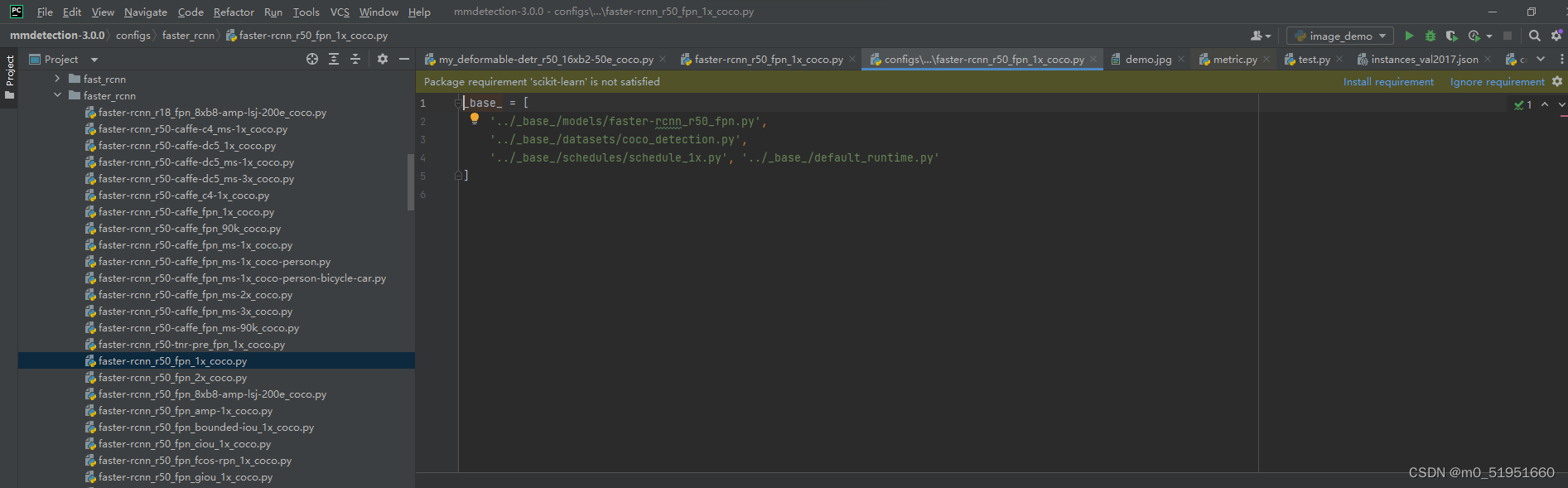

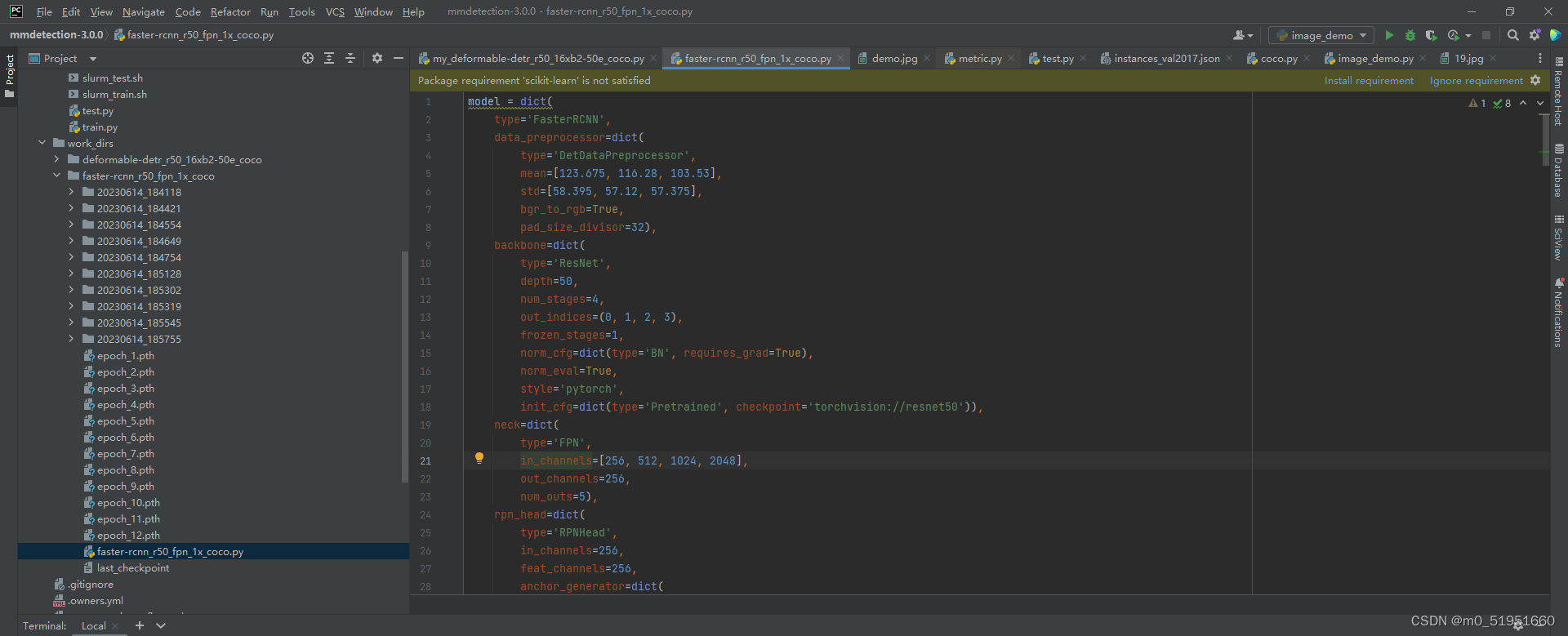

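This article uses faster-rcnn_r50_fpn_1x_coco.py as an example. Find the model config file in configs (under configs/faster_rcnn/), then pass its path to ./tools/train.py. A faster-rcnn_r50_fpn_1x_coco.py file is then generated in work_dirs (in work_dirs/faster-rcnn_r50_fpn_1x_coco/). It contains all the settings merged from the original config and its base files, which makes it convenient to modify the model later.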

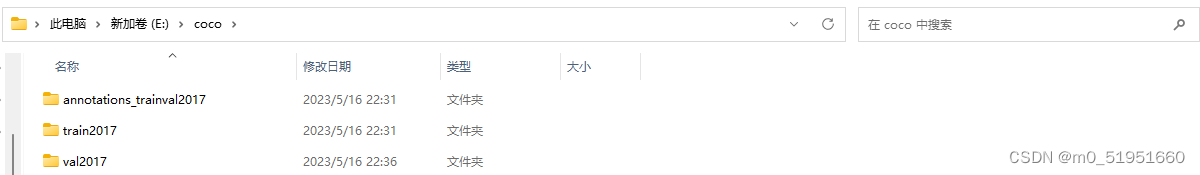

Next, download the COCO dataset; I used COCO 2017.

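If you want to use your own dataset, prepare it in the format described in the official docs. The MMDetection dataset preparation guide is here:

Link: link

Create a data folder in the mmdetection-3.0.0 project and put the COCO dataset into it (the default configs expect it at data/coco/). After that you can run tools/train.py to start training.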
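The default COCO configs look for the data under `data/coco/` (the `data_root` printed in the training log), with annotation files such as `annotations/instances_train2017.json`. The sketch below checks that layout before you start a long run. The expected paths follow the mmdet 3.x default COCO dataset config, and `missing_coco_paths` is a hypothetical helper.

```python
from pathlib import Path

# Paths the default COCO config expects under data_root='data/coco/';
# adjust them if you changed ann_file or data_prefix in your config.
EXPECTED = (
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
    "train2017",
    "val2017",
)


def missing_coco_paths(data_root="data/coco"):
    """Return the expected COCO paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_coco_paths()
    print("COCO layout OK" if not missing else f"Missing: {missing}")
```

Run it from the project root, since `data/coco` is resolved relative to the current directory, just like the path in the config.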

Open a terminal window and enter the following command:

```
(pytorch2) PS E:\mmdetection-3.0.0\mmdetection-3.0.0> python .\tools\train.py E:\\mmdetection-3.0.0\\mmdetection-3.0.0\\work_dirs\\faster-rcnn_r50_fpn_1x_coco\\faster-rcnn_r50_fpn_1x_coco.py
```

If it runs successfully, the terminal prints information like the following, and training starts.

(pytorch2) PS E:\mmdetection-3.0.0\mmdetection-3.0.0> python .\tools\train.py E:\\mmdetection-3.0.0\\mmdetection-3.0.0\\work_dirs\\faster-rcnn_r50_fpn_1x_coco\\faster-rcnn_r50_fpn_1x_coco.py

06/15 14:05:09 - mmengine - INFO -

------------------------------------------------------------

System environment:

sys.platform: win32

Python: 3.9.16 (main, Mar 8 2023, 10:39:24) [MSC v.1916 64 bit (AMD64)]

CUDA available: True

numpy_random_seed: 201700358

GPU 0: NVIDIA GeForce RTX 3060

CUDA_HOME: C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3

NVCC: Cuda compilation tools, release 11.3, V11.3.58

MSVC: Microsoft (R) C/C++ Optimizing Compiler Version 19.34.31942 for x64

GCC: n/a

PyTorch: 1.11.0+cu113

PyTorch compiling details: PyTorch built with:

- C++ Version: 199711

- MSVC 192829337

- Intel(R) Math Kernel Library Version 2020.0.2 Product Build 20200624 for Intel(R) 64 architecture applications

- Intel(R) MKL-DNN v2.5.2 (Git Hash a9302535553c73243c632ad3c4c80beec3d19a1e)

- OpenMP 2019

- LAPACK is enabled (usually provided by MKL)

- CPU capability usage: AVX2

- CUDA Runtime 11.3

- NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37

- CuDNN 8.2

- Magma 2.5.4

- Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.2.0, CXX_COMPILER=C:/actions-runner/_work/pytorch/pytorch/builder/windows/tmp_bin/sccache-cl.exe, CXX_FLAGS=/DWIN32 /D_WINDOWS /GR /EHsc /w /bigobj -DUSE_PTHREADPOOL -openmp:experimental -IC:/actions-runner/_work/pytorch/pytorch/builder/windows/mkl/include -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOCUPTI -DUSE_FBGEMM -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=OFF, USE_NNPACK=OFF, USE_OPENMP=ON, USE_ROCM=OFF,

TorchVision: 0.12.0+cu113

OpenCV: 4.7.0

MMEngine: 0.7.4

Runtime environment:

cudnn_benchmark: False

mp_cfg: {'mp_start_method': 'fork', 'opencv_num_threads': 0}

dist_cfg: {'backend': 'nccl'}

seed: 201700358

Distributed launcher: none

Distributed training: False

GPU number: 1

------------------------------------------------------------

06/15 14:05:09 - mmengine - INFO - Config:

model = dict(

type='FasterRCNN',

data_preprocessor=dict(

type='DetDataPreprocessor',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

bgr_to_rgb=True,

pad_size_divisor=32),

backbone=dict(

type='ResNet',

depth=50,

num_stages=4,

out_indices=(0, 1, 2, 3),

frozen_stages=1,

norm_cfg=dict(type='BN', requires_grad=True),

norm_eval=True,

style='pytorch',

init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet50')),

neck=dict(

type='FPN',

in_channels=[256, 512, 1024, 2048],

out_channels=256,

num_outs=5),

rpn_head=dict(

type='RPNHead',

in_channels=256,

feat_channels=256,

anchor_generator=dict(

type='AnchorGenerator',

scales=[8],

ratios=[0.5, 1.0, 2.0],

strides=[4, 8, 16, 32, 64]),

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[1.0, 1.0, 1.0, 1.0]),

loss_cls=dict(

type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

roi_head=dict(

type='StandardRoIHead',

bbox_roi_extractor=dict(

type='SingleRoIExtractor',

roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

out_channels=256,

featmap_strides=[4, 8, 16, 32]),

bbox_head=dict(

type='Shared2FCBBoxHead',

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=2,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[0.1, 0.1, 0.2, 0.2]),

reg_class_agnostic=False,

loss_cls=dict(

type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

loss_bbox=dict(type='L1Loss', loss_weight=1.0))),

train_cfg=dict(

rpn=dict(

assigner=dict(

type='MaxIoUAssigner',

pos_iou_thr=0.7,

neg_iou_thr=0.3,

min_pos_iou=0.3,

match_low_quality=True,

ignore_iof_thr=-1),

sampler=dict(

type='RandomSampler',

num=256,

pos_fraction=0.5,

neg_pos_ub=-1,

add_gt_as_proposals=False),

allowed_border=-1,

pos_weight=-1,

debug=False),

rpn_proposal=dict(

nms_pre=2000,

max_per_img=1000,

nms=dict(type='nms', iou_threshold=0.7),

min_bbox_size=0),

rcnn=dict(

assigner=dict(

type='MaxIoUAssigner',

pos_iou_thr=0.5,

neg_iou_thr=0.5,

min_pos_iou=0.5,

match_low_quality=False,

ignore_iof_thr=-1),

sampler=dict(

type='RandomSampler',

num=512,

pos_fraction=0.25,

neg_pos_ub=-1,

add_gt_as_proposals=True),

pos_weight=-1,

debug=False)),

test_cfg=dict(

rpn=dict(

nms_pre=1000,

max_per_img=1000,

nms=dict(type='nms', iou_threshold=0.7),

min_bbox_size=0),

rcnn=dict(

score_thr=0.05,

nms=dict(type='nms', iou_threshold=0.5),

max_per_img=100)))

dataset_type = 'CocoDataset'

data_root = 'data/coco/'

backend_args = None

train_pipeline = [

dict(type='LoadImageFromFile', backend_args=None),

dict(type='LoadAnnotations', with_bbox=True),

dict(type='Resize', scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', prob=0.5),

dict(type='PackDetInputs')

]

test_pipeline = [

dict(type='LoadImageFromFile', backend_args=None),

dict(type='Resize', scale=(1333, 800), keep_ratio=True),

dict(type='LoadAnnotations', with_bbox=True),

dict(

type='PackDetInputs',

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

'scale_factor'))

]

train_dataloader = dict(

batch_size=2,

num_workers=2,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

batch_sampler=dict(type='AspectRatioBatchSampler'),

dataset=dict(

type='CocoDataset',

data_root='data/labelme-data/coco-formatcoco/',

ann_file='annotations/instances_train2017.json',

data_prefix=dict(img='images/train2017/'),

filter_cfg=dict(filter_empty_gt=True, min_size=32),

pipeline=[

dict(type='LoadImageFromFile', backend_args=None),

dict(type='LoadAnnotations', with_bbox=True),

dict(type='Resize', scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', prob=0.5),

dict(type='PackDetInputs')

],

backend_args=None))

val_dataloader = dict(

batch_size=1,

num_workers=2,

persistent_workers=True,

drop_last=False,

sampler=dict(type='DefaultSampler', shuffle=False),

dataset=dict(

type='CocoDataset',

data_root='data/labelme-data/coco-formatcoco/',

ann_file='annotations/instances_val2017.json',

data_prefix=dict(img='images/val2017/'),

test_mode=True,

pipeline=[

dict(type='LoadImageFromFile', backend_args=None),

dict(type='Resize', scale=(1333, 800), keep_ratio=True),

dict(type='LoadAnnotations', with_bbox=True),

dict(

type='PackDetInputs',

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

'scale_factor'))

],

backend_args=None))

test_dataloader = dict(

batch_size=1,

num_workers=2,

persistent_workers=True,

drop_last=False,

sampler=dict(type='DefaultSampler', shuffle=False),

dataset=dict(

type='CocoDataset',

data_root='data/labelme-data/coco-formatcoco/',

ann_file='annotations/instances_val2017.json',

data_prefix=dict(img='images/val2017/'),

test_mode=True,

pipeline=[

dict(type='LoadImageFromFile', backend_args=None),

dict(type='Resize', scale=(1333, 800), keep_ratio=True),

dict(type='LoadAnnotations', with_bbox=True),

dict(

type='PackDetInputs',

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

'scale_factor'))

],

backend_args=None))

val_evaluator = dict(

type='CocoMetric',

ann_file=

'data/labelme-data/coco-formatcoco/annotations/instances_val2017.json',

metric='bbox',

format_only=False,

backend_args=None)

test_evaluator = dict(

type='CocoMetric',

ann_file=

'data/labelme-data/coco-formatcoco/annotations/instances_val2017.json',

metric='bbox',

format_only=False,

backend_args=None)

train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=50, val_interval=1)

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

param_scheduler = [

dict(

type='LinearLR', start_factor=0.001, by_epoch=False, begin=0, end=500),

dict(

type='MultiStepLR',

begin=0,

end=50,

by_epoch=True,

milestones=[8, 11],

gamma=0.1)

]

optim_wrapper = dict(

type='OptimWrapper',

optimizer=dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001))

auto_scale_lr = dict(enable=False, base_batch_size=16)

default_scope = 'mmdet'

default_hooks = dict(

timer=dict(type='IterTimerHook'),

logger=dict(type='LoggerHook', interval=50),

param_scheduler=dict(type='ParamSchedulerHook'),

checkpoint=dict(type='CheckpointHook', interval=1),

sampler_seed=dict(type='DistSamplerSeedHook'),

visualization=dict(

type='DetVisualizationHook', draw=True, test_out_dir='out'))

env_cfg = dict(

cudnn_benchmark=False,

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

dist_cfg=dict(backend='nccl'))

vis_backends = [dict(type='LocalVisBackend')]

visualizer = dict(

type='DetLocalVisualizer',

vis_backends=[dict(type='LocalVisBackend')],

name='visualizer')

log_processor = dict(type='LogProcessor', window_size=50, by_epoch=True)

log_level = 'INFO'

load_from = '.\\work_dirs\\faster-rcnn_r50_fpn_1x_coco\\epoch_50.pth'

resume = False

launcher = 'none'

work_dir = './work_dirs\\faster-rcnn_r50_fpn_1x_coco'

06/15 14:05:10 - mmengine - INFO - Distributed training is not used, all SyncBatchNorm (SyncBN) layers in the model will be automatically reverted to BatchNormXd layers if they are used.

06/15 14:05:10 - mmengine - INFO - Hooks will be executed in the following order:

before_run:

(VERY_HIGH ) RuntimeInfoHook

(BELOW_NORMAL) LoggerHook

--------------------

before_train:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(VERY_LOW ) CheckpointHook

--------------------

before_train_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(NORMAL ) DistSamplerSeedHook

--------------------

before_train_iter:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

--------------------

after_train_iter:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

--------------------

after_train_epoch:

(NORMAL ) IterTimerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

--------------------

before_val_epoch:

(NORMAL ) IterTimerHook

--------------------

before_val_iter:

(NORMAL ) IterTimerHook

--------------------

after_val_iter:

(NORMAL ) IterTimerHook

(NORMAL ) DetVisualizationHook

(BELOW_NORMAL) LoggerHook

--------------------

after_val_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

--------------------

after_train:

(VERY_LOW ) CheckpointHook

--------------------

before_test_epoch:

(NORMAL ) IterTimerHook

--------------------

before_test_iter:

(NORMAL ) IterTimerHook

--------------------

after_test_iter:

(NORMAL ) IterTimerHook

(NORMAL ) DetVisualizationHook

(BELOW_NORMAL) LoggerHook

--------------------

after_test_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

--------------------

after_run:

(BELOW_NORMAL) LoggerHook

--------------------

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

loading annotations into memory...

Done (t=0.02s)

creating index...

index created!

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

06/15 14:05:12 - mmengine - INFO - load model from: torchvision://resnet50

06/15 14:05:12 - mmengine - INFO - Loads checkpoint by torchvision backend from path: torchvision://resnet50

06/15 14:05:12 - mmengine - WARNING - The model and loaded state dict do not match exactly

unexpected key in source state_dict: fc.weight, fc.bias

Loads checkpoint by local backend from path: .\work_dirs\faster-rcnn_r50_fpn_1x_coco\epoch_50.pth

06/15 14:05:12 - mmengine - INFO - Load checkpoint from .\work_dirs\faster-rcnn_r50_fpn_1x_coco\epoch_50.pth

06/15 14:05:12 - mmengine - WARNING - "FileClient" will be deprecated in future. Please use io functions in https://mmengine.readthedocs.io/en/latest/api/fileio.html#file-io

06/15 14:05:12 - mmengine - WARNING - "HardDiskBackend" is the alias of "LocalBackend" and the former will be deprecated in future.

06/15 14:05:12 - mmengine - INFO - Checkpoints will be saved to E:\mmdetection-3.0.0\mmdetection-3.0.0\work_dirs\faster-rcnn_r50_fpn_1x_coco.

06/15 14:05:20 - mmengine - INFO - Exp name: faster-rcnn_r50_fpn_1x_coco_20230615_140507

06/15 14:05:20 - mmengine - INFO - Epoch(train) [1][9/9] lr: 3.4032e-04 eta: 0:06:53 time: 0.9368 data_time: 0.2160 memory: 4485 loss: 0.8013 loss_rpn_cls: 0.0591 loss_rpn_bbox: 0.0445 loss_cls: 0.2363 acc: 92.2852 loss_bbox: 0.4615

06/15 14:05:20 - mmengine - INFO - Saving checkpoint at 1 epochs

06/15 14:05:23 - mmengine - INFO - Evaluating bbox...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.02s).

Accumulating evaluation results...

DONE (t=0.02s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.153

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=1000 ] = 0.435

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=1000 ] = 0.073

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.318

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=300 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=1000 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

06/15 14:05:23 - mmengine - INFO - bbox_mAP_copypaste: 0.153 0.435 0.073 0.318 -1.000 -1.000

06/15 14:05:23 - mmengine - INFO - Epoch(val) [1][2/2] coco/bbox_mAP: 0.1530 coco/bbox_mAP_50: 0.4350 coco/bbox_mAP_75: 0.0730 coco/bbox_mAP_s: 0.3180 coco/bbox_mAP_m: -1.0000 coco/bbox_mAP_l: -1.0000 data_time: 0.6178 time: 0.8028

06/15 14:05:27 - mmengine - INFO - Exp name: faster-rcnn_r50_fpn_1x_coco_20230615_140507

06/15 14:05:27 - mmengine - INFO - Epoch(train) [2][9/9] lr: 7.0068e-04 eta: 0:05:02 time: 0.6997 data_time: 0.1117 memory: 5159 loss: 0.8078 loss_rpn_cls: 0.0608 loss_rpn_bbox: 0.0441 loss_cls: 0.2357 acc: 92.7734 loss_bbox: 0.4672

06/15 14:05:27 - mmengine - INFO - Saving checkpoint at 2 epochs

06/15 14:05:29 - mmengine - INFO - Evaluating bbox...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.02s).

Accumulating evaluation results...

DONE (t=0.01s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.140

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=1000 ] = 0.401

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=1000 ] = 0.037

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.314

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.312

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=300 ] = 0.312

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=1000 ] = 0.312

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.312

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

06/15 14:05:29 - mmengine - INFO - bbox_mAP_copypaste: 0.140 0.401 0.037 0.314 -1.000 -1.000

06/15 14:05:29 - mmengine - INFO - Epoch(val) [2][2/2] coco/bbox_mAP: 0.1400 coco/bbox_mAP_50: 0.4010 coco/bbox_mAP_75: 0.0370 coco/bbox_mAP_s: 0.3140 coco/bbox_mAP_m: -1.0000 coco/bbox_mAP_l: -1.0000 data_time: 0.0082 time: 0.0971

06/15 14:05:33 - mmengine - INFO - Exp name: faster-rcnn_r50_fpn_1x_coco_20230615_140507

06/15 14:05:33 - mmengine - INFO - Epoch(train) [3][9/9] lr: 1.0610e-03 eta: 0:04:20 time: 0.6156 data_time: 0.0756 memory: 5388 loss: 0.8012 loss_rpn_cls: 0.0611 loss_rpn_bbox: 0.0444 loss_cls: 0.2323 acc: 94.4336 loss_bbox: 0.4634

06/15 14:05:33 - mmengine - INFO - Saving checkpoint at 3 epochs

06/15 14:05:34 - mmengine - INFO - Evaluating bbox...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.00s).

Accumulating evaluation results...

DONE (t=0.02s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.148

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=1000 ] = 0.445

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=1000 ] = 0.052

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.319

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=300 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=1000 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.317

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

06/15 14:05:34 - mmengine - INFO - bbox_mAP_copypaste: 0.148 0.445 0.052 0.319 -1.000 -1.000

06/15 14:05:34 - mmengine - INFO - Epoch(val) [3][2/2] coco/bbox_mAP: 0.1480 coco/bbox_mAP_50: 0.4450 coco/bbox_mAP_75: 0.0520 coco/bbox_mAP_s: 0.3190 coco/bbox_mAP_m: -1.0000 coco/bbox_mAP_l: -1.0000 data_time: 0.0111 time: 0.0956

06/15 14:05:38 - mmengine - INFO - Exp name: faster-rcnn_r50_fpn_1x_coco_20230615_140507

06/15 14:05:38 - mmengine - INFO - Epoch(train) [4][9/9] lr: 1.4214e-03 eta: 0:03:58 time: 0.5761 data_time: 0.0581 memory: 5388 loss: 0.8093 loss_rpn_cls: 0.0605 loss_rpn_bbox: 0.0454 loss_cls: 0.2331 acc: 95.0195 loss_bbox: 0.4703

06/15 14:05:38 - mmengine - INFO - Saving checkpoint at 4 epochs

06/15 14:05:40 - mmengine - INFO - Evaluating bbox...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.02s).

Accumulating evaluation results...

DONE (t=0.00s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.153

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=1000 ] = 0.421

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=1000 ] = 0.153

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.268

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.267

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=300 ] = 0.267

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=1000 ] = 0.267

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.267

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

06/15 14:05:40 - mmengine - INFO - bbox_mAP_copypaste: 0.153 0.421 0.153 0.268 -1.000 -1.000

06/15 14:05:40 - mmengine - INFO - Epoch(val) [4][2/2] coco/bbox_mAP: 0.1530 coco/bbox_mAP_50: 0.4210 coco/bbox_mAP_75: 0.1530 coco/bbox_mAP_s: 0.2680 coco/bbox_mAP_m: -1.0000 coco/bbox_mAP_l: -1.0000 data_time: 0.0104 time: 0.0912

06/15 14:05:44 - mmengine - INFO - Exp name: faster-rcnn_r50_fpn_1x_coco_20230615_140507

06/15 14:05:44 - mmengine - INFO - Epoch(train) [5][9/9] lr: 1.7818e-03 eta: 0:03:42 time: 0.5506 data_time: 0.0474 memory: 5159 loss: 0.8008 loss_rpn_cls: 0.0594 loss_rpn_bbox: 0.0455 loss_cls: 0.2366 acc: 92.1875 loss_bbox: 0.4593

06/15 14:05:44 - mmengine - INFO - Saving checkpoint at 5 epochs

06/15 14:05:47 - mmengine - INFO - Evaluating bbox...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.04s).

Accumulating evaluation results...

DONE (t=0.01s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.170

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=1000 ] = 0.525

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=1000 ] = 0.087

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.290

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.288

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=300 ] = 0.288

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=1000 ] = 0.288

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.288

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

06/15 14:05:47 - mmengine - INFO - bbox_mAP_copypaste: 0.170 0.525 0.087 0.290 -1.000 -1.000

06/15 14:05:47 - mmengine - INFO - Epoch(val) [5][2/2] coco/bbox_mAP: 0.1700 coco/bbox_mAP_50: 0.5250 coco/bbox_mAP_75: 0.0870 coco/bbox_mAP_s: 0.2900 coco/bbox_mAP_m: -1.0000 coco/bbox_mAP_l: -1.0000 data_time: 0.0156 time: 0.1525

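With 50 epochs the log gets long. To follow the validation mAP across epochs, a small script can pull the `coco/bbox_mAP` value out of each `Epoch(val)` line. It is a sketch that assumes the log line format shown above (MMEngine 0.7.4); other versions may print these lines differently, and `val_map_per_epoch` is a hypothetical helper. Pass it the path of a saved log file (MMEngine normally also writes the log to a .log file inside the timestamped folder under work_dirs).

```python
import re
import sys

# Matches lines such as:
# "... - INFO - Epoch(val) [3][2/2] coco/bbox_mAP: 0.1480 coco/bbox_mAP_50: 0.4450 ..."
VAL_LINE = re.compile(r"Epoch\(val\)\s*\[(\d+)\].*?coco/bbox_mAP:\s*(-?[\d.]+)")


def val_map_per_epoch(log_text):
    """Return {epoch: bbox_mAP} parsed from an MMEngine training log."""
    result = {}
    for line in log_text.splitlines():
        match = VAL_LINE.search(line)
        if match:
            result[int(match.group(1))] = float(match.group(2))
    return result


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as f:
        for epoch, m in sorted(val_map_per_epoch(f.read()).items()):
            print(f"epoch {epoch:3d}: bbox_mAP = {m:.4f}")
```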

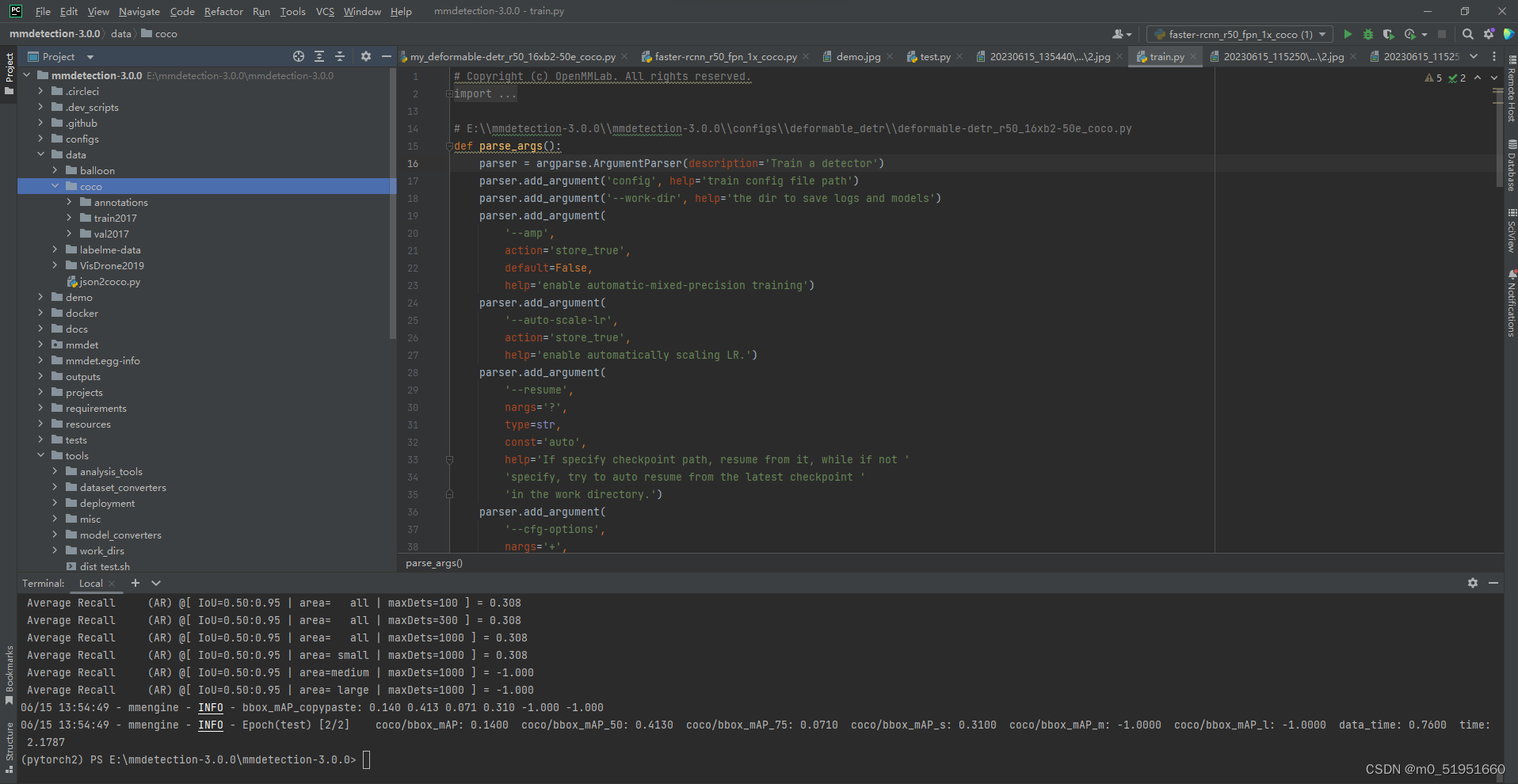

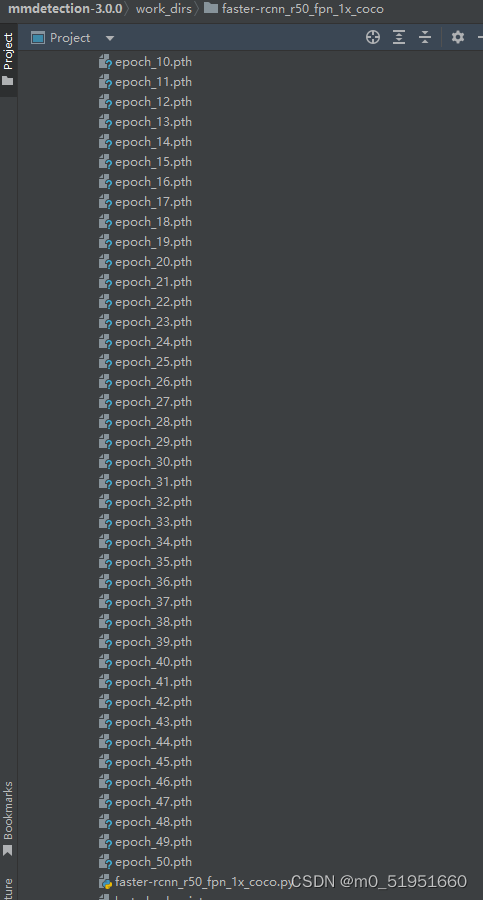

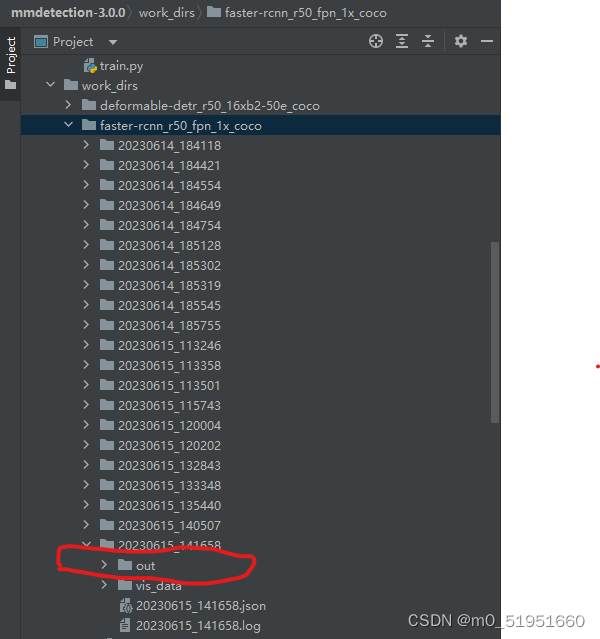

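We can also use test.py to test the model. It takes the model config path and the path of the trained weights file. The trained weights are usually saved under the work_dirs folder; in my case that is E:\mmdetection-3.0.0\mmdetection-3.0.0\work_dirs\faster-rcnn_r50_fpn_1x_coco.

Find the weights produced by the last training epoch, as shown below.

I trained for 50 epochs in total, so I use epoch_50.pth as the weights file for testing. The test command is: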

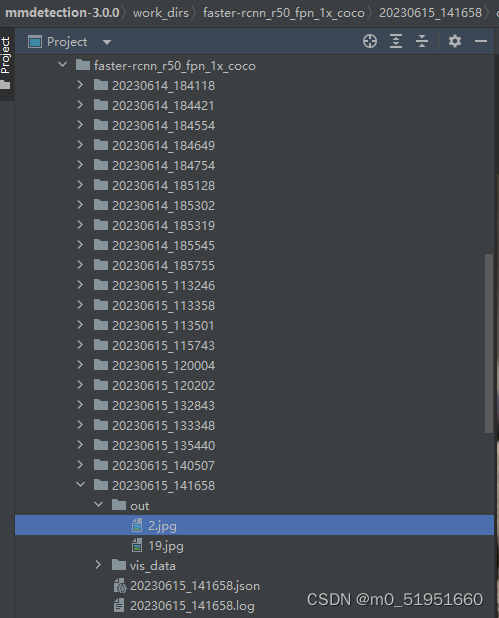

```
(pytorch2) PS E:\mmdetection-3.0.0\mmdetection-3.0.0> python .\tools\test.py .\work_dirs/faster-rcnn_r50_fpn_1x_coco/faster-rcnn_r50_fpn_1x_coco.py .\work_dirs\faster-rcnn_r50_fpn_1x_coco\epoch_50.pth --show-dir out
```

An out folder is then generated under this folder, containing the detection and prediction results drawn on the test images.
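Picking the last checkpoint by eye is easy, but sorting file names as text puts `epoch_9.pth` after `epoch_50.pth`. The sketch below compares epoch numbers numerically instead. `latest_epoch_checkpoint` is a hypothetical helper that assumes the default `epoch_N.pth` naming seen above.

```python
import re
from pathlib import Path

CKPT_NAME = re.compile(r"^epoch_(\d+)\.pth$")


def latest_epoch_checkpoint(work_dir):
    """Return the epoch_N.pth file with the largest N in work_dir, or None."""
    best, best_epoch = None, -1
    for path in Path(work_dir).glob("epoch_*.pth"):
        match = CKPT_NAME.match(path.name)
        # Compare epoch numbers as integers, not as strings
        if match and int(match.group(1)) > best_epoch:
            best, best_epoch = path, int(match.group(1))
    return best


if __name__ == "__main__":
    print(latest_epoch_checkpoint("work_dirs/faster-rcnn_r50_fpn_1x_coco"))
```

The returned path can be passed to tools/test.py as the weights argument.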

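Summary

That's all for today. This article covered installing MMDetection and using it with the standard COCO dataset. If readers are interested, I will later cover how to build your own dataset and how to train and test MMDetection models on it.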
