[Ascend] Troubleshooting: launching the glm4-9b-chat model with MindIE (service mode)

[Ascend] Fixing the errors seen when launching the glm4-9b-chat model with MindIE: "LLMInferEngine failed to init LLMInferModels", "ERR: Failed to init endpoint! Please check the service log or console output.", "Killed", and "make sure the folder's owner has execute permission".


Problem description:

Launching the glm4-9b-chat model with MindIE fails with the following errors:

LLMInferEngine failed to init LLMInferModels

ERR: Failed to init endpoint! Please check the service log or console output.

Killed

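Diagnosis

The default output contains too little information to locate the cause, so enable more verbose logging and run the test again: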

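# MindIE Service + LLM logs

export MINDIE_LOG_TO_STDOUT=true # print to the console

export MINDIE_LOG_LEVEL="debug"

# CANN log collection

export ASCEND_GLOBAL_LOG_LEVEL=0 # debug level

export ASCEND_SLOG_PRINT_TO_STDOUT=1 # print to the console

# Acceleration library (ATB) logs | default output path:

export ATB_LOG_LEVEL=DEBUG

export ATB_LOG_TO_STDOUT=1 # print to the console

# Operator library logs | default output path: ~/atb/log

export ASDOPS_LOG_LEVEL=DEBUG

export ASDOPS_LOG_TO_STDOUT=1 # print to the console

With these settings, the following error is reported: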

Failed to get vocab size from tokenizer wrapper with exception: ValueError: safe_get_tokenizer_from_pretrained failed. Please check the input parameters model_path and kwargs. If the input parameters are valid and the required files exist in model_path, make sure the folder's owner has execute permission. Otherwise, please check the function stack for detailed exception information.
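With every component set to debug level, the console output becomes very long, so it can help to save it to a file and filter for the relevant lines. The following is a minimal sketch, assuming the service output was redirected to a file beforehand; the file name `mindie_console.log` and the function name `show_errors` are hypothetical and not part of MindIE.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of MindIE): print only the lines that contain
# an [ERROR] tag or a "Failed to" / "failed to" message, prefixed with line numbers.
show_errors() {
    local log="$1"
    grep -n -E '\[ERROR\]|Failed to|failed to' "$log"
}

# Usage, assuming the service output was captured to a file first, e.g.
#   <start command> > mindie_console.log 2>&1
# show_errors mindie_console.log
```

The pattern is deliberately broad, so it also matches the plain "LLMInferEngine failed to init LLMInferModels" line that carries no [ERROR] tag.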

The detailed output is as follows:

[WARNING] APP(1146,mindieservice_daemon):2025-03-19-19:33:36.595.960 [log_inner.cpp:79]1147 build/CMakeFiles/torch_npu.dir/compiler_depend.ts:get_default_custom_lib_path:118: "[PTA]:"config.ini is not exists""

[INFO] APP(1146,mindieservice_daemon):2025-03-19-19:33:36.596.099 [log_inner.cpp:82]1147 build/CMakeFiles/torch_npu.dir/compiler_depend.ts:GetDispatchTimeout:522: "[PTA]:"set dispatchTimeout_ 600 s.""

[2025-03-19 19:33:37.810928] [1146] [281472789114816] [llm] [ERROR] [model_deploy_config.cpp:152] Failed to get vocab size from tokenizer wrapper with exception: ValueError: safe_get_tokenizer_from_pretrained failed. Please check the input parameters model_path and kwargs. If the input parameters are valid and the required files exist in model_path, make sure the folder's owner has execute permission. Otherwise, please check the function stack for detailed exception information.

At:

/usr/local/Ascend/atb-models/atb_llm/models/base/model_utils.py(207): wrapper

/usr/local/Ascend/atb-models/atb_llm/models/base/router.py(323): get_tokenizer

/usr/local/Ascend/atb-models/atb_llm/models/base/router.py(151): tokenizer

/usr/local/Ascend/atb-models/atb_llm/runner/tokenizer_wrapper.py(20): __init__

/usr/local/lib/python3.11/site-packages/mindie_llm/modeling/model_wrapper/atb/atb_tokenizer_wrapper.py(8): __init__

/usr/local/lib/python3.11/site-packages/mindie_llm/modeling/model_wrapper/__init__.py(28): get_tokenizer_wrapper

LLMInferEngine failed to init LLMInferModels

[2025-03-19 19:33:37.811+08:00] [1146] [ascend] [system] [server] [start engine(mindieservice_llm_engine)] [fail]

[2025-03-19 19:33:37.811+08:00] [1146] [1147] [mindie-server] [ERROR] [infer_backend_manager.cpp:236] : [MIE04E06011C] [infer_backend_manager] Failed to init engine: mindieservice_llm_engine

[2025-03-19 19:33:37.811+08:00] [1146] [1147] [mindie-server] [ERROR] [endpoint.cpp:53] : [MIE04E02011C] [endpoint] Failed to init engine! Please check in the mindservice.log, pythonlog.log or console output.

[2025-03-19 19:33:37.811+08:00] [1146] [ascend] [system] [server] [start mindie server] [fail]

[INFO] APP(1146,mindieservice_daemon):2025-03-19-19:33:47.812.236 [log_inner.cpp:82]1147 torch_npu/csrc/InitNpuBindings.cpp:THPModule_npu_shutdown_synchronize:87: "[PTA]:"NPU shutdown synchronize begin.""

[INFO] APP(1146,mindieservice_daemon):2025-03-19-19:33:47.812.300 [log_inner.cpp:82]1147 torch_npu/csrc/InitNpuBindings.cpp:THPModule_npu_shutdown:57: "[PTA]:"NPU shutdown begin.""

[2025-03-19 19:33:48.608+08:00] [1146] [ascend] [system] [server] [start endpoint] [fail]

[2025-03-19 19:33:48.609+08:00] [1146] [ascend] [system] [server] [start mindie server] [fail]

ERR: Failed to init endpoint! Please check the service log or console output.

[2025-03-19 19:33:48.610+08:00] [1146] [1147] [mindie-server] [INFO] [llm_daemon.cpp:142] : [daemon] ERR: Failed to init endpoint! Please check the service log or console output.

Killed

Solution:

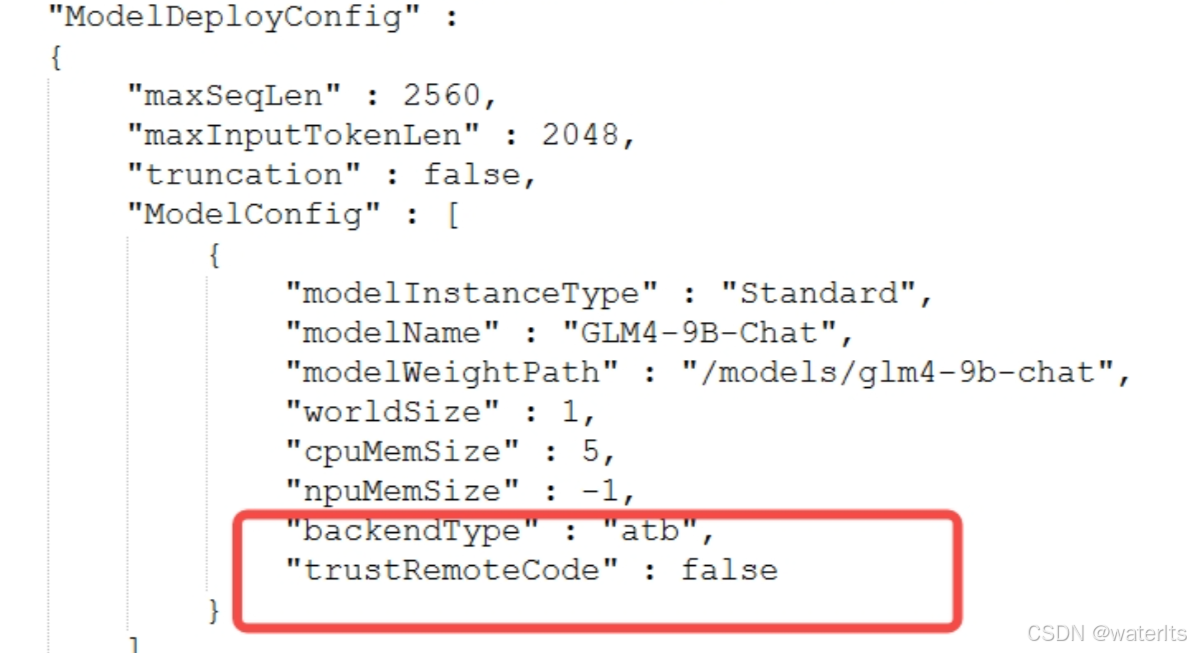

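Edit the MindIE configuration file and change the trustRemoteCode field from false to true. The stack trace shows the failure occurs while the tokenizer is being loaded; the likely reason is that glm4-9b-chat ships its own tokenizer code in the model directory, which is only loaded when remote code is trusted.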

vim /usr/local/Ascend/mindie/latest/mindie-service/conf/config.json
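As a non-interactive alternative to editing the file in vim, the same change can be scripted. The following is a minimal sketch, assuming GNU sed and assuming the field appears in the file literally as `"trustRemoteCode": false` (spacing around the colon may vary). The function name `enable_trust_remote_code` is hypothetical, and a `.bak` copy of the file is written before anything is changed.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of MindIE): switch every
# "trustRemoteCode": false entry in a config file to true.
enable_trust_remote_code() {
    local cfg="$1"
    # Refuse to continue if the file does not exist.
    [ -f "$cfg" ] || { echo "config not found: $cfg" >&2; return 1; }
    # Keep a backup next to the original, preserving mode and timestamps.
    cp -p "$cfg" "$cfg.bak"
    # Replace only the value, keeping the original key and spacing (GNU sed).
    sed -i -E 's/("trustRemoteCode"[[:space:]]*:[[:space:]]*)false/\1true/g' "$cfg"
    # Show the resulting lines so the change can be checked by eye.
    grep -n '"trustRemoteCode"' "$cfg"
}

# Usage (path taken from the step above):
# enable_trust_remote_code /usr/local/Ascend/mindie/latest/mindie-service/conf/config.json
```

After the change, start the service again and confirm that the tokenizer error no longer appears.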
