Challenges and Breakthroughs in LLM Context Length

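Abstract: Extending the context length of large language models runs into three major challenges: computational complexity, memory consumption, and information decay. Self-attention in the standard Transformer has O(n²) complexity in the sequence length n, which makes long sequences expensive to process. Breakthrough techniques attack this in different ways. FlashAttention computes exact attention in tiles to cut memory traffic. Linear attention approximates softmax attention to bring the cost down to O(n). These innovations let models handle contexts of millions of tokens, opening new possibilities for long-document understanding, complex reasoning, and related tasks. In the future, combining efficient attention mechanisms with hardware optimization…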

Introduction

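As large language models have advanced rapidly, context length has become one of the key measures of model capability. Windows have grown from the 512 tokens of early models to millions of tokens today. That growth is more than a technical leap: it steadily pushes out the limits of what AI can do with long documents, complex dialogue, and deep reasoning. This article examines the technical challenges of long context in large models, the breakthrough solutions, and future trends.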

Basic Concepts of Context Length

What Is Context Length?

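Context length is the maximum number of tokens a language model can process in a single inference pass, counting both the input prompt and the tokens it generates. It directly determines how much text the model can take into account at once. It therefore shapes performance on long-document understanding, multi-turn dialogue, and retaining earlier information.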

Why Context Length Matters

A sufficiently long context is essential for several capabilities:

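- Long-document processing: understanding and analyzing complete documents, reports, or books

- Coherent dialogue: staying consistent across many turns of conversation

- Complex reasoning: carrying out logical reasoning that depends on a large amount of background information

- Knowledge retrieval: extracting and integrating relevant information from large bodies of text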

Technical Challenges and Bottlenecks

Computational Complexity

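Self-attention in the standard Transformer is computationally demanding. Every token attends to every other token, so for a sequence of n tokens with hidden size d, computing the attention scores takes O(n²·d) time: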

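```python
import math

import torch
import torch.nn as nn


class StandardAttention(nn.Module):
    """Standard multi-head self-attention: O(n^2) time and memory in sequence length."""

    def __init__(self, d_model, n_heads):
        super().__init__()
        assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
        self.d_model = d_model
        self.n_heads = n_heads
        self.head_dim = d_model // n_heads
        self.qkv = nn.Linear(d_model, d_model * 3)
        self.out_proj = nn.Linear(d_model, d_model)

    def forward(self, x, mask=None):
        batch_size, seq_len, d_model = x.shape

        # QKV projection: O(n * d^2)
        qkv = self.qkv(x)
        q, k, v = qkv.chunk(3, dim=-1)

        # Split into heads: [batch, n_heads, seq_len, head_dim]
        def split_heads(t):
            return t.view(batch_size, seq_len, self.n_heads, self.head_dim).transpose(1, 2)

        q, k, v = split_heads(q), split_heads(k), split_heads(v)

        # Attention scores: an n x n matrix per head -> O(n^2 * d) time, O(n^2) memory
        attention_scores = torch.matmul(q, k.transpose(-2, -1)) / math.sqrt(self.head_dim)
        if mask is not None:
            attention_scores = attention_scores.masked_fill(mask == 0, -1e9)
        attention_weights = torch.softmax(attention_scores, dim=-1)
        output = torch.matmul(attention_weights, v)

        # Merge heads back to [batch, seq_len, d_model]
        output = output.transpose(1, 2).reshape(batch_size, seq_len, d_model)
        return self.out_proj(output)


# Complexity analysis (multiply-add counts, constant factors ignored)
def analyze_complexity(seq_len, d_model):
    standard_complexity = seq_len ** 2 * d_model  # standard attention: O(n^2 * d)
    linear_complexity = seq_len * d_model ** 2  # linear attention: O(n * d^2)
    return {
        "sequence_length": seq_len,
        "standard_complexity": standard_complexity,
        "linear_complexity": linear_complexity,
        "ratio": standard_complexity / linear_complexity,  # equals n / d
    }
```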
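The quadratic term does not dominate at every length. A rough FLOP count for one self-attention layer makes the crossover visible. This is a sketch under stated assumptions: batch size 1, 2 FLOPs per multiply-add, square d × d projection weights, and softmax, masking, and biases ignored.

- The four projections (Q, K, V, output) cost about 8·n·d².
- QKᵀ plus the weighted sum over V cost about 4·n²·d.

The attention term therefore overtakes the projections once n > 2d. The function name is illustrative.

```python
def attention_layer_flops(seq_len: int, d_model: int) -> dict:
    """Rough FLOP count for one self-attention layer (batch size 1).

    Assumes 2 FLOPs per multiply-add and ignores softmax, masking and biases.
    """
    n, d = seq_len, d_model
    projection = 4 * (2 * n * d * d)  # Q, K, V, output: (n x d) @ (d x d) each
    scores = 2 * n * n * d            # Q @ K^T: (n x d) @ (d x n)
    weighted_sum = 2 * n * n * d      # softmax(QK^T) @ V: (n x n) @ (n x d)
    attention = scores + weighted_sum
    return {
        "projection_flops": projection,
        "attention_flops": attention,
        "attention_share": attention / (projection + attention),
    }


for n in (2_048, 8_192, 32_768, 131_072):
    stats = attention_layer_flops(n, d_model=4096)
    print(f"n={n:>7}: attention share of layer FLOPs = {stats['attention_share']:.0%}")
```

With d_model = 4096, the attention share is n / (n + 2d). That works out to 20% at 2K tokens, 50% at 8K, and 80% at 32K. This is why the O(n²) term only becomes the bottleneck at long context lengths.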

Memory Constraints

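The attention matrix has n × n entries. The memory needed to store it therefore grows quadratically with context length and quickly becomes the main limiting factor. The sizes below are for a single attention matrix (one head, one layer, batch size 1, FP16 at 2 bytes per entry):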

| Context length | Attention matrix size | Memory (FP16) | Training feasibility |
|---|---|---|---|
| 2K tokens | 2K × 2K | 8 MB | Easy |
| 8K tokens | 8K × 8K | 128 MB | Moderate |
| 32K tokens | 32K × 32K | 2 GB | Hard |
| 100K tokens | 100K × 100K | 20 GB | Very challenging |
| 1M tokens | 1M × 1M | 2 TB | Infeasible |
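The figures in the table follow from one formula: an n × n matrix of FP16 scores takes 2·n² bytes. The 2K, 8K, and 32K rows use binary units (MiB/GiB), while the 100K and 1M rows are decimal (2×10¹⁰ and 2×10¹² bytes).

The sketch below reproduces the table, assuming one head, one layer, and batch size 1. The 32-head line is a hypothetical example. A real model holds one such matrix per head, and during standard training one per layer for the backward pass, unless a memory-efficient kernel avoids materializing it.

```python
def attention_matrix_bytes(seq_len: int, bytes_per_element: int = 2,
                           n_heads: int = 1, batch_size: int = 1) -> int:
    """Bytes needed to materialize the seq_len x seq_len attention matrix.

    Defaults match the table: FP16 (2 bytes per element), one head, batch size 1.
    """
    return batch_size * n_heads * seq_len * seq_len * bytes_per_element


rows = [("2K", 2_048), ("8K", 8_192), ("32K", 32_768), ("100K", 100_000), ("1M", 1_000_000)]
for label, n in rows:
    size = attention_matrix_bytes(n)
    print(f"{label:>5} tokens: {size:,} bytes ({size / 2**30:,.2f} GiB)")

# Hypothetical 32-head layer at 32K tokens: 32 x 2 GiB
print(attention_matrix_bytes(32_768, n_heads=32) / 2**30)  # 64.0
```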

Information Decay

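In long contexts, models often fail to make effective use of information from distant tokens, a phenomenon known as information decay. The analyzer below measures how much attention later tokens still pay to a set of key positions: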

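```python
import torch


class InformationRetentionAnalyzer:
    """Measure how much attention later tokens pay to selected key positions.

    Assumes a Hugging Face-style causal LM and tokenizer. Returning attention
    weights may require loading the model with attn_implementation="eager", and
    every layer's n x n attention map is held in memory, so this is only
    practical for moderately long inputs.
    """

    def __init__(self, model, tokenizer):
        self.model = model
        self.tokenizer = tokenizer

    def analyze_attention_patterns(self, long_text, key_positions):
        """Per layer: mean attention that tokens after each key position assign to it."""
        inputs = self.tokenizer(long_text, return_tensors="pt", truncation=False).to(self.model.device)
        with torch.no_grad():
            outputs = self.model(**inputs, output_attentions=True)
        attention_maps = outputs.attentions  # one [batch, heads, query, key] tensor per layer

        retention_scores = {}
        for layer_idx, attention in enumerate(attention_maps):
            layer_scores = []
            for pos in key_positions:
                # Column `pos`: attention received from every later query, averaged over heads.
                # (Row `pos` would instead measure what pos attends to, which under a causal
                # mask never includes later tokens.)
                received = attention[0, :, pos + 1:, pos].mean(dim=0)
                layer_scores.append(received.mean().item() if received.numel() > 0 else 0.0)
            retention_scores[f'layer_{layer_idx}'] = layer_scores
        return retention_scores
```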
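One simple mechanism behind this decay is softmax dilution. As an illustrative assumption, suppose a single relevant "needle" token scores Δ higher than each of the other n − 1 tokens, which all share the same score. Its softmax weight is then e^Δ / (e^Δ + n − 1). That weight shrinks roughly as 1/n as the context grows, unless the model sharpens its scores.

This is a toy model, not a full account of long-context degradation. The function names are hypothetical.

```python
import math

import torch


def needle_weight(seq_len: int, logit_gap: float) -> float:
    """Softmax weight on one token whose score exceeds the other seq_len - 1 scores by logit_gap."""
    return math.exp(logit_gap) / (math.exp(logit_gap) + seq_len - 1)


def needle_weight_torch(seq_len: int, logit_gap: float) -> float:
    """Same quantity computed with an actual softmax, as a cross-check."""
    scores = torch.zeros(seq_len, dtype=torch.float64)
    scores[0] = logit_gap  # the "needle"; every other token scores 0
    return torch.softmax(scores, dim=0)[0].item()


for n in (1_000, 10_000, 100_000, 1_000_000):
    print(f"n={n:>9,}: weight on needle = {needle_weight(n, logit_gap=5.0):.6f}")
```

With Δ = 5, the needle receives about 13% of the attention mass at 1K tokens but less than 0.02% at 1M tokens.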

Breakthrough Technical Solutions

Efficient Attention Mechanisms

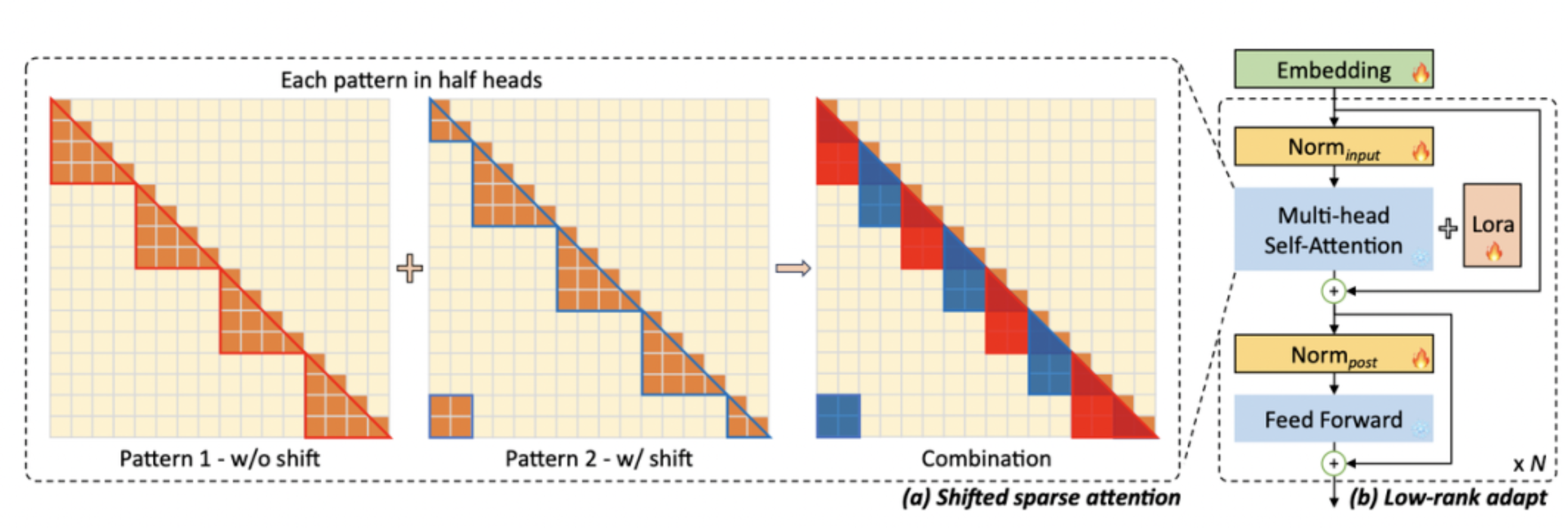

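Efficient attention mechanisms get around the length limit in one of two ways: by computing exact attention more economically, or by approximating it: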

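```python
import math

import torch
import torch.nn.functional as F
from torch import nn


class FlashAttention(nn.Module):
    """FlashAttention-style tiled attention (illustrative, pure PyTorch).

    Queries and keys/values are processed block by block with an online softmax,
    so the full n x n score matrix is never stored and the result is exact. The
    real FlashAttention speedup comes from a fused GPU kernel that keeps tiles in
    on-chip SRAM; this Python loop only demonstrates the algorithm.
    """

    def __init__(self, d_model, n_heads, block_size=64):
        super().__init__()
        self.d_model = d_model
        self.n_heads = n_heads
        self.head_dim = d_model // n_heads
        self.block_size = block_size
        self.wq = nn.Linear(d_model, d_model)
        self.wk = nn.Linear(d_model, d_model)
        self.wv = nn.Linear(d_model, d_model)
        self.wo = nn.Linear(d_model, d_model)

    def forward(self, x):
        batch_size, seq_len, _ = x.shape

        def split_heads(t):  # [B, n, d_model] -> [B, H, n, head_dim]
            return t.view(batch_size, seq_len, self.n_heads, self.head_dim).transpose(1, 2)

        Q, K, V = split_heads(self.wq(x)), split_heads(self.wk(x)), split_heads(self.wv(x))
        scale = 1.0 / math.sqrt(self.head_dim)
        output = torch.empty_like(Q)

        for q_start in range(0, seq_len, self.block_size):
            Q_block = Q[:, :, q_start:q_start + self.block_size] * scale
            # Running statistics of the online softmax for this query block
            row_max = torch.full(Q_block.shape[:-1], float("-inf"), device=x.device, dtype=x.dtype)
            row_sum = torch.zeros(Q_block.shape[:-1], device=x.device, dtype=x.dtype)
            acc = torch.zeros_like(Q_block)

            for k_start in range(0, seq_len, self.block_size):
                K_block = K[:, :, k_start:k_start + self.block_size]
                V_block = V[:, :, k_start:k_start + self.block_size]
                scores = Q_block @ K_block.transpose(-2, -1)  # [B, H, bq, bk]

                new_max = torch.maximum(row_max, scores.amax(dim=-1))
                correction = torch.exp(row_max - new_max)  # rescale earlier partial results
                p = torch.exp(scores - new_max.unsqueeze(-1))
                row_sum = row_sum * correction + p.sum(dim=-1)
                acc = acc * correction.unsqueeze(-1) + p @ V_block
                row_max = new_max

            output[:, :, q_start:q_start + self.block_size] = acc / row_sum.unsqueeze(-1)

        output = output.transpose(1, 2).reshape(batch_size, seq_len, self.d_model)
        return self.wo(output)


class LinearAttention(nn.Module):
    """Linear (kernelized) attention, non-causal: O(n) in sequence length.

    Replaces softmax(Q K^T) V with phi(Q) (phi(K)^T V) / (phi(Q) sum_n phi(K_n)),
    using phi(x) = elu(x) + 1 as a positive feature map. A causal version would
    need cumulative sums over positions instead of full sums.
    """

    def __init__(self, d_model, n_heads):
        super().__init__()
        self.d_model = d_model
        self.n_heads = n_heads
        self.head_dim = d_model // n_heads
        self.wq = nn.Linear(d_model, d_model)
        self.wk = nn.Linear(d_model, d_model)
        self.wv = nn.Linear(d_model, d_model)
        self.wo = nn.Linear(d_model, d_model)

    @staticmethod
    def phi(t):
        return F.elu(t) + 1  # positive features keep the normalizer > 0

    def forward(self, x):
        b, n, _ = x.shape
        shape = (b, n, self.n_heads, self.head_dim)
        Q = self.phi(self.wq(x).view(shape))  # [b, n, h, d]
        K = self.phi(self.wk(x).view(shape))
        V = self.wv(x).view(shape)

        # Linear-complexity computation: sum over positions first
        KV = torch.einsum('bnhd,bnhe->bhde', K, V)  # sum_n phi(K_n)^T V_n: [b, h, d, e]
        Z = K.sum(dim=1)                             # sum_n phi(K_n): [b, h, d]
        numerator = torch.einsum('bnhd,bhde->bnhe', Q, KV)
        denominator = torch.einsum('bnhd,bhd->bnh', Q, Z).unsqueeze(-1)
        output = numerator / (denominator + 1e-6)
        return self.wo(output.reshape(b, n, self.d_model))
```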
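The following self-contained check contrasts the two families above on random tensors with arbitrary sizes. It runs in three steps:

1. It compares naive softmax attention with PyTorch's `torch.nn.functional.scaled_dot_product_attention`. That function has been available since PyTorch 2.0 and can dispatch to a FlashAttention kernel on supported GPUs.
2. It shows the reordering trick behind linear attention. Computing φ(K)ᵀV first gives the same product as (φ(Q)φ(K)ᵀ)V without ever forming an n × n matrix.
3. It confirms that the normalized linear-attention output is not equal to softmax attention.

The feature map φ(x) = elu(x) + 1 is one common choice, assumed here.

```python
import math

import torch
import torch.nn.functional as F

torch.manual_seed(0)
batch, heads, seq_len, head_dim = 2, 4, 256, 32
q, k, v = (torch.randn(batch, heads, seq_len, head_dim, dtype=torch.float64) for _ in range(3))

# 1) Exact softmax attention written out naively: materializes the n x n score matrix
scores = q @ k.transpose(-2, -1) / math.sqrt(head_dim)
naive = torch.softmax(scores, dim=-1) @ v

# 2) PyTorch's fused attention: same exact result, computed by an optimized kernel
fused = F.scaled_dot_product_attention(q, k, v)
print("fused vs naive, max abs diff:", (fused - naive).abs().max().item())


# 3) Linear attention: phi(Q) (phi(K)^T V) instead of softmax(Q K^T) V
def phi(t):
    return F.elu(t) + 1  # positive feature map


quadratic_order = (phi(q) @ phi(k).transpose(-2, -1)) @ v  # builds an n x n matrix
linear_order = phi(q) @ (phi(k).transpose(-2, -1) @ v)     # only a d x d matrix per head
normalizer = phi(q) @ phi(k).sum(dim=-2, keepdim=True).transpose(-2, -1)  # [b, h, n, 1]
linear_out = linear_order / normalizer

print("reordering, max abs diff:", (quadratic_order - linear_order).abs().max().item())
print("linear vs softmax, max abs diff:", (linear_out - naive).abs().max().item())
```

Exact methods such as FlashAttention change how attention is computed, not what is computed. Linear attention changes the function itself, which is the accuracy it trades for its O(n) cost.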

Extrapolation and Interpolation Techniques

Another route is to modify the position encoding so that a model trained on shorter sequences can handle longer ones:

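```python
import torch
from torch import nn


def rotate_half(x):
    """Split the last dimension in half and map (x1, x2) -> (-x2, x1)."""
    x1, x2 = x.chunk(2, dim=-1)
    return torch.cat((-x2, x1), dim=-1)


def apply_rotary_pos_emb(x, cos, sin):
    """Apply RoPE to queries or keys x of shape [..., seq_len, dim]."""
    return x * cos + rotate_half(x) * sin


class RotaryPositionEmbedding(nn.Module):
    """Rotary position embedding (RoPE): produces the cos/sin tables."""

    def __init__(self, dim, max_seq_len=4096, base=10000):
        super().__init__()
        self.dim = dim
        self.max_seq_len = max_seq_len
        self.base = base
        # Precompute frequencies theta_i = base^(-2i / dim)
        inv_freq = 1.0 / (base ** (torch.arange(0, dim, 2).float() / dim))
        self.register_buffer('inv_freq', inv_freq)

    @staticmethod
    def _cos_sin(inv_freq, seq_len, device):
        t = torch.arange(seq_len, device=device, dtype=inv_freq.dtype)  # positions
        freqs = torch.outer(t, inv_freq)  # angles position * theta_i: [seq_len, dim / 2]
        emb = torch.cat((freqs, freqs), dim=-1)  # [seq_len, dim], matches rotate_half layout
        return emb.cos(), emb.sin()

    def forward(self, x, seq_len=None):
        seq_len = x.shape[-2] if seq_len is None else seq_len
        return self._cos_sin(self.inv_freq.to(x.device), seq_len, x.device)


class NTKScalingRoPE(RotaryPositionEmbedding):
    """NTK-aware scaled RoPE for length extrapolation."""

    def __init__(self, dim, max_seq_len=4096, base=10000, scaling_factor=1.0):
        super().__init__(dim, max_seq_len, base)
        self.scaling_factor = scaling_factor

    def forward(self, x, seq_len=None):
        seq_len = x.shape[-2] if seq_len is None else seq_len
        # NTK scaling enlarges the base: the lowest frequency slows down by exactly
        # scaling_factor, while the highest frequency (theta_0 = 1) is unchanged
        adjusted_base = self.base * self.scaling_factor ** (self.dim / (self.dim - 2))
        inv_freq = 1.0 / (adjusted_base ** (torch.arange(0, self.dim, 2, device=x.device).float() / self.dim))
        return self._cos_sin(inv_freq, seq_len, x.device)


# Usage: cos, sin = rope(q); q_rot = apply_rotary_pos_emb(q, cos, sin)
```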
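These position-encoding tricks rely on a key property of RoPE: the query-key dot product depends only on the relative offset between positions, not on absolute positions. The self-contained check below demonstrates this.

- It rotates a query and a key with standard RoPE angles, using the same `cat((freqs, freqs))` / rotate-half layout as above.
- It shifts both positions by the same amount and confirms the score does not change.

Changing `base`, as NTK scaling does, only changes the frequencies, so the property still holds. The helper names are illustrative.

```python
import torch


def rope_angles(positions: torch.Tensor, dim: int, base: float = 10000.0) -> torch.Tensor:
    """Angles position * theta_i, laid out as cat((freqs, freqs)) to match rotate_half."""
    inv_freq = 1.0 / (base ** (torch.arange(0, dim, 2, dtype=torch.float64) / dim))
    freqs = torch.outer(positions.to(torch.float64), inv_freq)
    return torch.cat((freqs, freqs), dim=-1)


def rotate_half(x: torch.Tensor) -> torch.Tensor:
    x1, x2 = x.chunk(2, dim=-1)
    return torch.cat((-x2, x1), dim=-1)


def apply_rope(x: torch.Tensor, position: int, base: float = 10000.0) -> torch.Tensor:
    """Rotate a single vector x of shape [dim] to the given position."""
    angles = rope_angles(torch.tensor([position]), x.shape[-1], base)[0]
    return x * angles.cos() + rotate_half(x) * angles.sin()


torch.manual_seed(0)
dim = 64
q = torch.randn(dim, dtype=torch.float64)
k = torch.randn(dim, dtype=torch.float64)

# Same offset (m - n = 7) at very different absolute positions -> same attention score
score_near = apply_rope(q, 10) @ apply_rope(k, 3)
score_far = apply_rope(q, 5010) @ apply_rope(k, 5003)
print(score_near.item(), score_far.item())
```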

Hierarchical Attention Architecture

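Long contexts can also be managed hierarchically: attention runs locally within chunks, and a global layer exchanges information between chunks: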

```python
import torch
from torch import nn


class HierarchicalAttention(nn.Module):
    """Hierarchical attention: local attention within overlapping chunks,
    then global attention over one summary token per chunk."""

    def __init__(self, d_model, n_heads, chunk_size=512, overlap=64):
        super().__init__()
        assert 0 <= overlap < chunk_size, "overlap must be smaller than chunk_size"
        self.d_model = d_model
        self.n_heads = n_heads
        self.chunk_size = chunk_size
        self.overlap = overlap
        # batch_first=True: inputs are [batch, seq_len, d_model]
        self.local_attention = nn.MultiheadAttention(d_model, n_heads, batch_first=True)
        self.global_attention = nn.MultiheadAttention(d_model, n_heads, batch_first=True)

    def chunk_sequence(self, x):
        """Split the sequence into chunks that overlap by `overlap` tokens."""
        seq_len = x.shape[1]
        chunks = []
        start = 0
        while start < seq_len:
            end = min(start + self.chunk_size, seq_len)
            chunks.append(x[:, start:end, :])
            if end == seq_len:
                break  # avoid a trailing chunk that lies entirely inside the previous one
            start += self.chunk_size - self.overlap
        return chunks

    def forward(self, x):
        chunks = self.chunk_sequence(x)

        # Local attention inside each chunk: O(n * chunk_size) overall instead of O(n^2)
        processed_chunks = []
        for chunk in chunks:
            attended_chunk, _ = self.local_attention(chunk, chunk, chunk)
            processed_chunks.append(attended_chunk)

        # Global attention across chunks
        if len(processed_chunks) > 1:
            # Use the first token of each chunk as a (deliberately simple) chunk summary
            summary_tokens = torch.stack([chunk[:, 0, :] for chunk in processed_chunks], dim=1)
            global_context, _ = self.global_attention(summary_tokens, summary_tokens, summary_tokens)
            # Add each chunk's global context to all of its tokens (broadcast over positions)
            for i, chunk in enumerate(processed_chunks):
                processed_chunks[i] = chunk + global_context[:, i:i + 1, :]

        # Reassemble: drop the overlapping prefix of every chunk after the first,
        # so the output has exactly seq_len positions
        pieces = [processed_chunks[0]] + [c[:, self.overlap:, :] for c in processed_chunks[1:]]
        return torch.cat(pieces, dim=1)  # [batch, seq_len, d_model]
```
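The overlap bookkeeping is easy to get wrong. If overlapping chunks are simply concatenated, the output ends up longer than the input. The standalone sketch below mirrors the chunking loop using plain index ranges. It then checks that dropping the first `overlap` positions of every chunk after the first covers each position exactly once. The function names are illustrative.

```python
def chunk_ranges(seq_len: int, chunk_size: int, overlap: int) -> list[tuple[int, int]]:
    """[start, end) ranges of overlapping chunks, advancing by chunk_size - overlap."""
    assert 0 <= overlap < chunk_size
    ranges, start = [], 0
    while start < seq_len:
        end = min(start + chunk_size, seq_len)
        ranges.append((start, end))
        if end == seq_len:
            break
        start += chunk_size - overlap
    return ranges


def kept_positions(seq_len: int, chunk_size: int, overlap: int) -> list[int]:
    """Positions kept after dropping the overlapping prefix of every chunk but the first."""
    kept = []
    for i, (start, end) in enumerate(chunk_ranges(seq_len, chunk_size, overlap)):
        first = start if i == 0 else start + overlap
        kept.extend(range(first, end))
    return kept


print(chunk_ranges(1000, chunk_size=512, overlap=64))  # [(0, 512), (448, 960), (896, 1000)]
```

Every chunk except the last has the full `chunk_size`. Skipping `overlap` positions of chunk i therefore starts exactly where chunk i − 1 ended.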

Evaluation and Performance Analysis

Benchmarks for Long-Context Capability

Measuring how well a model handles long context requires a systematic evaluation suite:

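```python
import torch


class LongContextEvaluator:
    """Long-context evaluation harness for a Hugging Face-style causal LM.

    generate_needle_tests, calculate_answer_similarity, create_multi_hop_tests and
    run_reasoning_test are benchmark-specific helpers that must be supplied.
    """

    def __init__(self, model, tokenizer, test_datasets):
        self.model = model
        self.tokenizer = tokenizer
        self.test_datasets = test_datasets

    def evaluate_needle_in_haystack(self, context_lengths=(1000, 5000, 10000, 50000)):
        """Needle-in-a-haystack test: retrieve a key fact buried in a long text."""
        results = {}
        for length in context_lengths:
            accuracy_scores = []
            # Each test case is assumed to be built at roughly `length` tokens
            for test_case in self.generate_needle_tests(length):
                prompt = test_case['context'] + "\nQuestion: " + test_case['question']
                # No truncation: right-side truncation would cut off the question at the end
                inputs = self.tokenizer(prompt, return_tensors="pt").to(self.model.device)
                prompt_len = inputs["input_ids"].shape[1]
                with torch.no_grad():
                    outputs = self.model.generate(**inputs, max_new_tokens=50)
                # Decode only the generated tokens, not the echoed prompt
                answer = self.tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True)
                accuracy_scores.append(self.calculate_answer_similarity(answer, test_case['expected_answer']))
            results[length] = sum(accuracy_scores) / len(accuracy_scores)
        return results

    def evaluate_multi_hop_reasoning(self, context_lengths):
        """Multi-hop reasoning: answers require combining facts spread across the context."""
        reasoning_scores = {}
        for length in context_lengths:
            test_cases = self.create_multi_hop_tests(length)
            correct_count = sum(1 for case in test_cases if self.run_reasoning_test(case, length))
            reasoning_scores[length] = correct_count / len(test_cases)
        return reasoning_scores

    def calculate_perplexity_over_length(self, text_segments):
        """Perplexity of each segment; pass segments of increasing length to see the trend."""
        perplexities = []
        for segment in text_segments:
            inputs = self.tokenizer(segment, return_tensors="pt").to(self.model.device)
            with torch.no_grad():
                outputs = self.model(**inputs, labels=inputs["input_ids"])
            perplexities.append(torch.exp(outputs.loss).item())
        return perplexities

    # Benchmark-specific helpers (placeholders): implement for your own test data
    def generate_needle_tests(self, length):
        raise NotImplementedError

    def calculate_answer_similarity(self, answer, expected_answer):
        raise NotImplementedError

    def create_multi_hop_tests(self, length):
        raise NotImplementedError

    def run_reasoning_test(self, case, length):
        raise NotImplementedError
```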
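`generate_needle_tests` and `calculate_answer_similarity` are left abstract above. One possible sketch follows, under explicit assumptions:

- The filler text is a single repeated neutral sentence.
- Length is approximated by word count rather than tokens. A real benchmark should measure length with the model's own tokenizer.
- The needle is placed at several relative depths.
- Scoring is a plain substring match.

The needle text, question, and function names are all hypothetical.

```python
def make_needle_case(target_words: int, depth: float,
                     needle: str = "The secret passcode is 4817.",
                     question: str = "What is the secret passcode?",
                     answer: str = "4817") -> dict:
    """Build one needle-in-a-haystack case; depth=0.0 puts the needle first, 1.0 last."""
    filler_sentence = "The sky was clear and the market was quiet that day."
    n_filler = max(target_words - len(needle.split()), 0) // len(filler_sentence.split())
    sentences = [filler_sentence] * n_filler
    sentences.insert(round(depth * n_filler), needle)
    return {"context": " ".join(sentences), "question": question, "expected_answer": answer}


def generate_needle_tests(length: int, depths=(0.0, 0.25, 0.5, 0.75, 1.0)) -> list:
    """One test case per needle depth, each roughly `length` words long."""
    return [make_needle_case(length, d) for d in depths]


def exact_match_score(answer: str, expected: str) -> float:
    """1.0 if the expected answer appears in the model output, else 0.0."""
    return 1.0 if expected.strip() in answer else 0.0
```

Sweeping depth as well as length shows whether retrieval degrades for needles placed in the middle of the context, not just at the ends.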

Performance Comparison of Techniques

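| Approach | Typical max context length | Inference speed | Memory efficiency | Information retention |
|---|---|---|---|---|
| Standard attention | 4K-8K | Baseline | Baseline | Baseline |
| Window attention | 32K-64K | Fast | High | Excellent locally |
| Linear attention | 100K+ | Moderate | Very high | Good globally |
| Chunked attention | 256K+ | Slow | Extremely high | Depends on chunking strategy |
| Hybrid attention | 1M+ | Variable | Extremely high | Excellent |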

Practical Applications

Long-Document Processing

With ultra-long context, an entire document can be analyzed in a single prompt instead of being split up first:

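```python
import torch


class LongDocumentProcessor:
    def __init__(self, model, tokenizer, max_length=100000):
        self.model = model
        self.tokenizer = tokenizer
        self.max_length = max_length  # maximum prompt length in tokens

    def generate_analysis(self, prompt, max_new_tokens=1024):
        """Run the model on one prompt and return only the newly generated text."""
        inputs = self.tokenizer(prompt, return_tensors="pt").to(self.model.device)
        prompt_len = inputs["input_ids"].shape[1]
        if prompt_len > self.max_length:
            raise ValueError(f"Prompt has {prompt_len} tokens, more than max_length={self.max_length}")
        with torch.no_grad():
            outputs = self.model.generate(**inputs, max_new_tokens=max_new_tokens)
        return self.tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True)

    def process_legal_document(self, document_text):
        """Analyze a legal document."""
        analysis_tasks = [
            "Extract the key clauses",
            "Identify potential risks",
            "Summarize each party's rights and obligations",
            "Analyze the legal consequences",
        ]
        results = {}
        # Each task re-sends the full document, so the long prompt is re-processed every time
        for task in analysis_tasks:
            prompt = f"Document:\n{document_text}\n\nPlease perform the following task: {task}"
            results[task] = self.generate_analysis(prompt)
        return results

    def academic_paper_analysis(self, paper_text):
        """In-depth analysis of an academic paper."""
        analysis_prompt = f"""Full text of the paper:
{paper_text}

Please analyze:
1. Research contributions and novelty
2. Strengths and weaknesses of the methodology
3. Reliability of the experimental results
4. Suggestions for future work
5. Comparison with related literature
"""
        return self.generate_analysis(analysis_prompt)
```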

Complex Dialogue Systems

Long context makes genuinely coherent long-running conversations possible:

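```python
import torch


class LongContextDialogueSystem:
    def __init__(self, model, tokenizer, context_window=50000, max_new_tokens=500):
        self.model = model
        self.tokenizer = tokenizer
        self.context_window = context_window  # measured in tokens, not characters
        self.max_new_tokens = max_new_tokens
        self.conversation_history = []

    def count_tokens(self, text):
        return len(self.tokenizer(text, add_special_tokens=False)["input_ids"])

    def add_message(self, role, content):
        """Append a message and drop the oldest ones once the token budget is exceeded."""
        self.conversation_history.append(
            {"role": role, "content": content, "n_tokens": self.count_tokens(content)}
        )
        # Reserve room for the reply; role prefixes are not counted, so this is approximate
        budget = self.context_window - self.max_new_tokens
        current_length = sum(msg["n_tokens"] for msg in self.conversation_history)
        while current_length > budget and len(self.conversation_history) > 1:
            removed = self.conversation_history.pop(0)
            current_length -= removed["n_tokens"]

    def generate_response(self, user_message):
        """Generate the assistant's reply to a user message."""
        self.add_message("user", user_message)
        context = self.format_conversation_context()
        inputs = self.tokenizer(context, return_tensors="pt").to(self.model.device)
        prompt_len = inputs["input_ids"].shape[1]
        with torch.no_grad():
            outputs = self.model.generate(
                **inputs, max_new_tokens=self.max_new_tokens, do_sample=True, temperature=0.7
            )
        # Decode only the new tokens; slicing the decoded string by len(context) is fragile
        assistant_response = self.tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True)
        self.add_message("assistant", assistant_response)
        return assistant_response

    def format_conversation_context(self):
        """Format the conversation history as a plain-text prompt."""
        context = ""
        for msg in self.conversation_history:
            context += f"{msg['role']}: {msg['content']}\n\n"
        return context + "assistant: "  # cue the model to answer as the assistant
```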

Future Directions

Open Challenges and Research Directions

The main remaining challenges and directions for future research:

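- Linear-complexity guarantees: attention mechanisms that are truly linear in sequence length without giving up the quality of full attention

- Information compression: effective methods for summarizing and compressing context

- Dynamic context management: adjusting the context length adaptively

- Multimodal long context: extending long-context techniques to images, audio, and other modalities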

Potential New Applications

Ultra-long context is expected to open up new application areas:

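- Repository-scale coding assistants: understanding an entire project codebase

- Enterprise knowledge management: working across enterprise-scale document collections and knowledge bases

- Scientific research: analyzing complete bodies of literature and experimental data

- Personalized education: tailoring guidance to a learner's complete learning history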

Conclusion

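Extending the context length of large models is an important milestone in AI development. Advances on several fronts keep pushing the context boundary outward: lower computational complexity, better memory efficiency, position-encoding extrapolation, and new attention mechanisms. With million-token context windows now achievable, large models' abilities in long-document understanding, complex reasoning, and sustained dialogue are set for a qualitative leap. As these techniques mature, ultra-long context processing is likely to become a standard capability of large models. That would lay a solid foundation for deeper adoption of AI across industries.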
