Fine-tuning the Qwen2.5-VL-3B model


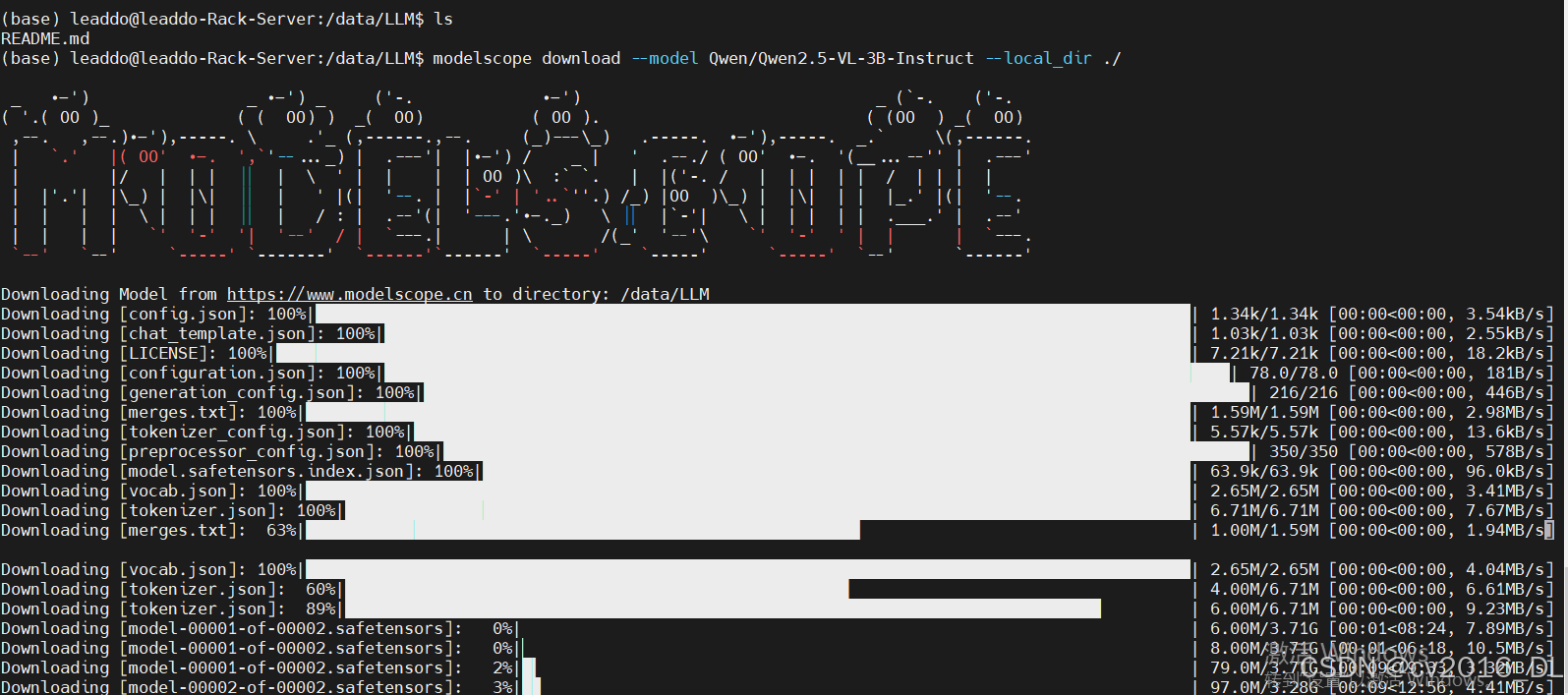

1. Download the model

pip install modelscope

pip install filelock

modelscope download --model Qwen/Qwen2.5-VL-3B-Instruct --local_dir ./Qwen2.5-VL-3B-Instruct

Pull the image:

docker pull modelscope-registry.cn-hangzhou.cr.aliyuncs.com/modelscope-repo/modelscope:ubuntu22.04-cuda12.4.0-py310-torch2.6.0-vllm0.8.5.post1-modelscope1.27.1-swift3.5.3

Create the container:

docker run -it --name qwenvl --network=host -v /data:/data -v /nfs/lide01/shiwei:/nfs --gpus all --shm-size 32G modelscope-registry.cn-hangzhou.cr.aliyuncs.com/modelscope-repo/modelscope:ubuntu22.04-cuda12.4.0-py310-torch2.6.0-vllm0.8.5.post1-modelscope1.27.1-swift3.5.3 /bin/bash

Common image addresses:

2. Test the downloaded model

from transformers import Qwen2_5_VLForConditionalGeneration, AutoProcessor
from PIL import Image

# Adjust the following to your setup
model_path = "./Qwen2.5-VL-3B-Instruct"  # local directory the model was downloaded to
img_path = "./98uq.jpg"  # input image path

# The question about the image can be in Chinese or English, but the outputs will not be exactly the same.
# Image description prompts:
# question = "Please describe the entity target content in these images."
# question = "描述一下这张图片的内容。"
# Detection prompt:
question = "Detect all objects in the image and return their locations in the form of coordinates. The format of output should be like {“bbox”: [x1, y1, x2, y2], “label”: the name of this object in Chinese, “cof”: What is the confidence score that this object actually exists? The output is normalized to 0-1.}"
# OCR prompt:
# question = "Read all texts in the image, output in lines. "
# HTML-format output test:
# question = "QwenVL HTML with image caption"

# Load the model
model = Qwen2_5_VLForConditionalGeneration.from_pretrained(model_path, torch_dtype="auto", device_map="auto")
# print(model)
processor = AutoProcessor.from_pretrained(model_path)

# Prepare the input
image = Image.open(img_path)
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": question},
        ],
    }
]
text_prompt = processor.apply_chat_template(messages, add_generation_prompt=True)  # render the chat template
inputs = processor(text=[text_prompt], images=[image], padding=True, return_tensors="pt")
inputs = inputs.to('cuda')

# Inference
generated_ids = model.generate(**inputs, max_new_tokens=128)
# Drop the prompt tokens so that only the newly generated tokens are decoded
generated_ids_trimmed = [
    out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_text)
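The detection prompt above asks for bbox / label / cof entries, but the reply arrives as free-form text. Below is a minimal sketch for turning such a reply into Python records. It assumes (this is not guaranteed) that the model answers with a JSON object or a JSON list, possibly wrapped in a Markdown code fence; the helper name `parse_detections` is hypothetical and not part of any library.

```python
import json
import re

_FENCE = "`" * 3  # Markdown code-fence marker


def parse_detections(reply):
    """Extract detection dicts from a model reply (hypothetical helper).

    Assumes the reply is one JSON object or a JSON list of objects,
    optionally wrapped in a Markdown code fence. Returns [] if parsing fails.
    """
    # Remove fence marker lines (with or without a language tag) if present
    fence_line = "^" + re.escape(_FENCE) + r"[a-zA-Z]*\s*$"
    cleaned = re.sub(fence_line, "", reply.strip(), flags=re.MULTILINE).strip()
    try:
        data = json.loads(cleaned)
    except json.JSONDecodeError:
        return []
    if isinstance(data, dict):
        data = [data]
    if not isinstance(data, list):
        return []
    # Keep only entries that carry a 4-element bounding box
    return [
        d for d in data
        if isinstance(d, dict) and isinstance(d.get("bbox"), list) and len(d["bbox"]) == 4
    ]
```

Note that with max_new_tokens=128 a long list of objects can be cut off mid-JSON, in which case this sketch simply returns an empty list.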

3. Process the data

The following script covers everything from downloading the dataset to generating the CSV file:

data2csv.py

# Import the required libraries
from modelscope.msdatasets import MsDataset
import os
import pandas as pd

MAX_DATA_NUMBER = 1000
dataset_id = 'AI-ModelScope/LaTeX_OCR'
subset_name = 'default'
split = 'train'

dataset_dir = 'LaTeX_OCR'
csv_path = './latex_ocr_train.csv'

# Only process the data if the target directory does not exist yet
if not os.path.exists(dataset_dir):
    # Download the LaTeX_OCR dataset from ModelScope
    ds = MsDataset.load(dataset_id, subset_name=subset_name, split=split)
    print(len(ds))
    # Cap the number of images to process
    total = min(MAX_DATA_NUMBER, len(ds))

    # Create the directory the images are saved to
    os.makedirs(dataset_dir, exist_ok=True)

    # Lists holding the image paths and their LaTeX texts
    image_paths = []
    texts = []

    for i in range(total):
        # Read one sample
        item = ds[i]
        text = item['text']
        image = item['image']

        # Save the image and record its absolute path
        image_path = os.path.abspath(f'{dataset_dir}/{i}.jpg')
        image.save(image_path)

        # Append the path and the text to the lists
        image_paths.append(image_path)
        texts.append(text)

        # Print progress every 50 images
        if (i + 1) % 50 == 0:
            print(f'Processing {i+1}/{total} images ({(i+1)/total*100:.1f}%)')

    # Save the image paths and texts as a CSV file
    df = pd.DataFrame({
        'image_path': image_paths,
        'text': texts,
    })
    df.to_csv(csv_path, index=False)

    print(f'Data processing finished, {total} images processed')
else:
    print(f'Directory {dataset_dir} already exists, skipping data processing')

In the same directory, use the following code to convert the CSV file into JSON files (training set + validation set):

csv2json.py

import pandas as pd
import json

csv_path = './latex_ocr_train.csv'
train_json_path = './latex_ocr_train.json'
val_json_path = './latex_ocr_val.json'

df = pd.read_csv(csv_path)

# Build the conversation format: the user turn holds the image path,
# the assistant turn holds the LaTeX text
conversations = []
for i in range(len(df)):
    conversations.append({
        "id": f"identity_{i+1}",
        "conversations": [
            {
                "role": "user",
                "value": f"{df.iloc[i]['image_path']}"
            },
            {
                "role": "assistant",
                "value": str(df.iloc[i]['text'])
            }
        ]
    })

# print(conversations)

# Split into training and validation sets (the last 4 samples are held out for validation)
train_conversations = conversations[:-4]
val_conversations = conversations[-4:]

# Save the training set
with open(train_json_path, 'w', encoding='utf-8') as f:
    json.dump(train_conversations, f, ensure_ascii=False, indent=2)

# Save the validation set
with open(val_json_path, 'w', encoding='utf-8') as f:
    json.dump(val_conversations, f, ensure_ascii=False, indent=2)

4. Start fine-tuning

train_qwenvl.py

import torch
from datasets import Dataset
from modelscope import snapshot_download, AutoTokenizer
from swanlab.integration.transformers import SwanLabCallback
from qwen_vl_utils import process_vision_info
from peft import LoraConfig, TaskType, get_peft_model, PeftModel
from transformers import (
    TrainingArguments,
    Trainer,
    DataCollatorForSeq2Seq,
    Qwen2_5_VLForConditionalGeneration,
    AutoProcessor,
)
import swanlab
import json
import os

# Prompt (in Chinese): "You are a LaTeX OCR assistant; your goal is to read the photo
# provided by the user and convert it into a LaTeX formula."
prompt = "你是一个LaText OCR助手,目标是读取用户输入的照片,转换成LaTex公式。"
model_id = "./Qwen2.5-VL-3B-Instruct"
local_model_path = "./Qwen2.5-VL-3B-Instruct"
train_dataset_json_path = "latex_ocr_train.json"
val_dataset_json_path = "latex_ocr_val.json"
output_dir = "./output/Qwen2-VL-3B-LatexOCR"
MAX_LENGTH = 8192

def process_func(example):
    """
    Preprocess one example of the dataset.
    """
    input_ids, attention_mask, labels = [], [], []
    conversation = example["conversations"]
    image_file_path = conversation[0]["value"]
    output_content = conversation[1]["value"]

    messages = [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "image": f"{image_file_path}",
                    # Same 100 x 500 (height x width) size as used at inference time
                    "resized_height": 100,
                    "resized_width": 500,
                },
                {"type": "text", "text": prompt},
            ],
        }
    ]
    text = processor.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )  # render the prompt text
    image_inputs, video_inputs = process_vision_info(messages)  # get the (preprocessed) vision inputs
    inputs = processor(
        text=[text],
        images=image_inputs,
        videos=video_inputs,
        padding=True,
        return_tensors="pt",
    )
    inputs = {key: value.tolist() for key, value in inputs.items()}  # tensor -> list, to make concatenation easy
    instruction = inputs

    response = tokenizer(f"{output_content}", add_special_tokens=False)

    # Prompt tokens + response tokens + one terminating pad token
    input_ids = (
        instruction["input_ids"][0] + response["input_ids"] + [tokenizer.pad_token_id]
    )
    attention_mask = instruction["attention_mask"][0] + response["attention_mask"] + [1]
    # Prompt positions are set to -100 so that they are ignored by the loss
    labels = (
        [-100] * len(instruction["input_ids"][0])
        + response["input_ids"]
        + [tokenizer.pad_token_id]
    )
    if len(input_ids) > MAX_LENGTH:  # truncate overly long samples
        input_ids = input_ids[:MAX_LENGTH]
        attention_mask = attention_mask[:MAX_LENGTH]
        labels = labels[:MAX_LENGTH]

    input_ids = torch.tensor(input_ids)
    attention_mask = torch.tensor(attention_mask)
    labels = torch.tensor(labels)
    inputs['pixel_values'] = torch.tensor(inputs['pixel_values'])
    inputs['image_grid_thw'] = torch.tensor(inputs['image_grid_thw']).squeeze(0)  # shape (1, 3) -> (3,), i.e. [t, h, w]
    return {"input_ids": input_ids, "attention_mask": attention_mask, "labels": labels,
            "pixel_values": inputs['pixel_values'], "image_grid_thw": inputs['image_grid_thw']}

def predict(messages, model):
    # Prepare the inputs for inference
    text = processor.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    image_inputs, video_inputs = process_vision_info(messages)
    inputs = processor(
        text=[text],
        images=image_inputs,
        videos=video_inputs,
        padding=True,
        return_tensors="pt",
    )
    inputs = inputs.to("cuda")

    # Generate the output and drop the prompt tokens before decoding
    generated_ids = model.generate(**inputs, max_new_tokens=MAX_LENGTH)
    generated_ids_trimmed = [
        out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
    ]
    output_text = processor.batch_decode(
        generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
    )
    return output_text[0]

# Load the model weights with Transformers
tokenizer = AutoTokenizer.from_pretrained(local_model_path, use_fast=False, trust_remote_code=True)
processor = AutoProcessor.from_pretrained(local_model_path)

origin_model = Qwen2_5_VLForConditionalGeneration.from_pretrained(local_model_path, device_map="auto", torch_dtype=torch.bfloat16, trust_remote_code=True,)
origin_model.enable_input_require_grads()  # required when gradient checkpointing is enabled

# Prepare the dataset: read the JSON file
train_ds = Dataset.from_json(train_dataset_json_path)
train_dataset = train_ds.map(process_func)

# Configure LoRA
config = LoraConfig(
    task_type=TaskType.CAUSAL_LM,
    target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],
    inference_mode=False,  # training mode
    r=64,  # LoRA rank
    lora_alpha=16,  # LoRA alpha; see the LoRA paper for its role
    lora_dropout=0.05,  # dropout ratio
    bias="none",
)

# Wrap the base model with LoRA
train_peft_model = get_peft_model(origin_model, config)

# Configure the training arguments
args = TrainingArguments(
    output_dir=output_dir,
    per_device_train_batch_size=4,
    gradient_accumulation_steps=4,
    logging_steps=10,
    logging_first_step=True,  # boolean flag: also log the very first step
    num_train_epochs=2,
    save_steps=100,
    learning_rate=1e-4,
    save_on_each_node=True,
    gradient_checkpointing=True,
    report_to="none",
)

# Set up the SwanLab callback
swanlab_callback = SwanLabCallback(
    project="Qwen2-VL-ft-latexocr",
    experiment_name="3B-1kdata",
    config={
        "model": "https://modelscope.cn/models/Qwen/Qwen2.5-VL-3B-Instruct",
        "dataset": "https://modelscope.cn/datasets/AI-ModelScope/LaTeX_OCR/summary",
        # "github": "https://github.com/datawhalechina/self-llm",
        "model_id": model_id,
        "train_dataset_json_path": train_dataset_json_path,
        "val_dataset_json_path": val_dataset_json_path,
        "output_dir": output_dir,
        "prompt": prompt,
        "train_data_number": len(train_ds),
        "token_max_length": MAX_LENGTH,
        "lora_rank": 64,
        "lora_alpha": 16,
        "lora_dropout": 0.05,
    },
)

# Configure the Trainer
trainer = Trainer(
    model=train_peft_model,
    args=args,
    train_dataset=train_dataset,
    data_collator=DataCollatorForSeq2Seq(tokenizer=tokenizer, padding=True),
    callbacks=[swanlab_callback],
)

# Start training
trainer.train()

# ====================测试===================

# 配置测试参数

val_config = LoraConfig(

task_type=TaskType.CAUSAL_LM,

target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],

inference_mode=True, # 训练模式

r=64, # Lora 秩

lora_alpha=16, # Lora alaph,具体作用参见 Lora 原理

lora_dropout=0.05, # Dropout 比例

bias="none",

)

# 获取测试模型,从output_dir中获取最新的checkpoint

load_model_path = f"{output_dir}/checkpoint-{max([int(d.split('-')[-1]) for d in os.listdir(output_dir) if d.startswith('checkpoint-')])}"

print(f"load_model_path: {load_model_path}")

val_peft_model = PeftModel.from_pretrained(origin_model, model_id=load_model_path, config=val_config)

# 读取测试数据

with open(val_dataset_json_path, "r") as f:

test_dataset = json.load(f)

test_image_list = []

for item in test_dataset:

image_file_path = item["conversations"][0]["value"]

label = item["conversations"][1]["value"]

messages = [{

"role": "user",

"content": [

{

"type": "image",

"image": image_file_path,

"resized_height": 100,

"resized_width": 500,

},

{

"type": "text",

"text": prompt,

}

]}]

response = predict(messages, val_peft_model)

print(f"predict:{response}")

print(f"gt:{label}\n")

test_image_list.append(swanlab.Image(image_file_path, caption=response))

swanlab.log({"Prediction": test_image_list})

# 在Jupyter Notebook中运行时要停止SwanLab记录,需要调用swanlab.finish()

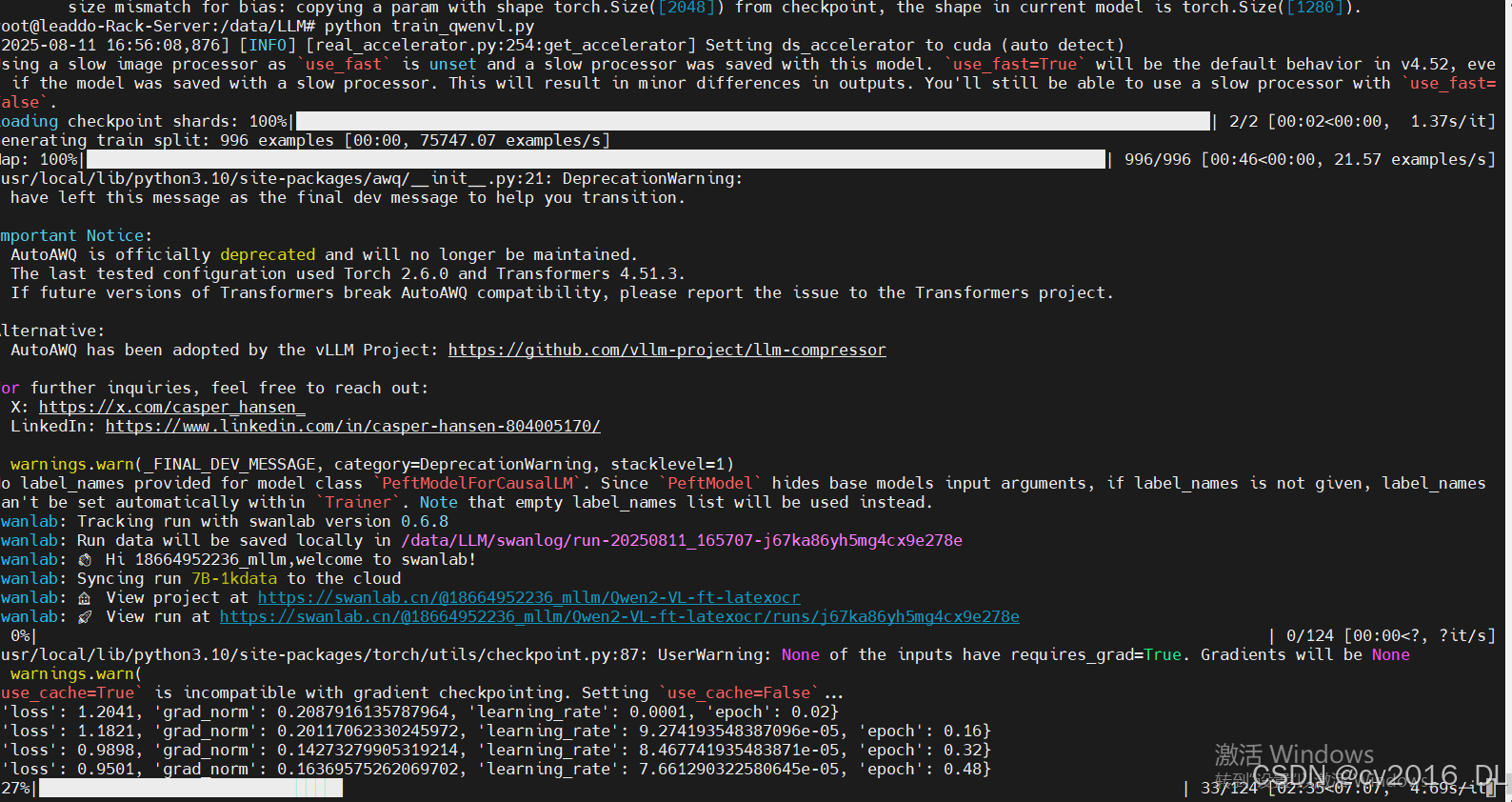

swanlab.finish()执行:python train_qwenvl.py

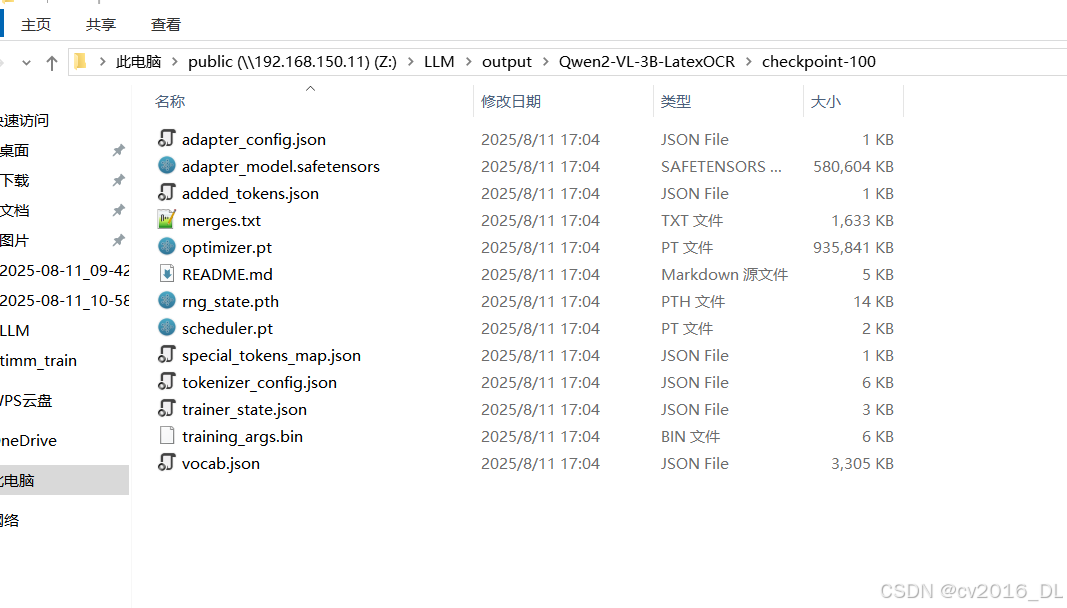
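The label construction in process_func is the core of the supervised setup: prompt positions are set to -100 so that only the response tokens contribute to the loss. The toy sketch below reproduces that logic on plain Python lists. The function name `build_example` and the token ids are made up for illustration and are not part of the training script.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss


def build_example(prompt_ids, response_ids, end_id, max_length):
    """Concatenate prompt and response ids and mask the prompt in the labels."""
    input_ids = prompt_ids + response_ids + [end_id]
    attention_mask = [1] * len(input_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids + [end_id]
    # Truncate all three sequences to the same maximum length
    return {
        "input_ids": input_ids[:max_length],
        "attention_mask": attention_mask[:max_length],
        "labels": labels[:max_length],
    }


example = build_example(prompt_ids=[1, 2, 3], response_ids=[7, 8], end_id=0, max_length=8192)
print(example["labels"])  # [-100, -100, -100, 7, 8, 0]
```

In the real script the prompt part also contains the image placeholder tokens, so the masked prefix is much longer than the response.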

The saved fine-tuned model (LoRA parameters, adapter_model):

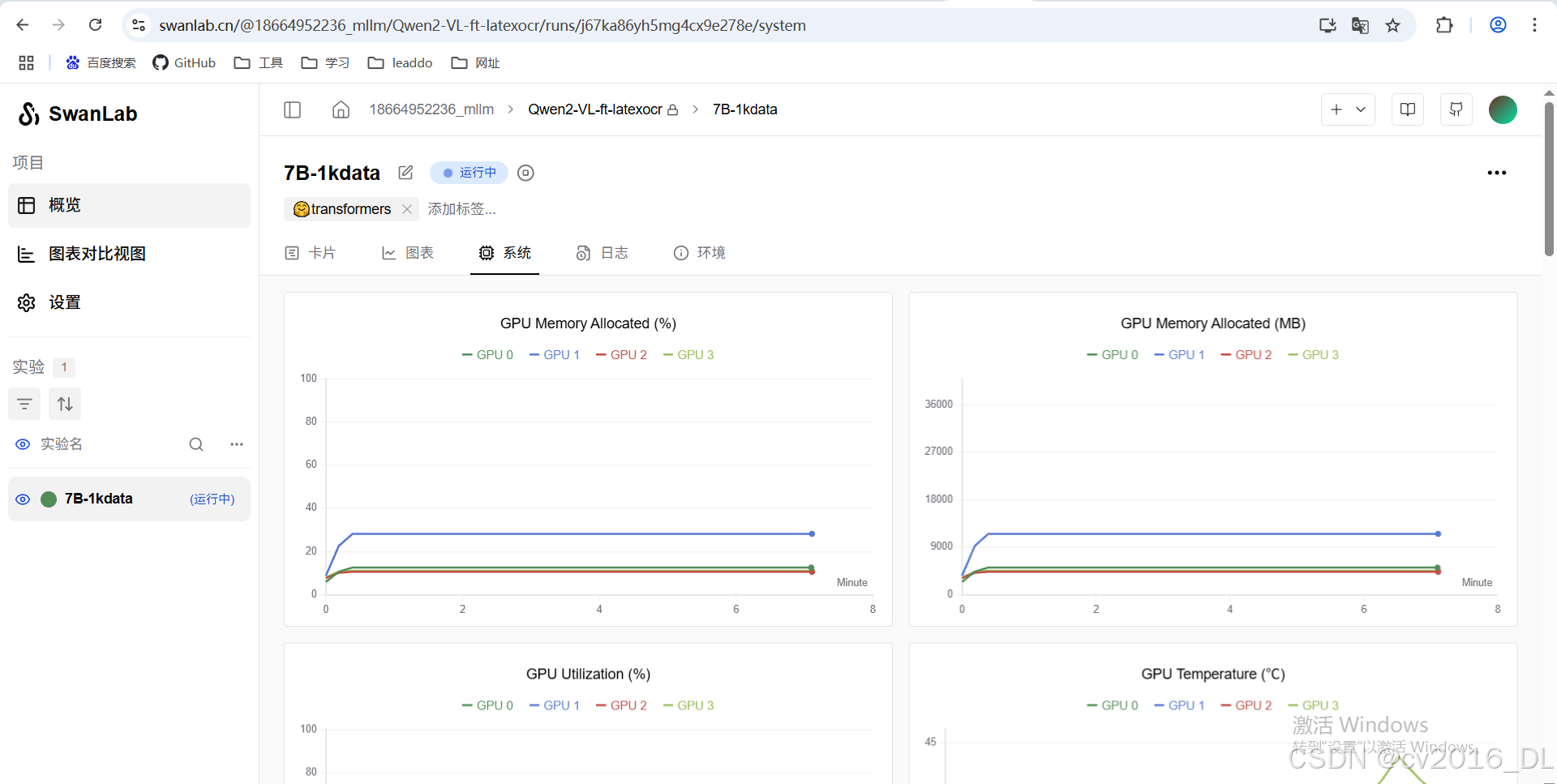

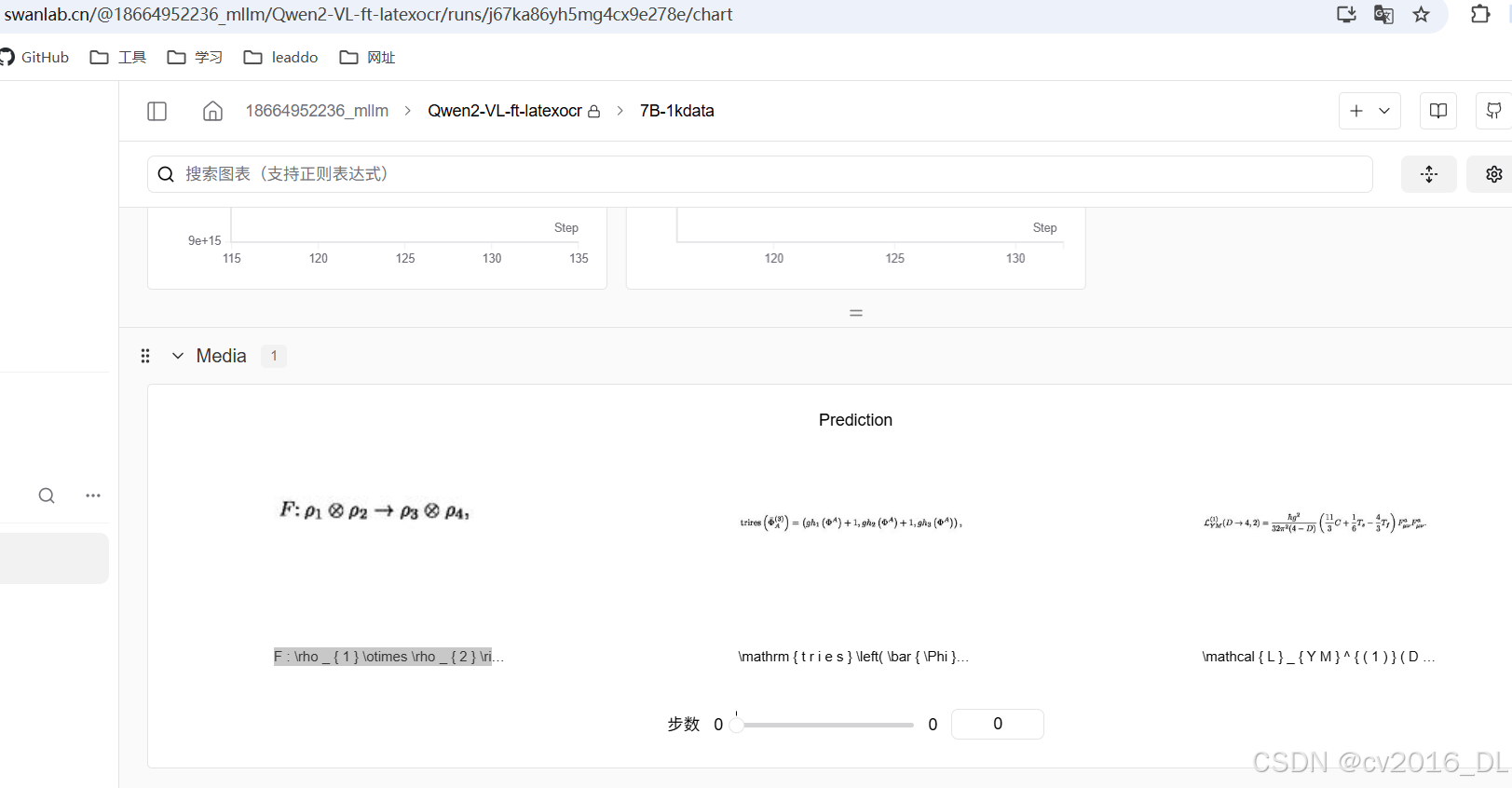
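The training script saves its results as checkpoint-<step> subdirectories of output_dir. Instead of hard-coding a step number when loading the adapter later, the newest checkpoint can be looked up. This is a small sketch using only the standard library; the helper name `latest_checkpoint` is hypothetical.

```python
import os
import re


def latest_checkpoint(output_dir):
    """Return the path of the checkpoint-N subdirectory with the largest N, or None."""
    pattern = re.compile(r"^checkpoint-(\d+)$")
    best_step, best_path = -1, None
    for name in os.listdir(output_dir):
        match = pattern.match(name)
        path = os.path.join(output_dir, name)
        # Only real directories named checkpoint-<number> are considered
        if match and os.path.isdir(path) and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), path
    return best_path
```

Comparing the step as an integer matters: a plain string sort would rank checkpoint-9 above checkpoint-124.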

The intermediate training metrics can be viewed on SwanLab; you need to register an account yourself:

Results after fine-tuning:

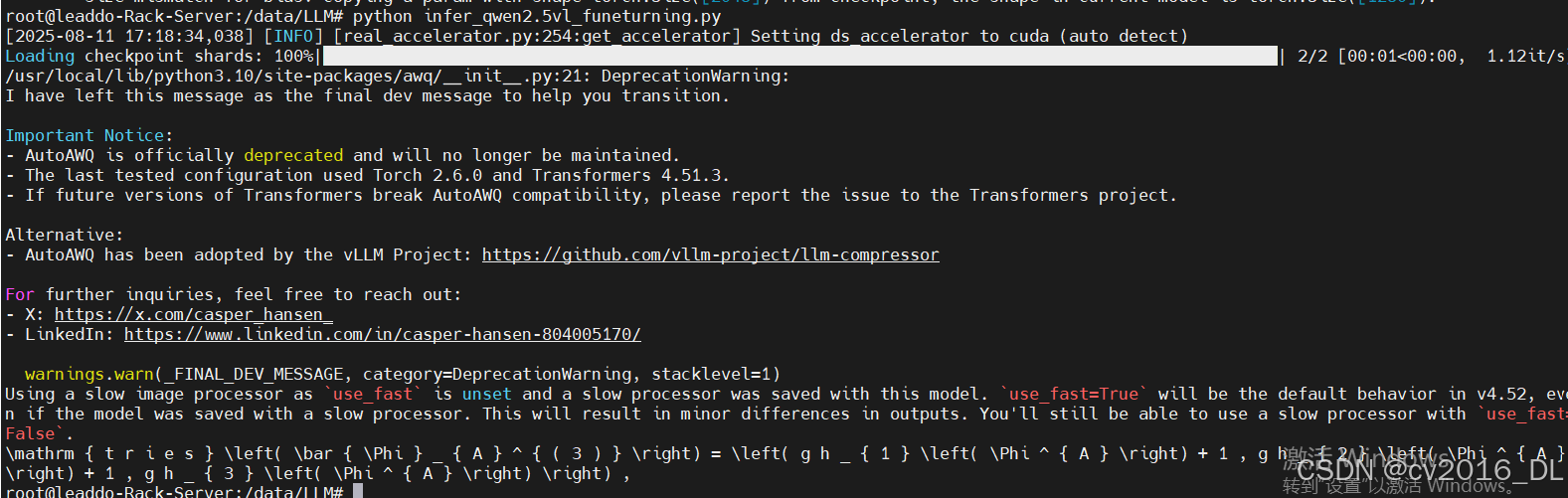

5. Merging and testing the fine-tuned model

Test script:

infer_qwen2.5vl_funeturning.py

from transformers import Qwen2_5_VLForConditionalGeneration, AutoProcessor

from qwen_vl_utils import process_vision_info

from peft import PeftModel, LoraConfig, TaskType

prompt = "你是一个LaText OCR助手,目标是读取用户输入的照片,转换成LaTex公式。"

local_model_path = "./Qwen2.5-VL-3B-Instruct"

lora_model_path = "./output/Qwen2-VL-3B-LatexOCR/checkpoint-124"

test_image_path = "./LaTeX_OCR/997.jpg"

config = LoraConfig(

task_type=TaskType.CAUSAL_LM,

target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],

inference_mode=True,

r=64, # Lora 秩

lora_alpha=16, # Lora alaph,具体作用参见 Lora 原理

lora_dropout=0.05, # Dropout 比例

bias="none",

)

# default: Load the model on the available device(s)

model = Qwen2_5_VLForConditionalGeneration.from_pretrained(

local_model_path, torch_dtype="auto", device_map="auto"

)

model = PeftModel.from_pretrained(model, model_id=f"{lora_model_path}", config=config)

processor = AutoProcessor.from_pretrained(local_model_path)

messages = [

{

"role": "user",

"content": [

{

"type": "image",

"image": test_image_path,

"resized_height": 100,

"resized_width": 500,

},

{"type": "text", "text": f"{prompt}"},

],

}

]

# Preparation for inference

text = processor.apply_chat_template(

messages, tokenize=False, add_generation_prompt=True

)

image_inputs, video_inputs = process_vision_info(messages)

inputs = processor(

text=[text],

images=image_inputs,

videos=video_inputs,

padding=True,

return_tensors="pt",

)

inputs = inputs.to("cuda")

# Inference: Generation of the output

generated_ids = model.generate(**inputs, max_new_tokens=8192)

generated_ids_trimmed = [

out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)

]

output_text = processor.batch_decode(

generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False

)

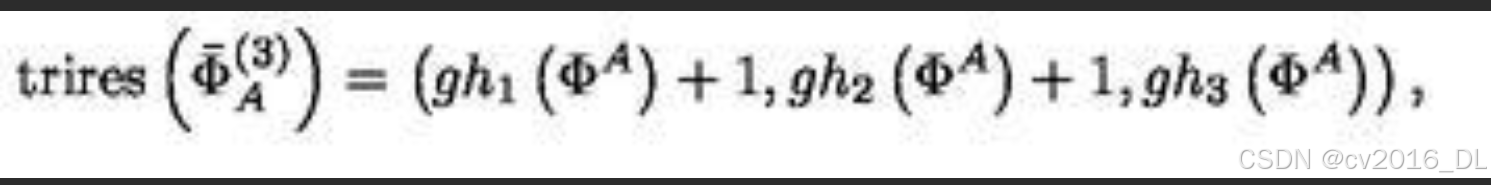

print(output_text[0])输出结果:

997.jpg

As can be seen, the recognition result is correct.
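Correctness here is judged by eye. For more than a handful of samples, a prediction can be scored against its ground truth automatically. The sketch below is one possible way to do this, not something the original workflow uses: it strips all whitespace before comparing, which is a crude normalisation that can in rare cases conflate different LaTeX strings. The function names are hypothetical.

```python
from difflib import SequenceMatcher


def normalize_latex(s):
    """Drop all whitespace (a crude normalisation; an assumption of this sketch)."""
    return "".join(s.split())


def latex_similarity(pred, truth):
    """Return (exact_match, similarity in [0, 1]) after whitespace normalisation."""
    p, t = normalize_latex(pred), normalize_latex(truth)
    return p == t, SequenceMatcher(None, p, t).ratio()


print(latex_similarity(r"\frac { a } { b }", r"\frac{a}{b}"))  # (True, 1.0)
```

The similarity ratio gives partial credit when a long formula differs by only a few characters, which an exact-match check alone would hide.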

Script for testing the output of the original model:

from transformers import Qwen2_5_VLForConditionalGeneration, AutoProcessor
from qwen_vl_utils import process_vision_info

# Prompt (in Chinese), identical to the one used for fine-tuning
prompt = "你是一个LaText OCR助手,目标是读取用户输入的照片,转换成LaTex公式。"
local_model_path = "./Qwen2.5-VL-3B-Instruct"  # path of the original model
test_image_path = "./LaTeX_OCR/997.jpg"  # path of the test image

# Load the original model directly (without the LoRA adapter)
model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
    local_model_path,
    torch_dtype="auto",
    device_map="auto"
)
processor = AutoProcessor.from_pretrained(local_model_path)

# Build the input message (same format as used for fine-tuning)
messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": test_image_path,
                "resized_height": 100,
                "resized_width": 500,
            },
            {"type": "text", "text": f"{prompt}"},
        ],
    }
]

# Preprocess the input
text = processor.apply_chat_template(
    messages, tokenize=False, add_generation_prompt=True
)
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")  # move the inputs to the GPU

# Generate the output and drop the prompt tokens before decoding
generated_ids = model.generate(**inputs, max_new_tokens=8192)
generated_ids_trimmed = [
    out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed,
    skip_special_tokens=True,
    clean_up_tokenization_spaces=False
)
print(output_text[0])  # print the model output
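The scripts in this section always load the base model and the LoRA adapter separately. If a single standalone model directory is wanted, the adapter can be folded into the base weights with PEFT's merge_and_unload(). The following is a sketch only and was not run as part of this walkthrough; the helper name `merge_lora` and the output path in the usage line are hypothetical, and loading on the CPU is an assumption made to avoid needing GPU memory for the merge.

```python
def merge_lora(base_model_path, lora_model_path, merged_output_path):
    """Merge a LoRA adapter into its base model and save a standalone copy.

    Hypothetical helper; the heavy imports are local so that merely defining
    the function does not require torch, peft or transformers.
    """
    import torch
    from peft import PeftModel
    from transformers import AutoProcessor, Qwen2_5_VLForConditionalGeneration

    base = Qwen2_5_VLForConditionalGeneration.from_pretrained(
        base_model_path, torch_dtype=torch.bfloat16, device_map="cpu"
    )
    model = PeftModel.from_pretrained(base, lora_model_path)
    merged = model.merge_and_unload()  # fold the LoRA deltas into the base weights
    merged.save_pretrained(merged_output_path)
    # Save the processor files next to the weights so the directory is self-contained
    AutoProcessor.from_pretrained(base_model_path).save_pretrained(merged_output_path)
    return merged_output_path


# Example call (the output directory name is made up):
# merge_lora("./Qwen2.5-VL-3B-Instruct",
#            "./output/Qwen2-VL-3B-LatexOCR/checkpoint-124",
#            "./output/Qwen2.5-VL-3B-LatexOCR-merged")
```

A merged directory can then be loaded with Qwen2_5_VLForConditionalGeneration.from_pretrained alone, without any peft code at inference time.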

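6. Full fine-tuning

Full fine-tuning requires removing the LoRA-related code:

Delete the peft-related imports and configuration (LoraConfig, get_peft_model, PeftModel).

Full-parameter fine-tuning needs considerably more GPU memory, so you may need to lower batch_size or enable gradient checkpointing (gradient_checkpointing=True).

The learning rate is usually smaller than for LoRA (for example 5e-5 instead of 1e-4).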
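Lowering the per-device batch size need not change the effective batch size if gradient accumulation is raised accordingly. The arithmetic sketch below makes this concrete under several assumptions: 996 training samples (1000 prepared, 4 held out for validation), a single GPU, and optimizer steps per epoch counted as the number of batches floor-divided by the accumulation steps. That last rule is inferred from the checkpoint-124 directory used in the inference scripts; other Trainer versions may round differently.

```python
import math


def training_steps(num_samples, per_device_batch, grad_accum, epochs, num_gpus=1):
    """Return (effective_batch_size, total_optimizer_steps) under the stated assumptions."""
    effective_batch = per_device_batch * grad_accum * num_gpus
    batches_per_epoch = math.ceil(num_samples / (per_device_batch * num_gpus))
    steps_per_epoch = max(batches_per_epoch // grad_accum, 1)
    return effective_batch, steps_per_epoch * epochs


print(training_steps(996, 4, 4, 2))  # LoRA settings: (16, 124)
print(training_steps(996, 2, 8, 2))  # batch size 2, accumulation 8: (16, 124)
```

Both configurations update the weights on 16 samples per step, so halving the batch size trades memory for more forward and backward passes per update rather than for a different optimisation schedule.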

train_full.py

import torch
from datasets import Dataset
from modelscope import snapshot_download, AutoTokenizer
from swanlab.integration.transformers import SwanLabCallback
from qwen_vl_utils import process_vision_info
from transformers import (
    TrainingArguments,
    Trainer,
    DataCollatorForSeq2Seq,
    Qwen2_5_VLForConditionalGeneration,
    AutoProcessor,
)
import swanlab
import json
import os

# Configuration
# Prompt (in Chinese): "You are a LaTeX OCR assistant; your goal is to read the photo
# provided by the user and convert it into a LaTeX formula."
prompt = "你是一个LaText OCR助手,目标是读取用户输入的照片,转换成LaTex公式。"
model_id = "./Qwen2.5-VL-3B-Instruct"
local_model_path = "./Qwen2.5-VL-3B-Instruct"
train_dataset_json_path = "latex_ocr_train.json"
val_dataset_json_path = "latex_ocr_val.json"
output_dir = "./output/Qwen2-VL-3B-Full-Finetune"  # separate output directory
MAX_LENGTH = 8192

# Data preprocessing function (same logic as in the LoRA script)
def process_func(example):
    conversation = example["conversations"]
    image_file_path = conversation[0]["value"]
    output_content = conversation[1]["value"]

    messages = [
        {
            "role": "user",
            "content": [
                # Same 100 x 500 (height x width) size as used at inference time
                {"type": "image", "image": image_file_path, "resized_height": 100, "resized_width": 500},
                {"type": "text", "text": prompt},
            ],
        }
    ]
    text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    image_inputs, video_inputs = process_vision_info(messages)
    inputs = processor(
        text=[text],
        images=image_inputs,
        videos=video_inputs,
        padding=True,
        return_tensors="pt",
    )
    inputs = {key: value.tolist() for key, value in inputs.items()}  # tensor -> list, to make concatenation easy
    instruction = inputs
    response = tokenizer(f"{output_content}", add_special_tokens=False)

    # Prompt tokens + response tokens + one terminating pad token; the prompt is masked with -100 in the labels
    input_ids = instruction["input_ids"][0] + response["input_ids"] + [tokenizer.pad_token_id]
    attention_mask = instruction["attention_mask"][0] + response["attention_mask"] + [1]
    labels = [-100] * len(instruction["input_ids"][0]) + response["input_ids"] + [tokenizer.pad_token_id]

    if len(input_ids) > MAX_LENGTH:  # truncate overly long samples
        input_ids = input_ids[:MAX_LENGTH]
        attention_mask = attention_mask[:MAX_LENGTH]
        labels = labels[:MAX_LENGTH]

    return {
        "input_ids": torch.tensor(input_ids),
        "attention_mask": torch.tensor(attention_mask),
        "labels": torch.tensor(labels),
        "pixel_values": torch.tensor(inputs['pixel_values']),
        "image_grid_thw": torch.tensor(inputs['image_grid_thw']).squeeze(0),  # shape (1, 3) -> (3,)
    }

# Prediction function (same as in the LoRA script)
def predict(messages, model):
    text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    image_inputs, video_inputs = process_vision_info(messages)
    inputs = processor(
        text=[text],
        images=image_inputs,
        videos=video_inputs,
        padding=True,
        return_tensors="pt",
    ).to("cuda")
    generated_ids = model.generate(**inputs, max_new_tokens=MAX_LENGTH)
    # Drop the prompt tokens so that only the newly generated tokens are decoded
    generated_ids_trimmed = [out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)]
    return processor.batch_decode(generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]

# Load the original model and the processor
tokenizer = AutoTokenizer.from_pretrained(local_model_path, use_fast=False, trust_remote_code=True)
processor = AutoProcessor.from_pretrained(local_model_path)
model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
    local_model_path,
    device_map="auto",
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
)
model.enable_input_require_grads()  # required when gradient checkpointing is enabled

# Load the dataset
train_ds = Dataset.from_json(train_dataset_json_path)
train_dataset = train_ds.map(process_func)

# Training configuration for full-parameter fine-tuning
args = TrainingArguments(
    output_dir=output_dir,
    per_device_train_batch_size=2,  # smaller batch size to fit into GPU memory
    gradient_accumulation_steps=8,  # more gradient accumulation steps to compensate
    logging_steps=10,
    num_train_epochs=2,
    save_steps=100,
    learning_rate=5e-5,  # smaller learning rate than for LoRA
    save_on_each_node=True,
    gradient_checkpointing=True,  # enable gradient checkpointing
    report_to="none",
)

# SwanLab callback (LoRA-related entries removed)
swanlab_callback = SwanLabCallback(
    project="Qwen2-VL-Full-Finetune",
    experiment_name="3B-LaTeX-OCR",
    config={
        "model": "https://modelscope.cn/models/Qwen/Qwen2.5-VL-3B-Instruct",
        "dataset": "https://modelscope.cn/datasets/AI-ModelScope/LaTeX_OCR/summary",
        "model_id": model_id,
        "train_dataset_json_path": train_dataset_json_path,
        "val_dataset_json_path": val_dataset_json_path,
        "output_dir": output_dir,
        "prompt": prompt,
        "train_data_number": len(train_ds),
        "token_max_length": MAX_LENGTH,
    },
)

# Initialise the Trainer
trainer = Trainer(
    model=model,  # the model itself is trained, not a LoRA wrapper
    args=args,
    train_dataset=train_dataset,
    data_collator=DataCollatorForSeq2Seq(tokenizer=tokenizer, padding=True),
    callbacks=[swanlab_callback],
)

# Start training
trainer.train()

# ==================== Testing ====================
# Load the validation dataset
with open(val_dataset_json_path, "r", encoding="utf-8") as f:
    test_dataset = json.load(f)

test_image_list = []
for item in test_dataset:
    image_file_path = item["conversations"][0]["value"]
    label = item["conversations"][1]["value"]

    messages = [{
        "role": "user",
        "content": [
            {"type": "image", "image": image_file_path, "resized_height": 100, "resized_width": 500},
            {"type": "text", "text": prompt},
        ]
    }]

    response = predict(messages, model)  # same model object, now holding the fine-tuned weights
    print(f"Predict: {response}\nGround Truth: {label}\n")
    test_image_list.append(swanlab.Image(image_file_path, caption=response))

# Log the results to SwanLab
swanlab.log({"Prediction": test_image_list})
swanlab.finish()

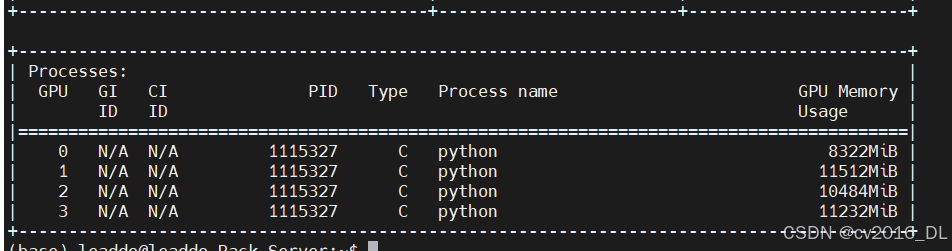

GPU memory usage during full fine-tuning:

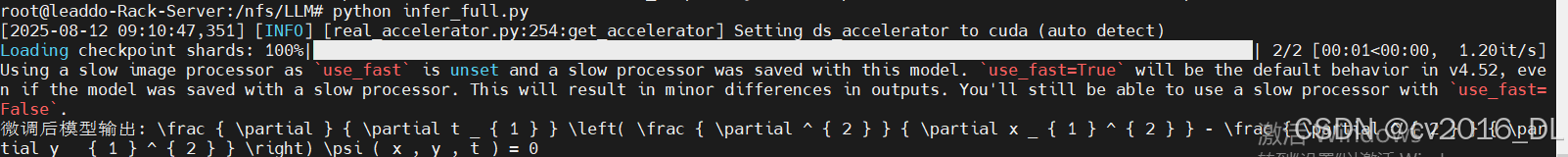

Testing the model after full fine-tuning:

Note: preprocessor_config.json and chat_template.json from the original model must be copied into the directory of the fine-tuned model.
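The copy step can be scripted. Here is a minimal sketch using only the standard library; the helper name `copy_processor_files` is hypothetical, and files that are missing from the source directory are skipped rather than treated as an error.

```python
import os
import shutil


def copy_processor_files(original_model_dir, finetuned_model_dir,
                         filenames=("preprocessor_config.json", "chat_template.json")):
    """Copy processor-related files into the fine-tuned model directory.

    Returns the list of file names that were actually copied.
    """
    copied = []
    for name in filenames:
        src = os.path.join(original_model_dir, name)
        if os.path.isfile(src):
            shutil.copy2(src, os.path.join(finetuned_model_dir, name))
            copied.append(name)
    return copied


# Example call with the directories used in this walkthrough:
# copy_processor_files("./Qwen2.5-VL-3B-Instruct",
#                      "./output/Qwen2-VL-3B-Full-Finetune/checkpoint-124")
```

Checking the returned list against the expected two names is a quick way to notice that a file was absent from the original model directory.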

Script for testing the fully fine-tuned model:

infer_full.py

from transformers import Qwen2_5_VLForConditionalGeneration, AutoProcessor
from qwen_vl_utils import process_vision_info

# Note: this loads the path of the fine-tuned model!
finetuned_model_path = "./output/Qwen2-VL-3B-Full-Finetune/checkpoint-124"  # replace with your fine-tuning output directory
test_image_path = "./LaTeX_OCR/997.jpg"
# Prompt (in Chinese), identical to the one used for training
prompt = "你是一个LaText OCR助手,目标是读取用户输入的照片,转换成LaTex公式。"

# Load the fine-tuned model and the processor
model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
    finetuned_model_path,
    torch_dtype="auto",
    device_map="auto"
)
processor = AutoProcessor.from_pretrained(finetuned_model_path)

# Build the input (same format as used for training)
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": test_image_path, "resized_height": 100, "resized_width": 500},
            {"type": "text", "text": prompt},
        ],
    }
]

# Preprocess the input
text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
image_inputs, _ = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")  # make sure the inputs are on the GPU

# Generate the output and drop the prompt tokens before decoding
generated_ids = model.generate(**inputs, max_new_tokens=8192)
generated_ids_trimmed = [out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)]
output_text = processor.batch_decode(
    generated_ids_trimmed,
    skip_special_tokens=True,
    clean_up_tokenization_spaces=False
)
print("Output of the fine-tuned model:", output_text[0])

Output:
